Search the Community

Showing results for tags 'esxi', 'serial port' or 'vm'.

-

Hello everyone. I'm brand new to this forum and to playing with ESXi. I set up Jun's loader and got two DSM 6.1 instances running. Why two? I have a RAID volume (hardware, LSI) where I want to keep my pictures safe. As for music and films, I don't care much about losing them, so a simple volume would be OK. Not finding a way to do this with one DSM, I thought of installing two: one with the RAID volume and the other a basic one. The problem is that only the first one installed shows up on the network; the second one (installed from the OVF) does not. Both have the same MAC address. I tried to enter a manual MAC, but DSM replies with an error saying the MAC address is protected (I don't recall the exact message). What could I do? Would there be an alternative using only one DSM and the hardware RAID? Thank you for any help or ideas. Phil
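A common approach for running two loader-based VMs side by side, assumed here rather than confirmed in this thread, is to give each VM its own MAC: set a manual MAC on that VM's network adapter in ESXi and put the same value, written without separators, on the `set mac1=` line of that VM's grub.cfg. The minimal sketch below only generates and formats such a value. It assumes the range VMware documents for manually assigned MACs (00:50:56:00:00:00 to 00:50:56:3F:FF:FF); check that against your ESXi version. The function names are hypothetical.

```python
import random
import re

# VMware's OUI; the 4th octet is kept in 0x00-0x3F, the range VMware documents
# for manually assigned (static) MACs. Verify this against your ESXi version.
VMWARE_OUI = (0x00, 0x50, 0x56)


def random_vmware_mac(rng: random.Random) -> str:
    """Return a colon-separated MAC such as 00:50:56:1A:2B:3C for a VM's adapter."""
    octets = list(VMWARE_OUI) + [
        rng.randint(0x00, 0x3F),
        rng.randint(0x00, 0xFF),
        rng.randint(0x00, 0xFF),
    ]
    return ":".join(f"{o:02X}" for o in octets)


def grub_mac_value(mac: str) -> str:
    """Convert 00:50:56:1A:2B:3C into 0050561A2B3C, the separator-less form used by `set mac1=`."""
    value = mac.replace(":", "").replace("-", "").upper()
    if not re.fullmatch(r"[0-9A-F]{12}", value):
        raise ValueError(f"not a MAC address: {mac!r}")
    return value


if __name__ == "__main__":
    mac = random_vmware_mac(random.Random())
    print("ESXi adapter (manual MAC):", mac)
    print("grub.cfg line:             set mac1=" + grub_mac_value(mac))
```

Each DSM VM would get its own generated value; the sketch does nothing about the "protected MAC" message itself.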

-

I had to create a topic where I can hopefully get answers to a pressing problem that, I think, I'm not the only one facing, namely: 1. Moving a virtual machine from Synology to ESXi; 2. What migration options exist; 3. Who has already done this, and how. I tried backing up the disk through Windows and restoring it, but it doesn't help: after the Windows welcome screen it reboots constantly! The background: I created a remote machine running 1C, my wife worked on it and liked it, and now it needs to be moved to the server with the other computers, so that it isn't on my NAS...

-

USE NVMe AS VIRTUAL DISK / HOW TO LIMIT DSM TO SPECIFIC DRIVES

NOTE: if you just want to use NVMe drives as cache and can't get them to work on DSM 6.2.x, go here.

I thought I'd share some of the performance I'm seeing after converting from bare metal to ESXi in order to use NVMe SSDs. My hardware: SuperMicro X11SSH-F with an E3-1230 v6, 32 GB RAM, a Mellanox 10GbE NIC and an 8-bay hot-plug chassis, with 2x WD Red 2 TB (sda/sdb) in RAID1 as /volume1 and 6x WD Red 4 TB (sdc-sdh) in RAID10 as /volume2. I run a lot of Docker apps installed on /volume1. This worked the 2 TB Reds (which are not very fast) pretty hard, so I decided to replace them with SSDs. I ambitiously acquired NVMe drives (Intel P3500 2 TB) and tried many tactics to get them running in the bare-metal configuration, but ultimately the only way was to virtualize them and present them to DSM as SCSI devices. After converting to ESXi, /volume1 is on a pair of VMDKs (one on each NVMe drive) in the same RAID1 configuration. This was much faster, but I noticed that Docker causes a lot of OS system writes, which spanned all the drives (since Synology replicates the system and swap partitions across all devices).
I was able to isolate DSM I/O to the NVMe drives by taking the other disks out of the system partition array, /dev/md0 (the example below excludes disks 3 and 4 from a 4-drive RAID):

    root@dsm62x:~# mdadm /dev/md0 -f /dev/sdc1 /dev/sdd1
    mdadm: set /dev/sdc1 faulty in /dev/md0
    mdadm: set /dev/sdd1 faulty in /dev/md0
    root@dsm62x:~# mdadm --manage /dev/md0 --remove faulty
    mdadm: hot removed 8:49 from /dev/md0
    mdadm: hot removed 8:33 from /dev/md0
    root@dsm62x:~# mdadm --grow /dev/md0 --raid-devices=2
    raid_disks for /dev/md0 set to 2
    root@dsm62x:~# cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4]
    md2 : active raid5 sdc3[5] sda3[0] sdd3[4] sdb3[2]
          23427613632 blocks super 1.2 level 5, 64k chunk, algorithm 2 [4/4] [UUUU]
    md1 : active raid1 sdc2[1] sda2[0] sdb2[3] sdd2[2]
          2097088 blocks [16/4] [UUUU____________]
    md0 : active raid1 sda1[0] sdb1[1]
          2490176 blocks [2/2] [UU]
    unused devices: <none>
    root@dsm62x:~# mdadm --add /dev/md0 /dev/sdc1 /dev/sdd1
    mdadm: added /dev/sdc1
    mdadm: added /dev/sdd1
    root@dsm62x:~# cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4]
    md2 : active raid5 sdc3[5] sda3[0] sdd3[4] sdb3[2]
          23427613632 blocks super 1.2 level 5, 64k chunk, algorithm 2 [4/4] [UUUU]
    md1 : active raid1 sdc2[1] sda2[0] sdb2[3] sdd2[2]
          2097088 blocks [16/4] [UUUU____________]
    md0 : active raid1 sdd1[2](S) sdc1[3](S) sda1[0] sdb1[1]
          2490176 blocks [2/2] [UU]
    root@dsm62x:~#

Because md0 now has only two RAID devices, the re-added partitions become hot spares (marked (S) in /proc/mdstat), so they see no regular I/O unless an active member fails and md rebuilds onto one of them. If you want to disable swap partition I/O in the same way, apply the procedure above to /dev/md1 and its members sd(x)2 (NOTE: swap partition management has not been verified with DSM 6.1.x, but it should work). After this, no Docker or DSM system I/O ever touches a spinning disk. Results: the DSM VM now boots in about 15 seconds, and Docker, which used to take a minute or more to start and launch all the containers, now takes about 5 seconds.
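The end state of that procedure can also be checked programmatically: md0 should list only the intended disks as active and the others as spares. Below is a minimal Python 3 sketch that parses /proc/mdstat text in the format shown above. It is written for active arrays like the ones in this post and is not a full parser of every mdstat variant; whether a Python 3 interpreter is available on your DSM install is an assumption, and copying /proc/mdstat off the box to parse it elsewhere works just as well.

```python
import re

# An array line, e.g. "md0 : active raid1 sdd1[2](S) sdc1[3](S) sda1[0] sdb1[1]".
# An optional "(auto-read-only)"-style note after the state is skipped.
ARRAY_LINE = re.compile(r"^(md\d+)\s*:\s*(\w+)(?:\s+\([^)]*\))?\s+(\S+)\s+(.*)$")
# A member token: device[slot] with an optional one-letter flag, (S) spare or (F) faulty.
MEMBER = re.compile(r"^([\w.-]+)\[(\d+)\](?:\((\w)\))?$")


def parse_mdstat(text: str) -> dict:
    """Summarise md arrays: state, level, and active, spare and faulty members."""
    arrays = {}
    for raw in text.splitlines():
        m = ARRAY_LINE.match(raw.strip())
        if not m:
            continue  # Personalities, block-count and "unused devices" lines
        name, state, level, rest = m.groups()
        info = {"state": state, "level": level, "active": [], "spare": [], "faulty": []}
        for token in rest.split():
            mm = MEMBER.match(token)
            if not mm:
                break  # past the member list (e.g. a block count on the same line)
            device, _slot, flag = mm.groups()
            if flag == "S":
                info["spare"].append(device)
            elif flag == "F":
                info["faulty"].append(device)
            else:
                # Other flags, such as (W) write-mostly, are treated as active here.
                info["active"].append(device)
        arrays[name] = info
    return arrays


if __name__ == "__main__":
    try:
        with open("/proc/mdstat") as f:
            text = f.read()
    except OSError:
        text = ""  # no md driver here, e.g. not a Linux/DSM host
    for name, info in parse_mdstat(text).items():
        print(f"{name} ({info['level']}): active={info['active']} "
              f"spare={info['spare']} faulty={info['faulty']}")
```

After the procedure above, md0 would be expected to report sda1 and sdb1 as active and sdc1/sdd1 as spares.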
Copying to/from the NVMe volume maxes out the 10GbE interface (about 1 gigabyte per second) without filling the DSM system cache; the NVMe disks can sustain that write rate indefinitely. This is some serious performance, and a system configuration only possible because of XPEnology! Just to point out what is possible with Jun's boot loader: I was able to move DSM directly from bare metal to ESXi, without reinstalling, by passing the SATA controller and the 10GbE NIC through to the VM. I was also able to switch back and forth between USB boot, using the bare-metal bootloader menu option, and the ESXi boot image, using the ESXi bootloader menu option. Without the correct VM settings this will result in hangs, crashes and corruption, but it can be done. I did have to shrink /volume1 to move it to the NVMe drives (because some space was lost by virtualizing them), but I ultimately retained all aspects of the system configuration and many months of btrfs snapshots when converting from bare metal to ESXi. For those contemplating such a conversion, it helps to have a mirror copy to fall back on, because it took many iterations to learn the ideal ESXi configuration.
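The "10GbE is about 1 gigabyte per second" figure follows from dividing the line rate by 8 bits per byte. The gap between the raw value and the observed ~1 GB/s is assumed here to be Ethernet/IP/file-protocol overhead rather than a disk limit:

$$
\frac{10\ \text{Gbit/s}}{8\ \text{bit/byte}} = 1.25\ \text{GB/s},
\qquad
\frac{1\ \text{GB/s}}{1.25\ \text{GB/s}} = 0.8
$$

So a sustained ~1 GB/s corresponds to roughly 80% of the raw 10GbE line rate.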

-

Hi all, here is a guide to updating the boot loader to Jun's bootloader v1.02b, based on a Synology DS3615xs and VMware ESXi 6.5. It upgrades Jun's bootloader from v1.01 to v1.02b so that DSM 6.1.x (in this case 6.1.5-15254 Update 1) can be installed.

1. First shut down DSM and back up the affected VM running DSM/XPEnology from the ESXi datastore to a local hard disk, so that you have a backup in case of problems.
2. The backed-up VM folder contains a synoboot.img. Extract this file and check in its "grub.cfg" (synoboot\boot\grub\grub.cfg) which settings are currently stored. This section is relevant: `set vid=0x058f` `set pid=0x6387` `set sn=B3Lxxxxx` (your serial number!) `set mac1=011xxxxxxx` (your MAC address!) `set rootdev=/dev/md0` `set netif_num=1` `set extra_args_3615=''`
3. Download the current version of Jun's bootloader (synoboot.img, currently v1.02b) for the DS3615xs here.
4. Mount the downloaded synoboot.img with OSFMount. When mounting, make sure the option "Mount All Volumes" is checked and "Read Only drive" is not selected.
5. Then navigate to the mounted drive in Windows Explorer. In the "grub.cfg" of the mounted synoboot.img (\boot\grub\grub.cfg), copy over the information (MAC address, serial number, etc.) from the old "grub.cfg", overwriting the existing values, and save the changes. Then unmount the image in OSFMount (Dismount All & Exit).
6. Delete the old "synoboot.iso" file on the VMware ESXi datastore and replace it with (upload) the current synoboot.img.
7. Start the VM with DSM/XPEnology on ESXi again. Important: in the VMware console, immediately after starting, select "Jun's Bootloader for ESXi" with the keyboard and confirm with ENTER.
8. In the web browser, open "find.synology.com". Synology Web Assistant now searches for the DSM and shows its status as "Migratable". Click "Connect" to connect to the DSM. It now says: "We have detected that the hard disks of your current DS3615xs were removed from a previous DS3615xs. Before you continue, you need to install a newer DSM."
9. You can now add the .pat file (DSM firmware) and install it manually, or let the latest DSM version be installed automatically from the Synology server (online). I recommend the manual installation, since there is no guarantee that the latest DSM version from the Synology server will work with your XPEnology boot loader. I got this installation working with DSM version "6.1.5-15254 Update 1". You can download this version here: DSM_DS3615xs_15254.pat
10. Now click "Install" and then select "Migration: Keep my files and most of the settings". Click "Next" and "Install now".
11. DSM is now installed on the new Jun's boot loader (this takes about 10 minutes).
12. After the installation, log in to DSM and change the DHCP-assigned IP address back as desired.

Done.
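Steps 2 and 5 boil down to copying a handful of `set key=value` lines from the old grub.cfg into the new one. Below is a minimal Python 3 sketch of that copy, assuming both grub.cfg files are already available as plain text files (for example extracted, or mounted with OSFMount). The key list mirrors the excerpt in step 2; the script name and paths in the usage comment are hypothetical.

```python
import re
import sys

# Keys listed in the grub.cfg excerpt from step 2 of the guide.
CARRY_KEYS = ("vid", "pid", "sn", "mac1", "rootdev", "netif_num", "extra_args_3615")
# Matches lines such as "set sn=B3Lxxxxx" (leading indentation allowed).
SET_LINE = re.compile(r"^(\s*)set\s+(\w+)=(.*)$")


def read_settings(cfg_text: str, keys=CARRY_KEYS) -> dict:
    """Return the first value found for each wanted key in the old grub.cfg."""
    found = {}
    for line in cfg_text.splitlines():
        m = SET_LINE.match(line)
        if m and m.group(2) in keys and m.group(2) not in found:
            found[m.group(2)] = m.group(3).strip()
    return found


def apply_settings(cfg_text: str, settings: dict) -> str:
    """Rewrite matching `set key=...` lines in the new grub.cfg, keeping everything else."""
    out = []
    for line in cfg_text.splitlines(keepends=True):
        body = line.rstrip("\r\n")
        ending = line[len(body):]  # preserve LF or CRLF line endings
        m = SET_LINE.match(body)
        if m and m.group(2) in settings:
            indent, key = m.group(1), m.group(2)
            line = f"{indent}set {key}={settings[key]}{ending}"
        out.append(line)
    return "".join(out)


if __name__ == "__main__":
    # Hypothetical usage: python carry_grub.py old/grub.cfg new/grub.cfg
    if len(sys.argv) == 3:
        old_path, new_path = sys.argv[1], sys.argv[2]
        with open(old_path, encoding="utf-8") as f:
            settings = read_settings(f.read())
        with open(new_path, encoding="utf-8", newline="") as f:
            new_text = f.read()
        with open(new_path, "w", encoding="utf-8", newline="") as f:
            f.write(apply_settings(new_text, settings))
        print("carried over:", ", ".join(sorted(settings)))
```

Keys that are missing from the new grub.cfg are not added; the sketch only overwrites lines that already exist, which matches the "overwrite" wording in step 5.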

-

(Sorry for the long post; I'd like someone to explain virtual machines to a newbie.) I originally ran XPEnology on bare metal in an HP MicroServer Gen8 (stock Celeron, 4+8 GB RAM). My first couple of RAID arrays were ext4, set up by XPEnology itself, and I tried setting up an SSD read cache. I didn't experiment with btrfs. Overall everything looked quite reliable: the loader was on an SD card and booted from it, and if anything went wrong, the card could be replaced and the same loader written to a new one. XPEnology itself was effectively spread across all the HDDs, so if one of them failed, even the installed packages kept working after the fault was fixed. I recently replaced the processor with a Xeon 1270v2. With the new processor installed, I decided to broaden my horizons and move to ESXi. HP even has a custom image for it. It installed fine on the SD card, which the system boots from. Not right away, but everything I wanted to set up worked out: XPEnology is running, with a couple of Debian VMs next to it. I more or less figured out RDM and thick virtual disks. I have some doubts about HDD performance, but it isn't critical for my current needs. USB 3.0 doesn't work, which is a letdown, but I don't use it often, so I'm ignoring it for now (although when I do need it, I'd of course like it to copy faster). So here's my question: I understand the community says ESXi, unlike Proxmox, is better suited to installation on SD cards. Still, the card will die, if not in a month then certainly within six months. What happens then? Is there some way to take images of this card so I can restore everything "as it was" if something goes wrong? Someone will say I could build a RAID for ESXi, but in my case that's too wasteful. So I keep thinking. There's Virtual Machine Manager, which Synology positions as a hypervisor. From what I've read, it has problems with dynamic allocation of RAM.
But as a rough estimate: if I set this up on RAID1 with btrfs on the current hardware (3.5 GHz, 4 cores, 8 threads, 12 GB RAM) and ran 3-4 Linux VMs for home experiments, there would be enough resources, right? (And each of them would get its own IP address from the router? Docker doesn't seem to give me enough freedom.) Overall I'm leaning towards XPEnology on bare metal, but I'm unsure because my knowledge is superficial. Thanks for your attention.

-

Hi, I'm new to XPEnology and trying it out on an Intel D2700DC mini-ITX mainboard with an Atom D2700 processor and NM10 chipset. From what I've read on the forum, the Atom and the chipset should work. However, when booting the DS3615xs image, it seems to get stuck at: "Screen will stop updating shortly, please open http://find.synology.com to continue." I've left it for an hour or so and nothing happens, and there is no network activity either. The same USB stick works fine on another machine. Notably, this board does not have a serial port, and the next message that should appear is "early console in...". Could this be related? I think that without a serial port I'm also not able to see any kernel messages, right?

-

Hello folks, I have searched the forum and the internet and can't find information on how to do this. There are some tutorials for doing it without ESXi, but not with it. Currently I don't have enough space on other disks to back everything up and do a fresh install, so I would like to update without losing any data. Has anyone already done it? As I understand it, I should select Jun's mod 1.02b vmdk file and start it as the primary drive. But will it update or overwrite the current XPEnology? Thx, David

-

Dear all, I installed my XPEnology following the guides and files from pigr8: https://xpenology.com/forum/topic/7667-how-to-clean-install-in-vm-using-102b/?do=findComment&comment=74129 All went fine until the first reboot. After the reboot I lost the RAID group and volume. When XPEnology boots without the RAID group and volume, I can easily build the RAID group again and create a volume, and it works again until the first reboot (normally, any data on it is lost). The RAID group and volume created on the VMware virtual disk don't disappear after a restart. Disk configuration:

- Hard disk 1: 8 GB, thin provisioned, SCSI controller 0, SCSI (0:0), Dependent
- Hard disk 3: 3 TB, thick provisioned, lazily zeroed, SCSI controller 0, SCSI (0:1), Independent - persistent
- Hard disk 4: 3 TB, thick provisioned, lazily zeroed, SCSI controller 0, SCSI (0:2), Independent - persistent

System: HP N54L, 16 GB RAM, 250 GB HDD + 2x 3 TB HDD in RAW mode, mapped via RDM. Loader: 1.02b DS3615xs, DSM 6.1.4-15217 Update 1. I hope someone has an idea how to solve this; I searched the forum and wasn't able to find a solution. Thank you! Alex_G

-

Turned my trusty HP N54L off over the Christmas period for a few days when I wasn't using it. Came home, booted it, and ESXi won't boot. While trying to boot, it gives: "error loading /s.v00 fatal error: 6 (Buffer too small)". This sounded to me like a RAM issue. 2 GB of RAM is successfully detected at boot. When I initially installed ESXi years ago, I followed a guide to hack the ESXi install to allow 2 GB of RAM. I took the RAM out, gave it a technical blow, and put it back into the other RAM slot. Rebooted: same error. Again, 2 GB of RAM is successfully detected. Any suggestions on how to troubleshoot further?

-

Hi all, I'm new to ESXi and XPEnology and maybe someone here can help me with my problem: none of my hard disks are visible in XPEnology's Storage Manager. The SATA controller is set up as passthrough in ESXi 6.5. This is my setup:

- 2x Xeon L5640 @ 2.27 GHz
- 64 GB 1333 MHz RAM
- 2x 250 GB SanDisk NGFF SSD
- LSI SAS9201-16i 16-port SAS/SATA controller (the card is set to passthrough in ESXi)
- Software: ESXi 6.1 Update-1, XPEnology 6.1 DS3615xs 6.1, Jun's Mod V1.02-alpha

Is there something I have to do to make XPEnology recognise all the hard disks connected to the SATA controller? Could it be driver related? I have read that my 9201-16i controller is compatible: http://xpenology.me/compatible/ Any help on this is greatly appreciated. Thanks in advance!

-

Hello, I'm in a VMware ESXi 6.5 environment and I'm wondering: if I set up a 3615xs VM and assign it 4 vCPUs, will all 4 be used? Or do I have to go with a 3617xs VM, given that the 3617xs is a model with a 4-core processor, unlike the 3615xs, which has a 2-core processor? mog

-

Hi,

- I have a DVB-Sat card in my system.
- Until now I have used ESXi on my system with 2 VMs: 1. XPEnology, 2. VDR server.
- With the latest DSM we have a QEMU-based VM manager inside Synology.

My idea is to stop my ESXi project and use XPEnology on bare metal with the built-in Synology VM. The project stops at the point that Synology has not enabled passthrough. But given that they use QEMU/libvirt, it should be possible to mod the VM config files. Has anybody tried to modify the settings of GUI-generated virtual machines by editing the config files directly? I have checked whether IOMMU is working (yes, it works): `dmesg | grep -e DMAR -e IOMMU` Running services: `/var/packages/Virtualization/target/usr/local/bin/libvirtd -d --listen --config /etc/libvirt/libvirtd.conf` and `/usr/local/bin/qemu-system-x86_64 -name fabf0876-7580-............................` So I checked on the console: `qemu-system-x86_64 --h` Does anybody know where the config parameters are stored and how to modify/expand them? Best regards, Synologix

-

I'm running ESXi 6.5u1 on two HP MicroServers. On both I additionally created a vSwitch without a NIC, with an MTU of 9000. On each ESXi host I run a DS3617xs 1.02b with the following configuration:

- NIC1 is on vSwitch0 (with a physical NIC, MTU 1500)
- NIC2 is on vSwitch1 (without a physical NIC, MTU 9000)

On one of the two hosts, after switching NIC2 to MTU 9000, DSM is no longer reachable over any interface, as if the MTU had been switched to 9000 globally. On the other host, interestingly, everything works without problems. In both cases this is a former DS3615xs 1.01 installation that was migrated to DS3617xs with "keep data". I simply took the img, adjusted grub.cfg, converted it to an ESXi server VMDK with StarWind V2V Image Converter, uploaded it to the datastore, and then swapped the boot disk in the VM configuration. To bring the DS3617xs back to life, I took the old DS3615xs boot disk again, booted it and migrated again. Then I stopped the VM, switched back to the DS3617xs boot disk and migrated once more. In other words: I'm back to where I was before the MTU 9000 change. Does anyone have an idea why changing NIC2 to MTU 9000 also affected the MTU of NIC1?
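To check whether changing NIC2 really touched NIC1, one could compare the per-interface MTUs inside DSM before and after the change (where DSM is still reachable). Below is a minimal Python 3 sketch, not from the original post: it reads the standard Linux sysfs files `/sys/class/net/<interface>/mtu`. The interface names DSM uses (eth0, eth1, ...) and the availability of a Python 3 interpreter on the box are assumptions.

```python
import os


def interface_mtus(sys_net: str = "/sys/class/net") -> dict:
    """Map each network interface name to its current MTU, read from sysfs."""
    mtus = {}
    if not os.path.isdir(sys_net):
        return mtus  # not a Linux host, or sysfs not mounted
    for name in sorted(os.listdir(sys_net)):
        try:
            with open(os.path.join(sys_net, name, "mtu")) as f:
                mtus[name] = int(f.read().strip())
        except (OSError, ValueError):
            continue  # entry without a readable numeric mtu file
    return mtus


if __name__ == "__main__":
    for name, mtu in interface_mtus().items():
        print(f"{name}: {mtu}")
```

If the interface attached to vSwitch0 also reports 9000 after the change, the setting really was applied to both NICs rather than only to NIC2.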

-

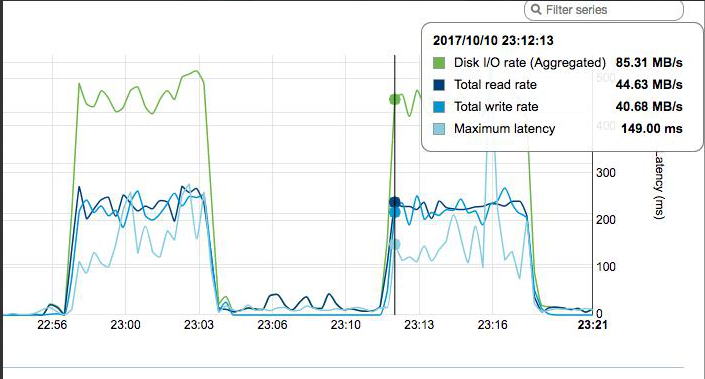

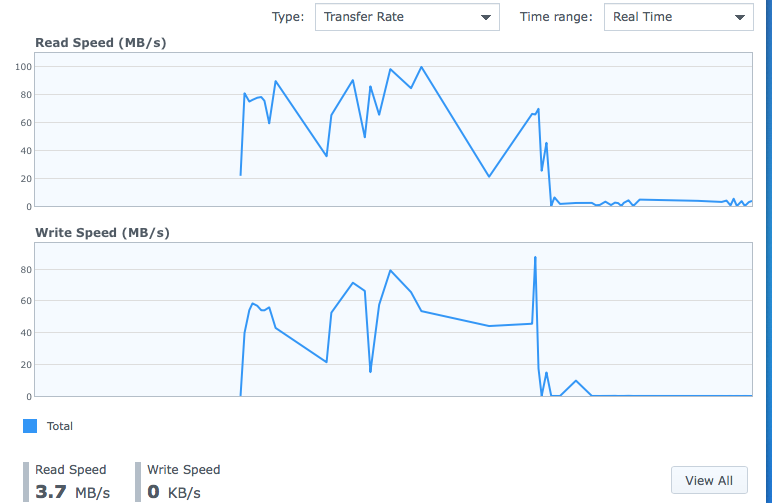

Hi all. I've run into a problem playing movies in Video Station. DSM is installed on ESXi 6.0. The server is a Supermicro with an Adaptec RAID controller, and a WD Green 3 TB disk is used simply as a volume. There are enough resources. The disks are attached to DSM as VMDKs; I can't afford full passthrough because other VMs live on the host too. I watch movies from an iPad and an Android TV box. I ran DSM 5.2 for quite a while, but the cover art broke, so I updated to 6.0, and for a while everything was fine there too. About a month ago I started noticing lag (slow to open, seeking is practically impossible, some videos don't play at all). The only thing I had enabled was automatic package updates, so Video Station got updated along with them. First I suspected the hard drive; I checked it and it's OK. Next I tried installing older versions of Video Station. I tried enabling Video Conversion for Mobile Devices: it loads the CPU but doesn't help. I updated to 6.1; nothing changed. The strange behaviour itself is this: at first it loads at 80 Mbit/s, then after about 5 minutes it drops to a rate equal to the bitrate (e.g. 20 Mbit/s). Oddly, there is load on both reads and writes. If I stop the movie and start playing it again, the pattern repeats. Here is the screenshot: I started playing at 22:56, played until 23:12, then started again. If I watch the same movie via DLNA or SMB, everything flies. But Video Station is so damn convenient that I don't want to give it up. Thanks for any advice. UPD: it turned out this happens only with MKV files.

-

I have one ITX box and two SO-DIMM RAM modules on a J3455, but the J3455's graphics don't support passthrough in ESXi. I'm searching for an ITX board and graphics card with these features:

1. ITX board with 2 SO-DIMM slots, 4 SATA ports, 1 PCIe x16 slot, VT-d support, and support for USB and SATA passthrough on ESXi
2. A graphics card that supports passthrough on ESXi

I'm not sure whether the ASRock H110M-ITX can do this, and it has 2 DIMM slots. Is that possible? Thanks.

- 12 replies

Tagged with: pass-through, htpc

-

Hello, I have a nice N54L running 6.0.2 on bare metal and I'm wondering if I should migrate to the ESXi hypervisor. I don't know much about ESXi, but would it be possible to make XPEnology "see" an Intel processor instead of an AMD one? (That would make the 6.1.3 migration easier.) Is there anything special to know about the migration? Is it even possible without wiping all data? (I have 4x 4 TB in AHCI RAID 5.) Regards

- 1 reply

Tagged with: esxi, bare metal

-

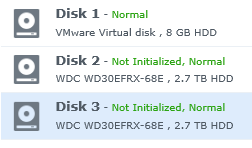

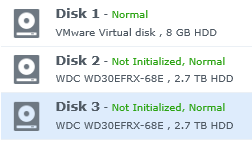

Hello, I installed XPEnology 6.1.3 with bootloader 1.03b on ESXi 6.5. I want to attach a physical disk to XPEnology, so I mapped the disk as RDM. First I set up the disk like this, but I ended up in a DSM install loop. So I made an 8 GB virtual disk on my SSD and attached it like this. Then the installation went fine, but when I created a RAID group and volume and rebooted my VM, all the RAID groups and volumes were gone. I've been dealing with this problem for around 12 hours, but I don't know what the problem is... Please help me.

-

Hello all, unfortunately I was unable to install 1.02b on ESXi 6.0 Update 3 by following the various guides throughout the forum. So after a few hours of testing, I wrote myself a guide for future use. I tested these scenarios:

1. DS3615 bare metal with a basic disk -> VM
2. DS3615 bare metal with a RAID 1 disk -> VM
3. DS3617 bare metal with a basic disk -> VM
4. DS3617 bare metal with a RAID 1 disk -> VM
5. VMs back to bare metal, with both basic and RAID 1 disks, without losing any data

My personal guide is attached as a PDF: Install on ESXi 6.0U3.pdf

-

I'm running ESXi 6.5 on an HP ProLiant MicroServer Gen8. I successfully got the latest XPEnology running on a new VM and attached a couple of virtual disks to it for testing before transferring my data. Everything looked fine until I tried to mount the USB disk that will store my MacBook's Time Machine backups. The same disk can be mounted on my Windows Server VM, but not on the XPEnology one. I even tried to mount it manually via SSH, without any luck. I installed VMware Tools, but again nothing. I tried both USB 2 and USB 3 ports. What else would you advise me to try in order to mount that disk?

-

Hi, did anybody manage to start a VM from Synology Virtual Machine Manager? I'm on Jun's 1.02b @ DSM 6.1.3-15152 Update 4. I tried it with an N54L and with a custom Intel Xeon build (bare metal). On both machines I couldn't start any VM I created. On the N54L the message is "not enough memory" (I have 8 GB installed); on the Xeon build it only says that starting the VM failed. It would be soooo awesome to get this working. Any information about that would be appreciated. TIA, the Wurst

-

Hey guys, I hope somebody can help me, because I've already tried nearly everything. I'm running XPEnology 6.1 Update 4 with Jun's loader 1.02b in an ESXi 6.0 VM. The ESXi host is an HP MicroServer Gen8 and I'm already using the hpvsa-5.5.0-88OEM driver. I've created 3 basic volumes, each on a separate disk. If I move a file from one disk to another in the DSM interface, the transfer is really quick (more than 100 MB/s). If I write a file to one of the shares from a Windows VM on the same ESXi host, it is also quick (about 70 MB/s). But if I read a file from the SMB share, it starts at 6-7 MB/s and gets a bit faster, but never more than 20 MB/s. I have created a new XPE VM, changed the VM's network card, and given the VMs more RAM, but nothing helped. Does anyone have an idea why it works perfectly in one direction but so badly in the other? I've found some threads where people wrote that it works nicely on a bare-metal installation. But I really need the Windows VM on that machine, and I also like ESXi very much.

- 15 replies

Tagged with: esxi, performance

-

Hi everyone, I am a little bit lost reading all the forums and tutorials, where people take some assumptions for granted and don't bother to explain the basics. So first let me explain where I am right now and where I've come from. First I was considering buying a NAS device from a shop.

#SYNOLOGY At first, I was considering buying a Synology NAS. What I liked about it was the simplicity of DSM, the flexibility of SHR, the idea of a private cloud available from everywhere, and the mobile apps that support it. I was considering a 4+ bay NAS, as anything smaller seems like a huge sacrifice in terms of the number of drives used for redundancy compared to the number of drives available for use. What I didn't like was the price tag on those multi-bay devices, and the fact that to utilise the power of multiple HDDs in a RAID you would have to use 2-4 aggregated wired network connections, which would require further investment in a special network switch and infrastructure, a special card for the PC, and the whole multi-wire absurdity all over the place. This is when I started to consider another brand.

#QNAP I liked it especially for the idea of adding DAS functionality on top of NAS functionality similar to Synology's. Unfortunately, the USB solution provided in some "cheaper" devices is limited to 100 MB/s transfers, so there is no improvement over a single 1 Gb/s network connection. They also offer 10 Gb/s network cards in higher-spec models, but that would also require a huge investment in a network card, not to mention the lack of such connectivity on a laptop, a Mac in particular, which already has a Thunderbolt 2 port instead, the same as some QNAP models. This solution is really tempting, as some people reported performance better than a standard SSD, reaching 1900 MB/s read and 900 MB/s write, but the price tag on those models (2-3 thousand for an empty case) is not acceptable.
#unRAID Then I discovered this Linus video https://www.youtube.com/watch?v=dpXhSrhmUXo and I loved the idea from the beginning, as it seems to solve the performance bottleneck problem: everything sits under the same hood, and it lets you put money where it is really needed, into better and bigger hard disks and into maximising the storage and horsepower of the machine (GPU, RAM, etc.). So this is where I am right now: I've bought better hard disks, 6x 3 TB HGST 7200 RPM, and a powerful new gaming rig for less than the cheapest QNAP with a Thunderbolt adapter. I've followed the setup ... and this is where my doubts showed up, and I started to look for alternatives to unRAID and to consider XPE. And here is why. Unlike Linus in the YouTube video, I chose a new hardware setup based on AMD: Ryzen + Vega GPU. The first problem is that Ryzen, unlike Intel processors, doesn't give you a second video output, even though the motherboard is equipped with an HDMI output. It might become possible in the future when new Ryzen versions are released. I might have to add the cheapest extra video card to solve that problem, but my attempt to work with just one video card has failed so far, even though such an option seems to be available. Possible poor performance symptoms: right now I am on the second day of waiting for the Parity-Sync to finish, and it has covered only 77% of the space (2.3 TB out of 3 TB in 1 day 18 hours 18 minutes). It was promising at the beginning, as a single drive was showing above 150 MB/s read/write speed, the combined read speed from all 5 drives was 750 MB/s, and the predicted time to finish was just 4 hours.
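To put the Parity-Sync figures in numbers, here is a small Python sketch of the arithmetic, assuming decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes). With the figures above (2.3 TB of 3 TB after 1 day 18 hours 18 minutes), the average so far works out to roughly 15 MB/s, about a tenth of the 150 MB/s measured for a single drive, and the remaining 0.7 TB would take close to 13 more hours at that pace.

```python
from datetime import timedelta


def average_rate_mb_s(done_bytes: float, elapsed: timedelta) -> float:
    """Average throughput in MB/s (decimal megabytes, 10**6 bytes)."""
    return done_bytes / elapsed.total_seconds() / 1e6


def remaining_time(total_bytes: float, done_bytes: float, rate_mb_s: float) -> timedelta:
    """Time left if the average rate so far stays constant."""
    return timedelta(seconds=(total_bytes - done_bytes) / (rate_mb_s * 1e6))


if __name__ == "__main__":
    # Figures from the post; decimal TB assumed.
    elapsed = timedelta(days=1, hours=18, minutes=18)
    rate = average_rate_mb_s(2.3e12, elapsed)
    print(f"average so far: {rate:.1f} MB/s")
    print("time left at that rate:", remaining_time(3e12, 2.3e12, rate))
    # For comparison: one full 3 TB pass at the 150 MB/s seen for a single drive.
    print("3 TB at 150 MB/s:", timedelta(seconds=3e12 / 150e6))
```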
#XPE So I can see that some people, like me right now, are considering going the same way from unRAID to XPE (https://xpenology.com/forum/topic/5270-reliability-and-performance-vs-unraid/), but at the same time others are considering going the opposite way (https://xpenology.com/forum/topic/3591-xpenology-vs-unraid/). So I do have doubts about performance, reliability, and the ability to access the NAS from outside: as I understand it, Synology is trying to block some functionality, so special network card hacks are required, and the old website linked a few months ago in one of those posts (https://myxpenology.com) is already gone, which doesn't sound promising, and so on.

#VM On top of this, I don't quite understand the virtualisation approach here. As I understand it, I can install the XPE bootloader, which would allow me to install DSM as the first OS, right? Then, like on Synology (and unRAID), I can create a VM running, let's say, Windows 10, right? But I've not seen anything like the Linus video done with XPE yet, so I'm not sure whether it would be possible at all to set this up as a gaming rig on top of XPE+DSM the same way as with unRAID. And if so, would the performance be the same as or similar to unRAID? There also seems to be another installation path: installing XPE in a VM. So this would be running on Windows already, right? That would be XPE+DSM running on top of a native Windows OS. But is it possible to utilise all the functionality and performance of a native NAS system in this case? Windows would have to be running all the time to keep the server available, I'm not sure how the performance of each drive would be affected, and I would have to set aside one drive just to run Windows instead of having the full array available, with Windows virtualised on it. I wasn't sure, but it seems XPE also doesn't require any RAID hardware, the same as unRAID, right?
I had some doubts, as AMD motherboards support only RAID 0, 1 and 10 as far as I remember, whereas I would like to go with RAID 5 or 6, or an SHR equivalent if possible. Any comments and answers would be appreciated, especially on the subject of virtualisation.

- 3 replies

Tagged with: unraid, perfromance

-

I'm out of ideas on how to debug this, but perhaps someone else knows what's going on. This is probably not an XPEnology-specific issue, but I'm sure others here have experience using the LSI 9211-8i and ESXi together. I recently did a new build for my NAS with the following hardware:

- Ryzen 5 1600
- ASRock B350 Pro 4 motherboard
- WD Green M.2 SATA SSD (used as VM datastore only)
- LSI 9211-8i SAS controller

I am running ESXi 6.5u1 on this setup, which was perfectly stable until I added the LSI 9211-8i SAS controller; since adding this card, the system crashes if left idle for more than 30 minutes. Timeline of events:

- Initially used an LSI 3081E-R controller in passthrough mode (with test drives, since I didn't realise >2 TB drives were not supported when I picked up the card for $30).
- Online: several weeks without issue until my LSI 9211-8i controller arrived.
- Added the LSI 9211-8i controller to the DSM VM in passthrough mode with 4x 4 TB WD Red HDDs. Ran a parity check on the new SHR array, which took about 8 hours.
- Offline: less than an hour after the parity check completed, the system hard-locked. Thought it might be a one-off incident, so I rebooted the system and ran another parity check to make sure everything was OK.
- Offline: again, less than an hour after the parity check completed, the system hard-locked.
- Updated the firmware on the LSI 9211-8i to v20 in IT mode. Thinking the problem was solved since I was now using ESXi-supported firmware, I started a data transfer task of 8 TB; this took about 18 hours and the system stayed online the entire time without a hitch. Once the transfer finished, feeling pretty confident the firmware upgrade had worked since the system had been online for so long, I spent a few hours running benchmarks and setting things up. The system was online for over 24 hours.
- Offline: an hour or so after I stopped 'doing stuff' with the system, it hard-locked again.
- Thinking the issue might be IOMMU passthrough support, I disabled passthrough mode, installed the official driver for the LSI 9211-8i from the VMware site and mapped the disks into DSM using ESXi raw disk mappings (RDM).
- Offline: an hour or so after I stopped 'doing stuff' with the system, it hard-locked again.
- Realised it only crashes when the system is 'idle'. Looked into power-saving modes and disabled "C-State" and "Cool 'n' Quiet" in the host BIOS. Also disabled all power-saving features in ESXi.
- Offline: an hour or so after I stopped 'doing stuff' with the system, it hard-locked again.
- This morning I hooked up a screen to the host to see whether there was a PSOD or any message before it locks. There was not, just a black screen. The system did not respond to a short press of the power button or to the keyboard.
- Offline: less than 40 minutes after sitting idle from boot, it hard-locked again.

In addition to the steps above, I have tried to find a reason for the lockups in the ESXi logs, but there seems to be nothing: one minute it's working fine, then nothing until it boots up again after I power-cycle the system. There are no core dumps, and each time nothing was displayed on the host's screen. It just seems odd that the system would crash only when sitting idle; it would make more sense if it crashed under load. These issues only started once I added the LSI 9211-8i to the mix; the LSI 3081E-R did not cause them. Do I just have a dodgy card? I don't want to buy another LSI 9211-8i if I'm going to have the same issues. Is there another card I should get instead? Are there any other settings I should try to make this system stable?

-

Greetings everyone... I was hoping someone might know where I can find Jun's most up-to-date loader files (1.02b is the most current at the time of writing) for VMware Workstation. I am currently using 1.02a, and I'm concerned about doing the current DSM update without the newest loader files. Unfortunately, all I can find are ISO and IMG files, which aren't used for Workstation. Does anyone have a link or something they could share, so that I can safely update my rig? Thanks in advance! Tom

-

Hello, I'd like to know how to create a VM on VMware starting from the .img loader, either with this loader or with this one. Thanks in advance.