Search the Community

Showing results for 'Supermicro x7spa'.

-

Peter, my Supermicro board uses the X540-T2 and I have no problems with your loader. I'm curious: I wonder whether the fellow I wrote to above this comment, who gets "No disks found", has the same problem I did, where the incorrect ixgbe module was preventing the mpt3 module from loading properly.

-

On AMD, I think only one model can be installed; at least, that is the only one I managed to install right away. Here is what I installed on AMD: - Result: Successful (DS2422+) - Loader version and model: arpl-latest - DSM version: DSM 7.1.1-42962 Update 1 - Hardware: Bare Metal, Supermicro H11SSL-i, AMD EPYC 7551P, 128 GB ECC RAM, Kingston 2400. I tried installing the 3622+ and it did not come up; the 2422+ came up immediately without problems. Installation: start the loader, choose the model, generate the serial number, build the loader and boot. That is ALL. I am unlikely to treat this configuration as permanent; I was simply curious whether it would work. Spending 32 cores and 128 GB of memory on a NAS seems unreasonable, unless you run a bunch of virtual machines on it. I installed VMM and it sees only 16 of the 32 cores.

-

New build, synology migration and sanity check

Rhubarb replied to Kashiro's topic in The Noob Lounge

Hi Kashiro, sorry, I'm not much help, but I can say that my Xpenology DS3622XS+ is running with an HBA (LSI SAS9300-8i in IT mode, non-RAID) plus a second LSI SAS9300-8i integrated on the Supermicro M'brd X11SSL-CF. My son used Redpill to create the loader, so I can't provide all the details. The system is currently on DSM 7.2-64570 Update 3 and runs 16 x 16TB HDDs, 8 on each HBA, with a separate volume on each HBA. The CPU is a Xeon E3-1245v6. I'm not using any SATA ports on the M'brd. The system is mainly used as a Plex server.

-

Hi guys, today I'm trying to install an Xpenology NAS on VMware. The VMware server is ESXi 6.7 U3 and the motherboard is a Supermicro x9sri-f-0. There is a 4-port SCU controller on this board, which I pass through to VM guests. I tried it in Windows 10 and Ubuntu 20: the SCU controller shows up in both systems and the 4 disks can be used. In the Xpenology NAS, however, the DSM installation succeeds but the SCU controller does not show up.

The loader is TinyCore RedPill, downloaded from https://github.com/pocopico/tinycore-redpill, and I followed the instructions. The DiskStation model is DS3622xs+ and the DSM version is 7.1.1.

I think the passthrough to the Xpenology VM is working, because if I boot into "tinycore image build" and run fdisk -l, I see the 4 disks. If I run "satamap", the output shows:

Found "02:01.0 Vmware SATA AHCI controller" 30Ports/1 drives. Mapping SATABOOT drive after max disks.
Found "02:02.0 Vmware SATA AHCI controller" 30Ports/1 drives. Default 8 virtual ports for typical system compatibility.
Found SCSI/HBA" 03:00.0 Intel Corporation C602 chipset 4-Port SATA Storage Control Unit(rev 06)" (4 drives)

I tried playing with SataPortMap and DiskIdxMap, but I couldn't get these disks to show in DSM. I also connected to DSM over ssh and ran lspci, and found a PCI passthrough device without a driver. So I'm wondering: do I need an SCU driver for DSM to show the disks? I had to install the driver for Windows 10, while Ubuntu seems to support it by default. How about DSM 7.1.1? Does it have the driver?

BTW, I also have two general questions about using the TCRP loader.

1. After modifying user_config.json, do I need to rebuild the loader for the change to take effect? I rebuilt it every time and just want to confirm that this is required.
2. After rebuilding the loader, do I need to reinstall DSM for the changes to take effect? I guess it shouldn't be necessary, but I still reset DSM and reinstalled. No luck getting the disks to show, however.

Thanks
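For repeated experiments with SataPortMap and DiskIdxMap like the ones described above, the edit to user_config.json can be scripted. The following is only a minimal sketch: it assumes (the post does not confirm this) that both keys sit under an "extra_cmdline" object in the file, and the values "18" and "1000" are placeholders, not a recommendation for this hardware. It does not answer whether a rebuild is needed afterwards.

```python
import json


def set_port_maps(config_text: str, sata_port_map: str, disk_idx_map: str) -> str:
    """Return JSON text with new SataPortMap / DiskIdxMap values.

    Assumption: both keys live under an "extra_cmdline" object.
    Check your own user_config.json before relying on this layout.
    """
    config = json.loads(config_text)
    # Create the section if it is missing, then overwrite the two keys.
    cmdline = config.setdefault("extra_cmdline", {})
    cmdline["SataPortMap"] = sata_port_map
    cmdline["DiskIdxMap"] = disk_idx_map
    return json.dumps(config, indent=2)


# Hypothetical starting content and placeholder values.
sample = '{"extra_cmdline": {"SataPortMap": "1", "DiskIdxMap": "00"}}'
updated = set_port_maps(sample, "18", "1000")
print(updated)
```

Working on the text rather than the file keeps the sketch side-effect free; writing the result back to disk is left to the reader.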

-

System is Supermicro M'brd X11SSL-CF including 8xSAS (Storage Pool 1, volume 1); Kaby Lake Xeon E3-1245v6; second 8 port SAS controller in PCIe slot (Storage Pool 2, volume 2); two GbE ports. Both Storage Pools are completely populated with 16TB HDDs (14 x Exos 16; 2x IronWolf). All files/folders are BTRFS. DSM 7.2-64570 Update 1. Trying to Install Container Manager yesterday caused the install to 'hang' - nothing happened for several hours and unable to cancel/end the install, I tried to force shutdown/restart the system with damaging consequences. Now no applications are working; the system is not showing up in Synology Assistant and it was not possible to get a login until we ran the command "syno-ready" via a "Putty" session to the system. We've managed to reboot the system; and using "PUTTY", we've managed to mount the disks: Storage Pools/volumes (appear intact and can be read). Some screen captures are available of what's been achieved, so far. root@dumbo:/dev/mapper# lvdisplay --- Logical volume --- LV Path /dev/vg2/syno_vg_reserved_area LV Name syno_vg_reserved_area VG Name vg2 LV UUID 7In8lA-Tkar-3pbr-4Hl7-aVVH-G3lY-GV905o LV Write Access read/write LV Creation host, time , LV Status available # open 0 LV Size 12.00 MiB Current LE 3 Segments 1 Allocation inherit Read ahead sectors auto - currently set to 1536 Block device 249:0 --- Logical volume --- LV Path /dev/vg2/volume_2 LV Name volume_2 VG Name vg2 LV UUID TuHfCo-Ui9K-VlYg-TzLg-AIQL-eYTu-V5fOVu LV Write Access read/write LV Creation host, time , LV Status available # open 1 LV Size 87.26 TiB Current LE 22873600 Segments 1 Allocation inherit Read ahead sectors auto - currently set to 1536 Block device 249:1 --- Logical volume --- LV Path /dev/vg1/syno_vg_reserved_area LV Name syno_vg_reserved_area VG Name vg1 LV UUID ywtTe5-Nxt7-L3Q3-o9mZ-KGrt-hnfe-3zpE5r LV Write Access read/write LV Creation host, time , LV Status available # open 0 LV Size 12.00 MiB Current LE 3 Segments 1 Allocation inherit Read 
ahead sectors auto - currently set to 1536 Block device 249:2 --- Logical volume --- LV Path /dev/vg1/volume_1 LV Name volume_1 VG Name vg1 LV UUID 2SBxA1-6Nd6-Z9B2-M0lx-PL8x-NFjv-KkxqT3 LV Write Access read/write LV Creation host, time , LV Status available # open 1 LV Size 87.29 TiB Current LE 22882304 Segments 2 Allocation inherit Read ahead sectors auto - currently set to 1536 Block device 249:3 root@dumbo:/dev/mapper# lvm vgscan Reading all physical volumes. This may take a while... Found volume group "vg2" using metadata type lvm2 Found volume group "vg1" using metadata type lvm2 root@dumbo:/dev/mapper# lvm lvscan ACTIVE '/dev/vg2/syno_vg_reserved_area' [12.00 MiB] inherit ACTIVE '/dev/vg2/volume_2' [87.26 TiB] inherit ACTIVE '/dev/vg1/syno_vg_reserved_area' [12.00 MiB] inherit ACTIVE '/dev/vg1/volume_1' [87.29 TiB] inherit root@dumbo:/# cat /proc/mdstat Personalities : [raid1] [raid6] [raid5] [raid4] [raidF1] md3 : active raid6 sdi6[0] sdl6[7] sdj6[6] sdn6[5] sdm6[4] sdp6[3] sdk6[2] sdo6[1] 46871028864 blocks super 1.2 level 6, 64k chunk, algorithm 2 [8/8] [UUUUUUUU] md2 : active raid6 sdi5[0] sdk5[6] sdp5[7] sdm5[8] sdn5[9] sdo5[3] sdl5[11] sdj5[10] 46855172736 blocks super 1.2 level 6, 64k chunk, algorithm 2 [8/8] [UUUUUUUU] md4 : active raid6 sda5[0] sdh5[7] sdg5[6] sdf5[5] sde5[4] sdd5[3] sdc5[2] sdb5[1] 93690928896 blocks super 1.2 level 6, 64k chunk, algorithm 2 [8/8] [UUUUUUUU] md1 : active raid1 sdi2[0] sdh2[12](S) sdc2[13](S) sdb2[14](S) sda2[15](S) sdf2[11] sdd2[10] sde2[9] sdg2[8] sdo2[7] sdm2[6] sdn2[5] sdp2[4] sdk2[3] sdl2[2] sdj2[1] 2097088 blocks [12/12] [UUUUUUUUUUUU] md0 : active raid1 sdi1[0] sda1[12](S) sdb1[13](S) sdc1[14](S) sdh1[15](S) sdf1[11] sdd1[10] sde1[9] sdg1[8] sdo1[7] sdl1[6] sdm1[5] sdn1[4] sdp1[3] sdj1[2] sdk1[1] 2490176 blocks [12/12] [UUUUUUUUUUUU] root@dumbo:/dev/mapper# vgchange -ay 2 logical volume(s) in volume group "vg2" now active 2 logical volume(s) in volume group "vg1" now active root@dumbo:/dev/mapper# mount 
/dev/vg1/volume_1 /volume1 mount: /volume1: /dev/vg1/volume_1 already mounted or mount point busy.

The main question now is how to repair the system so that: a: it appears in Synology Assistant as a functioning DS3622XS+ with the correct IP, status, MAC address, version, model and serial number; b: it functions correctly again, presenting the login prompt, with applications working and the relevant data in the Storage Pools/Volumes/folders and files accessible.

In the absence of workable solutions, we're considering "blowing away" the backed-up data (Storage Pool 2/Volume 2), creating a new system on that volume group, and then copying the data stored in the original Storage Pool 1/Volume 1 back to the new installation.
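When checking output like the /proc/mdstat listing above, the "[n/m] [UUUU]" fields show at a glance whether an array is complete. The sketch below is an illustrative parser, not a Synology tool; it only handles active arrays in the usual mdstat layout, and the sample string is a shortened, partly made-up excerpt.

```python
import re

# One array entry, e.g.
# "md3 : active raid6 sdi6[0] ... [8/8] [UUUUUUUU]"
ARRAY_RE = re.compile(
    r"(md\d+) : (\w+) (\w+) .*?\[(\d+)/(\d+)\] \[([U_]+)\]",
    re.S,  # let ".*?" cross line breaks inside one entry
)


def parse_mdstat(text: str) -> dict:
    """Map each md array name to its level and member status."""
    arrays = {}
    for name, state, level, total, active, flags in ARRAY_RE.findall(text):
        arrays[name] = {
            "state": state,
            "level": level,
            "total": int(total),    # devices the array expects
            "active": int(active),  # devices currently in use
            "degraded": "_" in flags,  # "_" marks a missing member
        }
    return arrays


# Shortened sample: one healthy array and one degraded array.
sample = (
    "md3 : active raid6 sdi6[0] sdl6[7] 46871028864 blocks super 1.2 "
    "level 6, 64k chunk, algorithm 2 [8/8] [UUUUUUUU] "
    "md1 : active raid1 sdd2[1] 2097088 blocks [12/1] [_U__________]"
)
summary = parse_mdstat(sample)
for name, info in summary.items():
    print(name, info["level"], "degraded" if info["degraded"] else "ok")
```

On a live system the text would come from reading /proc/mdstat; inactive arrays are not matched by this pattern and would need separate handling.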

-

Thanks for the reply, dj_nsk. The ARC loader seems to be the most advanced, but I get the same result. When the loader starts I see one red line, but perhaps that is normal? "user requested editing..."

What I tried: I put in a formatted 6 TB disk and the USB stick with a fresh ARC loader. I chose DS3622xs+, then version 7.2 ... And again, when I supply the PAT file, whether through the DSM link or from my own PAT file on the computer, the result is the same: 55%, then an error saying the image is corrupted.

I have run Xpenology since the 5.2 loader. With the 5.2 loader, installation was very simple after editing the config file. With the 6.x loader, the SataPortMap parameter had to be edited every time a disk was added. For example, with the 6.x loader I started with 6 disks and my SataPortMap was 42, then 43 with 7 disks, then 44 with 8 disks.

The motherboard has 2 storage hosts. SATA/SAS storage on the motherboard: PCH built-in storage, Intel® C224: 4 x SATA3 6.0 Gb/s (from mini SAS connector), 2 x SATA2 3.0 Gb/s, supports RAID 0, 1, 5, 10 and Intel® Rapid Storage. Additional SAS controller, LSI 2308: 8 x SAS2 6 Gbps (from 2x mini SAS 8087 connectors). I must say that the LSI SAS controller was in IT mode (unraid) and not in IR mode. As I use only 4 ports on the C224 (because the last 2 are only 3 Gb/s), I want to use the 8 ports on the LSI 2308. As I said before, SataPortMap worked well with the 6.x loader with the 4x values.

Here is what I find strange with the 7.x loaders (with only 1 blank disk for testing):

With the TCRP loader: I saw that the loader had a driver for the Intel C220; it found 1 disk (the test disk is connected, so that is normal) and 6 ports (that is right, but I don't want to use the last 2). It also found the LSI SAS controller and downloaded a SCSI driver for it, said nothing about ports, then wrote SataPortMap = 6 and DiskIdxMap=00.

With the ARPL loader: it wrote SataPortMap = 6 and DiskIdxMap=00ff, and the SataPortMap info in the loader showed 6 green ports for Intel and 7 green ports for the LSI.

With the ARC loader: it wrote SataPortMap = 1 and DiskIdxMap=00.

So I get a different result with 3 different loaders. I don't know whether this is the reason for the corrupted PAT file error at 55%. I can't find a solution alone, so thanks to all for any help.

Link to the motherboard: https://www.asrockrack.com/general/productdetail.asp?Model=E3C224D4I-14S#Specifications
Link to the motherboard manual: https://download.asrock.com/Manual/E3C224D4I-14S.pdf
Link to the LSI 2308 manual: https://www.supermicro.com/manuals/other/LSI_HostRAID_2308.pdf

Thanks for the help
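To compare the values the three loaders wrote, it can help to decode them into a per-controller layout. The sketch below assumes the convention commonly described in the community, which the post itself does not spell out: each digit of SataPortMap is the port count of one controller, and each pair of hex digits in DiskIdxMap is the first disk index assigned to that controller. The function name and the "0004" value are hypothetical.

```python
def decode_port_maps(sata_port_map: str, disk_idx_map: str) -> list:
    """Return (controller, port_count, first_disk_index) tuples.

    Assumed convention: one decimal digit per controller in
    SataPortMap, two hex digits per controller in DiskIdxMap.
    """
    ports = [int(digit) for digit in sata_port_map]
    starts = [
        int(disk_idx_map[i:i + 2], 16)
        for i in range(0, len(disk_idx_map), 2)
    ]
    layout = []
    for index, count in enumerate(ports):
        # A controller without a DiskIdxMap entry gets None.
        start = starts[index] if index < len(starts) else None
        layout.append((index, count, start))
    return layout


# "44" is the 8-disk value from the post; "0004" is a made-up DiskIdxMap.
print(decode_port_maps("44", "0004"))  # [(0, 4, 0), (1, 4, 4)]
# The ARPL values from the post: only one controller has a port count.
print(decode_port_maps("6", "00ff"))   # [(0, 6, 0)]
```

Under this reading, the ARPL pair "6" / "00ff" describes a single 6-port controller starting at disk 0; the second DiskIdxMap entry (0xff) has no matching port count.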

-

- Outcome of the update: SUCCESSFUL - DSM version prior update: DSM 7.1.1-42962 (really!) - Loader version and model: TCRP v0.9.4.9c w\Friend 0.0.8j DS3622xs+ - Using custom extra.lzma: NO - Installation type: BAREMETAL - SuperMicro X10SL7-F with integrated LSI RAID, 8*4tb SAS drives - Additional comments: Loaded the PAT, rebooted no further intervention, all drives and data preserved

-

Hi ersa, first of all, thanks for taking the time to answer. Putting the build together is exactly where the problems start; I probably think far too much in terms of performance and end up too expensive. I had attached myself to an old hardware thread, but nobody answered there. I put together 3 wish lists on geizhals of what I would imagine for the project: i5-12600 with ECC RAM, i5-12500 with ECC RAM, i3 with ECC RAM. Yes, I know: 32 GB RAM, ECC on top of that, and the processors are probably all too big. Whether that is all over the top, I have no idea. I just wanted a board with 8 x SATA and a few expansion options; Mellanox 10G cards are still lying around here from the old Supermicro board. And I would like 19", because I have a rack and it looks tidier that way. Price-wise this is of course well above the old Dell boxes, but with those you never know when the (terribly loud) fans will give up the ghost. Real Synology 19" boxes, depending on age, still sit in the 4-digit range with, let's say, questionable hardware. If anyone has a build that comes in well below that and is also economical on power, bring it on. After all, by Chinese standards, copying is the highest praise for the originator of the idea. Thanks and regards, Clemens

-

- Outcome of the update: FAILED - DSM version prior update: DSM 7.1.1-42962 Update 5 - Loader version and model: TCRP v0.9.4.9c DS3622xs+ - Using custom extra.lzma: NO - Installation type: ESXi 6.5 on Supermicro X8 - Additional comments: I use the LSI2308 on the Supermicro mainboard. In DSM 6 it required mpt2sas; in DSM 7 it requires mpt3sas. If I add the extension, the build fails because there is no entry for ds3622xsp_65470 in the mpt3sas json index. If I don't add the extension, the build finishes fine, but after boot the mpt3sas kernel driver is present but not loaded, so the disks aren't present. Manually running "insmod /usr/lib/modules/mpt3sas.ko" fixes that, but the recovery still fails, presumably because the problem persists after the recovery reboot. I tried to figure out whether I could work around that, but /etc.defaults doesn't seem to be persistent, so any fix is lost after reboot. The same goes for adding "supportsas" to synoinfo.conf, which could also fix it.
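The "present but not loaded" state described above can be confirmed by looking for the module name in /proc/modules, where each line starts with the name of a loaded module. This is a small illustrative check, not part of any loader; the helper names are hypothetical and the sample text is made up.

```python
def module_loaded(name: str, proc_modules_text: str) -> bool:
    """Return True if `name` is the first field of any /proc/modules line."""
    for line in proc_modules_text.splitlines():
        fields = line.split()
        if fields and fields[0] == name:
            return True
    return False


def read_proc_modules(path: str = "/proc/modules") -> str:
    """Read the kernel's list of loaded modules (Linux only)."""
    with open(path) as handle:
        return handle.read()


# Made-up sample in the /proc/modules format: name, size, use count, ...
sample = (
    "raid456 155648 2 - Live 0x0000000000000000\n"
    "ixgbe 356352 0 - Live 0x0000000000000000\n"
)
print(module_loaded("mpt3sas", sample))  # False
print(module_loaded("ixgbe", sample))    # True
```

On the NAS itself, `module_loaded("mpt3sas", read_proc_modules())` would report whether the manual insmod is still needed after a reboot.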

-

TinyCore RedPill loader (TCRP) - Development release 0.9

pLastUn replied to pocopico's topic in Developer Discussion Room

Greetings, there is a problem with the latest version, tinycore-redpill.v0.9.4.9. Board: Supermicro X7SPA-HF-D525, Intel Atom D525, 2x Intel 82574L Gigabit LAN, 6x SATA (3.0 Gbps) ports. The boot loader builds and is written to the flash drive fine. When installing these DSM versions: ds3617xs-7.1.0-42661, ds3617xs-7.1.1-42962, ds3617xs-7.2.0-64570, the DSM installation fails at 58% with "file is corrupted". I tried both the original PAT file and the one made by TC. When installing ds3615xs-7.1.0-42661 or ds3615xs-7.1.1-42962, there is no network on boot! Until 2 weeks ago, ds3615xs-7.1.1-42962 installed fine (tinycore-redpill.v0.9.4.6). What needs to be done? Any advice, or a fix for TCRP! Thanks!

-

All Seagate drives dropped out

-iliya- replied to Sky Jumper's topic in Hardware and Compatibility

The case is very solid, no worse than Supermicro. Inside, three 120x38 fans move air better than the 80 mm ones in the Supermicro, the intake grille is also better, and it is universal, fitting 3 power supply variants. It is not without drawbacks: there is no manual at all, and the backplane has a bunch of power connectors: 2 Molex + 1 black Molex + 2 CPU 8-pin 12V each, and that is only for half of the disks, with the same again for the other half. Support says 2 Molex connectors per 12 disks are enough, but I have my doubts.

-

- Outcome of the update: SUCCESSFUL - DSM version prior update: DSM 7.2-64561 to DSM7.2-64570 - Loader version and model: ARPL-i18n 23.6.4 / DS3622xs+ - Using custom extra.lzma: NO - Installation type: BAREMETAL - E3 1260L v5 + SuperMicro X11SSH-LN4F - Additional comments: Rebuilt the bootloader (edit Sn, Mac1), compile and reboot, then installed DSM (.pat)

-

Hi all! I have an existing install that I cobbled together with a decommissioned supermicro 1u server. I used ARPL and chose RS4021xs+ as the base. In my mind... this would match my processing power the closest? Now that I'm less of a noob... I think it doesn't really matter. (Or does it?) Anyway... Is there a reason I should consider rebuilding the loader to go with a different serial number? If I do, can I keep the data on my drives? I've got an ssd cache and 4 drives in raid 0 in "bay 11 - 14" in storage manager.

-

All Seagate drives dropped out

-iliya- replied to Sky Jumper's topic in Hardware and Compatibility

https://aliexpress.ru/item/1005004239107691.html?spm=a2g2w.orderdetail.0.0.ff104aa699gCHZ&sku_id=12000029351792164 This is the case I plan to build it in; all the parts seem to have arrived, and it only remains to assemble it. Though I now feel it is a pity to spend the motherboard I had planned for the server on it: Supermicro X11SPL-F and Xeon Gold 6138.

-

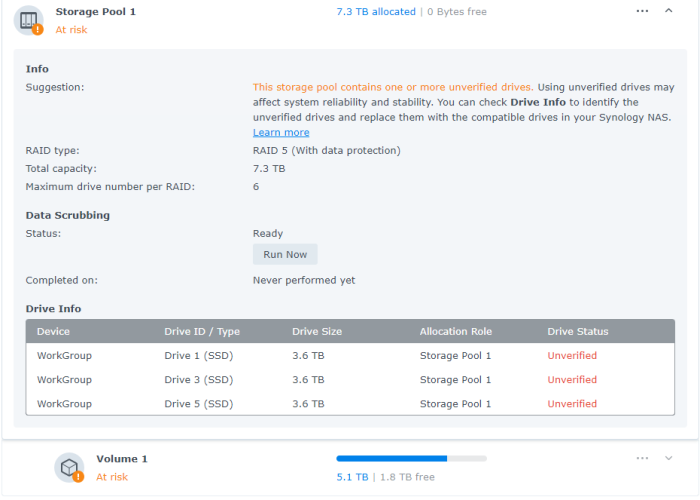

- Outcome of the update: SUCCESSFUL - DSM version prior update: DSM 7.2-64561 - Loader version and model: TinyCore RedPill v0.9.4.3 with Friend - DS3622xs+ - Using custom extra.lzma: NO - Installation type: BAREMETAL - supermicro x10sdv-6c-tln4f - Additional comments: shows unverified drives ("Storage pool contains one or more unverified drives"); the RAID 5 array is OK, but I have to perform a benchmark test

-

XPEnology DSM updates (successful and not so successful)

Vincent666 replied to XerSonik's topic in Software

DVA3221: DSM 7.2-64561 - Outcome of the update: SUCCESSFUL - DSM version prior update: DSM 7.1.1-42962 Update 5 - Loader version and model before the update: arpl-v1.1-beta2a DVA3221 - Loader version and model after the update: arpl-i18n-23.5.8 DVA3221 - Hardware: Supermicro x10SRA / Intel Xeon E5-2667 v4 / 64 GB DDR4 ECC - Comment: Updated with the package via the web interface, then switched and rebuilt the loader. Docker came back up without problems. The virtual machines had to be imported.

-


SAS/SATA controller compatibility?

Sultantiran replied to decide's topic in Hardware and Compatibility

I had exactly the same situation, with the same controller. Installing as DS3622xs+ helped. I specified SataPortMap=1, although the disks then started from the 2nd slot rather than the first (the first one appears to be empty). People had an exchange with the author about SataPortMap here; as I understood it, its meaning changed across NAS models and TCRP versions. Starting roughly from this page onward: I have 8 disks in RAID 5, a Supermicro A1SRi-2758F motherboard with an Atom C2758 CPU, LSI SAS 9211-8i HBA (IT Mode), UEFI mode. tinycore-redpill-uefi.v0.9.4.3, DSM_DS3622xs+_42962. After installation the system offered a migration; I chose the option without keeping settings (the second one). Then I loaded the settings saved earlier from the DS3615xs.

-

Was it you, by any chance, who bought it from me on Avito? :)))) I just recently sold mine... it had become too weak for my tasks, so I moved to a Supermicro X11SPM-F + a case with 8 disks...

-

Help with Ableconn PEXM2-130 Dual PCIe NVMe M.2 SSD Adapter

R2D2 posted a question in General Questions

Hello- I need some help and advice on how to get my PCIe cards working in DSM. Specifically, the Ableconn dual NVMe adapter. I am not really sure if this is a hardware or software issue. I have a baremetal install and it is working quite well. Here is the information: MB: Supermicro X10SLM+-F CPU: Intel Xeon E3-1270v3 RAM: 32GB 10Gb Mellanox card in PCIe2 slot Loader: ARPL v1.1-beta2a Model: DS3622xs+ Build: 42962 DSM 7.1.1-42962 Update 4 Volume 1: 4x 2.5” 1TB SSD, RAIDF1, Btrfs Volume 2: 2x 8TB Seagate IronWolf, RAID 1, Btrfs 2U rackmount chassis DSM is showing 6 filled HD slots, and 6 open ones. I have 2 PCIe 3x8 slots that I want to install the Ableconn PEXM2-130 Dual PCIe NVMe M.2 SSD Adapter Cards in. I have confirmed with the manufacturer that the motherboard does not support bifurcation, hence these cards have an onboard controller. Despite the card’s support for different flavors of linux, the ARPL loader is not recognizing the card. (I only have one card installed at the moment). I have tried to reconfigure and rebuild the loader, but the DSM is not showing the NVMe drives. I am stuck. I will be using the drives for storage, not caches. 1) Is there a driver available to fix the controller and card recognition, and get where I can use the SSD drives? 2) Is it possible to keep the baremetal installation and “virtualize” the SSD’s through some kind of docker app? 3) Is there a different Model number that would work better for me? 4) Should I scrap the baremetal install, then virtualize everything? If so, which hypervisor should I use? I think I have a registered copy of ESXi laying around, but is that the best one to use? I thank you in advance for your help! -

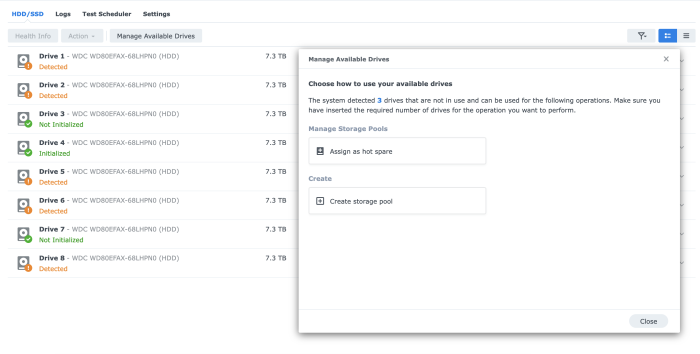

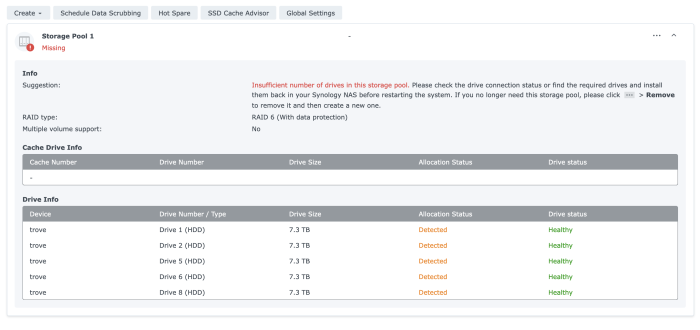

Had issues after upgrading to DSM 7.1.1-42962 Update 4. After changing to ARPL 1.03b, I was able to recover the system after a migration and log into a fresh DSM, however, the storage pool is missing/lost. Looking for tips on how to rebuild the original storage pool. Thanks. Hardware: Motherboard with UEFi disabled: SUPERMICRO X10SDV-TLN4F D-1541 (2 x 10 GbE LAN & 2 x Intel i350-AM2 GbE LAN) LSI HBA with 8 HDDs (onboard STA controllers disabled) root@trove:~# cat /proc/mdstat Personalities : [raid1] md1 : active raid1 sdd2[1] 2097088 blocks [12/1] [_U__________] md0 : active raid1 sdd1[1] 2490176 blocks [12/1] [_U__________] unused devices: <none> root@trove:~# mdadm --detail /dev/md1 /dev/md1: Version : 0.90 Creation Time : Tue Mar 7 18:17:00 2023 Raid Level : raid1 Array Size : 2097088 (2047.94 MiB 2147.42 MB) Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB) Raid Devices : 12 Total Devices : 1 Preferred Minor : 1 Persistence : Superblock is persistent Update Time : Wed Mar 8 11:46:28 2023 State : clean, degraded Active Devices : 1 Working Devices : 1 Failed Devices : 0 Spare Devices : 0 UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7 Events : 0.38 Number Major Minor RaidDevice State - 0 0 0 removed 1 8 50 1 active sync /dev/sdd2 - 0 0 2 removed - 0 0 3 removed - 0 0 4 removed - 0 0 5 removed - 0 0 6 removed - 0 0 7 removed - 0 0 8 removed - 0 0 9 removed - 0 0 10 removed - 0 0 11 removed root@trove:~# mdadm --detail /dev/md0 /dev/md0: Version : 0.90 Creation Time : Tue Mar 7 18:16:56 2023 Raid Level : raid1 Array Size : 2490176 (2.37 GiB 2.55 GB) Used Dev Size : 2490176 (2.37 GiB 2.55 GB) Raid Devices : 12 Total Devices : 1 Preferred Minor : 0 Persistence : Superblock is persistent Update Time : Wed Mar 8 12:38:17 2023 State : clean, degraded Active Devices : 1 Working Devices : 1 Failed Devices : 0 Spare Devices : 0 UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7 Events : 0.2850 Number Major Minor RaidDevice State - 0 0 0 removed 1 8 49 1 active sync /dev/sdd1 - 0 0 2 
removed - 0 0 3 removed - 0 0 4 removed - 0 0 5 removed - 0 0 6 removed - 0 0 7 removed - 0 0 8 removed - 0 0 9 removed - 0 0 10 removed - 0 0 11 removed root@trove:~# mdadm --examine /dev/sd* /dev/sda: MBR Magic : aa55 Partition[0] : 4294967295 sectors at 1 (type ee) /dev/sda1: Magic : a92b4efc Version : 0.90.00 UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7 Creation Time : Tue Mar 7 18:16:56 2023 Raid Level : raid1 Used Dev Size : 2490176 (2.37 GiB 2.55 GB) Array Size : 2490176 (2.37 GiB 2.55 GB) Raid Devices : 12 Total Devices : 1 Preferred Minor : 0 Update Time : Wed Mar 8 10:31:50 2023 State : clean Active Devices : 1 Working Devices : 1 Failed Devices : 11 Spare Devices : 0 Checksum : b4fd4b61 - correct Events : 1764 Number Major Minor RaidDevice State this 0 8 1 0 active sync /dev/sda1 0 0 8 1 0 active sync /dev/sda1 1 1 0 0 1 faulty removed 2 2 0 0 2 faulty removed 3 3 0 0 3 faulty removed 4 4 0 0 4 faulty removed 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sda2: Magic : a92b4efc Version : 0.90.00 UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7 Creation Time : Tue Mar 7 18:17:00 2023 Raid Level : raid1 Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB) Array Size : 2097088 (2047.94 MiB 2147.42 MB) Raid Devices : 12 Total Devices : 1 Preferred Minor : 1 Update Time : Wed Mar 8 10:31:34 2023 State : clean Active Devices : 1 Working Devices : 1 Failed Devices : 11 Spare Devices : 0 Checksum : dfcee89f - correct Events : 31 Number Major Minor RaidDevice State this 0 8 2 0 active sync /dev/sda2 0 0 8 2 0 active sync /dev/sda2 1 1 0 0 1 faulty removed 2 2 0 0 2 faulty removed 3 3 0 0 3 faulty removed 4 4 0 0 4 faulty removed 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sda3: Magic : a92b4efc 
Version : 1.2 Feature Map : 0x0 Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f Name : trove:2 (local to host trove) Creation Time : Wed Dec 12 01:11:23 2018 Raid Level : raid6 Raid Devices : 8 Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB) Array Size : 46855227264 (44684.63 GiB 47979.75 GB) Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=32 sectors State : clean Device UUID : 3875a9e5:a7260006:808f7c11:9cedf8a0 Update Time : Tue Mar 7 18:23:17 2023 Checksum : 82ba8080 - correct Events : 13093409 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 0 Array State : A..AA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdb: MBR Magic : aa55 Partition[0] : 4294967295 sectors at 1 (type ee) mdadm: No md superblock detected on /dev/sdb1. /dev/sdb2: Magic : a92b4efc Version : 0.90.00 UUID : d552bb6c:670b1dca:05d949f7:b0bbaec7 Creation Time : Sat Jul 2 16:17:54 2022 Raid Level : raid1 Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB) Array Size : 2097088 (2047.94 MiB 2147.42 MB) Raid Devices : 12 Total Devices : 3 Preferred Minor : 1 Update Time : Tue Mar 7 18:16:53 2023 State : clean Active Devices : 3 Working Devices : 3 Failed Devices : 9 Spare Devices : 0 Checksum : 63069156 - correct Events : 125 Number Major Minor RaidDevice State this 1 8 18 1 active sync /dev/sdb2 0 0 8 2 0 active sync /dev/sda2 1 1 8 18 1 active sync /dev/sdb2 2 2 8 34 2 active sync 3 3 0 0 3 faulty removed 4 4 0 0 4 faulty removed 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sdb3: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f Name : trove:2 (local to host trove) Creation Time : Wed Dec 12 01:11:23 2018 Raid Level : raid6 Raid Devices : 8 Avail Dev Size : 15618409120 (7447.44 
GiB 7996.63 GB) Array Size : 46855227264 (44684.63 GiB 47979.75 GB) Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=32 sectors State : clean Device UUID : 178434ea:f014dcc9:f52e6b43:460d8647 Update Time : Tue Mar 7 18:23:17 2023 Checksum : 239d3cd0 - correct Events : 13093409 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 3 Array State : A..AA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdc: MBR Magic : aa55 Partition[0] : 4294967295 sectors at 1 (type ee) mdadm: No md superblock detected on /dev/sdc1. /dev/sdc3: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f Name : trove:2 (local to host trove) Creation Time : Wed Dec 12 01:11:23 2018 Raid Level : raid6 Raid Devices : 8 Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB) Array Size : 46855227264 (44684.63 GiB 47979.75 GB) Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=32 sectors State : active Device UUID : 86058974:c155190f:4805d800:856081a4 Update Time : Mon Mar 6 20:03:55 2023 Checksum : d9eeeb2 - correct Events : 13093409 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 2 Array State : AAAAAAAA ('A' == active, '.' 
== missing, 'R' == replacing) /dev/sdd: MBR Magic : aa55 Partition[0] : 4294967295 sectors at 1 (type ee) /dev/sdd1: Magic : a92b4efc Version : 0.90.00 UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7 Creation Time : Tue Mar 7 18:16:56 2023 Raid Level : raid1 Used Dev Size : 2490176 (2.37 GiB 2.55 GB) Array Size : 2490176 (2.37 GiB 2.55 GB) Raid Devices : 12 Total Devices : 1 Preferred Minor : 0 Update Time : Wed Mar 8 12:22:28 2023 State : clean Active Devices : 1 Working Devices : 1 Failed Devices : 10 Spare Devices : 0 Checksum : b4fd6ba7 - correct Events : 2592 Number Major Minor RaidDevice State this 1 8 49 1 active sync /dev/sdd1 0 0 0 0 0 removed 1 1 8 49 1 active sync /dev/sdd1 2 2 0 0 2 faulty removed 3 3 0 0 3 faulty removed 4 4 0 0 4 faulty removed 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sdd2: Magic : a92b4efc Version : 0.90.00 UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7 Creation Time : Tue Mar 7 18:17:00 2023 Raid Level : raid1 Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB) Array Size : 2097088 (2047.94 MiB 2147.42 MB) Raid Devices : 12 Total Devices : 1 Preferred Minor : 1 Update Time : Wed Mar 8 11:46:28 2023 State : clean Active Devices : 1 Working Devices : 1 Failed Devices : 10 Spare Devices : 0 Checksum : dfcefa9b - correct Events : 38 Number Major Minor RaidDevice State this 1 8 50 1 active sync /dev/sdd2 0 0 0 0 0 removed 1 1 8 50 1 active sync /dev/sdd2 2 2 0 0 2 faulty removed 3 3 0 0 3 faulty removed 4 4 0 0 4 faulty removed 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sde: MBR Magic : aa55 Partition[0] : 4294967295 sectors at 1 (type ee) /dev/sde1: Magic : a92b4efc Version : 0.90.00 UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7 Creation Time : Tue Mar 7 18:16:56 2023 
Raid Level : raid1 Used Dev Size : 2490176 (2.37 GiB 2.55 GB) Array Size : 2490176 (2.37 GiB 2.55 GB) Raid Devices : 12 Total Devices : 4 Preferred Minor : 0 Update Time : Tue Mar 7 19:36:00 2023 State : clean Active Devices : 4 Working Devices : 4 Failed Devices : 8 Spare Devices : 0 Checksum : b4fc79cf - correct Events : 1720 Number Major Minor RaidDevice State this 2 8 65 2 active sync /dev/sde1 0 0 8 1 0 active sync /dev/sda1 1 1 8 49 1 active sync /dev/sdd1 2 2 8 65 2 active sync /dev/sde1 3 3 0 0 3 faulty removed 4 4 8 113 4 active sync /dev/sdh1 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sde2: Magic : a92b4efc Version : 0.90.00 UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7 Creation Time : Tue Mar 7 18:17:00 2023 Raid Level : raid1 Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB) Array Size : 2097088 (2047.94 MiB 2147.42 MB) Raid Devices : 12 Total Devices : 4 Preferred Minor : 1 Update Time : Tue Mar 7 19:31:47 2023 State : clean Active Devices : 4 Working Devices : 4 Failed Devices : 8 Spare Devices : 0 Checksum : dfce16e9 - correct Events : 22 Number Major Minor RaidDevice State this 2 8 66 2 active sync /dev/sde2 0 0 8 2 0 active sync /dev/sda2 1 1 8 50 1 active sync /dev/sdd2 2 2 8 66 2 active sync /dev/sde2 3 3 0 0 3 faulty removed 4 4 8 114 4 active sync /dev/sdh2 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sde3: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f Name : trove:2 (local to host trove) Creation Time : Wed Dec 12 01:11:23 2018 Raid Level : raid6 Raid Devices : 8 Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB) Array Size : 46855227264 (44684.63 GiB 47979.75 GB) Used Dev Size : 15618409088 (7447.44 GiB 7996.63 
GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=32 sectors
State : clean
Device UUID : 1f5ac73f:a2d2d169:44fbeeaa:f0293f1d
Update Time : Tue Mar 7 18:23:17 2023
Checksum : 5662b981 - correct
Events : 13093409
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 7
Array State : A..AA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdf:
MBR Magic : aa55
Partition[0] : 4294967295 sectors at 1 (type ee)

/dev/sdf1:
Magic : a92b4efc
Version : 0.90.00
UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7
Creation Time : Tue Mar 7 18:16:56 2023
Raid Level : raid1
Used Dev Size : 2490176 (2.37 GiB 2.55 GB)
Array Size : 2490176 (2.37 GiB 2.55 GB)
Raid Devices : 12
Total Devices : 5
Preferred Minor : 0
Update Time : Tue Mar 7 19:31:40 2023
State : clean
Active Devices : 5
Working Devices : 5
Failed Devices : 7
Spare Devices : 0
Checksum : b4fc787f - correct
Events : 1629

      Number  Major  Minor  RaidDevice  State
this     3      8      81       3       active sync /dev/sdf1
   0     0      8       1       0       active sync /dev/sda1
   1     1      8      49       1       active sync /dev/sdd1
   2     2      8      65       2       active sync /dev/sde1
   3     3      8      81       3       active sync /dev/sdf1
   4     4      8     113       4       active sync /dev/sdh1
   5     5      0       0       5       faulty removed
   6     6      0       0       6       faulty removed
   7     7      0       0       7       faulty removed
   8     8      0       0       8       faulty removed
   9     9      0       0       9       faulty removed
  10    10      0       0      10       faulty removed
  11    11      0       0      11       faulty removed

/dev/sdf2:
Magic : a92b4efc
Version : 0.90.00
UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7
Creation Time : Tue Mar 7 18:17:00 2023
Raid Level : raid1
Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB)
Array Size : 2097088 (2047.94 MiB 2147.42 MB)
Raid Devices : 12
Total Devices : 5
Preferred Minor : 1
Update Time : Tue Mar 7 19:05:08 2023
State : clean
Active Devices : 5
Working Devices : 5
Failed Devices : 7
Spare Devices : 0
Checksum : dfce110f - correct
Events : 19

      Number  Major  Minor  RaidDevice  State
this     3      8      82       3       active sync /dev/sdf2
   0     0      8       2       0       active sync /dev/sda2
   1     1      8      50       1       active sync /dev/sdd2
   2     2      8      66       2       active sync /dev/sde2
   3     3      8      82       3       active sync /dev/sdf2
   4     4      8     114       4       active sync /dev/sdh2
   5     5      0       0       5       faulty removed
   6     6      0       0       6       faulty removed
   7     7      0       0       7       faulty removed
   8     8      0       0       8       faulty removed
   9     9      0       0       9       faulty removed
  10    10      0       0      10       faulty removed
  11    11      0       0      11       faulty removed

/dev/sdf3:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f
Name : trove:2 (local to host trove)
Creation Time : Wed Dec 12 01:11:23 2018
Raid Level : raid6
Raid Devices : 8
Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB)
Array Size : 46855227264 (44684.63 GiB 47979.75 GB)
Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=32 sectors
State : clean
Device UUID : 5e941a18:8b4def78:efcc7281:413e6a0f
Update Time : Tue Mar 7 18:23:17 2023
Checksum : 68254a4 - correct
Events : 13093409
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 6
Array State : A..AA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdg:
MBR Magic : aa55
Partition[0] : 4294967295 sectors at 1 (type ee)
mdadm: No md superblock detected on /dev/sdg1.

/dev/sdg3:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f
Name : trove:2 (local to host trove)
Creation Time : Wed Dec 12 01:11:23 2018
Raid Level : raid6
Raid Devices : 8
Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB)
Array Size : 46855227264 (44684.63 GiB 47979.75 GB)
Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=32 sectors
State : active
Device UUID : e95d48ae:87297dfa:8571bbc2:89098f37
Update Time : Mon Mar 6 20:03:55 2023
Checksum : 87b33020 - correct
Events : 13093409
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 5
Array State : AAAAAAAA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdh:
MBR Magic : aa55
Partition[0] : 4294967295 sectors at 1 (type ee)

/dev/sdh1:
Magic : a92b4efc
Version : 0.90.00
UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7
Creation Time : Tue Mar 7 18:16:56 2023
Raid Level : raid1
Used Dev Size : 2490176 (2.37 GiB 2.55 GB)
Array Size : 2490176 (2.37 GiB 2.55 GB)
Raid Devices : 12
Total Devices : 4
Preferred Minor : 0
Update Time : Tue Mar 7 19:36:05 2023
State : active
Active Devices : 4
Working Devices : 4
Failed Devices : 8
Spare Devices : 0
Checksum : b4fc7350 - correct
Events : 1721

      Number  Major  Minor  RaidDevice  State
this     4      8     113       4       active sync /dev/sdh1
   0     0      8       1       0       active sync /dev/sda1
   1     1      8      49       1       active sync /dev/sdd1
   2     2      8      65       2       active sync /dev/sde1
   3     3      0       0       3       faulty removed
   4     4      8     113       4       active sync /dev/sdh1
   5     5      0       0       5       faulty removed
   6     6      0       0       6       faulty removed
   7     7      0       0       7       faulty removed
   8     8      0       0       8       faulty removed
   9     9      0       0       9       faulty removed
  10    10      0       0      10       faulty removed
  11    11      0       0      11       faulty removed

/dev/sdh2:
Magic : a92b4efc
Version : 0.90.00
UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7
Creation Time : Tue Mar 7 18:17:00 2023
Raid Level : raid1
Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB)
Array Size : 2097088 (2047.94 MiB 2147.42 MB)
Raid Devices : 12
Total Devices : 4
Preferred Minor : 1
Update Time : Tue Mar 7 19:31:47 2023
State : clean
Active Devices : 4
Working Devices : 4
Failed Devices : 8
Spare Devices : 0
Checksum : dfce171d - correct
Events : 22

      Number  Major  Minor  RaidDevice  State
this     4      8     114       4       active sync /dev/sdh2
   0     0      8       2       0       active sync /dev/sda2
   1     1      8      50       1       active sync /dev/sdd2
   2     2      8      66       2       active sync /dev/sde2
   3     3      0       0       3       faulty removed
   4     4      8     114       4       active sync /dev/sdh2
   5     5      0       0       5       faulty removed
   6     6      0       0       6       faulty removed
   7     7      0       0       7       faulty removed
   8     8      0       0       8       faulty removed
   9     9      0       0       9       faulty removed
  10    10      0       0      10       faulty removed
  11    11      0       0      11       faulty removed

/dev/sdh3:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f
Name : trove:2 (local to host trove)
Creation Time : Wed Dec 12 01:11:23 2018
Raid Level : raid6
Raid Devices : 8
Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB)
Array Size : 46855227264 (44684.63 GiB 47979.75 GB)
Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=32 sectors
State : clean
Device UUID : 7a2f8c59:2331f484:50fbb33a:bfe5fa58
Update Time : Tue Mar 7 18:23:17 2023
Checksum : 56caa935 - correct
Events : 13093409
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 4
Array State : A..AA.AA ('A' == active, '.' == missing, 'R' == replacing)
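For readers comparing the "Array State" strings in the output above, here is a minimal Python sketch that counts active and missing members and checks the count against the redundancy of the RAID level. The function name `summarize_array_state` and the `TOLERATED_LOSSES` table are hypothetical helpers for illustration, not part of mdadm. The sketch only interprets the string a superblock reports; it does not assess whether an array can actually be reassembled.

```python
# Member losses each RAID level can absorb (general RAID property).
# Hypothetical helper table; extend it if other levels are needed.
TOLERATED_LOSSES = {"raid5": 1, "raid6": 2}


def summarize_array_state(state: str, raid_level: str) -> dict:
    """Count members in an mdadm 'Array State' string.

    'A' == active, '.' == missing, 'R' == replacing (legend from mdadm output).
    """
    missing = state.count(".")
    return {
        "active": state.count("A"),
        "missing": missing,
        "replacing": state.count("R"),
        # True when the number of missing members does not exceed
        # what the RAID level can tolerate.
        "within_redundancy": missing <= TOLERATED_LOSSES[raid_level],
    }


if __name__ == "__main__":
    # Strings taken from the raid6 superblocks shown above.
    print(summarize_array_state("A..AA.AA", "raid6"))
    print(summarize_array_state("AAAAAAAA", "raid6"))
```

Applied to the posted data, most members report three missing devices on an 8-device raid6, which is one more than raid6 redundancy covers, while /dev/sdg3 carries an older update time and still reports all eight as active.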

-

Need help,DS2422+ with lsi 9260-8i,Can't install

BlackLotus replied to BlackLotus's topic in DSM 7.x

Motherboard: Supermicro H11SSL-i

-

TinyCore RedPill Loader Build Support Tool ( M-Shell )

SecundiS replied to Peter Suh's topic in Software Modding

Good day. I need help getting DSM to discover additional hard drives in my system. I have:

Supermicro 4U chassis with 16 HDD bays
Supermicro X9DRH-iF motherboard (Specs)
2x Xeon E5-2620 v2 processors
64 GB DDR3 ECC REG
ASMedia PCIe to 20x SATA port controller
6x HDD WD4000F9YZ and 5x HDD SD4000DM00 (11 total)

I tried to install the latest TCRP with Peter Suh's M-Shell (Friend mode). I chose the RS4021xs+ build and pasted in the serial and MACs, but after the build and first boot DSM sees only 7 of my HDDs. In user_config I see the parameter "max_disks=16", and my understanding is that DSM scans the SATA ports on the motherboard first; this motherboard has 10 SATA ports (8x SATA2 and 2x SATA3). For this reason I use the ASMedia controller with 20x SATA3 ports, and all disks are connected to that controller. I tried changing max_disks to 30 (my reasoning: 10 internal + 20 external), but DSM still shows only 7 HDDs. Can you help me solve this problem, please?

-


@pehun For info, I chose this motherboard (Supermicro X11SCA-F) mainly because I wanted to keep what the HP microserver offers, namely remote control without a monitor, even when the system is off. The Supermicro -F boards have IPMI 2.0 with iKVM. That allows roughly the same thing as on the HP: displaying the screen through an HTML5 web page or in Java. For me it was essential to be able to take over the screen remotely, and even to stop/start the system without actually being in front of the machine. But these motherboards aren't cheap...

Edit 2:

# iperf -c 192.168.1.XX
------------------------------------------------------------
Client connecting to 192.168.1.XX, TCP port 5001
TCP window size: 16.0 KByte (default)
------------------------------------------------------------
[  1] local 192.168.1.XX port 36482 connected with 192.168.1.XX port 5001 (icwnd/mss/irtt=14/1448/250)
[ ID] Interval            Transfer     Bandwidth
[  1] 0.0000-10.0034 sec  29.9 GBytes  25.7 Gbits/sec

iperf -c 192.168.1.XX -p 5002
------------------------------------------------------------
Client connecting to 192.168.1.XX, TCP port 5002
TCP window size: 85.0 KByte (default)
------------------------------------------------------------
[  3] local 192.168.1.XX port 35016 connected with 192.168.1.XX port 5002
[ ID] Interval            Transfer     Bandwidth
[  3] 0.0000-10.0000 sec  37.3 GBytes  32.0 Gbits/sec

In GBytes/s:

iperf -c 192.168.1.XX -p 5002 -f G
------------------------------------------------------------
Client connecting to 192.168.1.XX, TCP port 5002
TCP window size: 0.000 GByte (default)
------------------------------------------------------------
[  3] local 192.168.1.XX port 49258 connected with 192.168.1.XX port 5002
[ ID] Interval            Transfer     Bandwidth
[  3] 0.0000-10.0000 sec  37.5 GBytes  3.75 GBytes/sec
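The iperf figures above can be cross-checked with a little arithmetic. The sketch below assumes iperf 2's convention that byte units are 1024-based (1 GByte = 2^30 bytes) while bit units are 1000-based (1 Gbit = 10^9 bits); the function names are hypothetical helpers, not part of iperf.

```python
GIB = 2**30  # bytes per "GByte" as reported by iperf 2 (assumed 1024-based)


def gbits_per_sec(gbytes: float, seconds: float) -> float:
    """Convert a transfer in GBytes over an interval to Gbits/sec (1000-based bits)."""
    return gbytes * GIB * 8 / seconds / 1e9


def gbytes_per_sec(gbytes: float, seconds: float) -> float:
    """Transfer in GBytes divided by the interval, as shown with '-f G'."""
    return gbytes / seconds


if __name__ == "__main__":
    # Transfer sizes and intervals taken from the three runs above.
    print(round(gbits_per_sec(29.9, 10.0034), 1))
    print(round(gbits_per_sec(37.3, 10.0), 1))
    print(round(gbytes_per_sec(37.5, 10.0), 2))
```

Under that assumption the computed values agree with the reported 25.7 Gbits/sec, 32.0 Gbits/sec and 3.75 GBytes/sec.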

-

I've just changed my whole setup. I had 3 machines: my Windows PC (4th-gen i7, 16 GB), an HP Gen8 as the "data" Synology, and an HP tower with an i5 as the "Surveillance Station" Synology. I bought a Supermicro X11SCA-F motherboard (new, because I couldn't find one used) (8 SATA ports, 2 PCIe x16 slots, up to 128 GB of RAM), a Core i9-9900K on LBC, and 2x32 GB of RAM on Amazon. I kept the graphics card from my Windows machine (Nvidia GTX 980), my case, and the cooler from the old motherboard (a Noctua NH-D15), and I also reused the GTX 1650 graphics card from the "Surveillance Station" DVA3221. So I virtualized my bare-metal Windows install with its 2 physical disks, housed the 4 disks from the Gen8, and merged my 2 "Synology" systems into a single one running as DVA3221. And to avoid the hassle of sound and USB passthrough under Windows, I bought 2 PCI cards for sound and USB, dedicated to Windows. It works rather well.