
Posts: 4,641

Days Won: 212

Everything posted by IG-88

-

Be careful with this one. It will finish the update to 6.2.4 and leave you with a system that does not work completely. I tested this about 2 weeks ago: error message on login in the web GUI, no packages (not even File Station), no network settings, ... It will also leave some drivers not working: when you combine an older binary kernel with newer binary drivers built from a different kernel source, the resulting system is iffy at best. Without sorting out the obvious problems and extensive testing (it can break btrfs if you are unlucky?) I would not dare to suggest it. At least ssh was still working, so access to the system is possible (IF the NIC driver is still working).

@use-nas any solutions you found for the iffy 6.2.4 install that your suggestion results in?

In theory it might be easier to downgrade a system in that state back to 6.2.3, as you don't need a recovery Linux (when ssh was active before the update). But I had no time to test this thoroughly with different hardware and NICs, and the mix of different drivers and kernels might also leave the system inaccessible for some people when NIC drivers fail (not sure about storage drivers, might get messier?). It seemed much safer to get back to the old system before the 6.2.4 update is applied to the whole system than to try to boot a mix of drivers and kernel (the steps above remove the update files before the update is executed). Keep in mind that this way essential 6.2.4 drivers in rd.gz are combined with a 6.2.3 kernel.

-

Remember the FAQ? The DSM system partitions are on all disks, as a RAID1 over all disks. Even if you tweak it to read from the SSD to make it faster, it still needs to sync all drives on writing. I don't know how the kernel's md decides which drive it uses for reading on boot, it might be the first one found, so connect the SSD to the first onboard SATA port (sata0). There is also a --write-mostly switch when creating a RAID1 that marks slower drives; you would need to apply that marker to the drives in the RAID1, excluding the SSD. That would bring the reads primarily to the SSD, as far as that is possible. I never tried this, so be careful; I'm not even sure it's a good idea to do so, let's just say it's possible. @flyride - any comment on this one? https://raid.wiki.kernel.org/index.php/Write-mostly Setting it on an already existing RAID: https://www.tansi.org/hybrid/ (->"How to do it for an existing RAID1")
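A minimal sketch of the existing-RAID route, assuming the DSM system RAID1 is md0 and its members are partitions named like sda1 (both are assumptions about your box, check /proc/mdstat first). The function name is made up for illustration; it only builds the command strings and runs nothing. Writing writemostly to a member's sysfs state file is the kernel md interface for setting the flag on a running array; whether the flag survives a reboot or a DSM update was not tested here.

```python
def write_mostly_commands(md_device, members, fast_member):
    """Build (but do not run) sysfs writes that flag every RAID1 member
    except fast_member as write-mostly, so reads prefer the fast member."""
    commands = []
    for member in members:
        if member == fast_member:
            continue  # leave the SSD as a normal member so reads go to it
        # md exposes each member as /sys/block/<md>/md/dev-<member>/state
        commands.append(f"echo writemostly > /sys/block/{md_device}/md/dev-{member}/state")
    return commands


# Hypothetical layout (assumption): SSD on sata0 as sda, two HDDs as sdb and sdc
for cmd in write_mostly_commands("md0", ["sda1", "sdb1", "sdc1"], fast_member="sda1"):
    print(cmd)
```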

-

Most people don't get that one right. Synology made some kernel changes in 6.2.1/6.2.2 that stopped most extra drivers from working; only the drivers originally within DSM (aka native) were still working. As no one was willing to look into this (jun even pointed out where the problem came from) and make new drivers, some people had to revert to 6.2.0 and others bought certain Intel NICs to get around the problem. Often seen were HP Microservers whose onboard Broadcom NIC stopped working, and people bought an HP Intel-based NIC to get the system back onto the network: it was running, the RAID was up and everything, but without a working NIC most deemed the system dead or bricked. I later came back here and made drivers for 6.2.2, but about half a year after I did that, Synology reverted the change in 6.2.3 and the old drivers were working again (in retrospect I wasted a ton of time on that 6.2.2 stuff). That case never happened before and I don't think it will repeat.

If you want your system to be less likely to fall into that problem area, you can choose hardware whose drivers are present in the DSM version you are using (tutorials about native drivers). For example, ahci is part of Synology's base kernel and will work for sure on all 3 DSM types we use now. Also, as we don't know which DSM type the person hacking DSM and creating a loader (jun in that case) will choose, there is not much future-proofing possible when choosing hardware; we don't know what DSM type it will be (like for 7.0 - if there will be a loader, don't expect anything before the official 7.0 release).

Anyway, you can use the onboard NIC. Besides jun's base set (that should be enough) there is also my extended set that covers even more exotic or older hardware, 10G NICs and newer chip revisions of NICs that need newer driver versions.

-

https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/

Min. spec for 1.04b is a 4th gen Intel CPU (Haswell). Loader 1.03b needs CSM mode and also needs to boot from the non-UEFI USB boot device. 1.02b (3615) is good for practice as it can do UEFI and CSM, and might be OK for some/most as a final system too (DSM 6.1). Once you know how to set up the loader and are sure you got it right, it's easier to tell that CSM mode and the boot device are the culprits when 1.03b does not work.

https://xpenology.com/forum/topic/38692-new-structure-and-gui-for-synology-downloads-on-httpsarchivesynologycom/

What's the hardware? Atom D410 1.6 GHz (single core)? RAM? Chipset (should be capable of AHCI and set to it)? Network controller? Maybe boot a normal Linux, run "lspci -k" and post the output here.

The forum search can also help:
https://xpenology.com/forum/topic/13499-reinstall-xpenology-on-netgear-rndu2000/
https://xpenology.com/forum/topic/212-netgear-readynas-ultra-2-rndu2000/
https://xpenology.com/forum/topic/1343-how-to-install-xpenology-on-an-old-netgear-rndu-4000/page/

But tbh, with a single-core Atom CPU? Get rid of that thing and find something better. Even if you get it to work, it might not be able to reach full 1 GBit network speed (112MB/s).

Maybe contact this user, as he seems to have nearly the same hardware: https://xpenology.com/forum/topic/7939-dsm-613-15152-update-4/?tab=comments#comment-76583 https://xpenology.com/forum/profile/7862-cookie/
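Where the 112MB/s figure comes from, as a small worked calculation. It assumes a standard 1500-byte MTU and IPv4 TCP with timestamps; jumbo frames or other header options shift the result a little.

```python
LINE_RATE_BITS = 1_000_000_000  # 1 GBit/s Ethernet

# Assumption: 1500-byte MTU, IPv4 (20) + TCP (20) + TCP timestamp option (12)
MTU = 1500
TCP_PAYLOAD = MTU - 20 - 20 - 12      # 1448 bytes of file data per frame
# On the wire each frame also carries preamble/SFD (8), Ethernet header (14),
# FCS (4) and the inter-frame gap (12)
WIRE_BYTES = MTU + 8 + 14 + 4 + 12    # 1538 bytes per frame


def max_payload_rate():
    """Best-case TCP payload rate in bytes per second on a saturated link."""
    frames_per_second = LINE_RATE_BITS / 8 / WIRE_BYTES
    return frames_per_second * TCP_PAYLOAD


rate = max_payload_rate()
print(f"{rate / 1e6:.1f} MB/s = {rate / 2**20:.1f} MiB/s")
```

This prints 117.7 MB/s = 112.2 MiB/s, so the usual "112MB/s" for a full 1 GBit link is really MiB/s of payload.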

-

I checked the i915.ko files offered there (only checked the u3 files) and they were binary identical to the files offered in the 1st post (also same file date; I guess they just used my files and repacked them to match PCI IDs instead of DSM version). There is still the possibility that there are different 10100 CPUs around (different SKUs, like seen with the i5-9400). I found some material, but I need to check it out and compare the information; I guess there might only be one type of 10100, because on Intel ARK there is only one PCI ID for the GPU.

It's not that much of a problem for you to test it with DSM 6.2.0 and the matching modded driver. You just take your USB, replace the zImage and rd.gz on the 2nd partition with the files from the 6.2.0 (v23739) *.pat file (make copies of the files there so you can revert) and then use it to install 6.2.0 to a single empty disk. When you are done testing, just put the kernel files from before back on the USB and reconnect your disks (even if you boot your "old" disks with the USB that has the 6.2.0 kernel files, DSM would offer to fix it and in a few seconds it would be up and running again).
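If you want to repeat that "binary the same" check yourself, here is a minimal Python sketch (function names are illustrative, the paths in the usage line are hypothetical). It compares sizes first and then SHA-256 hashes; identical hashes mean identical content, and the file date plays no role.

```python
import hashlib
from pathlib import Path


def sha256_of(path):
    """Hash a file in 1 MiB chunks so large files need not fit in RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_binary(path_a, path_b):
    """True if both files have identical content (size check, then hash)."""
    a, b = Path(path_a), Path(path_b)
    if a.stat().st_size != b.stat().st_size:
        return False
    return sha256_of(a) == sha256_of(b)


# Usage (hypothetical paths): same_binary("u3/i915.ko", "first_post/i915.ko")
```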

-

As 6.2 is a new major version, you need a new loader: https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/

In theory you could use 918+ (loader 1.04b), but the only gain would be the option to use NVMe cache drives (after additional patching). It has a newer kernel, but in most cases that's not important. The downside of 918+ is problems with LSI SAS controllers (and SCSI/SAS controllers in general). It's best to stick with 3617 (especially when using LSI SAS controllers) and use loader 1.03b (that needs CSM mode in the UEFI BIOS and booting from the non-UEFI USB device). You can "migrate" to a different DSM type any time by changing the loader and installing the other DSM type of the same version.

Loader preparation and the install process are still the same as for 6.1, but you use loader 1.03b/1.04b and install DSM 6.2.3 (not 6.2.4): https://xpenology.com/forum/topic/7973-tutorial-installmigrate-dsm-52-to-61x-juns-loader/

The safe way is to use a new USB and a single empty disk: disconnect the "old" 6.1 USB and disks and try to successfully install 6.2.3 with the new USB (you can use the same sn and mac as you did with 6.1, but you have to match the USB vid/pid in grub.cfg to the new USB you are using). If you get this working, put away the single disk, connect your "old" disks, boot the new 1.03b USB and "migrate" (keep settings and data) to the new 6.2 DSM version. That way you know the new loader works before you start the update.
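Matching vid/pid to the new USB is the step that most often goes wrong. A minimal sketch, assuming jun's grub.cfg uses plain lines like set vid=0x058f and set pid=0x6387 (check your own file); the function name is hypothetical. It rewrites only those two lines and leaves the sn and mac lines alone, so they can stay the same as with 6.1.

```python
import re


def set_usb_ids(grub_cfg, vid, pid):
    """Return grub.cfg text with the 'set vid=' and 'set pid=' values replaced.

    vid/pid are the 4-digit hex IDs of the new stick (with or without '0x').
    """
    for key, value in (("vid", vid), ("pid", pid)):
        value = value.lower().removeprefix("0x")
        if not re.fullmatch(r"[0-9a-f]{4}", value):
            raise ValueError(f"{key} must be 4 hex digits, got {value!r}")
        # [ \t]* instead of \s* so the pattern never swallows a newline
        pattern = re.compile(rf"^([ \t]*set {key}=)0x[0-9A-Fa-f]{{4}}[ \t]*$", re.MULTILINE)
        grub_cfg, count = pattern.subn(rf"\g<1>0x{value}", grub_cfg)
        if count != 1:
            raise ValueError(f"expected exactly one 'set {key}=' line, found {count}")
    return grub_cfg
```

Edit a copy of grub.cfg, read it as text, pass it through set_usb_ids with the IDs of the new stick, and write the result back to the loader's 1st partition.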

-

Thanks, added both to the how-to.

-

That's the first step of the tutorial for downgrading with loss of configuration, kind of what you had in mind: you wanted to use the fresh 918+ 6.2.3 on the old 916+ disks and keep the data volume (in your case the old disks took over as "master" on boot instead of the 918+ disk). So read the tutorial on how to do it. The idea is to create a new installation on a single disk and overwrite the old disks' system partitions with that new installation. Technically you create a new RAID1 with one disk and then make the other disks part of that new RAID1, so all the other disks get what's on your first single disk. It's not the whole disk, only the 1st and 2nd partitions, which are the DSM system and swap and are a RAID1 across every disk in the system; your data volume is on the 3rd partition or above and is not touched when you just "repair" the system RAID1. https://xpenology.com/forum/topic/12778-tutorial-how-to-downgrade-from-62-to-61-recovering-a-bricked-system/
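The partition layout described above as a small lookup, in a minimal Python sketch. The numbering rule (1 = system, 2 = swap, 3 and above = data) is taken from the post; the md0/md1 names in the comments are the usual DSM layout, but treat them as an assumption and compare with /proc/mdstat. The function name is illustrative.

```python
import re


def partition_role(partition):
    """Classify a DSM disk partition such as 'sda1' or 'sdb5' by its number."""
    match = re.search(r"(\d+)$", partition)
    if match is None:
        raise ValueError(f"{partition!r} looks like a whole disk, not a partition")
    number = int(match.group(1))
    if number == 1:
        return "system"  # DSM system, RAID1 over all disks (md0, assumed)
    if number == 2:
        return "swap"    # swap, RAID1 over all disks (md1, assumed)
    return "data"        # 3rd or above: data volume, untouched by a system repair


for part in ["sda1", "sda2", "sda3", "sdb5"]:
    print(part, partition_role(part))
```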

-

Drivers requests for DSM 6.2

IG-88 replied to Polanskiman's topic in User Reported Compatibility Thread & Drivers Requests

The NIC is an Atheros AR8121 -> atl1e.ko, which is part of jun's default driver set, so there is nothing to request as the driver is already there.

You might want to have a look at the tutorial section: https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/

1.04b (918+) needs at least a 4th gen Intel CPU (Haswell), so no way with that old CPU. Your links seem to be all about 918+, which is not going to work. I'd say try the 1.03b (DSM 6.2) or 1.02b (DSM 6.1) loader. It seems not to be a UEFI BIOS, so even 1.03b should work easily. SATA in the BIOS needs to be set to AHCI (might also be called "extended" mode).
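To get from a chip name like AR8121 to a module name like atl1e, the output of lspci -k from a normal Linux booted on the box is enough. A minimal parser sketch, assuming the usual lspci -k layout (a device line, then indented "Kernel driver in use:" and "Kernel modules:" lines); SAMPLE is illustrative, not captured from that machine, and the function name is made up. The module name is what you would then look for as a .ko in the loader's extra.lzma.

```python
def drivers_by_device(lspci_k_output):
    """Map each device line of 'lspci -k' output to its driver and module names."""
    devices = {}
    current = None
    for line in lspci_k_output.splitlines():
        if not line.strip():
            continue
        if not line[0].isspace():
            # Unindented line: a new device, e.g. '02:00.0 Ethernet controller: ...'
            current = line.strip()
            devices[current] = {"in_use": None, "modules": []}
        elif current is not None:
            key, _, value = line.strip().partition(":")
            if key == "Kernel driver in use":
                devices[current]["in_use"] = value.strip()
            elif key == "Kernel modules":
                devices[current]["modules"] = [m.strip() for m in value.split(",")]
    return devices


# Illustrative sample only
SAMPLE = (
    "02:00.0 Ethernet controller: Qualcomm Atheros AR8121/AR8113/AR8114 Gigabit or Fast Ethernet (rev b0)\n"
    "\tKernel driver in use: atl1e\n"
    "\tKernel modules: atl1e\n"
    "00:1f.2 SATA controller: Intel Corporation NM10/ICH7 Family SATA Controller [AHCI mode] (rev 01)\n"
    "\tKernel driver in use: ahci\n"
    "\tKernel modules: ahci\n"
)

for device, info in drivers_by_device(SAMPLE).items():
    print(device.split(": ", 1)[0], "->", info["in_use"] or info["modules"])
```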

HP Microserver DSM 6.2.3-25426 Update 3 failed

IG-88 replied to sandisxxx's topic in The Noob Lounge

I'd suggest booting a rescue Linux and checking whether it was an update to 6.2.4. I opened a new thread in the tutorial section: https://xpenology.com/forum/topic/42765-how-to-undo-a-unfinished-update-623-to-624-no-boot-after-1st-step-of-update/

If it's unclear whether it was an update, don't start deleting anything; instead check whether update files are present. It's also good to check the content of /etc/VERSION and /etc.defaults/VERSION; they should not have 6.2.4 in them. Another indicator of a 6.2.4 update is the content of the loader's 2nd partition: zImage and rd.gz are renewed (look at the file date).
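Checking the VERSION files can be scripted once the DSM system partition is mounted (for example at /mnt). A minimal sketch, assuming the key="value" format with keys like majorversion, minorversion, productversion and buildnumber; the key names are an assumption, so compare with the VERSION file from your own *.pat. Function names are illustrative.

```python
def parse_version_file(text):
    """Parse a DSM VERSION file made of key="value" lines into a dict."""
    values = {}
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if sep:  # skip blank or malformed lines
            values[key.strip()] = value.strip().strip('"')
    return values


def dsm_version(text):
    """Return (version, buildnumber), e.g. ('6.2.3', '25426').

    Falls back to majorversion.minorversion if productversion is missing.
    """
    values = parse_version_file(text)
    version = values.get("productversion") or "{}.{}".format(
        values.get("majorversion", "?"), values.get("minorversion", "?"))
    return version, values.get("buildnumber")


# e.g. dsm_version(open("/mnt/etc.defaults/VERSION").read())
```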

Maybe you updated to 6.2.4? You can try this: https://xpenology.com/forum/topic/42765-how-to-undo-a-unfinished-update-623-to-624-no-boot-after-1st-step-of-update/?do=getNewComment

But before trying to delete anything you should check whether the files are present. If there are no update files, then it's something else, and you should check the /etc.defaults/VERSION file for the DSM version installed.

Your data is on different partitions of the disks, and DSM does not touch them on system updates. You can use the same method with the rescue Linux to access your data without DSM. In most cases it's also possible to boot an additional disk (or USB) with Open Media Vault; it should detect the RAID of the DSM data volume and show it.

The link above also mentions a method where you use a new DSM installation on a separate disk to overwrite the DSM (system partition) on the original drives (still not touching the data volume; the system repair only touches the 1st partitions on all disks).
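A read-only sketch of "check whether the files are present", assuming the DSM system partition is mounted at /mnt as in the undo how-to. The file names are the ones that how-to removes; the function name is hypothetical, and nothing is deleted. It only reports what it finds, interpreting the result is still up to you.

```python
from pathlib import Path

# Leftovers of an unfinished update, relative to the mounted system partition
# (names copied from the undo how-to)
UPDATE_LEFTOVERS = [
    "SynoUpgradePackages",
    "SynoUpgrade.tar",
    "SynoUpgradeindex.txz",
    "SynoUpgradeSynohdpackImg.txz",
    "checksum.syno",
]


def find_update_leftovers(root="/mnt"):
    """List update files present under root; read-only, nothing is removed."""
    root = Path(root)
    found = [name for name in UPDATE_LEFTOVERS if (root / name).exists()]
    patch_dir = root / ".syno" / "patch"
    if patch_dir.is_dir():
        found += [p.relative_to(root).as_posix() for p in sorted(patch_dir.iterdir())]
    return found


# e.g. print(find_update_leftovers("/mnt"))
```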

-

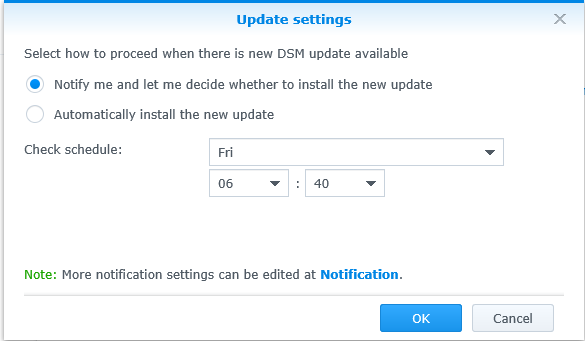

This is kind of a work in progress and not perfect for all scenarios, but as Synology now offers 6.2.4 as an update in the DSM GUI (instead of 6.2.3 U3), more people might run into this problem. Some people already tested this here (https://xpenology.com/forum/topic/41473-dsm-624-25554-install-experience/), but I wanted to continue in an easier-to-find place and sort out problems and fine-tune it here in the tutorials and guides section.

There is the more complicated way of going back to 6.2.3 while keeping all settings and data, which needs some general experience and some Linux skills, and an easier way where you keep your data but lose the settings (users, shares, ...). The skills needed for the easier way are more or less the same as what you need to install XPEnology; a link to it is added down below as a PS.

Download a rescue/live Linux like SystemRescueCd (I used an "older" version 6.0.3, mdadm and lvm worked out of the box; any newer one should be fine too) and transfer it to a USB stick (not your DSM boot USB) to boot from it.

Assemble your RAID1 system partition like here (1st partitions of all disks as /dev/md0). Skip anything about swap or the volume1 data partition, we only need access to the DSM system partition: https://xpenology.com/forum/topic/7004-tutorial-how-to-access-dsms-data-system-partitions/

Mount the assembled RAID1 to /mnt with this:
mount /dev/md0 /mnt

Then remove some files with this:
rm -rf /mnt/SynoUpgradePackages
rm -f /mnt/SynoUpgrade.tar
rm -f /mnt/SynoUpgradeindex.txz
rm -f /mnt/SynoUpgradeSynohdpackImg.txz
rm -f /mnt/checksum.syno
rm -f /mnt/.syno/patch/*

Also check the files containing the DSM version (they are on the mounted DSM system partition, not in the rescue Linux's own /etc):
cat /mnt/etc/VERSION
cat /mnt/etc.defaults/VERSION

If the one in /etc shows 6.2.3 and the one in /etc.defaults shows 6.2.4, delete or rename the one with 6.2.4 and copy the one with 6.2.3 into its place (a VERSION file with slightly more content is also in the *.pat file, which can be opened with 7-Zip; it can be used for comparison too). Reports say it also works if you just delete both VERSION files.

Shut down the rescue Linux:
shutdown -h now

Now you have to restore the kernel files on your boot USB to 6.2.3 (the update also replaces files on the loader's 2nd partition). Win10 can have some difficulties mounting the 2nd partition of the loader, so look here: https://xpenology.com/forum/topic/29872-tutorial-mount-boot-stick-partitions-in-windows-edit-grubcfg-add-extralzma/ (it can also be done with Linux; I have not tried which other tools will extract the kernel files, but if you are familiar with Linux you will find out: https://xpenology.com/forum/topic/25833-tutorial-use-linux-to-create-bootable-xpenology-usb/)

On the 2nd partition delete all files except extra.lzma and extra2.lzma (if it's 3615/3617 then it's just extra.lzma). Use 7-Zip to open "DSM_xxxxx_25426.pat" (the DSM 6.2.3 install file, depending on your DSM type 3615/3617/918+), extract "rd.gz" and "zImage" and copy them to the 2nd partition of your XPEnology USB.

The assumption here is that you already had 6.2.3 before the 6.2.4 update attempt; if not, you would use the kernel files from the *.pat of the 6.2.x you had installed. As an example of what could go wrong: if you had 6.2.2 and did the 6.2.4 update, your extra/extra2.lzma on the loader were usually replaced with special ones made for 6.2.2. If you add 6.2.3 kernel files to the 6.2.2 extra/extra2 (drivers), most of the drivers will not work because of the incompatibility of 6.2.1/6.2.2 with 6.2.0/6.2.3. If you are unsure about the extra/extra2 or your DSM version, use the original extra/extra2 from jun's loader (the img file can be opened with 7-Zip; the loader's kernel files and the drivers in its extra/extra2 are 6.2.0 level) or use an extended version of extra/extra2 made for 6.2.3, and use the kernel files of 6.2.3. If you had an older 6.2.x, that would do an update to 6.2.3.

Put your USB back into the XPEnology system, boot up, find it on the network (I used Synology Assistant) and "migrate" to version 6.2.3 (keeps data and settings). Don't use the internet option, that will do 6.2.4 again; use manual install and give it the 6.2.3 *.pat file (which you already have, as you needed it to restore the kernel files on the loader). It will boot two times, once for 6.2.3 and a 2nd time for 6.2.3 U3 (it will be downloaded automatically if an internet connection is present).

Everything should be back to normal except patches like the NVMe SSD patch (or other stuff you patched after installing 6.2.3 that does not survive DSM updates).

Oh, and one more thing: check your update settings, it should look like this.

Please comment on how to make it easier to follow; it's just a short version of what I tried once.

PS: If all that sounds too complicated, it's still possible to use the other downgrade method (but you will lose all settings and end up with a factory default DSM; your data volume(s) would still be unchanged): https://xpenology.com/forum/topic/12778-tutorial-how-to-downgrade-from-62-to-61-recovering-a-bricked-system/
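The "delete everything except extra.lzma/extra2.lzma" rule for the loader's 2nd partition, as a minimal Python sketch that only plans the cleanup from a file listing (the function name is illustrative; no files are touched). After deleting, zImage and rd.gz from the 6.2.3 *.pat go back onto the partition.

```python
# Driver archives to keep; 3615/3617 loaders only have extra.lzma
KEEP_ON_PARTITION_2 = {"extra.lzma", "extra2.lzma"}


def plan_partition2_cleanup(file_names):
    """Split the 2nd-partition listing into (keep, delete), both sorted."""
    keep = sorted(n for n in file_names if n in KEEP_ON_PARTITION_2)
    delete = sorted(n for n in file_names if n not in KEEP_ON_PARTITION_2)
    return keep, delete


# e.g. plan_partition2_cleanup(os.listdir("/path/to/mounted/partition2"))
```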

-

HP Microserver DSM 6.2.3-25426 Update 3 failed

IG-88 replied to sandisxxx's topic in The Noob Lounge

In the process of the update, the kernel is written to the USB and on the next boot it starts with the new kernel; in the case of 6.2.4 it stops there, as it can't boot anymore. You could post the rd.gz or extract it yourself with 7-Zip: first extract the rd.gz (ignore warnings), go into the directory created and extract the file "rd"; that results in a directory rd~ that contains the files. In /etc you will find the file VERSION, and that tells you what the update was.
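The same rd.gz inspection without 7-Zip, as a minimal Python sketch. It assumes rd.gz is a cpio archive in the common "newc" format, compressed with either gzip or xz/lzma (the file name does not reliably tell which, so the magic bytes decide); both are my assumptions, not from the post. It reads etc/VERSION straight out of the archive instead of unpacking it to disk; function names are illustrative.

```python
import gzip
import lzma


def decompress_ramdisk(data):
    """Undo the outer compression of rd.gz (gzip, or xz/lzma despite the name)."""
    if data[:2] == b"\x1f\x8b":
        return gzip.decompress(data)
    return lzma.decompress(data)  # default FORMAT_AUTO accepts .xz and legacy .lzma


def cpio_newc_find(archive, wanted):
    """Return the content of `wanted` (e.g. 'etc/VERSION') from a newc cpio archive."""
    pos = 0
    while pos + 110 <= len(archive):
        header = archive[pos:pos + 110]
        if header[:6] not in (b"070701", b"070702"):
            raise ValueError("not a newc cpio archive")
        # 13 fields of 8 hex digits follow the 6-byte magic
        fields = [int(header[6 + i * 8:14 + i * 8], 16) for i in range(13)]
        file_size, name_size = fields[6], fields[11]
        name_start = pos + 110
        name = archive[name_start:name_start + name_size - 1].decode()  # drop NUL
        data_start = (name_start + name_size + 3) & ~3  # header+name padded to 4
        if name == "TRAILER!!!":
            break
        if name.removeprefix("./") == wanted:
            return archive[data_start:data_start + file_size]
        pos = (data_start + file_size + 3) & ~3  # file data padded to 4
    return None


# Usage:
# with open("rd.gz", "rb") as fh:
#     print(cpio_newc_find(decompress_ramdisk(fh.read()), "etc/VERSION").decode())
```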

That usually means using a hypervisor and virtual hardware. It kind of levels the field for hardware selection (as you use a kind of standard hardware in the VM), but it also adds new complexities: you still need to configure the VM (KVM if it's Unraid?), you still need to run DSM, and you can have lots of fun with updates. Also, Unraid is a NAS distro of its own, so it's kind of weird to use it as the base for a NAS VM; Proxmox seems to be used more often. In the end you trade one problem for another and still need know-how and time. There are lots of questions from people with ESXi and KVM (like Proxmox) too, so it might not be that much easier. If one already knows the hypervisor used, it can shave off time and spare problems, but for a usual non-IT first-timer it might be easier to digest the problems with the USB loader on bare metal (maybe 2-3 YouTube videos help) and go with that. If there are no long-term benefits from the hypervisor, it just adds complexity, as you would need to update, back up (and document) both the hypervisor and DSM.

-

HP Microserver DSM 6.2.3-25426 Update 3 failed

IG-88 replied to sandisxxx's topic in The Noob Lounge

There are some options. Btw, if redoing the USB loader, the one thing that needs to be right is the USB vid/pid; sn and MACs can be changed later.

The description sounds more like you updated to 6.2.4.

One thing, if it's an AMD Microserver: check the C1E function in the BIOS (needs to be disabled); maybe it was reset at some point (an empty CMOS battery and disconnecting from power can do that).

Can you boot a rescue Linux (like SystemRescueCd) from USB and mount the system partition? I wrote a small how-to on how to remove the unfinished update: https://xpenology.com/forum/topic/41473-dsm-624-25554-install-experience/?do=findComment&comment=195970
You would check whether update files and directories are present and check the content of the VERSION files in /etc and /etc.defaults. If there are no update files and the VERSION files show 6.2.3 U3, it would be kind of odd, as HP Microservers have no problems like this when updating from 6.2.3 U2 to U3.
You would be able to reset the VERSION files to the state of 6.2.3 (without update), put the 6.2.3 kernel files (zImage and rd.gz) on the loader and then "migrate" to 6.2.3 without update. The VERSION file to compare with and the kernel files are in "DSM_DS3615xs_25426.pat" (just open the *.pat file with 7-Zip and all 3 files should be visible).

There are other methods, like installing fresh (6.2.3) to a single empty disk and then "adding" the old disks to that (there is a tutorial for downgrading like this), but it's not the best choice as it would reset DSM: you would lose users and shares, plugins would need to be reinstalled and Docker would need to be reconfigured. That method does not need many special skills or tools, mostly the basics already known to set up a new USB and install DSM: https://xpenology.com/forum/topic/12778-tutorial-how-to-downgrade-from-62-to-61-recovering-a-bricked-system/

DS1815+? It seems both are 4-core systems. Having enough CPU cores for parallel threads still seems important; a 4-core CPU is suggested. I had an older Core2Quad for a while and no problems getting 1G NIC speed. It looks like the AMD 2-core CPU is holding the system back from being faster: older AMD CPUs are known to be slower than Intel at the same clock, and this CPU has just 1 GHz ... and only 2 cores ...

You could fire up Open Media Vault from external media like USB, let it mount the Synology data volume and test over the network whether it does more than DSM.

Maybe a reasonably priced Gemini Lake (like in the 920+) is a better alternative, or wait for some newer Elkhart Lake: https://www.dfi.com/product/index/1535 With its PCIe 3.0 it can take a PCIe AHCI card capable of PCIe 3.0 (5-6 ports, like JMB585 or ASM1166) and have good performance (and if there is a 2.5 GBit Realtek NIC onboard, you could also get some more network speed; Intel 2.5G is not supported, as Intel does not deliver drivers outside of kernel 5.x).

-

Oh, the driver is present, but jun's original extra.lzma (rc.modules) does not seem to load it. I kept the driver in my extended extra.lzma and added it to rc.modules (the version I compiled from the Synology kernel source did not work at all). It might work or not; if not, Synology's kernel prevents it (as with the rest of the pata_..., sata_... drivers -> https://xpenology.com/forum/topic/9508-driver-extension-jun-102bdsm61x-for-3615xs-3617xs-916/, nothing changed in 6.2 in that regard). Here you can download the extended 1.03b extra.lzma: https://xpenology.com/forum/topic/28321-driver-extension-jun-103b104b-for-dsm623-for-918-3615xs-3617xs/

AHCI is the way to go. The NM10 chipset does support AHCI, usually labeled "extended" mode in the BIOS ("compatible" would be the old IDE mode); it might be found under "Integrated Peripherals / Onboard SATA Function".

-

Try it with this:
mdadm --stop /dev/md127
mdadm --zero-superblock /dev/sda3
mdadm --zero-superblock /dev/sdb3

Or, in the state shown above (system and swap RAID1 partitions deleted), install DSM again with the loader; that also resets the disks completely (the data volume of the old RAID is removed). The resulting installation is then just the system, and even if the data volume were still there, you could delete it in the DSM GUI (delete volume and storage pool).

I usually do this under Windows (USB dock for 2 disks) and use "dmde" (it's more of a recovery tool, but you can also nicely edit the partition table with it, aka delete). "MiniTool Partition Wizard Free 12" would surely work too.

-

I edited it a little, with a comment on what could happen if you had DSM 6.2.1/6.2.2 before. In that case the often-used extended extra/extra2 specially made for 6.2.2 will often prevent the system from loading drivers; it would also need an extra/extra2 for 6.2.3 in that case.

In general it's best to have a serial cable (null modem cable) to monitor the console with PuTTY in serial mode. You would see drivers loading or crashing, and even if network drivers failed you would be able to log in locally on the console after boot and check with "dmesg".

If the VERSION files in /etc and /etc.defaults show 6.2.3, it should be OK (I guess the one in /etc would be overwritten on next boot, so the one in /etc.defaults is the important one). If both VERSION files are 6.2.3, then look at the extra.lzma on the loader and replace it with either jun's original or my newer one made for 6.2.3.
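The VERSION reasoning above as a small decision function, a minimal sketch only. It encodes the guess from the post that /etc.defaults/VERSION is the one that matters and /etc/VERSION gets overwritten on next boot; that guess is not verified, and the returned labels and function name are made up for illustration.

```python
def version_file_action(etc_version, etc_defaults_version, target="6.2.3"):
    """Suggest what to do with the two VERSION files after an aborted update."""
    if etc_version == target and etc_defaults_version == target:
        return "ok"  # both fine: next, check extra.lzma on the loader
    if etc_version == target:
        # /etc.defaults has the newer version: rename it and copy /etc/VERSION over
        return "copy_etc_to_defaults"
    if etc_defaults_version == target:
        # assumption from the post: /etc/VERSION is rewritten from /etc.defaults on boot
        return "ok_defaults"
    # neither shows the target: compare with the VERSION file inside the matching .pat
    return "restore_from_pat"


print(version_file_action("6.2.3", "6.2.4"))
```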

-

So you didn't have the problem from above and can run an (older) DSM.

That's not how DSM works: disks get "initialized" before a data volume is created, and that means the DSM system is copied to the disk. The DSM system is a RAID1 over all disks; it's 2.4GB (system) and 2GB (swap) that are used on every disk before the data volume partition is created.

Anyway, good luck with your new NAS.
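A quick worked example of what that means per disk, using the sizes from the post (2.4GB system, 2GB swap). It is a rough sketch: alignment gaps and GB vs GiB are ignored, and the function name is illustrative.

```python
SYSTEM_GB = 2.4  # DSM system partition, RAID1 over all disks (figure from the post)
SWAP_GB = 2.0    # swap partition, RAID1 over all disks (figure from the post)


def space_left_for_data(disk_sizes_gb):
    """Per-disk GB left for the data partition after DSM's own partitions."""
    return [round(size - SYSTEM_GB - SWAP_GB, 1) for size in disk_sizes_gb]


print(space_left_for_data([4000, 500]))
```

Every disk loses the same 4.4GB, whether it holds data or not, because the system and swap RAID1 span all disks.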

-

OMV isn't that bad either. Error 13 is usually a wrong vid/pid in grub.cfg. Every now and then there are cases where it supposedly doesn't work despite a correct vid/pid, but I have never been able to reproduce that here.
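Since error 13 usually means the vid/pid in grub.cfg does not match the stick, here is a minimal sketch to compare the two. It assumes grub.cfg lines like set vid=0x058f / set pid=0x6387 and an lsusb line from a Linux box like "Bus 002 Device 003: ID 0781:5571 ..."; the example IDs are illustrative and the function names hypothetical.

```python
import re


def usb_ids_from_grub(grub_cfg):
    """Read (vid, pid) the loader expects from 'set vid=0x....' / 'set pid=0x....'."""
    ids = []
    for key in ("vid", "pid"):
        match = re.search(rf"^[ \t]*set {key}=0x([0-9A-Fa-f]{{4}})[ \t]*$", grub_cfg, re.MULTILINE)
        ids.append(match.group(1).lower() if match else None)
    return tuple(ids)


def usb_ids_from_lsusb(line):
    """Extract (vid, pid) from an lsusb line such as 'Bus 002 Device 003: ID 0781:5571 ...'."""
    match = re.search(r"\bID ([0-9a-fA-F]{4}):([0-9a-fA-F]{4})\b", line)
    if match is None:
        raise ValueError("no 'ID vvvv:pppp' found in line")
    return match.group(1).lower(), match.group(2).lower()


cfg = "set vid=0x058f\nset pid=0x6387\n"
stick = "Bus 002 Device 003: ID 0781:5571 SanDisk Corp. Cruzer Fit"
if usb_ids_from_grub(cfg) != usb_ids_from_lsusb(stick):
    print("vid/pid mismatch, likely cause of error 13")
```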

-

Yes, but if you want to keep the data, you should only delete the first two partitions (if it lets you; they are RAID partitions, so normally you would start the RAID with mdadm and then manage the partitions).

But you can also simply do a clean installation (6.1 or 6.2) on an additional disk, put it first in the system and then attach the other disks. It should then boot from that one disk, detect the volume on the others and offer to repair the system partitions on the other disks, which means it writes the system from your single disk over the others. Afterwards shut down, remove the helper disk, and that's it. It's available as a tutorial: https://xpenology.com/forum/topic/12778-tutorial-how-to-downgrade-from-62-to-61-recovering-a-bricked-system/

You create a new RAID1 (system and swap), make the old disks members of this RAID (which overwrites the old system) and then take the additional disk out of the RAID1 again. The RAID (3rd partition) on the old disks stays unchanged.

-

Dell R720XD With H310 in IT Mode - Not all HDD seen

IG-88 replied to Delluser's topic in The Noob Lounge

Ever used a search engine on the internet? Try "rdm esxi vmware".

Sadly no one takes the time to watch all three parts of that. Maybe search the forum, I wrote about that more than once. He never found out what went wrong and gave up (and he never had a deeper look into XPEnology, or he would have known that 45 is not possible like that). 24 is the max for now, until someone takes some time and effort to look into quicknick's loader, but that's a different story.

That's more what you need: Expand XPenology beyond 12 drives, https://www.youtube.com/watch?v=2PSGAZy7LVQ

Yes and NO. It's a combination of two standard Linux tools, mdadm and lvm2 (and the file system's ability to "grow"), so any Linux should be able to read an SHR volume. What's special about Synology is that it creates and extends things automatically, without the user having any trouble or even seeing how it works. But it needs some basic understanding of the matter: your example with 3 x 8TB plus a fourth, bigger disk in a 4-disk unit will not work as expected, as the part of the added disk above 8TB has no redundancy and would not be used in an SHR. Only if one of the three 8TB disks is also replaced with a bigger disk would you be able to use the additional space, because there would then be space above 8TB on two disks, and that would become a RAID1 (I'd suggest reading Synology's article about SHR as an intro).

It would be possible to do all this (creating and extending SHR volumes) manually, but it would need some knowledge about the tools used; the clever scripting and use of Linux's abilities is what makes it SHR. If something goes wrong, such volumes are more difficult to recover; nothing serious for professionals, but it can be a challenge for first-timers. In a well-organized NAS world there is also a backup, resulting in little or no need to recover broken volumes. Especially when using old and unreliable hardware there is a higher chance of things going south. There is a reason why server hardware is of higher quality (and the NAS is a server); using desktop hardware can be a risk, and whether it's worth the money is for the user to decide (some go with ECC RAM, some use more proven controllers, ...).
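A rough model of the 3 x 8TB example, as a minimal Python sketch: disks are sliced at each distinct size, and a slice shared by at least two disks is used with one disk's worth of redundancy (RAID1 for two disks, RAID5 for more), while a slice that only one disk reaches stays unused. This is a simplification of SHR-1 for illustration, ignoring the DSM system/swap partitions and file system overhead; the function name is made up.

```python
def shr1_usable(disk_sizes_tb):
    """Approximate SHR-1 usable capacity by stacking RAID layers."""
    sizes = sorted(disk_sizes_tb)
    usable = 0
    previous = 0
    for index, size in enumerate(sizes):
        height = size - previous          # thickness of this slice
        disks_in_slice = len(sizes) - index
        if height > 0 and disks_in_slice >= 2:
            # one disk's worth of each slice goes to redundancy
            usable += (disks_in_slice - 1) * height
        previous = size
    return usable


print(shr1_usable([8, 8, 8, 12]))   # 4 TB above 8TB on one disk: unused
print(shr1_usable([8, 8, 12, 12]))  # 4 TB above 8TB on two disks: RAID1
```

The first call returns 24 (same as four 8TB disks), the second 28, which matches the point above that the extra space only becomes usable once a second disk is bigger too.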
No, it seems no other NAS company is pursuing this; also, Synology disabled this feature on the business hardware running DSM.

Look for Docker support; that way the "extension" in question does not depend on the brand, as is the case with Synology- or QNAP-specific packages. Maybe look into Open Media Vault as an alternative: way more open and might be easier to handle. DSM is a closed-source appliance and has some problems because of this (and it's hacked, so from time to time Synology "acts", usually with new major versions).