-

Posts

4,641 -

Days Won

212

Everything posted by IG-88

-

Tutorial: Install 6.x on Oracle Virtualbox (Jun's loader)

IG-88 replied to kitmsg's topic in Tutorials and Guides

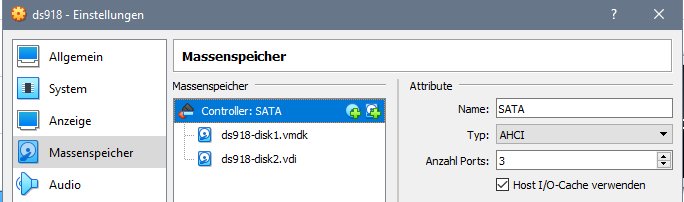

If the SATA controller is configured for 4 drives, then it's normal that the disks on the following controller (SCSI or whatever, as long as DSM has a driver) start at 5 and up. DSM counts the ports, used or not, and places the controllers one after another.

I never tested this, as I only use it for tests and compiling drivers. According to the documentation, VirtualBox can have up to 30 SATA drives in a VM (https://www.virtualbox.org/manual/ch05.html), and loader 1.04b 918+ has a preconfigured max. of 16.

In ESXi it's suggested to have the boot image on the 1st SATA controller and the data disks (where DSM is installed to) on the 2nd or a later controller. That doesn't seem to be needed in VirtualBox on my system, but it can't hurt to try it.
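To make the port counting above concrete, here is a minimal sketch in Python. It only models the rule described in the post (every port counts, used or not, and controllers are numbered one after another). The function names and the example port counts are made up for illustration and are not part of DSM or the loader.

```python
def first_disk_slots(ports_per_controller):
    """Return the first DSM disk number that each controller's ports map to.

    Assumption (from the post): DSM counts every port, used or not,
    and places the controllers one after another.
    """
    slots = []
    next_slot = 1
    for ports in ports_per_controller:
        slots.append(next_slot)
        next_slot += ports  # unused ports still consume disk numbers
    return slots


def fits_loader_limit(ports_per_controller, max_disks=16):
    """Check the total port count against the loader's max. disk setting.

    16 is the preconfigured value mentioned for loader 1.04b 918+.
    """
    return sum(ports_per_controller) <= max_disks


if __name__ == "__main__":
    # Hypothetical VM: SATA controller with 4 ports, then a SCSI controller with 2
    print(first_disk_slots([4, 2]))
    print(fits_loader_limit([4, 2]))
```

With a 4-port SATA controller in front, the first disk on the second controller lands on slot 5, which matches the behaviour described above.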

It's a full Linux and is open to much more hardware than DSM.

No, DSM works with Ryzen too. You would need an additional GPU when you already have a 10G NIC in the one slot ... that's one of the reasons to think about microATX: more options if the new build still needs something more after a while.

Also, going hypervisor or baremetal is not final and can be changed if needed. When the disks are under direct control of DSM (like a controller in the VM or RDM mapping), it's still possible to just remove the hypervisor and use the whole install (disks) baremetal without reinstalling DSM or touching the data on the disks (or the other way around, from baremetal to hypervisor).

Then mini-ITX is the better choice, and choose more wisely before buying.

Btw. there were more expensive server mini-ITX boards with a 10G NIC onboard; that way you can keep the PCIe slot open. But it's over 2 years since I looked into that, so it might be outdated (the market moves and compact servers might not be that interesting anymore).

-

About possible hardware:

GIGABYTE W480M Vision W (microATX, 2 x PCIe x16 slots (1 x16, 1 x8), 2 x M.2 NVMe, 8 x SATA)

Intel Core i5-10500T, 6C/12T (TDP 35W)

The CPU can be different; the example is low power and affordable (200 bucks). The board keeps everything open, as it can have NVMe and more disks (but 8 x SATA is a comfy start). It might be overkill in options; if you go with mini-ITX you would have fewer options, but it might be good enough.

The only negative with the board would be that you can't use the 2nd NIC, as the 2.5GBit NIC from Intel has no driver outside kernel 5.x and DSM is based on kernel 3.10 and 4.4. But you are already planning a 10G NIC.

The ASUS XG-C100C will work, but if the systems are not too far apart, SFP+ might be a better choice (cheap DAC cables up to 7.5m and an affordable 4 or 8 port switch). SFP+ would be my choice now because of the switch option: multiport 10G RJ45 switches are way more expensive and power hungry, and SFP+ also has a lower latency.

-

Along with its flexibility, the hypervisor adds an amount of complexity. If you already have enough experience with a certain hypervisor, it will not cost too much time. The hypervisor is also an instance that needs updates/maintenance in addition to the system that is the real thing, and that complexity can add up to a point where you have more work with the hypervisor than with the DSM VM.

If it's really about having a single-purpose DSM install without bigger plans to extend to additional VMs (DSM can do Docker and VMs with VMM on a smaller scale), and if it's about putting it in place and forgetting about it rather than tinkering all the time, then carefully chosen hardware for 2 baremetal systems might be the better choice. The sole purpose of having a DSM (Synology) appliance is often to not care too much about it once it's set up and running; adding a hypervisor adds complexity in a way that can be a bother.

I don't know if NVMe in a VM (as a virtual SSD) is so much faster that it's worth the effort. Also, when thinking about baremetal with NVMe (918+, 8 thread limit): it needs two NVMe drives in DSM to use them as read/write cache, and most mini-ITX boards will just have one NVMe slot.

That will be the same as a normally connected SSD, but I would not do it, because it's much more difficult to handle and to replace than just normal 2.5" SATA drives. It's easier to have 2 or 4 drives of the same type and one (cold) spare; if one fails, it's just one part to replace. If it's M.2 SATA besides normal SATA, then it's two different spare parts.

One point we have not touched yet is power consumption. If it's 2 x NUC atm, then it will not draw much power. Using oversized hardware may result in a nice additional fee for power, so it might be worth a thought and a few minutes with a calculator to see what it will cost in a year.

That's a TLC SSD (and OK). If you look for different models, don't use QLC: it's way slower once the internal cache of the drive is exceeded. Also, it might be better to use 4 drives in RAID10 for more throughput (but you can start with 2 drives in RAID1 and extend if you feel you want more speed).

Btw. my last build was mini-ITX and I changed to microATX last year: way more options with its 4 PCIe slots, and there are also models with 2 x NVMe (if you want to keep that as an option).
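For the power consumption point, the "few minutes with a calculator" can be sketched like this in Python. The wattages and the price per kWh are made-up example numbers, not measurements of any real NUC or board; plug in your own values.

```python
def annual_power_cost(avg_watts, price_per_kwh, hours_per_day=24.0):
    """Estimate the yearly electricity cost of a system that runs continuously."""
    kwh_per_year = avg_watts / 1000.0 * hours_per_day * 365
    return kwh_per_year * price_per_kwh


if __name__ == "__main__":
    price = 0.30  # hypothetical price per kWh
    small = annual_power_cost(20, price)  # e.g. two small boxes at ~10 W each (assumed)
    big = annual_power_cost(60, price)    # e.g. one oversized build at ~60 W (assumed)
    print(f"small setup: {small:.2f} per year")
    print(f"big setup:   {big:.2f} per year")
    print(f"difference:  {big - small:.2f} per year")
```

The result is only as good as the average wattage you feed in, so measuring the idle draw with a plug-in power meter beats guessing from the TDP.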

-

I tested the hpsa.ko driver in my last extra.lzma with a P410 (which does not support HBA mode) as a RAID1 single disk, and the driver seemed to work: it was loaded (did not crash) and found the disk.

The P420 should be switchable into HBA mode and should work with disks in the same way as the LSI SAS HBAs in IT mode. You might need to boot a service Linux to switch the P420 into HBA mode, as (afaik) that one does not have a switch in the controller BIOS (later P4xx might have that).

-

If it's more about RAM and CPU power, you can use 3617 baremetal and just use normal SSDs as data volumes (RAID F1 mode) or as cache drives instead of NVMe. Using an 8 core with HT, or a beefier one with 12 or 16 cores without HT (disabled in BIOS), is no problem.

Whether SATA SSDs in RAID1, RAID10 or RAID F1 (the SSD equivalent to RAID5) will be OK depends on your requirements.

So 3617 with SATA SSDs might be a simple to handle solution. You can still add normal HDDs for slower but bigger storage as long as you have SATA ports; if the 10G NIC blocks your single PCIe slot, you can't extend for more SATA ports.

Security seems to be your concern when you still run a 5.2 system, and as long as you are doing operations and maintenance yourself (and/or make good documentation) it should be fine. Your 5.2 did not get updated to 6.x by accident, so no problem from that side I guess.

-

Yes, XPEnology uses the original DSM kernel (for different reasons), so you are bound to the limits of the DSM type you are using: https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/

Atm you use 8 real cores and 8 HT "virtual cores" (~20-25% of a real core for a HT core), so it's ~10 core performance atm. When disabling HT in the BIOS you will have 12 real cores (of the ~15 core performance you could expect from that 12+12 CPU).

If you want to stay with DSM, you could use a hypervisor like ESXi or Proxmox and have 2 DSM VMs to make use of all the CPU power.

Depending on your use case, you can also consider a baremetal install of Open Media Vault.
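The rough core math above can be written down as a small Python sketch. It uses the rule of thumb from the post (a HT thread is worth about 25% of a real core) and assumes that, with HT on, the thread limit is filled with core/HT pairs (8 real + 8 HT for a 16 thread limit). The function name and the `ht_gain` parameter are made up for the example; this is an estimate, not a benchmark.

```python
def effective_cores(physical_cores, ht_enabled, thread_limit, ht_gain=0.25):
    """Estimate usable performance in 'real core' units under a kernel thread limit."""
    if not ht_enabled:
        # Every thread is a real core, capped by the kernel limit
        return float(min(physical_cores, thread_limit))
    usable_threads = min(2 * physical_cores, thread_limit)
    real = usable_threads // 2        # assumed: threads come in core + HT sibling pairs
    ht = usable_threads - real
    return real + ht * ht_gain


if __name__ == "__main__":
    print(effective_cores(12, True, 16))   # HT on, 16 thread limit   -> 10.0
    print(effective_cores(12, False, 16))  # HT off, 16 thread limit  -> 12.0
    print(effective_cores(12, True, 24))   # no limit hit, full CPU   -> 15.0
```

So for a 12 core CPU on a 16 thread kernel, turning HT off is the better deal in this model.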

-

Mini-ITX brings some limitations when it comes to extensions, as it does not have many PCIe slots (usually just one).

Did you read about the general limitations of XPEnology? https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/

When doing Docker and VMs, RAM is more important. Very few people use ECC, as most build low cost systems with desktop hardware that does not support ECC. It can't hurt to have it when it's more important. Is it worth the money? That depends on how much more it costs and how you see it.

Here we have the 1st problem. If you look at the limits, you will see that only 918+ supports NVMe (and only as cache, with manual patching), but its kernel is limited to 8 threads. Even 3617 "only" has 16 threads, so it's a max. of 8 cores with HT, or more cores with HT disabled (if the BIOS can do that). When having something like a 12 core with HT, you might be better off with ESXi or Proxmox and using a DSM VM. That way you can handle resources more flexibly (CPU power for other VMs), the NVMe drives can be made virtual SSDs, and 3617 can handle them as normal drives or as cache. But you lose a lot of simplicity compared to a baremetal install.

That sounds a little low for HA; consult Synology's design guides for that. Maybe plan an added 10G NIC for the PCIe slot (x4 or better) and use a direct connection between the two HA units over the 10G connection (you don't need a switch for just connecting the two units). Also check the forum to see if HA is available without a valid serial.

XPEnology is still a hacked DSM appliance. There are things not working without "tweaking", and you can lose functions when updating if Synology changes things. If you can't install all security updates there are risks involved; for example, you can't install 6.2.4, which already contains new security fixes. It's also easy to "semi" brick or damage the system on updates when doing things wrong (like not disabling the write cache on updates when using 918+ with NVMe cache, or installing "too new" updates like 6.2.4), for example when other people who are not aware of the specialties handle the system. Keep that in mind.

Have you given Open Media Vault a thought? (I guess they will have no equivalent to HA, but web GUI, NAS and Docker will be there too.)

I don't know how close to production that system is supposed to be, but it can be riskier to do that than you expect it to be (if you don't have longer experience with XPEnology).
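The thread limit reasoning from this post can be put into a small Python sketch. The table only contains what is stated above (918+: 8 threads and NVMe as cache, 3617: 16 threads); the dictionary layout and the function name are made up for the example and are not anything DSM or the loader provides.

```python
# Limits as stated in the post; the structure itself is hypothetical
PLATFORM_LIMITS = {
    "918+": {"max_threads": 8, "nvme_cache": True},
    "3617": {"max_threads": 16, "nvme_cache": False},
}


def ht_recommendation(platform, physical_cores):
    """Suggest whether to keep HT on so that no real core is left unused."""
    limit = PLATFORM_LIMITS[platform]["max_threads"]
    if physical_cores * 2 <= limit:
        return "keep HT"      # all cores and their HT siblings fit
    return "disable HT"       # more real cores than core/HT pairs would fit


if __name__ == "__main__":
    for platform, cores in (("3617", 8), ("3617", 12), ("918+", 6)):
        print(platform, cores, "cores ->", ht_recommendation(platform, cores))
```

Once the core count alone exceeds the limit (for example 12 cores on 918+), disabling HT still leaves cores unused, which is where the hypervisor with a DSM VM comes in.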

-

Tutorial: Install 6.x on Oracle Virtualbox (Jun's loader)

IG-88 replied to kitmsg's topic in Tutorials and Guides

If you try loader 1.03b for DSM 6.2, use a SATA controller (ICH9 as chipset) as seen in my screenshot above; I also use 3615/17 as a VM that way.

There is not much difference besides the hardware transcoding support and the support for NVMe SSDs. (SHR will work ootb, but you already figured out how to activate that on 3615/17; there is also something about that in the FAQ here in the forum.)

Also, the 918+ loader already has a default of max. 16 disks, while the 3615/17 loader comes with max. 12 disks. But as your plans are based on more recent HDD sizes, that will not make a difference for you.

Just to make sure, please post the grub.cfg you used.

-

An original USB module has f400/f400 for vid/pid. To completely replace an original module you would also need to change a USB flash drive to have these IDs, and that part needs some reading, work and the right source material (a USB flash drive with the right hardware that you can get tools for).

-

Sorry, too much is lost in translation here, I can't get what you are on about. VM? We were talking about a normal baremetal install.

Also, the loader you use defines the main DSM version you have to use and also the type of DSM (like 3615 or 3617). I'd suggest using loader 1.03b for 3617, DSM 6.2.3. If you have problems with CSM mode, you can try loader 1.02b with DSM 6.1; this one can run legacy (CSM) and UEFI: https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/

You should read the normal install thread about 6.1; it's the same when doing a 6.2 install: https://xpenology.com/forum/topic/7973-tutorial-installmigrate-dsm-52-to-61x-juns-loader/

Maybe look for a YouTube video in your native language to get a better picture.
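The loader choice above can be summed up in a small Python lookup. It only encodes what is said in these posts (1.03b for 3615/17 with DSM 6.2.3 and CSM boot, 1.02b for DSM 6.1 with legacy or UEFI). The table layout and the function name are made up for the example; check the linked loader/platform reference for the full picture.

```python
# Loader facts as given in the posts; the structure is hypothetical
LOADERS = {
    "1.03b": {"dsm": "6.2.3", "types": ("3615", "3617"), "boot": ("csm",)},
    "1.02b": {"dsm": "6.1", "types": ("3615", "3617"), "boot": ("csm", "uefi")},
}


def pick_loader(boot_mode):
    """Return (loader, dsm_version) for a boot mode, preferring the newer loader."""
    for name in ("1.03b", "1.02b"):
        if boot_mode in LOADERS[name]["boot"]:
            return name, LOADERS[name]["dsm"]
    raise ValueError(f"no loader in the table for boot mode {boot_mode!r}")


if __name__ == "__main__":
    print(pick_loader("csm"))   # CSM/legacy boot works on the board
    print(pick_loader("uefi"))  # CSM gives trouble, fall back to 1.02b
```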

-

I'm not doing anything with WiFi, as Synology dropped that themselves (besides other things).

lspci_syno.txt shows it:

0000:00:1f.6 Class 0200: Device 8086:15fa (rev 11)

Not unexpected: as the board has a new chipset for 10th gen Intel, they also used a newer onboard NIC revision, and it will need a newer driver for sure. As we got a new 3rd wave shutdown on Easter, I guess I will have time to do something in that direction.
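If you want to pull the IDs out of such a listing yourself, here is a minimal Python sketch. It assumes the numeric line format shown in the post ("slot Class xxxx: Device vvvv:dddd"); the regex and function names are made up for the example. PCI class 0200 is an Ethernet controller and vendor 8086 is Intel.

```python
import re

# Matches numeric lspci lines like the one quoted in the post
LSPCI_LINE = re.compile(
    r"^(?P<slot>[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7])\s+"
    r"Class\s+(?P<cls>[0-9a-f]{4}):\s+"
    r"Device\s+(?P<vendor>[0-9a-f]{4}):(?P<device>[0-9a-f]{4})",
    re.IGNORECASE,
)


def parse_lspci_line(line):
    """Return slot, class, vendor and device IDs, or None if the line does not match."""
    match = LSPCI_LINE.match(line.strip())
    if match is None:
        return None
    return {key: value.lower() for key, value in match.groupdict().items()}


def is_ethernet(entry):
    """PCI class 0200 is 'Ethernet controller'."""
    return entry["cls"] == "0200"


if __name__ == "__main__":
    entry = parse_lspci_line("0000:00:1f.6 Class 0200: Device 8086:15fa (rev 11)")
    print(entry, is_ethernet(entry))
```

The vendor:device pair (here 8086:15fa) is what you compare against the IDs a driver supports.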

-

Where did you pick that up? ICH6 and upward can do AHCI according to my sources: https://ata.wiki.kernel.org/index.php/SATA_hardware_features

Maybe you should really read it? Page 2-18. You could even do a text search for AHCI in the PDF and find about 10 references to AHCI.

I'm not going to check any further if you don't bother to even search in a manual you are referencing ... good luck with your further efforts.

-

Even in your 2nd post you don't give any details about your hardware (board, CPU, added cards); it's kind of hard to give advice without any specific information.

In general there is less support in DSM 6.1/6.2, as Synology made changes to their custom kernel code and broke drivers. Without someone fixing it, there are lots of SATA and PATA drivers that don't work anymore: https://xpenology.com/forum/topic/9508-driver-extension-jun-102bdsm61x-for-3615xs-3617xs-916/

Afair it did work in 6.0, so you might try that version (DS3615xs 6.0.2 Jun's Mod V1.01): https://xpenology.com/forum/topic/7848-links-to-loaders/

-

Drivers requests for DSM 6.2

IG-88 replied to Polanskiman's topic in User Reported Compatibility Thread & Drivers Requests

Afaics that's MCP78 (https://de.wikipedia.org/wiki/Nvidia_nForce_700), and that's AHCI compatible in Linux (https://ata.wiki.kernel.org/index.php/SATA_hardware_features), so check that your BIOS setting for SATA is AHCI.

You might read the FAQ: RAID is not supported. DSM is built around software RAID with single disks, so use AHCI mode in the BIOS for the onboard controller (not IDE mode or RAID mode).

The extra controller depends on what it is, but in most cases it's not supported; look into using the onboard SATA in AHCI mode.

Start the USB from scratch, check the BIOS settings (if it's a UEFI BIOS, check that CSM mode is on and that you boot from the non-UEFI USB boot device) and the network cable.

Also try loader 1.02b for 3615/17 for DSM 6.1.

-

Tutorial: Install 6.x on Oracle Virtualbox (Jun's loader)

IG-88 replied to kitmsg's topic in Tutorials and Guides

What's your point? You say 6.2.4 is *** but you had to update your original system to it. Btw. that's no option for XPEnology users, as the current loader is not booting with 6.2.4 anymore (presumably new protection in the same way as in 7.0).

6.2.3 u3 can't be that bad: besides my own system there is a good number of people having done the u3 update without problems, so I would not issue a warning for u3. https://xpenology.com/forum/topic/37652-dsm-623-25426-update-3/

is that NEWS or a RUMOUR? - because you posted in that section

-

Tutorial: Install 6.x on Oracle Virtualbox (Jun's loader)

IG-88 replied to kitmsg's topic in Tutorials and Guides

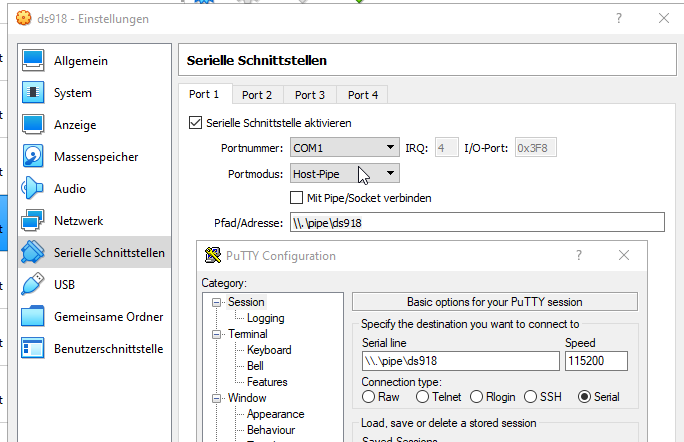

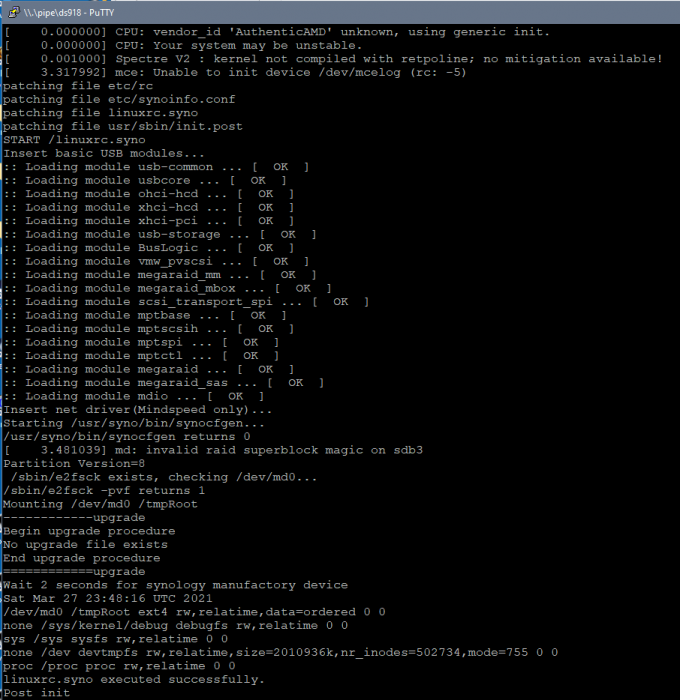

"Current" as in recent would be 6.2.4, but that's not working, so the last recent (usable) one is 6.2.3 (u3). As for the loader, there is just loader 1.04b for 6.2.0, as the kernel in Jun's loader is the one from DSM 6.2.0 (and the drivers in the default extra.lzma are made for 6.2.0).

Because of driver incompatibility it should only be 6.2.0 or 6.2.3; using 6.2.1 or 6.2.2 will result in crashing drivers when booting with the kernel from 6.2.1/6.2.2 (not all, but most). If adding an extra.lzma (and extra2.lzma if it's 918+), then it needs to be for 6.2.3, as any other version like 6.2.1/6.2.2 will be incompatible.

You should start with the original loaders from here: https://xpenology.com/forum/topic/7848-links-to-loaders/

No changes to extra.lzma or kernel files, just mod the grub.cfg for the right MAC that your VM is using. If you have no other XPEnology running in the network (that might already use that MAC), you can (to minimize things) just take the loader and enter its MAC into your VM (use 7zip to peek into the img file and read the grub.cfg).

If you try 1.04b 918+, your hardware still needs to fulfill the requirements for that, and that's 4th gen Intel (Haswell) or above: https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/ So maybe try 3615/17 and loader 1.03b.

You can have a serial console working when using VMs. These would be the settings for VirtualBox and PuTTY to communicate: start the VM and shortly after that the PuTTY session with the serial console, and you will see if the boot process is running.

It's supposed to look like this. When loading just the loader without an installed system, there would also be a good amount of messages about network drivers loading.
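The version rules for Jun's 6.2 loaders described in this post fit into a few lines of Python. This is only a summary of the statements above (6.2.0 and 6.2.3 usable, 6.2.1/6.2.2 crash most drivers, 6.2.4 does not boot); the function name and the status strings are made up for the example.

```python
def jun_62_status(dsm_version):
    """Classify a DSM 6.2.x version for use with Jun's 6.2 loaders, per the post."""
    if dsm_version in ("6.2.0", "6.2.3"):
        return "ok"
    if dsm_version in ("6.2.1", "6.2.2"):
        return "most drivers crash"
    if dsm_version == "6.2.4":
        return "loader does not boot"
    return "unknown"  # not covered by the post


if __name__ == "__main__":
    for version in ("6.2.0", "6.2.2", "6.2.3", "6.2.4"):
        print(version, "->", jun_62_status(version))
```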

That's not how DSM works: the bootloader is on USB (just GRUB and kernel) and the system is on EVERY disk as RAID1.

The log was just an example, because sometimes people just throw in some lines of log. But as we are on the logs now: as long as the system is installed to disk (like when having 6.1 running), there will be a log written to disk, and a failed update to 6.2 will leave a log. Even if the system can't be found in the network anymore, as long as it writes the log to disk you would be able to mount the system partition with a rescue Linux and extract the logs in /var/log/. dmesg and messages are the most common ones, but there are more and some Synology specifics, so there might be a special file for updates (I've never checked, as I never had an unexpected broken update).

Did you re-use the same USB from 6.1 for 6.2? If it's a different one, then try the one that's working for 6.1 also for 6.2. In theory it's possible to have problems on installing when writing the kernel to USB in the process of installing (I guess if you managed to adjust the USB vid/pid for 6.1 you will know how to handle this, as it's the same for 6.2).
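For the vid/pid check, a minimal Python sketch that reads the values out of a grub.cfg text and compares them with the IDs of the USB stick. It assumes the grub.cfg holds lines of the form `set vid=0x...` and `set pid=0x...`; the sample values below are made up, and the function names are hypothetical.

```python
import re

# Assumed line format: set <name>=<value>
SET_LINE = re.compile(r"^\s*set\s+(\w+)=(\S+)\s*$")


def read_settings(cfg_text):
    """Collect all 'set name=value' lines of a grub.cfg into a dict."""
    settings = {}
    for line in cfg_text.splitlines():
        match = SET_LINE.match(line)
        if match:
            settings[match.group(1)] = match.group(2)
    return settings


def usb_ids_match(cfg_text, stick_vid, stick_pid):
    """True if vid/pid in the grub.cfg equal the IDs of the USB flash drive."""
    settings = read_settings(cfg_text)
    if "vid" not in settings or "pid" not in settings:
        return False
    return (int(settings["vid"], 16) == stick_vid
            and int(settings["pid"], 16) == stick_pid)


if __name__ == "__main__":
    # Made-up sample, not taken from a real loader image
    sample = "set vid=0x1234\nset pid=0x5678\nset mac1=001122334455\n"
    print(read_settings(sample))
    print(usb_ids_match(sample, 0x1234, 0x5678))
```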

-

Virtualbox.spk cant be installed in DSM 7

IG-88 replied to smileyworld's question in General Questions

If you want to try it yourself, see DSM_Developer_Guide_7_0_Beta.pdf, page 64:

Privilege

DSM 7.0, packages are forced to lower the privilege by applying privilege mechanism explicitly. To reduce security risks, package should run as an user rather than root. Package can apply such mechanism by providing a configuration file named privilege.

With the configuration, package developer is capable to

- Control default user / group name of process in scripts
- Control permission of files in package.tgz
- Control file capabilities in package.tgz
- Control if special system resources are accessible
- Control group relationship between packages

To overcome the limitation that normal user cannot be used to do privileged operations, we provide a way for package to request system resources. Please refer to Resource for more information.

Setup privilege configuration

Just create a file at conf/privilege with preferred configuration.

{ "defaults": { "run-as": "package" } }

Compare with DSM_Developer_Guide_6_0.pdf, page 104.
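Creating that file can be sketched in Python like this. It just writes the JSON from the quoted guide to conf/privilege below a package source folder; the folder name `my_package` and the function name are made up, and nothing here is an official Synology tool.

```python
import json
from pathlib import Path


def write_privilege(package_root, run_as="package"):
    """Write conf/privilege with the 'run-as' default from the quoted guide."""
    conf_dir = Path(package_root) / "conf"
    conf_dir.mkdir(parents=True, exist_ok=True)
    target = conf_dir / "privilege"
    config = {"defaults": {"run-as": run_as}}
    target.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return target


if __name__ == "__main__":
    # "my_package" is a hypothetical package source folder
    path = write_privilege("my_package")
    print(path.read_text(encoding="utf-8"))
```

Whether the package then installs and runs on DSM 7 depends on the rest of the package (scripts that expect root will still fail).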
