Search the Community

Showing results for 'Supermicro x7spa'.

-

Hello, I followed the tutorials to install a 918+ with TCRP 0.9. Everything went fine in the end, but only after many attempts, and I didn't understand why the installation would not complete. Finally, I had to disconnect the network during installation, and that solved the problem. So I guess the SN and/or the network card used was the real problem. I used the default settings with RPLOADER, so I wonder about using a genuine SN and MAC address. Let me explain: I own a dead 918+, but I know its SN and MAC address. My question is: since I do not want to set everything up again, is there a way to change the SN and MAC to the genuine ones without reinstalling everything? I mean, is this easy to do? Will I lose anything? Sorry if this question has been asked before, but I searched the forum without success.

Things to know:

- My Supermicro motherboard has 2 NICs.
- My ASUS C100 is correctly recognized and now has a MAC address from Synology (runs fine); it is used to transfer files over the network without a gateway.
- The onboard 1 Gb NIC has its own real MAC and is the gateway of the system (so I think that was the problem).

So:

- MAC1, C100: Synology MAC (but not used)
- MAC2, 1 Gb: Supermicro MAC (used)
- MAC3 ???, 1 Gb: not recognized (I wonder why...?)

Well, sorry for my English. I hope I'm clear enough. Thank you a thousand times for your help and answers.

-

XPEnology DSM updates (successful and not so successful)

Vincent666 replied to XerSonik's topic in Software

DVA3221: DSM 7.2-64561

- update result: SUCCESSFUL
- DSM version before update: DSM 7.1.1-42962 Update 5
- loader version and model before update: arpl-v1.1-beta2a DVA3221
- loader version and model after update: arpl-i18n-23.5.8 DVA3221
- hardware: Supermicro x10SRA / Intel Xeon E5-2667 v4 / 64Gb DDR4 ECC
- comment: Updated with the package via the web interface, then switched and rebuilt the loader. Docker came back up without problems. The virtual machines had to be imported.

- 218 replies

Tagged with: dsm update, update error (and 3 more)

-

SAS/SATA controller compatibility?

Sultantiran replied to decide's topic in Hardware and Compatibility

I had exactly the same situation, with the same controller. Installing as DS3622xs+ helped. I set SataPortMap=1, although the disks were numbered starting from the 2nd slot rather than the first (the first one appears empty, so to speak). There was a discussion about SataPortMap between users and the author here; as I understood it, its meaning changed between NAS models and between TCRP versions. Roughly from this page onward:

I have 8 disks in RAID5, a Supermicro A1SRi-2758F motherboard with an Atom C2758 CPU, an LSI SAS 9211-8i HBA (IT Mode), UEFI mode. tinycore-redpill-uefi.v0.9.4.3, DSM_DS3622xs+_42962. After installation the system offered a migration; I chose the option without keeping settings (the second one). Then I loaded the settings I had saved earlier from the DS3615xs.

-

Hardware system: Supermicro X8SIE-F, Intel Core i5 660 @ 3.33 GHz | 32 GB DDR3 ECC | Intel® 3420 chipset | 4x Intel® 82574L Gigabit Ethernet controller | 6x HDD-T4000-HUS726040ALE61 | Boot: Jun's Loader 1.02b (ds3617xs), DSM_DS3617xs_15217 and all newer ones. I have also tried DS3617xs 6.1 Jun's Mod V1.02b (MBR_Genesys) .img and all the images for DS3615 xxx. The message is: "An error occurred - we cannot identify your NAS." Can anyone help me or tell me why, after the reboot, the system does not work and show the login / admin registration page? Or are there special drivers among the extensions that have to be added for this?

- 1 reply

Tagged with: supermicro x8sie-f, juns loader 1.02b (and 1 more)

-

Hi, I'm asking those with more experience than me on this. I'm trying to install DSM 6.2.0 on a Supermicro motherboard that came into my hands; the model is X8DTL-i with 2x Intel Xeon L5640, and I'm using loader 1.03b with DS3617xs. The board has 6 SATA ports and 3 DELL H200 HBA controllers (so SAS2008) with LSI9211-8i firmware in IT MODE to manage the HDDs. The case has 24 3.5" slots, but in total you could connect 30 drives (6 on the mobo and 8x3=24 on the HBAs), quite a lot of space... Anyway, the point is that I can't manage to set up the mobo BIOS correctly to make it work well. Has anyone had, or does anyone have, experience with this? Especially with Supermicro BIOS settings. Thanks

-

So, the other day I was gifted an old server based on the Supermicro X8SI6-F. It sits in a Fractal Design Define R5, which easily supports 8+ drives. As always, the first thing that came to mind was that this could be used with XPEnology ;-) It has no problem booting Jun's 1.3b and installing DSM 6.3.x (DS3615xs), but it insists on using the 6 SATA2 (3Gb/s) ports as drives 1-6, before the 8 6Gb/s ports on the integrated SAS controller (IT-mode). This gives me a count of 6+8=14 drives, and if I want to use them all, I will have to modify the max disk setting in synoinfo.conf, with the risk of "losing" the last 2 SAS ports when updating. I was hoping I could just disable the SATA ports in the BIOS and use only the 8 SAS ports, but I found no option to do so. Now, my question to the gurus here: Can I remap the drive order in a config file (without having it broken during updates) and keep my 8 SAS ports as drives 1-8? (and maybe use 4 of the 6 SATA ports if needed)

- 11 replies

Tagged with: remap, supermicro (and 2 more)

-

Help with Ableconn PEXM2-130 Dual PCIe NVMe M.2 SSD Adapter

R2D2 posted a question in General Questions

Hello, I need some help and advice on how to get my PCIe cards working in DSM. Specifically, the Ableconn dual NVMe adapter. I am not really sure if this is a hardware or software issue. I have a baremetal install and it is working quite well. Here is the information:

- MB: Supermicro X10SLM+-F
- CPU: Intel Xeon E3-1270v3
- RAM: 32GB
- 10Gb Mellanox card in PCIe2 slot
- Loader: ARPL v1.1-beta2a
- Model: DS3622xs+
- Build: 42962
- DSM 7.1.1-42962 Update 4
- Volume 1: 4x 2.5” 1TB SSD, RAIDF1, Btrfs
- Volume 2: 2x 8TB Seagate IronWolf, RAID 1, Btrfs
- 2U rackmount chassis

DSM is showing 6 filled HD slots and 6 open ones. I have 2 PCIe 3.0 x8 slots in which I want to install the Ableconn PEXM2-130 Dual PCIe NVMe M.2 SSD Adapter cards. I have confirmed with the manufacturer that the motherboard does not support bifurcation, hence these cards have an onboard controller. Despite the card’s support for different flavors of Linux, the ARPL loader is not recognizing the card. (I only have one card installed at the moment.) I have tried to reconfigure and rebuild the loader, but DSM is not showing the NVMe drives. I am stuck. I will be using the drives for storage, not caches.

1) Is there a driver available to fix the controller and card recognition, so that I can use the SSD drives?
2) Is it possible to keep the baremetal installation and “virtualize” the SSDs through some kind of docker app?
3) Is there a different model number that would work better for me?
4) Should I scrap the baremetal install, then virtualize everything? If so, which hypervisor should I use? I think I have a registered copy of ESXi lying around, but is that the best one to use?

I thank you in advance for your help!

-

Wasn't it you who bought it from me on Avito, by any chance? :)))) I sold mine just recently... it had become a bit weak for my tasks, so I moved to a Supermicro X11SPM-F plus a case with 8 disks...

-

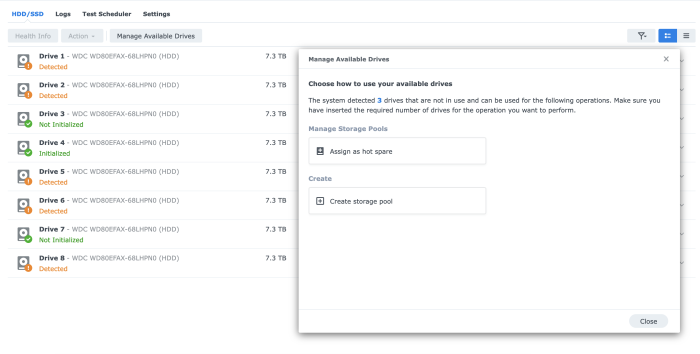

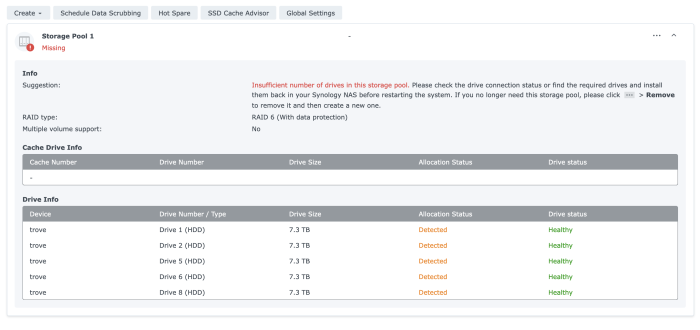

Had issues after upgrading to DSM 7.1.1-42962 Update 4. After changing to ARPL 1.03b, I was able to recover the system after a migration and log into a fresh DSM; however, the storage pool is missing/lost. Looking for tips on how to rebuild the original storage pool. Thanks.

Hardware: Motherboard with UEFI disabled: SUPERMICRO X10SDV-TLN4F D-1541 (2 x 10 GbE LAN & 2 x Intel i350-AM2 GbE LAN), LSI HBA with 8 HDDs (onboard SATA controllers disabled)

root@trove:~# cat /proc/mdstat Personalities : [raid1] md1 : active raid1 sdd2[1] 2097088 blocks [12/1] [_U__________] md0 : active raid1 sdd1[1] 2490176 blocks [12/1] [_U__________] unused devices: <none> root@trove:~# mdadm --detail /dev/md1 /dev/md1: Version : 0.90 Creation Time : Tue Mar 7 18:17:00 2023 Raid Level : raid1 Array Size : 2097088 (2047.94 MiB 2147.42 MB) Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB) Raid Devices : 12 Total Devices : 1 Preferred Minor : 1 Persistence : Superblock is persistent Update Time : Wed Mar 8 11:46:28 2023 State : clean, degraded Active Devices : 1 Working Devices : 1 Failed Devices : 0 Spare Devices : 0 UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7 Events : 0.38 Number Major Minor RaidDevice State - 0 0 0 removed 1 8 50 1 active sync /dev/sdd2 - 0 0 2 removed - 0 0 3 removed - 0 0 4 removed - 0 0 5 removed - 0 0 6 removed - 0 0 7 removed - 0 0 8 removed - 0 0 9 removed - 0 0 10 removed - 0 0 11 removed root@trove:~# mdadm --detail /dev/md0 /dev/md0: Version : 0.90 Creation Time : Tue Mar 7 18:16:56 2023 Raid Level : raid1 Array Size : 2490176 (2.37 GiB 2.55 GB) Used Dev Size : 2490176 (2.37 GiB 2.55 GB) Raid Devices : 12 Total Devices : 1 Preferred Minor : 0 Persistence : Superblock is persistent Update Time : Wed Mar 8 12:38:17 2023 State : clean, degraded Active Devices : 1 Working Devices : 1 Failed Devices : 0 Spare Devices : 0 UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7 Events : 0.2850 Number Major Minor RaidDevice State - 0 0 0 removed 1 8 49 1 active sync /dev/sdd1 - 0 0 2
removed - 0 0 3 removed - 0 0 4 removed - 0 0 5 removed - 0 0 6 removed - 0 0 7 removed - 0 0 8 removed - 0 0 9 removed - 0 0 10 removed - 0 0 11 removed root@trove:~# mdadm --examine /dev/sd* /dev/sda: MBR Magic : aa55 Partition[0] : 4294967295 sectors at 1 (type ee) /dev/sda1: Magic : a92b4efc Version : 0.90.00 UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7 Creation Time : Tue Mar 7 18:16:56 2023 Raid Level : raid1 Used Dev Size : 2490176 (2.37 GiB 2.55 GB) Array Size : 2490176 (2.37 GiB 2.55 GB) Raid Devices : 12 Total Devices : 1 Preferred Minor : 0 Update Time : Wed Mar 8 10:31:50 2023 State : clean Active Devices : 1 Working Devices : 1 Failed Devices : 11 Spare Devices : 0 Checksum : b4fd4b61 - correct Events : 1764 Number Major Minor RaidDevice State this 0 8 1 0 active sync /dev/sda1 0 0 8 1 0 active sync /dev/sda1 1 1 0 0 1 faulty removed 2 2 0 0 2 faulty removed 3 3 0 0 3 faulty removed 4 4 0 0 4 faulty removed 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sda2: Magic : a92b4efc Version : 0.90.00 UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7 Creation Time : Tue Mar 7 18:17:00 2023 Raid Level : raid1 Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB) Array Size : 2097088 (2047.94 MiB 2147.42 MB) Raid Devices : 12 Total Devices : 1 Preferred Minor : 1 Update Time : Wed Mar 8 10:31:34 2023 State : clean Active Devices : 1 Working Devices : 1 Failed Devices : 11 Spare Devices : 0 Checksum : dfcee89f - correct Events : 31 Number Major Minor RaidDevice State this 0 8 2 0 active sync /dev/sda2 0 0 8 2 0 active sync /dev/sda2 1 1 0 0 1 faulty removed 2 2 0 0 2 faulty removed 3 3 0 0 3 faulty removed 4 4 0 0 4 faulty removed 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sda3: Magic : a92b4efc 
Version : 1.2 Feature Map : 0x0 Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f Name : trove:2 (local to host trove) Creation Time : Wed Dec 12 01:11:23 2018 Raid Level : raid6 Raid Devices : 8 Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB) Array Size : 46855227264 (44684.63 GiB 47979.75 GB) Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=32 sectors State : clean Device UUID : 3875a9e5:a7260006:808f7c11:9cedf8a0 Update Time : Tue Mar 7 18:23:17 2023 Checksum : 82ba8080 - correct Events : 13093409 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 0 Array State : A..AA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdb: MBR Magic : aa55 Partition[0] : 4294967295 sectors at 1 (type ee) mdadm: No md superblock detected on /dev/sdb1. /dev/sdb2: Magic : a92b4efc Version : 0.90.00 UUID : d552bb6c:670b1dca:05d949f7:b0bbaec7 Creation Time : Sat Jul 2 16:17:54 2022 Raid Level : raid1 Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB) Array Size : 2097088 (2047.94 MiB 2147.42 MB) Raid Devices : 12 Total Devices : 3 Preferred Minor : 1 Update Time : Tue Mar 7 18:16:53 2023 State : clean Active Devices : 3 Working Devices : 3 Failed Devices : 9 Spare Devices : 0 Checksum : 63069156 - correct Events : 125 Number Major Minor RaidDevice State this 1 8 18 1 active sync /dev/sdb2 0 0 8 2 0 active sync /dev/sda2 1 1 8 18 1 active sync /dev/sdb2 2 2 8 34 2 active sync 3 3 0 0 3 faulty removed 4 4 0 0 4 faulty removed 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sdb3: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f Name : trove:2 (local to host trove) Creation Time : Wed Dec 12 01:11:23 2018 Raid Level : raid6 Raid Devices : 8 Avail Dev Size : 15618409120 (7447.44 
GiB 7996.63 GB) Array Size : 46855227264 (44684.63 GiB 47979.75 GB) Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=32 sectors State : clean Device UUID : 178434ea:f014dcc9:f52e6b43:460d8647 Update Time : Tue Mar 7 18:23:17 2023 Checksum : 239d3cd0 - correct Events : 13093409 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 3 Array State : A..AA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdc: MBR Magic : aa55 Partition[0] : 4294967295 sectors at 1 (type ee) mdadm: No md superblock detected on /dev/sdc1. /dev/sdc3: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f Name : trove:2 (local to host trove) Creation Time : Wed Dec 12 01:11:23 2018 Raid Level : raid6 Raid Devices : 8 Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB) Array Size : 46855227264 (44684.63 GiB 47979.75 GB) Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=32 sectors State : active Device UUID : 86058974:c155190f:4805d800:856081a4 Update Time : Mon Mar 6 20:03:55 2023 Checksum : d9eeeb2 - correct Events : 13093409 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 2 Array State : AAAAAAAA ('A' == active, '.' 
== missing, 'R' == replacing) /dev/sdd: MBR Magic : aa55 Partition[0] : 4294967295 sectors at 1 (type ee) /dev/sdd1: Magic : a92b4efc Version : 0.90.00 UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7 Creation Time : Tue Mar 7 18:16:56 2023 Raid Level : raid1 Used Dev Size : 2490176 (2.37 GiB 2.55 GB) Array Size : 2490176 (2.37 GiB 2.55 GB) Raid Devices : 12 Total Devices : 1 Preferred Minor : 0 Update Time : Wed Mar 8 12:22:28 2023 State : clean Active Devices : 1 Working Devices : 1 Failed Devices : 10 Spare Devices : 0 Checksum : b4fd6ba7 - correct Events : 2592 Number Major Minor RaidDevice State this 1 8 49 1 active sync /dev/sdd1 0 0 0 0 0 removed 1 1 8 49 1 active sync /dev/sdd1 2 2 0 0 2 faulty removed 3 3 0 0 3 faulty removed 4 4 0 0 4 faulty removed 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sdd2: Magic : a92b4efc Version : 0.90.00 UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7 Creation Time : Tue Mar 7 18:17:00 2023 Raid Level : raid1 Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB) Array Size : 2097088 (2047.94 MiB 2147.42 MB) Raid Devices : 12 Total Devices : 1 Preferred Minor : 1 Update Time : Wed Mar 8 11:46:28 2023 State : clean Active Devices : 1 Working Devices : 1 Failed Devices : 10 Spare Devices : 0 Checksum : dfcefa9b - correct Events : 38 Number Major Minor RaidDevice State this 1 8 50 1 active sync /dev/sdd2 0 0 0 0 0 removed 1 1 8 50 1 active sync /dev/sdd2 2 2 0 0 2 faulty removed 3 3 0 0 3 faulty removed 4 4 0 0 4 faulty removed 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sde: MBR Magic : aa55 Partition[0] : 4294967295 sectors at 1 (type ee) /dev/sde1: Magic : a92b4efc Version : 0.90.00 UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7 Creation Time : Tue Mar 7 18:16:56 2023 
Raid Level : raid1 Used Dev Size : 2490176 (2.37 GiB 2.55 GB) Array Size : 2490176 (2.37 GiB 2.55 GB) Raid Devices : 12 Total Devices : 4 Preferred Minor : 0 Update Time : Tue Mar 7 19:36:00 2023 State : clean Active Devices : 4 Working Devices : 4 Failed Devices : 8 Spare Devices : 0 Checksum : b4fc79cf - correct Events : 1720 Number Major Minor RaidDevice State this 2 8 65 2 active sync /dev/sde1 0 0 8 1 0 active sync /dev/sda1 1 1 8 49 1 active sync /dev/sdd1 2 2 8 65 2 active sync /dev/sde1 3 3 0 0 3 faulty removed 4 4 8 113 4 active sync /dev/sdh1 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sde2: Magic : a92b4efc Version : 0.90.00 UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7 Creation Time : Tue Mar 7 18:17:00 2023 Raid Level : raid1 Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB) Array Size : 2097088 (2047.94 MiB 2147.42 MB) Raid Devices : 12 Total Devices : 4 Preferred Minor : 1 Update Time : Tue Mar 7 19:31:47 2023 State : clean Active Devices : 4 Working Devices : 4 Failed Devices : 8 Spare Devices : 0 Checksum : dfce16e9 - correct Events : 22 Number Major Minor RaidDevice State this 2 8 66 2 active sync /dev/sde2 0 0 8 2 0 active sync /dev/sda2 1 1 8 50 1 active sync /dev/sdd2 2 2 8 66 2 active sync /dev/sde2 3 3 0 0 3 faulty removed 4 4 8 114 4 active sync /dev/sdh2 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sde3: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f Name : trove:2 (local to host trove) Creation Time : Wed Dec 12 01:11:23 2018 Raid Level : raid6 Raid Devices : 8 Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB) Array Size : 46855227264 (44684.63 GiB 47979.75 GB) Used Dev Size : 15618409088 (7447.44 GiB 7996.63 
GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=32 sectors State : clean Device UUID : 1f5ac73f:a2d2d169:44fbeeaa:f0293f1d Update Time : Tue Mar 7 18:23:17 2023 Checksum : 5662b981 - correct Events : 13093409 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 7 Array State : A..AA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdf: MBR Magic : aa55 Partition[0] : 4294967295 sectors at 1 (type ee) /dev/sdf1: Magic : a92b4efc Version : 0.90.00 UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7 Creation Time : Tue Mar 7 18:16:56 2023 Raid Level : raid1 Used Dev Size : 2490176 (2.37 GiB 2.55 GB) Array Size : 2490176 (2.37 GiB 2.55 GB) Raid Devices : 12 Total Devices : 5 Preferred Minor : 0 Update Time : Tue Mar 7 19:31:40 2023 State : clean Active Devices : 5 Working Devices : 5 Failed Devices : 7 Spare Devices : 0 Checksum : b4fc787f - correct Events : 1629 Number Major Minor RaidDevice State this 3 8 81 3 active sync /dev/sdf1 0 0 8 1 0 active sync /dev/sda1 1 1 8 49 1 active sync /dev/sdd1 2 2 8 65 2 active sync /dev/sde1 3 3 8 81 3 active sync /dev/sdf1 4 4 8 113 4 active sync /dev/sdh1 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sdf2: Magic : a92b4efc Version : 0.90.00 UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7 Creation Time : Tue Mar 7 18:17:00 2023 Raid Level : raid1 Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB) Array Size : 2097088 (2047.94 MiB 2147.42 MB) Raid Devices : 12 Total Devices : 5 Preferred Minor : 1 Update Time : Tue Mar 7 19:05:08 2023 State : clean Active Devices : 5 Working Devices : 5 Failed Devices : 7 Spare Devices : 0 Checksum : dfce110f - correct Events : 19 Number Major Minor RaidDevice State this 3 8 82 3 active sync /dev/sdf2 0 0 8 2 0 active sync /dev/sda2 1 1 8 50 1 active sync /dev/sdd2 2 2 8 66 2 active sync /dev/sde2 3 3 
8 82 3 active sync /dev/sdf2 4 4 8 114 4 active sync /dev/sdh2 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sdf3: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f Name : trove:2 (local to host trove) Creation Time : Wed Dec 12 01:11:23 2018 Raid Level : raid6 Raid Devices : 8 Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB) Array Size : 46855227264 (44684.63 GiB 47979.75 GB) Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=32 sectors State : clean Device UUID : 5e941a18:8b4def78:efcc7281:413e6a0f Update Time : Tue Mar 7 18:23:17 2023 Checksum : 68254a4 - correct Events : 13093409 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 6 Array State : A..AA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdg: MBR Magic : aa55 Partition[0] : 4294967295 sectors at 1 (type ee) mdadm: No md superblock detected on /dev/sdg1. /dev/sdg3: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f Name : trove:2 (local to host trove) Creation Time : Wed Dec 12 01:11:23 2018 Raid Level : raid6 Raid Devices : 8 Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB) Array Size : 46855227264 (44684.63 GiB 47979.75 GB) Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=32 sectors State : active Device UUID : e95d48ae:87297dfa:8571bbc2:89098f37 Update Time : Mon Mar 6 20:03:55 2023 Checksum : 87b33020 - correct Events : 13093409 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 5 Array State : AAAAAAAA ('A' == active, '.' 
== missing, 'R' == replacing) /dev/sdh: MBR Magic : aa55 Partition[0] : 4294967295 sectors at 1 (type ee) /dev/sdh1: Magic : a92b4efc Version : 0.90.00 UUID : 6f2d4c18:1dd9383c:05d949f7:b0bbaec7 Creation Time : Tue Mar 7 18:16:56 2023 Raid Level : raid1 Used Dev Size : 2490176 (2.37 GiB 2.55 GB) Array Size : 2490176 (2.37 GiB 2.55 GB) Raid Devices : 12 Total Devices : 4 Preferred Minor : 0 Update Time : Tue Mar 7 19:36:05 2023 State : active Active Devices : 4 Working Devices : 4 Failed Devices : 8 Spare Devices : 0 Checksum : b4fc7350 - correct Events : 1721 Number Major Minor RaidDevice State this 4 8 113 4 active sync /dev/sdh1 0 0 8 1 0 active sync /dev/sda1 1 1 8 49 1 active sync /dev/sdd1 2 2 8 65 2 active sync /dev/sde1 3 3 0 0 3 faulty removed 4 4 8 113 4 active sync /dev/sdh1 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sdh2: Magic : a92b4efc Version : 0.90.00 UUID : 362d3d5d:81b0f1c8:05d949f7:b0bbaec7 Creation Time : Tue Mar 7 18:17:00 2023 Raid Level : raid1 Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB) Array Size : 2097088 (2047.94 MiB 2147.42 MB) Raid Devices : 12 Total Devices : 4 Preferred Minor : 1 Update Time : Tue Mar 7 19:31:47 2023 State : clean Active Devices : 4 Working Devices : 4 Failed Devices : 8 Spare Devices : 0 Checksum : dfce171d - correct Events : 22 Number Major Minor RaidDevice State this 4 8 114 4 active sync /dev/sdh2 0 0 8 2 0 active sync /dev/sda2 1 1 8 50 1 active sync /dev/sdd2 2 2 8 66 2 active sync /dev/sde2 3 3 0 0 3 faulty removed 4 4 8 114 4 active sync /dev/sdh2 5 5 0 0 5 faulty removed 6 6 0 0 6 faulty removed 7 7 0 0 7 faulty removed 8 8 0 0 8 faulty removed 9 9 0 0 9 faulty removed 10 10 0 0 10 faulty removed 11 11 0 0 11 faulty removed /dev/sdh3: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f Name : trove:2 (local to host 
trove) Creation Time : Wed Dec 12 01:11:23 2018 Raid Level : raid6 Raid Devices : 8 Avail Dev Size : 15618409120 (7447.44 GiB 7996.63 GB) Array Size : 46855227264 (44684.63 GiB 47979.75 GB) Used Dev Size : 15618409088 (7447.44 GiB 7996.63 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=32 sectors State : clean Device UUID : 7a2f8c59:2331f484:50fbb33a:bfe5fa58 Update Time : Tue Mar 7 18:23:17 2023 Checksum : 56caa935 - correct Events : 13093409 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 4 Array State : A..AA.AA ('A' == active, '.' == missing, 'R' == replacing)
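A dump like the one above is hard to compare by eye. The following is a minimal Python sketch, not a recovery procedure, that parses `mdadm --examine` text and lists which device roles of one array are reported by some member. It assumes the output is in its normal one-field-per-line layout (for example saved to a file), and the function names `parse_examine` and `missing_roles` are hypothetical helpers invented for this illustration. The `SAMPLE` text reuses a few values from the post.

```python
import re
from typing import Dict, List

# A device header is a line such as "/dev/sda3:" on its own.
DEVICE_RE = re.compile(r"^(/dev/\S+):[ \t]*$", re.MULTILINE)
# Only a few fields that appear in the dump above are collected.
FIELD_RE = re.compile(
    r"^[ \t]*(Array UUID|Raid Level|Events|Device Role|Update Time)"
    r"[ \t]*:[ \t]*(.+?)[ \t]*$",
    re.MULTILINE,
)


def parse_examine(text: str) -> List[Dict[str, str]]:
    """Split `mdadm --examine` output into one dict per device header."""
    members = []
    headers = list(DEVICE_RE.finditer(text))
    for i, header in enumerate(headers):
        end = headers[i + 1].start() if i + 1 < len(headers) else len(text)
        fields = dict(FIELD_RE.findall(text[header.end():end]))
        fields["device"] = header.group(1)
        members.append(fields)
    return members


def missing_roles(members: List[Dict[str, str]], array_uuid: str,
                  raid_devices: int) -> List[int]:
    """Return the role numbers that no parsed member of the array reports."""
    present = set()
    for fields in members:
        if fields.get("Array UUID") != array_uuid:
            continue
        role = re.search(r"Active device (\d+)", fields.get("Device Role", ""))
        if role:
            present.add(int(role.group(1)))
    return [r for r in range(raid_devices) if r not in present]


# Shortened sample in the normal multi-line layout, values taken from the post.
SAMPLE = """\
/dev/sda3:
          Magic : a92b4efc
     Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f
     Raid Level : raid6
         Events : 13093409
    Device Role : Active device 0
/dev/sdb3:
     Array UUID : 469081f8:c1645cc8:e6a51b6e:988a538f
     Raid Level : raid6
         Events : 13093409
    Device Role : Active device 3
"""

if __name__ == "__main__":
    parsed = parse_examine(SAMPLE)
    for m in parsed:
        print(m["device"], m.get("Device Role"), m.get("Events"))
    print(missing_roles(parsed, "469081f8:c1645cc8:e6a51b6e:988a538f", 8))
```

In the full dump above, the raid6 members (sda3, sdb3, sdc3, sde3, sdf3, sdg3, sdh3) report roles 0, 3, 2, 7, 6, 5 and 4 with the same event count, and no partition reports role 1, so a check of this kind would list role 1 as missing.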

-

Need help,DS2422+ with lsi 9260-8i,Can't install

BlackLotus replied to BlackLotus's topic in DSM 7.x

motherboard : supermicro h11ssl-i -

TinyCore RedPill Loader Build Support Tool ( M-Shell )

SecundiS replied to Peter Suh's topic in Software Modding

Good day. I need help with discovering additional hard drives in my system. I have:

- Supermicro 4U chassis with 16 HDD bays
- Supermicro X9DRH-iF motherboard (Specs)
- 2x Xeon E5-2620 v2 processors
- 64Gb DDR3 ECC REG
- ASMEDIA PCI-E to 20x SATA port controller
- 6x HDD WD4000F9YZ and 5x HDD SD4000DM00 (11 total)

I tried to install the latest TCRP with PeterSuh's M-Shell (Friend Mode). I chose the RS4021xs+ build and pasted the serial and MACs, but after the build and first boot I did not see all my disks, only 7 HDDs. In user_config I see the parameter "max_disks=16", and I understand that DSM scans the SATA slots on the motherboard first, but that motherboard has 10 SATA slots (8x SATA2 and 2x SATA3). For this reason I use the Asmedia controller with 20x SATA3 ports, and all disks are connected to that controller. I tried changing the max_disks parameter to 30 (as I understand it, 10 internal + 20 external), but DSM again shows only 7 HDDs. Can you help me solve this problem, please?

-

Good day. I need help with discovering additional hard drives in my system. I have:

- Supermicro 4U case with 16 HDD bays
- Supermicro X9DRH-iF motherboard (Specs)
- 2x Xeon E5-2620 v2 processors
- 64Gb DDR3 ECC REG
- ASMEDIA PCI-E to 20x SATA port controller
- 6x HDD WD4000F9YZ and 5x HDD SD4000DM00 (11 total)

I tried to install the latest TCRP with PeterSuh's M-Shell (Friend Mode). I chose the RS4021xs+ build and pasted the serial and MACs, but after the build and first boot I did not see all my disks, only 7 HDDs. In user_config I see the parameter "max_disks=16", and I understand that DSM scans the SATA slots on the motherboard first, but that motherboard has 10 SATA slots (8x SATA2 and 2x SATA3). For this reason I use the Asmedia controller with 20x SATA3 ports, and all disks are connected to that controller. I tried changing the max_disks parameter to 30, but DSM again shows only 7 HDDs. Can you help me solve this problem, please?

-

[Tutorial] DSM 7 for Proxmox in 8 minutes (Update DSM-7.1.1)

Orphée replied to Sabrina's topic in Virtual Installation

@pehun For info, I chose this motherboard (Supermicro X11SCA-F) mainly because I wanted to keep what the HP microserver allows, namely remote control without a monitor, even when the system is OFF. The Supermicro -F boards have IPMI 2.0 with iKVM. That allows roughly the same thing as on the HP: viewing the screen via an HTML5 web page or in Java. For me it was essential to be able to take over the screen remotely, and even to stop/start the system without actually having to be in front of the machine. But these motherboards are not cheap...

Edit 2: # iperf -c 192.168.1.XX ------------------------------------------------------------ Client connecting to 192.168.1.XX, TCP port 5001 TCP window size: 16.0 KByte (default) ------------------------------------------------------------ [ 1] local 192.168.1.XX port 36482 connected with 192.168.1.XX port 5001 (icwnd/mss/irtt=14/1448/250) [ ID] Interval Transfer Bandwidth [ 1] 0.0000-10.0034 sec 29.9 GBytes 25.7 Gbits/sec

iperf -c 192.168.1.XX -p 5002 ------------------------------------------------------------ Client connecting to 192.168.1.XX, TCP port 5002 TCP window size: 85.0 KByte (default) ------------------------------------------------------------ [ 3] local 192.168.1.XX port 35016 connected with 192.168.1.XX port 5002 [ ID] Interval Transfer Bandwidth [ 3] 0.0000-10.0000 sec 37.3 GBytes 32.0 Gbits/sec

In GBytes/s: iperf -c 192.168.1.XX -p 5002 -f G ------------------------------------------------------------ Client connecting to 192.168.1.XX, TCP port 5002 TCP window size: 0.000 GByte (default) ------------------------------------------------------------ [ 3] local 192.168.1.XX port 49258 connected with 192.168.1.XX port 5002 [ ID] Interval Transfer Bandwidth [ 3] 0.0000-10.0000 sec 37.5 GBytes 3.75 GBytes/sec

-
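The iperf figures above mix two unit systems, which is why 37.3 GBytes in 10 seconds shows up as 32.0 Gbits/sec rather than 29.8. A small Python sketch of the arithmetic, assuming (as the numbers in the post suggest) that iperf counts "GBytes" in binary units of 2^30 bytes and "Gbits" in decimal units of 10^9 bits; the function names are made up for this illustration:

```python
GIB = 2 ** 30  # assumed size of one iperf "GByte" in bytes


def gbits_per_sec(gbytes: float, seconds: float) -> float:
    """Convert a transfer in binary GBytes over an interval to decimal Gbit/s."""
    return gbytes * GIB * 8 / seconds / 1e9


def gbytes_per_sec(gbytes: float, seconds: float) -> float:
    """Throughput in binary GBytes per second, as printed with `-f G`."""
    return gbytes / seconds


if __name__ == "__main__":
    # (transfer in GBytes, interval in seconds) taken from the three runs above
    print(round(gbits_per_sec(29.9, 10.0034), 1))
    print(round(gbits_per_sec(37.3, 10.0), 1))
    print(gbytes_per_sec(37.5, 10.0))
```

Under that assumption the first two runs reproduce the reported 25.7 and 32.0 Gbits/sec, and the third reproduces 3.75 GBytes/sec.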

[Tutorial] DSM 7 for Proxmox in 8 minutes (Update DSM-7.1.1)

Orphée replied to Sabrina's topic in Virtual Installation

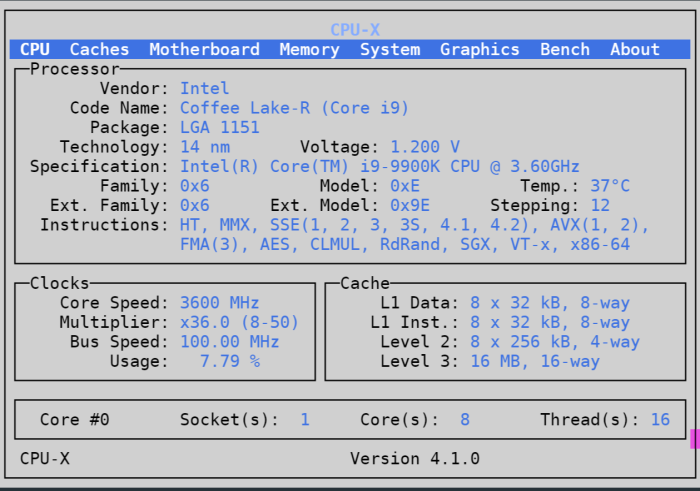

I have just changed my whole setup. I had 3 machines: my Windows PC (i7 4th gen, 16 GB), an HP Gen8 as the "data" Synology, and an HP i5 tower as the "Surveillance Station" Synology. I bought a Supermicro X11SCA-F motherboard (new, because it could not be found second-hand) (8 SATA ports, 2 PCI-e 16x slots, up to 128Gb of RAM), a Core i9-9900k on LBC, and 2x32GB of RAM on Amazon. I kept the graphics card I had in my Windows PC (Nvidia GTX 980), my case, and the cooler I had on the old motherboard (a Noctua NH-D15), and I also reused the GTX 1650 graphics card from the "Surveillance Station" DVA3221. So I virtualized my baremetal Windows with its 2 physical disks, housed the 4 disks from the Gen8, and merged my 2 "Synology" boxes into a single one running DVA3221. And to avoid the hassle of sound and USB passthrough under Windows, I bought 2 PCI cards for sound and USB, dedicated to Windows. It works rather well.

-

Have just grabbed one of these on ebay, came with a 12 bay chassis with a sas backplane. I have tried the loader for 3615 and 3617 and both appear to work fine apart from once formatted the fault lights on each drive flash red continuously without reason. It has installed fine, picks up all the drives ok, just has this synchronised flashing red on all the front bays. Wondered if anyone else has come across this and knows what might be causing it??? Thanks.

-

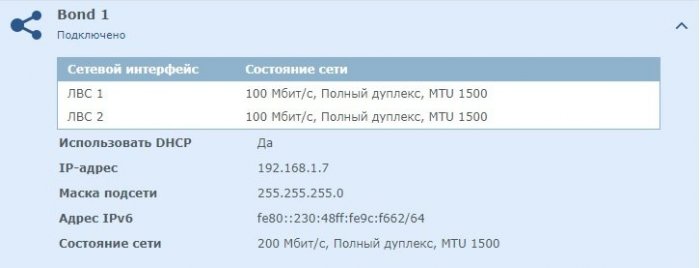

I did not notice it right after installation, but when creating a Bond I saw that the network was detected as 100 Mbit/s. What could the problem be? (The switch is gigabit, the patch cables are "4-pair", and a laptop is detected at gigabit on the same cables.) Motherboard: SuperMicro X7DVL-3. Loader: Jun's Loader v1.03b DS3615xs. DSM version: DSM 6.2-23739

Tagged with: network speed, intel 82563eb (and 2 more)

-

RS4021xs+ loader development thread

Orphée replied to Peter Suh's topic in Developer Discussion Room

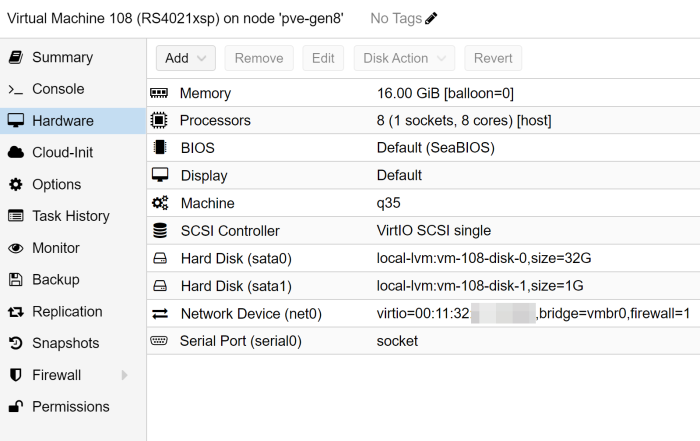

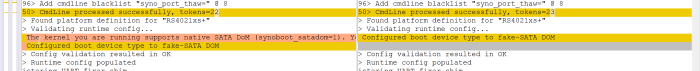

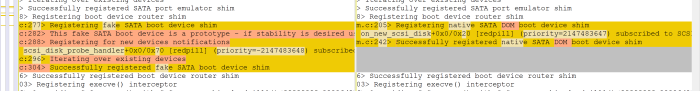

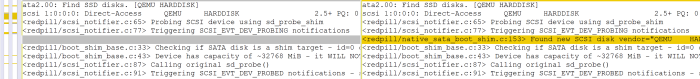

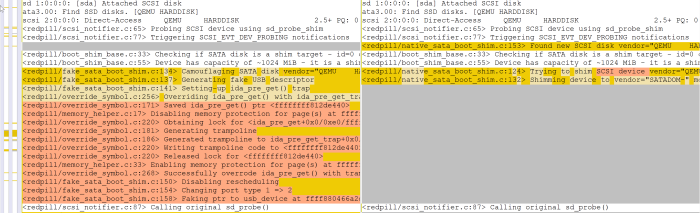

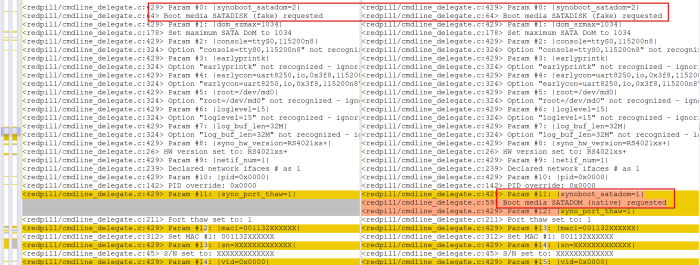

@Peter Suh I agree it may work for you, but as long as synoboot_satadom=1 works on this loader/kernel, it should be preferred over synoboot_satadom=2. The warning shown in the log was written by the Redpill team for a reason. I know some loaders like DS918+ / DVA3221 do not support satadom 1, but when it can be selected, it should be the choice.

My VM conf: I do not pass through any physical disk, only using the virtualised 32Gb one.

Host: Motherboard: Supermicro X11SCA-F, CPU: Intel i9-9900k (8 cores / 16 threads), Memory: 64Gb (2x32)

Edit: Comparing boot logs, it starts OK in both cases (left = 2, right = 1): Coincidence or not, since running with synoboot_satadom=1 I have not had any KP yet.

-

Hello. I managed to get hold of an old Supermicro server based on an Intel motherboard with 2 Xeon CPUs. I want to try installing Synology on it. Do you think there is a chance?

-

Hello, I have several xpen boxes up and running, all of them with fewer than 12 disks, and they work like a charm. I used this awesome forum as my main source of knowledge, but on my new project I have been stuck for several days on the same problem. First, my system info: DSM version: DSM 6.1.7 - 15284. Loader version and model: JUN'S LOADER v1.02b - DS3615xs. Installation type: BAREMETAL - Case: CSE846, Backplane: SAS846TQ - MB: Supermicro X10SRL-F - HBA: 2x LSI9207-8i in IT mode. DSM runs on two SSDs connected to the onboard SATA connectors. Edits to grub.cfg: SataPortMap=6488. Edits to synoinfo.conf: maxdisks="26", esataportcfg="0x0", usbportcfg="0x3C000000", internalportcfg="0x3ffffff". Problem: when I plug a USB stick into a USB 3.0 or USB 2.0 port on the MB, it is recognized as disk 21 in DSM; when I add a second USB stick, it becomes disk 22. When I fill the system completely with 26 disks, the USB sticks are recognized as external devices, as they should be. But after a reboot with all disks installed, they appear as disk 21+ and take the position of real HDDs. I played around with several Jun loader versions and installed it as a DS918+ and as a DS3617xs, with DSM 6.2.2 and DSM 6.1.x, and got the same issue every time. Does anyone have an idea which settings I have to change? Second, trivial issue: DSM only recognizes USB devices on the rear USB ports; it ignores the internal USB header. Thanks in advance, Phithue
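As a rough illustration of the synoinfo.conf values quoted above, here is a minimal Python sketch. It assumes the commonly described reading of these settings, where each bit in a port mask stands for one disk slot; that reading is an assumption rather than something confirmed in this thread, and the helper names `port_mask` and `slots_in` are hypothetical. Under that assumption, the poster's values put 26 internal slots in bits 0-25 and 4 USB slots in bits 26-29, with no overlap.

```python
def port_mask(count: int, offset: int = 0) -> int:
    """Return a bitmask with `count` consecutive bits set, starting at bit `offset`."""
    return ((1 << count) - 1) << offset


def slots_in(mask: int) -> list[int]:
    """List the 1-based slot numbers whose bits are set in `mask`."""
    return [bit + 1 for bit in range(mask.bit_length()) if (mask >> bit) & 1]


# Assumed layout: 26 internal slots first, then 4 USB slots directly after them.
internal = port_mask(26)          # matches internalportcfg="0x3ffffff"
usb = port_mask(4, offset=26)     # matches usbportcfg="0x3C000000"

print(hex(internal), hex(usb))
print("overlap:", hex(internal & usb))   # masks should not share any bit
print("USB slots:", slots_in(usb))
```

The sketch only checks that the masks are internally consistent; it does not explain why the USB sticks are renumbered after a reboot.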

-

True, but the 5 V rail is exactly where PSU manufacturers cut corners: they carve it out of the total power rating, so you get a "500 W" unit that is really 350 W. On 12 V the numbers usually add up. On the other hand, PSUs meant for 12 disks have 12 SATA connectors, but when you look at the specs it turns out you can only power them through Molex-to-SATA connectors. So what is the point of buying one with 12 connectors if a PSU with 6-8 connectors is cheaper and has better specs (you need at least 3-4 separate cables with Molex connectors)? Besides, for anything up to 6 disks it hardly matters which PSU you use, but from 8 disks on it becomes a gamble on the manufacturer. For now I am leaning towards a server PSU from some disk shelf, but those are noisy; the alternative is a build with up to 6 disks of larger capacity, which goes completely against the original idea of building a NAS from 1 TB disks (I have plenty of them lying around with up to 10k hours on them) and replacing them as needed. Since you have Supermicro hardware, I have a question for you. I have a storage server on a Supermicro X8DTL motherboard with an Adaptec RAID 51645 controller. Will XPEnology see the motherboard and the controller? There have been cases where it sees the disks through the controller but not their errors or temperature. The DVA3221 and DVA1622 in your signature, were they also set up with XPEnology? Are the parts highlighted in red the loader versions?

-

I successfully installed XPEnology on my new Supermicro, but it won't read any of the drives. I have an SSD inside that it reads as a storage device, but it will not read any of the drives in the bays or in the USB ports at the back. I know the drives are good: I've checked them in my PC, and the server is reading them; they spin up and blink, and the BIOS shows them. So Synology isn't recognizing them. Please help. Jun's 1.02b, 3615, 6.1. Drives are Seagate 8 TB; I also tried a Toshiba 1 TB. This is what I have: https://www.ebay.com/itm/173977893894

-

Hi guys, I received a Supermicro x10sdv-4c-tln2f for free and would like to run XPEnology on it. I am using the ds3617xs loader because both use a similar CPU (D1521 vs D1527). But once the bootloader printed the "... find.synology.com ..." message, I wasn't able to find it on the network. What should I do? I know people have had success with this board using ESXi, but I would rather run bare metal. I did the following: 1. updated pid/vid, sn, mac1 and mac2 in grub.cfg; 2. changed the BIOS to Legacy boot. Serial-over-LAN shows me nothing of the console output. I also followed the same procedure with ds3615xs, with the same result. Please let me know how I should proceed. Thanks

-

Hello @Peter Suh, I hope you will have a look at this, thank you in advance. I'm really stuck and don't understand what's going wrong. My previous 6.2 JUN'S setup was running fine with the same hardware: same CPU, RAM and disks. 1151 Supermicro XSCH with 8 onboard SATA, i3-8300, 16 GB ECC. I started again from scratch and used TCRP 0.9 from https://github.com/pocopico/tinycore-redpill with these steps: update; fullupgrade; identifyusb; serialgen DS918+; satamap; edit user_config.json (change the generated SN to my SN, change the generated MAC1 to my MAC, add the line MAC2=001132xxxxxx (*)); build ds918p-7.1.1-42962 => I can see that the Aquantia driver is found and added to the build. ==> After the reboot, the menu appears. I choose the first entry (USB VERBOSE). The system then boots, but there is no way to find DSM. DSFinder does not find anything. I can see a DHCP IP address show up on the router, but it disappears about 3 seconds after the kernel is up and running. DSM does not load either (no flashing hard drive lights after the kernel loads). (*) If I DO NOT add MAC2, the system boots and DSM 7.1 runs fine, with MAC1 assigned to the additional ASUS 10 Gb NIC; the onboard NIC keeps its REAL MAC, and I can use both. But I lose MY genuine Synology MAC on the onboard NIC. As my 918+ SN works fine now, my goal is to find a way to give my 2 Synology MACs to both the onboard and the additional NIC. I hope I'm clear enough, sorry for my English. Any help would be really awesome, as I've read all the threads related to MAC but didn't find anything that looks like my problem.
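Before pasting MAC values into user_config.json, it can help to sanity-check them. The Python sketch below normalizes a MAC to 12 hex digits without separators, the shape shown in the post (MAC2=001132xxxxxx), and checks it against the 001132 prefix quoted there. Whether the loader requires exactly this format is an assumption, and the function names `normalize_mac` and `has_prefix` are hypothetical helpers, not part of TCRP.

```python
import re


def normalize_mac(mac: str) -> str:
    """Strip ':', '-' and '.' separators and return 12 uppercase hex digits."""
    digits = re.sub(r"[:\-.]", "", mac.strip())
    if not re.fullmatch(r"[0-9A-Fa-f]{12}", digits):
        raise ValueError(f"not a valid MAC address: {mac!r}")
    return digits.upper()


def has_prefix(mac: str, prefix: str = "001132") -> bool:
    """Check whether the MAC starts with the given 6-hex-digit vendor prefix."""
    return normalize_mac(mac).startswith(prefix.upper())


# Example values only; replace them with the MACs from your own device label.
for candidate in ("00:11:32:aa:bb:cc", "00-11-32-AA-BB-CD", "ac:1f:6b:00:00:01"):
    print(normalize_mac(candidate), has_prefix(candidate))
```

A check like this only catches formatting mistakes; it says nothing about why the NIC drops its DHCP lease once MAC2 is set.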

-

There are 10 disks installed at the moment. I mentioned the 150 W on the 5 V rail for a reason: that is exactly what powers the disks, while everything else is fed from 12 V. Comparing different units: my Cougar VTX700 [CGR BS-700] gives 150 W on 3.3 V and 5 V, the same as your InWin Power Rebel 600W [RB-S600AQ3-0] at 150 W, while the HIPER HPB-750SM-PRO [HPB-750SM-PRO] gives 125 W, the AeroCool AERO BRONZE 700W [ACPB-AR70AEC.A1] 130 W, and the Corsair CX750M [CP-9020222-EU] 130 W; all of these units have 6-8 SATA ports. In theory mine should be more than enough. If you look at the PSUs for Supermicro shelves, such as the PWS-1K21P-1R, they sustain a 1200 W load across 12 V and 5 V, split between the two depending on what you load them with. I will keep watching while the RAID rebuilds... By the way, how much power does a 3070 draw? While the price is reasonable, I am thinking of buying one.
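The headroom argument above can be checked with simple arithmetic. The Python sketch below estimates the 5 V load for a given number of disks and compares it with the 150 W combined 3.3 V / 5 V budget quoted in the post. The per-disk current of 0.8 A is a placeholder assumption, not a measured value; read the real figure from the disk label or datasheet. The function names are hypothetical.

```python
def rail_load_watts(disk_count: int, amps_per_disk: float, rail_volts: float = 5.0) -> float:
    """Estimated steady-state load on one rail: disks x current x voltage."""
    return disk_count * amps_per_disk * rail_volts


def rail_headroom_watts(budget_watts: float, disk_count: int,
                        amps_per_disk: float, rail_volts: float = 5.0) -> float:
    """Remaining budget on the rail after the estimated disk load."""
    return budget_watts - rail_load_watts(disk_count, amps_per_disk, rail_volts)


ASSUMED_5V_AMPS_PER_DISK = 0.8   # placeholder; check the label on your disks
BUDGET_WATTS = 150.0             # combined 3.3 V / 5 V figure quoted in the post

load = rail_load_watts(10, ASSUMED_5V_AMPS_PER_DISK)
headroom = rail_headroom_watts(BUDGET_WATTS, 10, ASSUMED_5V_AMPS_PER_DISK)
print(f"estimated 5 V load: {load:.1f} W, headroom: {headroom:.1f} W")
```

The estimate ignores spin-up peaks and anything else sharing the 3.3 V / 5 V budget, so it is a lower bound on the load rather than a guarantee.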

-

First, thank you for your help. Well, I edited grub.cfg, but there is no way to find any IP address, whichever NIC I use. I can "feel" that DSM is booting (the fans get quiet once the system is up, and the HD lights go on and then off, just like normal). Then I started from scratch. I rebuilt the loader after first editing user_config and replacing the SYNO MAC and SN with my own MAC and SN. I also added the mac2=...... line => no way to boot. Then I did a complete rebuild, starting again from scratch with the default TCRP settings => it boots. DSM didn't even ask for an update or install or anything. The system was (and still is) running perfectly, as after a normal reboot. Supermicro onboard NIC 1: Supermicro MAC. Supermicro onboard NIC 2: does not show up in DSM or in TCRP. ASUS G100C 10 Gb: SYNO MAC (set with ./rploader.sh serialgen). There is something I'm doing wrong, but I don't know what. I'm sure the ASUS NIC (whose drivers are loaded from GitHub when the build is launched) is the key, but I'm not experienced enough to change this behaviour. If you have any idea... Thank you for reading.