flyride

Moderator | Posts: 2,438 | Days Won: 127

Everything posted by flyride

-

It's not the script; it's Jun's loader that will boot up to 6.2.3: https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/

-

Driver extension jun 1.03a2/DSM6.2.x for DS918+

flyride replied to IG-88's topic in Additional Compiled Modules

That is what NFS is for...

Your reported write performance (the 400MBps test result) is consistent with TweakTown's review of this model in 128GB. https://www.tweaktown.com/reviews/6589/samsung-xp941-128gb-pcie-ssd-review/index.html

-

Having NVMe as a regular DSM volume is not a typical configuration. How did you set it up? It might have an impact. You didn't say what model your SSD is. Generally, write performance on low-capacity Samsung models is not great. The PM961 is a typical 128GB unit, and its spec is "up to" 600MBps sequential write. That is a raw value, whereas the score you are citing is full throughput including filesystem overhead, etc. Also, many consumer Samsung drives use TLC NAND with an SLC cache, so once that internal cache fills up, writes slow down a lot. I suspect Windows is lying to you with 1GBps write (it is likely measuring the cache, not sustained writes). But post more info about what you have if you want.
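To illustrate why a short benchmark can look much faster than a long copy, here is a minimal two-tier write model, assuming a fixed-size fast cache in front of slower native NAND. The function names and every number in the example (cache size, speeds) are hypothetical, not the spec of the PM961 or any other drive, and the model ignores background cache flushing and filesystem overhead.

```python
def sustained_write_seconds(total_gb: float, cache_gb: float,
                            cache_mb_s: float, native_mb_s: float) -> float:
    """Seconds to write total_gb when the first cache_gb go to a fast
    cache and everything after that goes at the slower native speed.
    Simplified: the cache is assumed empty at the start and is never
    flushed while the write is in progress."""
    cached_gb = min(total_gb, cache_gb)
    native_gb = total_gb - cached_gb
    # 1 GB = 1000 MB (decimal units, as drive specs use)
    return cached_gb * 1000 / cache_mb_s + native_gb * 1000 / native_mb_s


def average_write_mb_s(total_gb: float, cache_gb: float,
                       cache_mb_s: float, native_mb_s: float) -> float:
    """Average throughput a benchmark writing total_gb would report."""
    seconds = sustained_write_seconds(total_gb, cache_gb, cache_mb_s, native_mb_s)
    return total_gb * 1000 / seconds


if __name__ == "__main__":
    # Hypothetical drive: 6 GB cache at 600 MB/s, 300 MB/s once it is full.
    for size_gb in (1, 6, 30):
        mb_s = average_write_mb_s(size_gb, 6, 600, 300)
        print(f"{size_gb} GB write -> {mb_s:.0f} MB/s average")
```

With these made-up numbers, a test that fits in the cache reports the full cache speed, while a 30 GB write averages about 333 MB/s.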

-

Yeah, it's not really possible to remove drives from an SHR once they are in it. For the best results, build an initial SHR with the 4TB and 6TB drives. The extra 2TB on the 6TB won't be usable at first. Then add the 14TB drives and you'll get full capacity. EDIT: actually, you'll get the same result if you build with all the drives at once... I was stuck thinking about the sequence from your original post. https://www.synology.com/en-us/support/RAID_calculator?hdds=4 TB|4 TB|6 TB|14 TB|14 TB
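The layering behind those numbers can be sketched in a few lines. This is an approximation of how SHR with one-drive redundancy allocates space, written for illustration; it is not Synology's code, the function name is hypothetical, sizes are raw TB, and the real RAID calculator also subtracts system partitions and overhead.

```python
def shr1_usable_tb(sizes_tb: list[float]) -> float:
    """Approximate usable capacity of a one-drive-redundancy SHR.

    Drives are sliced into layers at each distinct drive size. Each layer
    that spans two or more drives is protected (RAID 1 for two drives,
    RAID 5 for more), which costs one drive's worth of that layer.
    A layer present on only one drive has no partner and is left unused.
    """
    drives = sorted(sizes_tb)
    usable = 0.0
    previous = 0.0
    for i, size in enumerate(drives):
        layer = size - previous       # height of this slice
        members = len(drives) - i     # drives at least this large
        if layer > 0 and members >= 2:
            usable += layer * (members - 1)
        previous = size
    return usable


if __name__ == "__main__":
    print(shr1_usable_tb([4, 4, 6]))          # 8.0: the 6TB's extra 2TB is unused
    print(shr1_usable_tb([4, 4, 6, 14, 14]))  # 28.0 after adding the 14TB drives
```

Because the result depends only on the final set of drives, not the order they were added, this also matches the EDIT: building with all the drives at once ends up with the same capacity.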

-

You can only add a disk that is equal to or larger than the largest member drive, or exactly the size of an existing member drive. https://kb.synology.com/en-br/DSM/help/DSM/StorageManager/storage_pool_expand_add_disk?version=6
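The rule above fits in one line of code. This is a minimal sketch with a hypothetical function name; it uses nominal sizes and ignores the fact that two drives sold as the same size may differ slightly in actual capacity.

```python
def can_add_to_shr(existing_tb: list[int], new_tb: int) -> bool:
    """True if new_tb is at least as large as the largest current member,
    or exactly matches the size of one of the existing members."""
    return new_tb >= max(existing_tb) or new_tb in existing_tb


if __name__ == "__main__":
    pool = [3, 3, 4]
    for candidate in (2, 3, 4, 6):
        print(f"{candidate}TB drive can be added: {can_add_to_shr(pool, candidate)}")
```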

-

I don't understand why someone would want a single drive when they can have the performance of a four-drive array, but to each his own. Personally, I would build a new array with the 4TB drives for the best SHR performance and the least internal array complexity. If the NAS can hold eight drives at once, he can just build the new arrays and copy everything directly over. If he has only four bays available, it can be done like this:

1. Drop the redundancy on the RAID 1 (remove one drive).
2. Create a new Storage Pool with a 4TB drive in the empty slot.
3. Copy data from the old RAID 1 to the new 4TB Storage Pool.
4. Remove the remaining RAID 1 drive.
5. Expand the 4TB Storage Pool with a second 4TB drive (convert to SHR/RAID 1).
6. Use a free 3TB drive on a PC and copy all the data off one of the single drives (preferably the one that would be collapsed into the 4TB array).
7. Remove that single drive, install a 4TB drive, and create a new single-drive Storage Pool.
8. Copy the remaining single 3TB drive's data to the new single 4TB Storage Pool.
9. Remove the remaining single 3TB drive and replace it with the last 4TB drive.
10. Add the last 4TB drive to the 4TB array to grow it (add to SHR/convert to RAID 5).
11. Copy the transient data from the 3TB drive in the PC into the 4TB array.

There are other solution permutations, but they are all about as complex.

-

You can't without freeing up a drive bay, which means offloading at least one disk's worth of data from the system. Does he want to keep the single drives the way they are? How big are the new disks?

-

Discussion on LSI Array Cards of DSM7.0+

flyride replied to yanjun's topic in Developer Discussion Room

Just a reminder: if you have a card (or any HBA) that does not work with DSM but is supported by your hypervisor, disk-based passthrough may be possible. On ESXi, this is done via Raw Device Mapping (RDM).

@CatWhisperer I'm afraid you may be missing the point entirely. DSM offers the most value when it has control over the disks and manages the RAID array in software. OP was asking about a "hardware" RAID controller, which makes an array appear to DSM as a single, plain disk drive, thereby preventing DSM from doing the things it does best. This isn't window dressing: the btrfs bit-rot healing features that DSM has pioneered leverage direct control over the array and its available redundancy to correct errors in real time, and this simply cannot be done with a hardware RAID controller. The Syba 40064 card you are referring to is not a RAID controller but a multi-port SATA controller, which is exactly what I was talking about. However, it is a PCIe 2.0 x1 device, which will bottleneck if you install sufficiently fast disks. There are better add-on cards to use than that one.

-

Jun's ds918+ loader modified for DSM-6.2.4

flyride replied to sunnyqeen's topic in Developer Discussion Room

As always, nothing gets by you, man! And you used my own post to refute me. My point was that the Realtek driver was part of the base image without Jun's add-ons, but I didn't phrase it that way, did I? Technically, they were built for 6.2.x, as 6.2.3 did not exist when they were released. And honestly, I've never checked whether they are identical to the mods embedded in the 1.02b (6.1.x) version of Jun's loader. I don't think there would be much harm in leaving them in there if they do not cause a panic. Thank you for clarifying your reasoning.

Jun's ds918+ loader modified for DSM-6.2.4

flyride replied to sunnyqeen's topic in Developer Discussion Room

Interesting, I will have to play with this a bit. Can you explain this further? Is your modification causing kernel panics? This is because the native DS918+ has this NIC.

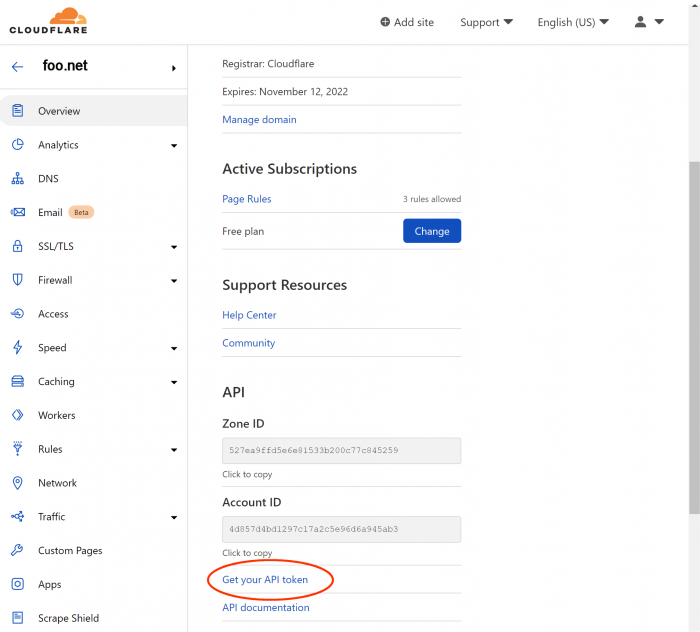

Not quite sure what to post; my config is right out of the ddns-updater Docker documentation example:

config.toml:

[[settings]]
provider = "cloudflare"
domain = "foo.net"
host = "ddns"
proxied = false
ip_method = "dnspod"
delay = 300
token = "(from the cloudflare control panel for the domain, see below)"

-

Tutorial/Reference: 6.x Loaders and Platforms

flyride replied to flyride's topic in Tutorials and Guides

We all need to remember that DSM is built by Synology for specific hardware platforms. The fact that things generally work on a wide variety of hardware is remarkable and a testament to the work that the loader coders have done. It does surprise me that folks want to bring cutting-edge and completely divergent hardware to the table (such as NUCs and high-density data center motherboards, which are not offered even at the Synology low end and high end) and think it's going to be easy to set up. Outside of just making old/spare equipment work, it would seem that selecting hardware that is close to the desired Syno spec should be a primary objective for an XPe project. The further we diverge from the Syno hardware configurations, the less likely things will be supported. This can be somewhat mitigated by virtualizing the hardware, which is part of the troubleshooting recommendation in the top post of this thread. For those looking for reference information regarding the "original" hardware, please refer to these threads: https://xpenology.com/forum/topic/13922-guide-to-native-drivers-dsm-617-and-621-on-ds3615/ https://xpenology.com/forum/topic/14127-guide-to-native-drivers-dsm-621-on-ds918/

Question: Compiling for Other Models and Chipsets (Denverton)

flyride replied to tlsnine's topic in The Noob Lounge

In short, the loader itself is coded to work around unique issues presented by each of the supported Synology hardware platforms, including physical hardware emulation. Synology doesn't recompile a standard DSM for a new platform; they do a lot of customization and backporting. So a new platform for us isn't as simple as compiling against a new toolkit. Also, toolkits != kernel source, so a lot of things are heavily obfuscated or just plain don't exist for review and analysis. Some relevant posts: https://xpenology.com/forum/topic/45795-redpill-the-new-loader-for-624-discussion/?do=findComment&comment=208585 https://xpenology.com/forum/topic/45795-redpill-the-new-loader-for-624-discussion/?do=findComment&comment=218147 (see topic on Kernel/driver interactions)

You got 7 years out of it; I don't think I've ever kept a computer for more than 4-5 years before replacing it. It is entirely possible to use Linux to retrieve your data, but it's easier with a DSM platform (real Synology or XPenology). However, if you are happy with DSM, I really suggest a used DS918+ (if you can fit into 4 disks) or a DS1517+ or DS1817+ (these models do not have the well-known Intel chip bug that is probably the reason yours died). Then your disks will migrate and you'll be back up and running for a lot less cash. You can definitely use XPenology, but you have to source the PC and a compatible chassis for your drives (hot-swap?), and learn the system before you even think of using your old data disks. I think you will find that, given where you are, getting a used Synology box is probably a cheaper and easier solution, and then you can consider XPenology with all its benefits and limitations when you aren't under duress.

-

I wasn't referring to the SATA speed itself, but rather to the aggregate from the controller. There are motherboard implementations (and a number of plug-in cards) out there where a SATA controller is implemented with just an x1 or x2 PCIe 2.0 uplink, which cannot handle the burst SATA bandwidth in aggregate if all the ports are filled. P67 motherboards typically have their SATA ports connected directly to the PCH (chipset), which is interfaced to the CPU via DMI (a big pipe for all chipset/CPU traffic), so you don't need to worry about this.
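The aggregate check is simple arithmetic: multiply per-drive throughput by the number of ports in use and compare it with the uplink. A minimal sketch, assuming the approximate per-lane figures in the comment and a hypothetical 200 MB/s per hard drive; the names are illustrative, and real-world throughput is lower than these figures because of protocol overhead.

```python
# Approximate usable bandwidth per PCIe lane, per direction, after line
# encoding (8b/10b for gen 1/2, 128b/130b for gen 3). Packet/protocol
# overhead reduces real throughput further.
PCIE_MB_S_PER_LANE = {1: 250, 2: 500, 3: 985}


def uplink_mb_s(gen: int, lanes: int) -> int:
    return PCIE_MB_S_PER_LANE[gen] * lanes


def aggregate_demand_mb_s(drive_mb_s: int, drives: int) -> int:
    """Bandwidth needed if every populated port streams at once."""
    return drive_mb_s * drives


def is_bottlenecked(gen: int, lanes: int, drive_mb_s: int, drives: int) -> bool:
    return aggregate_demand_mb_s(drive_mb_s, drives) > uplink_mb_s(gen, lanes)


if __name__ == "__main__":
    # Hypothetical: four hard drives that each stream about 200 MB/s.
    for lanes in (1, 2, 4):
        print(f"PCIe 2.0 x{lanes}: bottlenecked = {is_bottlenecked(2, lanes, 200, 4)}")
```

Four such drives need about 800 MB/s, which exceeds an x1 PCIe 2.0 link but fits within x2.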

-

I hope you mean 450 MBps (megabytes per second), not Mbps (megabits). It will burst at the full 10Gbps (about 1.1 GBps) until the RAM fills up. With 32GB of RAM, that will take about 20 seconds. Then throughput will drop to 450 MBps, and you will see your drives go to maximum as the system tries to catch up. You need more spindles (more drives) or faster drives if you want to fully leverage 10GbE. As you have found, Reds are not terribly fast. At some point, the bandwidth available to your SATA controller also matters. A controller with a PCIe 2.0 x2 uplink cannot saturate a 10Gbps card.
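The burst duration comes from how fast the write cache fills: incoming rate minus the rate the disks drain it. A minimal sketch with hypothetical names; how much RAM Linux actually lets fill with unwritten (dirty) data depends on kernel settings such as `vm.dirty_ratio`, so the cache size you plug in is an assumption, not the total installed RAM.

```python
import math


def gbit_to_gbyte_per_s(gbit_s: float) -> float:
    """Raw line rate in GB/s (8 bits per byte, before protocol overhead)."""
    return gbit_s / 8


def burst_seconds(cache_gb: float, ingress_gb_s: float, drain_gb_s: float) -> float:
    """How long writes can arrive at ingress_gb_s before a write cache of
    cache_gb fills, while the disks drain it at drain_gb_s."""
    net_fill = ingress_gb_s - drain_gb_s
    if net_fill <= 0:
        return math.inf  # the disks keep up, so the cache never fills
    return cache_gb / net_fill


if __name__ == "__main__":
    print("10GbE raw line rate:", gbit_to_gbyte_per_s(10), "GB/s")
    # Hypothetical: 10 GB of RAM usable as write cache, ~1.1 GB/s arriving
    # over the network, 0.45 GB/s sustained by the array.
    print(f"burst lasts ~{burst_seconds(10, 1.1, 0.45):.1f} s")
```

The same function shows why more spindles help: raising the drain rate toward the network rate stretches the burst, and once the array can absorb the full rate the cache never fills.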

-

This is from the original post. You don't need to install it but it won't hurt anything. I suggest you post your backup question as a new question on a general thread so people can find it.

-

From an instruction-support standpoint, any x64 processor should work with the DS3615xs and DS3617xs platforms. DS918+, however, does require advanced instruction sets (we believe it to be FMA3; why a SIMD instruction is needed for a NAS is unknown, but it may simply be a compile-time standard at Synology for this platform). There may be other reasons an AMD motherboard doesn't work well, but on DS3615xs/DS3617xs it will not be because of the processor.
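One way to check a CPU before trying DS918+ is to boot any Linux live image and look at the feature flags in `/proc/cpuinfo`. A minimal sketch, assuming FMA3 is the requirement (the post itself only states this as a belief); Linux lists FMA3 as `fma`, while AMD's older FMA4 appears separately as `fma4`. The helper names are hypothetical.

```python
from pathlib import Path


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the feature flags from the first 'flags' line of /proc/cpuinfo."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def has_fma3(cpuinfo_text: str) -> bool:
    # Exact set membership, so "fma4" alone does not count as FMA3.
    return "fma" in cpu_flags(cpuinfo_text)


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        print("FMA3 supported:", has_fma3(cpuinfo.read_text()))
    else:
        print("/proc/cpuinfo not found (not a Linux system?)")
```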

-

Probably. The only way to actually know 100% for sure is to get the PCI ID of the card and check it against the drivers (or just try it and see). For $15 you can try it and return it, right? Intel deliberately makes the CT card with older silicon for backward compatibility reasons. That is not the case with the silicon on newer motherboards, for example.
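Checking a PCI ID against the drivers can be scripted. A minimal sketch, assuming a standard Linux environment: `lspci -nn` prints the `[vendor:device]` pair, and kernel modules advertise the devices they handle through `modalias` patterns, collected in `/lib/modules/$(uname -r)/modules.alias` (sysfs also exposes each device's full string at `/sys/bus/pci/devices/<address>/modalias`). Whether a given DSM build or loader ships a `modules.alias`, and which drivers it includes, is something you would have to check. The helper names are hypothetical; the example ID `8086:10d3` is the Intel 82574L used on the Gigabit CT adapter, and the alias text is a one-line excerpt for illustration.

```python
import fnmatch
import re
from typing import Optional

# lspci -nn prints the vendor:device pair in brackets, e.g. "[8086:10d3]".
PCI_ID = re.compile(r"\[([0-9a-fA-F]{4}):([0-9a-fA-F]{4})\]")


def pci_ids(lspci_nn_line: str) -> Optional[tuple[int, int]]:
    matches = PCI_ID.findall(lspci_nn_line)
    if not matches:
        return None
    vendor, device = matches[-1]  # the ID pair follows the device name
    return int(vendor, 16), int(device, 16)


def modalias(vendor: int, device: int, subvendor: int = 0, subdevice: int = 0,
             base_class: int = 0, sub_class: int = 0, prog_if: int = 0) -> str:
    """Build a PCI modalias string in the kernel's format (uppercase hex)."""
    return (f"pci:v{vendor:08X}d{device:08X}sv{subvendor:08X}sd{subdevice:08X}"
            f"bc{base_class:02X}sc{sub_class:02X}i{prog_if:02X}")


def matching_modules(alias_text: str, device_alias: str) -> list[str]:
    """Modules whose 'alias <pattern> <module>' lines match device_alias."""
    modules: list[str] = []
    for line in alias_text.splitlines():
        parts = line.split()
        if (len(parts) == 3 and parts[0] == "alias"
                and fnmatch.fnmatchcase(device_alias, parts[1])
                and parts[2] not in modules):
            modules.append(parts[2])
    return modules


if __name__ == "__main__":
    line = ("01:00.0 Ethernet controller [0200]: "
            "Intel Corporation 82574L Gigabit Network Connection [8086:10d3]")
    aliases = "alias pci:v00008086d000010D3sv*sd*bc*sc*i* e1000e\n"
    ids = pci_ids(line)
    if ids is not None:
        print(matching_modules(aliases, modalias(*ids, base_class=0x02)))
```

Modules that match on device class rather than vendor/device ID need the real class bytes, which is why reading the full string from sysfs is more reliable than building it by hand.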

-

The alternative is to buy an Intel CT PCIe card and use that.

-

Post a new question with specific information about your motherboard and CPU in a support forum. https://xpenology.com/forum/forum/38-dsm-installation/ https://xpenology.com/forum/forum/82-general-questions/

-

What model drive and how is it connected? What are you using for storage on the NAS? What array configuration? These all would be required to assess what is happening. If your source drive is very old, that throughput may be plausible, but that is pretty slow.