flyride

Moderator

Posts

2,438

Days Won

127

Everything posted by flyride

-

Check for bad SATA cable or it is routed so that the connector is breaking contact.

-

Obviously we need to prove that you do not have a filesystem mounted on /volume1, per the previous post. Incidentally, it looks like some of your jobs (e.g. Plex) have written to /volume1, and in the absence of the mounted filesystem those files are in the root filesystem. You should stop Plex from running while this is going on. I would see if you can do a restore (copy out) using the alternate tree roots before you try to make corrections to the filesystem: http://www.infotinks.com/btrfs-restoring-a-corrupt-filesystem-from-another-tree-location/ If nothing works, I would go ahead with at least the first two check options.

-

I think perhaps you mean that you see a /volume1 folder. If the volume is not mounted, that folder will be empty. Check which volumes have been mounted with df -v at the command line. You should see a /volume1 entry connected to your /dev/lv device if it is mounted successfully. You can also run sudo mount -a from the command line, and any unmounted but valid volumes will be mounted (or you will receive an error message explaining why they cannot be).
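The mount check described above can also be scripted. This is only a minimal sketch, assuming the text passed in has the usual /proc/mounts layout (device, mount point, filesystem type, options); the function name `mounted_device` and the device path `/dev/vg1000/lv` used in the usage note are illustrative assumptions, not something taken from the poster's system.

```python
def mounted_device(mounts_text, mount_point="/volume1"):
    """Return the device mounted at mount_point, or None if nothing is mounted there.

    mounts_text is expected to look like the contents of /proc/mounts:
    one mount per line, whitespace-separated, device first, mount point second.
    """
    device = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[1] == mount_point:
            # Keep the last match: a later mount on the same path shadows earlier ones.
            device = fields[0]
    return device
```

For example, feeding it a line such as `/dev/vg1000/lv /volume1 btrfs rw 0 0` (a made-up entry) returns `/dev/vg1000/lv`, while text with no /volume1 entry returns `None`, which is the case where the /volume1 folder is just an empty directory on the root filesystem.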

-

Ok, we know your Storage Pool is functional but degraded. Again, degraded = loss of redundancy, not loss of your data, as long as we don't do something to break it further. Your btrfs filesystem has some corruption that appears to be preventing it from mounting. btrfs attempts to heal itself in real time, and when it cannot, it throws errors or refuses to mount. Your goal is to get it to mount read-only through a combination of discovery and leveraging the redundancy in btrfs. btrfs is not like ext4 and fsck. You should not expect to "fix" the problem. If you can make your data accessible through command-line directives, then COPY everything off, delete the volume entirely, and recreate it. I'm no expert at ferreting out btrfs problems, but you might find some of the strategies in this thread helpful (starting with the linked post): https://xpenology.com/forum/topic/14337-volume-crash-after-4-months-of-stability/?do=findComment&comment=107979 Post back here if you aren't sure what to do.

-

Migrating Baremetal to ESXi - Passthrough HDDs or Controller?

flyride replied to WiteWulf's question in Answered Questions

NVMe = PCIe. They are different form factors for the same interface type, so PCIe NVMe adapters follow the same rules for ESXi and DSM as an onboard NVMe slot. I use two of them on my NAS to drive enterprise NVMe disks, then RDM them into my VM for an extremely high-performance volume. I also use an NVMe slot on the motherboard to run the ESXi datastore and scratch.

-

Looks like everything is good on the array side. Your btrfs filesystem has some corruption. btrfs attempts to heal itself in real time, and when it cannot, it throws errors or refuses to mount. Your goal is to get it to mount read-only through a combination of discovery and leveraging the redundancy in btrfs. btrfs is not like ext4 and fsck. You should not expect to "fix" the problem. If you can make your data accessible through command-line directives, then COPY everything off, delete the volume entirely, and recreate it. I'm no expert at ferreting out btrfs problems, but you might find some of the strategies in this thread helpful (starting with the linked post): https://xpenology.com/forum/topic/14337-volume-crash-after-4-months-of-stability/?do=findComment&comment=107979 Post back here if you aren't sure what to do.

-

Migrating Baremetal to ESXi - Passthrough HDDs or Controller?

flyride replied to WiteWulf's question in Answered Questions

This is incorrect for AHCI controller passthrough. On Jun's loader, SMART does not work with RDM (also, trying to TRIM will crash RDM'd SSDs), but it's perfectly fine with drives attached to passthrough controllers. I pass through my onboard SATA controller and it behaves exactly as baremetal. Some folks have more than one SATA controller, or use an NVMe disk as a datastore and scratch volume. The reasons to use RDM are to split drives between the ESXi datastore and DSM on one controller (Orphée's case) or to provide DSM access to disks that cannot otherwise be used at all (no controller support, NVMe, etc).

-

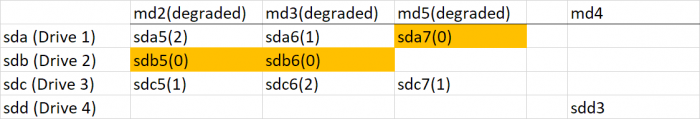

Ok, let's define a few things first:

1. Storage Pool - the suite of arrays that make up your storage system
2. Volume - the filesystem built upon the Storage Pool
3. Crashed (Storage Pool) - one or more arrays cannot be started and/or the vg that binds them cannot be started
4. Crashed (Volume) - the volume cannot be mounted for a variety of reasons
5. Crashed (Disk) - the disk probably has an unrecoverable bad sector which has resulted in array failure and possible data loss. The disk may still be operational.
6. Failing (Disk) - the drive has failed a SMART test. The disk may be (and probably is) still operational.

Your system has four drives. It looks like three of them participate in a SHR which spans three arrays (md2, md3, md5). Each array has its own redundancy, but all need to be operational in order to access data in the Storage Pool. One drive seems to be set up as a Basic volume all by itself (Disk 4, sdd, md4). If this doesn't sound right to you, please explain what is incorrect. Otherwise, this is your disk layout.

Please note that the rank order of your arrays, and of the members within the arrays, is not at all consistent. This is not a problem, but it can be confusing and a source of error if you are trying to correct array problems via the command line.

This means that arrays /dev/md2 and /dev/md3 are degraded because of missing /dev/sdb (Drive 2), and array /dev/md5 is degraded because of missing /dev/sda. This is a bit unusual (missing/stale array members that span multiple physical disks). It also means that there is cross-linked loss of redundancy across the arrays. In simple terms, you have no redundancy and ALL THREE DRIVES are required to provide access to your data.

I'm not 100% sure what you actually did here. Did you stop one of the drives using the UI? If so, your Storage Pool should now show Crashed and your data should not be accessible. If this is the case, do a new cat /proc/mdstat and post the results.

If the Storage Pool still shows Degraded, then your data should be accessible. In that case, your #1 job is to copy all the data off of your NAS, because if ANYTHING goes wrong now, your data is lost. Don't try to repair anything until all your data is copied and safe. Then we can experiment with restoring redundancy and replacing drives.
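Reading degraded arrays out of cat /proc/mdstat by eye is easy to get wrong when several arrays are involved, so here is a rough sketch of how the check could be automated. It assumes the common mdstat layout, where an `mdN :` header line is followed by a status line ending in a bracketed member map such as `[U_U]` (`U` = member up, `_` = member missing). The function name `degraded_arrays` is an assumption made for illustration.

```python
import re


def degraded_arrays(mdstat_text):
    """Map each array that has a missing member to its member map, e.g. {'md2': 'U_U'}."""
    result = {}
    current = None  # name of the array whose status line we are waiting for
    for line in mdstat_text.splitlines():
        header = re.match(r"^(md\d+)\s*:", line)
        if header:
            current = header.group(1)
            continue
        # The member map contains only 'U' and '_' between square brackets.
        status = re.search(r"\[([U_]+)\]", line)
        if current and status:
            if "_" in status.group(1):
                result[current] = status.group(1)
            current = None
    return result
```

Given mdstat text where md2 reports `[3/2] [U_U]` and md4 reports `[1/1] [U]`, only md2 is returned. An array listed here has lost redundancy but is still running; it says nothing about whether the Storage Pool as a whole is Degraded or Crashed.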

-

BTRFS RAID1 Volume crashed and size is not correct

flyride replied to tdse13's question in General Questions

Answer provided via PM. Basically the volume is accessible but damaged. Best practice is always to offload the data, delete the btrfs volume, and rebuild.

-

Yes, this is a problem. You have a SHR, which is comprised of multiple arrays bound together. So all the arrays are important; there are none that are not "current." Follow this post specifically and post the discovery of your SHR arrays, then we can build an array map that helps us visualize what is going on. https://xpenology.com/forum/topic/14337-volume-crash-after-4-months-of-stability/?do=findComment&comment=107971

-

Intel CT PCIe is compatible. I like to have one around for troubleshooting since it's only $20. The latest onboard Intel LAN silicon is often not supported by existing drivers - extra.lzma documentation will show exactly which versions will work.

-

Not going to speak to RedPill driver support when it's not even out of alpha, but.... r8169 support is in the skge driver which is native to DS3615xs. Not sure what rev your silicon is but it also might be updated by extra.lzma.

-

Consider the hardware platforms that are being used for the emulated platforms.

DS918+ = Apollo Lake - i.e. J41xx/42xx/5xxx and RealTek NIC
DS3615xs = Bromolow - i.e. Haswell and Intel NIC
DS3617xs = Broadwell - i.e. close cousin to Skylake and Intel NIC

Choosing something close to these platforms has the greatest chance of success. Going AMD makes things more complicated, and the latest desktop platform often has issues with NIC support. If you are willing to virtualize, you have much more flexibility with hardware. Also, look in the forums to see what others are successfully using. There is a lot of historical information there.

FMI:
https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/
https://xpenology.com/forum/topic/13922-guide-to-native-drivers-dsm-617-and-621-on-ds3615/
https://xpenology.com/forum/topic/14127-guide-to-native-drivers-dsm-621-on-ds918/

-

Neither of the storage decisions you made is well supported. It is possible to set up a boot loader on a physical HDD/SSD, but it's not really supportable and is hacky. It is possible to set up an external HDD/SSD in a DSM Storage Pool, but it's not really supportable and is hacky. I would suggest that you first install DSM via XPEnology as usual (with a USB, and to an internal SATA device). Then do some research to understand exactly what hacky things you need in order to deploy the system the way you are describing (or don't, as it will cause you problems later).

-

How to instal Xpenology directly to internal HDD

flyride replied to Dvalin21's topic in The Noob Lounge

Something like this? https://xpenology.com/forum/topic/26864-tutorial-bootloader-dsm-61-storage-on-a-single-ssd/ Never done it, no real good reason to, YMMV.

-

RedPill - the new loader for 6.2.4 - Discussion

flyride replied to ThorGroup's topic in Developer Discussion Room

Which accomplishes what? Is there any different OS support for geminilake vs apollolake?

-

Sorry, I don't know. I expect that there are Linux memory structure limits that correspond to maxlanport. 200 is nonsensical because there are no CPUs that can support that number of PCIe channels. Ignoring the fact that significant scale-out aggregation does not result in useful performance unless you have comparatively scaled-out utilization (many users), you can aggregate as many devices as DSM can see. The most I have ever used, or observed anyone else use, is 4.

-

RedPill - the new loader for 6.2.4 - Discussion

flyride replied to ThorGroup's topic in Developer Discussion Room

Got it, so maybe it does actually modify the DSM file... I didn't look that closely before. It is unlikely that maxdisks=32 will work; it will probably be overridden by DSM as it starts. I think 24 is the practical maximum.

-

RedPill - the new loader for 6.2.4 - Discussion

flyride replied to ThorGroup's topic in Developer Discussion Room

SataPortMap, DiskIdxMap, etc. are not in /etc/synoinfo.conf. They are part of the options passed to the Linux kernel via grub, i.e. the loader is just assembling the argument string from the options you provide. I don't think that particular RedPill provisioning option will help with /etc/synoinfo.conf parameters. Just to be clear, /etc.defaults/synoinfo.conf is copied to /etc/synoinfo.conf on each boot. And some of the /etc.defaults/synoinfo.conf parameters are "reset" by DSM on upgrades or on each boot, hence the Jun loader patch.

-
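To illustrate what "assembling the argument string" means, here is a toy sketch. It is not RedPill's or Jun's actual code; the function name `build_kernel_args` and the option values shown are made up for illustration only.

```python
def build_kernel_args(options):
    """Join option names and values into one space-separated kernel argument string.

    options is a dict such as {"SataPortMap": "4"}; insertion order is preserved.
    """
    return " ".join(f"{name}={value}" for name, value in options.items())
```

Calling `build_kernel_args({"SataPortMap": "4", "DiskIdxMap": "00"})` yields `SataPortMap=4 DiskIdxMap=00` (hypothetical values). The point is that these end up on the kernel command line, which is a separate mechanism from the key/value settings stored in /etc/synoinfo.conf.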

RedPill - the new loader for 6.2.4 - Discussion

flyride replied to ThorGroup's topic in Developer Discussion Room

I believe Jun's loader patches these on each boot. This may need to be added to RedPill.

-

RedPill - the new loader for 6.2.4 - Discussion

flyride replied to ThorGroup's topic in Developer Discussion Room

That is what FixSynoboot is intended to correct (and it does exactly what you show above): https://xpenology.com/forum/topic/28183-running-623-on-esxi-synoboot-is-broken-fix-available/ Synoboot devices are not required after install, until you want to do an upgrade. The loader storage is always modified by an upgrade. I realize that is getting ahead of things with regard to RedPill, but that's how it works.

-

I don't think that the telemetry suppression that RedPill has implemented applies to actually logging in with a Synology account. @ThorGroup? As always, consult the FAQ. Using Synology cloud services is discouraged.