Showing results for 'transcoding'.

-

I'm running DVA3221 with an Nvidia 1050 Ti graphics card, on DSM 7.2-64570 Update 1. My goal is to have Plex hardware transcoding working within Docker. Hardware transcoding without Docker, in the Synology Plex app, works. It wasn't easy, but it's working. A piece I was missing was installing the NVIDIA Runtime Library from Package Center; I didn't see that mentioned anywhere in any instructions.

My drivers are loaded properly on the NAS:

```
root@nas:/volume1/docker/appdata# nvidia-smi
Sat Sep 2 11:26:23 2023
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 440.44       Driver Version: 440.44       CUDA Version: N/A      |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  GeForce GTX 105...  On   | 00000000:01:00.0 Off |                  N/A |
| 30%   30C    P8    N/A /  75W |      0MiB /  4039MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
```

I believe I have successfully exposed the GPU to my Plex container. Confirming by running nvidia-smi from within the container:

```
root@nas:/volume1/docker/appdata# docker exec plex nvidia-smi
Sat Sep 2 11:26:30 2023
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 440.44       Driver Version: 440.44       CUDA Version: N/A      |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  GeForce GTX 105...  On   | 00000000:01:00.0 Off |                  N/A |
| 30%   30C    P8    N/A /  75W |      0MiB /  4039MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
```

I accomplished this by copying over the files necessary for the nvidia-container-runtime, as outlined in this post. Maybe some of these files don't work on 7.2?

However, Plex hardware transcoding does not work. I get the following in my Plex logs:

```
DEBUG - [Req#583/Transcode] Codecs: testing h264_nvenc (encoder)
DEBUG - [Req#583/Transcode] Codecs: hardware transcoding: testing API nvenc for device '' ()
ERROR - [Req#583/Transcode] [FFMPEG] - Cannot load libcuda.so.1
ERROR - [Req#583/Transcode] [FFMPEG] - Could not dynamically load CUDA
DEBUG - [Req#583/Transcode] Codecs: hardware transcoding: opening hw device failed - probably not supported by this system, error: Operation not permitted
DEBUG - [Req#583/Transcode] Could not create hardware context for h264_nvenc
DEBUG - [Req#583/Transcode] Codecs: testing h264 (decoder) with hwdevice vaapi
DEBUG - [Req#583/Transcode] Codecs: hardware transcoding: testing API vaapi for device '' ()
DEBUG - [Req#583/Transcode] Codecs: hardware transcoding: opening hw device failed - probably not supported by this system, error: Generic error in an
DEBUG - [Req#583/Transcode] Could not create hardware context for h264
DEBUG - [Req#583/Transcode] Codecs: testing h264 (decoder) with hwdevice nvdec
DEBUG - [Req#583/Transcode] Codecs: hardware transcoding: testing API nvdec for device '' ()
ERROR - [Req#583/Transcode] [FFMPEG] - Cannot load libcuda.so.1
ERROR - [Req#583/Transcode] [FFMPEG] - Could not dynamically load CUDA
DEBUG - [Req#583/Transcode] Codecs: hardware transcoding: opening hw device failed - probably not supported by this system, error: Operation not permitted
DEBUG - [Req#583/Transcode] Could not create hardware context for h264
```

Since hardware transcoding works natively (outside Docker), the following emby-ffmpeg command also works on the host:

```
/volume1/@appstore/EmbyServer/bin/emby-ffmpeg -i source.mp4 -c:v h264_nvenc -b:v 1000k -c:a copy destination.mp4
```

Any ideas on what could be wrong? Thanks!
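
The key lines in the log are `Cannot load libcuda.so.1` and `Could not dynamically load CUDA`: the transcoder tries to load the CUDA driver library at runtime and the loader inside the container cannot find it. nvidia-smi working inside the container does not by itself prove that library is visible, since nvidia-smi talks to the driver through NVML rather than through libcuda. A minimal sketch of a diagnostic, assuming a Python 3 interpreter is available inside the container (the Plex image may not ship one), is to attempt the same kind of dynamic load with `ctypes`; the helper name `try_load` is hypothetical.

```python
import ctypes
from typing import Optional


def try_load(libname: str = "libcuda.so.1") -> Optional[str]:
    """Try to dynamically load a shared library by name.

    Returns None if the load succeeded, otherwise the loader's error text.
    ctypes.CDLL raises OSError when the library cannot be found or loaded.
    """
    try:
        ctypes.CDLL(libname)
    except OSError as exc:
        return str(exc)
    return None


if __name__ == "__main__":
    err = try_load()
    if err is None:
        print("libcuda.so.1 loaded: the CUDA driver library is visible here")
    else:
        print(f"libcuda.so.1 could not be loaded: {err}")
```

Run inside the container (for example via `docker exec`), a failure here points at the library files or library search path exposed to the container rather than at Plex itself; a success means the problem is more likely elsewhere (device permissions, for instance, given the `Operation not permitted` lines).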

-

Personally, I don't do HW transcoding. But I have a Shield Pro to play very high-bitrate Ultra HD movies. The regular Shield wasn't powerful enough... I was getting stuttering. Emby Server runs on the Synology. The drawback of running the server on the Shield is that when it's in standby, I'm not sure Emby/Plex is reachable from the clients... Is it? The NAS, on the other hand, runs 24/7...

-

TinyCore RedPill Loader Build Support Tool ( M-Shell )

asaf replied to Peter Suh's topic in Software Modding

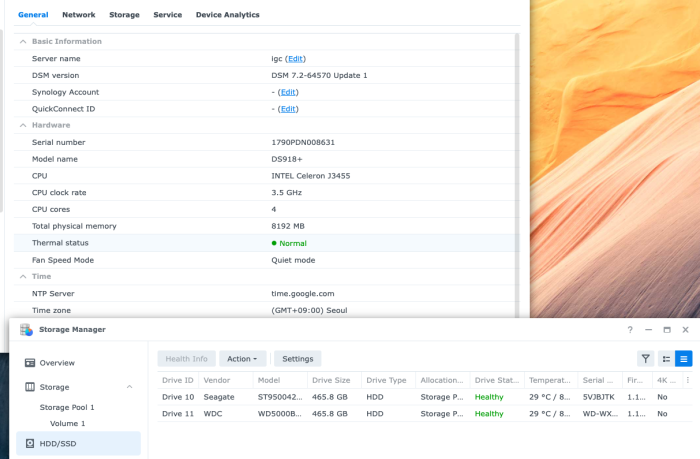

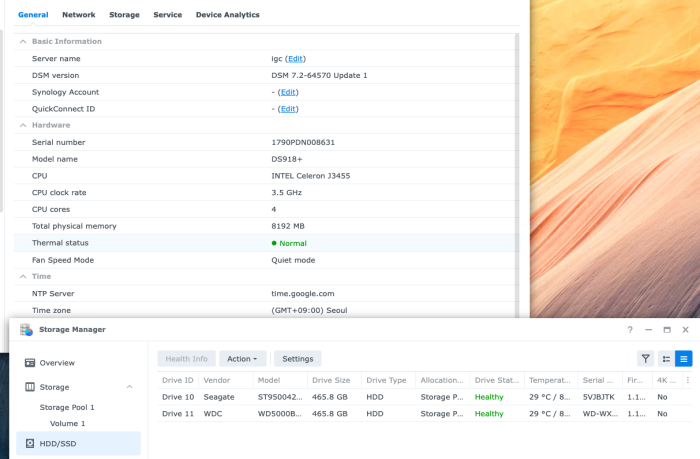

Excellent news! I'm playing around with the ARC loader at the moment. It seems it has supported both transcoding and HBA (with proper serial numbers) on the 918+ for a while now.

-

Well, I have resolved my issue another way: I found that Peter Suh's loader has mpt3sas support for the DS918+, so I installed it and migrated successfully. With transcoding working straight away on the 918+, is there any reason to go with the 920+ now?

-

Hi all, I'm struggling to install DS920p and load the MPT3SAS driver. I'm running this command to add it:

```
./rploader.sh ext ds920p-7.2.0-64570 add https://raw.githubusercontent.com/pocopico/rp-ext/master/mpt3sas/rpext-index.json
```

This appears to work correctly and pull the drivers. I see the following:

```
tc@box:~$ ./rploader.sh ext ds920p-7.2.0-64570 add https://raw.githubusercontent.com/pocopico/rp-ext/master/mpt3sas/rpext-index.json
Rploader Version : 0.9.4.9
Loader source : https://github.com/pocopico/redpill-load.git
Loader Branch : develop
Redpill module source : https://github.com/pocopico/redpill-lkm.git : Redpill module branch : master
Extensions : all-modules eudev disks misc
Extensions URL : "https://github.com/pocopico/tcrp-addons/raw/main/all-modules/rpext-index.json", "https://github.com/pocopico/tcrp-addons/raw/main/eudev/rpext-index.json", "https://github.com/pocopico/tcrp-addons/raw/main/disks/rpext-index.json", "https://github.com/pocopico/tcrp-addons/raw/main/misc/rpext-index.json"
TOOLKIT_URL : https://sourceforge.net/projects/dsgpl/files/toolkit/DSM7.0/ds.bromolow-7.0.dev.txz/download
TOOLKIT_SHA : a5fbc3019ae8787988c2e64191549bfc665a5a9a4cdddb5ee44c10a48ff96cdd
SYNOKERNEL_URL : https://sourceforge.net/projects/dsgpl/files/Synology%20NAS%20GPL%20Source/25426branch/bromolow-source/linux-3.10.x.txz/download
SYNOKERNEL_SHA : 18aecead760526d652a731121d5b8eae5d6e45087efede0da057413af0b489ed
COMPILE_METHOD : toolkit_dev
TARGET_PLATFORM : ds920p
TARGET_VERSION : 7.2.0
TARGET_REVISION : 64570
REDPILL_LKM_MAKE_TARGET : dev-v7
KERNEL_MAJOR : 4
MODULE_ALIAS_FILE : modules.alias.4.json
SYNOMODEL : ds920p_64570
MODEL : DS920+
Local Cache Folder : /mnt/sda3/auxfiles
DATE Internet : 30082023 Local : 30082023
Checking Internet Access -> OK
Cloning into 'redpill-lkm'...
remote: Enumerating objects: 1715, done.
remote: Counting objects: 100% (483/483), done.
remote: Compressing objects: 100% (95/95), done.
remote: Total 1715 (delta 415), reused 395 (delta 388), pack-reused 1232
Receiving objects: 100% (1715/1715), 5.84 MiB | 11.44 MiB/s, done.
Resolving deltas: 100% (1049/1049), done.
Cloning into 'redpill-load'...
remote: Enumerating objects: 5098, done.
remote: Counting objects: 100% (5098/5098), done.
remote: Compressing objects: 100% (2251/2251), done.
remote: Total 5098 (delta 2703), reused 4983 (delta 2644), pack-reused 0
Receiving objects: 100% (5098/5098), 125.90 MiB | 11.84 MiB/s, done.
Resolving deltas: 100% (2703/2703), done.
[#] Checking runtime for required tools... [OK]
[#] Adding new extension from https://raw.githubusercontent.com/pocopico/rp-ext/master/mpt3sas/rpext-index.json...
[#] Downloading remote file https://raw.githubusercontent.com/pocopico/rp-ext/master/mpt3sas/rpext-index.json to /home/tc/redpill-load/custom/extensions/_new_ext_index.tmp_json
################################################################################################################################################### 100.0%
[OK]
[#] ========================================== pocopico.mpt3sas ==========================================
[#] Extension name: mpt3sas
[#] Description: Adds LSI MPT Fusion SAS 3.0 Device Driver Support
[#] To get help visit: <todo>
[#] Extension preparer/packer: https://github.com/pocopico/rp-ext/tree/main/mpt3sas
[#] Software author: https://github.com/pocopico
[#] Update URL: https://raw.githubusercontent.com/pocopico/rp-ext/master/mpt3sas/rpext-index.json
[#] Platforms supported: ds1621p_42218 ds1621p_42951 ds918p_41890 dva3221_42661 ds3617xs_42621 ds3617xs_42218 ds920p_42661 dva3221_42962 ds918p_42661 ds3622xsp_42962 ds3617xs_42951 dva1622_42218 dva1622_42621 ds920p_42962 ds1621p_42661 dva1622_42951 ds918p_25556 dva3221_42218 ds3615xs_42661 dva3221_42951 ds3622xsp_42661 ds2422p_42661 ds3622xsp_42218 ds2422p_42962 rs4021xsp_42621 dva1622_42962 ds2422p_42218 rs4021xsp_42962 dva3221_42621 ds3615xs_42962 ds3617xs_42962 ds3615xs_41222 ds920p_42951 rs4021xsp_42218 ds2422p_42951 ds918p_42621 ds3617xs_42661 ds3615xs_25556 ds920p_42218 rs4021xsp_42951 ds920p_42621 ds918p_42962 ds3615xs_42951 ds3622xsp_42951 dva1622_42661 ds918p_42218 ds2422p_42621 ds1621p_42621 ds3615xs_42621 ds3615xs_42218 ds1621p_42962 ds3622xsp_42621 rs4021xsp_42661
[#] =======================================================================================
```

However, when I do a build:

```
./rploader.sh build ds920p-7.2.0-64570
```

I get the following:

```
[-] The extension pocopico.mpt3sas was found. However, the extension index has no recipe for ds920p_64570 platform. It may not be
[-] supported on that platform, or author didn't updated it for that platform yet. You can try running
[-] "ext-manager.sh update" to refresh indexes for all extensions manually.
```

Is there any known version of MPT3SAS that will work with the DS920p? I was keen to use it for the Intel Quick Sync transcoding options. My current install is DS3617xs, but it doesn't support transcoding at all.

Thanks,
KS
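
The build error can be read directly off the output of the `add` step: the build looks for a recipe named after `SYNOMODEL` (`ds920p_64570`), while the extension's "Platforms supported" line lists `ds920p` only for revisions 42218, 42621, 42661, 42951 and 42962. A small sketch of that comparison, assuming the summary keeps the single-line `Platforms supported:` format shown above; the helper names are hypothetical and not part of rploader.

```python
from typing import List

LABEL = "Platforms supported:"


def supported_platforms(output: str) -> List[str]:
    """Return the tokens (e.g. "ds920p_42962") after the 'Platforms supported:' label."""
    for line in output.splitlines():
        if LABEL in line:
            return line.split(LABEL, 1)[1].split()
    return []


def has_recipe(output: str, model: str, revision: str) -> bool:
    """True if the extension lists a recipe for <model>_<revision>."""
    return f"{model}_{revision}" in supported_platforms(output)


def revisions_for(output: str, model: str) -> List[str]:
    """All DSM build revisions the extension lists for one model, sorted."""
    prefix = model + "_"
    return sorted(p[len(prefix):] for p in supported_platforms(output) if p.startswith(prefix))


if __name__ == "__main__":
    # Excerpt of the "Platforms supported" line printed when adding pocopico.mpt3sas
    sample = ("[#] Platforms supported: ds920p_42661 ds918p_42661 ds920p_42962 "
              "ds920p_42951 ds920p_42218 ds920p_42621 ds918p_42962")
    print(has_recipe(sample, "ds920p", "64570"))  # False
    print(revisions_for(sample, "ds920p"))        # ['42218', '42621', '42661', '42951', '42962']
```

In other words, the `add` step succeeds because it only downloads the index; the mismatch only surfaces at build time, when a recipe for the exact model and revision is required.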

-

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

This is the news you've been waiting for. However, improvements to M-Shell for TCRP related to 10th-generation CPU transcoding are still planned. ARPL-i18n has an addon called i915le10th.

-

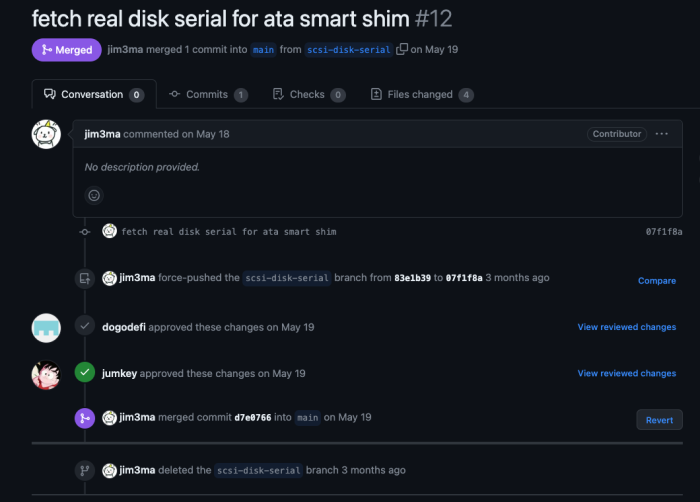

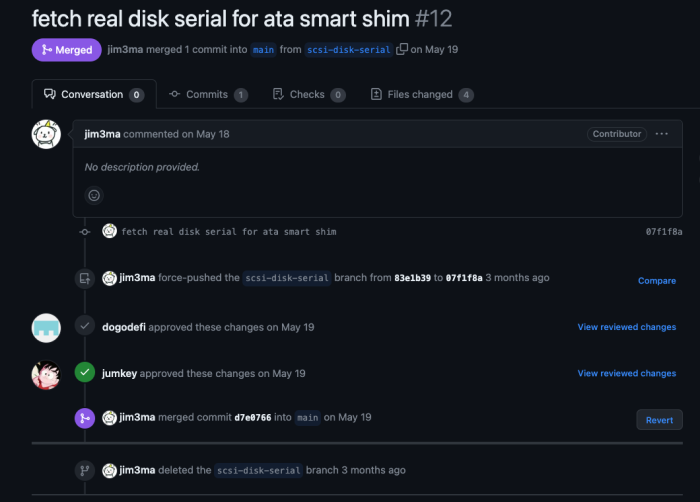

[NOTICE] Now, when using a DS918+ with an HBA in RedPill, the hard drive serial number is displayed.

I've been at this all day today, and for the first time in a while, I'm bringing you some good news. It seems that the DS918+ (apollolake) will now rise again to the level of the most complete model: transcoding, HBA, everything works. This makes it the only model that meets both conditions.

Until now, there was a problem where the hard disk's serial number, part of its S.M.A.R.T. information, could not be passed through when using an HBA. This was a fatal problem, because it made it difficult to replace a hard drive when using it in RAID mode.

The lkm5 source was developed for the SA6400 and targets Kernel 5, but today I noticed it contains various improvements: https://github.com/XPEnology-Community/redpill-lkm5 I imported only the improved parts into the existing lkm source for Kernel 4 and compiled it. A new source that stands out is scsi_disk_serial.c / scsi_disk_serial.h. I could smell it just by looking at the name, so I brought it over right away. lol "scsi" corresponds to the HBA device, and the file is the source of the disk's serial number: https://github.com/PeterSuh-Q3/redpill-lkm/blob/master/shim/storage/scsi_disk_serial.c

Many experts came together to improve this source. Development was completed 3 months ago, but it seems no one thought to apply it to Kernel 4.^^

It is currently applied only to M-Shell; I will pass this information on separately to the ARPL developers. As always, you will need to rebuild your loader. The lkm version that must be picked up during the build process is 23.5.8: https://github.com/PeterSuh-Q3/redpill-lkm/releases/tag/23.5.8

@wjz304 Please see my linked lkm. I think you can treat the sources modified today as the target for syncing. Please try it and contact us if you have any problems. https://github.com/PeterSuh-Q3/redpill-lkm

-

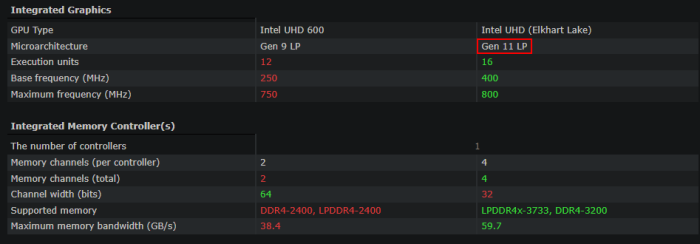

In terms of performance, compared to an original Synology... in my opinion, with a CPU like the J6413 you won't see a huge difference: https://www.cpu-world.com/Compare/423/Intel_Celeron_J4125_vs_Intel_Celeron_J6413.html On the other hand, compared to an Xpenology with a real CPU that holds its own, it will obviously make a difference... I had the chance to get my hands on a real DVA1622, and man, is it slow... compared to a Core i5/i7/i9... (virtualized or not...) Edit: In short, if you want peace of mind for HW transcoding, look for a 9th-gen iGPU... or possibly 10th gen if it's compatible with the 10th-gen i915 addon.

-

Unfortunately, I have no experience with HW transcoding on an iGPU in terms of performance... For HW transcoding, I think you mainly need to look at iGPU performance rather than raw CPU performance...

https://www.intel.fr/content/www/fr/fr/products/sku/207909/intel-celeron-processor-j6413-1-5m-cache-up-to-3-00-ghz/specifications.html (careful, this is a 10th gen, which means an addon is mandatory; unless I'm mistaken, it isn't supported natively by Syno)

https://ark.intel.com/content/www/us/en/ark/products/212327/intel-pentium-silver-n6005-processor-4m-cache-up-to-3-30-ghz.html (according to Google it has a Gen11 iGPU, so it's not even certain it's compatible with Synology at all? I don't think there is an addon that handles 11th gen)

https://ark.intel.com/content/www/us/en/ark/products/212328/intel-celeron-processor-n5105-4m-cache-up-to-2-90-ghz.html (same as the N6005)

Take this info with a grain of salt...

-

OK, I think I discovered part of the problem. The script I am using doesn't pass --device to the Docker container, so it can't transcode using HW acceleration. However, when I add --device, I get an error saying "Container Manager API has failed" and "Error gathering device information while adding custom device "/dev/dri/renderD128" no such file or directory". Here is the script I am using; it doesn't work because there is no /dev/dri directory on my system:

```
docker run -d --name=jellyfin \
  -v /volume1/docker/jellyfin/config:/config \
  -v /volume1/docker/jellyfin/cache:/cache \
  -v /volume1/MEDIA:/media \
  --user 1026:100 \
  --net=host \
  --restart=unless-stopped \
  --device /dev/dri/renderD128:/dev/dri/renderD128 \
  --device /dev/dri/card0:/dev/dri/card0 \
  jellyfin/jellyfin:latest
```

If I remove the two --device options, it works, but without hardware transcoding. I am using a DS923+, which I thought was able to transcode 4K streams. Does anyone know how to fix this?
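
The Container Manager error comes from Docker being asked to map device nodes that do not exist on the host: with no /dev/dri there is no render node to hand to Jellyfin. A minimal sketch, using a hypothetical helper `device_flags`, that emits the `--device host:container` flags only for nodes that actually exist, so the container starts (with software transcoding) instead of failing. It cannot create a GPU where there is none: if, as we understand it, the DS923+'s AMD Ryzen R1600 has no integrated graphics, an empty result is the expected outcome on that model.

```python
import os
from typing import Callable, Iterable, List

# Device nodes the docker run command in the post tries to pass through.
DRI_NODES = ("/dev/dri/renderD128", "/dev/dri/card0")


def device_flags(nodes: Iterable[str] = DRI_NODES,
                 exists: Callable[[str], bool] = os.path.exists) -> List[str]:
    """Return docker `--device host:container` flags for nodes present on the host.

    Missing nodes are skipped, so `docker run` does not abort with
    "no such file or directory"; `exists` is injectable for testing.
    """
    flags: List[str] = []
    for node in nodes:
        if exists(node):
            flags += ["--device", f"{node}:{node}"]
    return flags


if __name__ == "__main__":
    flags = device_flags()
    if not flags:
        print("No /dev/dri nodes on this host: hardware transcoding is not available")
    else:
        print(" ".join(flags))
```

The printed flags could be spliced into the `docker run` command; keeping the check separate makes the "no iGPU on this host" case explicit rather than surfacing as a Container Manager API failure.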

-

Hello all, I've read through every post and, like many others, am trying to get transcoding back on 11th Gen Intel bare metal. I previously had QSV working just fine on DS918 with ARPL, but upgraded to more cores and may have jumped too quickly to DS3622xs (which doesn't support QSV). Is SA6400 the way to go, and if so, which version? Currently on ARPL DS3622xs 7.2-64570-3. Thanks in advance.

-

Hi. I am giving Video Station a try, and it does work: it indexes videos and they play OK. But when I open the settings to change some parameters (activate transcoding and add a TMDB API key), I cannot save them. I press OK and nothing happens. When I switch to another tab (Library, Privileges, DTV, etc.), a pop-up warns me that if I leave that tab the changes won't be saved, and indeed they are not saved. What am I missing? My build is an ASRock Q1900-ITX with a 2TB WD Red HDD. Everything else seems to work fine. Can anyone help me, please? Thanks.

-

Transcoding WITHOUT a valid serial number

darknebular replied to likeadoc's topic in Software Modding

With Alex Presso's wrapper, you can't play HEVC 4K + TrueHD 7.1, you don't get 5.1 when transcoding is needed, and movies with AAC 5.1 don't fully work. Media Server needs a separate wrapper when using Alex Presso's, because his wrapper only patches Video Station. If you had license issues, Alex's installer may cause long-term system issues even after it has been uninstalled, because it doesn't check the license status of AME. He is fixing these problems in his installer now. Please uninstall everything and do a clean install; if your NAS performs well, I recommend the Advanced Wrapper.

-

The only limitation of Device-Tree-based models like the DS920+ (Geminilake) is the inability to use HBA (SAS RAID) controllers. It is also an Intel CPU-based model, which many users who want transcoding choose. If you want to use VMM, it is recommended to stay on an Intel-based platform.

-

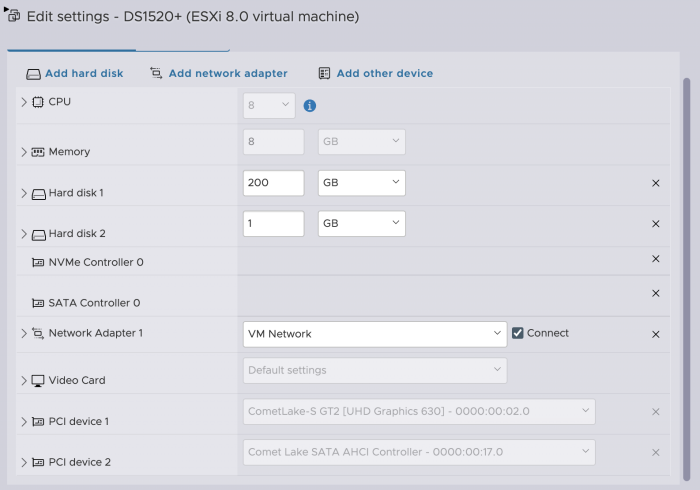

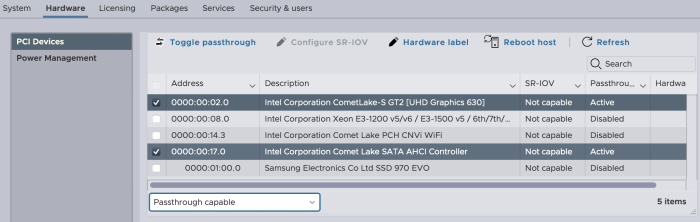

Hey, so I haven't followed this tutorial, since I ended up using ESXi for testing purposes and was astonished by what I was able to achieve. My main reason was to get hardware transcoding, and as it turns out, an Optiplex 5080 cannot get the i915 driver activated: it always crashes when the driver loads. So I figured I'd set up Linux for Jellyfin and then DSM... but I discovered that PCI passthrough of anything worked in DSM...

I installed ESXi on a 512GB NVMe SSD, which is also used for the datastore. I downloaded the Arc loader vmdk flat version (https://github.com/AuxXxilium/arc/releases), added it to the datastore (in the DS1520+ folder, since the image gets modified), and set it up as an existing hard drive on a virtual SATA controller. Then I created a 200 GB hard drive and placed it on a virtual NVMe controller (for cache). You can create 2x200GB disks on the same SSD for read and write cache, but I've read horror stories of people losing their volume if/when an SSD fails. Save the configuration.

Next, enable the SATA controller as a passthrough device (raw access to disks, SMART, possible to boot bare metal without having to change anything, etc.):

```
[root@ESXi:~] lspci
0000:00:17.0 SATA controller: Intel Corporation Comet Lake SATA AHCI Controller
[root@ESXi:~] lspci -n
0000:00:17.0 Class 0106: 8086:06d2
[root@ESXi:~] vi /etc/vmware/passthru.map
```

I added the following (corresponding info at the bottom of the file):

```
# Intel SATA CONTROLLER
8086 06d2 d3d0 false
```

After a reboot, I can toggle passthrough for both of the PCI devices I care about. Back in the VM configuration, add two PCI devices: the GPU and the SATA controller. Save and start the machine, then follow the normal process to set up DSM; it uses the hard drives attached to the SATA controller, just like bare metal.

Now, the Arc loader has an add-on for patching the i915 driver, but it wouldn't work for me, so I did it manually using this patch (attached) made by a Chinese modder (https://imnks.com/6421.html).
So I ran the patch:

```
Optiplex_fan@Syno5080:/volume1/docker$ sudo python3 mod_i915_id-0913.py
Password:
[I] 请输入4位十六进制显卡ID(eg:7270):9bc8      <<= Here I entered the PCI_ID of my GPU
[I] 备份 /usr/lib/modules/i915.ko 到 /usr/lib/modules/i915.ko.bak
[I] 替换 9bc8 到 /usr/lib/modules/i915.ko 的 906784 位置
[I] 删除 /usr/lib/modules/i915.ko 的 2144968 位置的校验
[I] 修改完成, 请重启确认, (源文件备份到/usr/lib/modules/i915.ko.bak, 如有问题请还原).
```

Translation:

```
[I] Please enter a 4-digit hexadecimal graphics card ID (e.g., 7270): 9bc8
[I] Backup /usr/lib/modules/i915.ko to /usr/lib/modules/i915.ko.bak
[I] Replace 9bc8 at position 906784 in /usr/lib/modules/i915.ko
[I] Remove validation at position 2144968 in /usr/lib/modules/i915.ko
[I] Modification completed. Please restart to confirm. (The original file is backed up to /usr/lib/modules/i915.ko.bak. If any issues arise, please restore the backup.)
```

And rebooted the VM! Upon reboot, the GPU is available:

```
optiplex_fan@Syno5080:/$ ls /dev/dri
by-path  card0  renderD128
```

In Jellyfin, transcoding works fine, at up to 200+ fps. So while the bare-metal installation wouldn't work, passthrough with ESXi solved the problem. Bonus: I can create other VMs :p

Attachment: mod_i915_id-0913.py
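
The passthru.map entry in the post is derived by hand from the `lspci -n` output: vendor `8086` and device `06d2`, followed by `d3d0` and `false`. A small sketch of that mapping; the function names are hypothetical, the reading of the last two columns as reset method and fptShareable is our interpretation of the passthru.map header, and the `d3d0`/`false` defaults are simply copied from the post rather than recommended for every device. The same vendor:device pair from `lspci -n` is also where a GPU device ID such as the `9bc8` entered into the i915 patch comes from.

```python
import re
from typing import Tuple

# Matches ESXi `lspci -n` lines such as "0000:00:17.0 Class 0106: 8086:06d2"
LSPCI_N = re.compile(
    r"^(?P<addr>\S+)\s+Class\s+(?P<cls>[0-9a-fA-F]{4}):\s+"
    r"(?P<vendor>[0-9a-fA-F]{4}):(?P<device>[0-9a-fA-F]{4})"
)


def parse_ids(lspci_line: str) -> Tuple[str, str]:
    """Return (vendor_id, device_id) as lowercase hex strings."""
    m = LSPCI_N.match(lspci_line.strip())
    if m is None:
        raise ValueError(f"unrecognised `lspci -n` line: {lspci_line!r}")
    return m.group("vendor").lower(), m.group("device").lower()


def passthru_entry(lspci_line: str, reset_method: str = "d3d0",
                   shareable: str = "false") -> str:
    """Format one passthru.map line: vendor, device, reset method, shareable flag."""
    vendor, device = parse_ids(lspci_line)
    return f"{vendor} {device} {reset_method} {shareable}"


if __name__ == "__main__":
    print(passthru_entry("0000:00:17.0 Class 0106: 8086:06d2"))  # 8086 06d2 d3d0 false
```

The line still has to be appended to /etc/vmware/passthru.map and the host rebooted, as described in the post.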

-

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

It sounds like you want to use both transcoding and an HBA. As of now, the DS918+ is the only model that supports both. However, unlike Jun's loader, with which HBAs were used up to DSM 6.2.3, this model has a problem: the disk's serial number is not displayed properly in S.M.A.R.T. If the disks are used in a RAID, this can be fatal, because when a bad disk is replaced, the disk to pull is identified by its serial number. So if you are considering RAID, we recommend adding a SATA controller that supports AHCI instead of an HBA, and using the DS920+ or DS1520+, which support automatic disk mapping. This symptom is also common to the other RedPill loaders covered here.

-

Great stuff on this forum! Is there a bootloader that supports all of the following at the same time?

1. 10th gen Intel CPU
2. LSI SCSI controller
3. Hardware acceleration for transcoding with Intel Graphics
4. NVMe cache (read-only)

Keep up the good work, guys!

-

Hi guys! I have a pretty new build for my main XPEnology and I'm trying to figure something out. Is there currently a bootloader that supports:

1. 10th gen Intel CPU
2. LSI SCSI controller
3. Hardware acceleration for transcoding with Intel Graphics
4. NVMe cache (read-only)

I've based my new build on a 10th gen Intel i3-10100 in order to use it for hardware-accelerated transcoding in Plex. I'm a few days away from collecting an LSI 9707 8i, which I'll base my new XPEnology on. I'm also in the market for an NVMe SSD to use as a read-only cache. Can anybody advise on a bootloader/DS model to use?

-

Hardware Transcoding - Xpenology DS918+ / Bootloader 1.04b

iamcytrox posted a question in General Questions

Hey guys, I am currently trying to get HW transcoding running. I've built my own NAS with the following specs: CPU: Intel Core i3 6100, MB: Asus P10S-i. I originally installed 6.2 with bootloader 1.04b, and since yesterday I have been on 6.2.3-25426 Update 3. I tried to use HW transcoding with Plex, but it only used software transcoding. I've searched the forum and tried to figure out a way... As far as I understand it, there needs to be a driver for the iGPU, right? And when everything is set up correctly, there should be a directory called dri in /dev, right? Well, there isn't one, and I really want to use HW transcoding for my media library. EDIT: cat /usr/syno/etc/codec/activation.conf isn't working either. Thanks in advance -Yannik

-

I'm like, wtf... I booted ESXi, loaded the Arc loader, installed a DS918+ with GPU passthrough, modded the i915.ko, and I get hardware transcoding working. I'm going to try the DS918+ on bare metal and see what it does, instead of sticking to the DSxx20+ series... EDIT: no go on DS918+ bare metal :-/

-

If you don't need hardware transcoding, you can choose any modern CPU. So yes, Intel 12th or 13th gen will work absolutely fine. One other issue people run into is the LAN chip on the motherboard, so choose the motherboard accordingly.

-

Hi @blackmanga, it may be a silly question, but I really need some guidance. I am planning to build a custom Xpenology NAS using the ARC loader. I currently have a machine with an Intel i5 7400, but I want to move to at least a 10th gen i3 or i5. I started looking for an i5-10400T, but for some reason I can't find any new processors for sale (even T versions of other 10th gen CPUs aren't available in my country). So my question to you is: is Xpenology compatible with the latest 12th or 13th gen i3/i5 processors? If 12th/13th gen are out of the question, can I at least get a 10th gen CPU like yours and not apply any patches? In my case I don't need hardware transcoding. Are there other aspects of the CPU (other than hardware transcoding) that are not compatible with Xpenology DSM 7.2?