flyride

Moderator

Posts

2,438

Days Won

127

Everything posted by flyride

-

https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/

-

To be a little bit more specific: Practice installing on a spare drive until you understand the process completely and you have a combination of USB stick, DSM version and XPe loader that you are certain works with your hardware. Then burn a new clean stick, and do the initial boot with your three array disks attached. The Synology installer should offer to do a migration install. If it suggests that your data is being overwritten in any way, don't proceed (you're picking the wrong option). This is the only way to keep all your settings in place. It is possible to import an array into a running DSM system but it will only preserve your data, not your settings.

-

ECC is a hardware memory correction scheme that is implemented by the RAM and motherboard, and is not functionally visible to operating systems. So, yes, the memory type does not matter to DSM. ECC is definitely better, but it is not supported by most non-server motherboards. I believe we will soon see ECC become ubiquitous again, as memory density is getting so high that error frequency is becoming statistically significant.

Yes, that is DSM core functionality (Linux "NUT") and works fine when running DSM with XPe.

-

First off, RAID is an algorithm that takes several different forms involving data redundancy. So "hardware" is implementing this algorithm just like software. If a motherboard provides RAID services, it is doing so with the CPU, using code in the BIOS. This isn't any more "hardware" than DSM. If a dedicated RAID controller is providing services, it is doing so with a microcontroller or ASIC.

A long time ago, CPUs were not fast and the computational load was such that the dedicated hardware approach was faster and better. Those days are long past, and now a modest CPU can more than keep up with a typical RAID workload at network interface speeds. Also, a "software" approach like the one DSM uses allows much more sophisticated error management, data recovery and additional features like those we get with btrfs.

Therefore, for XPEnology and DSM, to achieve the best functionality, performance and reliability, it is best NOT to use RAID services from "hardware" but to give the DSM operating system direct access to the disks.
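To make the "RAID is an algorithm" point concrete, here is a minimal sketch of single-parity redundancy (the idea behind RAID 5) in Python. It is only an illustration: the function names and the sample stripe are made up for this example, and it is not how DSM or Linux md actually lay out data on disk.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, one byte column at a time."""
    if not blocks:
        raise ValueError("need at least one block")
    length = len(blocks[0])
    if any(len(b) != length for b in blocks):
        raise ValueError("all blocks must be the same length")
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


def parity(data_blocks):
    """Parity block for one stripe: the XOR of all data blocks."""
    return xor_blocks(data_blocks)


def rebuild(surviving_blocks, parity_block):
    """Recover the single missing data block from the survivors plus parity."""
    return xor_blocks(list(surviving_blocks) + [parity_block])


# Hypothetical stripe spread across three data disks (illustrative values only).
stripe = [b"disk0 data", b"disk1 data", b"disk2 data"]
p = parity(stripe)

# Pretend disk 1 failed: rebuild its block from the other two and the parity.
recovered = rebuild([stripe[0], stripe[2]], p)
```

The whole scheme is a handful of XOR operations per byte, which is why a modest general-purpose CPU has no trouble keeping up with it.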

-

Read up a little on hypervisors. ESXi, Proxmox VE, KVM, XenServer, etc. are all hypervisors, which run as the initial boot environment (before an operating system). Hypervisors manage virtual machines, each of which is completely separate and independent of the others. DSM is then run as a virtual machine workload within the hypervisor environment. We can support NVMe services because they are managed completely outside of the DSM VM.

DSM has the ability to act as a hypervisor (VMM), but this is not useful for supporting NVMe drives (since DSM doesn't support them in the first place).

Docker is not a hypervisor; it's an aliased environment within Linux. Docker apps share the same Linux working environment. You might think of it as working in the same way as a hypervisor, but it is quite different.

It is possible to import your baremetal XPe DSM system into an ESXi VM, but that is a technically demanding task and you should be very familiar with XPe and running it under ESXi before attempting it. So yes, you would probably need to plan to "start over" and import your data once everything was set up and tested under ESXi.

-

M.2 is a multi-purpose interface, and its implementation depends on the motherboard or host card. Some or all of the following may be true:

Most people think of M.2 as an interface to an NVMe SSD, but in reality the NVMe card is a PCIe device. In that respect, M.2 is a PCIe slot in a different form, and an NVMe SSD is simply a PCIe device that fits into the M.2 slot form factor.

The M.2 slot can also be mapped to an M.2 SATA SSD and internally connected over the same edge connector to a SATA HBA. Assuming it is supported by the motherboard or card, an M.2 SATA SSD should work fine with DSM as it is seen as a SATA device.

A PCIe adapter with multiple M.2 slots is simply a PCIe switch or bifurcator. For what it's worth, you can buy M.2 to PCIe adapters as well.

You already know that an M.2 NVMe SSD cannot be natively used by DSM as a regular disk, just as cache (with a patch). Your best functional option to use NVMe with DSM is to install DSM within a hypervisor (ESXi), which can then virtualize and present the NVMe storage to DSM as a VMDK (virtual disk image) or RDM (raw device mapping). RDM is preferable as it performs better, gives you 100% of the space, and the device can be directly attached to a virtual SATA controller so it appears as SATA to DSM.

-

Yes.

-

Tutorial/Reference: 6.x Loaders and Platforms

flyride replied to flyride's topic in Tutorials and Guides

Make sure you are using DSM 6.2.3 and following the CSM boot rules that are required for 1.03b. Also, the supported Intel emulated NIC is e1000e, not e1000.

-

Full disk devices attached to a VM via passthrough or RDM are fully portable to a baremetal instance of DSM. If you install a hypervisor filesystem on those disks and then create virtual disks on that filesystem, you are dependent upon that hypervisor OS to get to the data. I'm not sure if that answers your question.

-

My understanding (perhaps dated) was that there was no suitable NIC available under Hyper-V. Microsoft chose not to emulate any of the popular NICs XPE uses because of course they did not need them - since they control the underlying OS.

-

You should be aware that just because a slot is M.2 does not mean it supports M.2 SATA. It's up to the motherboard or other device. For example, my X570 motherboard supports two M.2 NVMe slots but they do not work with M.2 SATA. However, my Supermicro server board does support both on its slot. So check before buying M.2 SATA SSDs.

-

Try upgrading to at least 6.1.7 (no change needed to your loader as long as you stay on 6.1.x) and add extra.lzma if necessary. Aquantia is supported, but the ASUS card uses newer silicon than the default driver supports.

-

M.2 SATA drives should be seen by DSM as regular disks. Sometimes there are limitations on motherboards where a physical SATA port will be disabled when M.2 SATA is active (nothing to do with XPE). I suppose it's possible that there could be a controller dedicated to the M.2 SATA that would have to be addressed with extra.lzma, but that seems unlikely.

-

It's arguably better to have a separate and dedicated device for VPN termination. Best practice would actually have the VPN terminating at your perimeter. Can you run Wireguard on your firewall?

-

The installation is exactly the same as 6.1.7. You are currently using a 1.02 version of the loader, so you will need to boot with 1.03b or 1.04b and do a migration install of 6.2.3. Which one depends on your hardware and the features you want: https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/

If there are no new features you need, you will gain nothing by upgrading.

-

Try e1000e
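If the VM in question is a VMware/ESXi guest (an assumption, since the hypervisor isn't named here), the adapter type is stored in the VM's .vmx file as `ethernetN.virtualDev`. The following is a rough Python sketch that scans .vmx text and lists any adapters not set to e1000e. The helper names and the sample contents are made up for illustration.

```python
import re

# Matches lines such as: ethernet0.virtualDev = "e1000e"
_NIC_LINE = re.compile(
    r'^\s*(ethernet\d+)\.virtualDev\s*=\s*"([^"]*)"\s*$', re.IGNORECASE
)


def nic_types(vmx_text):
    """Return {adapter name: adapter type} for each virtualDev line found."""
    found = {}
    for line in vmx_text.splitlines():
        match = _NIC_LINE.match(line)
        if match:
            found[match.group(1).lower()] = match.group(2).lower()
    return found


def wrong_nics(vmx_text, wanted="e1000e"):
    """List adapters whose declared type differs from the wanted one.

    Adapters with no virtualDev line at all are not reported.
    """
    return sorted(
        name for name, kind in nic_types(vmx_text).items() if kind != wanted
    )


# Hypothetical .vmx excerpt, for illustration only.
SAMPLE_VMX = '''
ethernet0.present = "TRUE"
ethernet0.virtualDev = "e1000"
ethernet1.virtualDev = "e1000e"
'''

print(wrong_nics(SAMPLE_VMX))
```

In practice you would normally just change the adapter type in the VM's settings UI; the script is only a quick way to check several VMs at once.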

-

Get "what" working? QuickConnect is a suite of services that are provided as a cloud offering from Synology. You did not pay for them so you should not try and use them. If you want remote access services, then VPN or locally hosted reverse proxy are your main options. If you want DDNS, you can easily configure a non-Synology service using the DSM DDNS client or another client. Be specific in your question and you'll get better responses. In any case, there are many examples posted here to solve all the problems you are likely to pose.

-

Here are your board's specs: https://www.kontron.com/products/boards-and-standard-form-factors/motherboards/uatx/d3643-h-uatx.html

The Intel i219LM embedded NIC on the B360 chipset will likely require an extra.lzma with newer Intel drivers: https://xpenology.com/forum/forum/91-additional-compiled-modules/

Alternatively, buy a $20 Intel CT PCIe 1Gbps card and test with that.

-

Congrats on working through it. Multiple overlapping issues are always hard to unravel.

-

I can address #2: unless Syno has recently changed things, the fan speed, beep and LEDs are controlled via a tty connected to a proprietary PCB. Some trial and error sending bitmasks may get you what you need, and there is a limited amount of example data online.

-

Don't assume that a device cannot be passed through just because it doesn't appear in the passthrough menu by default. I had to configure this manually for one of my SATA controllers: https://www.truenas.com/community/threads/configure-esxi-to-pass-through-the-x10sl7-f-motherboard-sata-controller.51843/

-

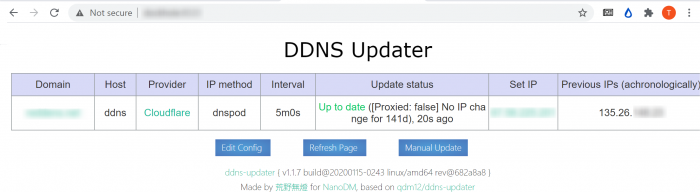

I'm sorry you are having so much trouble. Needless to say, many people are successfully using DDNS, both the Synology client and others. Keep at it and you will find a solution. Some of the free options are problematic, however.

For what it's worth, I am using this docker: https://hub.docker.com/r/80x86/ddns-updater

This is specifically because it supports Cloudflare as my DDNS provider, as I use Cloudflare to host my domains (I DDNS my home systems to one of my domains). But it supports a number of free and paid services.
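For anyone curious what a DDNS client does under the hood, here is a minimal Python sketch of the Cloudflare case: it builds the API request that overwrites an A record with a new IP. This is not how the docker image above is implemented. The zone ID, record ID, token, hostname and IP are placeholders, and the request is only constructed here, not sent.

```python
import json
import urllib.request

API = "https://api.cloudflare.com/client/v4"


def needs_update(current_ip, last_ip):
    """Only call the API when the public address actually changed."""
    return current_ip != last_ip


def build_update_request(zone_id, record_id, token, hostname, ip):
    """Build (but do not send) a request that overwrites one A record."""
    body = json.dumps(
        {"type": "A", "name": hostname, "content": ip, "ttl": 300, "proxied": False}
    ).encode("utf-8")
    return urllib.request.Request(
        f"{API}/zones/{zone_id}/dns_records/{record_id}",
        data=body,
        method="PUT",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder values for illustration; substitute your own zone, record and token.
req = build_update_request(
    "ZONE_ID", "RECORD_ID", "API_TOKEN", "home.example.com", "203.0.113.7"
)
```

Sending it would be a matter of passing `req` to `urllib.request.urlopen` on a timer, after looking up the current public IP by whatever means you trust and checking `needs_update` first.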

-

What DDNS provider are you using? If you want help you have to provide some information about what you are doing.

-

https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/