flyride
Moderator

Posts: 2,438
Days Won: 127

Everything posted by flyride

-

Doesn't matter

-

External HDDs detected as internal HDD after synoinfo.conf edit.

flyride replied to Triplex's question in General Questions

All array disks are redundancy disks. If DSM boots and there are not enough disks available to start the array, no change is made to the array, and once the problem is corrected so that the array can start, it will come up in a normal state. If there are enough disks to start the array (given its redundancy), the array is started and subsequently modified, and the disks left out of it are no longer valid. Therefore, the array must be rebuilt to restore them. IMHO, "strain on disks" is poppycock, with the exception of all-flash arrays, where a rebuild will use up some of the SSDs' write endurance.
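To see whether an array is running degraded (members left out and waiting for a rebuild), the member map at the end of each array's status line in `/proc/mdstat` shows a `_` for every missing disk. A minimal sketch, assuming the usual mdstat layout; it only reports md2 and higher, on the assumption that those are the Storage Pool arrays, and `degraded_data_arrays` is a hypothetical helper name.

```sh
# Print each data array (md2 and up) whose member map, e.g. [UUU_],
# shows at least one missing member. Reads /proc/mdstat by default.
degraded_data_arrays() {
    awk '
        # Array header line, e.g. "md2 : active raid5 sda3[0] sdb3[1] ..."
        /^md[0-9]+ :/ { name = $1; next }
        # First following line that ends in a member map such as [UUU_]
        name != "" && $NF ~ /^\[[U_]+\]$/ {
            if (substr(name, 3) + 0 >= 2 && $NF ~ /_/) print name
            name = ""
        }
    ' "${1:-/proc/mdstat}"
}
```

Run `degraded_data_arrays` on the DSM shell; no output means no data array is missing a member.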

"Invalid raid superblock magic" in dmesg is "normal" for any Storage Pool array member device. At that point in the boot, the kernel is trying to start the /dev/md0 (DSM system) and /dev/md1 (swap) arrays, which use the v0.90 superblock format, so it rejects the v1.2 superblocks of the data arrays. The Storage Pool arrays are started later in the boot. tty devices are used for the serial port console, and also to control the custom beep and LED PCBs on a real Synology. It looks like DSM may be generating an error message while trying to write to that custom PCB port. Can you log in via serial and see if your arrays are started? If so, you just have a network issue.
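If you want to confirm which superblock format a member partition carries, `mdadm --examine` prints a `Version :` line. A minimal sketch that classifies that line, assuming the usual `mdadm --examine` layout; the labels it prints are illustrative shorthand for the split described above (v0.90 for the system and swap arrays, v1.x for Storage Pool arrays), and `superblock_kind` is a hypothetical name.

```sh
# Read `mdadm --examine <partition>` output on stdin and report which
# superblock format the partition carries.
superblock_kind() {
    awk -F' : ' '
        # Metadata line, e.g. "        Version : 1.2" or "Version : 0.90.00"
        $1 ~ /^ *Version$/ {
            if ($2 ~ /^0\.90/)    print "v0.90 (system/swap style)"
            else if ($2 ~ /^1\./) print "v" $2 " (Storage Pool style)"
            else                  print "unknown (" $2 ")"
            exit
        }
    '
}
```

Usage, with an example partition name: `mdadm --examine /dev/sda3 | superblock_kind`.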

-

Most J3xxx/4xxx/5xxx systems need a modification to the loader in order to work with 6.2.1 and 6.2.2. If you modified the loader with extra.lzma or the "real3x" mod, you will need to revert it by restoring the loader's original configuration while DSM is running. Then apply the 6.2.3 update immediately, before rebooting. See details here: https://xpenology.com/forum/topic/28131-dsm-623-25423-recalled-on-may-13/?do=findComment&comment=141604 If you are running now without a loader modification, you should be able to upgrade with no other actions. If for some reason you lock yourself out while trying to upgrade, just do a migration install with a clean 1.04b loader and install 6.2.3 directly; that should work fine.
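Before deciding which path applies, it helps to confirm exactly which DSM version is running. A minimal sketch, assuming DSM keeps its version in `/etc.defaults/VERSION` as `key="value"` lines with a `productversion` key (check the file on your own system first); both helper names are hypothetical, and the version rule is just the one stated above.

```sh
# Print the productversion value (e.g. 6.2.2) from a DSM VERSION file.
dsm_product_version() {
    sed -n 's/^productversion="\(.*\)"$/\1/p' "${1:-/etc.defaults/VERSION}"
}

# Succeed (return 0) for the versions where, per the post above, J-series
# CPUs typically need a modified loader: 6.2.1 and 6.2.2.
needs_jseries_loader_mod() {
    case "$1" in
        6.2.1|6.2.2) return 0 ;;
        *)           return 1 ;;
    esac
}
```

For example: `needs_jseries_loader_mod "$(dsm_product_version)" && echo "revert the loader mod, then apply 6.2.3 before rebooting"`.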

-

Please post your upgrade problem as a new question, this thread is not for troubleshooting upgrades. Thanks!

-

The Control Panel CPU information is hardcoded to the DSM platform and is cosmetic. Your system is actually using all the cores and hyperthreads. Changing to another platform will only net you more performance if you also change to a chip with more than 4 cores. Whether you should change to another platform or version is up to you and the features you want. Refer to the capabilities table here: https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/ It is possible to change DSM versions and keep your existing data. It is also possible to make a mistake and make your data inaccessible. The most common problem is some incompatibility or procedural error that leaves the system unable to boot after the upgrade. Your data is safe, but then you have to work through the problem. Some folks are unable to figure out the problem and elect to install from scratch, either ignoring or damaging their data in the process.
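To see what the kernel actually uses, regardless of the model string in Control Panel, count the entries in `/proc/cpuinfo`. A minimal sketch with hypothetical helper names; `physical_cores` assumes the x86 `physical id` and `core id` fields are present, which may not hold inside every VM (it prints 0 when they are missing).

```sh
# Logical CPUs (cores x hyperthreads) the kernel schedules on.
logical_cpus() {
    grep -c '^processor' "${1:-/proc/cpuinfo}"
}

# Physical cores: unique "physical id"/"core id" pairs.
physical_cores() {
    awk -F': ' '
        /^physical id/ { phys = $2 }
        /^core id/     { cores[phys ":" $2] = 1 }
        END { n = 0; for (k in cores) n++; print n }
    ' "${1:-/proc/cpuinfo}"
}
```

On a 4-core CPU with hyperthreading you would expect `logical_cpus` to be twice `physical_cores`.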

-

I have never had problems with Mellanox cards. However, I have ALWAYS kept a 1GbE Ethernet port active in the system (even if nothing is plugged into it). If you have only 10GbE, this may be an issue. To be specific, I have personally tested the following combinations:

- Baremetal DS3615xs 6.1.7, Mellanox ConnectX-2 dual
- Baremetal DS3615xs 6.1.7, Mellanox ConnectX-3 dual
- ESXi passthrough DS3615xs 6.1.7, ConnectX-3 dual
- ESXi passthrough DS3615xs 6.2.x, ConnectX-3 dual
- ESXi passthrough DS3617xs 6.2.x, ConnectX-3 dual
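To check which NICs DSM has picked up and which driver owns each one (for example, a 1GbE port on `e1000e` or `igb` next to a Mellanox card on `mlx4_core`), the driver symlink in sysfs can be read. A minimal sketch, assuming the standard `/sys/class/net/<iface>/device/driver` layout; `nic_drivers` is a hypothetical name, and interfaces with no backing device, such as `lo`, are skipped. Ports are listed even when nothing is plugged in.

```sh
# Print "<interface> <driver>" for every interface backed by a real device.
nic_drivers() {
    base=${1:-/sys/class/net}
    for dev in "$base"/*; do
        # Skip lo and other virtual interfaces, which have no device/driver link.
        [ -e "$dev/device/driver" ] || continue
        drv=$(readlink "$dev/device/driver")
        printf '%s %s\n' "${dev##*/}" "${drv##*/}"
    done
}
```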

-

Again, if you read the feedback, using the RAID controller to manage the volume is counterproductive in a DSM environment. #3 is preferable, but your controller needs to be able to address and present the drives individually, which isn't typical for a RAID controller. If it is not an AHCI-compliant controller, you will need driver support in DSM, which may be problematic. #2 is probably the next best choice, although I'm not 100% sure how you are doing this. RDM? I am not aware that you can "passthrough" individual disks. #1 is the least beneficial to you. RAID5 would be managed by the controller, not ESXi, so any problems with your array cannot be solved by DSM or ESXi, nor is there an easy path to add disks.
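One quick way to tell whether DSM is seeing the drives individually (option #3) or a single controller-managed volume (option #1) is to list the whole-disk devices the kernel exposes. A minimal sketch, assuming the disks show up as `sdX` under `/sys/block`; the `size` file there is always in 512-byte sectors, so dividing by 2097152 gives GiB (rounded down). `list_disks` is a hypothetical name.

```sh
# Print "<disk> <size in GiB>" for each sdX block device.
list_disks() {
    base=${1:-/sys/block}
    for dev in "$base"/sd*; do
        [ -e "$dev/size" ] || continue
        sectors=$(cat "$dev/size")     # 512-byte sectors
        printf '%s %s\n' "${dev##*/}" "$((sectors / 2097152))"
    done
}
```

One large device instead of one per physical drive means the controller is still presenting a volume.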

-

dsm goes into recovery everytime i reboot the esxi host.

flyride replied to aniel's question in General Questions

I don't have other ideas at the moment, but I don't recall seeing you address this suggestion?

-

This is only possible on the DS918+ DSM image with a patch; search for "NVMe patch" and you will find it. Normal rules apply: one NVMe SSD = read-only cache, two NVMe SSDs = read/write cache. SSD cache is quite overrated: it quickly wears out SSDs, and adding RAM is often as effective with typical workloads. But if you really want to do it, it is relatively straightforward. I haven't tested the M2D18, but it ought to work, at least with the patch.
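After applying the patch, you can check how many NVMe devices the kernel exposes and which cache type that allows under the rule above. A minimal sketch, assuming NVMe namespaces appear as `nvmeXnY` under `/sys/block`; `nvme_cache_mode` is a hypothetical name, and it only counts devices, it does not configure anything.

```sh
# Count NVMe namespaces and map the count to the cache rule:
# one SSD = read-only cache, two or more = read/write cache.
nvme_cache_mode() {
    base=${1:-/sys/block}
    n=0
    for dev in "$base"/nvme*n*; do
        [ -e "$dev" ] && n=$((n + 1))
    done
    case $n in
        0) echo "no NVMe devices visible" ;;
        1) echo "read-only cache possible" ;;
        *) echo "read/write cache possible" ;;
    esac
}
```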

-

Investigate. See if the daemon is running, and which port it is attached to:

    $ sudo -i
    # ps -ef | grep "/usr/bin/sshd"
    # netstat -tulpn | grep "sshd"
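To pull just the listening port(s) out of the `netstat -tulpn` output, something like the sketch below works, assuming the usual net-tools column layout (local address in column 4, state in column 6, PID/program in column 7). `sshd_ports` is a hypothetical name. Run it as root, otherwise the program column shows `-` and nothing matches.

```sh
# Read `netstat -tulpn` output on stdin and print the unique port
# numbers on which a process named sshd is listening.
sshd_ports() {
    awk '$6 == "LISTEN" && $7 ~ /\/sshd/ {
        n = split($4, parts, ":")    # "0.0.0.0:22" or ":::22"
        print parts[n]
    }' | sort -un
}
```

Usage: `netstat -tulpn | sshd_ports`. Empty output means sshd is not listening on any TCP port.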

-

Do you have more than one NIC in the system?

-

Installing Python 3.7 or better under DSM 6.0.2

flyride replied to Akhlan's question in General Questions

Docker with all the middleware embedded?

-

dsm goes into recovery everytime i reboot the esxi host.

flyride replied to aniel's question in General Questions

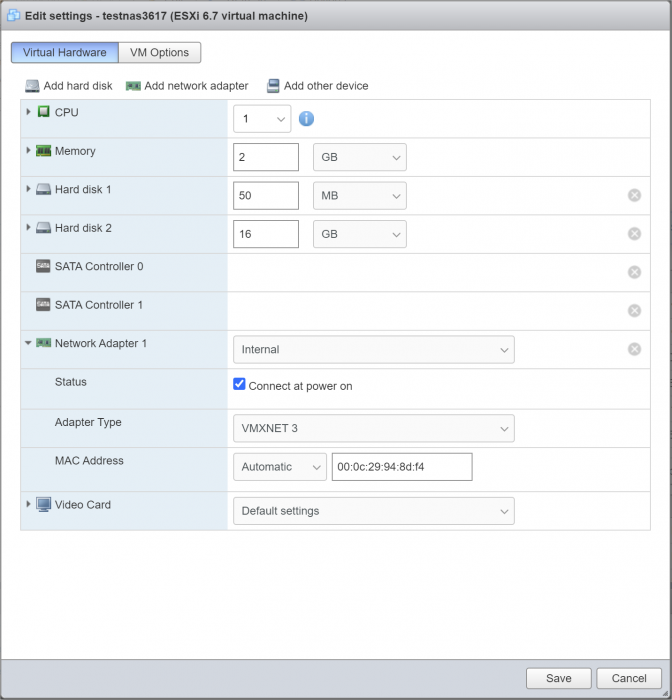

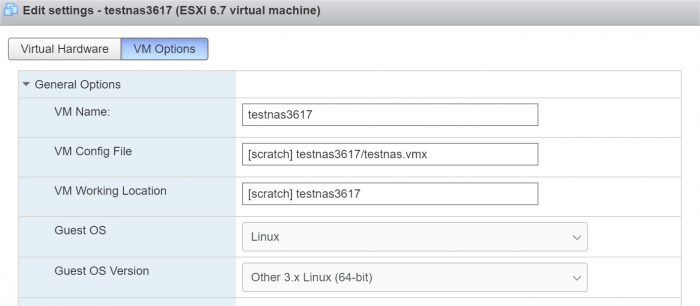

Not much theory here. Maybe OP can double-check this. Also, maybe check the automatically assigned VMXNET3 MAC address and edit the grub config to match it, or change the auto-assigned MAC address to the value in the loader. It shouldn't matter, but you never know, I guess. Finally, maybe build a virtual disk for testing, since all the installed copies of DSM are on the passthrough SATA. Maybe it is bouncing up and down and the loader can no longer see the disks, which would result in a reinstall prompt.
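For the MAC comparison, ESXi shows addresses as `00:0c:29:xx:xx:xx`, while the loader's grub config is commonly edited as a `mac1` value of 12 hex digits with no separators (an assumption; check your own `grub.cfg`). A minimal sketch that normalizes both forms so they can be compared; the helper names are hypothetical.

```sh
# Convert a MAC such as 00:0c:29:ab:cd:ef into 12 upper-case hex digits
# with no separators (the form assumed for the loader's mac1 value).
mac_for_grub() {
    printf '%s\n' "$1" | tr -d ':-' | tr 'abcdef' 'ABCDEF'
}

# Succeed if the VM's MAC and the loader's value are the same address.
mac_matches() {
    [ "$(mac_for_grub "$1")" = "$(mac_for_grub "$2")" ]
}
```

For example: `mac_matches "00:0c:29:ab:cd:ef" "000C29ABCDEF" && echo match`.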

dsm goes into recovery everytime i reboot the esxi host.

flyride replied to aniel's question in General Questions

vmxnet3 is present in all of Jun's loaders. It doesn't work in 6.2.1/6.2.2, which is why folks were directed at the time to use e1000e. Maybe you meant something else?

-

FULL Hardware list support 6.1.X quicknick 3.0 DS916+ PCI

flyride replied to Knight_Lore's topic in DSM 6.x

I did something similar with the inventories I posted a couple of years back. I never found the various online PCI library resources to be complete with regard to subids; I had to either go through the driver source code or try to filter the .ko binaries. It took a long time to get a complete picture of the drivers represented in those posts:
https://xpenology.com/forum/topic/13922-guide-to-native-drivers-dsm-617-and-621-on-ds3615/
https://xpenology.com/forum/topic/14127-guide-to-native-drivers-dsm-621-on-ds918/
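For the .ko filtering, the PCI IDs a driver claims are embedded as modalias strings such as `pci:v00008086d000010D3sv*sd*bc*sc*i*`, which `modinfo -F alias driver.ko` prints on a Linux box that has `modinfo`. A minimal sketch that turns those strings into `vendor:device` pairs, adding `subvendor:subdevice` when the alias pins one down; it assumes the kernel's usual alias format (8 upper-case hex digits per ID, `*` for any), `pci_ids_from_aliases` is a hypothetical name, and this is not necessarily how the inventories above were built.

```sh
# Read modalias lines on stdin and print "vendor:device [subvendor:subdevice]".
pci_ids_from_aliases() {
    # Keep only pci: aliases and capture the v, d, sv and sd fields.
    sed -n 's/^pci:v\([^d]*\)d\([^s]*\)sv\([^s]*\)sd\([^b]*\)bc.*/\1:\2 \3:\4/p' |
        # Trim 8-digit IDs to 4 digits and drop a wildcard sub-ID pair.
        sed 's/0000\([0-9A-F]\{4\}\)/\1/g; s/ \*:\*$//' |
        tr 'ABCDEF' 'abcdef'
}
```

Usage: `modinfo -F alias e1000e.ko | pci_ids_from_aliases`.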

dsm goes into recovery everytime i reboot the esxi host.

flyride replied to aniel's question in General Questions

e1000e is limited to 1Gbps. But it can be changed at any time just by editing the VM configuration. And if the reason for 10GbE is expressly DSM support, you can pass the whole card through when you get it and have DSM's driver manage it as a second NIC, keeping the e1000e interface intact as a management-style interface, if that is what works for you.
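Editing the VM configuration is usually done in the ESXi UI. If you prefer the ESXi shell, a minimal sketch of the same change is below, assuming the VM is powered off and its first NIC is defined by an `ethernet0.virtualDev` line in the `.vmx` file; `set_vnic_type` is a hypothetical name, it keeps a `.bak` copy, and it refuses to add the key if it is missing. ESXi may need the VM reloaded (for example with `vim-cmd vmsvc/reload <vmid>`) before it picks up a hand-edited `.vmx`.

```sh
# Set the adapter type of ethernet0 in a .vmx file (e1000e or vmxnet3).
set_vnic_type() {
    vmx=$1 type=$2
    case "$type" in
        e1000e|vmxnet3) ;;
        *) echo "unsupported adapter type: $type" >&2; return 1 ;;
    esac
    # Refuse to guess if the key is absent rather than appending one.
    grep -q '^ethernet0\.virtualDev = ' "$vmx" ||
        { echo "no ethernet0.virtualDev in $vmx" >&2; return 1; }
    cp "$vmx" "$vmx.bak" || return 1
    sed "s/^ethernet0\.virtualDev = .*/ethernet0.virtualDev = \"$type\"/" "$vmx.bak" > "$vmx"
}
```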

dsm goes into recovery everytime i reboot the esxi host.

flyride replied to aniel's question in General Questions

"Wouldn't there be better performance between VMs with vmxnet3?" True, but anyone using multiple VMs with NFS-mounted services from DSM probably already understands this. The more typical connections from off-host clients will not benefit from vmxnet3 unless it is being used to enable ESXi native support of a 10GbE interface.

-

dsm goes into recovery everytime i reboot the esxi host.

flyride replied to aniel's question in General Questions

Do you have a 10Gb network interface? If not, e1000e is just fine and provides the same performance.

-

dsm goes into recovery everytime i reboot the esxi host.

flyride replied to aniel's question in General Questions

vmxnet3 works with 6.2.3, but not 6.2.1 or 6.2.2 -
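Putting the rules from these replies together (vmxnet3 does not work on 6.2.1/6.2.2, works on 6.2.3, and e1000e is fine unless you actually need 10Gb) gives the minimal sketch below. `suggest_vnic` is a hypothetical name; it only knows the versions mentioned here and falls back to e1000e for anything else.

```sh
# Suggest a virtual NIC type for a DSM version string such as "6.2.3" or
# "6.2.3-25426"; the second argument is "yes" if 10Gb is actually needed.
suggest_vnic() {
    version=$1 need_10g=$2
    case "$version" in
        6.2.1|6.2.1-*|6.2.2|6.2.2-*)
            echo e1000e ;;     # vmxnet3 does not work on these versions
        6.2.3|6.2.3-*)
            if [ "$need_10g" = yes ]; then echo vmxnet3; else echo e1000e; fi ;;
        *)
            echo e1000e ;;     # version not covered by these replies
    esac
}
```

On 6.2.1/6.2.2 with a real 10GbE need, passing the whole card through to DSM, as suggested earlier in this thread, is the alternative.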