Search the Community

Showing results for 'transcoding'.

-

I found a Chinese blogger who states that this does not work on some motherboards from MSI, Gigabyte and Rainbow (almost at the end of the page, in red). Maybe it's the same for my Dell motherboard 😕 Regarding Update 3: it was doing the same with Update 2. I have spent too much time on this already, so I think I'll go the ESXi route with one Linux VM running Jellyfin with HW transcoding, and another VM running DSM.

-

Everything worked out for me! (For some reason, the /dev/dri folder did not appear immediately after the patch and the DSM start, but after some time 🤪)

I restored it in this order (maybe some steps are unnecessary):
1) Arc loader / Configure / Update / Full upgrade
2) Build loader for another model - DS918+ (to clear all previous patches and addons), start DSM ("Migrate")
2.1) [optional] patching i915.ko for DS918+, reboot, check /dev/dri
3) Arc loader / Configure / Build loader for my previous model - DS920+, start/migrate
4) patching i915.ko for DS920+, reboot, wait, see /dev/dri - SUCCESS! (check HW transcoding in Jellyfin - working)

The patch was done using a hexadecimal editor by simply replacing the CPU ID 3E92 with my 3E98 and removing the driver signature at the end of file.
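The ID replacement described in this post can also be scripted instead of done by hand in a hex editor. Below is a minimal Python sketch of the byte-substitution step only. It assumes the ID is stored as a little-endian 16-bit value (bytes 92 3E for 3E92), which the post does not state, and it does not cover removing the driver signature. The helper name `replace_bytes` and the sample buffer are made up for illustration.

```python
import struct
from typing import Tuple


def replace_bytes(data: bytes, old: bytes, new: bytes) -> Tuple[bytes, int]:
    """Replace every occurrence of `old` with `new`; return (patched, count)."""
    if len(old) != len(new):
        # Equal lengths keep every other offset in the file unchanged.
        raise ValueError("patterns must have the same length")
    count = data.count(old)
    return data.replace(old, new), count


# Assumption: the ID is stored little-endian, so 0x3E92 appears as bytes 92 3E.
OLD_ID = struct.pack("<H", 0x3E92)
NEW_ID = struct.pack("<H", 0x3E98)

# Tiny made-up buffer standing in for the contents of i915.ko.
sample = b"\x86\x80\x92\x3e\x00\x00"
patched, hits = replace_bytes(sample, OLD_ID, NEW_ID)
print(hits, patched.hex())
```

A two-byte pattern can also match unrelated bytes in a large binary, so it is worth checking the returned count and working on a backup copy of the file before writing anything back.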

-

It looks like the simple method of code substitution in i915.ko has stopped working - see the message below. After installing 7.2 Update 3, HW transcoding also disappeared (there is no /dev/dri folder). The previously used i915.ko patches and the addon in the ARC bootloader are not helping yet. I'll try to experiment again later. I have a Core i9-9900K CPU (3E98), ESXi 8, and a passed-through iGPU (it worked on 7.2 U1).

-

My goal was to make NVENC work on Jellyfin.

Docker

I was able to expose my GPU in Docker without libnvidia-container by doing:

    sudo docker run \
      -e NVIDIA_VISIBLE_DEVICES=all \
      -v /usr/local/bin/nvidia-smi:/usr/local/bin/nvidia-smi \
      -v /usr/local/bin/nvidia-cuda-mps-control:/usr/local/bin/nvidia-cuda-mps-control \
      -v /usr/local/bin/nvidia-persistenced:/usr/local/bin/nvidia-persistenced \
      -v /usr/local/bin/nvidia-cuda-mps-server:/usr/local/bin/nvidia-cuda-mps-server \
      -v /usr/local/bin/nvidia-debugdump:/usr/local/bin/nvidia-debugdump \
      -v /usr/lib/libnvcuvid.so:/usr/lib/libnvcuvid.so \
      -v /usr/lib/libnvidia-cfg.so:/usr/lib/libnvidia-cfg.so \
      -v /usr/lib/libnvidia-compiler.so:/usr/lib/libnvidia-compiler.so \
      -v /usr/lib/libnvidia-eglcore.so:/usr/lib/libnvidia-eglcore.so \
      -v /usr/lib/libnvidia-encode.so:/usr/lib/libnvidia-encode.so \
      -v /usr/lib/libnvidia-fatbinaryloader.so:/usr/lib/libnvidia-fatbinaryloader.so \
      -v /usr/lib/libnvidia-fbc.so:/usr/lib/libnvidia-fbc.so \
      -v /usr/lib/libnvidia-glcore.so:/usr/lib/libnvidia-glcore.so \
      -v /usr/lib/libnvidia-glsi.so:/usr/lib/libnvidia-glsi.so \
      -v /usr/lib/libnvidia-ifr.so:/usr/lib/libnvidia-ifr.so \
      -v /usr/lib/libnvidia-ml.so.440.44:/usr/lib/libnvidia-ml.so \
      -v /usr/lib/libnvidia-ml.so.440.44:/usr/lib/libnvidia-ml.so.1 \
      -v /usr/lib/libnvidia-ml.so.440.44:/usr/lib/libnvidia-ml.so.440.44 \
      -v /usr/lib/libnvidia-opencl.so:/usr/lib/libnvidia-opencl.so \
      -v /usr/lib/libnvidia-ptxjitcompiler.so:/usr/lib/libnvidia-ptxjitcompiler.so \
      -v /usr/lib/libnvidia-tls.so:/usr/lib/libnvidia-tls.so \
      -v /usr/lib/libicuuc.so:/usr/lib/libicuuc.so \
      -v /usr/lib/libcuda.so:/usr/lib/libcuda.so \
      -v /usr/lib/libcuda.so.1:/usr/lib/libcuda.so.1 \
      -v /usr/lib/libicudata.so:/usr/lib/libicudata.so \
      --device /dev/nvidia0:/dev/nvidia0 \
      --device /dev/nvidiactl:/dev/nvidiactl \
      --device /dev/nvidia-uvm:/dev/nvidia-uvm \
      --device /dev/nvidia-uvm-tools:/dev/nvidia-uvm-tools \
      nvidia/cuda:11.0.3-runtime nvidia-smi

Output is:

    > nvidia/cuda:11.0.3-runtime nvidia-smi
    Tue Aug 1 00:54:12 2023
    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 440.44       Driver Version: 440.44       CUDA Version: N/A      |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |===============================+======================+======================|
    |   0  GeForce GTX 106...  On   | 00000000:01:00.0 Off |                  N/A |
    | 84%   89C    P2    58W / 180W |   1960MiB /  3018MiB |     90%      Default |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                       GPU Memory |
    |  GPU       PID   Type   Process name                             Usage      |
    |=============================================================================|
    +-----------------------------------------------------------------------------+

This should work on any platform that has the NVIDIA runtime library installed. However, this still does not seem to work with the Jellyfin Docker container. I can configure NVENC and play videos fine, but the logs do not show h264_nvenc. I also see no process running in `nvidia-smi`. The official docs point to using nvidia-container-toolkit: https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html That's why I was looking at how to build it with the DSM 7.2 kernel.

Running rffmpeg

My second idea was to use rffmpeg (remote ffmpeg, to offload transcoding to another machine). I was thinking of running Jellyfin in Docker with rffmpeg configured, then running the hardware-accelerated ffmpeg on the DSM host. I downloaded the portable Linux jellyfin-ffmpeg distribution: https://github.com/jellyfin/jellyfin-ffmpeg/releases/tag/v5.1.3-2 Running it over SSH yields:

    [h264_nvenc @ 0x55ce40d8c480] Driver does not support the required nvenc API version. Required: 12.0 Found: 9.1
    [h264_nvenc @ 0x55ce40d8c480] The minimum required Nvidia driver for nvenc is (unknown) or newer
    Error initializing output stream 0:0 -- Error while opening encoder for output stream #0:0 - maybe incorrect parameters such as bit_rate, rate, width or height
    Conversion failed!

I think this is because the driver DSM uses is an old 440.44. jellyfin-ffmpeg is compiled with the latest https://github.com/FFmpeg/nv-codec-headers. The DSM Nvidia driver only supports 9.1.

Confirming NVENC works

I confirmed NVENC works with the official driver by installing Emby and trying out their packaged ffmpeg:

    /volume1/@appstore/EmbyServer/bin/emby-ffmpeg -i /volume1/downloads/test.mkv -c:v h264_nvenc -b:v 1000k -c:a copy /volume1/downloads/test_nvenc.mp4

    +-----------------------------------------------------------------------------+
    | Processes:                                                       GPU Memory |
    |  GPU       PID   Type   Process name                             Usage      |
    |=============================================================================|
    |    0     15282      C   /var/packages/EmbyServer/target/bin/ffmpeg   112MiB |
    |    0     20921      C   ...ceStation/target/synoface/bin/synofaced  1108MiB |
    |    0     32722      C   ...anceStation/target/synodva/bin/synodvad   834MiB |
    +-----------------------------------------------------------------------------+

Next steps

The other thing I have yet to try is to recompile jellyfin-ffmpeg with an older nv-codec-headers and use it inside the Jellyfin Docker container.
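The `Required: 12.0 Found: 9.1` part of the ffmpeg error is what points to the driver being too old for this ffmpeg build. As a rough sketch, a few lines of Python can pull both versions out of such a log and compare them. The function name `parse_nvenc_versions` is made up for illustration, and the regular expression only assumes the message wording quoted in this post.

```python
import re
from typing import Optional, Tuple

Version = Tuple[int, int]

# Matches the "Required: X.Y Found: A.B" fragment quoted in the post.
_PATTERN = re.compile(r"Required:\s*(\d+)\.(\d+)\s+Found:\s*(\d+)\.(\d+)")


def parse_nvenc_versions(log: str) -> Optional[Tuple[Version, Version]]:
    """Return ((required), (found)) NVENC API versions, or None if absent."""
    match = _PATTERN.search(log)
    if match is None:
        return None
    req_major, req_minor, found_major, found_minor = (int(g) for g in match.groups())
    return (req_major, req_minor), (found_major, found_minor)


log = (
    "[h264_nvenc @ 0x55ce40d8c480] Driver does not support the required "
    "nvenc API version. Required: 12.0 Found: 9.1"
)
required, found = parse_nvenc_versions(log)
# Tuples compare element by element, so (9, 1) < (12, 0) holds.
print("driver too old for this ffmpeg build:", found < required)
```

This only reads the log. It does not change which nv-codec-headers version ffmpeg was built against.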

-

Advanced Media Extension will not activate dsm7.1-42661

dj_nsk replied to phone guy's topic in Synology Packages

I had to abandon Video Station because my DSM runs as a VM in ESXi. There, although the passed-through iGPU is correctly recognized, for some reason Video Station specifically cannot use it for HW transcoding. I now use Jellyfin, where HW transcoding is configured and works perfectly. Jellyfin is better in terms of functionality; the only drawback/feature is that it uses its own logins/passwords rather than DSM users. You can try it too.

-

Advanced Media Extension will not activate dsm7.1-42661

dj_nsk replied to phone guy's topic in Synology Packages

You need:
- CPU + integrated GPU
- DS918+ or DS920+ (see here - transcoding)
- valid SN/MAC or synocodectool-patch

-

I can get HW transcoding to work for Plex in Docker, but to make it work I have to run Plex from Package Center first, or /dev/dri/ is empty. Rebooting (manually or after losing power) results in /dev/dri/ being empty again, and I have to stop Docker Plex, run Package Center Plex, then stop it and start Docker Plex for HW transcoding to start working again in Docker. /dev/dri/ also magically goes empty after a few days even if there's no power loss. Currently I have some scheduled scripts that run at 5am: they stop Docker Plex, install Package Center Plex, stop Package Center Plex, then start Docker Plex, to ensure HW transcoding will be available at least daily if there's a power loss or something else happens without me being aware of it. I know, it's all weird and confusing, but my question is whether there's another way to get /dev/dri/ to populate without having to run Package Center Plex. Also, because of Plex's default, unchangeable port number, I can't have both Plex instances running.
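Since the workaround hinges on whether /dev/dri/ is populated, a scheduled script could check that first and only run the restart sequence when the folder is actually empty. A minimal Python sketch of such a check follows. The function name `dri_nodes` is made up, and the demo uses a temporary directory rather than the real /dev/dri. `card0` and `renderD128` are the usual Linux DRM device node names.

```python
import tempfile
from pathlib import Path
from typing import List


def dri_nodes(dri_dir: str = "/dev/dri") -> List[str]:
    """List DRM device nodes (card*, renderD*) found in `dri_dir`."""
    path = Path(dri_dir)
    if not path.is_dir():
        # A missing folder counts the same as an empty one.
        return []
    return sorted(
        entry.name
        for entry in path.iterdir()
        if entry.name.startswith(("card", "renderD"))
    )


# Demo against a temporary directory standing in for /dev/dri.
with tempfile.TemporaryDirectory() as tmp:
    empty_result = dri_nodes(tmp)
    (Path(tmp) / "card0").touch()
    (Path(tmp) / "renderD128").touch()
    populated_result = dri_nodes(tmp)

print(empty_result, populated_result)
```

An empty list would be the signal to run the stop/start sequence described above. The check itself does nothing to make the device nodes appear.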

-

Well, I don't know what to think after a full day spent trying to understand what is happening. Yesterday I installed ARPL-i18n and it worked out of the box using DS923+ on an i5-10500 CPU (Optiplex 5080). Wanting to enable hardware transcoding with a patched i915 (which never worked, I must be missing something), I realized that maybe the AMD-based DS was not shipped with the i915 drivers, so I changed to DS920+ in the loader. From there, a full day of black screens started: the loader loads, and after the installation of DSM and its reboot the screen goes black and doesn't display the loader's typical information. I formatted the drives and used DS918+, same deal. I changed the loader to the original ARPL and formatted the drives, same deal. I changed the loader to ARC, same black screen. I went back to DS923+ on ARPL-i18n and it worked again. So what is it? Also, would I be able to enable HW transcoding? Thanks a lot.

-

Hi Strainu, I have the same MB and I can't get the /dev/dri folder. Do you have this folder? (I use it for Plex hardware transcoding.) My config:
- ARPL with DSM 7.1 (42962)
- DS918+ with original serial
- 1 MAC spoofing

All is OK on another board (J5005) with DSM 7.0 and the TCRP loader.

-

Upgrade from DSM6 918 to DSM7 1622 with some questions

firelord posted a question in General Questions

Hello All,

The time has come to update my current DSM to the latest one. Currently I have this hardware:
- MB: Asrock Z390 Pro4
- CPU: Intel Core i5-8500T
- DISKS: Kingston SA400S37480G and 2 HDDs
- DSM: Model 918+, DSM 6.2.3-25426 Update 3

I am using Synology for the following services:
- Surveillance Station with 5 cameras
- Cloudsync
- Plex media server
- Torrent with Transmission
- Hyper Backup/Vault
- Docker for some containers (ddclient, KMS server, speedtest, etc.)
- Virtual Machine Manager for the following VMs:
-- DNS server with pihole
-- Homebridge
-- CheckMK monitoring
-- DSM 7 virtualized for using the Photos app (this one would be migrated)
-- Windows 8

I would like to add one more SSD and put them into RAID 1, then do a fresh install of DSM 7, specifically DVA1622 because of the 8 free camera licenses. What I would mainly like to know is whether everything in the list above would work on a DVA1622. Can DVA1622 handle more than 2 disks? What about Plex transcoding? Does that work on DVA1622? I've read somewhere that DVA1622's Surveillance Station AI works with Intel's built-in GPU but DVA3221 needs a separate GPU for that. Is that right? Many thanks if you can help with your answers.

-

Upgrade from DSM6 918 to DSM7 1622 with some questions

pocopico replied to firelord's question in General Questions

Hi, find my answers inline:

Q1: What I would like to know mainly that the list above would work on a DVA1622 or not?
A1: You need to try and see; I see no reason for it not to work.

Q2: Can DVA1622 handle more than 2 disks?
A2: Yes.

Q3: What about plex transcoding? Is that working on DVA1622?
A3: Since /dev/dri is working, I would expect transcoding to work as well.

Q4: I've read somewhere that DVA1622's Survstation AI is working with Intel's built in GPU but DVA3221 needs separated GPU for that. Is that right?
A4: That's right.

-

Hello Community. The reason for this post is to share my personal experience so that it might help the community here, both noobs like me and developers, to figure out some of the most common questions and issues.

My baremetal build is as follows:
* AMD Ryzen 7 5700G (using integrated GPU)
* ASRock B550M Steel Legend
* 32 GB DDR
* NIC Realtek RTL8125BG (<-- Now I understand the importance of knowing your NIC)
* 1x 120 GB SSD as boot drive
* 3x 14 TB HDD Seagate

Loader:
* I tried the following loaders without any success. With every single loader I got the same result: I chose DS3622xs+, used the latest build offered (7.2 preferred), managed to assign a MAC to the NIC, built the loader, and got the IP with apparently no issues detected by the loader. But when I tried to log in using the IP provided by the loader, I never managed to, and find.synology.com was not able to see my NAS either. The same is true for all loaders on the following list:

a. ARC https://github.com/AuxXxilium/arc This was my favorite and #1 choice, but once I built the loader and got the IP, for some reason I never managed to log in to the server.
b. Automated Redpill Loader (i18n) https://github.com/wjz304/arpl-i18n (Failed)
c. Automated Redpill Loader v1.1-beta2a https://github.com/fbelavenuto/arpl (Failed)
d. Tinycore Redpill - very intimidating and no idea what to do. (Failed)
e. If it's not on the list, I probably tried it.

For 3 consecutive days and countless hours I worked on this, trying to figure out how to do it, asking questions and not getting answers from experts (I cannot blame them, since we do not live connected to the forum 24/7) and reading numerous topics here on the forum and on Reddit, and nothing worked. I was going to give up. I even installed Win 11 and was going to configure a RAID and use it for media sharing, which is my main focus, plus photo backup, running EMBY Media Server and maybe an app for hosting my digital comics.

f. ** The only loader that worked, and the one that I am running right now, is PeterSuh Tinycore Redpill: https://github.com/PeterSuh-Q3/tinycore-redpill Thanks to gericb for giving me advice and referring me to this loader. This loader did everything for me and even let me use my NIC and MAC. When the IP was given by the loader, I managed to log in to the NAS. I believe the problem with the others is an issue with the NIC drivers.

Issues with this loader and Xpenology:

a. My NIC is 2.5 gigabit, Synology sees it as a 2.5 gigabit NIC, and so is my switch, but for some weird reason it won't go over 1 gigabit speed. This is a major letdown considering that I invested in the switch to get better transfer speeds.
b. AME is not activated and there was no patch offered in the loader prior to building it. For a noob this is very frustrating, trying to figure out how to do this now. More reading to find the right patch, if any, to do this without breaking the system. On top of that, not being a Linux-savvy person, figuring out how to deploy code to get things done is not easy when you read about a possible solution and there is little to no explanation of how to do it step by step.
c. I don't know if the loader can be changed without losing what's inside my pool.

A different scenario is to simply install custom Windows, use it as a media server, and get an app similar to Synology Photos for Windows and Android. This is a foolproof solution: I will have all the codecs needed, will get the internal GPU working for transcoding when needed, and it requires little to no expertise to set up.

I will add more info here, but for now these are my preliminary observations. This is all fun until it stops being fun and you can't figure out the mystery, especially when the wife keeps asking me why I am spending this many hours at my PC 🤣

-

Hi guys, I installed DS3622xs+ on my fresh system using an i5 7500; originally I was running DS918+. I've noticed Plex has high CPU usage when transcoding on the DS3622xs+. Is there a way to change the system to a DS9xx model to see if it helps, without losing data? It seems like Quick Sync on the CPU is not supported on the 3622. Is that because it is Xeon based? Plex in Docker also complains about a missing /dev/dri. TIA

-

DS918+ has hardware transcoding capability with its iGPU. DS3622xs+ does not have an iGPU, so it does not have HW transcoding capability. Your i5 7500 is a DS918+ compatible CPU whose iGPU can be used, so HW transcoding works with that loader. As DS3622xs+ is not built for HW transcoding, there is no driver/module available for it, even if your CPU is compatible. Why did you want to replace a working DS918+ loader with DS3622xs+?

-

Hey guys, I do not want to open multiple threads to ask questions about the same build. I would like to know what the best alternative is: baremetal, or a VM, e.g. Windows running VirtualBox? The reason I am asking is that I am going to run EMBY media server. I do not like to transcode, but sometimes you can't avoid transcoding for whatever reason, and then the internal GPU on the CPU comes in handy. It is my understanding that Xpenology does not support AMD integrated GPUs because Synology does not support them. Is there any workaround for this? Is the better option to go with a Windows PC running a VM? I am currently making a HUGE backup of all of my data; once I finish I will delete TrueNAS Scale and try Xpenology. Any help with these questions will be deeply appreciated.

-

I appreciate your continued support! But it got to the point where I had had enough, so I pulled out my daughter's TUF GAMING B560M-PLUS, which pretty much worked off the bat. I got both DS920+ (using arpl) and DS918+ (using tcrp) working. The only thing missing is /dev/dri on an i3-10105. If I can't get it to use transcoding (Jellyfin is all I care about, I couldn't care less about Video Station), then maybe it's something I'll have to sacrifice. I've settled on DS918+ DSM 7.1.1-42962 Update 1 and on making sure things stay running smoothly. In the meantime, I've purchased another one of these boards so my daughter can get her computer back lol. The whole reason behind getting new hardware and three 12TB HDDs is that my 6x3TB was filling up and I wanted a smooth transition and a way to copy from the 6 drives onto the three 12TB drives without downtime. There also wasn't really a viable way to transfer the files without a second system. The worst part of this is spending 250 AUD on a motherboard (MSI) that's now going straight to storage.

-

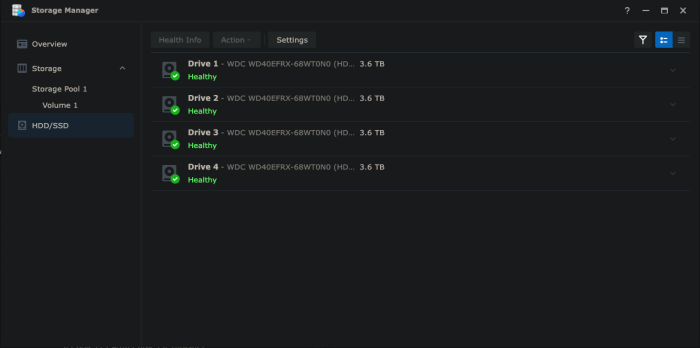

- Outcome of the install: SUCCESSFUL
- DSM version prior to update: NONE
- Loader version and model: arpl-i18n - DSM 7.2 64570 Update 1 using DVA1622 image
- Using custom extra.lzma: NO
- Installation type: BAREMETAL QNAP 453a 4GB RAM N3160 4x4GB Western Digital Red
- Additional comments: Plex transcoding using the iGPU WORKS!
- All 4 LAN ports are operational.

-

Hello, has anyone tried to get DSM 7 running on a Core i3 12100 with hardware transcoding? My board uses the 1 Gb Intel NIC and I want to use my Adaptec 71605 in HBA mode. If it is possible, can someone tell me how to do it step by step?

-

Thanks PerryMason, actually last week I read your post about the J5040 and I saved it for future reference. Also thanks @gadreel. I think I'm going to give the N100 a shot then, since I won't be doing live transcoding with it. If I need to, I'll probably just set up some software to convert video files before they are streamed.

-

What might not be supported is transcoding and anything related to it. Installing DSM should not be a problem.

-

What 8/9/10th Gen motherboard you have that supported in DSM 7.x?

merve04 replied to donchico's topic in Hardware Modding

So prior to DVA1622 being made available, I found out about DVA3221, which supported 8 cameras. I was using DS918+ and running VMs within DSM, as each instance provided 2 camera licenses, but running DSM plus 2 VMs started getting heavy. I tried DVA3221, which was awesome, but due to the lack of a discrete video card I could no longer support HW transcoding for Plex. When DVA1622 came around, I jumped on it and it's been great ever since. The one thing I've mentioned a few times is that ever since I moved on from DS918+, my download speeds have suffered, to the tune of nearly 30 MB/s. I suspect a network driver issue. I've tried several loaders, but always with the same result. If I rebuild with the DS918+ image, I get the full 95-105 MB/s. I believe the NIC is a Realtek 8118. I've thought about buying a NIC and putting it in a PCIe slot, I just never bothered.

-

For ASRock J5040 - DS918+ is the best choice (not 923). You can now easily change the model and update the version using a simpler bootloader for beginners. At the same time you should also have the HW-transcoding option, which will allow you to process photos faster.
1) Download the latest release of the Arc bootloader from here, write arc.img to the USB flash drive, start your NAS with this USB drive
2) Configure loader: DS918+, OK, build 64570, Yes, OK, OK, OK... OK (Boot Arc Loader now)
3) Check in Synology Assistant - find your DSM and connect, agree to the migration with the settings saved
4) Enjoy!