Posts: 4,645 | Days Won: 212

Everything posted by IG-88

-

The network driver in general looks OK (1G):

[ 36.688820] e1000e 0000:00:1f.6 eth0: NIC Link is Up 1000 Mbps Full Duplex, Flow Control: Rx/Tx

The last version of the e1000e driver, from mid-2020, is 3.8.7; the one you use is 3.8.4.

But there seems to be a problem with igb.ko for the i211:

[ 22.909104] Intel(R) Gigabit Ethernet Linux Driver - version 5.3.5.22
[ 22.909633] Copyright(c) 2007 - 2018 Intel Corporation.
[ 22.910091] igb: probe of 0001:02:00.0 failed with error -5
[ 22.910553] igb: probe of 0001:03:00.0 failed with error -5

You might need a newer driver.

The power button looks OK from the driver side:

[ 22.992199] input: Sleep Button as /devices/LNXSYSTM:00/LNXSYBUS:00/PNP0C0E:00/input/input0
[ 22.992882] ACPI: Sleep Button [SLPB]
[ 22.993212] input: Power Button as /devices/LNXSYSTM:00/LNXSYBUS:00/PNP0C0C:00/input/input1
[ 22.993895] ACPI: Power Button [PWRB]
[ 22.994220] input: Power Button as /devices/LNXSYSTM:00/LNXPWRBN:00/input/input2
[ 22.994826] ACPI: Power Button [PWRF]
[ 2.995533] e1000e 0000:00:1f.6 0: (PCI Express:2.5GT/s:Width x1) a8:a1:59:26:22:4f

There is also something wrong with your i915 firmware (if you want to use Intel Quick Sync).

If you have a spare disk and a USB stick, you might test Jun's loader 1.04b (DSM 6.2.3) with my extended drivers to check whether things work with newer drivers in general: https://xpenology.com/forum/topic/28321-driver-extension-jun-103b104b-for-dsm623-for-918-3615xs-3617xs/
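The igb probe failures report `error -5`. Kernel drivers return negative errno values, and on Linux errno 5 is EIO (an I/O error while talking to the device). The sketch below pulls failed probes out of saved `dmesg` text and decodes the number. `failed_probes` is a hypothetical helper written for this post; it is not part of any tool.

```python
import errno
import os
import re

# Matches lines like "igb: probe of 0001:02:00.0 failed with error -5"
PROBE_FAIL = re.compile(r"(\w+): probe of (\S+) failed with error (-\d+)")

def failed_probes(dmesg_text):
    """Return (driver, pci_address, errno_name, description) for each failed probe."""
    results = []
    for match in PROBE_FAIL.finditer(dmesg_text):
        driver, address = match.group(1), match.group(2)
        num = -int(match.group(3))  # drivers report negative errno values
        name = errno.errorcode.get(num, "UNKNOWN")
        results.append((driver, address, name, os.strerror(num)))
    return results

if __name__ == "__main__":
    sample = (
        "[ 22.910091] igb: probe of 0001:02:00.0 failed with error -5\n"
        "[ 22.910553] igb: probe of 0001:03:00.0 failed with error -5\n"
    )
    for entry in failed_probes(sample):
        print(entry)
```

An EIO at probe time usually means the driver could not talk to the chip properly. That fits the "try a newer driver" suggestion, though the sketch cannot prove it.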

-

In this post I listed all the device IDs supported by Synology's driver, and 3e96 is already supported by the default driver. If it does not work, check for the firmware files mentioned above.
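To see which device ID your iGPU actually has, you can read it from sysfs on any Linux system (DSM included). The sketch below assumes the usual Intel iGPU address 0000:00:02.0. `SUPPORTED_DEVICE_IDS` is a placeholder: it contains only the ID named here, not Synology's full list.

```python
from pathlib import Path

# Only 3e96 is named in the post; Synology's full list is not reproduced here,
# so this set is a placeholder to fill in yourself.
SUPPORTED_DEVICE_IDS = {0x3E96}

def parse_pci_id(text):
    """Parse the content of a sysfs 'vendor' or 'device' file, e.g. '0x3e96\\n'."""
    return int(text.strip(), 16)

def gpu_device_id(pci_dir="/sys/bus/pci/devices/0000:00:02.0"):
    """Read the device ID of the PCI function at pci_dir (00:02.0 is where Intel iGPUs usually sit)."""
    return parse_pci_id((Path(pci_dir) / "device").read_text())

if __name__ == "__main__":
    device_id = gpu_device_id()
    status = "in list" if device_id in SUPPORTED_DEVICE_IDS else "not in list"
    print(f"{device_id:04x}: {status}")
```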

-

Why does it have to be a *.gz file? There is no real size limit or problem, and the handling (like adding an extension manually) would be easier if it were just a directory structure with files. With Jun's loader, the format kept most people from trying out or changing things, as it was way too "exotic" (at least on Windows). If it has to be an archive, then at least it should be a format that can be used on any platform and whose content can simply be changed; maybe *.zip? There are a lot of Windows users, and *.gz files can't easily be changed with normal programs like 7-Zip.
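Part of the friction is structural. A .gz file holds a single compressed stream, so a directory tree first has to be wrapped in tar or cpio. A zip archive stores each file as its own entry, which any platform can list and rewrite. The sketch below packs and lists a hypothetical extension layout in memory; the file names are made up for illustration.

```python
import io
import zipfile

def pack_extension(files):
    """Pack a mapping of archive path -> file content (bytes) into an in-memory zip."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for name, data in sorted(files.items()):
            archive.writestr(name, data)
    return buffer.getvalue()

def list_extension(zip_bytes):
    """Return the file names stored in a zip archive."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        return archive.namelist()

if __name__ == "__main__":
    # Hypothetical extension layout, for illustration only
    blob = pack_extension({
        "example-ext/install.sh": b"#!/bin/sh\necho install\n",
        "example-ext/modules/example.ko": b"placeholder",
    })
    print(list_extension(blob))
```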


-

Is there any way to flash Xpenology or Redpill Image to "Xreamer Pro"?

IG-88 replied to Matin's topic in The Noob Lounge

Besides being 11 years old, its CPU is an RTD1283DD, which is not an x86-64 CPU, and that's the end of it: NO.
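On Linux, a quick way to tell whether a CPU is x86-64 capable is the `lm` (long mode) flag in the x86 `flags` line of /proc/cpuinfo. Non-x86 CPUs do not report that x86 `flags` line at all. The sketch below checks cpuinfo text you pass in; `is_x86_64_capable` is a hypothetical helper.

```python
def is_x86_64_capable(cpuinfo_text):
    """True if /proc/cpuinfo text has an x86 'flags' line containing 'lm' (long mode)."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return "lm" in value.split()
    return False  # no x86 flags line: not an x86 CPU, or unknown

if __name__ == "__main__":
    with open("/proc/cpuinfo") as f:
        print(is_x86_64_capable(f.read()))
```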

Anyone want to Zoom to help w/ bare metal install? I'll pay!

IG-88 replied to dean4109's topic in The Noob Lounge

If it's 6.2.3 you want to install, my first advice would be to use loader 1.04b for 918+. Your CPU allows it, and that one works with UEFI too (3615/17, aka loader 1.03b, only works in old legacy aka CSM mode and needs to be booted from the USB legacy device, not the USB UEFI device). https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/

Jun's loader (all 3 flavors) has the Realtek driver, so IMHO the NIC is not your problem.

Also, don't expect too much of a (single) NVMe SSD. DSM will use it only as a read cache, and that's kind of pointless in most home environments, as it only speeds up data you have already used. With a 1G NIC, it's mostly the NIC that holds you back when it comes to network speed (1G = ~112 MByte/s).

If you are interested in hardware-based video transcoding, then 918+ is your way anyway. Once you have mastered the install, you would need a few more steps to get that working with 6.2.3, and even the NVMe would need some manual tweaking to get it working. But first you need to master the install. And don't update 6.2.3 beyond Update 3: 6.2.4 and 7.x need different loaders, which are configured and used completely differently (and are still in the development phase).
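The ~112 MByte/s figure for 1G can be reproduced with a little arithmetic. This sketch assumes a standard 1500-byte MTU, IPv4, and TCP with the timestamp option, and ignores any other protocol overhead. The result is in MiB/s, which is where the ~112 figure fits.

```python
# Rough payload throughput of 1 Gbit/s Ethernet carrying TCP/IPv4 with a 1500-byte MTU.
LINE_RATE_BPS = 1_000_000_000
MTU = 1500
ETH_OVERHEAD = 14 + 4 + 8 + 12  # Ethernet header + FCS + preamble/SFD + inter-frame gap
IP_TCP_HEADERS = 20 + 20 + 12   # IPv4 header + TCP header + TCP timestamp option

def goodput_mib_per_s(line_rate_bps=LINE_RATE_BPS, mtu=MTU):
    payload = mtu - IP_TCP_HEADERS   # 1448 bytes of data per frame
    on_wire = mtu + ETH_OVERHEAD     # 1538 bytes of line time per frame
    bytes_per_s = line_rate_bps / 8 * payload / on_wire
    return bytes_per_s / 2**20

if __name__ == "__main__":
    print(f"{goodput_mib_per_s():.1f} MiB/s")
```

Any cache behind a single 1G link can only ever deliver this much to a client. That is why a read cache rarely shows a difference there.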

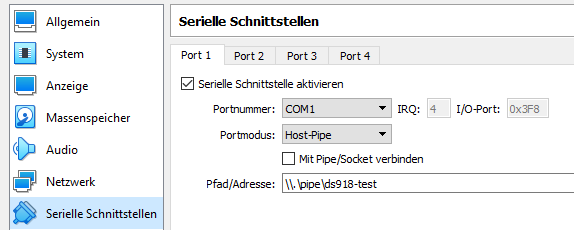

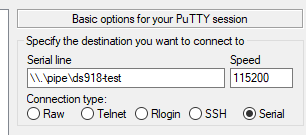

With VirtualBox, the main thing was usually to have the same MAC in the VM and in the DSM config (aka grub.cfg). I use ICH9 as the chipset, as it's a SATA/AHCI chipset by default. The rest is the same as with the VM config for 6.2.3: one SATA controller, the first disk is the loader, and the network is bridged with Intel PRO/1000 MT Desktop (that's the e1000 extension in the RP loader). I guess all the Intel NICs in VirtualBox will work, as they are all 8254x, and that's all e1000 when it comes to the driver. It's also handy to configure the COM1 port so you have access to the log when booting, with PuTTY as the terminal.
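Getting the MAC to match is mostly a formatting question. The VM settings may show the MAC with or without separators, while grub.cfg uses 12 uppercase hex digits (as in `mac1=E7ACA9ACDCFF`). This sketch normalizes both before comparing. The helper names and the sample MAC (08:00:27 is VirtualBox's default prefix) are only examples.

```python
import re

def normalize_mac(mac):
    """Return a MAC as 12 uppercase hex digits without separators (the mac1= form in grub.cfg)."""
    digits = re.sub(r"[^0-9A-Fa-f]", "", mac).upper()
    if len(digits) != 12:
        raise ValueError(f"not a MAC address: {mac!r}")
    return digits

def macs_match(vm_mac, grub_mac):
    """True if the MAC set in the VM and the mac1= value in grub.cfg are the same address."""
    return normalize_mac(vm_mac) == normalize_mac(grub_mac)

if __name__ == "__main__":
    print(macs_match("080027abcdef", "08:00:27:AB:CD:EF"))
```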

-

That depends on the loader you are using. user_config.json belongs to TinyCore (tc), and the "results" of using this file end up in grub.cfg on the first partition. In that regard the RP loader is still the same as Jun's loader: the values end up as kernel parameters in grub.cfg. It looks like this in grub.cfg (see the vid/pid, sn and mac?):

linux /zImage HddHotplug=0 withefi console=ttyS0,115200n8 netif_num=1 syno_hdd_detect=0 syno_port_thaw=1 vender_format_version=2 earlyprintk mac1=E7ACA9ACDCFF syno_hdd_powerup_seq=1 pid=0x0001 log_buf_len=32M syno_hw_version=DS918+ vid=0x46f4 earlycon=uart8250,io,0x3f8,115200n8 sn=1330NZN012245 elevator=elevator root=/dev/md0 loglevel=15

So either do it the tc way and work with its commands and user_config.json, or the plain RP way by editing grub.cfg directly. But if you use tc later on, the values in grub.cfg will be overwritten when tc writes its values to grub.cfg.

edit: user_config.json is stored on the third partition, where tc resides, in mydata.tgz as /home/tc/user_config.json
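To check what actually ended up in grub.cfg, you can split the kernel line into key/value pairs. This is a minimal sketch using a shortened copy of the line above. `parse_cmdline` is a hypothetical helper, and bare flags such as `withefi` map to `None`.

```python
def parse_cmdline(cmdline):
    """Split a kernel command line into a dict; bare flags map to None."""
    params = {}
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        params[key] = value if sep else None
    return params

# Shortened from the grub.cfg line quoted above
LINE = ("linux /zImage HddHotplug=0 withefi console=ttyS0,115200n8 netif_num=1 "
        "mac1=E7ACA9ACDCFF pid=0x0001 vid=0x46f4 sn=1330NZN012245 root=/dev/md0")

if __name__ == "__main__":
    params = parse_cmdline(LINE.split(None, 2)[2])  # drop "linux /zImage"
    for key in ("vid", "pid", "sn", "mac1"):
        print(key, params[key])
```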

-

e1000e can be used in Proxmox, and I've seen it in configs in this thread: https://xpenology.com/forum/topic/7387-tutorial-dsm-6x-on-proxmox/?do=findComment&comment=122230. If you use e1000e in the VM config, you would not need any added driver from the loader; the driver DSM for 3622 comes with would do the job. But I guess it depends on which way you choose: both need something extra, and the result is the same (a 1G NIC in the VM). When it comes to higher network speeds, virtio is the only way to go anyway.

-

The drivers you can use depend on what's in the model's rd.gz (that one comes from Synology) and what's added as an extension to custom.gz (that's created by tc). The ones in rd.gz would be called "native" drivers, as they are part of DSM (and available in the boot loader). There is a second type of native driver that is only part of DSM (the *.pat file) but not in rd.gz; that's usually for hardware that can be added to original units. An example of the latter would be Mellanox drivers: even though they are supported natively in DSM, you can only boot with that card as the NIC if the driver is added to TinyCore with tc.

In the case of 3622, the following drivers are in rd.gz: e1000e, igb, ixgbe, i40e, r8168. If the hypervisor can emulate a NIC that's supported by the drivers in rd.gz, you would not need to add any special driver in tc. With ESXi it's possible to choose Intel 82574, aka E1000E, and there should be no need to add any special drivers in tc. AFAIK there is e1000e in Proxmox, and as the e1000e driver is native to 3622, it should be OK to just choose e1000e as the vNIC and that's it. (virtio was usually the one people wanted to use in Proxmox, and the RP loader came OOTB with that one, so I wonder why not use virtio as the vNIC.)
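The rule above ("if the emulated NIC's driver is already in rd.gz, nothing extra is needed") can be written as a small lookup. This sketch makes several assumptions:

- The model-to-driver table covers only a few common QEMU/Proxmox models and is my own mapping. Check it against your hypervisor's documentation.
- The `net0:` line follows the `model=MAC,bridge=...` form of a Proxmox VM config.
- The function names are hypothetical.

```python
# Drivers listed as being in the DS3622xs+ rd.gz (native, usable at boot)
NATIVE_3622 = {"e1000e", "igb", "ixgbe", "i40e", "r8168"}

# Linux driver that usually binds to each emulated NIC model (assumed mapping)
VNIC_DRIVER = {
    "e1000": "e1000",
    "e1000e": "e1000e",
    "virtio": "virtio_net",
    "vmxnet3": "vmxnet3",
    "rtl8139": "8139too",
}

def nic_model(net_line):
    """Extract the model from a Proxmox-style 'net0: e1000e=AA:BB:...,bridge=vmbr0' line."""
    spec = net_line.split(":", 1)[1].strip()
    return spec.split(",")[0].split("=")[0]

def needs_extra_driver(net_line, native=NATIVE_3622):
    """True if the vNIC's driver is unknown or not in the native rd.gz set."""
    return VNIC_DRIVER.get(nic_model(net_line)) not in native

if __name__ == "__main__":
    for line in ("net0: e1000e=BC:24:11:22:33:44,bridge=vmbr0",
                 "net0: virtio=BC:24:11:22:33:44,bridge=vmbr0"):
        print(nic_model(line), "needs loader driver:", needs_extra_driver(line))
```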

-

It's the same as a fresh install, but when the DSM install process detects an "older" version on disk, it will "migrate" aka update it to the new one. (In the case of 6 -> 7 there are major differences with packages, so you might need to replace some of them after the update with 7.0-aware versions; this happens automatically for Synology's own packages from their repository.) It can also be a different Synology model, and the migration will carry over as much as possible to the new model, so you can even change from 3615 to 918+ or 3622 in that process.

You would just shut down your 6.2.3, remove the old USB stick and insert the one with the 7.x loader.

It's suggested to prepare and test the 7.x loader with a single blank disk (even an old small disk is OK), so you are sure it works and detects your hardware; you could even test packages with 7.x that way. When you are finished testing, the single test disk can be wiped, and the 7.x loader USB can be used to update your old 6.2.3 DSM disks.

It's the same situation with original Synology hardware (internal USB DOM). When old hardware with a 6.2.x loader breaks and the new hardware sent to the customer comes with a recent 7.1 loader on the internal USB DOM, the customer puts in his disks, boots up and does the update to 7.1. (The USB DOM only holds the loader/kernel of DSM; the DSM OS itself is stored on every disk as a RAID1 2.4GB partition.)
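That system partition shows up as md0, the `root=/dev/md0` in grub.cfg. On a running box (or any Linux system with the disks attached) you can see it in /proc/mdstat. The sketch below parses that text into array, RAID level and members. `md_arrays` is a hypothetical helper, and the sample text is made up in the usual /proc/mdstat layout.

```python
import re

# e.g. "md0 : active raid1 sda1[0] sdb1[1]"
MD_LINE = re.compile(r"^(md\d+)\s*:\s*active\s+(\w+)\s+(.*)$")

def md_arrays(mdstat_text):
    """Map md device -> (raid level, member partitions) from /proc/mdstat text."""
    arrays = {}
    for line in mdstat_text.splitlines():
        match = MD_LINE.match(line.strip())
        if match:
            name, level, members = match.groups()
            # strip the "[n]" role index (and any "(F)" marker) from each member
            arrays[name] = (level, [m.split("[")[0] for m in members.split()])
    return arrays

if __name__ == "__main__":
    with open("/proc/mdstat") as f:
        for name, (level, members) in md_arrays(f.read()).items():
            print(name, level, members)
```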

-

I guess as it's controller (PCIe device) based, you would only be able to do that for the added 8-port controller. You already use one port of the 6-port onboard controller in the hypervisor, so you can't hand that controller/device to a VM as a whole, since it's already claimed by the hypervisor. You would need to do raw mapping of the disks to the VM (at least that's the way it works in ESXi, and IMHO it will be the same with KVM/Proxmox). And when doing that anyway, why bother with LSI SAS drivers in DSM? Just raw map these disks too, be free of that, and choose whatever platform you want (like 918/920?).

If the hypervisor handles the controller, it would also handle disk errors and SMART and inform the user about problems (and you need that already for the hypervisor's own disk). In some cases it might be beneficial, like when using hardware-based RAID: configure the disks outside DSM as a RAID volume and have DSM see only one big disk. The hypervisor has fewer limits than DSM when it comes to added drivers and tools. An example might be an HP Smart Array P410 with a RAID 5 configured: the hypervisor would take care of the disks and the state of the RAID, and DSM would only see one big disk and have no RAID to maintain. That's one way to circumvent the problems with HPE "non-MicroServer" servers with onboard P4xx controllers and DSM's missing capabilities in that regard.

-

I saw a TPN-C125 on eBay with an i3-5005U, so if it's an i5, it will also be 5th gen. 918+ starts at 4th gen, so it should work.
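The reasoning here is "read the generation from the model number, then compare it with 918+'s 4th-gen minimum". This sketch encodes that as a heuristic. It assumes Intel's usual Core naming, where 4-digit numbers start with the generation digit and 5-digit ones with two digits. Older 3-digit parts are not handled, and the function names are hypothetical.

```python
import re

def intel_core_generation(model):
    """Heuristic: generation from an Intel Core model string like 'i3-5005U' or 'i5-10210U'."""
    match = re.search(r"i[3579]-(\d{4,5})", model)
    if not match:
        raise ValueError(f"unrecognized model: {model!r}")
    number = match.group(1)
    return int(number[:2]) if len(number) == 5 else int(number[0])

def meets_918_minimum(model, minimum=4):
    """918+ needs 4th gen (Haswell) or newer."""
    return intel_core_generation(model) >= minimum

if __name__ == "__main__":
    for cpu in ("i3-5005U", "i5-10210U", "i5-3470"):
        print(cpu, intel_core_generation(cpu), meets_918_minimum(cpu))
```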

-

yes https://xpenology.com/forum/topic/32867-sata-and-sas-config-commands-in-grubcfg-and-what-they-do/


-

LSI SAS2008 - Make disk order follow enclosure places

IG-88 replied to exodius's topic in Developer Discussion Room

JBOD is a RAID mode where you create a RAID volume from a bunch of disks (size does not matter), and they are lined up in sequence in that volume; as a result you have one volume visible to DSM. With the "older" LSI SAS controllers like the SAS2008, it also means the controller uses IR mode (R as in RAID) and needs a different driver: not mpt2sas (or mpt3sas) but "megaraid".

IT mode (initiator target mode) or HBA mode (host bus adapter) is when you see every disk as a single disk and there is no RAID at all.

With older LSI SAS chips like the SAS2008, you needed to flash different firmware for IR mode and IT mode; newer chip versions (12G SAS?) were able to switch that in the controller BIOS/firmware. So for the SAS2008 there was no controller with combined IR/IT firmware. The chip was sold under different names depending on whether it had IR or IT firmware, and IR controllers were sold as MegaRAID controllers.

Something similar goes for the HP Smart Array 4xx series. The 400 had no HBA mode at all (at least for Linux/Windows), the 410 was switchable with a software tool, and since the 420 it can be switched in the controller BIOS. Not that I would suggest using an HP SA controller with DSM: we never had much luck with them (and you might also have trouble using them anywhere other than in an HPE server).

Start with this link https://www.servethehome.com/lsi-sas-2008-raid-controller-hba-information/ and maybe this one https://www.ikus-soft.com/en/blog/2021-01-20-flash-lsi-controller-for-zfs/

If you want one already in the right mode, look for one that's labeled as having IT mode firmware, like this one: https://www.ebay.de/itm/194584436310

edit: don't even think about a cheap controller with an older 1068E chip. They have a hardware limit of about 2.2TB (2TiB), so you can't use any bigger disks, and there is no way around this: no BIOS, no driver.
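The roughly 2.2TB ceiling is what you get from 32-bit sector addressing with 512-byte sectors. That is the usual explanation for the 1068E limit, taken here as an assumption. The arithmetic:

```python
def max_capacity_bytes(lba_bits=32, sector_bytes=512):
    """Largest disk size addressable with lba_bits-wide sector numbers."""
    return 2**lba_bits * sector_bytes

if __name__ == "__main__":
    limit = max_capacity_bytes()
    print(limit, f"= {limit / 1e12:.2f} TB = {limit / 2**40:.0f} TiB")
```

This prints `2199023255552 = 2.20 TB = 2 TiB`. A "3TB" disk is far beyond that, which is why no firmware or driver trick helps.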

Slight but important mistake: the JMB575 is a port multiplier chip, and cards with that one will not bring the results you expect, as you end up with only the number of usable ports of the real SATA controller on that card. The worst case might be a 2-port SATA chip and two JMB575s (one on each real SATA port). That looks like a 10-port card but will only have 2 ports working with DSM (because Synology blocks SATA port multipliers except their own, used in external units).

The JMB chip you want is the JMB585. Besides having one port more than the ancient Marvell controllers, it also has PCIe 3.0, doubling the possible overall data transfer speed (keep in mind the chip has only two usable PCIe lanes). Similar to the JMB585 is the ASM1166, but it has 6 ports (also two PCIe lanes and up to PCIe 3.0 speed).

There are at least two 8-port AHCI controllers, but as they are based on PCIe 2.0 chips only, their performance is not very good (especially with SSDs, it's a waste of money). I wrote about these and also gave a breakdown of the chips used and the maximum performance to expect from the architecture.

The old PCIe 2.0 LSI SAS controllers are still a good choice when it comes to performance, as they can use up to 8 PCIe lanes (depending on the slot; they also work with fewer lanes, even with one, but performance will be lower too). As long as I haven't tested the LSI SAS myself, I would only use them with platforms where Synology uses them too, with the original driver. That scenario limits the possible choices to "older" varieties, and the latest tri-mode ones might not be usable. But those might be too expensive anyway compared with the old trusty SAS2008-based ones that need extra firmware flashing to get HBA aka IT mode.

The tc loader has a newer mpt3sas driver, but as long as I have not tested it thoroughly, I'm not going to use it on productive hardware. I have seen too much trouble with added drivers in 6.2, especially with 918+.
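A rough upper bound per port is the PCIe link bandwidth divided by the number of ports. The sketch below compares the controllers mentioned, assuming 500 MB/s per PCIe 2.0 lane and about 985 MB/s per PCIe 3.0 lane after line encoding. It ignores protocol overhead and assumes all ports are busy at once, so real numbers will be lower.

```python
# Approximate usable bandwidth per PCIe lane in MB/s after line encoding.
PER_LANE_MB_S = {
    2: 5.0e9 * 8 / 10 / 8 / 1e6,     # PCIe 2.0: 5 GT/s, 8b/10b   -> 500 MB/s
    3: 8.0e9 * 128 / 130 / 8 / 1e6,  # PCIe 3.0: 8 GT/s, 128b/130b -> ~985 MB/s
}

def per_port_mb_s(pcie_gen, lanes, ports):
    """Shared link bandwidth divided evenly across all ports (ignores protocol overhead)."""
    return PER_LANE_MB_S[pcie_gen] * lanes / ports

if __name__ == "__main__":
    print(f"JMB585  (PCIe 3.0 x2, 5 ports): {per_port_mb_s(3, 2, 5):.0f} MB/s per port")
    print(f"ASM1166 (PCIe 3.0 x2, 6 ports): {per_port_mb_s(3, 2, 6):.0f} MB/s per port")
    print(f"SAS2008 (PCIe 2.0 x8, 8 ports): {per_port_mb_s(2, 8, 8):.0f} MB/s per port")
```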

-

The driver is extended for additional support of 10th gen. Everything that worked before will still work, and the driver is supposed to replace the one used now. Yesterday I already did a test with 6th gen (Skylake) to see if the patched driver I compiled works in general, and it did, so I don't think it needs testing with older gens already proven to work. A 2nd gen i3 will be hard to test (even if it would work as a GPU), as the kernel of 918/920 only boots with 4th gen and newer.

Even 10th gen lower-tier CPUs are tested already; it's just the upper-tier desktop versions and the one I use from a notebook (a U-type CPU with lower TDP, geared for mobile use).

Wasn't there a patch for that too? (an ffmpeg hack that just patched the binary to enable it, AFAIR)

Your binary files for 6.2.3 are the same size as mine, and it does the same with your files. I also tried to disable VT-d, but that did not change anything.

Loading the driver does work:

[Thu Apr 21 00:40:40 2022] [drm] Supports vblank timestamp caching Rev 2 (21.10.2013).
[Thu Apr 21 00:40:40 2022] [drm] Driver supports precise vblank timestamp query.
[Thu Apr 21 00:40:40 2022] vgaarb: device changed decodes: PCI:0000:00:02.0,olddecodes=io+mem,decodes=io+mem:owns=io+mem
[Thu Apr 21 00:40:40 2022] [drm] Finished loading DMC firmware i915/kbl_dmc_ver1_04.bin (v1.4)
[Thu Apr 21 00:40:40 2022] [drm] Initialized i915 1.6.0 20171222 for 0000:00:02.0 on minor 0
[Thu Apr 21 00:40:40 2022] ACPI: Video Device [GFX0] (multi-head: yes rom: no post: no)
[Thu Apr 21 00:40:40 2022] input: Video Bus as /devices/LNXSYSTM:00/LNXSYBUS:00/PNP0A08:00/LNXVIDEO:00/input/input2
[Thu Apr 21 00:40:40 2022] i915 0000:00:02.0: fb0: inteldrmfb frame buffer device

In Intel's driver in kernel 5.17-rc4, there is an extra section for the low-power "U" GPUs:

/* CML GT2 */
#define INTEL_CML_GT2_IDS(info) \
	INTEL_VGA_DEVICE(0x9BC2, info), \
	INTEL_VGA_DEVICE(0x9BC4, info), \
	INTEL_VGA_DEVICE(0x9BC5, info), \
	INTEL_VGA_DEVICE(0x9BC6, info), \
	INTEL_VGA_DEVICE(0x9BC8, info), \
	INTEL_VGA_DEVICE(0x9BE6, info), \
	INTEL_VGA_DEVICE(0x9BF6, info)

#define INTEL_CML_U_GT2_IDS(info) \
	INTEL_VGA_DEVICE(0x9B41, info), \
	INTEL_VGA_DEVICE(0x9BCA, info), \
	INTEL_VGA_DEVICE(0x9BCC, info)

So my 9B41 might need different handling than the normal CML_GT2?
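The grouping question can be checked mechanically against the two macro lists. The sketch below copies the IDs from the kernel 5.17-rc4 macros quoted above and reports which group a device ID falls into. `cml_group` is a hypothetical helper; it does not describe how i915 treats each group internally.

```python
# Device IDs copied from INTEL_CML_GT2_IDS and INTEL_CML_U_GT2_IDS (i915, kernel 5.17-rc4)
CML_GT2 = {0x9BC2, 0x9BC4, 0x9BC5, 0x9BC6, 0x9BC8, 0x9BE6, 0x9BF6}
CML_U_GT2 = {0x9B41, 0x9BCA, 0x9BCC}

def cml_group(device_id):
    """Return which Comet Lake GT2 ID list a device belongs to, or None."""
    if device_id in CML_U_GT2:
        return "CML_U_GT2"
    if device_id in CML_GT2:
        return "CML_GT2"
    return None

if __name__ == "__main__":
    for dev in (0x9B41, 0x9BC5, 0x9BC8):
        print(hex(dev), cml_group(dev))
```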

-

At the moment I have the option to test with an i5-10210U, device ID 9B41, CometLake-U GT2 [UHD Graphics].

@blackmanga I applied the i915.patch to DSM's 6.2.3 source and used the *.ko files you had in your insmod script in extra/extra2 (along with the i915 firmware). There are devices in /dev/dri after boot now, but Video Station (activated with a patch for codecs and HW acceleration) gives no picture. Without HW acceleration it does transcode (with crappy quality and high CPU load). The same driver/installation on a Skylake system does work with HW transcoding, so I think the i915 driver is OK.

With my old patching method, the 9B41 was treated the same as the high-tier 10th gen CPUs (like the 10900, 9BC5), and the system did not boot. Did anyone with a 9BC5 test the driver yet? The one tester above had a low-tier CPU (10100, 9BC8), and those did work with the old binary patching already.

The log does not have much to offer:

2022-04-20T01:09:42+02:00 DiskStation synoscgi_SYNO.VideoStation2.Streaming_2_open[20709]: video_format_profile.cpp:373 There is no such json member, value[braswell_2][mkv_serial] in [/var/packages/VideoStation/target/etc/TransInfo_HLS]
2022-04-20T01:11:28+02:00 DiskStation coredump: Process ffmpeg[21116] dumped core on signal [6].

Maybe I should try it with your binaries on a 7.0.1 installation.
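Signal 6 in the coredump line is SIGABRT. That usually means ffmpeg aborted itself (for example on a failed internal check) rather than being killed from outside, which points at the binary or its HW path and not at the kernel driver. This sketch decodes such log lines. `describe_coredump` is a hypothetical helper.

```python
import re
import signal

# e.g. "coredump: Process ffmpeg[21116] dumped core on signal [6]."
CORE = re.compile(r"Process (\S+)\[(\d+)\] dumped core on signal \[(\d+)\]")

def describe_coredump(log_line):
    """Return (process, pid, signal name) for a coredump log line, or None."""
    match = CORE.search(log_line)
    if not match:
        return None
    process, pid, signum = match.group(1), int(match.group(2)), int(match.group(3))
    return process, pid, signal.Signals(signum).name

if __name__ == "__main__":
    print(describe_coredump(
        "2022-04-20T01:11:28+02:00 DiskStation coredump: "
        "Process ffmpeg[21116] dumped core on signal [6]."))
```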

-

It's unlikely to be a driver problem if it's a Realtek 8111/8168 chip. I can't remember having seen cases where the hardware was "too new", as seen with Intel NICs or the Realtek 8125.

If it's the 1.03b loader, then it's more likely a UEFI/CSM problem: you not only need to have CSM active, you also need to boot from the USB legacy device instead of the USB UEFI device. CSM is only an option; if the USB UEFI device is used, it will boot in UEFI mode. It's documented here: https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/

So try 918+ (the board/chipset seems to be Haswell aka 4th gen Intel) or try loader 1.02b (as seen in the link, 1.02b and DSM 6.1 don't have that UEFI/CSM-legacy problem).
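If you are unsure which way a given boot-menu entry actually starts the machine, you can boot any Linux live USB through that same entry and check. A Linux kernel started via UEFI exposes /sys/firmware/efi; one started via legacy/CSM does not. The helper name below is hypothetical.

```python
from pathlib import Path

def booted_in_uefi(sys_root="/sys"):
    """True if the running Linux kernel was started via UEFI (it exposes /sys/firmware/efi)."""
    return (Path(sys_root) / "firmware" / "efi").is_dir()

if __name__ == "__main__":
    print("UEFI boot" if booted_in_uefi() else "legacy/CSM boot")
```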

-

LSI SAS2008 - Make disk order follow enclosure places

IG-88 replied to exodius's topic in Developer Discussion Room

No, it's not random. If nothing on the disks changes, they will keep their positions. If a disk fails for some reason, the following disks will fill the gap, and if you add a replacement disk on the same cable on the controller, the new disk will be last in the order seen in DSM. That can be problematic when doing disk recovery and the disks are not in the same positions as before after a reboot. As long as the disk SN is shown, it's possible to deal with it. But with 6.2 there was a problem with Jun's original driver, and the one built from Synology's kernel source did not show the SN or SMART data; in that situation it can be dangerous.

Lots of people want fixed slots in the same way an original unit shows them, and that was a problem with the LSI SAS controllers (at least in 6.2). It might be different with DSM 7 and the redpill loader, as the loader might do things differently. I have not tested the RP loader and 7.x with LSI SAS to see if it's still the same.
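When the SN is visible, tracking disks by serial instead of by position is what keeps recovery safe. The sketch compares two `{position: serial}` snapshots, say one noted before a reboot and one after, and reports which disks moved or vanished. The serials and the function name are hypothetical.

```python
def moved_disks(before, after):
    """Compare two {position: serial} snapshots.

    Returns {serial: (old_position, new_position)}; new_position is None when
    the serial is missing from the second snapshot.
    """
    new_position = {serial: pos for pos, serial in after.items()}
    changes = {}
    for pos, serial in before.items():
        now = new_position.get(serial)
        if now != pos:
            changes[serial] = (pos, now)
    return changes

if __name__ == "__main__":
    before = {1: "SN_A", 2: "SN_B", 3: "SN_C"}
    after = {1: "SN_A", 2: "SN_C", 3: "SN_NEW"}  # SN_B failed, replacement added last
    print(moved_disks(before, after))
```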

I guess it's an Inspiron 530: https://linux-hardware.org/?probe=44903c7ee0. In that link we can see the PCI IDs of the devices and the drivers used. Storage is AHCI (at least when correctly set up in the BIOS). The network controller is only 10/100 MBit, and it's supported by a driver directly in DSM (at least for DS3615/3617), so in theory you should be able to use DSM 6.1/6.2 and even 7.0 (the e1000e driver is also part of TinyCore's driver set).

https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/

The first thing you can see there is that you don't have to try a loader for 916+/918+, because your CPU is too old for these. It also indicates that if there is a problem with UEFI/CSM mode, you can use loader 1.02b for DSM 6.1; that version works for both.

Start off with loader 1.02b for 3615, with no modifications to the loader or its files, and see if you can find it in the network (Synology Assistant, or maybe your DHCP server, which is often the internet router). If that works, you can modify the loader's grub.cfg to match the vendor and device ID of the USB stick you use for booting, and then try to install DSM 6.1 as in the tutorials.

If you want DSM 6.2, you can now try the same with loader 1.03b. Leave your working loader and disk as they are, use a new USB stick and do the same as above. First try without modding the loader and just look for it in your network; if that fails, you can try the same with the MBR-format loader that can be found here: https://xpenology.com/forum/forum/98-member-tweaked-loaders/

You can also try the new tutorials for DSM 7.x, like this one: https://xpenology.com/forum/topic/59956-redpill-tinycore-loader-installation-guide-for-dsm-701-baremetal/
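The vendor and device ID of the boot stick can be read from `lsusb` output on a Linux system, in its `ID vvvv:pppp` field. They are then written as `vid=0x...` / `pid=0x...` in grub.cfg, like the `vid=0x46f4 pid=0x0001` seen in a grub.cfg kernel line. This sketch converts one `lsusb` line. The example stick and the helper name are illustrative only.

```python
import re

LSUSB = re.compile(r"ID ([0-9a-fA-F]{4}):([0-9a-fA-F]{4})")

def grub_usb_ids(lsusb_line):
    """Turn an lsusb line into the vid=/pid= values used in the loader's grub.cfg."""
    match = LSUSB.search(lsusb_line)
    if not match:
        raise ValueError("no 'ID vvvv:pppp' field found")
    vid, pid = (part.lower() for part in match.groups())
    return f"vid=0x{vid}", f"pid=0x{pid}"

if __name__ == "__main__":
    print(grub_usb_ids("Bus 001 Device 003: ID 0781:5567 SanDisk Corp. Cruzer Blade"))
```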

-

In 7.0 there is a new kernel version, so no 6.2.x kernel modules will work there. If you want a system with security fixes, your best chance is the RP loader for 6.2.4; that way you can still use the old spk and even the old 6.2.3 driver. If you go 7.x, you would at least need the hpilo.ko driver for the platform in question (I use 918+ aka Apollo Lake myself, so I could provide a driver for that without much effort).

edit: the driver is for 7.0 918+ and might work on 3617/3622 too (same kernel version), but it's no use for 3615 (and as the HPE MicroServer Gen8 is only 3rd gen Intel, it can't run 918+).

hpilo.ko
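The reason old modules fail is that each module records the kernel release it was built for in its vermagic string. You can read that with `modinfo -F vermagic hpilo.ko` and compare it with `uname -r`. This sketch only compares the release field; the kernel also checks the remaining vermagic flags. The version strings in the test are example values, not taken from a specific DSM build.

```python
def vermagic_release(vermagic):
    """The first field of a module's vermagic string is the kernel release it was built for."""
    return vermagic.split()[0]

def module_matches_kernel(vermagic, running_release):
    """True if the module was built for the running kernel release (flags not compared)."""
    return vermagic_release(vermagic) == running_release

if __name__ == "__main__":
    import platform
    print(module_matches_kernel("4.4.180+ SMP preempt mod_unload", platform.release()))
```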

-

Thanks for doing so. It was like that with 3615/17 in DSM 6.2, and I had it on my list to test whether it's still the case with kernel 4.4 now in DSM 7.x. But as with 3615/17 in 6.2, there is still the option to compile additional NVIDIA drivers and use them instead of Intel QSV: https://xpenology.com/forum/topic/22272-nvidia-runtime-library/ (we do have DVA3221 with built-in NVIDIA, but that one is limited to 16 threads; 3617 and 3622 allow 24 threads, and if FS6400 gets support in TinyCore it will be 64 threads).

It might not be a question of modules. Presumably it's like with Hyper-V support, where you would need to create a special extension (module) in which code that's usually built into the kernel is made into a module to fix the missing dependencies. I'm not sure that would even be possible, and it might not be worth the effort. IMHO NVIDIA drivers and Jellyfin (as spk or Docker) might be a far better option to invest time in. Jellyfin as a 7.x spk already exists, and NVIDIA drivers for 3615/17 and 918+ on 6.2 were not that difficult to make (at least I was able to build the base *.ko files).

-

In theory it might work. hpilo.ko as a driver is part of kernel 4.4 and is no problem to build (all units we have for 7.x use that kernel, except 3615). Packages like "hp_ams-5.2_2.5.0-1.spk" will not work as-is, as 7.x prevents things like running a package as the root user. But it's possible to mimic the installation of the spk (it can be extracted, and there are scripts for installing and running) and then adapt it the way it has to be to run in 7.x. A native 7.x package might be better, but as long as there is none, it might be possible to make it work by installing the files manually.
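To see what such a package would do on install, you can list it before mimicking anything by hand. This sketch assumes an .spk is a tar archive with its install/run scripts under `scripts/`. Python's `tarfile` auto-detects compression when opening. `demo_spk` builds a made-up stand-in package, not a real one.

```python
import io
import tarfile

def spk_members(spk_bytes):
    """List member names of an .spk held in memory (compression is auto-detected)."""
    with tarfile.open(fileobj=io.BytesIO(spk_bytes)) as archive:
        return archive.getnames()

def spk_scripts(spk_bytes):
    """Only the install/run scripts, assumed to live under scripts/."""
    return [name for name in spk_members(spk_bytes) if name.startswith("scripts/")]

def demo_spk():
    """Build a tiny hypothetical .spk-like tar in memory (not a real package)."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w") as archive:
        for name in ("INFO", "package.tgz", "scripts/postinst", "scripts/start-stop-status"):
            data = b"placeholder\n"
            info = tarfile.TarInfo(name)
            info.size = len(data)
            archive.addfile(info, io.BytesIO(data))
    return buffer.getvalue()

if __name__ == "__main__":
    # With a real package: spk_scripts(open("hp_ams-5.2_2.5.0-1.spk", "rb").read())
    print(spk_scripts(demo_spk()))
```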