Search the Community

Showing results for 'Supermicro x7spa'.

-

Unfortunately, no. I couldn't get it working on the x30 series; I tried the R630/R730. I also tried an R620, where it installed immediately without any tweaking. So I had to switch to a Supermicro platform with an HBA card, and everything worked perfectly right away...

-

Tutorial: Install DSM 6.2 on ESXi 6.7

Arthur P Fleck replied to luchuma's topic in Tutorials and Guides

Hi guys, sorry if this has been asked before or is in the wrong place, but I was hoping you could help. It's not directly related to XPEnology, but I want to install ESXi and cannot get my box to recognize the arrays. At home I have a Supermicro X9SRL-F server (old, from 2014, but still good) with an Adaptec ASR7805 RAID controller connected to 8 hard drives. The machine itself has an Intel Xeon E5-2630L v2 CPU and 50GB RAM. I have tried many things with the box, but I can't get VMware installed. I have already tried 5.1 and 6.2, but somehow VMware does not see the arrays. Do you have any idea what this could be? I have created arrays in the controller's management tool, but when I boot the box from the CD, the installer does not see the drives it needs to install to. Windows 10 installed without any problem and saw the arrays immediately; only VMware does not see them. 😞

-

Hello, I'm trying to run XPEnology DSM 6.2.x on a Supermicro 3U CSE836TQ-R800B server with an X7DTI-F board (16x HDD slots, 24GB RAM, dual Xeon L5640)... Installation on a SATA SSD (on a motherboard SATA port) went well, and the system is stable and running fine, but the RAID adapter for the 16 front HDD slots wasn't recognized. It's an Adaptec ASR 71605; the system didn't see the adapter, and "aacraid.ko" is missing from /lib/modules. I'm not good enough with this Linux distribution to fix it myself; can someone please help me add support for the Adaptec RAID adapter? Thank you!

-

If I were you, I would just install ESXi 6.7 or 7.0 and run XPEnology on it, passing the hard disks through to the virtual machine. That way network drivers are not a problem, as you just run it with either the virtual Intel 1Gbps NICs or the VMware vmxnet3 virtual NICs. Granted, it's been a while since I did this, as I'm now running it directly on a Supermicro S9XCL mainboard, but when I ran it under ESXi it worked just fine.

-

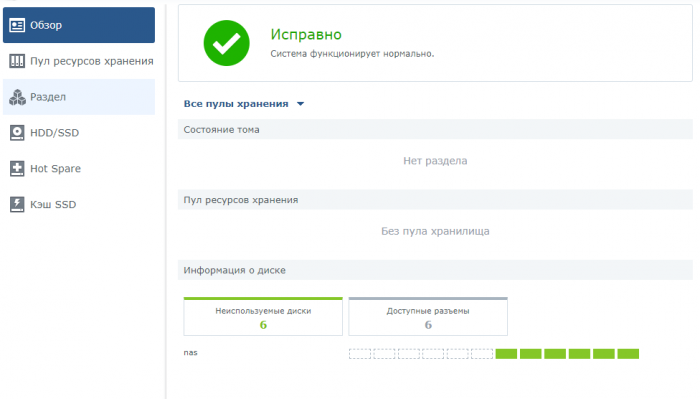

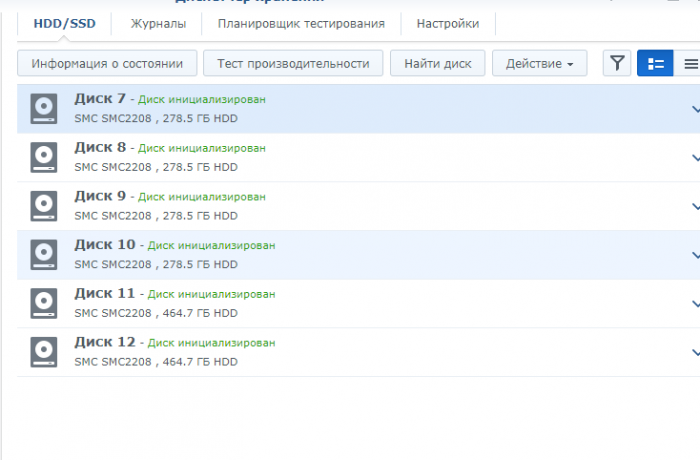

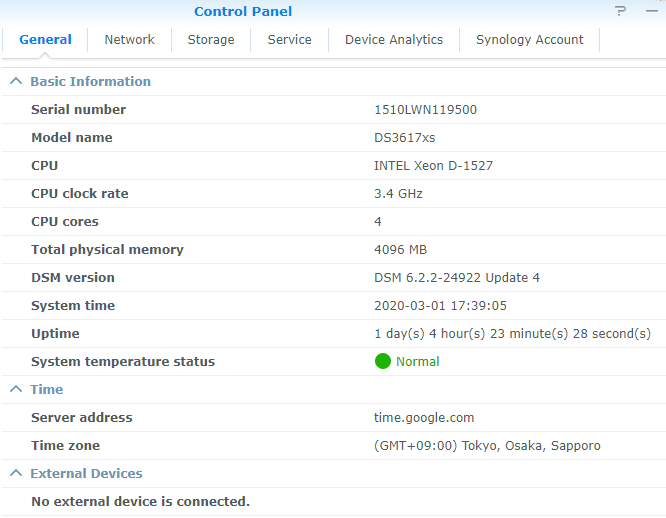

DS3615xs DSM 6.2.3-25426 Update 3. I don't remember which Supermicro platform it is; it has an LSI MegaRAID controller. There are 8 HDDs, and for each of the 8 a RAID0 containing a single disk has been created in the RAID controller. During boot the controller shows that it sees 8 disks and 8 arrays, but both DS3615xs and DS3617xs show only 6. Meanwhile, right next to it sits a ds3615xs box with DSM 6.1.7-15284 Update 3, which reports 8 available slots with 4 disks in use; it's the same at home, where the same build uses 8 disks. While fiddling with this thing, I tried installing TrueNAS (I wanted to check everything), and it saw all 8 disks without any problems. Sorry if this question has already been asked; I couldn't find it. Thanks in advance.

-

Hi Balrog, I just bought the exact same setup to replace my bare-metal setup on an HPE MicroServer Gen8 with an E3-1230 v2 and 16GB RAM. For the Gen10+ I have installed 64GB of ECC Micron DDR4-3200 EUDIMMs (MTA18asf4g72az-3g2b1). How is your setup holding up? I haven't installed the new Xeon yet (it just arrived today); the server is now running with the new memory and the Pentium G54XX it came with. So before I install the CPU, I would love to hear about your experience. Also, did you pass the HDDs through to XPEnology? In the past I ran some tests with VMDKs but had issues with several setups losing disks. On one of my other servers (Supermicro) I run ESXi 6.7 with FreeNAS as a VM, with the HBA passed through to FreeNAS, which serves its storage over NFS to the ESXi host on a dedicated internal network bridge. Then I run an XPEnology VM, mount the NFS storage in DSM, and use only one VMDK as volume1 for local installs. This is not my production XPEnology, but it works great. I chose this approach because if I want to snapshot the VM, let's say to test a new DSM version, I don't have to deal with large amounts of data, and even if something goes wrong, the most important data is on an NFS share that various systems can access simultaneously. For example, I can run sabnzbd in a VM that stores its data on the NFS share and access the same data from Plex on Synology (just an example). So now I'm wondering how I should approach my next setup: the same way I did on the Supermicro, or just pass the disks through to XPEnology and share some storage with the ESXi host via iSCSI or NFS. I'm also looking at and testing Proxmox. Anyhow, I would love to hear what you (or other members) think of it.

-

Hello everyone! I'm currently running 1.03b/3615xs/6.2.3 on an old HP workstation. I recently purchased a Supermicro 2U server and intend to use it for XPEnology going forward. It has an E3-1220 v3, so it's new enough for the 918+ loader. The backplane is currently connected to an LSI 9211-8i in IT mode. I'm also planning to add a Mellanox ConnectX-3 NIC for 10GbE. However, I'm having trouble figuring out the best build to run and what migration path that leaves me, as I'll be reusing my existing disks. From what I can tell, 6.2.3 is broken when using an LSI controller: either hibernation doesn't work, or waking up from hibernation causes corruption. Is this still accurate? If so, that leaves me running an older build of DSM. That wouldn't bother me, except that I plan on doing an "HDD Migration" using my existing disks, which I believe requires the destination machine to be on the same build as the source, or a newer one. Any thoughts?

-

Hello, I'm wondering whether anyone has already tried installing XPenology on a Supermicro PDSML-LN2+ motherboard (http://www.supermicro.com/products/moth ... ML-LN2.cfm), and therefore whether it can work... Thanks, everyone.

-

Hi. I have a Supermicro X8DTL-I. The latest 3615xs (6.2.3) installs and runs on it normally, and 3617xs version 6.2.2 also installs. When I install the latest 3617 with the v0.5_test loader, everything appears to work, but restart and shutdown from the web UI stop working. The board clearly shuts down and cuts power to the Ethernet ports and disks, but the fans keep spinning... Where should I dig, or is this just how things are for now? Thanks in advance.

-

918+ Can Not Create Hot Spare Drives "Operation Failed"

powerplyer replied to powerplyer's question in Answered Questions

@IG-88 Thank you so much for the feedback. I tried disabling all onboard SATA ports in the BIOS and hooked up 14x HDDs and 2x SSDs to the LSI controller. I still cannot create a hot spare. All the drives show up in Storage Manager. I tried modifying the synoinfo.conf file (20 slots), but that did not work either. I am also a bit concerned about having no SMART and no hot spare. I am going back to the 3617xs, which seemed to work solidly with my Supermicro X10SAE motherboard. Since I have an Intel® Xeon® CPU E3-1285L v4 @ 3.40GHz, I really wanted transcoding (mainly for Moments), but I'd rather have data integrity. I assume there are no modifications I can make to the 3617xs to enable HW transcoding?

-

Hi everyone, I have a custom-built 20-bay chassis with a Supermicro X9DRi-LN4F, 32GB DDR3 RAM, dual Xeon E5-2650L CPUs, 20x 10TB drives and a Mellanox 10Gb DAS card. What is the best way to install XPEnology on this hardware and actually get it working? I am messing with TrueNAS right now and just don't like the interface. I'm used to the QNAP and Synology OSes. Thanks!

-

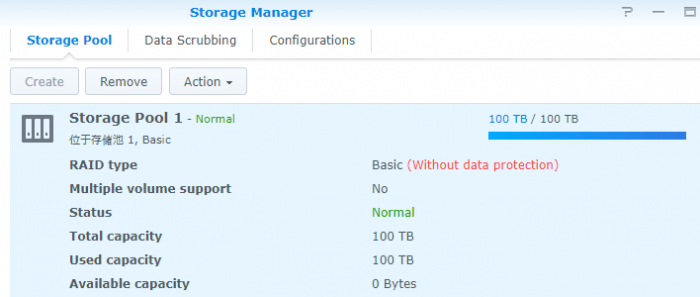

As a FreeBSD user, I use ZFS to store my data, but DSM provides some very nice features and apps, so I'd like to show how to run XPEnology DSM 6.2 on FreeBSD 12.1-RELEASE. The hardware configuration is a little special. Here is my build: 2x Xeon E5-2687v2, SuperMicro X9DRH-iF, 256GB RAM, and other stuff. Because bhyve only supports emulated e1000/virtio-net adapters, I pass a physical Ethernet interface through to the VM for better performance and stability. The key is that the motherboard/CPU must support VT-d; otherwise you have to choose a newer CPU platform (Xeon E5 v3 or above) to run DS918+, which supports e1000. I won't cover how to install FreeBSD/vm-bhyve or configure VT-d here. Using vm-bhyve, the VM config file is as follows:

    loader="uefi-csm"
    cpu=8
    cpu_sockets=1
    cpu_cores=4
    cpu_threads=2
    memory=4G
    ahci_device_limit="1"
    disk0_type="ahci-hd"
    disk0_name="ds3617-1.03b.img"
    disk1_type="ahci-hd"
    disk1_name="disk0.img"
    disk1_opts="sectorsize=4096/4096"
    debug="yes"
    passthru0="3/0/0"

Since I am running DS3617xs with the 1.03b loader, I use 8 vCPUs and 4GB RAM, plus two disks: one is the 50MB Jun loader and the other is the data disk, disk0.img. Note the ahci_device_limit="1" line: it tells vm-bhyve to use one SATA controller per disk; I'll explain the reason later. Since our HDDs are managed by FreeBSD's ZFS, a single data disk is enough here. We can make it large, such as 100TB, so we won't run into space issues in DSM later. So the VM folder will have 3 files:

    root@nas253:/zones/vm/jnas # ls -lh
    total 21798
    -rw------- 1 root wheel 100T Mar 1 08:27 disk0.img
    -rw-r--r-- 1 root wheel 50M Aug 1 2018 ds3617-1.03b.img
    -rwxr-xr-x 1 root wheel 255B Mar 1 08:26 jnas.conf

Before you start the VM, make sure you have a DHCP server running on your local network. Then start it:

    vm start jnas

After that, you can attach to the console with the following command:

    vm console jnas

and you will see the serial output:

    Running /usr/syno/etc/rc.d/J99avahi.sh...
    Starting Avahi mDNS/DNS-SD Daemon
    cname_load_conf failed:/var/tmp/nginx/avahi-aliases.conf
    :: Loading module hid ... [ OK ]
    :: Loading module usbhid ... [ OK ]
    ============ Date ============
    Sun Mar 1 08:30:59 UTC 2020
    ==============================
    starting pid 5957, tty '': '/sbin/getty 115200 console'
    Sun Mar 1 08:31:00 2020
    DiskStation login:

Once you see this, look up which IP address your DHCP server assigned, open that IP in a web browser, and continue the setup; the steps are the usual ones. Because the loader disk shows up inside DSM, modify grub.cfg and replace the following setting:

    set sata_args='DiskIdxMap=1F00'

Make sure the loader entry uses this parameter (select the ESXi entry in the GRUB boot menu, or modify the entry's settings). That's all, thanks. Here is an instance I have already been running for half a year. Thank you to the people who provided the loader!
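The grub setting above relies on DiskIdxMap, which, per the usual convention for Jun's loaders, takes two hex digits per SATA controller, in controller order, each giving the 0-based index of that controller's first disk. Because ahci_device_limit="1" puts the loader image and the data disk on separate controllers, 1F00 sends the loader to index 0x1F = 31 and the data disk to index 0. The minimal Python sketch below decodes such a value. The helpers decode_disk_idx_map and is_visible are hypothetical, and the rule that a disk index at or above maxdisks is not shown in Storage Manager is an assumption made for illustration.

```python
def decode_disk_idx_map(value: str) -> list[int]:
    """Split a DiskIdxMap string into per-controller first-disk indices.

    Assumed convention: two hex digits per SATA controller, in controller
    order, each giving the 0-based index of that controller's first disk.
    """
    if len(value) % 2 != 0:
        raise ValueError("expected two hex digits per controller")
    return [int(value[i:i + 2], 16) for i in range(0, len(value), 2)]


def is_visible(first_index: int, maxdisks: int) -> bool:
    """Assumption: indices at or beyond maxdisks fall outside the shown slots."""
    return first_index < maxdisks


if __name__ == "__main__":
    # bhyve VM above: controller 0 holds the loader, controller 1 the data disk.
    # 12 slots is the default the 3615/3617 loaders use.
    for ctrl, idx in enumerate(decode_disk_idx_map("1F00")):
        print(f"controller {ctrl}: first disk index {idx}, "
              f"visible with 12 slots: {is_visible(idx, 12)}")
```

Run as a script, it reports index 31 (hidden) for the loader controller and index 0 (visible) for the data disk.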

-

Intro/Motivation

This seems like hard-to-find information, so I thought I'd write up a quick tutorial. I'm running XPEnology as a VM under ESXi, now with a 24-bay Supermicro chassis. The Supermicro world has a lot of similar-but-different options. In particular, I'm running an E3-1225 v3 Haswell CPU, with 32GB memory, on an X10SLM+-F motherboard in a 4U chassis using a BPN-SAS2-846EL1 backplane. This means all 24 drives are connected to a single LSI 9211-8i based HBA, flashed to IT mode. That should be enough Google-juice to find everything you need for a similar setup!

The various Jun loaders default to 12 drive bays (3615/3617 models) or 16 drive bays (DS918+). If you increased maxdisks after install, this presents a problem when you update: you either have to design your volumes around those numbers, so that whole volumes drop off after an update until you re-apply the settings, or just deal with volumes being sliced and check integrity afterwards. Since my new hardware supports the 4.x kernel, I wanted to use the DS918+ loader, but update the patching so that 24 drive bays became the new default. Here's how. Or just grab the files attached to the post.

Locating extra.lzma/extra2.lzma

This tutorial assumes you've messed with the synoboot.img file before. If not, here is a brief guide to mounting it:

- Install OSFMount.
- Click the "Mount new" button and select synoboot.img.
- On the first dialog, choose "Use entire image file".
- On the main settings dialog, select the "mount all partitions" radio button under volume options, and uncheck "read-only drive" under mount options.
- Click OK.

You should now have three new drives mounted. Exactly where will depend on your system, but if you had a C/D drive before, probably E/F/G. The first readable drive has an EFI/grub.cfg file; this is what you usually customize, e.g. for the serial number. The second drive should have extra.lzma and extra2.lzma files, alongside some other things. Copy these somewhere else.
Unpacking, Modifying, Repacking

To be honest, I don't know why the patch exists in both of these files. Maybe one is applied during updates and one at normal boot time? I never looked into it. But the patch that sets the max disks count exists in these files, so we'll need to unpack them first. Some of these tools exist on macOS, and likely as Windows ports, but I just did this on a Linux system; spin up a VM if you need one. On a fresh system you likely won't have lzma or cpio installed, but apt-get should suggest the right packages.

Copy extra.lzma to a new, temporary folder and run:

    lzma -d extra.lzma
    cpio -idv < extra

In the new ./etc/ directory, you should see:

    jun.patch
    rc.modules
    synoinfo_override.conf

Open jun.patch in the text editor of your choice.

- Search for maxdisks. There should be two instances: one in the patch delta at the top, and one in a larger script below. Change the 16 to 24.
- Search for internalportcfg. Again, there are two instances. Change 0xffff to 0xffffff for 24 drives. This is a bitmask; there is more info elsewhere on the forums.

Open synoinfo_override.conf and change the 16 to 24, and 0xffff to 0xffffff.

To repack, run this in a shell at the root of the extracted files:

    (find . -name modprobe && find . \! -name modprobe) | cpio --owner root:root -oH newc | lzma -8 > ../extra.lzma

Note that the resulting file sits one directory up (../extra.lzma). Repeat the same steps for extra2.lzma.

Preparing synoboot.img

Just copy the updated extra.lzma/extra2.lzma files back where they came from, on the drives mounted by OSFMount. While you're in there, you might need to update grub.cfg, especially if this is a new install. For the hardware mentioned at the very top of the post, with a single SAS expander providing 24 drives, where synoboot.img is a SATA disk for a VM under ESXi 6.7, I use these sata_args:

    # for 24-bay sas enclosure on 9211 LSI card (i.e. 24-bay supermicro)
    set sata_args='DiskIdxMap=181C SataPortMap=1 SasIdxMap=0xfffffff4'

Close any Explorer windows or text editors, and click "Dismount all" in OSFMount. The image is ready to use.

If you're using ESXi and having trouble getting the image to boot, you can attach a network serial port, telnet in, and see what's happening at boot time. You'll probably need to disable the ESXi firewall temporarily, or open port 23. It's super useful.

Be aware that the 4.x kernel no longer supports extra hardware, so the network card will have to be officially supported. (I gave the VM a real network card via hardware passthrough.)

Attached Files

I attached extra.lzma and extra2.lzma to this post. They are both from Jun's Loader 1.04b with the above procedure applied to change the default drive count from 16 to 24.
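For readers who would rather script the synoinfo_override.conf edit than do it by hand, here is a minimal Python sketch. It also shows where 0xffffff comes from: with one bit per drive slot, N drives give a mask of 2^N - 1, so 16 gives 0xffff and 24 gives 0xffffff. It assumes the file uses synoinfo-style key="value" lines such as maxdisks="16"; internal_port_mask and set_drive_count are hypothetical helpers, not part of Jun's loader. jun.patch still needs the manual edits described above, since its layout is not handled here.

```python
import re


def internal_port_mask(drives: int) -> str:
    """One bit per internal slot: 16 -> '0xffff', 24 -> '0xffffff'."""
    return hex((1 << drives) - 1)


def set_drive_count(conf_text: str, drives: int) -> str:
    """Rewrite maxdisks and internalportcfg in key="value" lines.

    Assumes synoinfo-style lines such as maxdisks="16"; every other line
    is returned unchanged.
    """
    conf_text = re.sub(r'^maxdisks="[^"]*"', f'maxdisks="{drives}"',
                       conf_text, flags=re.MULTILINE)
    conf_text = re.sub(r'^internalportcfg="[^"]*"',
                       f'internalportcfg="{internal_port_mask(drives)}"',
                       conf_text, flags=re.MULTILINE)
    return conf_text


if __name__ == "__main__":
    sample = 'maxdisks="16"\ninternalportcfg="0xffff"\n'
    print(set_drive_count(sample, 24), end="")
```

Reading the real file, writing the result back, and then repacking with the cpio/lzma pipeline above would complete the step.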

-

As always, thank you very much, IG-88. I need 24 ports. My configuration:

- Supermicro X10 motherboard
- 6x SATA (internal ports)
- 16x SAS/SATA ports (2x LSI 9211-8i HBA)
- 2x USB 3.0 (for external drive connection)
- No eSATA ports
- 3617xs

Here is how I got it working. The key was not to mess with the usbportcfg settings.

    maxdisks="24"
    0000 0000 0000 0000 0000 0000 0000 0000 => esataportcfg    => "0x00000000"
    0000 0000 0011 0000 0000 0000 0000 0000 => usbportcfg      => "0x00300000"
    0000 1111 0000 1111 1111 1111 1111 1111 => internalportcfg => "0x0f0fffff"

FYI, I still haven't installed all the drives, but I verified that I "see" 24 open ports and that USB works as a USB device rather than as internal USB storage. I will update once I have the system up and running.
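The three masks above can be cross-checked with a short Python sketch. It assumes each bit stands for one disk slot (bit 0 is the first slot) and that a slot should be claimed by at most one of esataportcfg, usbportcfg and internalportcfg; mask_from_bits and overlapping_slots are hypothetical helpers. It rebuilds the two non-zero masks from the slot positions in the binary rows (USB on bits 20-21, internal on bits 0-19 and 24-27) and reports any slot claimed twice.

```python
def mask_from_bits(bits) -> int:
    """Build a port mask with the given 0-based slot positions set."""
    mask = 0
    for bit in bits:
        mask |= 1 << bit
    return mask


def overlapping_slots(*masks: int) -> int:
    """Return a mask of the slots claimed by more than one port type."""
    seen = 0
    clash = 0
    for mask in masks:
        clash |= seen & mask  # bits already claimed by an earlier mask
        seen |= mask
    return clash


if __name__ == "__main__":
    esata = 0x00000000
    usb = mask_from_bits([20, 21])
    internal = mask_from_bits(list(range(0, 20)) + list(range(24, 28)))
    print(f"usbportcfg={usb:#010x} internalportcfg={internal:#010x}")
    print("internal slots:", bin(internal).count("1"))
    print(f"overlap: {overlapping_slots(esata, usb, internal):#x}")
```

For this configuration the script prints usbportcfg=0x00300000, internalportcfg=0x0f0fffff, 24 internal slots and an overlap of 0x0, matching the values in the post.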

-

You should be aware that just because a slot is M.2 does not mean it supports M.2 SATA. It's up to the motherboard or other device. For example, my X570 motherboard supports two M.2 NVMe slots but they do not work with M.2 SATA. However, my Supermicro server board does support both on its slot. So check before buying M.2 SATA SSDs.

-

I am looking to back up my data to a couple of external drives. I bought a dual USB 3.0 docking station. When I plug it into my XPEnology 3615xs, it gets recognized under Storage Manager, but I do not see any devices to mount under Control Panel > External Devices. When I try to configure it under Storage Manager, I get an "Operation Failed" error, and the status shows "Not initialized". I tried formatting the drives as NTFS, EXT4 and FAT32. They are 16TB drives, and I have two of them in the docking station. I tried both the USB 2.0 and USB 3.0 ports. It works fine on my Windows PC. The actual model number of the docking station is ASMT ASM1156-PM (Y-3026RG). I am using DSM 6.2.3-25426 Update 2 on a Supermicro X10 motherboard. Any help would be appreciated.

-

- Outcome of the installation/update: SUCCESSFUL
- DSM version prior update: DSM 6.2.3-25426 Update 2
- Loader version and model: Jun v1.04b - DS918+
- Using custom extra.lzma: Yes, IG-88 v0.13.3
- Installation type: BAREMETAL - Intel Core i3-8300 - Supermicro X11SCH-F - 16GB - 8 x 4TB WD Red AFRX - 10Gb NIC (ASUS C100C / Aquantia C107) & 1Gb NIC (Intel i210)
- Additional Comment: Plex HW decoding OK / Reboot required

-

Hi everyone, I just built a custom server and am loading XPEnology on it. I have a 16-port LSI MegaRAID card in the server. The motherboard is a dual-socket Supermicro X9DRi-F with 32GB RAM and dual Xeon E5-2650L CPUs. Which loader should I download? I tried the 3617 synoboot and was able to get it loaded, but it couldn't find any of my drives except for the SSD that I have plugged into the regular SATA port on the motherboard. I am familiar with the Synology OS, went through everything, and couldn't find any of the drives. Right now I have 13x 10TB drives in the server. Any idea what I should do? Thanks!

-

Recently I upgraded my router to a QNAP QHora301W with 2x 10GbE network ports. To use the new 10GbE ports, I installed a Supermicro Intel X540-T2 network card in my XPEnology NAS. I then booted the system with the one onboard NIC (Intel I219V or Intel I211AT) connected, and confirmed that the system picked up the two new ports on the X540-T2. So I ran a CAT7 cable between the router and the NAS. With the cable connected, there are blinking lights on both sides and the router management page shows that a 10GbE link is established, yet the Synology side shows the port as disconnected. What steps can I take over SSH to troubleshoot this or try to get the ports working? Hardware configuration: Intel Core i7-8700T (ES), ASRock Z390M-ITX/ac, Supermicro Intel X540-T2. Bootloader configuration: Jun's v1.04b DS918+ with v0.13.3 with NVMe patch on DSM 6.2.3-25426 Update 3. Thanks.

-

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 6.2.3-25426-2
- Loader version and model: JUN'S LOADER v1.03b - DS3617xs
- Using custom extra.lzma: NO
- Installation type: BAREMETAL - Supermicro X10SDV-6C-TLN4F
- Additional comments: REBOOT REQUIRED, manual update

-

Motherboard: Supermicro X8DTU; CPUs: 2x E5620; RAM: Samsung DDR3-10600R, 24GB total

-

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 6.2.3-25426
- Loader version and model: JUN'S LOADER v1.04b - DS918+
- Using custom extra.lzma: NO
- Installation type: BAREMETAL - Supermicro X9SBAA-F
- Additional comments: REBOOT REQUIRED

-

- Outcome of the installation/update: SUCCESSFUL
- DSM version prior update: DSM 6.2.3-25426 Update 2
- Loader version and model: Jun v1.04b - DS918+
- Using custom extra.lzma: NO
- Installation type: BAREMETAL - Supermicro MBD-X11SCL-IF-O, mini-ITX, Intel C242 chipset, CPU Intel Core™ i3-9100F, RAM 32GB, HDD Seagate NAS 4*3=8TB (SHR), WD Ultrastar 12TB, SSD Intel 545s 512GB, Power Thermaltake TR-500P 500W, Back-UPS CS 650
- Additional Comment: Reboot required

-

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 6.2.3-25426 Update 2
- Loader version and model: Jun's Loader v1.04b - DS918+ (with NVMe cache)
- Using custom extra.lzma: Yes, v0.11 from IG-88
- Installation type: BAREMETAL - Supermicro X11SSZ-TLN4F + Intel Xeon E3-1245 v5
- Additional comment: NVMe cache working after update, all 4 NICs available (2x 1GbE + 2x 10GbE), 32GB ECC RAM available, 10GbE connection working. H/W transcoding also works using the driver extension for 918+ v0.11. Reboot required: No

-

I've never seen a unit IRL, but that looks like common server hardware: https://www.itpro.co.uk/640606/nimble-storage-cs240-review/page/0/2 "... Nimble opted for Supermicro's 6036ST-6LR Storage Bridge Bay platform. ... Motherboard: Supermicro X8DTS-F CPU: 2GHz Xeon E5504 Memory: 12GB DDR3 RAID controller: Supermicro AOM SAS2-L8 ..." The build seems to be pretty non-standard, but the controller is a good old LSI SAS2008-based one, which is what most people here have if they use a SAS controller, and it has no disk-size limit. The ones to avoid are the LSI SAS1068/1078: these have a 2.2TB hardware limit, so older Nimble versions might be a no-go if they used these older SAS1xxx chips. Besides this, you would need to check the storage hardware before buying; DSM does not support every storage controller, and the only universal option is AHCI support.
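The 2.2TB figure can be reproduced with a little arithmetic, assuming the limit comes from 32-bit logical block addressing with 512-byte sectors, which is the usual explanation for this ceiling on older controllers. The sketch below is illustrative only; addressable_bytes and fits are hypothetical helpers.

```python
def addressable_bytes(lba_bits: int, sector_bytes: int = 512) -> int:
    """Largest capacity reachable with lba_bits of sector address."""
    return (2 ** lba_bits) * sector_bytes


def fits(drive_bytes: int, lba_bits: int = 32, sector_bytes: int = 512) -> bool:
    """True if every sector of the drive can be addressed."""
    return drive_bytes <= addressable_bytes(lba_bits, sector_bytes)


if __name__ == "__main__":
    limit = addressable_bytes(32)
    print(f"{limit:,} bytes = {limit / 1e12:.2f} TB = {limit / 2**40:.0f} TiB")
    print("4 TB drive fits:", fits(4 * 10**12))
```

This prints 2,199,023,255,552 bytes = 2.20 TB = 2 TiB, and shows that a 4TB drive exceeds that range, which is why such controllers are a poor fit for modern disks.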