flyride (Moderator), Posts: 2,438, Days Won: 127

Everything posted by flyride

-

What was DiskIdxMap set to before (did it exist)? If that is how it is now, try DiskIdxMap=0008. Here's the text from the Kconfig:

"Add boot argument DiskIdxMap to modify disk name sequence. Each two characters define the start disk index of the sata host. This argument is a hex string and is related with SataPortMap. For example, DiskIdxMap=030600 means the disk name of the first host start from sdd, the second host start from sdg, and the third host start sda."
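
To make the hex arithmetic concrete, here is a small Python sketch that decodes a DiskIdxMap string into the first device name of each SATA host. It assumes Linux's usual sdX naming (sda to sdz, then sdaa, sdab, ...) and does not model how SataPortMap limits the number of ports per host. The function names are hypothetical and only for illustration.

```python
def sd_name(index: int) -> str:
    """Convert a zero-based disk index to a Linux sdX name (0 -> sda, 26 -> sdaa)."""
    if index < 0:
        raise ValueError("disk index must be non-negative")
    letters = ""
    n = index
    # Bijective base-26: a..z, then aa..az, ba..., as Linux names SCSI disks.
    while n >= 0:
        letters = chr(ord("a") + n % 26) + letters
        n = n // 26 - 1
    return "sd" + letters


def decode_disk_idx_map(value: str) -> list[str]:
    """Return the first disk name assigned to each SATA host, in host order (hypothetical helper)."""
    if len(value) == 0 or len(value) % 2 != 0:
        raise ValueError("DiskIdxMap must be a non-empty string of two-digit hex values")
    # Each pair of hex characters is the starting disk index for one host.
    return [sd_name(int(value[i:i + 2], 16)) for i in range(0, len(value), 2)]


if __name__ == "__main__":
    for setting in ("030600", "0008", "0800"):
        print(setting, decode_disk_idx_map(setting))
```

For example, 0008 starts the first host at sda and the second at sdi, while 0800 does the reverse.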

-

Disk hibernation/spindown is what folks are concerned about with 6.2.3. There are many reasons it may not work, but the most common is log messages written repeatedly to /var/log because of hardware status failures on non-Synology hardware. That's one of the reasons I've posted about filtering smart and scemd errors, but there are many possible errors and they differ depending on the platform you have installed. Unfortunately, no single, simple solution works for everyone.
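
One way to see which logs might be keeping the disks awake is to list files under /var/log that changed within the last few minutes. The Python sketch below does that. The function name and the 10-minute window are arbitrary choices for illustration, and it only reports modification times, not which process wrote each file.

```python
import os
import time
from typing import List, Optional, Tuple


def recently_modified(root: str, window_seconds: float,
                      now: Optional[float] = None) -> List[Tuple[float, str]]:
    """Return (age_seconds, path) for files under root modified within the window, newest first."""
    now = time.time() if now is None else now
    hits: List[Tuple[float, str]] = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mtime = os.stat(path).st_mtime
            except OSError:
                # Log rotation can remove files mid-scan; unreadable files are skipped too.
                continue
            age = now - mtime
            if age <= window_seconds:
                hits.append((age, path))
    hits.sort()  # smallest age (most recently written) first
    return hits


if __name__ == "__main__":
    # Hypothetical 10-minute window; run it while the disks should be idle.
    for age, path in recently_modified("/var/log", window_seconds=600):
        print(f"{age:7.0f}s ago  {path}")
```

Files that keep showing up with a small age are candidates for the kind of error filtering mentioned above.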

-

So mapping your drives out by controller and array, it looks like this. md2 is the array that includes the small drives (sda/b/c/d/j), and md3 is the array that incorporates the remaining storage on the large drives. The two arrays are joined and presented as a single Storage Pool. The larger the size difference between the small and large drives, the more writes go to the large array, so the small drives will be less busy (or even idle at times). This is a byproduct of how SHR/SHR2 is designed, not a fault.

Because of this, you haven't yet seen the actual write throughput of Disks 1-4. If they were underperforming for some reason, the performance impact would appear random and sporadic, because it would only show up when writes were going to the small array. What we are trying to figure out is whether one component, array, or even controller is performing abnormally slowly. You might want to repeat the drive evaluation using the dd technique from earlier instead of copying files from the Mac, and run it long enough that Disks 1-4 get used, since that stresses the disk system much more.

Just a random thought: is write cache enabled on the individual drives?
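
A simplified model of how SHR splits mixed-size drives into separate md arrays helps show why the small drives can sit idle. The Python sketch below assumes the commonly described SHR behaviour (each distinct drive size adds a tier that spans every drive at least that large, protected with one parity/mirror share for SHR or two for SHR2) and ignores DSM's system/swap partitions and exact partition sizes. It is not Synology's implementation, and the function names are hypothetical.

```python
from collections import Counter


def shr_tiers(sizes_tb: list[float]) -> list[tuple[float, int]]:
    """Return (tier_size_tb, member_count) per tier, smallest tier first (simplified model)."""
    counts = Counter(sizes_tb)
    tiers = []
    previous = 0.0
    remaining = len(sizes_tb)
    for size in sorted(counts):
        # Every drive at least this large contributes (size - previous) to this tier.
        tiers.append((size - previous, remaining))
        remaining -= counts[size]
        previous = size
    return tiers


def usable_capacity(sizes_tb: list[float], redundancy: int = 1) -> float:
    """Usable TB under the model: redundancy=1 approximates SHR, redundancy=2 SHR2."""
    total = 0.0
    for tier_size, members in shr_tiers(sizes_tb):
        # A tier needs more members than its redundancy to hold any data.
        if members > redundancy:
            total += (members - redundancy) * tier_size
    return total
```

For example, two 4 TB drives plus two 10 TB drives give a 4 TB tier across all four drives and a 6 TB tier across only the two large ones, so anything written into the upper tier touches only the large drives.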

-

DS918+ enables NVMe cache drive support and hardware transcoding using Intel Quick Sync. DS3617xs offers support for 16 CPU threads and RAID F1.

-

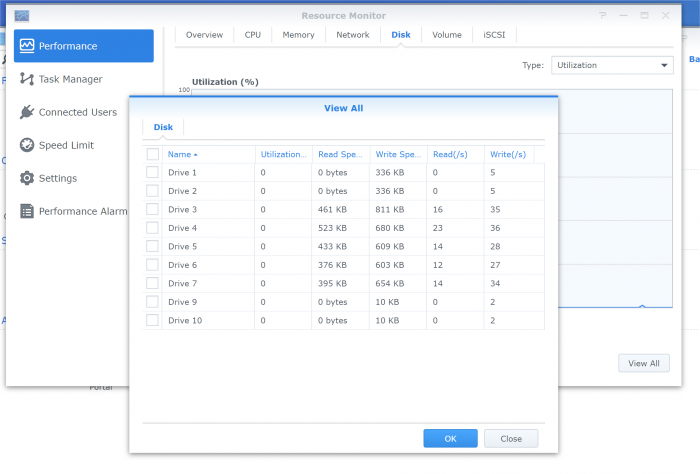

Array performance is definitely slow. You have plenty of CPU (there should be no penalty for btrfs or SHR2), and your disk throughput on writes is 1/3 of what it should be. Individual read performance seems okay, so something unusual is going on with writes. DSM has a Resource Monitor utility: launch it, go to Performance, Disk, then click View All, and you should get real-time I/O figures showing reads, writes, utilization, etc. on a per-disk basis. The panel can be expanded to show all your disks by dragging its top or bottom edge. Repeat the write tests above while watching this panel to see whether a particular drive is overloaded on writes or shows very different utilization from the others.
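
If you want a repeatable write test that bypasses the network, the Python sketch below approximates a dd run: it writes a fixed amount of data to a file, forces it to disk with fsync, and reports MiB/s. The function name, sizes, and target path are placeholders, not an established tool; point it at a scratch file on the volume under test and delete the file afterwards. It measures the volume as a whole, so watch Resource Monitor while it runs for the per-disk picture.

```python
import os
import time


def write_throughput(path: str, total_mb: int = 1024, block_kb: int = 1024) -> float:
    """Write total_mb MiB to path, fsync it, and return the throughput in MiB/s (hypothetical helper)."""
    block = os.urandom(block_kb * 1024)  # random bytes, so filesystem compression can't shrink the writes
    blocks = (total_mb * 1024) // block_kb
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(block)
        f.flush()
        os.fsync(f.fileno())  # include the time needed to get data onto the disks
    elapsed = time.perf_counter() - start
    return (blocks * block_kb / 1024) / elapsed
```

Usage, assuming a DSM volume mounted at /volume1: `print(write_throughput("/volume1/testfile.bin"))`. Use a total size well above the amount of RAM if you want to be sure caching isn't hiding the real disk speed.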

-

DSM usually modifies the boot loader during upgrades. The recent 6.2.3 "error 21" issue that required SynoBootFix happened because the loader was not accessible for the upgrade, due to incorrect initialization of the synoboot devices. But if an old loader encounters a newer DSM version, it is simply upgraded in place with no notification. So you can replace a loader stick with a fresh burn, and a working system should just boot.

-

There are some Linux commands that do this; that was the link in post #26.

-

I don't think I misread your question; I'm still trying to help with your request. Benchmarking the disk system (array) without the network is an important part of understanding what is going on. I was also going to ask you to test with and without SSD cache. You still don't know where the performance bottleneck is, but you want to reinstall DSM anyway. Your choice. The same version can be reinstalled directly from Synology Assistant. Packages and settings will need to be reconfigured. There's no need to reburn your loader USB.

-

The point of that test (or a NASPT test) is to separate the behavior of your disk system from the network or file type. That would tell you something about what is or isn't happening and whether it is a problem. You asked for help troubleshooting performance, but it sounds like you just want an affirmation to install again, so go for it.

-

Unless there is a clear smoking gun, why not figure out what's happening before making a big change? Maybe follow the process in this thread; it will help us understand your CPU and separate disk I/O from interaction with the network. It also has lots of good examples of representative systems that you can compare with. https://xpenology.com/forum/topic/13368-benchmarking-your-synology/

-

Did you just turn it off, or had you already turned it off? The SHR option makes sense. It might be worth running some synthetic tests now with something like NASPT for reference. https://www.intel.com/content/www/us/en/products/docs/storage/nas-performance-toolkit.html

-

Those look ok. Did you turn OFF "Record file access time frequency" on each of the volumes? (Storage Manager | Volume Select | Action | Configure | General) You didn't add encryption by chance? When you created the Storage Pool, did you pick the flexibility or performance option?

-

Well, you can start with a cat /proc/mdstat so you can see the state of your array and the member disks. Then you can do a read test on each disk to see how they are performing. Example here: https://xpenology.com/forum/topic/34553-weak-performance-optimization-possibilities/?do=findComment&comment=167497

-

Run a script at boot to initialize /dev/tun. See, for example, this post. You don't have to use WireGuard; the /dev/tun device is the same for all VPN clients. https://xpenology.com/forum/topic/30678-using-docker-wireguard-vpn-on-xpenologydsm

-

Synology certifies btrfs on Atom hardware and it's not that slow. So something isn't quite right. Start with lowest level hardware first, then move to higher-level structures. Is everything else the same? Have you tested the raw performance of each disk connected? What is the configuration and state of the array? What's the memory usage?
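
For the raw per-disk check, a sequential read timing like the Python sketch below is one option. It assumes you run it as root against a whole-disk device such as /dev/sda (a placeholder; match the names against /proc/mdstat first). It only reads, and it stops after a fixed number of MiB. The function name is hypothetical, and results can be inflated by the page cache if the same region was read recently.

```python
import time


def read_throughput(path: str, total_mb: int = 1024, block_kb: int = 1024) -> float:
    """Sequentially read up to total_mb MiB from path and return MiB/s (hypothetical helper)."""
    block_size = block_kb * 1024
    limit = total_mb * 1024 * 1024
    done = 0
    start = time.perf_counter()
    with open(path, "rb", buffering=0) as f:  # unbuffered: one read() call per block
        while done < limit:
            chunk = f.read(min(block_size, limit - done))
            if not chunk:
                break  # reached the end of the device or file
            done += len(chunk)
    elapsed = time.perf_counter() - start
    return (done / (1024 * 1024)) / elapsed if elapsed > 0 else 0.0
```

Running it once per member disk and comparing the numbers is a quick way to spot a single drive or controller port that is much slower than the rest.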

-

Assuming you are baremetal, DiskIdxMap=0800 in the loader ought to do it?

-

No, it has to run and create the devices on each boot. Security Advisor is useless. Just disable that check.

-

Not much. More recent patches. Better support for transcoding. Support for NVMe flash caching.

-

Whether virtualized or not, if you want hardware transcoding you need the DS918+ image, which limits you to 8 threads. To make the most of your CPU, turn off hyperthreading.

-

This has the information you are looking for. https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/

-

NAS Hardware compatible to install xpenology

flyride replied to Mary Andrea's topic in The Noob Lounge

That is curious, but our knowledge of this is based on community feedback. Yours is the first case I've seen documented of a 32-bit x86 processor working on 6.x. That said, I know that a 32-bit Linux ESXi VM does NOT work for DSM.

-

Yes, just burn a new, equivalently configured loader and it will boot back up with no issues.

-

x86 != x86-64

-

AFAIK an x86-64 CPU is required for the Linux kernel. Your chip is 32-bit.
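
On Linux you can check this from /proc/cpuinfo: x86 CPUs that support 64-bit (long) mode list the lm flag. A small Python sketch, assuming you can boot some Linux environment on the machine in question (the function name is hypothetical):

```python
def supports_x86_64(cpuinfo_text: str) -> bool:
    """Return True if any 'flags' line in /proc/cpuinfo text includes 'lm' (long mode)."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Split on whitespace so related flags like 'lahf_lm' are not mistaken for 'lm'.
        if sep and key.strip() == "flags" and "lm" in value.split():
            return True
    return False
```

Usage: `print(supports_x86_64(open("/proc/cpuinfo").read()))`. False means the CPU cannot run a 64-bit kernel.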