Showing results for 'detected that the hard drives'.

-

I lost all my data, so I rebuilt the loader I used before. Now some of my drives, the ones connected via the LSI HBA card, are showing as external drives. Any ideas how I can make them appear as internal drives so I can add them to my pool? The loader is ARPL.

-

Hi,

Currently I have a GA-B85M-DS3H motherboard. It has 4 x SATA3 + 2 x SATA2 ports, so it can support a total of 6 drives. The CPU is an Intel i7-4770 (socket 1150, Haswell architecture). This motherboard supports hot-swappable drives in the BIOS/UEFI. So, in order to take advantage of this feature, do I need to have 6 drives connected during DSM installation?

I am about to start my first installation of DSM 7.x. I have read the information about selecting the hardware (DSM 7.x Loaders and Platforms - Tutorials and Guides - XPEnology Community), but I have been a Windows user for many years and am not a Linux pro, so naturally I am a bit confused about these terms:

1) SataPortMap
2) DiskIdxMap
3) device tree

Questions:

1) If I select the apollolake architecture (DS918+), how many drives will be displayed within DSM? The number is 4, right?
2) Can apollolake (DS918+) support more than 4 drives, while cosmetically showing only 4?
3) What happens if I add an extra HBA in the PCI-E x16 slot, which can support 4 more drives? Is it the same issue, i.e. will it still only show 4 drives?
4) How do I map out the drives?
5) What does RAIDF1 support mean?

Thank you.
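For readers unsure what SataPortMap and DiskIdxMap look like in practice, here is a minimal sketch of the convention as it is commonly described for these loaders: SataPortMap carries one digit per SATA/AHCI controller (its port count), and DiskIdxMap carries one two-digit hex value per controller (the first DSM slot that controller's disks map to). The function name `build_port_maps` and the example controller layout are hypothetical, and whether a SAS HBA is covered by these two settings depends on the platform and loader, so treat this as an illustration rather than a recipe.

```python
def build_port_maps(ports_per_controller):
    """Return (SataPortMap, DiskIdxMap) strings for a list of port counts.

    Assumption: controllers are listed in the order the kernel enumerates
    them, and their disks are packed into consecutive DSM slots.
    """
    sata_port_map = []
    disk_idx_map = []
    next_slot = 0  # first DSM disk slot that has not been assigned yet
    for ports in ports_per_controller:
        if not 1 <= ports <= 9:
            # SataPortMap uses a single digit per controller in this sketch
            raise ValueError("this sketch handles 1 to 9 ports per controller")
        sata_port_map.append(str(ports))
        disk_idx_map.append(f"{next_slot:02X}")  # two hex digits per controller
        next_slot += ports
    return "".join(sata_port_map), "".join(disk_idx_map)


if __name__ == "__main__":
    # Hypothetical layout: 6 onboard ports plus a 4-port add-in AHCI card
    print(build_port_maps([6, 4]))
```

With a single 8-port controller the same function yields SataPortMap=8 and DiskIdxMap=00, which is the shape of the "Computed settings" that the TCRP satamap step prints.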

-

To restore the VERSION file, I would use a second DSM installation. Remove all drives and install a free/empty hard drive, maybe an old laptop drive, in slot 1 (far left), then install DSM on it. Then insert the first (leftmost) of the removed hard drives into the second slot while the system is running. Don't assemble the hard drives online or anything like that!

Then make the system partition available via SSH as root under the mount point "/mnt":

    mount /dev/sdb1 /mnt
    cd /mnt/etc.defaults

Copy a working VERSION file here, from the new DSM or its share, or repair it using the editor "vi". Then power off.

1. Remove the spare hard drive from slot 1.
2. Move the drive from slot 2 to slot 1 and switch the system on again to test.

The data volume will be missing, but if DSM boots up properly, it will be there again with all disks when you restart.

- 306 replies

-

- firmware

- 08-0220usb14

- (and 4 more)

-

Hello guys, noob here. I hope these questions are not too stupid, and greetings from Indonesia.

I'm currently using a DS112 and looking to upgrade, as it is already quite slow for me. I just need basic network storage functions: Download Station, Surveillance Station (for the future, 1 camera only), access from outside the network (like QuickConnect; I read that I can use the DDNS method), Docker (looking at PiHole or AdGuard for the network, 2 people only), adding more drives in the future (starting with 1 drive, no RAID needed), and most important of all, low power consumption (running 24/7, mostly as a media server and for Download Station).

I have a few questions, as I have not yet decided between Xpenology and other NAS OS options for my needs.

I found 2 HP ProDesk 400 G4 mid towers (500 GB HDD and 4 GB DDR4 RAM, 3 SATA ports, 2 x 3.5" hard drive slots); the first one uses an i3-6100 and the other an i5-6500. Which one should I get? I read that the i5-6500 does not support ECC and the i3-6100 does, but I don't know whether the motherboard supports it. If I read this forum correctly, ECC isn't important for Xpenology; I think power consumption is the most important part for me.

As I'm starting with 1 drive and not looking for RAID (mirror or anything), can I just add a drive when Xpenology is already running? I mean like on a computer: when the C drive is 90% full, I just buy another drive and add it to the computer. Or do I have to reconfigure the loader? I'm not familiar with how DSM works on multi-bay units, as I only have experience with the DS112. I'm looking for something like JBOD, as long as the newly added drive is detected and I can use it.

Basically, TL;DR: I'm looking for something much beefier than the DS112 in terms of processing time (like managing the data inside while running Download Station, as the DS112 is quite slow and makes me wait at times), space for more hard drives (I think I can live with the 3 SATA ports on the HP desktop), and the friendliness of the DSM GUI, while still having good power consumption and of course cheaper hardware than Synology's own. Any info or pointers to where I should be looking would help. Thank you in advance, and I hope my English is understandable.

EDIT: For the media server, I don't need transcoding, as I'm using an Android TV box.

-

Hi all,

I have been using Xpenology 7.1.1 on ESXi for a good while, but with the many changes to ESXi I would like to migrate to another hypervisor. I currently pass through an LSI 2008 HBA controller, so the VM has direct access to the disks.

I have installed Proxmox on a new boot drive on the same server as before. Proxmox is all set up, PCI passthrough is working, the VM migrated fine, and everything boots as it should. In the Xpenology VM now hosted by Proxmox, I am missing a few drives, which has put the original disk arrays into a bit of a state.

This is what I know: In ESXi you have two storage controllers, one for the RedPill boot virtual image and the other for any other virtual disks. I don't have any other virtual disks, as I am passing through the entire controller. In Proxmox, you can only have one SATA controller.

I think the number of SATA ports on the old virtual controller must be different from that of the new controller, putting all the disk mapping into disarray (pun intended). It has put all disks out of sync with respect to where DSM thinks they should be.

Has anybody successfully migrated an ESXi Xpenology VM to Proxmox whilst using PCIe passthrough? Has anybody got any suggestions?

Thank you in advance, Dan.

-

Hi all! It's my first topic in this forum and I'm not sure this is the right subforum, but here is my question: I can't create a volume on an HP Gen10 with an H240 controller card and 4 x 2 TB SAS drives. The system sees the disks, but there is no way to create a volume; after a couple of seconds, a message says that the volume cannot be created. On the original motherboard controller, however, I have no problem creating another RAID volume with SATA disks. My Xpenology is 7.2.1 DS3622xs+. Thanks for reading, and BIG THANKS to the whole community.

-

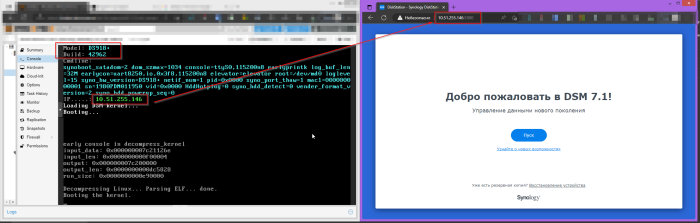

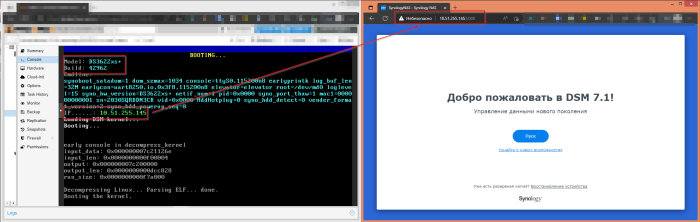

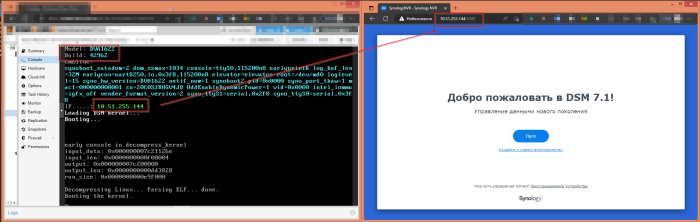

Hello. I'll go through everything in order.

I installed following this guide: https://github.com/fbelavenuto/arpl

Specifications of my PC: i5-12400, 16 GB RAM, Asus Prime B660M-A D4, 3 x Skyhawk 4 TB, 1 x M.2 NVMe SSD 250 GB Kingston NV1 SNVS/250G.

When I install using the ARPL method, the bootloader is written to my USB flash drive and everything is fine. But at the "Booting..." stage the computer stops responding, and there is no access by IP. Before that, during boot, an IP is obtained via DHCP and is visible for about 3 seconds, while the "Booting..." stage has not yet appeared.

I also tried this guide, but all to no avail: https://xpenology.com/forum/topic/62221-tutorial-installmigrate-to-dsm-7x-with-tinycore-redpill-tcrp-loader/

With this guide, at the ./rploader.sh satamap step, it reports bad ports 1 2 3 4:

    Found "00:17.0 Intel Corporation Device 7ae2 (rev 11)"
    Detected 8 ports/3 drives. Bad ports: 1 2 3 4. Override # of ports or ENTER to accept <8>
    Computed settings:
    SataPortMap=8
    DiskIdxMap=00
    WARNING: Bad ports are mapped. The DSM installation will fail!

I do not know what else to do in this situation, because with the ARPL method it freezes at the loading stage, and with this method I can't make a flash drive because I get an error at the satamap step; if I run the build anyway, it freezes at the flash drive build stage. Please help me, what can I do?
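When comparing satamap results across several machines or loader builds, it can help to pull the numbers out of the summary line instead of reading them by eye. The sketch below parses only the "Detected ... ports/... drives. Bad ports: ..." line exactly as quoted in the post; the function name `parse_satamap_summary` is hypothetical, and other versions of rploader.sh may word their output differently.

```python
import re

# Matches the summary line as quoted in the post; the "Bad ports" part is optional.
_SUMMARY = re.compile(
    r"Detected (\d+) ports/(\d+) drives\.(?:\s*Bad ports:\s*([\d\s]+)\.)?"
)


def parse_satamap_summary(line):
    """Return port count, drive count and bad port numbers, or None if no match."""
    match = _SUMMARY.search(line)
    if match is None:
        return None
    bad = match.group(3)
    return {
        "ports": int(match.group(1)),
        "drives": int(match.group(2)),
        "bad_ports": [int(p) for p in bad.split()] if bad else [],
    }


if __name__ == "__main__":
    quoted = ("Detected 8 ports/3 drives. Bad ports: 1 2 3 4. "
              "Override # of ports or ENTER to accept <8>")
    print(parse_satamap_summary(quoted))
```

A non-empty `bad_ports` list corresponds to the "Bad ports are mapped" warning in the quoted output, which is the condition the poster is stuck on.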

-

I'm trying to update my Synology_HDD_db script, but I've been having issues getting E10M20-T1, M2D20 and M2D18 working in DS1823xs+, DS1821+ and DS1621+ in DSM 7.2-64570-U2 and later. These 3 models all use device tree, have 2 internal M.2 slots and do not officially support E10M20-T1, M2D20, M2D18 or M2D17.

Setting the power_limit in model.dtb to 100 for each NVMe drive does not work for these models. The only way I can get more than 2 NVMe drives to work in DS1823xs+, DS1821+ and DS1621+ is to replace these 2 files with versions from 7.2-64570 (which I'd rather not do):

    /usr/lib/libsynonvme.so.1
    /usr/syno/bin/synonvme

And there are error messages in synoscgi.log, synosnmpcd.log and synoinstall.log.

With 3 NVMe drives installed the logs contain:

    nvme_model_spec_get.c:90 Incorrect power limit number 3!=2

With 4 NVMe drives installed the logs contain:

    nvme_model_spec_get.c:90 Incorrect power limit number 4!=2

I suspect that the DS1823xs+, DS1821+ and DS1621+ have the number of M.2 drives hard coded to 2 somewhere.

If I restore these 2 files to the versions from 7.2.1-69057-U1

    /usr/lib/libsynonvme.so.1
    /usr/syno/bin/synonvme

and add the default power_limit 14.85,9.075, the internal M.2 slots show in Storage Manager and the fans run at normal speed. The "Incorrect power limit" log entries are gone, but synoscgi.log now contains the following for NVMe drives in the E10M20-T1:

    synoscgi_SYNO.Core.System_1_info[24819]: nvme_model_spec_get.c:81 Fail to get fdt property of power_limit
    synoscgi_SYNO.Core.System_1_info[24819]: nvme_model_spec_get.c:359 Fail to get power limit of nvme0n1
    synoscgi_SYNO.Core.System_1_info[24819]: nvme_slot_info_get.c:37 Failed to get model specification
    synoscgi_SYNO.Core.System_1_info[24819]: nvme_dev_port_check.c:23 Failed to get slot informtion of nvme0n1
    synoscgi_SYNO.Core.System_1_info[24819]: nvme_model_spec_get.c:81 Fail to get fdt property of power_limit
    synoscgi_SYNO.Core.System_1_info[24819]: nvme_model_spec_get.c:359 Fail to get power limit of nvme1n1
    synoscgi_SYNO.Core.System_1_info[24819]: nvme_slot_info_get.c:37 Failed to get model specification
    synoscgi_SYNO.Core.System_1_info[24819]: nvme_dev_port_check.c:23 Failed to get slot informtion of nvme1n1

I get the default power_limit 14.85,9.075 for the DS1823xs+, DS1821+ and DS1621+ with:

    cat /sys/firmware/devicetree/base/power_limit | cut -d"," -f1

Apparently other models don't have /sys/firmware/devicetree/base/power_limit
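The "3!=2" and "4!=2" log lines read as if the number of NVMe drives is being compared against the number of comma-separated entries in power_limit. The sketch below only illustrates that reading: both function names are hypothetical, and the comparison is a guess inferred from the log text, not taken from libsynonvme. It works on a string you pass in (for example the content of /sys/firmware/devicetree/base/power_limit) and does not touch the system.

```python
def parse_power_limit(raw):
    """Split a power_limit property such as '14.85,9.075' into floats.

    Device tree string properties are usually NUL-terminated, so trailing
    NUL bytes and whitespace are stripped first.
    """
    text = raw.rstrip("\x00\n ")
    return [float(entry) for entry in text.split(",") if entry]


def check_power_limit(raw, nvme_count):
    """Return a message shaped like the logged error, or None if counts match.

    Assumption: the real check compares drive count with entry count.
    """
    limits = parse_power_limit(raw)
    if len(limits) != nvme_count:
        return f"Incorrect power limit number {nvme_count}!={len(limits)}"
    return None


if __name__ == "__main__":
    default = "14.85,9.075\x00"  # default value quoted in the post
    print(parse_power_limit(default))
    print(check_power_limit(default, 3))
```

If that reading is right, a property with two entries would only ever satisfy a two-drive configuration, which fits the suspicion in the post that the count is fixed at 2 for these models.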

-

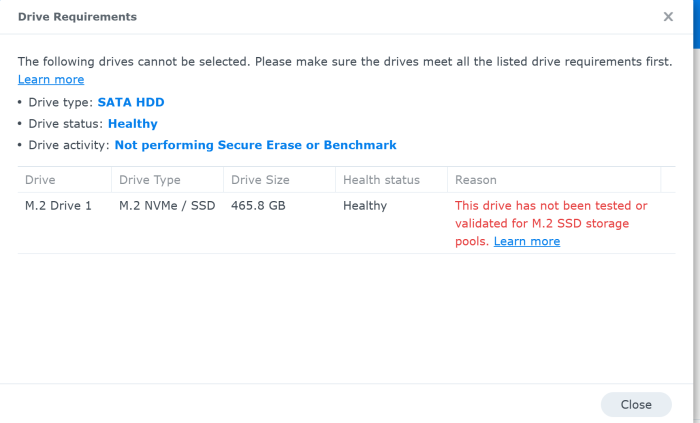

Everyone knows that the DS923+ model supports using NVMe drives as storage pools. By comparing synoinfo.conf, I found that this option is the key to enabling the feature:

    support_m2_pool="yes"

I used SA6400 for testing. I can indeed see the NVMe drive in the drive list, but when I try to add it as a storage pool, I run into an annoying compatibility warning, as shown in the screenshot. I also tried to turn off the drive compatibility check with the following setting:

    support_disk_compatibility="no"

I also added the model and compatibility entries for my drive to almost all files in the /var/lib/disk-compatibility directory, but the page still shows the compatibility warning. Does anyone have any better suggestions?
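The two settings quoted in the post are plain key="value" lines. As a rough sketch of how such a line can be changed or appended without disturbing the rest of the file, the helper below operates on the file content as a string; the name `set_option` is hypothetical, and the sketch says nothing about whether DSM honours the change, which is exactly the open question in the post.

```python
import re


def set_option(conf_text, key, value):
    """Return conf_text with key="value" set, replacing an existing line if present."""
    new_line = f'{key}="{value}"'
    pattern = re.compile(rf"^{re.escape(key)}=.*$", re.MULTILINE)
    if pattern.search(conf_text):
        # A function is used as replacement so backslashes in value stay literal
        return pattern.sub(lambda _match: new_line, conf_text)
    # Key not present: append it, making sure it starts on its own line
    separator = "" if (not conf_text or conf_text.endswith("\n")) else "\n"
    return conf_text + separator + new_line + "\n"


if __name__ == "__main__":
    sample = 'support_m2_pool="no"\nmaxdisks="4"\n'  # made-up sample content
    sample = set_option(sample, "support_m2_pool", "yes")
    sample = set_option(sample, "support_disk_compatibility", "no")
    print(sample)
```

Working on a copy of the file and diffing it against the original before writing anything back keeps the change easy to undo.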

-

Hi everyone!

Following the example of the DSM 6.x Proxmox Backup Template, I have made clean backups of several Xpenology installations on Proxmox. All installations were made via the Automated Redpill Loader by fbelavenuto.

Download link: https://mega.nz/folder/42RmGBgR#GPZIL371zNE3uTt14CmY2A

WARNINGS!

DO NOT TRY TO UPDATE FROM 6.x to 7.x WITHOUT FULL BACKUP!

DO NOT TRY TO UPDATE 7.x to NEW VERSIONS WITHOUT FULL BACKUP!

How to use these backups:

1. Choose the correct platform according to the table in this topic: DSM 7.x Loaders and Platforms
2. Download the backup
3. Put the backup into your BACKUP STORAGE in Proxmox (e.g. local -> /var/lib/vz/dump)
4. Open the WEB UI
5. Open STORAGE in the WEB UI
6. Choose the backup that was downloaded
7. Click on Restore
8. IMPORTANT! Choose the storage where the backup will be restored (e.g. local)

There are several important points:

- TWO network interfaces. The first NIC is disabled. This was done because for DSM the first device must have a suitable MAC, which is fixed in Grub. If you need multiple instances of DSM (as I do), it's costly to modify Grub every time. So the second NIC uses any MAC address from Proxmox.
- TWO hard disk drives. The first disk is the bootable disk made by the Automated Redpill Loader! Don't try to use it as storage (maybe it's painful, I haven't checked). The second drive is the system drive where DSM has already been installed.
- Second drive capacity: 11 GB! All space on this drive is used by the Synology DSM firmware. You can extend this partition or create new hard disks for making a storage pool.
- Boot drive: Sata0 (where the loader is installed).

Implemented addons:

- DS3622xs+ -> misc powersched 9p acpid
- DS918+ -> i915-8th i915-10th misc powersched 9p acpid
- DVA1622 -> i915-10th misc powersched 9p acpid
- DVA3221 -> misc powersched 9p acpid

Big thanks to:

- pocopico -> TinyCore RedPill Loader (TCRP) - Loaders - XPEnology Community
- fbelavenuto -> Automated RedPill Loader (ARPL) - Loaders - XPEnology Community
- flyride -> DSM 7.x Loaders and Platforms - Tutorials and Guides - XPEnology Community
- Joyz -> Установка DSM 7.1.1 на Proxmox - Виртуализация - XPEnology Community (Installing DSM 7.1.1 on Proxmox - Virtualization)

Some screenshots: DS918+ DS3622xs+ DVA1622 DVA3221

-

new sata/ahci cards with more then 4 ports (and no sata multiplexer)

vbz14216 replied to IG-88's topic in Hardware Modding

Well well well, the WD drives mentioned a few months ago are still OK. But 1 of my 2 HGST 7K1000 drives without noncq, which had run fine for around a year, suddenly got kicked out of its own RAID1 array (within its own storage volume), degrading the volume. At that time I was testing Hyper Backup to another NAS; the backup finished without issues, so I still have a known good backup in case anything went wrong.

dmesg and /var/log/messages listed some (READ) FPDMA QUEUED errors, and the ATA link was reset twice before md decided to kick the partitions out. I powered down the NAS, used another PC to make sure the drive was all good (no bad sectors) and erased the data in preparation for a clean array repair. Before loading the kernel, I added more noncq parameters to libata for all remaining SATA ports.

Deactivate the drive, then power cycle to have it recognized as an unused drive. The array was successfully repaired after some time, followed by a good BTRFS scrub.

Analysis: This drive simply went "Critical" without any I/O error or bad sector notice in Log Center. /proc/mdstat showed the drive (sdfX) got kicked out of md0 (system partition) and md3 (my 2nd storage array; mdX is whichever your array is). Interestingly enough, md1 (swap) was still going, indicating the disk was still recognized by the system (instead of being a dead drive).

    root@NAS:~# cat /proc/mdstat
    Personalities : [raid1]
    md3 : active raid1 sdd3[0] sdf3[1](F)
          966038208 blocks super 1.2 [2/1] [U_]
    md2 : active raid1 sde3[3] sdc3[2]
          1942790208 blocks super 1.2 [2/2] [UU]
    md1 : active raid1 sdf2[0] sdd2[3] sde2[2] sdc2[1]
          2097088 blocks [12/4] [UUUU________]
    md0 : active raid1 sde1[0] sdc1[3] sdd1[2] sdf1[12](F)
          2490176 blocks [12/3] [U_UU________]

The other drive of the identical model in the same RAID 1 array has a different firmware and fortunately didn't suffer from this, preventing a complete volume crash.

Upon reading /var/log/messages, I assume md prioritized the dropped drive for reading data from the array, which caused the drive to get kicked out in the first place:

Continuing with a BTRFS warning, followed by md doing its magic by rescheduling (switching over for redundancy):

There's no concrete evidence on what combination of hardware, software and firmware can cause this, so there isn't much point in collecting setup data.

Boot parameter for disabling NCQ on select ports (or simply libata.force=noncq to get rid of NCQ on all ports):

    libata.force=X.00:noncq,Y.00:noncq

dmesg will say NCQ (not used) instead of NCQ (depth XX).

This mitigates libata NCQ quirks at the cost of some multitasking performance. I only tried RAID 1 with HDDs, so I'm not sure if this affects RAID 5/6/10 or Hybrid RAID performance.

NCQ bugs can also happen on SSDs with queued TRIM, which is reported as problematic on some SATA SSDs. A bugzilla thread on NCQ bugs affecting several Samsung SATA SSDs: https://bugzilla.kernel.org/show_bug.cgi?id=201693

It's known that NCQ does have some effects on TRIM (for SATA SSDs). That's why libata also has noncqtrim for SSDs, which doesn't disable NCQ as a whole but only queued TRIM. Do note that writing 1 to /sys/block/sdX/device/queue_depth may not be the solution for mitigating the queued TRIM bug on SSDs, as someone in that thread stated it doesn't work for them until the noncq boot parameter is used. (I suppose noncqtrim should just do the trick; this was the libata quirk for those drives.)

Since this option doesn't seem to cause data integrity issues, maybe it can be added as an advanced debug option for the loader assistant/shell? Dunno which developer to tag. I suspect this is one of the overlooked causes of random array degrades/crashes besides bad cables/backplanes.

For researchers: /drivers/ata/libata-core.c is the one to look into. There are some (old) drives that have mitigation quirks applied.

Still... I do not take responsibility for data corruption, use at your own risk. Perform a full backup before committing anything.
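The two manual steps in the NCQ post above, spotting members marked (F) in /proc/mdstat and writing out a libata.force string, can be sketched as follows. The function names are hypothetical, the sample text is the mdstat output quoted in the post, and the port numbers passed to `noncq_param` stand in for the X and Y placeholders in the post's parameter; which ATA port a given drive sits on still has to be read from dmesg.

```python
import re

# mdstat output as quoted in the post
SAMPLE_MDSTAT = """\
Personalities : [raid1]
md3 : active raid1 sdd3[0] sdf3[1](F)
      966038208 blocks super 1.2 [2/1] [U_]
md2 : active raid1 sde3[3] sdc3[2]
      1942790208 blocks super 1.2 [2/2] [UU]
md1 : active raid1 sdf2[0] sdd2[3] sde2[2] sdc2[1]
      2097088 blocks [12/4] [UUUU________]
md0 : active raid1 sde1[0] sdc1[3] sdd1[2] sdf1[12](F)
      2490176 blocks [12/3] [U_UU________]
"""


def failed_members(mdstat_text):
    """Map each md array to the member partitions flagged (F) as failed."""
    failed = {}
    for line in mdstat_text.splitlines():
        match = re.match(r"(md\d+)\s*:\s*(.*)", line.strip())
        if match is None:
            continue  # not an array header line
        members = re.findall(r"(\w+)\[\d+\]\(F\)", match.group(2))
        if members:
            failed[match.group(1)] = members
    return failed


def noncq_param(ports):
    """Build a libata.force value disabling NCQ on the given ATA port numbers.

    An empty list yields the global form that disables NCQ on all ports.
    """
    if not ports:
        return "libata.force=noncq"
    return "libata.force=" + ",".join(f"{port}.00:noncq" for port in ports)


if __name__ == "__main__":
    print(failed_members(SAMPLE_MDSTAT))
    print(noncq_param([5, 6]))  # hypothetical port numbers
```

For the quoted output this reports sdf3 in md3 and sdf1 in md0, matching the analysis that the same physical drive was dropped from the system partition and the storage array while its swap partition in md1 stayed active.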

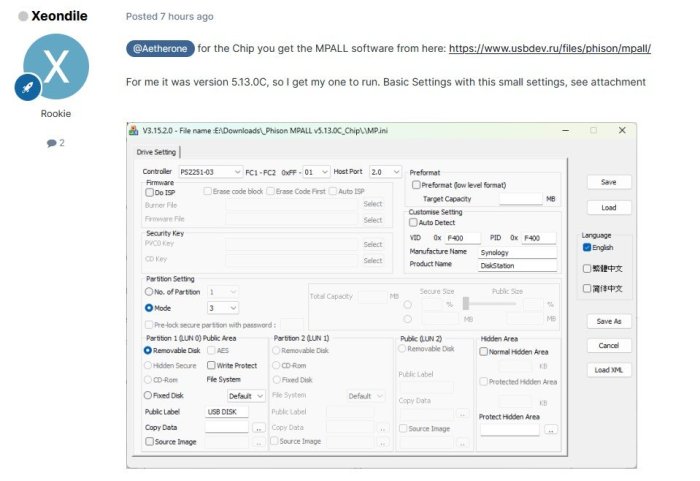

HA! After a whole lot of fiddling I got the right combination of files to flash my Phison drives to F400. Turns out the various packages on usbdev.ru are incomplete - I needed "Phison_MPALL_v5.13.0C.rar" for an operational flashtool AND "firmware_ps2251-03.rar" for the correct firmware files to flash my units. Thanks to @Xeondile for the correct settings once this lot was cobbled together. BEFORE AFTER The settings once the firmware BIN was loaded into the folder. As above, some caution would be advised - a small number of online virus checkers flag these as potentially hostile but none of them can agree on exactly what the malware is so I'm not convinced it's a false positive given what these programs are designed to do. Still, be careful & use them offline, preferably from a disposable temporary Windows install.

- 306 replies

-

- firmware

- 08-0220usb14

- (and 4 more)

-

- 37 replies

-

- boot

- transplant

-

(and 2 more)

Tagged with:

-

DSM 6.2.3 will not work with these drivers. If you install or update, you will fall back to the "native" drivers that come with DSM, which means e.g. no Realtek NIC on 3615/17 (but on 918+), no mpt2sas/mpt3sas on 918+, or no Broadcom onboard NIC on HP Microserver or Dell servers.

Read this if you want to know about "native" drivers: https://xpenology.com/forum/topic/13922-guide-to-native-drivers-dsm-617-and-621-on-ds3615/

Synology reverted the changes made in 6.2.2, so the old drivers made for 6.2.(0) are working again, and there are new drivers made for 6.2.3 too (we got recent kernel source from Synology lately): https://xpenology.com/forum/topic/28321-driver-extension-jun-104b-for-dsm623-for-918/

This is the new 2nd test version of the driver extension for loader 1.04b and 918+ DSM 6.2.2. The network drivers for Intel and Realtek are now all the latest and the same as in the 3615/17 set from mid-December (the Broadcom tg3 driver is also working). It tries to address the problems with the different GPUs by having 3 versions of the pack.

Additional information and packages for 1.03b and 3615/3617 are in the lower half under a separate topic (I will unify the 918+ and 3615/17 parts later, as they are now on the same level again).

Mainly tested as a fresh install with the 1.04b loader and DSM 6.2.2. There are extra.lzma and extra2.lzma in the zip file, and you need both: the "extra2" file is used when booting the first time, and under normal working conditions extra.lzma is used (I guess also for normal updates; Jun left no notes about that, so I had to find out and guess).
Hardware in my test system that used additional drivers: r8168, igb, e1000e, bnx2x, tn40xx, mpt2sas.

The rest of the drivers just load without any comment on my system. I've seen drivers crashing only when real hardware is present, so be warned. I assume any storage driver besides ahci and mpt2sas/mpt3sas is not working, so if you use any storage other than that, you need to do a test install with a new USB drive and a single empty disk to find out before doing anything with your "production" system.

I suggest testing with a new USB drive and an empty disk; if that's OK, then you have a good chance for updating.

For updating, it's the same as below in the 3615/17 section with case 1 and 2, but you have extra.lzma and extra2.lzma and you will need to use https://archive.synology.com/download/DSM/release/6.2.2/24922/DSM_DS918+_24922.pat

Most important is to have zImage and rd.gz from the "DSM_DS918+_24922.pat" file (can be opened with 7zip) together with the new extra/extra2; it is the same procedure as for the new extra for 3615/17 (see below). All 4 files, extra.lzma, extra2.lzma (both extracted from the downloaded zip), zImage and rd.gz, go to the 2nd partition of the USB drive (or of the image when using OSFMount), replacing the 4 files there.

If you want the "old" files of the original loader back, you can always use 7zip to open the img file from Jun and extract the original files for copying them to USB.

If you really want to test with a running 6.2.x system, then you should empty /usr/lib/modules/update/ and /usr/lib/firmware/i915/ before rebooting with the new extra/extra2:

    rm -rf /usr/lib/modules/update/*
    rm -rf /usr/lib/firmware/i915/*

The loader will put its files in those locations when booting again. This step prevents having old incompatible drivers in those locations, as the loader replaces only files that are listed in rc.modules, and in the case of "syno" and "recovery" there are fewer entries, leaving out i915-related files. As long as the system boots up, this cleaning can be done with the new 0.8 test version.

There are 3 types of driver package. All come with the same drivers (latest NIC drivers for Realtek and Intel) and the same conditions/limitations as the 3615/17 driver set from mid-December (storage mainly untested; ahci and mpt3sas are tested).

1. "syno" - all extended i915 stuff removed and some firmware added for max compatibility, mainly for "iGPU gen9" (Skylake, Apollo Lake and some Kaby Lake) and older, and for cases where std did not work. i915 driver source date: 20160919. Positive feedback for J3455, J1800 and N3150.

2. "std" - with Jun's i915 driver from 1.04b (tested for Coffee Lake CPUs from Q2/2018), needed for anything newer than Kaby Lake, like Gemini Lake, Coffee Lake, Cannon Lake, Ice Lake. i915 driver source date: 20180514. As I had no source, the i915 driver is the same binary as in Jun's original extra/extra2. On my system it is working with a G5400: not just /dev/dri present, but tested completely by really transcoding a video. So it's working in general but might fail in some(?) cases. 8th/9th gen CPUs like the i3/i5 8100/9400 also produce a /dev/dri; tested with a 9400 and it does work.

3. "recovery" - mainly for cases where the system stops booting because of the i915 driver (seen on one N3150 Braswell). It overwrites all GPU drivers and firmware with files of 0 size on booting so they can't be loaded anymore. It should also work for any system above, but guarantees not having /dev/dri, as even the firmware used by DSM's own i915 driver is invalid (on purpose). If that does not work, it's most likely a network driver problem. Safe choice, but no transcoding support.

Start with syno, then std; the last resort would be recovery.

Anything with a kernel driver oops in the log is "invalid", as it will result in shutdown problems, so check /var/log/dmesg.

The often-seen Gemini Lake GPUs might work with "std", pretty sure not with "syno". Most (all?) testers with Gemini Lake were unsuccessful with "std", so if you don't like experimenting and need hardware transcoding, you should stay with the version you have for now.

The "_mod" at the end of the loader name below is a reminder that you need to do some "modding", as in making sure you have zImage and rd.gz from DSM 6.2.2 on your USB drive for booting; the new extra.lzma will not work with older files.

0.8_syno ds918+ - extra.lzma/extra2.lzma for loader 1.04b_mod ds918+ DSM 6.2.2 v0.8_syno

https://gofile.io/d/mVBHGi

SHA256: 21B0CCC8BE24A71311D3CC6D7241D8D8887BE367C800AC97CE2CCB84B48D869A

Mirrors by @rcached

- https://clicknupload.cc/zh8zm4nc762m
- https://dailyuploads.net/qc8wy6b5h5u7
- https://usersdrive.com/t0fgl0mkcrr0.html
- https://www104.zippyshare.com/v/hPycz12O/file.html

0.8_std ds918+ - extra.lzma/extra2.lzma for loader 1.04b_mod ds918+ DSM 6.2.2 v0.8_std

https://gofile.io/d/y8neID

SHA256: F611BCA5457A74AE65ABC4596F1D0E6B36A2749B16A827087D97C1CAF3FEA89A

Mirrors by @rcached

- https://clicknupload.cc/h9zrwienhr7h
- https://dailyuploads.net/elgd5rqu06vm
- https://usersdrive.com/peltplqkfxvj.html
- https://www104.zippyshare.com/v/r9I7Tm0K/file.html

0.8_recovery ds918+ - extra.lzma/extra2.lzma for loader 1.04b_mod ds918+ DSM 6.2.2 v0.8_recovery

https://gofile.io/d/4K3WPE

SHA256: 5236CC6235FB7B5BB303460FC0281730EEA64852D210DA636E472299C07DE5E5

Mirrors by @rcached

- https://clicknupload.cc/uha07uso7vng
- https://dailyuploads.net/uwh710etr3hm
- https://usersdrive.com/ykrt1z0ho7cm.html
- https://www104.zippyshare.com/v/7gufl3yh/file.html

!!! still network limit in 1.04b loader for 918+ !!!
ATM the 918+ has a limit of 2 NICs (as the original hardware). If there are more than 2 NICs present and you can't find your system on the network, then you will have to try after boot which NIC is "active" (not necessarily the onboard one), or remove the additional NICs and look into this after installation.

You can change synoinfo.conf after install to support more than 2 NICs (with 3615/17 it was 8). Keep in mind that a major update will reset it to 2 and you will have to change this manually again, the same as when you change it for more disks than there are in Jun's default setting. More info is in the old thread about 918+ DSM 6.2.(0) and here: https://xpenology.com/forum/topic/12679-progress-of-62-loader/?do=findComment&comment=92682

I might change that later so it will be set the same way as more disks are set by Jun's patch: Synology's max disk default for this hardware was 4 disks, but Jun's patch changes it on boot to 16!!! (So if you have 6+8 SATA ports, then you should not have problems when updating like you used to have with 3615/17.)

Basically what is on the old page is still valid, so no sata_*, pata_* drivers.

Here are the drivers in the test version listed as kernel modules:

The old thread as reference

!!! especially read "Other things good to know about DS918+ image and loader 1.03a2:", it's still valid for the 1.04b loader !!!

This section is about drivers for the ds3615xs and ds3617xs image/DSM version 6.2.2 (v24922)

Both use the same kernel (3.10.105) but have different kernel options, so don't swap or mix; some drivers might work on the other system, some don't at all (kernel oops).

It's a test version and it has limits in terms of storage support. Read carefully and only use it when you know how to recover/downgrade your system.

!!! Do not use this to update when you have a storage controller other than AHCI, LSI MPT SAS 6Gb/s Host Adapters SAS2004/SAS2008/SAS2108/SAS2116/SAS2208/SAS2308/SSS6200 (mpt2sas) or LSI MPT SAS 12Gb/s Host Adapters SAS3004/SAS3008/SAS3108 (mpt3sas - only in 3617). Instead, you can try a fresh "test" install with a different USB flash drive and a single empty disk on the controller in question to confirm whether it's working (most likely it will not, reason below) !!!

The reason why the 1.03b loader from USB does not work when updating from 6.2.0 to 6.2.2 is that the kernel from 6.2.2 has different options set, which makes the drivers from before that change useless (it's not a protection or anything). The DSM update process extracts the new files for the update to HDD, writes the new kernel to the USB flash drive and then reboots. The result (on USB) is a new kernel and an extra.lzma (Jun's original from loader 1.03b for DSM 6.2.0) that now contains incompatible drivers. The only drivers working reliably in that state are the drivers that come with DSM from Synology.

Besides the different kernel options there is another thing: nearly none of the newly compiled SCSI and SAS drivers worked. They only load as long as no drive is connected to the controller.
ATM I assume there were some changes in the kernel source about counting/indexing the drives for scsi/sas; as we only have the 2.5-year-old DSM 6 beta kernel source, there is hardly a way to compensate.

People with 12GBit SAS controllers from LSI/Avago are in luck: the 6.2.2 of 3617 comes with a much newer mpt3sas driver than 6.2.0 and 6.2.1 (13.00 -> 21.00), confirmed install with a SAS3008 based controller (ds3617 loader).

Drivers not in this release: ata_piix, mptspi (aka lsi scsi), mptsas (aka lsi sas). These are drivers for extremely old hardware and mainly important for vmware users. The vmw_pvscsi is also confirmed not to work, bad for vmware/esxi too. The only alternative as scsi driver is the buslogic; the "normal" choice for vmware/ESXi would be SATA/AHCI.

I removed all drivers confirmed to not work from rc.modules so they will not be loaded, but the *.ko files are still in the extra.lzma and will be copied to /usr/modules/update/, so if some people want to test they can load the driver manually after booting.

These drivers will be loaded and are not tested yet (likely to fail when a disk is connected): megaraid, megaraid_sas, sx8, aacraid, aic94xx, 3w-9xxx, 3w-sas, 3w-xxxx, mvumi, mvsas, arcmsr, isci, hpsa, hptio (for some explanation of what hardware this means look into the old thread for loader 1.02b).

virtio driver: i added virtio drivers, they will not load automatically (for now). The drivers can be tested, and when confirmed working we will see if there are any problems when they are loaded by default along with the other drivers. They should be in /usr/modules/update/ after install.

To get a working loader for 6.2.2 it needs the new kernel (zImage and rd.gz) and a (new) extra.lzma containing new drivers (*.ko files). zImage and rd.gz will be copied to usb when updating DSM, or can be manually extracted from the 6.2.2 DSM *.pat file and copied to usb manually, and that's the point where it splits up into two cases/ways:

- case 1: update from 6.2.0 to 6.2.2
- case 2: fresh install with 6.2.2 or "migration" (aka upgrade) from 6.0/6.1

Case 1: update from 6.2.0 to 6.2.2

Basically you semi-brick your system on purpose by installing 6.2.2, and when booting fails you just copy the new extra.lzma to your usb flash drive by plugging it into a windows system (which can only mount the 2nd partition, the one that contains the extra.lzma) or by mounting the 2nd partition of the usb on a linux system. Restart and it will finish the update process, and when internet is available it will (without asking) install the latest update (at the moment update4) and reboot. So check the webinterface of DSM to see what's going on, or if in doubt wait 15-20 minutes, check if the hdd led's are active and check the webinterface or synology assistant. If there is no activity for that long then power off and start the system, it should work now.

Case 2: fresh install with 6.2.2 or "migration" (aka upgrade) from 6.0/6.1

Pretty much the normal way as described in the tutorial for installing 6.x (juns loader, osfmount, Win32DiskImager), but in addition to copying the extra.lzma to the 2nd partition of the usb flash drive you need to copy the new kernel of dsm 6.2.2 too, so that kernel (booted from usb) and extra.lzma "match".

You can extract the 2 files (zImage and rd.gz) from the DSM *.pat file you download from synology:

https://archive.synology.com/download/DSM/release/6.2.2/24922/DSM_DS3615xs_24922.pat

or

https://archive.synology.com/download/DSM/release/6.2.2/24922/DSM_DS3617xs_24922.pat

These are basically archive files, so you can extract the two files in question with 7zip (or other programs). You replace the files on the 2nd partition with the new ones and that's it, install as in the tutorial.

In case of a "migration" the dsm installer will detect your former dsm installation and offer you to upgrade (migrate) the installation; usually you will lose plugins, but keep user/shares and network settings.

DS3615: extra.lzma for loader 1.03b_mod ds3615 DSM 6.2.2 v0.5_test

https://gofile.io/d/iQuInV

SHA256: BAA019C55B0D4366864DE67E29D45A2F624877726552DA2AD64E4057143DBAF0

Mirrors by @rcached

- https://clicknupload.cc/h622ubb799on
- https://dailyuploads.net/wxj8tmyat4te
- https://usersdrive.com/sdqib92nspf3.html
- https://www104.zippyshare.com/v/Cdbnh7jR/file.html

DS3617: extra.lzma for loader 1.03b_mod ds3617 DSM 6.2.2 v0.5_test

https://gofile.io/d/blXT9f

SHA256: 4A2922F5181B3DB604262236CE70BA7B1927A829B9C67F53B613F40C85DA9209

Mirrors by @rcached

- https://clicknupload.cc/0z7bf9stycr7
- https://dailyuploads.net/68fdx8vuwx7y
- https://usersdrive.com/jh1pkd33tmx0.html
- https://www104.zippyshare.com/v/twDIrPXu/file.html
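Since the post publishes a SHA256 checksum for each extra.lzma, a download from any of the mirrors can be checked before it goes onto the usb stick. The following is only a minimal sketch in Python: the function names, the dictionary keys and the local file name are illustrative assumptions, only the two checksum strings come from the post.

```python
import hashlib
from pathlib import Path

# Checksums exactly as published in the post; the keys are made up for this sketch.
PUBLISHED_SHA256 = {
    "ds3615": "BAA019C55B0D4366864DE67E29D45A2F624877726552DA2AD64E4057143DBAF0",
    "ds3617": "4A2922F5181B3DB604262236CE70BA7B1927A829B9C67F53B613F40C85DA9209",
}


def sha256_of(path, chunk_size=1 << 20):
    """Return the lowercase hex SHA256 of a file, read in chunks."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published(path, model):
    """Compare a local file against the published checksum, ignoring hex case."""
    return sha256_of(path).upper() == PUBLISHED_SHA256[model].upper()
```

Assuming the file was saved as `extra.lzma` in the current directory, `matches_published("extra.lzma", "ds3615")` should return `True` for an intact download; if it returns `False`, try another mirror rather than copying the file to the loader.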

- 923 replies

-

From Intel 8th gen to Intel 12th/13th/14th gen in DSM 6.2.3

IG-88 replied to ed_co's question in General Questions

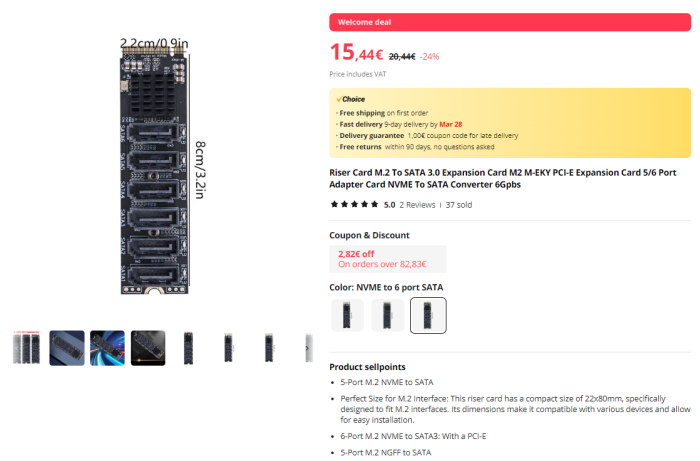

i had a (cheap) jmb585 m.2 and it never worked stable. i also had concerns about the flimsy pcb (it might crack or parts might get damaged when pressing too hard, like when inserting cables while it is already mounted in the m.2 slot; the force of 5 or 6 sata cables on that flimsy thin board can also be a problem, so that needs some adjustment too to not run into problems when working inside the system after placing the m.2 adapter). i had more success with a m.2 cable based adapter that terminated in a pcie 4x slot, but there was no universal solution there either, as one with a slightly longer cable did not work stable with one specific controller. i ended up using these m.2 contraptions only for a 10G nic (or not at all) and spread the needed sata ports over the pcie 4x and 1x slots of the m-atx board i use (pcie 1x slot with jmb582). a few bucks more for the controllers is better than a shredded btrfs volume (that is often hopelessly beyond repair in a situation like this, learned that the hard way, but i also do backups of my nas ...)

most normal housings can't hold more than 12-14 3.5" hdd's, and that can often be achieved with a m-atx or atx board and more ahci adapters for small money (like 6 x onboard, 5-6 sata by one jmb585 or asm1166 in a 4x slot and one or two 2port adapters in 1x slots; the 16x slot or one 4x slot might already be used for a 10G nic in my scenarios; if a 16x and a 4x slot are free then two 2x/4x cards can add 10-12 sata disks to the 6 sata onboard ...)

-

i use arpl with a dva1622 and 6 disks (original has 2) and arc with 3622 and 13 disks (original 12), no problems. in the graphics you see a box with the original amount of slots, but in use there is just the "normal" and old 26 disk limit; you will see all disks in the HDD/SSD listing of disks.

you might want to change from 918+ to something newer, as 918+ might lose its support and might not get updates as long as newer models (the guarantee is about 5 years, anything above that depends). depending on the features you need (like intel quick sync video) there might be some limits on the models you can choose in the loader.

there is also a model specific cpu thread limit in the kernel, but as you use a low spec cpu for your new system that won't be much of a problem. the only thing with newer intel cpu's might be that the old 4.x kernel in its original form does not support 12th gen intel qsv, and it depends on the loader how far that support is working as it needs extra drivers from the loader, so you might need to read up on that in the loader's documentation or here in the forum. (i use an older intel cpu with the dva1622 that is working with syno's original i915 driver, so i'm not that much up to date on what the best solution is now. dva1622 comes with a nice feature set ootb when the i915 supports the cpu, but there was also some interesting stuff going on with sa6400 and its 5.0 kernel with extended i915 drivers, initially here https://github.com/jim3ma, but i guess some of it might have found its way to other loaders by now.)

in general it does not matter if the original unit has an amd or intel cpu for just the basic NAS stuff; only when using the KVM based VMM from synology or specific things like intel qsv does it become important (as kernels from synology are tailored for cpu's to some degree, and the most obvious is the thread limit).

i'd suggest to use a different usb thumb drive and a single empty disk (maybe two, with one connected to the last sata port to see how far it gets) to do some tests. you can keep the original usb and the disks you use now offline (just disconnect the disks), play with the loader's model until you find your sweet spot and then use that configured loader to upgrade to the new model and dsm version (7.1 is still fine, and as it's an LTS version it will get updates at least as long as 7.2).

when creating a system from scratch with empty disks the partition layout for system and swap will be different with 7.1/7.2, but upgrading from 6.2 and keeping the older smaller partitions is supported by synology, so there is no real need to start from scratch for 7.x

https://kb.synology.com/en-global/DSM/tutorial/What_kind_of_CPU_does_my_NAS_have

-

Before installing XPEnology using DSM 7.x, you must select a DSM platform and loader. XPEnology supports a variety of platforms that enable specific hardware and software features. All platforms support a minimum of 4 CPU cores, 64GB of RAM, 10GbE network cards and 16 drives. Each can run "baremetal" as a stand-alone operating system OR as a virtual machine within a hypervisor. A few specific platforms are preferred for typical installs. Review the table and decision tree below to help you navigate the options.

NOTE: DSM 6.x is still a viable system and is the best option for certain types of hardware. See this link for more information.

DSM 7.x LOADERS ARE DIFFERENT: A loader allows DSM to install and run on non-Synology hardware. The loaders for DSM 5.x/6.x were monolithic; i.e. a single loader image was applicable to all installs. With DSM 7.x, a custom loader must be created for each DSM install. TinyCore RedPill (TCRP) is currently the most developed tool for building 7.x loaders.

TCRP installs in a two-step process. First, a Linux OS (TinyCore) boots and evaluates your hardware configuration. Then, an individualized loader (RedPill) is built and written to the loader device. After that, you can switch between starting DSM with RedPill, and booting back into TinyCore to adjust and rebuild as needed.

TCRP's Linux boot image (indicated by the version; i.e. 0.8) changes only when a new DSM platform or version is introduced. However, you can and should update TCRP itself prior to each loader build, adding fixes, driver updates and new features contributed by many different developers. Because of this ongoing community development, TCRP capabilities change rapidly. Please post new or divergent results when encountered, so that this table may be updated.
7.x Loaders and Platforms as of 06-June-2022

| Options Ranked | 1a | 1b | 2a | 2b | 2c | 3a | 3b |
|---|---|---|---|---|---|---|---|
| DSM Platform | DS918+ | DS3622xs+ | DS920+ | DS1621+ | DS3617xs | DVA3221 | DS3615xs |
| Architecture | apollolake | broadwellnk | geminilake | v1000 | broadwell | denverton | bromolow |
| DSM Versions | 7.0.1-7.1.0-42661 | 7.0.1-7.1.0-42661 | 7.0.1-7.1.0-42661 | 7.0.1-7.1.0-42661 | 7.0.1-7.1.0-42661 | 7.0.1-7.1.0-42661 | 7.0.1-7.1.0-42661 |
| Loader | TCRP 0.8 | TCRP 0.8 | TCRP 0.8 | TCRP 0.8 | TCRP 0.8 | TCRP 0.8 | TCRP 0.8 |
| Drive Slot Mapping | sataportmap/diskidxmap | sataportmap/diskidxmap | device tree | device tree | sataportmap/diskidxmap | sataportmap/diskidxmap | sataportmap/diskidxmap |
| QuickSync Transcoding | Yes | No | Yes | No | No | No | No |
| NVMe Cache Support | Yes | Yes | Yes | Yes | Yes (as of 7.0) | Yes | No |
| RAIDF1 Support | No | Yes | No | No | Yes | No | Yes |
| Oldest CPU Supported | Haswell * | any x86-64 | Haswell ** | any x86-64 | any x86-64 | Haswell * | any x86-64 |
| Max CPU Threads | 8 | 24 | 8 | 16 | 24 (as of 7.0) | 16 | 16 |
| Key Note | currently best for most users | best for very large installs | see slot mapping topic below | AMD Ryzen, see slot mapping topic | obsolete, use DS3622xs+ | AI/Deep Learning nVIDIA GPU | obsolete, use DS3622xs+ |

\* FMA3 instruction support required. All Haswell Core processors or later support it. Very few Pentiums/Celerons do (J-series CPUs are a notable exception). Piledriver is believed to be the minimum AMD CPU architecture equivalent to Intel Haswell.

\*\* Based on history, DS920+ should require Haswell. There is anecdotal evidence gradually emerging that DS920+ will run on x86-64 hardware.

NOT ALL HARDWARE IS SUITABLE: DSM 7 has a new requirement for the initial installation. If drive hotplug is supported by the motherboard or controller, all AHCI SATA ports visible to DSM must either be configured for hotplug or have an attached drive during initial install. Additionally, if the motherboard or controller chipset supports more ports than are physically implemented, DSM installation will fail unless they are mapped out of visibility.
On some hardware, it may be impossible to install (particularly on baremetal) while retaining access to the physical ports. The installation tutorial has more detail on the causes of this problem, and possible workarounds.

DRIVE SLOT MAPPING CONSIDERATIONS: On most platforms, DSM evaluates the boot-time Linux parameters SataPortMap and DiskIdxMap to map drive slots from disk controllers to a usable range for DSM. Much has been written about how to set up these parameters. TCRP's satamap command determines appropriate values based on the system state during the loader build. It is also simple to manually edit the configuration file if your hardware is unique or misidentified by the tool.

On the DS920+ and DS1621+ platforms, DSM uses a Device Tree to identify the hardware and ignores SataPortMap and DiskIdxMap. The device tree hardcodes the SATA controller PCI devices and drive slots (and also NVMe slots and USB ports) prior to DSM installation. Therefore, an explicit device tree that matches your hardware must be configured and stored within the loader image.

TCRP automatic device tree configuration is limited. For example, any disk ports left unpopulated at loader build time will not be accessible later. VMware ESXi is not currently supported. Host bus adapters (SCSI, SAS, or SATA RAID in IT mode) are not currently supported. Manually determining correct values and updating the device tree is complex. Device Tree support is being worked on and will improve, but presently you will generally be better served by choosing platforms that support SataPortMap and DiskIdxMap (see Tier 1 below).

CURRENT PLATFORM RECOMMENDATIONS AND DECISION TREE:

VIRTUALIZATION: All the supported platforms can be run as a virtual machine within a hypervisor.

Some use case examples:

- virtualize unsupported network card
- virtualize SAS/NVMe storage and present to DSM as SATA
- run other VMs in parallel on the same hardware (as an alternative to Synology VMM)
- share 10GbE network card with other non-XPEnology VMs
- testing and rollback of updates

Prerequisites: ESXi (requires a paid or free license) or open-source hypervisor (QEMU, Proxmox, XenServer). Hyper-V is NOT supported.

Preferred Configurations: passthrough SATA controller and disks, and/or configure RDM/RAW disks

This post will be updated as more documentation is available for the various TCRP implementations.
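To make the SataPortMap/DiskIdxMap relationship from the slot mapping section more concrete, here is a small Python sketch. It assumes the format as it is commonly described in the community: SataPortMap holds one decimal digit per controller (its number of ports) and DiskIdxMap holds two hex digits per controller (the index of its first drive slot). The function name and the example controller layout are hypothetical, and this is not how TCRP's satamap command works internally; it only lays the controllers out back to back.

```python
def sequential_maps(ports_per_controller):
    """Build (SataPortMap, DiskIdxMap) strings that place controllers back to back.

    Assumes one decimal digit per controller in SataPortMap and a two-digit
    hex start index per controller in DiskIdxMap.
    """
    if any(not 1 <= n <= 9 for n in ports_per_controller):
        raise ValueError("this sketch only handles 1-9 ports per controller")

    sata_port_map = "".join(str(n) for n in ports_per_controller)

    disk_idx_parts = []
    next_free_slot = 0
    for n in ports_per_controller:
        disk_idx_parts.append(f"{next_free_slot:02x}")  # first slot of this controller
        next_free_slot += n                             # next controller starts after it
    return sata_port_map, "".join(disk_idx_parts)


# Hypothetical layout: a 6-port onboard controller plus a 4-port add-in card.
print(sequential_maps([6, 4]))
```

For the hypothetical 6 + 4 layout this yields a SataPortMap of 64 and a DiskIdxMap of 0006, i.e. the second controller's drives start at slot index 6. Real hardware often needs different values (unpopulated or phantom ports, for instance), which is why the loader's own detection or manual editing of the configuration remains the reference.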

- 76 replies

-

Update: external USB stick partitions DD imaged and restored back to the stock DOM, all buttoned up and running very nicely. While I had the lid off I tried extra ram again under DSM7 - 6GB upset it as expected, just instantly maxing a CPU core with any interaction at all. However, unlike my DSM6 experience, 4GB is running fine and dandy. System is responsive and behaves as expected - no sign at all of the CPU usage bug. Interesting. Since DSM7.1.1 supports it, I also tested SMB multichannel - which works surprisingly well with some transfers hitting over 200MBps until the tiny ancient 120Gb SSD I've been using for testing runs out of cache 🤐. Watching htop shows SMB-MC pinning the CPU pretty hard though. Well worth the effort to buy this old workhorse a few more years. 😎

- 306 replies

-

- firmware

- 08-0220usb14

- (and 4 more)

-

Hi! I've been going for the latest (DS923+) model and changed the configuration to allow for 5 drives as in my build. However, this new model does not support the onboard graphics chip in my build (based on 8700K CPU, possibly to be upgraded to 9900T CPU in the future). Which would be a better choice? DS920+ or DVA1622? Does anyone know if they will allow intel 630 GPU support for Llama-GPT or image AI docker builds? Thanks!

- 4 replies

-

- intel 630 igp

- llama-gpt

-

(and 1 more)

Tagged with:

-

I would say that for a business that would also favor proper support in case of issues, it's better to go with an off-the-shelf product such as Synology, QNAP, or similar. The reason is that they're a one-stop shop for their own hardware, and you will be covered by some kind of warranty with an option to buy an extended one or to sign a service contract. The downside of getting something like Synology now (as opposed to QNAP, Asustor, Thecus and so on) is that Synology is closing its garden somewhat: it's moving to require Synology-certified hard drives, which will be the only ones to work with its NAS products. Currently that's in effect for Synology business and enterprise products (which may or may not be the level of product you need), but it may also start to arrive in home products. Xpenology may (or may not) always have the option to use any hard drive, and also to mix them, but it relies on self-built hardware most of the time.

- 9 replies

-

- nas appliance

- nas solutions

-

(and 2 more)

Tagged with:

-

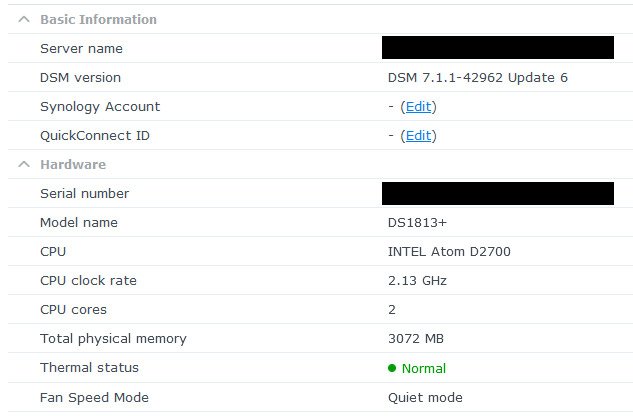

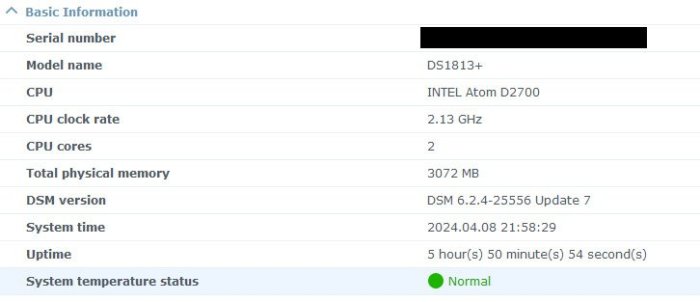

Ah HA! Can't upgrade 12-13 Hackinology from DSM 6.2.4 to 7.0.1, it'll throw that "Incompatible upgrade file" error. Can FRESH install 7.1.1 once you've reset the system (or fed it new drives or whatever). So glad I've got a stash of old small SATA SSDs kicking about.

- 306 replies

-

- firmware

- 08-0220usb14

- (and 4 more)

-

Good plan, I did that. In the end it was deceptively easy to persuade my DS1812+ it is really a DS1813+ instead. I didn't bother to downgrade, just installed a blank old SSD, did the file exchange and booted it up ... tada! DS1813+ reporting for duty!

To summarise for those who follow (keeping in mind I kept mine simple: one drive; last DS1812+ OS installed; no important data to lose. Even if this works, your DSM version is going to be out of date so I'd be VERY cautious about exposing this to the internet).

1. Starting with a working NAS unit, log in to the SSH console and run:

   dd if=/dev/synoboot of=/volume1/myShare/DS1812+synoboot-6.2.4u7.img

   I'm not sure if you need any SynoCommunity packages to get DD. They're pretty worth it for lots of useful utilities. We've started here because a backup image of your Synology is flat out a GOOD thing to have. Remember to put it somewhere safe (NOT on the NAS!)

2. Boost your ram to 2 or 3GB. Single rank memory for these old units. Samsung seems to have a decent rep. 4GB and above trigger the CPU usage bug for me, so as nice as that much free ram is, it's at significant performance cost.

3. The really hard part: find a USB stick you can boot the machine from. It has to be something with an older chipset where the manufacturing tools have been leaked. USB-A or small port DOM doesn't matter, making the stick report as F400/F400 (VID/PID) is the important bit. For me a Toshiba flash based DOM didn't work even with the MFG tools, but a 4GB Lexar Jumpdrive Firefly did. Strictly, you don't need the DOM if you have a working stock flash and a backup, but I'm cautious like that.

4. Once you have the stick, flash it with the DD image you made in step 1. I used Rufus under Windows to do mine.

5. Open the NAS, remove the stock DOM and see if it'll boot from your DIY media.

6. Assuming it booted, you now need the DS1813+ PAT file. Since my unit was updated to 6.2.4u7, I grabbed the appropriate 1813+ version "DSM_DS1813+_25556.pat". This is where you refer back to post #35 in this thread: open "DSM_DS1813+_25556.pat" with 7zip and extract 4 files (Thanks DSfuchs for the image).

Windows didn't want to play ball for me with mounting the tiny DOM partitions so I used a Linux box instead. If you're handy with the command line or "Midnight Commander" you can do this right on the NAS (if you're brave enough, on the stock DOM no less). With an abundance of caution, I made two folders in the second partition: 1812 files and 1813 files. Move the four relevant files to the 1812 folder for safekeeping and copy the 1813 files to the root of the partition.

That's it, job done. Yeah, it seems too easy but it's really all there is for this. Dismount the stick, plug it into the bootable port on the NAS, slot in a blank drive and fire it up. After a couple of nervous minutes, my unit emitted a happy beep and showed up on the network as a DS1813+ (original serial number and MAC addresses to boot). With a blank drive in it, I fed the installer the "DSM_DS1813+_25556.pat" file and let it do its thing. Network performance appears unchanged, all my usual swath of apps installed fine, no complaints anywhere I can see. It's just working 🤑

Next step I guess will be to make a new backup of the USB boot image (see step 1 above) and then see if it'll upgrade to DSM 7. After that, I guess I'll give it a few weeks to be absolutely sure and then look at cloning the USB stick back to the stock DOM and buttoning the old girl up for the next 4-5-6 years. Thanks for the advice DSfuchs!

- 306 replies

-

- firmware

- 08-0220usb14

- (and 4 more)

-

Hello,

After several attempts to get all my SATA ports to work properly and to preserve my usb ports as external drives, I decided to write here hoping to find some help!

I have a Xpenology 7.2 DS918+ running on a SuperMicro X11SCL-IF motherboard with 4 physical sata ports and a total of 8 USB ports. I recently updated it to version 7.2 (Model DS918+) by following the tutorial =>

I then added a PCI-e 16x expansion card with 24 sata ports: TISHRIC TSR818 – ASM1812+1064 chip

I also added an NVME M2 card with 6 sata ports: Riser - M.2 to SATA 3.0

This gives me a total of 34 SATA ports (yes, I know it's a lot, but I wanted to be able to use all my old disks and not be limited in the future).

As the DS918+ is limited to 16 disks by default, I made the modifications to support my 34 SATA ports by rebooting into the tinycore build. To do this, I modified the user_config.json to obtain the following properties:

"synoinfo": { "internalportcfg": "0x3FFFFFFFF", "maxdisks": "34", … "esataportcfg": "0x0", "usbportcfg": "0x3FC00000000" },

I then ran the command ./rploader.sh satamap

I was surprised by what it displayed because it wasn't very consistent.

For my motherboard with 4 physical ports:

Found "00:17.0 Intel Corporation Device a352 (rev 10)" Detected 6 ports/0 drives. Override # of ports or ENTER to accept <6>

6 ports found instead of 4 physical ports. No bad ports. So I declared 4 instead of 6.

For my PCI-e x16 card with 24 physical ports:

Found "03:00.0 ASMedia Technology Inc. Device 1064 (rev 02)" Detected 24 ports/0 drives. Bad ports: -139 -138 -137 -136 -133 -132 -131 -130 -129 -128 -127 -126 -125 -124 -123 -122 -121 -120. Override # of ports or ENTER to accept <24>

Found "04:00.0 ASMedia Technology Inc. Device 1064 (rev 02)" Detected 24 ports/1 drives. Bad ports: -115 -114 -113 -112 -109 -108 -107 -106 -105 -104 -103 -102 -101 -100 -99 -98 -97 -96. Override # of ports or ENTER to accept <24>

Found "05:00.0 ASMedia Technology Inc. Device 1064 (rev 02)" Detected 24 ports/0 drives. Bad ports: -91 -90 -89 -88 -85 -84 -83 -82 -81 -80 -79 -78 -77 -76 -75 -74 -73 -72. Override # of ports or ENTER to accept <24>

Found "06:00.0 ASMedia Technology Inc. Device 1064 (rev 02)" Detected 24 ports/0 drives. Bad ports: -67 -66 -65 -64 -61 -60 -59 -58 -57 -56 -55 -54 -53 -52 -51 -50 -49 -48. Override # of ports or ENTER to accept <24>

Found "07:00.0 ASMedia Technology Inc. Device 1064 (rev 02)" Detected 24 ports/0 drives. Bad ports: -43 -42 -41 -40 -37 -36 -35 -34 -33 -32 -31 -30 -29 -28 -27 -26 -25 -24. Override # of ports or ENTER to accept <24>

Found "08:00.0 ASMedia Technology Inc. Device 1064 (rev 02)" Detected 24 ports/0 drives. Bad ports: -19 -18 -17 -16 -13 -12 -11 -10 -9 -8 -7 -6 -5 -4 -3 -2 -1 0. Override # of ports or ENTER to accept <24>

The card was detected 6 times, with 24 ports each time and 18 bad ports each time, although I only have one card with 24 physical ports. I divided 24 by 6 and declared 4 for each.

For my M2 NVME board with 6 physical ports:

Found "0e:00.0 ASMedia Technology Inc. Device 1166 (rev 02)" Detected 32 ports/0 drives. Bad ports: 7 8. Override # of ports or ENTER to accept <32>

32 ports detected with 2 bad ports, although I only have 6 physical ports. I declared 6 instead of 32.

At the end, I get a warning telling me that there are bad ports, but I apply my config anyway and I get this in my user_config.json:

"extra_cmdline": { … "SataPortMap": "44444446", "DiskIdxMap": "0004080c1014181c" },

I boot my system and all my sata slots seem to work (apart from the order of the disk numbers, which is not consistent). I've read that I can redefine the order with sata_remap in extra_cmdline, is this true?

On the other hand, when I plug in an external usb disk, it's detected as an internal device with disk number 17 (whatever USB port I use, I have 8 in total). And that's the subject of my message. How can I preserve my usb ports so that the disk is considered external and not internal? It must surely be linked to the bad ports, but how do I manage them correctly? If anyone has the right procedure I'm a taker 🙂

Here's the output of my dmesg command: dmesg.txt

Thank you in advance for your contribution
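As a sanity check on the values posted above, the following Python sketch decodes the SataPortMap/DiskIdxMap pair into per-controller slot ranges and rebuilds the two port bitmasks. It assumes the commonly described formats (one digit per controller in SataPortMap, two hex digits per controller in DiskIdxMap, one bit per slot in internalportcfg/usbportcfg); the helper names are hypothetical, and the sketch only shows that the posted numbers agree with each other, not why DSM treats the USB disk as internal.

```python
def decode_slot_ranges(sata_port_map, disk_idx_map):
    """Return (first_slot, last_slot) per controller, 1-based like DSM's disk numbers."""
    ranges = []
    for i, ports in enumerate(sata_port_map):
        start = int(disk_idx_map[2 * i:2 * i + 2], 16)  # two hex digits per controller
        ranges.append((start + 1, start + int(ports)))
    return ranges


def port_mask(count, first_bit=0):
    """Bitmask with `count` consecutive bits set, starting at `first_bit`."""
    return ((1 << count) - 1) << first_bit


# Values taken from the post.
ranges = decode_slot_ranges("44444446", "0004080c1014181c")
internal = port_mask(34)             # 34 internal SATA slots
usb = port_mask(8, first_bit=34)     # 8 USB ports placed right after them

print(ranges)
print(hex(internal), hex(usb), "overlap:", bool(internal & usb))
```

Under these assumptions the eight controllers cover slots 1 to 34 without gaps, and the computed masks equal the posted 0x3FFFFFFFF and 0x3FC00000000 with no overlapping bits, so the configuration values themselves are consistent with the 34-slot layout described.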

-

Hi guys, sorry for the late reply. I changed my card and loader and eventually managed to get it to work. Now I have a 10 gig connection between my pc and nas. Speeds are still around 2 gig but I guess now my bottleneck is my drives. I do have another problem. My case has a micro SD card reader attached to the motherboard via a 5v pin and my nas doesn't see it. Are there any drivers that can be installed?

-

Got DSM 7.2 up and running on ESXi 6.7. Then did a transfer from DSM 6.2.3 to the DSM 7.2 machine, which is configured as a DS3622xs+. All data remained perfectly on 7.2 after enabling the controller as a passthrough, as I did on 6.2.3. The odd thing is that it's showing the drives on ports 10-16 when I only have this configured as a 12 bay. Is there any way to fix it so that it shows drives 1-6, or to make this into a 24 bay, or do I just leave it as is?