All Activity

- Past hour

-

10GBit Bandwidth ~30% slower after upgrade to 7.2.1-69057-5

IG-88 replied to crurer's question in General Questions

As my information on 7.1 long-term support was outdated, I looked it up. It looks like there is a catch (at least now): only older units (presumably kernel 3.10, mostly 2015 units and older), namely the DS3615xs, will get one more year, up to 6/2025. The other commonly used types like the 3617 or 3622 will lose update support in 6/2024 and will have to be updated to 7.2 (if security updates are important). https://kb.synology.com/en-global/WP/Synology_Security_White_Paper/3 Looks like I will have to upgrade my DVA1622 to 7.2 in the next 3 months.

There was also a "Synology E10G18-T1" with that performance problem, so it might extend to more NICs, or it is something different. But if exchanging the driver on the already running system clears the speed problem, then it would clearly be that issue.

I forgot to mention it, but my test system was using the arc loader. As most loaders have their own driver set, it's worth checking the driver version and comparing it to the original driver from Synology (if there is one). In some cases using Synology's drivers might not work, as they might not cover other OEM versions of the NICs; I have seen that with ixgbe drivers on 6.2 (but also with Tehuti, other Intel drivers, and the Realtek 2.5G NIC driver). So "downgrading" the driver to Synology's original might result in a non-working NIC: it might find the device, but if the PHY chip is not supported then it will not show any connection, or the driver might not load at all. For experiments like that it's handy to keep a 2nd NIC installed that can be used if the 10G NIC fails to work after changing the driver.

- Today

-

Yes, that works. You can also mod it directly to an 18/19-series model at the same time. I opened this topic for that:

-

Warning! Following the latest Proxmox update, the new kernel blocks booting (N100 motherboard). For my part, I had to pin 6.5.13, because 6.8.4 hangs during loading right at boot! To be continued...

-

Nope..... And I'm not even studying ....)))

-

Is the problem solved? If not, please post which CPU is installed and which loader is being used.

-

Hello, a CPU with a TDP of 65 W is unsuitable for a NAS because of its power consumption and waste heat. Besides, there are no problems running a newer DSM on Intel CPUs. Anything with a TDP <10 W makes sense. That starts, for example, with the Intel N3150 https://ark.intel.com/content/www/de/de/ark/products/87258/intel-celeron-processor-n3150-2m-cache-up-to-2-08-ghz.html Among the newer CPU families, only "Apollo Lake" should be left out because of its limited lifespan.

-

Synology Plus series internal Flash/DOM repair and model-modding

DSfuchs replied to DSfuchs's topic in Hardware Modding

I would like to once again urgently point out the need to back up the DOM of every Synology + model. It's done in a few seconds. Simply run via SSH as root:
dd if=/dev/synoboot of=/volume1/myShare/DS412+synoboot-6.2.4u5.img
where "myShare" is your general-purpose share. Of course, it only makes sense if the file is then downloaded from the Synology to another location.

-
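Since the backup is only useful once it lives somewhere other than the NAS, it can help to confirm that the downloaded copy is byte-identical to the image written by `dd`. The following is a minimal Python sketch, not part of the original post; the function names and any file paths you pass in are hypothetical.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # iter() with a sentinel keeps reading until read() returns b"" (EOF).
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copies_match(original_path, copy_path):
    """True if both files have the same SHA-256 digest."""
    return sha256_of(original_path) == sha256_of(copy_path)
```

Run it once against the image on the share and once against the downloaded file (paths are up to you); if `copies_match` returns `False`, the download should be repeated before relying on the backup.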

login1440 joined the community

-

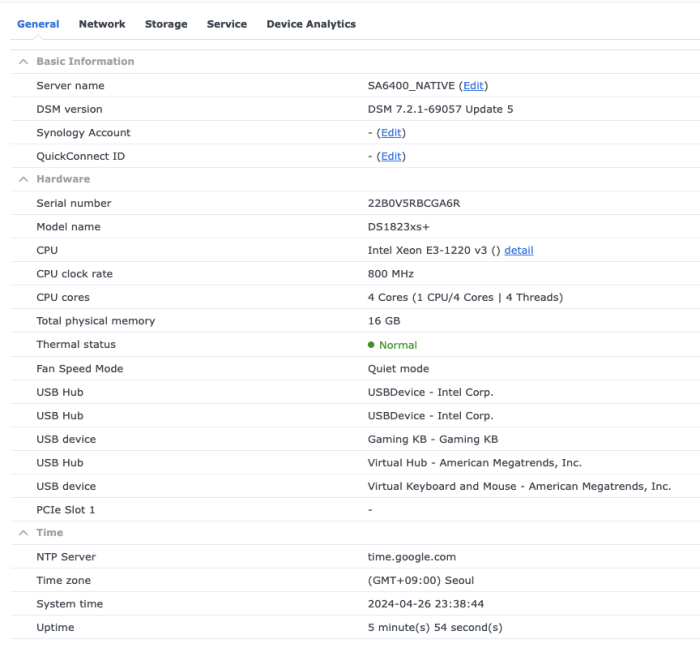

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 7.2.1 69057 Update 4
- Loader version and model: latest loader mshell by Peter Suh - DS3622xs+
- Using custom extra.lzma: NO
- Installation type: baremetal on Fujitsu Celsius W530

-

TinyCore RedPill Loader Build Support Tool ( M-Shell )

midiman007 replied to Peter Suh's topic in Software Modding

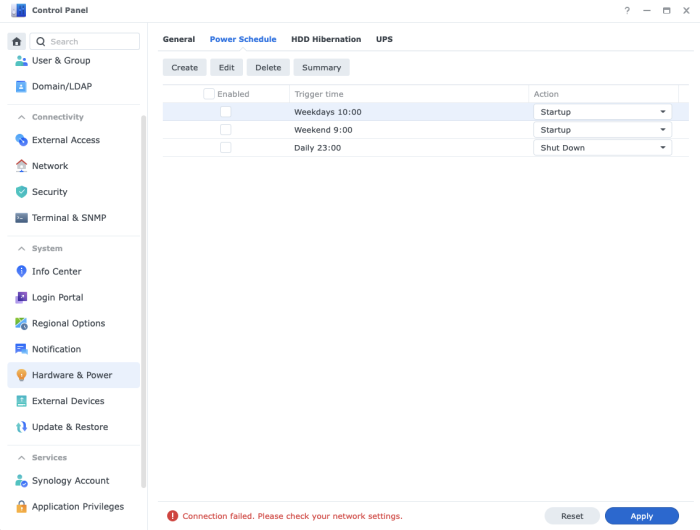

Peter, thanks for your reply. Again, thank you for your hard work on the loader. After a complete rebuild the PC will shut down and not power on. Really strange. Yes, I did notice that even though the error appeared, the boxes were checked, and the PC shut down at night but would not power on in the morning. I will try removing the weekend task and just use the weekday one to see if that is the issue. I don't know about a true Synology NAS, since all of my OEM NASes are QNAPs.

-

SurveillanceStation-x86_64-8.2.2-5766

TRT_miha replied to montagnic's topic in Програмное обеспечение

Wizard)))

-

Here you go, enjoy ....))) The site is sometimes sluggish and does not always let you download right away, so keep trying. SurveillanceStationClient

-

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

The above error message appears not only here but also in other places. However, it does not affect the actual operation of the scheduler; the last changed settings are kept. Please try out startup and shutdown in action. That said, I'm not sure if it's a Synology bug or a REDPILL bug, but there is a problem with the schedule because weekdays and weekends are handled separately. Maybe your weekend schedule doesn't work the way you want it to. I experienced this a long time ago and was not using the weekend setting. Didn't this problem occur with the genuine product?

-

10GBit Bandwidth ~30% slower after upgrade to 7.2.1-69057-5

Peter Suh replied to crurer's question in General Questions

The Intel 10G ixgbe modules of the DS3617xs (broadwell) and DS3622xs+ (broadwellnk) behave somewhat specially. Both models must use the vanilla ixgbe that was originally built into the original model. I don't know if the TCRP from pocopico that you used has adjustments for this vanilla module. rr and my mshell have this vanilla ixgbe adjustment. An ixgbe compiled separately from redpill should only be used on platforms other than these two (broadwell / broadwellnk).

These two files are vanilla module files taken directly from the original module in Synology. The module pack is managed by overwriting the ixgbe compiled by redpill with this file.
https://github.com/PeterSuh-Q3/arpl-modules/blob/main/vanilla/broadwell-4.4.302/ixgbe.ko
https://github.com/PeterSuh-Q3/arpl-modules/blob/main/vanilla/broadwellnk-4.4.302/ixgbe.ko

I hope you try it with rr or mshell.

-

10GBit Bandwidth ~30% slower after upgrade to 7.2.1-69057-5

IG-88 replied to crurer's question in General Questions

6 MBit, like 1000 times less? That's like not working at all.

I have my main system on 7.1.1 and a backup system on 7.2.1; the latter does 250 MByte/s with a bunch of older disks and Hyper Backup rsync (not tested with iperf), so going from 7.1.1 to 7.2.1 is no problem. The main 7.1.1 system, written to from a Win11 system, does 1 GByte/s as long as it fills up RAM and then drops to 650 MB/s; can't ask for more (good NVMe SSD on Win11 and a 20 GB single file). I just tested it for 7.2.1 from Win11 and it's the same as with 7.1.1: 1 GByte/s as long as it's RAM and then ~600 MByte/s (no iperf needed here if it looks that good already). Win11 and 7.1 use an SFP+ Mellanox card and 7.2 an RJ-45 Tehuti TN9210 based one (might be similar to your Syno card).

There are some basic differences even between systems having the same DSM version: the 3615 is kernel 3.10, but the 3617 and 3622 are both 4.4. So it looks like it's not related to that, as the 3615 and 3617/22 both seem to perform badly. But anyway, try arc loader arc-c (sa6400) with 7.2.1; that's kernel 5.x and might be different.

Is any switch involved? There might be differences in how speed is negotiated when connecting directly or through a switch. What speed does the system show (ethtool eth0 | grep Speed)?

When it's only 6 Mbit/s it's interesting, but if you want to save time, consider just staying on 7.1, as it's an LTS version and afair will get the same support as 7.2. If there are no features you need from 7.2, you don't miss anything by just using 7.1.

-
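To put the numbers above side by side, the negotiated link speed reported by `ethtool eth0 | grep Speed` can be converted into a rough upper bound in MByte/s. The following is a minimal Python sketch under stated assumptions: the function names are hypothetical, the sample text is illustrative rather than captured from the systems discussed, and protocol overhead is ignored.

```python
import re


def parse_link_speed_mbit(ethtool_output):
    """Extract the negotiated speed in Mbit/s from `ethtool <iface>` text.

    Returns None when no numeric "Speed: <n>Mb/s" line is present
    (for example when the link is down and the speed is unknown).
    """
    match = re.search(r"^\s*Speed:\s*(\d+)Mb/s", ethtool_output, re.MULTILINE)
    return int(match.group(1)) if match else None


def raw_mbyte_per_s(link_mbit):
    """Upper bound in MByte/s for a link speed in Mbit/s (8 bits per byte)."""
    return link_mbit / 8


# Illustrative sample, not real output from the systems in this thread.
sample = "Settings for eth0:\n\tSpeed: 10000Mb/s\n\tDuplex: Full\n"
speed = parse_link_speed_mbit(sample)
print(speed, "Mbit/s ->", raw_mbyte_per_s(speed), "MByte/s at most")
```

A 10 Gbit/s link tops out at 1250 MByte/s before overhead, so the ~1 GByte/s seen while the transfer fits in RAM is close to line rate, whereas 6 Mbit/s corresponds to less than 1 MByte/s. If the parsed speed is lower than expected (for example 1000 instead of 10000), the negotiation between NIC and switch is the first thing to check.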

SurveillanceStation-x86_64-8.2.2-5766

TRT_miha replied to montagnic's topic in Програмное обеспечение

Only the 2.x.x versions are left on the official site. The rest have been deleted. If anyone has them on hand, please share.

-

BootStrap changed their profile photo

-

Post installation, some 3rd party packages failed to launch and package centre hangs up on install / uninstall.

-

- Outcome of the update: SUCCESSFUL
- DSM version prior update: DSM 7.2.1 69057-Update 4
- DSM version AFTER update: DSM 7.2.1 69057-Update 5
- Loader version and model prior to update: ARC v24.2.21 / DS3622xs+
- Loader version and model after update: ARC v24.2.21 / DS3622xs+
- Using custom extra.lzma: No
- Installation type: BAREMETAL - HPE ProLiant MicroServer Turion II Neo, L2 N40L Dual-Core - 16GB RAM
- Additional comments: Downloaded manually and updated via DSM Control Panel. No issues.

-

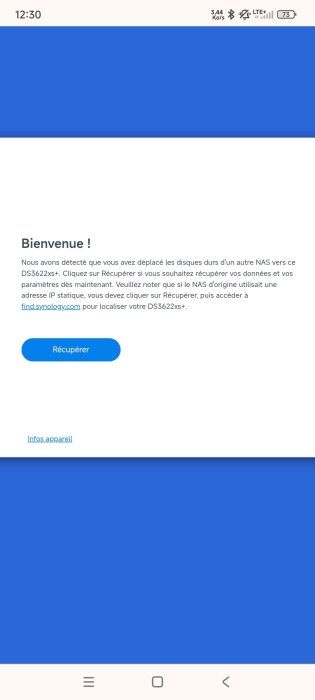

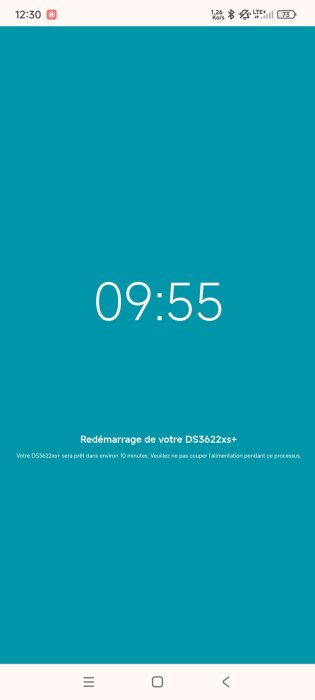

Hello, I have an N40L running under ARC in directboot (mandatory on this HP ProLiant MicroServer). I was on DSM 7.2.1-69057 Update 3. Everything was working fine. On 24/04 I received an email informing me that a new DSM version was available (DSM 7.2.1-69057 Update 5). So I went to Control Panel, Update & Restore to download Update 5. Once it was downloaded, I installed it. DSM rebooted and since then it has been looping:
1. "Recovery" is offered (image 1). Click on "Recover"
2. DSM installation in progress (image 2)
3. Installation finished (image 3)
4. Reboot (image 4)
Then back to step 1.
I already had this problem when I went from DSM 7.2.1-69057 Update 1 to DSM 7.2.1-69057 Update 3. I had to reset my DSM. I did not lose my data, but the entire configuration was erased. Do you know how I should proceed to restart my DSM properly without losing anything? Thanks

-

vladaffera joined the community

-

I can also confirm full USB functionality and advanced speed via TCRP. My systems are not only used for testing purposes. As of a year ago, ARPL didn't work. It's also super easy and quick to migrate from internal RAID0 using a single Linux mdadm-command. If you have an external single drive, you should ideally place the parity disk of a RAID4 there. If you have a dual(or more)-case, it makes sense to place the mirror drives of the RAID10 (RAID0+1 with 2 near-copies) there. In general, the slow hard drive performance when writing in RAID 5/6 scenarios, with only 3-5 drives, is out of the question for me anyway. I can also imagine the long recovery times with the associated collapse in performance. We're not talking about a data center-sized installation where the penalty is almost negligible. Always remember that the higher the number of hard drives, the more likely (I love RAID4 there) it is that a hard drive will fail.
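The closing remark, that more drives make a failure somewhere in the array more likely, can be illustrated with a small calculation. This is a minimal Python sketch, assuming drives fail independently with the same probability over some period; the 2% figure used in the loop is a made-up illustration, not a measured failure rate.

```python
def p_at_least_one_failure(n_drives, p_single):
    """Probability that at least one of n independent drives fails.

    p_single is the assumed failure probability of one drive over the
    period of interest (hypothetical input, not a vendor statistic).
    """
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    # All drives surviving has probability (1 - p)^n; take the complement.
    return 1.0 - (1.0 - p_single) ** n_drives


# Illustrative only: 2% per drive is an assumption for the example.
for n in (1, 4, 8, 24):
    print(n, "drives:", round(p_at_least_one_failure(n, 0.02), 4))
```

The probability grows with every added drive, which is why the redundancy level (and rebuild time) matters more as the drive count goes up.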

-

No, there is no such thing that tricks DSM (xpenology or original) into accepting a "normal" SATA (or SAS) enclosure as one of their original expansion units. Afaik there is some tinkering in specific drivers' kernel code, and firmware checks for the external unit are involved (this was introduced ~2012/2013; before that, any eSATA unit that presented every disk as a single disk worked).

Also worth mentioning: technically it's a simple SATA multiplier, and you use all the disks in that external unit through that one 6 Gbit/s SATA connection, with all drives in the external unit sharing this bandwidth. As long as you just have to handle a 1 Gbit NIC you won't see much difference, but if you want to max out what, let's say, 4 + 4 10 TB drives can do and use a 10G NIC, you will see some differences, and RAID rebuild speed might also suffer in eSATA connection scenarios.

A general problem with that kind of scenario is reliability: when power to the external unit is accidentally cut, there will be massive RAID problems afterwards, usually resulting in loss of the RAID volume, and when manually forcing repairs it comes down to how much data is lost and how to know which data (files) is involved. I don't know if Synology has any code in place to "soften" that for their own external units (like caching to RAM or the system partition when sensing that "loss" by a heartbeat from the external unit, and bringing the RAID into a read-only mode to keep the mdadm RAID in working condition).

As you can use up to 24 internal drives with xpenology and only your hardware is the limit (like having room internally for disks and enough SATA ports), there is only limited need for even connecting drives externally, and some people doing this have seen RAID problems. If you don't have a backup of your main NAS then don't do that kind of stuff; it's way better to sink some money into hardware than to learn all about LVM and mdadm data recovery to make things work again (an external recovery company is most often out of the question because of pricing). Maybe a scenario with a RAID1 with one internal and one external disk might be somewhat carefree, but anything that goes beyond the used RAID's specs for losing disks is very dangerous and not suggested.

And to bridge to the answer from above: in theory USB and eSATA external drives are handled the same, so it should be possible to configure eSATA ports in the same way as USB ports to work as internal ports. As eSATA is old technology and mostly in the way when it comes to xpenology config files, it's most often set to 0 and not in use. I used one eSATA port as an internal port years back with DSM 6.x. But if an OOTB solution is the goal, then external USB drives as internal drives is the common thing now, and with 5 or 10 Gbit/s USB is just as capable as eSATA for a single disk (and you will have a good number of USB ports on most systems, whereas eSATA, if there is any, is usually just one port).

If you want to use USB drives as "internal" drives then you can look here (arc loader wiki): https://github.com/AuxXxilium/AuxXxilium/wiki/Arc:-Choose-a-Model-|-Platform It's listed specifically that it's usable that way.
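The shared-bandwidth point can be made concrete with some rough arithmetic. This is a minimal Python sketch assuming the external unit hangs off a single SATA III link (6 Gbit/s line rate with 8b/10b encoding, so roughly 600 MByte/s of payload) that is split evenly among the drives behind the multiplier; the function names are hypothetical and real-world throughput will be lower.

```python
def sata_payload_mbyte_per_s(line_rate_mbit=6000):
    """Approximate payload rate of one SATA link in MByte/s.

    SATA uses 8b/10b encoding: 10 line bits carry 8 data bits,
    and 8 data bits make one byte.
    """
    data_mbit = line_rate_mbit * 8 // 10
    return data_mbit // 8


def per_drive_share(n_drives, link_mbyte_per_s):
    """Even split of the shared link when all drives are busy at once."""
    return link_mbyte_per_s / n_drives


def nic_mbyte_per_s(nic_gbit):
    """Raw NIC ceiling in MByte/s, ignoring protocol overhead."""
    return nic_gbit * 1000 / 8


link = sata_payload_mbyte_per_s()
print("shared eSATA link:", link, "MByte/s")
print("per drive with 4 drives:", per_drive_share(4, link), "MByte/s")
print("1G NIC:", nic_mbyte_per_s(1), "MByte/s; 10G NIC:", nic_mbyte_per_s(10), "MByte/s")
```

Under these assumptions the whole enclosure is capped at about 600 MByte/s: far above what a 1 Gbit NIC can move (125 MByte/s), but well below a 10G NIC (1250 MByte/s), which matches the observation that the difference only shows up with fast networking or during RAID rebuilds.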

-

Hello, I currently have an N40L with 8 GB, configured with a RAID6 storage pool made up of 4 disks of 6 TB (i.e. 1 ext4 volume with 10.8 TB available). I am considering moving to a Dell PowerEdge T110 II (Xeon E3-1220 V2 3.11 GHz / 24 GB of RAM). The idea is to make my NAS more responsive. My N40L currently emulates a DS3622xs+ (DSM 7.2.1-69057 Update 5). I have several questions.
1. Will the hardware migration, while staying with an ARC boot, cause me to lose my data?
2. Will I gain in performance by switching to the T110?
3. Should I stay on a DS3622xs+, or is another model better suited?
This might be the occasion to move to virtualization along with the switch to this T110 II. With the end of the free version of the bare-metal hypervisor ESXi, is it better to go with Proxmox on this hardware? If I set up a hypervisor, then for the VM dedicated to DSM, the same questions as before:
1. Will I lose my data?
2. Will I gain in performance?
3. Should I stay on a DS3622xs+, or is another model better suited?
Thanks.
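The 10.8 TB figure quoted for a 4 x 6 TB RAID6 pool can be sanity-checked with a short calculation. This is a minimal Python sketch, assuming that the volume size is displayed in binary units (TiB) while drive sizes are decimal TB, and ignoring filesystem and system-partition overhead; the function names are hypothetical.

```python
def raid6_usable_tb(n_drives, drive_tb):
    """Usable RAID6 capacity in decimal TB: two drives' worth goes to parity."""
    if n_drives < 4:
        raise ValueError("RAID6 needs at least 4 drives")
    return (n_drives - 2) * drive_tb


def tb_to_tib(tb):
    """Convert decimal terabytes (10^12 bytes) to tebibytes (2^40 bytes)."""
    return tb * 10**12 / 2**40


usable_tb = raid6_usable_tb(4, 6)
print(usable_tb, "TB raw usable, about", round(tb_to_tib(usable_tb), 2), "TiB")
```

Four 6 TB drives leave 12 TB after parity, which is roughly 10.9 TiB; the small remaining gap to the reported 10.8 is plausibly overhead, though that part is an assumption rather than something stated in the post.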

-

Everything updated fine on ArcLoader 23.11.20!

-

deemaas joined the community

-

podpal joined the community

-

manhdv joined the community

-

- Outcome of the update: SUCCESSFUL
- DSM version prior to update: DSM 7.2.1-69057-U4
- DSM version after update: DSM 7.2.1 69057-U5
- Loader version and model prior to update: RR 24.4.6 (DS923+)
- Loader version and model after update: RR 24.4.6 (DS923+)
- Using custom extra.lzma: NO
- Installation type: BAREMETAL - Asrock Fatal1ty Z370 Gaming-ITX/ac, i7-8700K, 1x3TB+1x2TB HDDs, 1x500GB NVME/Intel Optane
- Additional comments: Used RR Manager to update loader and add-ons shortly before updating DSM version from control panel

-

Surveillance Station 8.2.7-6222 + tp-link c200 camera

TRT_miha replied to TRT_miha's topic in Програмное обеспечение

Problem solved. The provider forwarded the ports on their own equipment and opened up the camera. It works without a public ("white") IP. It can also be controlled without problems through the SSS interface. The topic can be closed. Best wishes to everyone

-

- Outcome of the update: SUCCESSFUL
- DSM version prior to update: DSM 7.2.1-69057-U4
- DSM version after update: DSM 7.2.1 69057-U5
- Loader version and model prior to update: ARC 23.10.2 (DVA1622)
- Loader version and model after update: ARC 24.4.19 (DS918+)
- Using custom extra.lzma: NO
- Installation type: BAREMETAL - Gigabyte B365-DS3H, i5-8400, 10x12TB, 2x4TB
- Additional comments: Rebuilt USB with latest ARC loader.