
Everything posted by IG-88

-

At least on updates, bonds are often a source of problems. Also check your network hardware, cables and config. If you have access by serial console you could shut down and cut power to exclude temporary hardware-related problems. If that does not work, it's possible to reset DSM's network config files to default. It might also be possible to get network access with an added USB NIC.

-

External HDDs detected as internal HDD after synoinfo.conf edit.

IG-88 replied to Triplex's question in General Questions

When sticking to the 12-disk limit (918+ is no option with an HP MicroServer Gen8 mainboard):

6 onboard + 8 LSI - 2 (two onboard unusable) - 2 (over 12) = 10 usable disk "slots" (4 onboard SATA and 6 out of 8 on the LSI)

1 1 1 1 0 0 1 1 1 1 1 1 0 0 - that's your 10 disks seen from the 6+8 layout of the controllers

If you already have 8 then you can add two disks, not four. Just scrap the cache drives: with a 1GBit NIC the performance of the RAID array will be enough (110MB/s), and in most to all cases there is no speed gain from SSD cache (at least on a home-use system; in a small business environment there might be a difference). A local SSD as volume can be interesting for local tasks on the NAS like Docker/VMs and Photo Station/Moments.

It might also be an option to switch off onboard SATA completely and get a 16-port LSI SAS controller, but at this point it might be better to think about a new CPU/board (does not have to be new). If you just stick to the rules and keep it to 10 disks there is no need to change anything; just keep the default of 12 drives and forget about update problems with synoinfo.conf. If there are no immediate plans to extend, then look at it later, when a new CPU/board might already be needed or planned and 918+ might be the choice.

Maybe I will do the patch for more disks for 3615/17, but I've been talking about that for over a year now ... let's see what the new hard corona shutdown will bring, maybe I will get so bored that I do it this time. 16 or 20 disks would be my choice for a new patch.
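The slot arithmetic above can be sketched in a few lines of Python. This is only an illustration of the counting; the function name `usable_slots` and the list layout are made up for this example and are not part of DSM or the loader.

```python
def usable_slots(port_mask, max_disks=12):
    """Return the 1-based positions that hold a usable port within the disk limit.

    port_mask: one entry per controller port in detection order,
               1 = port can take a disk, 0 = port is unusable.
    max_disks: positions beyond this number are not shown (12 is the 3615/17 default).
    """
    return [pos for pos, usable in enumerate(port_mask, start=1)
            if usable and pos <= max_disks]


# 6 onboard ports (the last two unusable) followed by 8 LSI ports
onboard = [1, 1, 1, 1, 0, 0]
lsi = [1] * 8
layout = onboard + lsi

slots = usable_slots(layout)
print(len(slots), slots)
```

With the 6+8 layout from the post this leaves positions 1-4 and 7-12, which is the same 10 disks as in the 1/0 row above.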

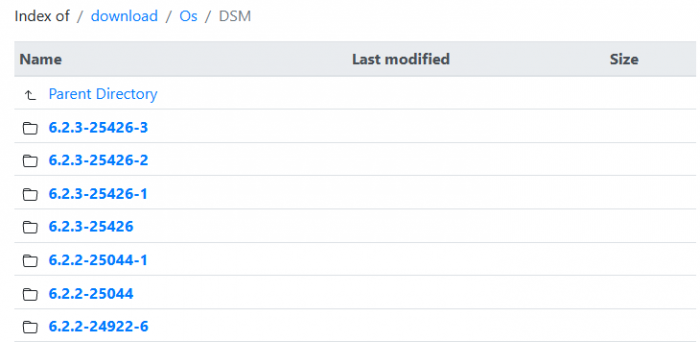

A lot of links in the forum to DSM versions and updates might not work from now on: Synology replaced the old system and also changed the structure slightly.

old: https://archive.synology.com/download/DSM/release/
new: https://archive.synology.com/download/Os/DSM

Also, the updates are now in the same area as the releases.

-

918+ has M.2 NVMe cache driver support (usually only makes sense with 2 drives for r/w cache and with a 10G NIC) and a driver for hardware-accelerated transcoding with Intel Quick Sync Video, but only your old CPU had Intel QSV, so it's not of importance anymore I guess.

Moments supports JPEG with Intel QSV, but I have no clue how much faster it would be IRL. I guess the storage speed will also have a big impact if you process half a million pictures; pretty sure it will be a nice number for Intel QSV when using SSD as disk or having it all in an NVMe r/w cache (and "preloaded", so you will have 100% r/w speed of NVMe).

Security updates and fixes, I don't think there is much performance gain. We know at least that DSM 7.0 has a different package format and 7.0 packages can't be installed on 6.x (same the other way, no old packages on 7.0). If they leave out older units with 7.0 that are within the 5-year support, they might need to provide packages for DSM 6.2.

If it really is important you can buy an original unit at any time; that will have DSM 7.0 and the latest Photo/Moments package. Also, don't count on XPEnology DSM 7.0 (aka a loader for DSM 7.0), nothing has been heard or seen.

-

There is a limit of 12 drives in 3615/17, and when your 1st drive is "sda" it will reduce the total number of disks in a RAID to 11. But my question here would be: why use that number of disks anyway, why not one big xx TB vdisk without RAID? You will have to back up anyway, and usually the underlying storage already has RAID protection. Only if using RDM whole disk or passing through an entire controller would you use more disks.

918+ comes with a 16-disk default but needs min. a 4th gen Intel CPU to start. Also, the config file synoinfo.conf can be changed manually for more disks, but that has other problems when updating, so it's not a fire-and-forget solution.

-

If you want to start a new setup without any data it's best to remove all partitions before install; "formatted" usually implies one partition that has a file system. Also, I found it better to use Synology Assistant and leave the IP setting on DHCP for the install process.

If you run into RAID problems with DSM, keep in mind it's a software RAID based on mdadm, and there are manually usable repair options that DSM does not offer and that hardware RAID does not offer either. The disks in a RAID set have an event number; if it does not match, the disks with the lower number are not used because they are too old and missed data. Often the difference is not that big and the amount of lost data would be small, so forcing a disk with a lower event number is possible to access the data again. That's for when more than the planned redundancy disks (1 for RAID5, 2 for RAID6) are lost; if it's only the redundancy that's out of sync or missing then the normal RAID repair can be used (that's what DSM would offer in the GUI as rebuild).

Lesson learned would be to have a backup. It does not need to be all data; often just 1-2 TB are the most important and can easily be copied to a cheap USB HDD. Even a full backup does not have to be a RAID system: as long as it's checked to be working, a pool of disks in JBOD mode can be enough (or a big single disk with 16TB). Even cloud backup (like Backblaze) can be an option.
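To illustrate the event number comparison, here is a minimal Python sketch. It only shows the comparison itself; the member names and counts are invented for the example, and the function `stale_members` is hypothetical. On a real system the counts would be read from the "Events" field that `mdadm --examine` prints for each member.

```python
def stale_members(events):
    """Return {member: how many events it is behind} for members that lag the newest one.

    events: mapping of RAID member name to its event count.
    """
    newest = max(events.values())
    return {member: newest - count
            for member, count in events.items()
            if count < newest}


# Invented example values, not taken from a real array
example = {"sda5": 20481, "sdb5": 20481, "sdc5": 20469, "sdd5": 20481}
print(stale_members(example))
```

A small gap like the one in the example is the case described above, where little data was missed; judging whether forcing such a member back in is acceptable still has to be done by hand.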

-

When patch.sh is changed similar to above when u2 came up, it will work. The 1st part is for what versions the patch is working (from - to version, so the "to" needs to be changed to 3). The 2nd is the version list, the new u3 needs to be added.

    ...
    #arrays
    declare -A binhash_version_list=(
        ["cde88ed8fdb2bfeda8de52ef3adede87a72326ef"]="6.0-7321-0_6.0.3-8754-8"
        ["ec0c3f5bbb857fa84f5d1153545d30d7b408520b"]="6.1-15047-0_6.1.1-15101-4"
        ["1473d6ad6ff6e5b8419c6b0bc41006b72fd777dd"]="6.1.2-15132-0_6.1.3-15152-8"
        ["26e42e43b393811c176dac651efc5d61e4569305"]="6.1.4-15217-0_6.2-23739-2"
        ["1d01ee38211f21c67a4311f90315568b3fa530e6"]="6.2.1-23824-0_6.2.3-25426-3"
    )
    ...
        "6.2.3 25423-0"
        "6.2.3 25426-0"
        "6.2.3 25426-2"
        "6.2.3 25426-3"
    )

    #functions
    print_usage() {
        printf "
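Why the "to" part matters can be shown with a small Python sketch of a from/to range check. This is not how patch.sh itself does it; it assumes the strings read as "DSM version - build - update" joined by an underscore, and the helper names `parse_version` and `in_range` are made up for the example.

```python
def parse_version(text):
    """Turn '6.2.3-25426-3' into a comparable tuple (6, 2, 3, 25426, 3)."""
    dsm, build, update = text.split("-")
    parts = [int(p) for p in dsm.split(".")]
    # Pad '6.0' to '6.0.0' so that all tuples have the same shape
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts) + (int(build), int(update))


def in_range(version, version_range):
    """Check a version against a 'from_to' string like the ones in the hash list."""
    low, high = version_range.split("_")
    return parse_version(low) <= parse_version(version) <= parse_version(high)


# u3 is only covered once the "to" side ends in -3
print(in_range("6.2.3-25426-3", "6.2.1-23824-0_6.2.3-25426-2"))
print(in_range("6.2.3-25426-3", "6.2.1-23824-0_6.2.3-25426-3"))
```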

-

So actually this only means that you can use ports 5/6 either at the M.2 OR as onboard connectors, and even if you plug in an M.2 NVMe (PCIe) the SATA ports remain usable. That's why the block diagram shows the "switch" at M.2 and 2x SATA; it makes sure that only one of the two gets the signals. It's the same with my B360/B365 chipsets, and the manual for your board says that too: at least when nothing is in the M.2 slot, SATA 5/6 has to work (and what's actually meant is that when no M.2 SATA card is installed, 5/6 should function normally). If it doesn't, that's either a BIOS problem or a defect; being able to use 6 SATA ports is a guaranteed property of the board.

Just connect one disk each to 5/6 only and look at the boot devices in the BIOS, they should show up there (completely independent of operating system or drivers). It may well be that the ports themselves work and only cause problems with DSM, but that in turn should be visible in the dmesg log: it should detect the ports and then find that they are not usable (whatever the error messages for that may be). I have seen an extremely old SATA AHCI chip cause problems before, but current onboard hardware (CPU/chipset or SoC) has always worked so far.

Check that the ports are in AHCI mode and not set to RAID; that would be the only mistake I can think of (apart from disabling the ports in the BIOS), but normally the default for the ports is AHCI.

-

VM usage was not my suggestion; I was just pointing out that one core is not the use case for DSM and has more problems than solutions. I never tried a 1-core DSM, neither in a VM nor baremetal. Because it's below the minimum of what Synology sells as hardware, they don't even test in that direction, so it's kind of unsupported.

There are also "generic" suggestions like checking what mode it boots in (UEFI or CSM, maybe disable CSM), BIOS update, reset to BIOS defaults, or maybe try the 3617 loader 1.03 with a single empty disk (CSM mode active and non-UEFI USB boot device) and see if that changes anything.

It must be specific to your system/BIOS; the SoC/chipset is widely used here. Maybe look in the update report section to find someone with the same board and ask him about his BIOS version and settings.

-

Build requirements for HW transcode and Synology Moments AI functions

IG-88 replied to secretliar's topic in The Noob Lounge

Hardware transcoding depends on the Intel i915 driver, and that one is not present in 3615/17.

Yes, but you should ignore the higher number of the 918+ loader; there are 3 loaders for DSM 6.2, and for every one of the three types it should be the latest loader.
https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/

Unknown, atm there are no signs that we will get a loader for 7.0. It depends on the person/group who creates the loader. If someone asks, I do have an opinion about it, but it can also just be driven by the need of the one person, or by keeping effort/time low, building on the knowledge already present and just extending/modding the loader we already have. In general units get 5 years of support from Synology, so the 3615, where the 15 is the release year 2015, might not be continued; also it's kind of redundant, as the 3617 has all and even more features than the 3615.

Besides this you can still "migrate" between models and take data/settings and most plugins with you, so if you start with 3617 and feel 918+ suits better you just exchange the loader and install the same or a newer build of the other DSM type (matching the loader you used). The same is to be expected when updating to 7.0: it needs a new loader, and if it's not the same type it will be the special update called "migration".

Build requirements for HW transcode and Synology Moments AI functions

IG-88 replied to secretliar's topic in The Noob Lounge

918+ has 4th gen Haswell as minimum to boot, but the original Synology units use newer GPU gens than that, so there might be things not working on older gen CPUs. I've never tried Moments, but the AI stuff will use the JPEG hardware support; your 4th gen CPU only supports JPEG decoding, but I'd guess that's enough (918+ Apollo Lake also supports encoding; if DSM relies on the type then some GPU functionality is not present when using an older CPU/GPU, but I can't remember a case where we have seen trouble about that).
https://en.wikipedia.org/wiki/Intel_Quick_Sync_Video#Hardware_decoding_and_encoding

No, you don't.
https://xpenology.com/forum/topic/24864-transcoding-without-a-valid-serial-number/
https://xpenology.com/forum/topic/30552-transcoding-and-face-recognitionpeople-and-subjects-issue-fix-in-once/

Yes, if it's at least 4th gen and has Intel Quick Sync Video. It does need activation; when using the patch from above you don't need serial and MAC of an original unit.

I can't remember any limit like that; I tested Video Station's transcoding with a 2-core CPU and it worked.

Having PCIe 3.0 and some PCIe slots for extensions like 10G NIC and SATA ports - you already have that. To support 10-bit color and 4K H.265 you would need a better system, but I guess that's no problem atm.

The MBR version is only for when it does not boot (in CSM mode or with a pre-UEFI BIOS); you see that with HP desktops almost always.

Doesn't look conspicuous, should work (onboard SATA on AHCI). Try loader 1.03b 3615, adjust the USB vid/pid in grub.cfg and enter the 4 NICs with their MACs:

    set mac1=xxxxxxxxxxxx
    set mac2=xxxxxxxxxxxx
    set mac3=xxxxxxxxxxxx
    set mac4=xxxxxxxxxxxx
    ...
    set netif_num=4

The BIOS doesn't seem to be UEFI yet, so it should boot ootb with 1.03b (with UEFI you have to enable CSM and boot from the non-UEFI USB device). I would also recommend using Synology Assistant for the installation: you enter the admin account right at the beginning, and it's best to leave the IP settings on DHCP; if you want something else you can change that later.

No, if it's found on the network and storage is AHCI, it works with the drivers that come with the loader.
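As a small helper for the grub.cfg step, the following Python sketch prints the `set macN=` lines and the matching `set netif_num=` line from a list of MAC addresses. The function name `grub_mac_lines` and the example addresses are invented; stripping the separators and using upper case is a choice made for this sketch.

```python
def grub_mac_lines(macs):
    """Build the 'set macN=' lines plus the matching 'set netif_num=' line."""
    lines = []
    for index, mac in enumerate(macs, start=1):
        cleaned = mac.replace(":", "").replace("-", "").upper()
        # A MAC address is 12 hex digits once the separators are removed
        if len(cleaned) != 12 or any(c not in "0123456789ABCDEF" for c in cleaned):
            raise ValueError(f"not a valid MAC address: {mac!r}")
        lines.append(f"set mac{index}={cleaned}")
    lines.append(f"set netif_num={len(macs)}")
    return lines


# Invented example addresses, replace them with the real MACs of the 4 NICs
example_macs = [
    "00:11:32:aa:bb:01",
    "00:11:32:aa:bb:02",
    "00:11:32:aa:bb:03",
    "00:11:32:aa:bb:04",
]
print("\n".join(grub_mac_lines(example_macs)))
```

Keeping `netif_num` derived from the list length avoids the mismatch between the number of MAC entries and the NIC count.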

-

External HDDs detected as internal HDD after synoinfo.conf edit.

IG-88 replied to Triplex's question in General Questions

That's normal and expected behavior, as "big" updates (~200-300MB, containing a hda1.tgz) will replace the whole content of the system partition, and all "undefined" changes will be ignored and overwritten. It's an appliance after all, so any file changes beyond what Synology allows can vanish at any time with any update. The loader takes care of some settings: they are checked on every boot and get patched when changed, aka are back to original.

The 12 disks of 3615/17 are the default and were enough when the patch was created, so the patch does not contain any parts monitoring the disk count. If you set it to something different manually it will be used, but it will also be reset to default on some updates and your settings are gone. The 918+ is different in that way, as it had a default of 4 disks and would not have been that useful with that, so Jun also patched the disk count to 16; even after a bigger update it's patched to 16. There is no equivalent in 3615/17, but technically it's possible to create a new patch containing parts to patch the 12 default to a higher number, only no one took the effort and time to do so (I would incorporate the patch in my extra.lzma, if done properly).

Yes, and that's why it's not suggested and I warn people not to do so. It can be handled, but it can be complicated or dangerous, contradicting the purpose of an appliance of being easier to handle. Worst case is "losing" the redundancy disks: the RAID will come up and you will need to rebuild. A little scarier on 1st boot after the update is when more disks than the redundant ones are missing; then the RAID completely fails to start, but that's better, because you just change synoinfo.conf back the way you had it before, reboot, and everything should be back to normal (besides some RAID1 system partitions that can be auto-repaired by DSM).

Yes, but the max disk count and the layout of the disks are in synoinfo.conf, and the kernel commands (if working for SATA) are more or less for remapping.

See above: if you lost redundancy then it's kind of the worst-case scenario. It's not always that way; a "crashed" array (like not enough disks to assemble it) is much less hassle (but looks scary when it happens after boot).

With just 8 disks the suggested scenario is to use the available SATA ports first and only then the needed LSI ports, so you won't miss the 2 ports at the end and don't need to change anything. The other way would be to completely disable the SATA and have the 8 ports of the LSI at positions 1-8 (being inside the 12). There might be a kernel option to swap controller positions and get the LSI used 1st, but that's still not foolproof and might change when Synology changes the kernel (they are in control and we don't even have a recent source). Using the SATA ports 1st before the LSI ports is safer and the performance is no problem; the onboard chipset SATA usually works well enough.

I've read the complete opposite when using a VM with just one core; two is the suggested minimum. The last one-core Synology DSM unit is from 2016 and had a Marvell Armada (no x86).

Yes, one "unusual" thing too might be the 16GB RAM; the Intel spec for this SoC is max. 8GB.
https://ark.intel.com/content/www/us/en/ark/products/95594/intel-celeron-processor-j3455-2m-cache-up-to-2-3-ghz.html
So maybe reduce to 8GB and try again with 4 cores (I've seen that Gigabyte does have 16GB max in its specs for the GA-J3455N-D3H, but trying 8GB does not hurt).

-

I have not tried 7.0 yet, but just a warning based on the "DSM_Developer_Guide_7_0_Beta.pdf":

"... If your package is for DSM6 then you should have a DSM6 NAS. If your package is for DSM7 then you should have a DSM7 NAS. Package for DSM6 is not compatible with DSM7 ..."

Besides that, 7.0 is not working with the 1.03b/1.04b loaders, and people doing that update on XPEnology will end up with a non-bootable system, needing to downgrade manually or reinstall 6.x from scratch. If anyone wants to peek, then try it in VMM with virtual DSM.

That's not even close to fast. If using the write log area for mdadm it is possible to just redo missing writes (usually not that many) and you are done; resyncing a RAID that way takes seconds.

-

usb 3.1 gen 2 or Thunderbolt Z390 work?

IG-88 replied to Captainfingerbang's question in General Questions

The thing I know is that TB was introduced with kernel 4.13 and Synology uses 3.10.105 and 4.4.59, so it's not just changing a switch in the kernel config and having a driver. So if you can backport kernel drivers and add this to the Synology kernel source ...
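The version gap can be made explicit with a tiny Python comparison. It only compares the numbers quoted in the post (4.13 as the introducing kernel, 3.10.105 and 4.4.59 as the Synology kernels); the function names are made up for the example.

```python
def kernel_tuple(version):
    """Turn '4.4.59' into (4, 4, 59) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def kernel_has_feature(kernel, introduced_in):
    """True if the given kernel is at least as new as the one that introduced the feature."""
    return kernel_tuple(kernel) >= kernel_tuple(introduced_in)


for kernel in ("3.10.105", "4.4.59"):
    print(kernel, kernel_has_feature(kernel, "4.13"))
```

Both kernels come out older than 4.13, which is why a driver would have to be backported. Note that a plain string comparison would get this wrong, since "4.4" sorts after "4.13" as text.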

About that: I looked in the synoinfo.conf of a DVA unit and it has

    support_nvidia_gpu="yes"

That's the line I overlooked:

    local supportnvgpu=`get_key_value $SYNOINFO_DEF support_nvidia_gpu`

Here the "yes" from the synoinfo.conf variable is transferred to a new temporary one.
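What that shell line does can be mimicked in Python for anyone who wants to inspect a synoinfo.conf copy offline. This is a rough sketch assuming the file consists of simple `key="value"` lines; `read_key` is a made-up helper and not Synology's `get_key_value`, and the sample text is invented.

```python
def read_key(conf_text, key):
    """Return the value of key from key="value" style text, or None if it is missing."""
    for line in conf_text.splitlines():
        line = line.strip()
        # Skip blank lines, comments and anything that is not an assignment
        if not line or line.startswith("#") or "=" not in line:
            continue
        name, _, value = line.partition("=")
        if name.strip() == key:
            return value.strip().strip('"')
    return None


# Invented sample, not the content of a real synoinfo.conf
sample = '''
# example only
maxdisks="12"
support_nvidia_gpu="yes"
'''

print(read_key(sample, "support_nvidia_gpu"))
```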

-

Maybe try an "older" Plex version:

"... Linux-specific Notes ... Starting with Plex Media Server v1.20.2, driver version 450.66 or newer is required for NVIDIA GPU usage. ..."
https://support.plex.tv/articles/115002178853-using-hardware-accelerated-streaming/

Maybe this one?
https://downloads.plex.tv/plex-media-server-new/1.20.0.3181-0800642ec/synology/PlexMediaServer-1.20.0.3181-0800642ec-x86_64.spk

-

There seemed to be things missing to have it working with Docker (I wanted to try with Jellyfin). I did not want to dive into trying to compile the whole NVIDIA package, I just wanted to use the one already made by Synology for DSM. ffmpeg is also a problem, as it might not be aware of NVIDIA's encoder (NVENC) for transcoding: that needs to be compiled into ffmpeg, and it looked like the packages available (Synology and 3rd party) were missing this feature (as, besides the exotic DVA units, NVIDIA drivers are not available for DSM). I thought Plex might be a universal build for DSM; if the Synology version is "optimized" and does not contain NVIDIA parts then it might not work that way.

-

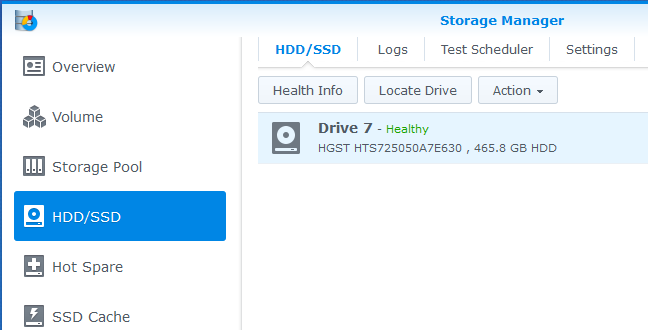

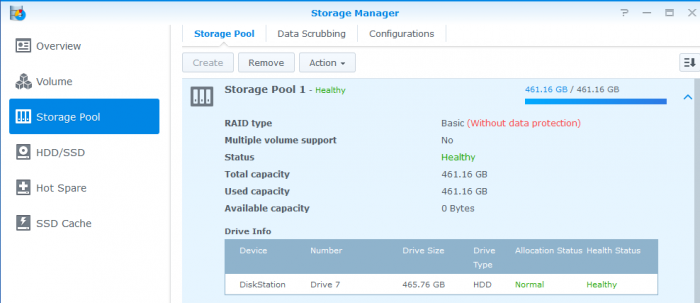

Yes, the mpt2sas got merged into mpt3sas in the newer kernels like 4.4 of 918+. You can see that in /var/log/dmesg when the driver is loaded:

    ...
    [Sun Dec 13 15:46:49 2020] Fusion MPT SAS Host driver 3.04.20
    [Sun Dec 13 15:46:49 2020] mpt3sas version 09.102.00.00 loaded
    [Sun Dec 13 15:46:49 2020] mpt3sas 0000:04:00.0: can't disable ASPM; OS doesn't have ASPM control
    [Sun Dec 13 15:46:49 2020] mpt2sas_cm0: 64 BIT PCI BUS DMA ADDRESSING SUPPORTED, total mem (8091072 kB)
    [Sun Dec 13 15:46:49 2020] mpt2sas_cm0: MSI-X vectors supported: 1, no of cores: 2, max_msix_vectors: -1
    [Sun Dec 13 15:46:49 2020] mpt2sas0-msix0: PCI-MSI-X enabled: IRQ 27
    [Sun Dec 13 15:46:49 2020] mpt2sas_cm0: iomem(0x00000000f78c0000), mapped(0xffffc90000250000), size(16384)
    [Sun Dec 13 15:46:49 2020] mpt2sas_cm0: ioport(0x000000000000c000), size(256)
    [Sun Dec 13 15:46:49 2020] mpt2sas_cm0: sending message unit reset !!
    [Sun Dec 13 15:46:49 2020] mpt2sas_cm0: message unit reset: SUCCESS
    [Sun Dec 13 15:46:49 2020] mpt2sas_cm0: Allocated physical memory: size(7445 kB)
    [Sun Dec 13 15:46:49 2020] mpt2sas_cm0: Current Controller Queue Depth(3307),Max Controller Queue Depth(3432)
    [Sun Dec 13 15:46:49 2020] mpt2sas_cm0: Scatter Gather Elements per IO(128)
    [Sun Dec 13 15:46:49 2020] mpt2sas_cm0: LSISAS2008: FWVersion(19.00.00.00), ChipRevision(0x03), BiosVersion(07.37.00.00)
    ...

Temp, SN and SMART work on my test system (6.2.3 u3) with a 9211 controller, fresh install. Is this a baremetal installation or a VM?

It's not really a fix, it's just some of the SCSI/SAS stuff replaced with Jun's drivers from his original extra.lzma. If you don't need newer drivers you can try the extra/extra2 from the 1.04b loader (extract the files with 7zip from the *.img and copy them to your USB flash drive).

Btw., I don't understand Russian. Either explain what's important in that picture (I don't understand the red error message; I can see there are no temps for the disks) or switch the GUI to English before taking screenshots. Also take some more screen space into the shot so the context can be seen, like where in DSM it was made. I'm not sure in what section of the Storage Manager it was taken; neither Volume, Storage Pool nor HDD/SSD gives me a view like in the picture.

mpt3sas_base.h from kernel 4.4.59:

    ...
    /* driver versioning info */
    #define MPT3SAS_DRIVER_NAME "mpt3sas"
    #define MPT3SAS_AUTHOR "Avago Technologies <MPT-FusionLinux.pdl@avagotech.com>"
    #define MPT3SAS_DESCRIPTION "LSI MPT Fusion SAS 3.0 Device Driver"
    #define MPT3SAS_DRIVER_VERSION "09.102.00.00"
    #define MPT3SAS_MAJOR_VERSION 9
    #define MPT3SAS_MINOR_VERSION 102
    #define MPT3SAS_BUILD_VERSION 0
    #define MPT3SAS_RELEASE_VERSION 00
    #define MPT2SAS_DRIVER_NAME "mpt2sas"
    #define MPT2SAS_DESCRIPTION "LSI MPT Fusion SAS 2.0 Device Driver"
    #define MPT2SAS_DRIVER_VERSION "20.102.00.00"
    #define MPT2SAS_MAJOR_VERSION 20
    #define MPT2SAS_MINOR_VERSION 102
    #define MPT2SAS_BUILD_VERSION 0
    #define MPT2SAS_RELEASE_VERSION 00
    ...
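When comparing systems it can help to pull just the driver and firmware versions out of the dmesg text. The Python sketch below does that for two lines copied from the log above; the regular expressions are written against exactly this wording and are an assumption for other driver versions, and `summarize_mpt` is a made-up name.

```python
import re

# Two lines copied from the dmesg excerpt in the post
SAMPLE = """\
[Sun Dec 13 15:46:49 2020] mpt3sas version 09.102.00.00 loaded
[Sun Dec 13 15:46:49 2020] mpt2sas_cm0: LSISAS2008: FWVersion(19.00.00.00), ChipRevision(0x03), BiosVersion(07.37.00.00)
"""


def summarize_mpt(log_text):
    """Extract driver version, chip name, firmware and BIOS version from dmesg text."""
    info = {}
    driver = re.search(r"mpt3sas version (\S+) loaded", log_text)
    if driver:
        info["driver"] = driver.group(1)
    chip = re.search(
        r"(\w+): FWVersion\(([^)]*)\), ChipRevision\(([^)]*)\), BiosVersion\(([^)]*)\)",
        log_text,
    )
    if chip:
        info["chip"] = chip.group(1)
        info["firmware"] = chip.group(2)
        info["bios"] = chip.group(4)
    return info


print(summarize_mpt(SAMPLE))
```

On a live system the input would be the content of /var/log/dmesg instead of the sample string.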

-

Afaik that is not mentioned anywhere in the howtos; maybe it's suggested to change NICs when having problems, or to connect all NICs. That problem can also hit people with 918+ from a different direction, as it only supports 2 NICs ootb, and when adding a 2/4-port NIC some of the ports will not be usable ootb (the patch in the loader "only" extends the disks from 4 to 16 but does not extend NICs to anything near the default of 8 from the 3615/17; it's still 2 NICs after installing, and >2 needs manual tweaking atm).

The Mellanox drivers might be the only NIC drivers not working directly with the loader (only after installing DSM): as there are recent enough drivers in DSM itself, they did not make it into the extra.lzma (yet), which besides kernel/rd.gz is also loaded at 1st boot when installing. Synology does not support them for installing a new system; that's only supported with the onboard solutions (obviously, or they would be part of rd.gz like igb.ko). That only applies to 3615/17; as 918+ does not have generic support for the Mellanox drivers in DSM, they are added by the extra.lzma and will work on 1st boot for installing DSM.

It's possible to add them to the 3615/17 extra.lzma, but it would bring extra.lzma from 5MB to 20MB, making it impossible to use extra.lzma with the MBR loader version that comes with a 20MB partition size (for kernel and extra; Jun's default size is 30MB). Only adding mlx4 (and all lower hardware versions) would not have that much impact; there will be only very few trying to install with just a ConnectX-5 in the system (I'm aware of the 20MB limit as I use old HP desktops for testing that need the MBR version of the loader).

-

Not really. Of the Synology default drivers, the 3617 gets more attention and has newer drivers in some places (like mpt3sas); the Mellanox drivers are the same on both. When it comes to default drivers in rd.gz (on the loader together with the kernel), the 3615 has igb.ko (suitable for i350) and the 3617 is missing that one and has ixgbe.ko instead. So when just booting the loader without additional drivers, the 3617 might not recognise an i350, but would with an extra.lzma present, and that's the normal case with the 1.03b loader (as it has Jun's extra.lzma). At least that's what I thought, but when going through Jun's extra.lzma there is no igb.ko present; the rc.modules does try to load that one, but if it's not present and not in the rd.gz ... It's present in DSM, so igb.ko will work after installing. The same goes for the Mellanox drivers: not in rd.gz and not in Jun's extra.lzma.

Edit: I overlooked the igb.ko in the rd.gz of the 3617; it's there, so it should work ootb with an i350 (too late for today, I will go to bed).

So the thing flyride mentions is about not being able to do a 1st installation with just a Mellanox and the default loader. But as I provide a newer igb.ko with my extra.lzma, the i350 should at least work when using my extra.lzma 11.2 for 3617.
https://xpenology.com/forum/topic/28321-driver-extension-jun-103b104b-for-dsm623-for-918-3615xs-3617xs/

An added Mellanox should not crash an already installed 3617 system. If that happens then remove the card, boot again and have a look in dmesg; there should be something about that failed boot attempt. If that does not work, it's possible to use a null-modem cable (COM1) and a serial console to monitor the boot process (DSM does switch to it early and you don't see anything on a monitor).

-

What files did you edit, in what way, and do you have backups? If you removed the kernel files then it's pretty much clear: without the kernel the loader will not start, and for that reason the whole system will not start. You need these kernel files; if you know what files were there, then put them back. If it was DSM 2.2 before, then
https://archive.synology.com/download/DSM/release/2.2/1042/synology_x86_710+_1042.zip

710+ seems to be supported up to DSM 5.2, so why not go with that?

I guess if you make a copy of the whole module with an imaging tool (like dd on Linux) you could try to copy the loader 1.03b 3615 (img file) to that module (also using dd). Your original module does have the right USB vid/pid to be recognized by the original BIOS, and if you start the XPEnology loader and have the grub.cfg set to f400/f400 it should work. The original DSM is x86 (not x64), but the CPU is an Intel Atom D410 and that one has the 64-bit instruction set.
https://ark.intel.com/content/www/us/en/ark/products/43517/intel-atom-processor-d410-512k-cache-1-66-ghz.html

Atm it looks like your system might be recoverable with the original DSM, so you might try that first, and if you succeed you can still think about whether it's worth experimenting with the unit again. And you should up the RAM to at least 2GB before trying 3615.

Btw., the x86 DSM version is limited to 16TB per volume, but that is not really a concern with just 2 disks.

-

Somehow things are getting mixed up here: is it 4+1 or 7 disks? But even with 7, a RAID5 volume should not crash when 6 are on the internal ports and only one is on the LSI; even if one disk were then missing, the volume would only be without redundancy and would have to be degraded.

/var/log/dmesg (ever used an "internet search"? Even Wikipedia knows dmesg, that's faster than waiting until I answer the question)

In it you can see which controllers/disks are found and how the drives are distributed across the "sdX" names; everything after "sdl" would be beyond 12 and would not be seen by DSM (at least not in the default configuration).

Check in the BIOS whether some onboard controller is set to RAID instead of AHCI (manual page 46, top).
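The relation between the "sdX" names and the 12-disk default can be checked with a short Python sketch. It assumes the usual Linux naming where sda is the 1st disk and sdl the 12th; the function names are made up for the example.

```python
def drive_index(name):
    """Map 'sda' -> 1, 'sdl' -> 12, 'sdm' -> 13, 'sdaa' -> 27."""
    if not name.startswith("sd") or len(name) < 3:
        raise ValueError(f"not an sdX name: {name!r}")
    index = 0
    for letter in name[2:]:
        if not "a" <= letter <= "z":
            raise ValueError(f"not an sdX name: {name!r}")
        # Letters work like digits of a base-26 number without a zero
        index = index * 26 + (ord(letter) - ord("a") + 1)
    return index


def within_default_limit(name, max_disks=12):
    """True if the drive falls inside the disk limit (12 is the 3615/17 default)."""
    return drive_index(name) <= max_disks


for name in ("sda", "sdl", "sdm"):
    print(name, drive_index(name), within_default_limit(name))
```

With the names taken from dmesg, this shows right away which disks land beyond position 12.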