Everything posted by IG-88

-

There are several options:
- copy the file /var/log/dmesg to a share that you can reach from Windows
- connect to DSM with PuTTY and run the command "dmesg"; the output scrolls by, and you can select everything in the console and paste it into a new file via the clipboard
- enable the logging function in PuTTY and then run dmesg as before; afterwards you can copy the relevant part out of the log file

Pack the new file with zip so you can attach it to a message here in the forum.

If you have mini-SAS on the backplane and want to connect "back" to SATA, you will probably need a different cable than when going from a SAS controller to SATA. This is probably the cable you need:
https://www.delock.de/produkte/G_83318/merkmale.html?setLanguage=de

https://en.wikipedia.org/wiki/Active_State_Power_Management
I can't remember ever having set something like that in the BIOS myself, though.

That should really be in the documentation of the backplane, but for it to be controlled in some way there would have to be a controller and a connection to the mainboard. I would expect this to be optional and that it also works without the fans.

That should be optional too; SATA itself has no LED connectors. I have no experience with these DIY backplanes, though. I don't use them at home, and at work we have "ready-made" servers (HPE and the like). The last time I had something like that on my desk was more than 10 years ago.

-

The PCIe slot on the expander is just for power delivery; there is no data connection to the computer.

Normal HBAs use 8 PCIe lanes (they work with fewer, but that's the usual maximum). There are 24-port HBAs like the Broadcom SAS 9305-24i, which is based on this chip (there might be OEM versions too, but I guess they are not sold in large enough numbers to find one really cheap):
https://www.broadcom.com/products/storage/sas-sata-controllers/sas-3224
With this adapter the PCIe bus might be the limit (8 lanes PCIe 3.0 -> ~8 GByte/s vs. 24 x 6 Gbit (SATA speed) -> ~16 GByte/s), but that is probably only interesting when planning an all-flash unit, and I guess the units sold now will use M.2 NVMe tech instead of SAS.

When using mdadm for RAID that should not be a problem: every disk has an ID from the software RAID and is not identified by "position". SATA and SAS also have their own hardware identifier system (WWN):
https://en.wikipedia.org/wiki/World_Wide_Name
With the LSI SAS controller and its Linux drivers it is kind of normal that drives appear in different positions (that can get interesting when doing recovery). The handling is an OS-related thing, and DSM has some specials of its own from Synology, as they try to map drives to slots on their original units.

I never used more than one SAS controller in a DSM system, so it is new to me that even the positions of controllers might change without placing them in different PCIe slots. I expected that at least that would not change as long as there is no change in the number of cards or their placement in the PCIe slots.

Btw., when using SAS I would suggest using 3617, as it has the newer drivers and no problems like 918+ (if the 918+ LSI SAS problems are new to you, the following link may be interesting):
https://xpenology.com/forum/topic/28321-driver-extension-jun-103b104b-for-dsm623-for-918-3615xs-3617xs/
There are also limits on which LSI SAS cards are supported. The safest way is to check with modinfo or with a hex editor (the PCI IDs are plain text and can be found near "vermagic").

There is not really a complete list of tested or supported hardware. The closest thing might be the network and storage list here:
https://xpenology.com/forum/topic/12859-driver-extension-jun-103a2dsm62x-for-ds918/
and the native drivers (that come with DSM):
https://xpenology.com/forum/topic/13922-guide-to-native-drivers-dsm-617-and-621-on-ds3615/
https://xpenology.com/forum/topic/14127-guide-to-native-drivers-dsm-621-on-ds918/
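The PCIe-versus-disk bandwidth estimate in this post can be redone as a small calculation. This is only a rough sketch: it assumes the standard line encodings (128b/130b for PCIe 3.0, 8b/10b for SATA) and ignores protocol overhead, so real throughput will be lower. The function names are made up for the illustration.

```python
# Rough bandwidth comparison: PCIe 3.0 x8 uplink vs. 24 SATA ports at 6 Gbit/s.
# Assumption: only line encoding is accounted for, no protocol overhead.

PCIE3_GT_PER_LANE = 8.0      # PCIe 3.0 signalling rate per lane in GT/s
PCIE3_ENCODING = 128 / 130   # PCIe 3.0 uses 128b/130b line encoding
SATA3_GBIT = 6.0             # SATA III line rate per port in Gbit/s
SATA3_ENCODING = 8 / 10      # SATA uses 8b/10b line encoding


def pcie3_gbyte_per_s(lanes: int) -> float:
    """Payload bandwidth of a PCIe 3.0 link in GByte/s (one direction)."""
    return lanes * PCIE3_GT_PER_LANE * PCIE3_ENCODING / 8


def sata3_gbyte_per_s(ports: int, payload_only: bool = True) -> float:
    """Combined bandwidth of several SATA III ports in GByte/s."""
    rate = ports * SATA3_GBIT / 8
    return rate * SATA3_ENCODING if payload_only else rate


uplink = pcie3_gbyte_per_s(8)
disks_payload = sata3_gbyte_per_s(24)
disks_raw = sata3_gbyte_per_s(24, payload_only=False)

print(f"PCIe 3.0 x8 uplink:     {uplink:.1f} GByte/s")
print(f"24 x SATA III, payload: {disks_payload:.1f} GByte/s")
print(f"24 x SATA III, raw:     {disks_raw:.1f} GByte/s")
```

The ~16 GByte/s from the post sits between the payload and the raw figure. Either way the 24 ports together can ask for roughly twice what the x8 uplink can carry, which only matters if all ports are saturated at once (all-flash).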

-

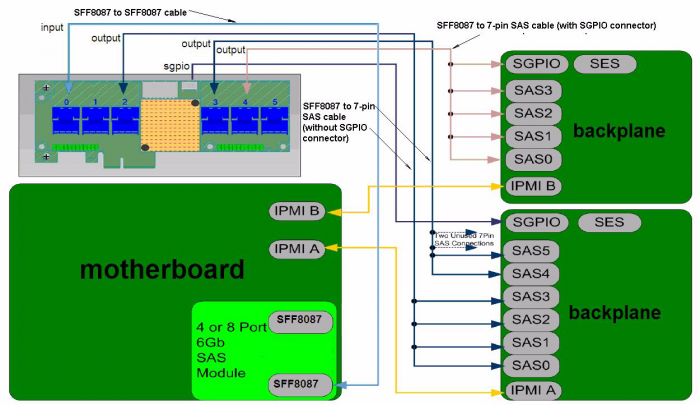

As far as I can see that's an expander and not an HBA. IMHO you would need an HBA with a SAS port, and that would be connected to the expander to multiply the SAS ports (for having multiple backplanes in a 4-8U housing where the 2x 8087 from a normal HBA are not enough).

The manual states "... This controller supports 4 inputs and 20 outputs configuration, or 8 inputs and 16 outputs configuration. ..."

Look at the light blue arrow in that picture ("input" from the HBA; in this example an onboard HBA is used).

-

Deep Learning NVR DVA

IG-88 replied to Chisee's topic in General Installation Questions/Discussions (non-hardware specific)

Without working NVIDIA kernel drivers you won't be able to use any of the packages.

You could try to extract the NVIDIA drivers from the DSM_DVA3219*.pat file and load them in 918+; the kernel version is the same. When adding support_nvidia_gpu="yes" to synoinfo.conf the drivers would be loaded automatically.

Packages can be tweaked to install on DSM systems other than the intended ones by changing the INFO file (an spk can be opened with 7zip):
https://archive.synology.com/download/Package/NVIDIARuntimeLibrary

Surveillance Station needs an extra license, and I don't know if you would need a special license, as there are extra spk's for DVA, like SurveillanceStation-x86_64-8.2.7-6222.spk vs. SurveillanceStation-x86_64-8.2.7-6222_DVA.spk.

The last tests were done here:
https://xpenology.com/forum/topic/22272-nvidia-runtime-library/
If you find out anything new, add it to this thread.
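The synoinfo.conf change mentioned above boils down to setting one key="value" line. A minimal sketch of such an edit follows, assuming the file is plain text with one key="value" pair per line. The helper name `set_conf_value` is made up for this example, and which copy of the file DSM actually reads (for example /etc/synoinfo.conf or /etc.defaults/synoinfo.conf) is an assumption to check on your own system. Back up the file before touching it.

```python
def set_conf_value(text: str, key: str, value: str) -> str:
    """Return the config text with key="value" set.

    An existing line for the key is replaced; otherwise the line is appended.
    Assumes one key="value" pair per line, as in synoinfo.conf.
    """
    new_line = f'{key}="{value}"'
    lines = text.splitlines()
    replaced = False
    for i, line in enumerate(lines):
        # Compare only the part before the first "=" so values are ignored.
        if line.split("=", 1)[0].strip() == key:
            lines[i] = new_line
            replaced = True
    if not replaced:
        lines.append(new_line)
    return "\n".join(lines) + "\n"


# Works on a string, so it can be tried out without touching a real system.
sample = 'support_disk_compatibility="yes"\n'  # sample content, made up
print(set_conf_value(sample, "support_nvidia_gpu", "yes"), end="")
```

To apply it for real you would read the file, pass its content through the function and write the result back as root.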

-

By "new desktop" I had imagined something more like a 9th/10th gen CPU. This hardware should run without problems; I use a 6th gen CPU desktop from HP for testing myself.

Did you replace extra and extra2?
Did you also install DSM 6.2.3? If you try this with a DSM 6.2.2 *.pat file, you will probably see this kind of failure after the installation.
Did you copy the new kernel files (rd.gz, zImage) from the *.pat file to the loader before installing? Putting the kernel files in place right away is effectively the test of whether the drivers work with that kernel, because the files from the *.pat file you install also end up on the loader and are used on the following boot. So it is safer to use them already for the first boot.

If it does not boot after the first boot, either something is wrong with the NIC driver or the newly booting system is completely frozen with a kernel panic. You could only see that with a serial port and a null-modem cable, so that you have a serial console; all of the output goes over it.

If it stops at the first reboot, you could also connect the disk you installed to to another PC, mount it and check /var/log/dmesg for anything interesting (or alternatively boot a rescue Linux and look with that).

You can try installing with a fresh 1.04b loader (only adjust the USB vid/pid), jun's original extra/extra2 and the first DSM 6.2.(0) (target disk preferably without partitions):
https://global.download.synology.com/download/DSM/release/6.2/23739/DSM_DS918%2B_23739.pat
If that works, do the update to 6.2.3 through the web GUI.

You can also install on another PC and then move USB + disk to the PC in question. As long as the drivers are in the driver set and the CPU is at least a 4th gen Intel, it should boot.

-

Your information is extremely vague; apart from loader 1.04b there is absolutely nothing there (aside from "new desktop").

Board, CPU and, if used, network card and storage card?
Which DSM version was installed?
What was changed on the loader? Were additional extra/extra2 files used?
What did you use as your know-how basis? Something from the forum or something else?

You must have got the USB vid/pid right already, otherwise it would have aborted during the installation.

-

Originally it's “Only wimps use tape backup. REAL men just upload their important stuff on ftp and let the rest of the world mirror it.” (Linus Torvalds)

That's mainly if you come from 6.2.1 or 6.2.2; there are differences with the drivers. 6.2.(0) and 6.2.3 are compatible, while 6.2.1 and 6.2.2 have different kernel settings (binary compiled kernel from Synology), so their drivers are different and might not work with other versions.

-

Yes, when the system is shut down the network LEDs should be lit. Check in the BIOS whether something has to be enabled for WOL. This used to be always on, but with the newer power-saving requirements it may be off by default.

-

That would be the essential part:
Kernel driver in use: ahci
Kernel driver in use: atl1c

atl1c is supported in the added drivers for loader 1.02b and 1.03b, so DSM 6.1 and 6.2 would be possible. ahci is the "normal" SATA storage driver already in the DSM kernel.

Loader 1.03b needs CSM mode if the BIOS is a newer UEFI type; 1.02b works with both old BIOS mode and UEFI (so it is easier to test with 1.02b and, if that works out, try 1.03b).
https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/

Questions like this should be answered here:
https://xpenology.com/forum/topic/7973-tutorial-installmigrate-dsm-52-to-61x-juns-loader/
There is not that much you can take over from a 5.2 version; most plugins, for example, will not work. If you have a lot of shares and users it might be worth updating, but keep in mind that the file system (like ext4) is not updated that way. The default in 6.1/6.2 is btrfs, and some functionality like snapshots or VMM relies on it (but I guess with that old, barely x64-capable hardware it is no problem, as you won't use these things). I'd suggest an update without using the old system data but keeping the RAID data volume and its file system (btrfs might be a little too much for a single-core CPU with 2 threads).

As the NIC driver atl1c is part of jun's default driver set, you should be able to use the loader without any modifications and find it in the network after booting. So try 1.02b without any changes, and if you find it in the network, change the vid/pid in grub.cfg to match the USB flash drive you used. In that state you could install 6.1. If that works you can try loader 1.03b the same way.

Before updating 6.1 to 6.2 you should read this and fix it first:
https://xpenology.com/forum/topic/26723-network-down-after-dsm-login/?do=findComment&comment=137953

Keep in mind you will not gain much in terms of NAS. 6.x might offer some more packages, but my guess is you will not see much improvement compared to the work you will invest. It is more a learning process, and if you like tinkering ...
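Changing the vid/pid in grub.cfg can also be scripted. The sketch below assumes the loader's grub.cfg contains lines of the form `set vid=0x....` and `set pid=0x....`; check your own file first. The sample values and the helper names `set_grub_var` and `usb_id` are made up for the illustration.

```python
import re


def set_grub_var(cfg: str, name: str, value: str) -> str:
    """Replace the value of a 'set NAME=...' line in grub.cfg text.

    Raises KeyError if no such line exists, so a typo in the variable
    name does not silently leave the file unchanged.
    """
    pattern = re.compile(rf"^([ \t]*set[ \t]+{re.escape(name)}=).*$", re.MULTILINE)
    new_cfg, count = pattern.subn(lambda m: m.group(1) + value, cfg)
    if count == 0:
        raise KeyError(f"no 'set {name}=' line found")
    return new_cfg


def usb_id(value: int) -> str:
    """Format a 16-bit USB vendor/product ID as 0xXXXX."""
    return f"0x{value:04X}"


# Sample content, made up for the example; a real grub.cfg has more lines.
sample = "set vid=0x058f\nset pid=0x6387\nset netif_num=1\n"
patched = set_grub_var(sample, "vid", usb_id(0x0781))
patched = set_grub_var(patched, "pid", usb_id(0x5567))
print(patched, end="")
```

The vendor and product ID of the flash drive itself still have to be read from the operating system (for example from the device manager or lsusb).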

-

For WOL to work, the MAC address of the network card has to be set in the loader's grub.cfg, and after that you also have to enable WOL in the web GUI.
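Once WOL is set up, the wake-up itself is a "magic packet": 6 bytes of 0xFF followed by the target MAC address repeated 16 times, usually sent as a UDP broadcast. The following is a minimal sketch of a sender. The function names are made up for the example, and UDP port 9 and the 255.255.255.255 broadcast address are common defaults, not something DSM requires.

```python
import socket


def build_magic_packet(mac: str) -> bytes:
    """Build a Wake-on-LAN magic packet for a MAC like '00:11:32:12:34:56'."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"not a valid MAC address: {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)  # raises ValueError on non-hex input
    return b"\xff" * 6 + mac_bytes * 16


def send_magic_packet(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Send the magic packet as a UDP broadcast on the local network."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(build_magic_packet(mac), (broadcast, port))


# Usage (the MAC below is a placeholder, use the one set in grub.cfg):
# send_magic_packet("00:11:32:12:34:56")
```

The MAC used here has to be the one the NIC really answers to, which is why the real address needs to be in grub.cfg.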

-

No, you don't want to format anything. Recent versions of Win10 can't handle these things properly; the right thing to do is nothing, just use the Win10 function to unmount the USB device.

Read this for more information:
https://xpenology.com/forum/topic/29872-tutorial-mount-boot-stick-partitions-in-windows-edit-grubcfg-add-extralzma/

-

If there is nothing else in your network, like a VLAN, that could prevent the client from accessing the DSM system, then I'm out of ideas.

Maybe try to set up a USB/disk "pair" (1.04b, DSM 6.2.3, 918+) on another system and move that already installed and running combination to the new hardware. That way you skip the install process. With the Intel NIC there should be no driver problem (and with the 8125 disabled in the BIOS there should be only 2 NIC ports, so under all conditions it should stay within the 2-port limit of 918+).

Another option is the serial port console: you could see drivers loading and failing, and even log in if the boot process runs to completion. Your board does not have a serial port connector anymore, but with 918+ (kernel 4.4.59) there is a PCIe card solution that can deliver this. A cheap WCH CH382L based card can be used as an add-on solution for a serial console (you would need a null-modem cable too, and maybe a USB-to-serial adapter for the other computer running a console program like PuTTY).
https://xpenology.com/forum/topic/36505-serial-port-com1-stages-of-operation/?do=findComment&comment=182005

-

How about adding a PCIe card with an M.2 slot, adding a small/cheap M.2 NVMe and running ESXi from that, with either the whole controller and its 4 disks in passthrough or the 4 disks as RDM to the DSM VM? The rest of the NVMe SSD could also be used as virtual SSDs for DSM as cache drive(s). With two virtual SSDs it could be a r/w cache (that is risky, as it is not redundant in hardware; with just one SSD as cache it is only a read cache, and that is not very effective in most home use scenarios).

You could also use all 4 disks as VMFS stores and have 4 thick vmdk files the size of the disk minus ~100MB (the 1st disk still holds the vmdk for booting). That way you would have 4 virtual disks of the same size to use in DSM.

Another option could be to use the RAID controller in RAID mode and have the whole space as one VMFS, using just 50MB for the boot vmdk and the rest as a vmdk for DSM. That would be a basic disk in DSM, and the RAID redundancy is in ESXi with the driver of the controller.

Why not run DSM baremetal if it is the only thing that runs on this hardware?

-

https://xpenology.com/forum/topic/6253-dsm-61x-loader/

-

The driver forcedeth.ko is still in my extended driver set. I guess you won't be able to use 918+ (min. CPU limit, see link below), but 3617 should work.

Keep in mind that loader 1.03b only supports old BIOS, aka CSM mode in a UEFI BIOS. If you have trouble finding it in the network, try loader 1.02b for DSM 6.1; that one works with old BIOS/CSM and UEFI (it also needs an extended extra.lzma for the NVIDIA chipset NIC).
https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/

-

There is no "close" when it is lower. It has to be the same number of sectors (or more); anything else will break the RAID set and the file system in it. The file system on top of the RAID set is not capable of just losing some sectors "somewhere".

I'd suggest a USB drive big enough to hold a backup. Your SSD drive might be OK, and if not you would have a backup to restore. If the drive fails you would not even need to replace it: just build a new raid0 (or JBOD) set without that 480GB drive (or replace it with a bigger one for more space in the new set) and restore your data from backup.

-

- An original DS220+ would need more RAM (default is 2GB), so €299 is not realistic with what you were planning to run (that might tip the scale towards notebook/custom build).
- Plan a USB drive for backups. RAID1 is not a replacement for backups; use a 2TB drive and keep older generations of backups available (incremental backups).
- Both a DS220+ and a notebook would prevent any additional disks (USB disks can't be used in data RAIDs, and non-Synology SATA multiplexer housings don't work with DSM; they only work if the housing has its own RAID and presents it as one volume). Most system boards come with 4 SATA ports, and a slightly bigger case will offer at least 4 disk slots (hot swap looks nice but is optional; disks are not replaced that often, and more tech-oriented people don't need the slots in the GUI to match the real hardware).
- Both a DS220+ and a notebook lack the option to extend via a PCIe slot. That could be a 10G NIC, 2 x NVMe as a PCIe card or more SATA ports. 2.5G/5G might be added by USB adapter on a DS220+ or notebook.
- Keep your backup concept in mind, like local data to NAS to USB HDD (the last one for storing backups outside your home, or at least offline in case of encryption trojans or similar). When media is stored exclusively on the NAS there is one stage missing, and when USB backups are not made that often it leaves a gap.
- If planning ahead for bigger hardware is a thing, then mini-ITX with only one PCIe slot can be a limit. A micro ATX board has more options long term (you might end up using ESXi with DSM as a VM and other services, independent from DSM, as VMs).

-

OK, so it's in grub.cfg. That part checks if there is an extra.lzma and, if so, loads it along with DSM's rd.gz; if it is not present, only rd.gz is used. The extra.lzma is used anyway, or we would not have a working loader. At the moment I'm only working on a smaller tablet, so I can't check the context in grub.cfg, but I guess it was just some test code that got removed, as extra.lzma is needed all the time to make sure the loader is working, so there is no need to check for its presence.

Check the local computer you are using to access the system. If you can use ICMP (ping) but not other ports, then maybe local firewall rules prevent access. Maybe try a different system, like a phone's or tablet's browser, to access that IP address on the ports 5000 (http) / 5001 (https).
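The "ping works but the web GUI does not" check can be done with a plain TCP connect test against ports 5000 and 5001. A minimal sketch using only the Python standard library follows; the function names and the example IP address are made up for the illustration.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


def check_dsm_ports(host: str, ports=(5000, 5001), timeout: float = 3.0) -> dict:
    """Check the DSM web GUI ports (5000 = http, 5001 = https)."""
    return {port: port_is_open(host, port, timeout) for port in ports}


# Usage (the address is a placeholder for your DSM system's IP):
# print(check_dsm_ports("192.168.1.50"))
```

If the result differs between two clients in the same network, a local firewall on the failing client is the more likely cause than DSM itself.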

-

I've not looked up the board, and your hardware listing looked like an older Xeon CPU, so I assumed it must be an older system/board. If it is a newer system, the 8125 might not work when it is a newer hardware revision of the chip. The Intel card should work out of the box with DSM 6.1/6.2.

Not sure what you have in mind; what exactly is that code?

-

Not if you already have 6.2.3 or don't have an additional extra.lzma. In both cases you can simply start the update through the web GUI and wait.

If there are errors during the update, apply this and try again:
https://xpenology.com/forum/topic/28183-running-623-on-esxi-synoboot-is-broken-fix-available/

-

Not very likely with a 6th/7th gen CPU board; the CPU is from 2015/2016 and the hardware around it will be even older.

There is an Intel NIC and a Realtek 8125. The Intel seems to be an "NC360T PCI Express Dual Port Gigabit Server Adapter". The Realtek should work too when using an additional extra.lzma; if you use one, use the new 0.13.3 for DSM 6.2.3. Remove the Intel NIC and try with the onboard NIC, and when using the Intel NIC try both ports.

Not likely. If it boots and you see the GRUB selection and then jun's message, the boot process has started, and even if you use the loader without changing grub.cfg in any way it should be seen in the network. The USB vid/pid only becomes important later, when installing a *.pat file (DSM system) to disk.

Test with loader 1.02b and try 3617 and 916+ with the Intel NIC (onboard disabled in BIOS).

-

- netif_num in grub.cfg is 1 but should be 2
- try both NIC ports
- try loader 1.02b 3615 (1.03b does not support UEFI, 1.02b supports both)
  https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/
- look for a serial port or an onboard connector for COM1; the console output is switched to the serial port, and with this present and a null-modem cable you could see what's going on

-

It's the way you have chosen by using RAID 0: the normal outcome with a non-working or removed disk is a destroyed RAID and restoring data from backup. That's the way you designed and set up your system; anything else is optional, depends on other things and might not be 100% predictable.

Besides the comments about reinserting the mSATA drive to see if the error is still there, I would suggest Clonezilla for cloning. It's free and you get a ready-to-use boot medium; as long as the new drive is not smaller it will do the job.

I think mdadm would not mind a clone of a disk on new (disk) hardware, but DSM could interfere. I never tried this scenario; my guess is that it should work.

In theory: as your old drive is still in working condition, if the clone does not do its job as intended the raid0 set should not start, and if you put back the old drive the raid0 set should start again and you can look in the logs to see what went wrong. Once you have started the raid0 with the new drive, your old drive would be invalid for that RAID set.

-

Having the real MAC address is needed for Wake-on-LAN and is convenient, but it is optional. The USB vid/pid (of the USB flash drive used for booting) needs to be in grub.cfg.

If the loader looks like it starts, you would use Synology Assistant to find it in your network (or you check the DHCP server in your network, usually the internet router).

-

running DSM 6.2 on a mac mini 4,2 baremetal or vm

IG-88 replied to Soogs's question in General Questions

Without a serial console you won't be able to tell the difference between a system that does not boot properly and a non-working network driver. Even if you see GRUB's menu and jun's message, it might simply not start the DSM kernel. An example would be 1.03b 3615/17 in UEFI mode, which does not work.