merve04

Member · Posts: 328 · Days Won: 4

Everything posted by merve04

-

I’m also converting from ext4 to btrfs. There’s probably nothing technically wrong with 6.2.3, but the fact that it can’t natively handle an LSI card, plus the fact that I require transcoding, makes 3615/17 not a viable option.

-

I do remember reading that, but I thought you said to just disable HDD hibernation. I have noticed that all the drives attached via my LSI controller have lost their health info. All in all, as much as 6.2.3 "works", my plan is to offload all my data and revert to 6.2.2u6, which is much more stable.

-

Agreed, I only have one copy of my data, with the exception of my personal docs/pics, but yeah, I guess I'd best keep things simple. I went with conservative numbers: transferring data at 50MB/s will take about 9 days one way. It just would have been nice to do it twice as fast 😉
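The 9-day estimate is simple rate arithmetic, and working it backwards shows how much data it implies. A minimal sketch, assuming decimal units (1 TB = 1,000,000 MB) and a perfectly steady sustained rate; the function names are illustrative, not from any tool:

```python
# Rough transfer-time arithmetic for a bulk copy at a constant rate.
# Assumes decimal units (1 TB = 1,000,000 MB) and a steady sustained speed.
SECONDS_PER_DAY = 86_400
MB_PER_TB = 1_000_000


def data_for_days(days: float, rate_mb_s: float) -> float:
    """Terabytes moved in `days` at a sustained `rate_mb_s` MB/s."""
    return days * SECONDS_PER_DAY * rate_mb_s / MB_PER_TB


def transfer_days(data_tb: float, rate_mb_s: float) -> float:
    """Days needed to move `data_tb` terabytes at `rate_mb_s` MB/s."""
    return data_tb * MB_PER_TB / rate_mb_s / SECONDS_PER_DAY


if __name__ == "__main__":
    size_tb = data_for_days(9, 50)  # the post's estimate: 9 days at 50MB/s
    print(f"{size_tb:.2f} TB")  # → 38.88 TB
    print(f"{transfer_days(size_tb, 100):.1f} days at 100MB/s")  # → 4.5 days at 100MB/s
```

So 9 days at 50MB/s corresponds to roughly 39TB, and doubling the rate halves the time; the round trip (offload and copy back) doubles either figure.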

-

I guess maybe I should have gotten 3x 14TB; then I could have ripped out the single-HDD volume that's just for surveillance and killed one of the parity drives.

-

Unfortunately no, I don’t have enough ports. I've got 13 drives running at the moment. I could rip out volume 2, as it’s a single HDD with nothing important on it. I'd then have physical space to add 4 more (I have a 16-bay chassis), but between the mobo and the LSI controller I only have 14 connections. It would be too insane to rip out the 2 parity drives, IMO.

-

I'm pretty sure there's an extra/extra2 mod needed on 918+ to show HDD info for drives plugged into an LSI card.

-

Thanks for that reply. I am considering doubling my RAM in the near future. Yes, they are bare drives; I will be getting them some time this week. What I was trying to say is: I still have open slots in my chassis, so wouldn’t I get faster transfer speeds if I plugged the drives in directly and had them visible under DSM? Just add one 12TB at a time as a simple volume and copy the data over? But it’s my understanding that once a drive is installed, DSM will probably want to create a system partition on it. Won’t that create a potential hassle when I try to add one drive back at a time to copy the data back over? How would my system know which drive to boot from?!? I want to format all my drives and literally start from scratch once all the data is transferred out. If it’s too complicated, I will stick with doing it over the network on a desktop machine.

-

Another thought came to mind: I would imagine that by plugging in each 12TB one at a time, I'd transfer the data substantially faster than over the network. My concern is that DSM will want to partition the drive so that it has a system partition on it. When I wipe all of the HDDs in the array (I'll want to start with a clean DSM install), those 12TBs would want to boot DSM. I'm guessing there's no really effective way around this?

-

I suppose not in that case. All I care about is that I can saturate my gigabit connection for read/write performance; anything above that is just gravy. In that case, an i5-8400 with 16GB of RAM should be able to handle that?
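To put "saturating gigabit" into numbers, here is a back-of-the-envelope sketch of the best-case throughput a 1 Gbit/s link can carry. It assumes a standard 1500-byte MTU, IPv4, and TCP with no header options; SMB/AFP protocol overhead and disk speed are not modeled, so real file copies land somewhat below the result:

```python
# Back-of-the-envelope ceiling for "saturating gigabit".
# Assumptions: 1 Gbit/s Ethernet, 1500-byte MTU, IPv4 + TCP with no header options.
# File-sharing protocol overhead and disk speed are not modeled.

ETH_OVERHEAD = 14 + 4 + 8 + 12  # header + FCS + preamble/SFD + inter-frame gap, in bytes
IP_TCP_HEADERS = 20 + 20        # IPv4 + TCP headers without options, in bytes


def raw_mb_per_s(link_bits_per_s: float) -> float:
    """Line rate in MB/s (decimal): bits -> bytes -> megabytes."""
    return link_bits_per_s / 8 / 1_000_000


def tcp_payload_mb_per_s(link_bits_per_s: float, mtu: int = 1500) -> float:
    """Best-case TCP payload throughput after per-frame overhead."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + ETH_OVERHEAD
    return raw_mb_per_s(link_bits_per_s) * payload / on_wire


if __name__ == "__main__":
    gigabit = 1_000_000_000
    print(f"raw: {raw_mb_per_s(gigabit):.1f} MB/s")  # → raw: 125.0 MB/s
    print(f"TCP payload: {tcp_payload_mb_per_s(gigabit):.1f} MB/s")  # → TCP payload: 118.7 MB/s
```

In other words, the array needs to sustain a bit under 120MB/s of reads and writes to keep a single gigabit link full; the 50MB/s figure used earlier for the offload is well below that ceiling.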

-

Well, I've read that btrfs has a performance hit compared to ext4. Is this true?

-

If any of you have seen my recent post about a file system error: I haven’t been able to fix it, so I picked up 4x 12TB to offload my data and rebuild my current array. I’m gonna return the four 12TBs when done, greasy but whatever. What I would like to know is this: I will once again have a 54TB storage pool in SHR-2, so can I create a btrfs volume and an ext4 volume on the same pool? My thought is to make a 1TB btrfs volume just for my apps and for sensitive data (which is also duplicated on 2 different cloud storage services). The second volume would be ext4, strictly for holding media. Is this doable?

-

Tutorial - Modifying 1.04b Loader to Default to 24 Drives

merve04 replied to autohintbot's topic in Tutorials and Guides

autohintbot posted the 2 files you need to replace on your USB key to get 24 drives in the first post of this thread.

-

I've read on the forums here that what you read around here about needing a valid SN (or the workaround that doesn't require an SN) for transcoding doesn't apply to Plex/Emby/Jellyfin. Something about it only being Video Station that needs to validate the /dev/dri folder. I could be wrong, but either way, it was as simple as replacing the extra and extra2 files on the USB key and I was back in business. I guess Jun's original i915 driver just doesn't work in 6.2.3, so I think what IG88 did was port Synology's original i915 driver into his extra, and now it works fine. Correct me if I'm wrong.

-

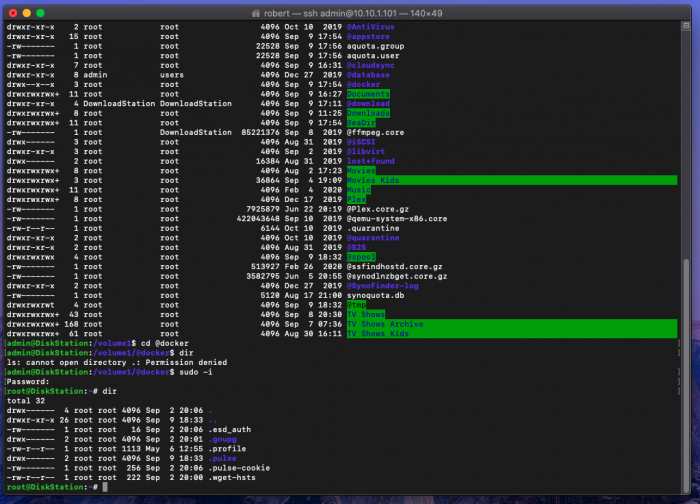

Do you, or anyone else seeing this, know how to run e2fsck? I've also read that it's more effective. The reason I'm investing my time in this is that I can literally access every other folder in my volume with no issue, but as soon as I touch @docker, DSM immediately throws an error and asks me to run a file system check.
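e2fsck checks an ext2/3/4 file system and should only be run on a file system that is unmounted. A minimal Python sketch that builds the command and, by default, only prints it (a dry run) so nothing touches the disk by accident. The `-f`, `-n` and `-y` flags are standard e2fsck options; the device path `/dev/vg1000/lv` is a hypothetical placeholder (a common layout on SHR volumes, but confirm which device backs your volume from the output of `mount` before using it), and the helper names are illustrative:

```python
from __future__ import annotations

import shlex
import subprocess


def e2fsck_command(device: str, repair: bool = False) -> list[str]:
    """Build an e2fsck argv for an ext2/3/4 block device.

    -f  force a full check even if the file system is marked clean
    -n  open read-only and answer "no" to every prompt (report only)
    -y  answer "yes" to every prompt (apply repairs)
    """
    return ["e2fsck", "-f", "-y" if repair else "-n", device]


def run_check(device: str, repair: bool = False, dry_run: bool = True) -> int | None:
    """Print the command (dry run) or execute it and return e2fsck's exit code.

    The file system must be unmounted before running this for real.
    """
    cmd = e2fsck_command(device, repair)
    if dry_run:
        print(shlex.join(cmd))
        return None
    return subprocess.run(cmd, check=False).returncode


if __name__ == "__main__":
    # Hypothetical device path; confirm yours from the output of `mount` first.
    run_check("/dev/vg1000/lv")  # prints: e2fsck -f -n /dev/vg1000/lv
```

Running the read-only `-n` pass first shows what e2fsck would change before committing to `-y`. Its exit code is a bit mask documented in the e2fsck man page (0 means no errors were found).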

-

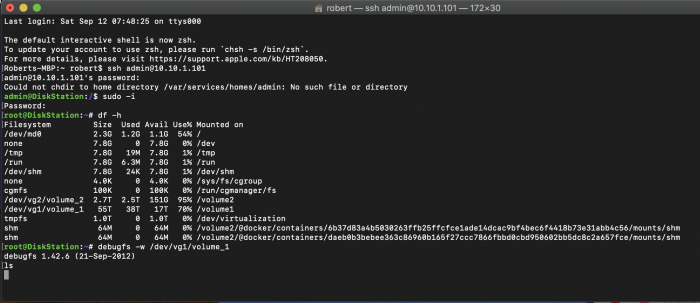

So I'm trying to see if debugfs can remove this folder, but I don't understand why, when I try to mount the volume in question, it seems to do nothing. I try an "ls" after what I believe should have mounted the volume in debugfs, but nothing happens. Am I missing something or doing something wrong?

-

Added IG88’s extra v0.13.3 to regain HW transcoding in Plex. That involved creating a new USB key, adding the 2 extra files, and booting the machine with the new key; DSM recovered the system and HW transcoding worked again.

-

Problem solved: I installed extra918plus v0.13.3, and HW transcoding in Plex works again.

-

Does anyone know how I can delete the directory? When I do an rm -rf, it gives me a "Structure needs cleaning" error. No matter how many times fsck is run, the problem remains.

-

Is anyone here running DSM 6.2.3 Update 2 with 1.04b 918+ able to hardware transcode in Plex? I’m not sure if it’s a coincidence after a recent DSM update (I was on 6.2.2u6), but I seem to have lost HW transcoding. I’m running bare metal, no extras.

-

I don't really know where else to post this, as I'm using a legit SN/MAC. I use loader 1.04b and DSM 6.2.3 Update 2, I have Plex Pass, and HW transcoding used to work just fine. I just noticed this evening that someone is streaming from my Plex server, transcoding but not with HW. So I checked my activation file:

`admin@DiskStation:/$ cat /usr/syno/etc/codec/activation.conf`

`{"success":true,"activated_codec":["h264_dec","h264_enc","mpeg4part2_dec","hevc_dec","vc1_dec","vc1_enc","aac_dec","aac_enc"],"token":""}`

What could be the problem?
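The activation file quoted above is plain JSON, so it can be read programmatically to compare what's activated against a list you expect. A minimal sketch: the field meanings are inferred only from their names in the quoted output, the helper names are hypothetical, and the "wanted" codec set is an illustrative assumption, not a statement of what Plex actually requires:

```python
from __future__ import annotations

import json
from pathlib import Path

# Path taken from the post above.
ACTIVATION_CONF = Path("/usr/syno/etc/codec/activation.conf")

# The output quoted in the post, used as a fallback when the file isn't present.
SAMPLE = ('{"success":true,"activated_codec":["h264_dec","h264_enc","mpeg4part2_dec",'
          '"hevc_dec","vc1_dec","vc1_enc","aac_dec","aac_enc"],"token":""}')


def parse_activation(text: str) -> dict:
    """Pull out the three fields visible in the quoted file."""
    data = json.loads(text)
    return {
        "success": bool(data.get("success")),
        "codecs": set(data.get("activated_codec", [])),
        "has_token": bool(data.get("token")),  # empty string -> False
    }


def missing_codecs(info: dict, wanted: set[str]) -> set[str]:
    """Codecs in `wanted` that are not listed as activated."""
    return wanted - info["codecs"]


if __name__ == "__main__":
    text = ACTIVATION_CONF.read_text() if ACTIVATION_CONF.exists() else SAMPLE
    info = parse_activation(text)
    print("success:", info["success"], "token present:", info["has_token"])
    print("activated:", ", ".join(sorted(info["codecs"])))
    # Hypothetical wish list; which codecs Plex relies on is not established here.
    print("missing:", missing_codecs(info, {"h264_dec", "h264_enc", "hevc_enc"}))  # → missing: {'hevc_enc'}
```

Against the quoted output this reports success with an empty token and lists eight activated codecs; whether any of that explains the lost HW transcoding is a separate question the parse alone can't answer.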

-

So interestingly, as I kept digging deeper and deeper into the folder structure of @docker, with DSM logged in in the background, the "system error occurred" message popped up on that last cd command.

-

So I'm back in DSM now and the error is gone. I decided to SSH into the server, and there's still an "@docker" folder with some stuff in it. Could this be the cause? Is it safe to delete?

-

I can confirm my volume is ext4, and I can also confirm that I’ve been using Docker for probably 2 years now. As you can see in the picture above, I do have a second volume for surveillance, and the only reason it’s btrfs is to support Virtual Machine Manager. My system is back to checking quota, but sure, I could install Docker on that volume. I’m just led to suspect something is still lingering after uninstalling Docker from volume1.

-

Well, this is annoying: as soon as I reinstalled Docker, the error came back!!!

-

Well, I tried my second plan. Since this all started when I was in Docker, I removed Docker and ticked the checkbox to remove all settings, files and folders. While it was removing, the "file system error occurred" message popped up. When it finished removing, I did a run/reboot; this time it took almost 1 hour checking quota, and when I logged back into DSM, voila, the warning was gone. 😎