merve04 (Member) | Posts: 328 | Days Won: 4

Everything posted by merve04

-

Is there any particular brand you've found success with, or will any card do as long as it's based on the JMB585 chipset?

-

I sincerely thank you for your time and narrowing down the likely cause of my issue.

-

Interesting, but it must be slow: 10 HDDs on a PCIe x1 bus, ouch. There's also the price. Since I'm in Canada, it's $85 + $16 of shipping/import, so that's about $135 CAD. I've looked on Newegg and could get a pair of reverse breakout cables and a pair of 5-port SATA JMB585 cards for slightly less. It's something I may consider in the future. For now, shuffling the drives around so that the bulk of the md3 array resides on the motherboard controller seems to have greatly improved write speeds. I moved a couple of 20GB files from my Mac to the NAS and gigabit was fully saturated. I'll admit it's still faster going from NAS to desktop, but this is much better than the 50-70MBps I was getting, which sometimes stalled and left transfers incomplete.
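As a rough illustration of the "10 HDDs on a PCIe x1 bus" concern, the sketch below divides an assumed link bandwidth evenly across the drives. The per-lane figures (about 500 MB/s for PCIe 2.0 and 985 MB/s for PCIe 3.0, per direction), the x1 card's PCIe generation, and the JMB585 being a PCIe 3.0 x2 part are all assumptions, and `per_drive_mbps` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Rough per-drive share of a controller's PCIe uplink when every drive streams at once.
# Assumed usable throughput per lane, per direction (after encoding overhead):
#   PCIe 2.0 ~ 500 MB/s, PCIe 3.0 ~ 985 MB/s.
per_drive_mbps() {  # usage: per_drive_mbps LANE_MBPS LANES DRIVES
  awk -v lane="$1" -v lanes="$2" -v drives="$3" \
    'BEGIN { printf "%.0f MB/s per drive\n", lane * lanes / drives }'
}

# Examples (assumed generations; the post does not state them):
#   per_drive_mbps 500 1 10   # 10 drives on a PCIe 2.0 x1 card
#   per_drive_mbps 985 2 5    # 5 drives on a JMB585 card, assumed PCIe 3.0 x2
```

Under those assumptions the x1 card leaves about 50 MB/s per drive when all ten stream at once, well below what a single desktop drive can read sequentially, while a 5-port card on two Gen3 lanes would not be the bottleneck.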

-

Could it be the difference between 3615 and 918 when using a 9211? As mentioned in a previous post, before wiping out my NAS, my HDDs were somewhat mixed between the motherboard and the LSI controller. I remember starting with only 7 HDDs, all plugged into the LSI, and as I expanded I naturally started plugging into the motherboard. So, going back to before all this started, I would have had 4x 8TB and 1x 3TB (surveillance) on the motherboard, and the remainder of the 4s and 8s on the LSI. I've kind of mimicked this again, but with 5x 8TB and 1x 4TB on the motherboard, and I moved my 3TB (the surveillance HDD, which runs standalone) to the LSI.

-

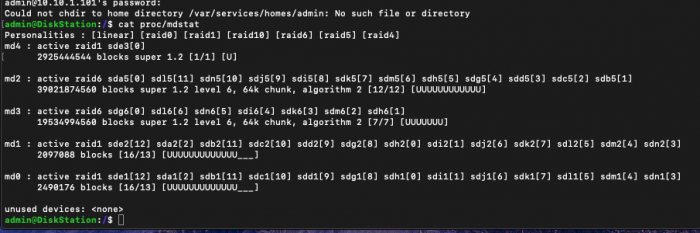

So I moved my drives around: I have 5x 8TB and 1x 4TB on Intel, and 4x 4TB and 2x 8TB on the LSI. Before, I was lucky to hit 30MBps. So I may need to rethink using the LSI controller with 918+.
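To confirm which drives actually sit on the Intel controller and which on the LSI after a reshuffle, each disk's sysfs path can be resolved; the last PCI address in that path identifies the controller it hangs off. A minimal sketch, assuming a standard Linux `/sys/block` layout on DSM; `controller_of` and `list_disk_controllers` are hypothetical helper names:

```bash
#!/usr/bin/env bash
# Print each sdX disk with the PCI address of the controller it hangs off,
# so drives on the onboard SATA controller and on the HBA can be told apart.
controller_of() {  # usage: controller_of SYSFS_PATH
  # The last PCI address (DDDD:BB:DD.F) in the resolved sysfs path is the
  # controller closest to the disk.
  printf '%s\n' "$1" |
    grep -o '[0-9a-f]\{4\}:[0-9a-f]\{2\}:[0-9a-f]\{2\}\.[0-7]' |
    tail -n 1
}

list_disk_controllers() {
  local d
  for d in /sys/block/sd*; do
    [ -e "$d" ] || continue   # glob matched nothing
    printf '%s %s\n' "${d##*/}" "$(controller_of "$(readlink -f "$d")")"
  done
}

# Usage on the NAS: list_disk_controllers
```

Disks that print the same address share a controller, so the output should split into two groups matching the Intel/LSI layout described in the post.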

-

Yes, I've decided to look into the JMB585 cards. They're a bit pricey at $66 a pop on Amazon. I found them on eBay for as low as $30-35, but I'm not always sure what I'm getting on eBay as far as quality goes. Plus I would need a couple more sets of reverse SFF-8087 to SATA breakout cables. I will try moving one drive off the LSI onto the motherboard and see if it boots fine, then rinse and repeat if all goes well.

-

You have mapped out my arrays exactly as they're configured. I did check "Enable write cache": drives 1-5 have it enabled, 7-14 do not. Should I enable it on them, or disable it on drives 1-5? Yeah, it won't let me enable it on 7-14; it says "operation failed". I did move drives around in my bays before reinstalling. I used to have 4x 8TB on Intel and 3x 8TB plus 5x 4TB on the LSI. Could this be the tipping point in performance, with the array primarily using the group of 8TB drives, which don't have cache enabled, and that killing the performance? Could I power down, move 6x 8TB to the motherboard controller, and leave the balance on the LSI controller? I'm kind of guessing here that md2 is 4TB x12 and md3 is the other half of the 8TB drives, so 4TB x5. Therefore, with 38TB of data, md2 is full and md3 is being populated? Also, just for info, all drives are run-of-the-mill desktop-grade Barracuda 5400 or 5900rpm drives.
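The md2/md3 guess can be checked against a rough model of how SHR stacks arrays: drives are cut into slices at each distinct drive size, and each slice level becomes its own md array (on DSM the first data array is typically md2). The sketch below assumes every layer is RAID 6 under SHR-2 and uses decimal TB; it is an approximation, not Synology's actual allocator, and `shr2_layers` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Rough SHR-2 layer estimate from a list of drive sizes (in TB).
# Assumption: one md array per size step, and every layer is RAID 6,
# so a layer with n member slices stores data on n - 2 of them.
shr2_layers() {  # usage: shr2_layers SIZE...
  printf '%s\n' "$@" | LC_ALL=C sort -n | awk '
    { size[NR] = $1 }
    END {
      prev = 0; total = 0; layer = 0
      for (i = 1; i <= NR; i++) {
        if (size[i] == prev) continue     # same size as the layer already counted
        slice = size[i] - prev            # height of this layer
        n = NR - i + 1                    # drives at least this large
        layer++
        if (n < 4) {
          printf "layer %d: %d x %g TB slices, not estimated by this sketch\n", layer, n, slice
        } else {
          printf "layer %d: %d x %g TB slices, %g TB usable\n", layer, n, slice, (n - 2) * slice
          total += (n - 2) * slice
        }
        prev = size[i]
      }
      printf "total: %g TB usable\n", total
    }'
}
```

For example, with the previous layout described in this post (4x + 3x 8TB and 5x 4TB, i.e. `shr2_layers 8 8 8 8 8 8 8 4 4 4 4 4`), the model gives a 12-slice layer and a 7-slice layer, 60 TB usable in total. How DSM spreads existing data across the layers is not modeled.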

-

I’m not sure what to take from this?!?

-

All drives but #5 are part of volume1. Here I'm transferring 20GB of random documents to the NAS, and I see drives 1-4 and 14 doing nothing. Drives 1-5 are plugged into the motherboard, 7-14 into the LSI 9211.
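To see per-disk activity as numbers during a transfer rather than from the GUI, two snapshots of `/proc/diskstats` can be compared. In that file the third field is the device name and the tenth is sectors written, counted in 512-byte units. A minimal sketch with a hypothetical `write_rate` helper:

```bash
#!/usr/bin/env bash
# Per-disk write throughput between two /proc/diskstats snapshots.
# Field 3 is the device name, field 10 is sectors written (512-byte units).
write_rate() {  # usage: write_rate BEFORE_FILE AFTER_FILE SECONDS
  awk -v secs="$3" '
    NR == FNR { if ($3 ~ /^sd[a-z]+$/) before[$3] = $10; next }   # first snapshot
    ($3 in before) {
      printf "%s %.1f MiB/s\n", $3, ($10 - before[$3]) * 512 / 1048576 / secs
    }' "$1" "$2"
}

# Usage while a copy to the NAS is running:
#   cat /proc/diskstats > /tmp/before; sleep 10; cat /proc/diskstats > /tmp/after
#   write_rate /tmp/before /tmp/after 10
```

Only whole disks (`sda`, `sdb`, ...) are reported; partitions and md devices are skipped. A disk that stays at 0.0 MiB/s for the whole interval is not receiving writes.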

-

    admin@DiskStation:/$ dd if=/dev/zero bs=1M count=1024 | md5sum
    1024+0 records in
    1024+0 records out
    1073741824 bytes (1.1 GB) copied, 1.50142 s, 715 MB/s
    cd573cfaace07e7949bc0c46028904ff -
    admin@DiskStation:/$ dd if=/dev/zero bs=1M count=4096 | md5sum
    4096+0 records in
    4096+0 records out
    4294967296 bytes (4.3 GB) copied, 6.02468 s, 713 MB/s
    c9a5a6878d97b48cc965c1e41859f034 -
    admin@DiskStation:/$ sudo dd bs=1M count=256 if=/dev/zero of=/volume1/Documents/testx conv=fdatasync
    Password:
    256+0 records in
    256+0 records out
    268435456 bytes (268 MB) copied, 2.03175 s, 132 MB/s
    admin@DiskStation:/$ sudo dd bs=1M count=256 if=/dev/zero of=/volume1/Documents/testx conv=fdatasync
    256+0 records in
    256+0 records out
    268435456 bytes (268 MB) copied, 1.77604 s, 151 MB/s
    admin@DiskStation:/$ sudo dd bs=1M count=256 if=/dev/zero of=/volume1/Documents/testx conv=fdatasync
    256+0 records in
    256+0 records out
    268435456 bytes (268 MB) copied, 2.59729 s, 103 MB/s
    admin@DiskStation:/$ sudo dd bs=1M count=256 if=/dev/zero of=/volume1/Documents/testx conv=fdatasync
    256+0 records in
    256+0 records out
    268435456 bytes (268 MB) copied, 2.08095 s, 129 MB/s
    admin@DiskStation:/$
    admin@DiskStation:/$ sudo dd bs=1M count=2048 if=/dev/zero of=/volume1/Documents/testx conv=fdatasync
    2048+0 records in
    2048+0 records out
    2147483648 bytes (2.1 GB) copied, 12.7959 s, 168 MB/s
    admin@DiskStation:/$ sudo dd bs=1M count=2048 if=/dev/zero of=/volume1/Documents/testx conv=fdatasync
    2048+0 records in
    2048+0 records out
    2147483648 bytes (2.1 GB) copied, 13.3068 s, 161 MB/s
    admin@DiskStation:/$
    admin@DiskStation:/$ sudo dd bs=1M count=4096 if=/dev/zero of=/volume1/Documents/testx conv=fdatasync
    4096+0 records in
    4096+0 records out
    4294967296 bytes (4.3 GB) copied, 33.0768 s, 130 MB/s
    admin@DiskStation:/$ sudo dd bs=1M count=4096 if=/dev/zero of=/volume1/Documents/testx conv=fdatasync
    4096+0 records in
    4096+0 records out
    4294967296 bytes (4.3 GB) copied, 32.0901 s, 134 MB/s
    admin@DiskStation:/$
    admin@DiskStation:/$ time $(i=0; while (( i < 9999999 )); do (( i ++ )); done)
    real 0m24.437s
    user 0m23.838s
    sys 0m0.597s
    admin@DiskStation:/$
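In the runs above, the first two pipe `/dev/zero` into `md5sum`, so they measure CPU and memory rather than disks; the `conv=fdatasync` runs write to `/volume1` and flush the data to disk before `dd` reports. To compare repeated runs, the summary lines can be averaged. A minimal sketch, assuming GNU-style `dd` summary lines reported in MB/s; `dd_avg_rate` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Average the throughput figures from dd summary lines such as
#   268435456 bytes (268 MB) copied, 2.03175 s, 132 MB/s
# Assumes every run reports in MB/s (the next-to-last field is the number).
dd_avg_rate() {
  awk '/ copied, / { n++; sum += $(NF - 1) }
       END { if (n) printf "%d runs, avg %.1f MB/s\n", n, sum / n }'
}

# Usage: dd prints its summary on stderr, hence the 2>&1.
#   for i in 1 2 3; do
#     sudo dd bs=1M count=2048 if=/dev/zero of=/volume1/Documents/testx conv=fdatasync
#   done 2>&1 | dd_avg_rate
```

Averaging a few runs smooths out the run-to-run spread visible in the transcript (103 to 151 MB/s for the 256 MB writes).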

-

I don’t have or use SSD cache. Is there benchmarking software that can be used directly in DSM?

-

I think you misread my question, so let me ask again. Is the correct procedure for reinstalling DSM just to make a new USB key and boot my machine back up? As mentioned, I'm currently on 6.2.2u6. Can I reinstall 6.2.2, or am I forced to upgrade to 6.2.3? I've seen it before where you can do a reinstall of DSM with no personal data loss, but all packages and settings are gone?

-

I really appreciate the help, but comparing my system to others?!?!?! I offloaded 38TB over 4 days via network transfer, and now when I try the same process of simply copying files from the NAS to my desktop, it starts at a crawl (10... 20... 40...) and maxes out at around 75-90MBps, whereas before it was instantly 100+MBps. Is it possible to just reinstall DSM fresh? Do I just make a new USB key and reinstall DSM?
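As a sanity check on the earlier transfer, 38TB in 4 days can be turned into an average rate and compared with gigabit Ethernet's raw line rate of 125 MB/s. A minimal sketch, assuming decimal units and a transfer that ran continuously; `avg_mbps` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Average throughput needed to move a given amount of data in a given time.
# Decimal units assumed: 1 TB = 1,000,000 MB.
avg_mbps() {  # usage: avg_mbps TERABYTES DAYS
  awk -v tb="$1" -v days="$2" \
    'BEGIN { printf "%.0f MB/s\n", tb * 1000000 / (days * 86400) }'
}

# avg_mbps 38 4   # prints: 110 MB/s
```

Sustaining about 110 MB/s for four days means the old setup ran close to gigabit saturation the whole time, which is why the current 75-90MBps peaks stand out.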

-

I just turned it off on volume1; it was set to monthly. Unfortunately, being on a Mac, I won't be able to use NASPT. I'm currently running 6.2.2u6 on 918+. Would it be worth my while to try 6.2.3 on 918+ again? Or maybe migrate to 3615 while staying on 6.2.2u6, as it has native support for the LSI 9211? In the end it still baffles me, because I never had performance issues before wiping out my NAS.

-

I've turned off "Record file access time" frequency on volume 1, and none of my shared folders are encrypted. I believe I would have selected "flexibility", as choosing "performance" does not offer SHR.
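Whether the access-time change took effect can be checked from the volume's mount options. A minimal sketch; `mount_opts` is a hypothetical helper, and the assumption that DSM reflects "never record access time" as `noatime` in `/proc/mounts` is not confirmed here:

```bash
#!/usr/bin/env bash
# Print the mount options of a mount point from /proc/mounts-style input
# (fields: device, mount point, fs type, options, dump, pass).
mount_opts() {  # usage: mount_opts MOUNTPOINT [MOUNTS_FILE]
  awk -v mp="$1" '$2 == mp { print $4 }' "${2:-/proc/mounts}"
}

# On the NAS: mount_opts /volume1
```

If the option list still shows `relatime` or no atime option at all, the setting may not have been applied to the mounted volume yet.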

-

    /dev/sda: Timing buffered disk reads: 366 MB in 3.01 seconds = 121.57 MB/sec
    /dev/sdb: Timing buffered disk reads: 440 MB in 3.01 seconds = 146.22 MB/sec
    /dev/sdc: Timing buffered disk reads: 414 MB in 3.01 seconds = 137.74 MB/sec
    /dev/sdd: Timing buffered disk reads: 388 MB in 3.01 seconds = 128.90 MB/sec
    /dev/sde: Timing buffered disk reads: 340 MB in 3.01 seconds = 113.03 MB/sec
    /dev/sdg: Timing buffered disk reads: 430 MB in 3.01 seconds = 142.88 MB/sec
    /dev/sdh: Timing buffered disk reads: 418 MB in 3.00 seconds = 139.12 MB/sec
    /dev/sdi: Timing buffered disk reads: 446 MB in 3.00 seconds = 148.57 MB/sec
    /dev/sdj: Timing buffered disk reads: 396 MB in 3.01 seconds = 131.71 MB/sec
    /dev/sdk: Timing buffered disk reads: 470 MB in 3.00 seconds = 156.66 MB/sec
    /dev/sdl: Timing buffered disk reads: 526 MB in 3.00 seconds = 175.17 MB/sec
    /dev/sdm: Timing buffered disk reads: 428 MB in 3.00 seconds = 142.52 MB/sec
    /dev/sdn: Timing buffered disk reads: 556 MB in 3.02 seconds = 184.23 MB/sec
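With thirteen disks, picking the slowest and fastest out of `hdparm -t` output by eye is tedious. A minimal sketch that summarizes it, assuming the `hdparm -t` wording shown above (device line, then a "Timing buffered disk reads" line, or both merged on one line); `hdparm_summary` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Summarize `hdparm -t` output: slowest and fastest disk.
hdparm_summary() {
  awk '/^\/dev\// { dev = $1; sub(/:$/, "", dev) }             # remember the device
       /Timing buffered disk reads/ { print $(NF - 1), dev }' |  # "rate device"
    LC_ALL=C sort -n |
    awk 'NR == 1 { lo = $1; lo_dev = $2 }
         { hi = $1; hi_dev = $2 }
         END {
           if (NR) {
             print "slowest: " lo_dev " " lo " MB/sec"
             print "fastest: " hi_dev " " hi " MB/sec"
           }
         }'
}

# Usage on the NAS:
#   for d in /dev/sd[a-n]; do sudo hdparm -t "$d"; done | hdparm_summary
```

Applied to the numbers above, the range is /dev/sde at 113.03 MB/sec to /dev/sdn at 184.23 MB/sec.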

-

It's all the exact same hardware as before the wipe. RAM is at 15% used of 16GB, and the RAID array is SHR-2, if that answers the question. How can I check the raw performance of each disk?

-

Well, I don't know if it's just my bad luck, but I'm really hating using btrfs. My system performance is absolute garbage: I do a few simple tasks and everything slows to a crawl. I'm no longer able to hit 60-70MBps download speeds with Usenet; 30 is the best I've seen, and the average is more like 15 now. When I move/rename files, I see it drop to almost 1-2MBps, and if Plex is doing a task in the library it also takes a hit. Even browsing the DiskStation from macOS in Finder is painfully slow. Is there anything I can check to improve this? The sad part in all of this is that I still haven't installed Surveillance Station and the virtual machines I had running previously.

-

I think there's a major difference between the drivers for an LSI 9211 found in the original Jun 1.04b loader and those added by extra v0.12. Here are my findings: I made a new USB with extra/extra2 v0.12 applied to loader 1.04b, booted, and installed DSM 6.2.3u2. When transferring files, it was super slow, 30-50MBps. I reflashed the same USB key with an untouched 1.04b, installed DSM 6.2.2u6, and got transfer speeds of 70-100MBps. What could be the issue?
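One way to compare the two loader builds is to check which kernel module actually claimed the LSI 9211 under each. A minimal sketch that lists PCI devices and their bound driver from sysfs; `pci_drivers` is a hypothetical helper, and the expectation that a SAS2008-based card shows up under `mpt2sas` or `mpt3sas` (depending on the kernel) is an assumption:

```bash
#!/usr/bin/env bash
# List PCI devices with the kernel driver bound to each (from sysfs).
pci_drivers() {  # usage: pci_drivers [SYSFS_ROOT]
  local root="${1:-/sys}" dev drv
  for dev in "$root"/bus/pci/devices/*; do
    [ -e "$dev" ] || continue             # glob matched nothing
    if [ -L "$dev/driver" ]; then
      drv=$(basename "$(readlink "$dev/driver")")
    else
      drv="(none)"                        # no driver bound to this device
    fi
    printf '%s %s\n' "${dev##*/}" "$drv"
  done
}

# On the NAS: pci_drivers
```

Running it once on each loader build and comparing the line for the HBA's address shows whether the two setups are even using the same driver.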

-

Today I nuked my server and reinstalled DSM, but did I do something wrong? I went into Storage Pool and created an SHR-2 pool with all the HDDs I wanted, then went into Volume and created one btrfs volume for the entire pool. It's now running a consistency check, which is extremely slow, and my whole system is bogged down. I don't recall seeing a skip option when creating the volume.
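The consistency check runs as an md resync, and its progress and speed are visible in `/proc/mdstat`. The kernel throttles resync between `/proc/sys/dev/raid/speed_limit_min` and `speed_limit_max` (KiB/s per device), aiming for the minimum while other I/O is active. A minimal sketch that pulls out the relevant lines; `mdstat_progress` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Show md arrays that are resyncing/checking, with progress and current speed.
mdstat_progress() {  # usage: mdstat_progress [MDSTAT_FILE]
  awk '/^md[0-9]+ :/ { md = $1 }                      # remember the array name
       /(resync|recovery|check|reshape) +=/ {
         op = ""; pct = ""; spd = ""
         for (i = 1; i <= NF; i++) {
           if ($i ~ /^(resync|recovery|check|reshape)$/) op = $i
           else if ($i ~ /%$/) pct = $i
           else if ($i ~ /^speed=/) spd = substr($i, 7)
         }
         print md, op, pct, spd
       }' "${1:-/proc/mdstat}"
}

# On the NAS: mdstat_progress
# Current throttle bounds: cat /proc/sys/dev/raid/speed_limit_min /proc/sys/dev/raid/speed_limit_max
```

Checking this periodically shows how far along each array is, which helps tell a slow-but-progressing resync from a stalled one.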

-

Not sure what to say: on every single version of 6.2 up to 6.2.2, DSM has displayed all the info on the HDDs attached to my LSI 9211 correctly. I'm fully aware your extra was going to break that; it's just the little things, I guess 🤷‍♂️ Why is it not an issue on 3615/3617? A different kernel? It's too bad a workaround for transcoding on those machines hasn't been figured out. If Plex had hardware transcoding support on 3615, I'd switch back to it.

-

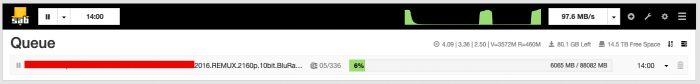

Well, I've started copying 11.8TB of data to my first drive here, and I'm happy to see that I'm transferring at 110-145MB/s.
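For a rough idea of how long that copy will take at the observed rates, here is a minimal sketch assuming decimal units and a steady rate; `copy_hours` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Estimated copy time for a given amount of data at a steady rate.
# Decimal units assumed: 1 TB = 1,000,000 MB.
copy_hours() {  # usage: copy_hours TERABYTES MB_PER_SEC
  awk -v tb="$1" -v rate="$2" \
    'BEGIN { printf "%.1f hours\n", tb * 1000000 / rate / 3600 }'
}

# copy_hours 11.8 110   # prints: 29.8 hours
# copy_hours 11.8 145   # prints: 22.6 hours
```

At 110-145MB/s, 11.8TB works out to roughly 22.6 to 29.8 hours of continuous copying.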