Everything posted by Peter Suh

-

There is one more useful thing I gained from this two-week study. The disk damage error at 56-57% is connected to the three FAT boot partitions on the USB stick: /dev/synoboot1, /dev/synoboot2, and /dev/synoboot3. The error appears when they cannot be mounted. Addons such as boot-wait (tcrp) and automount (rr) compensate for this. If these three boot partitions are not mounted properly, their nodes cannot be created, so the addons include a function that forces this. It is a method I researched and came up with myself: force-create the three nodes with the /bin/mknod command. Because a forcibly created node is not a stable node, the 56-57% disk damage error still did not go away. As a result of this study, however, I found that there is no need to fake these nodes. Linking directly to the USB device with "ln -s", as a plain symbolic link, seems to be more stable. I will discuss this improvement with wjz304 and bring it to rr so that it becomes a more stable addon.
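The symbolic-link approach can be sketched as a dry run. This is only an illustration, not the actual addon code: it assumes the USB loader shows up as a block device such as /dev/sdX with partitions 1 to 3 (a hypothetical name), and the helper `synoboot_link_cmds` is made up for this example. It prints the "ln -s" commands instead of running them, so nothing on the system is changed.

```shell
# Hypothetical helper: print (do not run) the symbolic-link commands that
# would map the three boot partitions of a USB device to the synoboot nodes.
# $1 = USB block device, e.g. /dev/sdX (an assumption, adjust to your system)
synoboot_link_cmds() {
    for n in 1 2 3; do
        echo "ln -sf ${1}${n} /dev/synoboot${n}"
    done
}

# Example (dry run only):
#   synoboot_link_cmds /dev/sdX
```

Printing the commands first makes it easy to check the device name before anything under /dev is touched.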

-

I should have tried it with a native hard drive from the beginning. I wasted several days testing in the VMware Fusion virtual environment because it was more convenient. The main purpose of porting the Redpill bootloader to a hard disk is to boot without a USB stick. In a virtual environment it is enough to use a virtual SATA disk as the bootloader anyway, so porting it to a disk seems unnecessary there. In any case, due to the nature of the Redpill lkm, a SATA SSD is claimed as synoboot the moment it is seen, and a healthy disk disappears. Only those who have at least two BASIC or JBOD type disks on a native (bare-metal) install can use this. I will improve the functionality, distribute it soon, and also upload a user guide.

-

I think I found something useful. Internally, Proxmox points to /dev/loop0 as the USB source. I think I can create synoboot even on bare metal just by creating /dev/loop0 from a dummy img.

https://github.com/PeterSuh-Q3/redpill-lkm/blob/master/tools/inject_rp_ko.sh

    # Injects RedPill LKM file into a ramdisk inside of an existing image (so you can test new LKM without constant full image
    # rebuild & transfer)
    #
    # Internally we use it something like this with Proxmox pointing to /dev/loop0 as the USB source:
    #   rm redpill.ko ; wget https://buildsrv/redpill.ko ; \
    #   IRP_LEAVE_ATTACHED=1 ./inject_rp_ko.sh rp-3615-v6.img redpill.ko ; losetup ; \
    #   qm stop 101 ; sleep 1 ; qm start 101 ; qm terminal 101 -iface serial1
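As a rough sketch of the dummy img idea, the helpers below create a zero-filled image file and print the losetup command that would attach it. The function names, the image path, and the size are assumptions for illustration only. Attaching the image needs root, so the sketch only prints that command rather than running it.

```shell
# Create a zero-filled image file.
# $1 = image path, $2 = size in MiB
make_dummy_img() {
    dd if=/dev/zero of="$1" bs=1048576 count="$2" 2>/dev/null
}

# Print (do not run) the command that would attach the image to a loop
# device; actually running losetup requires root.
# $1 = image path, $2 = loop device (defaults to /dev/loop0)
loop_attach_cmd() {
    echo "losetup ${2:-/dev/loop0} $1"
}

# Example:
#   make_dummy_img /tmp/dummy.img 16
#   loop_attach_cmd /tmp/dummy.img
```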

-

However, the new method attempted by TTG, the Redpill development group, is not a USB loader. Their development that enabled the use of a SATA DOM bootloader is what gets in the way. Among SATA disks, the first disk present is always recognized as the bootloader. So the BASIC type disk to which the bootloader has been transplanted is also claimed as synoboot, the bootloader device, and the front disk disappears. (The data is not damaged.) This is implemented on the lkm side and can be viewed as a kind of faking technique. A few days ago, I looked into the source code with the intention of recompiling the lkm and improving it. It seems that when TTG first implemented this fake SATA DOM feature, they considered it a crazy idea. However, they left a comment at the end saying that it has stabilized a lot and is usable now.

https://github.com/PeterSuh-Q3/redpill-lkm/blob/master/shim/boot_dev/fake_sata_boot_shim.c

    /**
     * A crazy attempt to use SATA disks as proper boot devices on systems without SATA DOM support
     *
     * BACKGROUND
     * The syno-modifed SCSI driver (sd.c) contains support for so-called boot disks. It is a logical designation for drives
     * separated from normal data disks. Normally that designation is based on vendor & model of the drive. The native SATA
     * boot shim uses that fact to modify user-supplied drive to match that vendor-model and be considered bootable.
     * Likewise similar mechanism exists for USB boot media. Both are completely separate and work totally differently.
     * While both USB storage and SATA are SCSI-based systems they different in the ways devices are identified and pretty
     * much in almost everything else except the protocol.
     *
     *
     * HOW DOES IT WORK?
     * This shim performs a nearly surgical task of grabbing a SATA disk (similarly to native SATA boot shim) and modifying
     * its descriptors to look like a USB drive for a short while. The descriptors cannot be left in such state for too
     * long, and have to be reverted as soon as the disk type is determined by the "sd.c" driver. This is because other
     * processes actually need to read & probe the drive as a SATA one (as you cannot communicate with a SATA device like
     * you do with a USB stick).
     * In a birds-eye view the descriptors are modified just before the sd_probe() is called and removed when ida_pre_get()
     * is called by the sd_probe(). The ida_pre_get() is nearly guaranteed [even if the sd.c code changes] to be called
     * very early in the process as the ID allocation needs to be done for anything else to use structures created within.
     *
     *
     * HERE BE DRAGONS
     * This code is highly experimental and may explode at any moment. We previously thought we cannot do anything with
     * SATA boot due to lack of kernel support for it (and userland method being broken now). This crazy idea actually
     * worked and after many tests on multiple platforms it seems to be stable. However, we advise against using it if USB
     * is an option. Code here has many safety cheks and safeguards but we will never consider it bullet-proof.
     *
     *
     * References:
     *  - https://www.kernel.org/doc/html/latest/core-api/idr.html (IDs assignment in the kernel)
     *  - drivers/scsi/sd.c in syno kernel GPL sources (look at sd_probe() and syno_disk_type_get())
     */

So, if it is possible to place a dummy SATA disk at the front, that is what I am trying to do. As a first test yesterday, I attempted to attach a dummy img to a loop device and have it acquire /dev/sda first when the kernel is loaded, as shown below. I tested it within the Friend kernel, and the script works as expected. However, it failed to take /dev/sda first as a symbolic link.

https://github.com/PeterSuh-Q3/tcrpfriend/blob/main/buildroot/board/tcrpfriend/rootfs-overlay/etc/udev/rules.d/99-custom.rules

https://github.com/PeterSuh-Q3/tcrpfriend/blob/main/buildroot/board/tcrpfriend/rootfs-overlay/root/load-sda-first.sh

Now, as a second test, I excluded the Friend kernel and injected the above content into initrd-dsm, as shown below, to run the same test in Junior, which is the DSM kernel stage.

https://github.com/PeterSuh-Q3/tinycore-redpill/commit/34ed365357820d7c54552ce2114208670805ff13

Do you think this method will be effective?

-

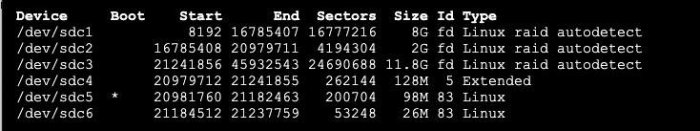

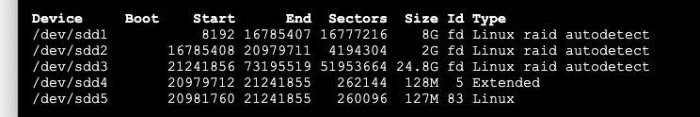

This project started about two weeks ago at the suggestion of @wjz304. A guide that does this with Jun's loader on DSM 6 already exists, but that method requires a lot of manual work in MS Windows. For Redpill, I plan to provide a simpler automatic script as a menu function.

https://github.com/PeterSuh-Q3/tinycore-redpill/blob/main/menu_m.sh#L1688

tcrp-mshell differs slightly from rr: as its name suggests, tcrp is a Redpill loader that uses Tiny Core Linux, so it includes one more kernel. The kernels used by rr and tcrp are as follows.

1. rr kernel (the friend kernel in tcrp)
2. dsm kernel (same for tcrp)
3. tc kernel (tcrp only)

In rr, the rr kernel performs all the functions that the tc kernel and the friend kernel perform in tcrp. This is why the menu starts directly with ./menu.sh in the rr kernel.

I explained these kernels because, ultimately, these kernel files (2 per kernel) must be ported to the hard drive. The existing DSM 6 Jun's loader had a kernel size of only 50M, so the 100M of spare space at the end of the first hard drive was sufficient. Redpill, however, carries several kernels, and the files for each kernel are close to 100M.

1. tc kernel (20M; the mydata.tgz backup file varies in size)
2. friend kernel (61M, fixed size)
3. dsm kernel (82M based on ds3622xs+ 7.2.1-69057; initrd-dsm varies in size [it includes driver modules and addons for each model])

On the original USB stick, all of this sits in the 3rd partition, which has a lot of free space. It is impossible to store all of it in 100M on one hard drive. So I give up item 1, take only items 2 and 3, and use 2 hard drives. Two hard drives created as Basic or JBOD type are required.

Assuming each hard drive on which the storage pool is created is one of those two types, it consists of the system partitions (8G + 2G) and the data partition (the entire remaining area). I had thought there was only about 100M of space behind the data partition. If you look closely, there is about 128M more free space between the system partitions and the data partition: the Start / End sectors are not contiguous, and the space between them is empty. In that gap, create an extended partition, sd#4, and create additional logical partitions inside it where the bootloader partitions should live.

This capture shows bootloader partitions 1, 2, and 3 split across two hard drives.

The first partition has 98M of space and contains the friend kernel (item 2 above). Because the location of the kernel changed, a separate grub.cfg was modified. In the existing bootloader, grub is set to search for (hd0,msdos1) and boot from it. Use grub-install to readjust it to point to (hd0,msdos5) = /dev/sd#5. Mark /dev/sd#5, which corresponds to the first partition of the bootloader, as Active so that the hard drive can boot grub on its own.

The second partition does not require a lot of space, but it holds an important file: rd.gz extracted from the original DSM pat file. When an update for SmallFixVersion is detected, it is used to process the update automatically through automatic ramdisk patching. Currently, it is the file required to automatically update DSM 7.2.1-69057 from U0 to U4.

The third partition contains the DSM kernel for each model. These kernel files keep changing and are regenerated every time you rebuild the loader. The intermediate file custom.gz (which contains rd.gz and the drivers) is also stored here. Its size is not trivial, and the final result, initrd-dsm, contains almost the same content, so I found a way to exclude it and have already reflected this in the recent friend kernel version upgrade.

In the case of rr, custom.gz and initrd-dsm contain far more modules (e.g. the wifi modules) than in tcrp. As it stands, 2 hard drives are not enough. I am not sure whether wjz304 is aware of this and is adjusting the kernel size accordingly.

With this preparation in place, you can boot from the implanted bootloader using only two BASIC type hard drives. To do so, remove the original bootloader, whether USB or SATA DOM. Up to this point, I have implemented the bootloader script and completed the first test. Following this topic, I would like to explain separately the problems I ran into and the solutions I am considering.
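The space arithmetic in this post can be checked with a few lines of shell. This is a minimal sketch with made-up helper names; it assumes 512-byte sectors and takes Start / End sector values as they would be read from a partition listing. The sector numbers in the example are hypothetical; only the sizes (61M, 98M, 100M, 128M and so on) come from the post.

```shell
# Free sectors between the End sector of one partition and the Start
# sector of the next one.
# $1 = end sector of the earlier partition, $2 = start sector of the later one
gap_sectors() {
    echo $(( $2 - $1 - 1 ))
}

# Convert a count of 512-byte sectors to whole MiB (assumes 512-byte sectors).
sectors_to_mib() {
    echo $(( $1 * 512 / 1048576 ))
}

# Succeeds when a payload of $1 MiB fits into $2 MiB of free space.
fits() {
    [ "$1" -le "$2" ]
}

# Example with hypothetical sector numbers that leave a 128 MiB gap:
#   sectors_to_mib "$(gap_sectors 999 263144)"
#   fits 61 98 && echo "friend kernel fits in the 98M partition"
#   fits $((20 + 61 + 82)) 100 || echo "all three kernels do not fit in 100M"
```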

-

Tutorial: Bootloader + DSM 6.1 + Storage on a single SSD

Peter Suh replied to TyphoonNL's topic in Tutorials and Guides

@wjz304 and I are trying it. If possible, we will try to make this easy to do within DSM without using Windows. Redpill has a problem with the 100 MB limit per disk, because it needs more than 100 MB. I am also thinking about using multiple disks. There is a method of creating partitions through sfdisk that Synology uses for VDSM. I analyzed the contents of the file below, provided by @wjz304. /usr/syno/sbin/installer.sh

@littleMOLE, @gericb There is one more thing you need to know. Atom CPUs, even though they are 1st generation Intel, are excellent models that support the MOVBE ("Move Data After Swapping Bytes") instruction. https://www.cpu-world.com/CPUs/Atom/Intel-Atom 230 AU80586RE025D.html If this instruction is supported, most REDPILL models can be installed without restrictions. Even DS918+, which requires transcoding, can be installed.
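On a Linux system, one way to check for this instruction is to look for the movbe flag in /proc/cpuinfo. The helper below is a small sketch (the function name is made up for this example); it takes an optional file path so it can also be pointed at a saved copy of cpuinfo.

```shell
# Succeeds when the given CPU flag appears on a "flags" line.
# $1 = flag name (e.g. movbe), $2 = cpuinfo file (defaults to /proc/cpuinfo)
cpu_has_flag() {
    grep '^flags' "${2:-/proc/cpuinfo}" | grep -qw -- "$1"
}

# Example:
#   cpu_has_flag movbe && echo "movbe supported" || echo "movbe missing"
```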

-

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

[NOTICE] Distribution of nvmevolume-onthefly addon (immediate version)

This addon further complements the existing nvmevolume addon. I originally tried to meet requests from users who asked whether a volume could be created on NVMe alone, without any SATA type disk, but after several days of testing it did not work well. SATA disks still need to be present; what is improved is that the NVMe volume becomes available immediately.

The reason for developing this immediate version is that the existing version uses a service scheduling method. Even though libhwcontrol.so.1 is hex-patched once when the DSM installation completes, at least one more boot is required before the volume appears. Because users were not aware of this, many of them downloaded the patch script from the original author, @007revad, and ran it a second time. This improved, immediate version lets you confirm the volume conversion right at the first login after installing DSM and creating an account.

Analyzing @007revad's script, I found that it ends up patching only a single hex value, just like activating the NVMe cache on DS918+ in the past. One line of xxd command processing is enough. The original is backed up to /lib64/libhwcontrol.so.1.bak.

https://github.com/PeterSuh-Q3/tcrp-addons/blob/main/nvmevolume-onthefly/src/install.sh

I don't know if this is the right expression, since my native language is not English, but I would call @007revad's script method "AFTER SHOT". I think most REDPILL addons should work "ON THE FLY". Otherwise, a reboot must always follow the script.

Testing has only been completed on version 7.2, so I hope users can verify older versions. As always, you must rebuild the loader to switch to the new addon. This addon also seems to help maintain the continuity of a volume that temporarily disappears after migrating to another model or installing a version upgrade.
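The general shape of such a one-byte patch, with a backup taken first, might look like the sketch below. It is not the addon's install.sh: the function name is hypothetical, the offset and byte value must come from the real patch and are deliberately not given here, and it uses printf with dd rather than xxd so that it also runs where xxd is not installed.

```shell
# Overwrite a single byte in a file after saving a ".bak" copy next to it.
# $1 = file, $2 = byte offset (decimal), $3 = new byte as two hex digits
patch_byte() {
    cp -p "$1" "$1.bak" || return 1
    # Turn the hex digits into an octal escape and write that one byte
    # at the offset without truncating the rest of the file.
    printf "\\$(printf '%03o' "0x$3")" |
        dd of="$1" bs=1 seek="$2" conv=notrunc 2>/dev/null
}

# Example on a scratch copy (offset and value here are placeholders):
#   patch_byte ./libhwcontrol.copy 2 5a
```

Trying it on a scratch copy first and comparing it with the backup is a cheap way to confirm that exactly one byte changed.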

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

The current mechanism of the nvmevolume addon is copied from 007revad: the volume is activated only after the scheduled task has run at least once as a service after DSM is installed. I am trying to improve nvmevolume and change it to an on-the-fly method. I would also like to see whether volume conversion is possible at the DSM junior stage with only NVMe, which is what users want. If the NVMe volume is visible at the junior stage, a separate SATA disk is probably unnecessary. Whether the test succeeds or fails, I will share the results.

rr already stably supports mpt3sas on sa6400. Recently, mshell also adopted the latest versions of the mpt3sas and r8125 modules. Stable operation has been confirmed on sas2008 and sas3008. I confirmed that rr and arc currently share the latest mpt3sas version, 24.2.0. I think the only difference is the disks addon. When I verified Arc's disks addon, it was also working well. Please share your disk list and /etc/model.dtb file while running the arc loader. Use the commands below.

    ll /sys/block
    cat /sys/block/sata*/uevent | grep 'PHYSDEVPATH'
    grep 'pciepath' /sys/block/sata*/device/syno_block_info
    grep 'ata_port_no' /sys/block/sata*/device/syno_block_info

-

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

This is not a case where postupdate should be used; postupdate is no longer needed. Did you rebuild your loader for 7.1.1-42962? You should not update without rebuilding the loader.

I can't get DSM 7 to work. - tinycore-redpill - Usb boot.

Peter Suh replied to javi930's topic in The Noob Lounge

It is the recommended setting for the HP N54L, but have you tried disabling C1E? Also, instead of connecting to port 5000, please access port 7681 at the address below using a web browser.

192.35.18.139:7681

You can log in as root without a password. Afterwards, please post the results of running the commands below.

    dmesg
    cat /var/log/*rc*
    ll /sys/block

In jim3ma's solution above, the supported AMD chipsets are limited to the 680M, 780M, and RX6900XT. There is also a solution that likewise uses PVE but covers all GPUs (iGPU, dGPU) in a more general way: it uses an LXC container with Docker installed in PVE. I have not tried installing this in my PVE yet; I think I will try it tonight. The installation guide is below. https://www.youtube.com/watch?v=5q4bFIff8ro For other installation methods, search Google for Proxmox lxc. I'm Korean, so I mostly see Korean guides. I think the guide from the Korean server forum is the best; I recommend translating it into your language. https://it-svr.com/proxmox-lxc-container/

-

Are you perhaps mistaking the console screen for the DSM management window? It is normal that you can type on the console at first, during kernel loading, and that it becomes unresponsive once the actual kernel loading begins. There is no evidence that you used a web browser. To manage DSM, go to find.synology.com or enter the address with port 5000 shown on the screen above directly in your web browser. I also need evidence that you opened a separate terminal window and attempted a ping.

-

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

I don't think this will work. In my case, I had a DS918+ SN and QC and migrated to DS920+. So this process alone did not lead to a ban.

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

@Captainfingerbang I asked you a favor. Did you not see it?

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

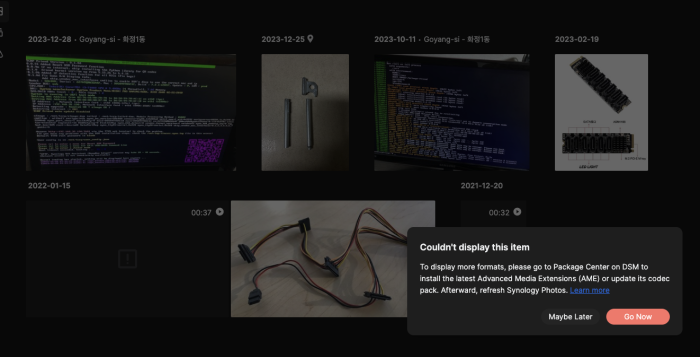

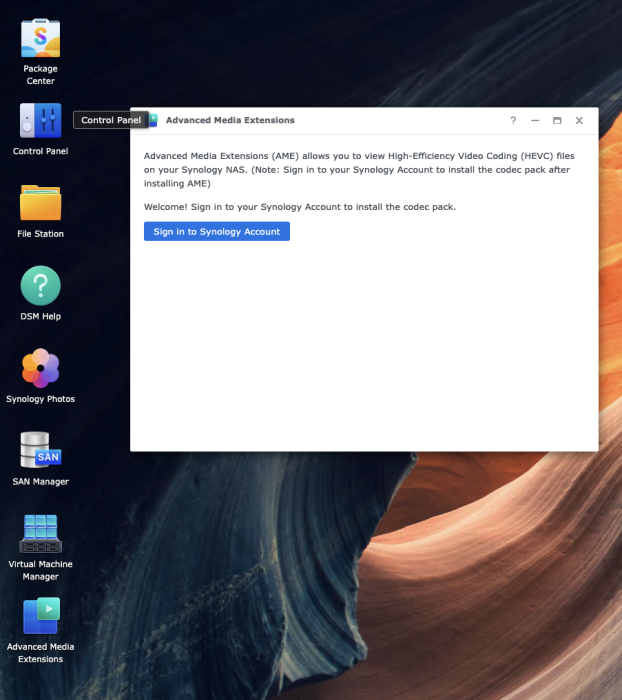

The test confirmed that video thumbnails in Photos require a licensed AME on SA6400 as well. Given these results, there is no difference between SA6400 and DS2422+ for this purpose alone. One more tip: if you have at least one genuine SN/MAC, QC is maintained even if you change the model. I don't know if I'm just lucky, but I haven't been banned yet.

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

I'm sorry. I knew that the 5900X is a model without an iGPU, but I overlooked it. If you decide to migrate to a model other than DS918+, I recommend trying SA6400 instead of DS2422+. On SA6400, if you have an Intel iGPU, H/W transcoding is handled easily. There is no need to install AME, nor is a genuine serial or MAC required. Codec patches are also unnecessary. However, I am also curious how SA6400 behaves without an iGPU. If H/W transcoding is not possible, at least S/W transcoding should work. I also have a system that runs on a Xeon CPU alone, without an iGPU. I will test how SA6400 works on it and tell you the results.

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

You don't seem to have read my answer carefully. On bare metal, transcoding with an AMD iGPU is not possible. This covers all media transcoding: not only video transcoding such as Plex, but also the photo transcoding that works through i915.ko. With AMD there are clearly things you have to give up. Think again. On the Korean server forum where I am active, proxmox + lxc solutions are recommended for AMD transcoding. It is said that with lxc an AMD iGPU can also be used for transcoding. I have no actual installation experience myself. Some users are only sharing information that it is possible, as shown below. If you can translate it, please refer to the search results below. https://svrforum.com/index.php?mid=nas&act=IS&search_target=title_content&is_keyword=AMD+lxc

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

I understand that the Arc loader provides various crack tools that violate the license and can irritate Synology. This is a sensitive matter. @dj_nsk, @Captainfingerbang I hope you two will no longer mention ARC's license violations in this topic. I do not agree with ARC's violations. Please ask in the ARC section.

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

Question #1 -> If it doesn't work on DS918+, it seems that the NIC is not responding in the first place.

Question #2 -> Even if you do not use the latest .img, the Friend kernel is always updated automatically to the latest version.

Question #3 -> I have not been able to check the Synology_HDD_db script that was recently changed by 007revad. I encourage you to keep using it as you do now.

Question #4 -> Container Manager used to be called Docker. Docker is supported on all platforms. I don't know exactly whether Docker issues arise from migration between models.

Thank you.

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

There is no model or platform you have to give up just because you use AMD Ryzen. You can think of Redpill as being developed cross-platform. With Ryzen, you only need to remember a few limitations.

1. Since it is not an Intel iGPU, transcoding is not possible.
2. If you need to use VMM, select one of the models that use the v1000, r1000, or epyc7002 platforms.
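The VMM rule in item 2 can be written down as a small check. This is only a sketch of the rule as stated in this post; the function name is made up, and the platform string is assumed to be passed in lowercase exactly as written above.

```shell
# Succeeds when the given platform is one of those named above for running
# VMM on AMD Ryzen (v1000, r1000, epyc7002).
# $1 = platform name in lowercase
amd_vmm_platform() {
    case "$1" in
        v1000|r1000|epyc7002) return 0 ;;
        *) return 1 ;;
    esac
}

# Example:
#   amd_vmm_platform epyc7002 && echo "VMM-capable platform for Ryzen"
```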