-

Posts

2,638 -

Joined

-

Last visited

-

Days Won

139

Everything posted by Peter Suh

-

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

I thought you'd stop here, but I guess I'll have to explain again. The DSDT/SSDT that macOS users associate with a "device tree" belong to ACPI only. That is beside the point here, because ACPI is mainly about power management. What we should focus on in the Synology Device-Tree is the storage device tree, such as disks.

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

(macOS DSDT / SSDT)

The Differentiated System Description Table (DSDT) and the Secondary System Description Table (SSDT) are part of the Advanced Configuration and Power Interface (ACPI) standard. These tables are provided by the ACPI BIOS and describe the computer's hardware. They contain the information an ACPI-aware operating system needs to manage system resources and hardware.

- DSDT (Differentiated System Description Table): part of the ACPI standard, it primarily provides platform-specific information. It is mainly updated through BIOS updates from the motherboard manufacturer.
- SSDT (Secondary System Description Table): used as a complement to the DSDT. It is also an ACPI table and usually provides additional information about system resources. Many SSDTs are created at system boot and are used once the OS boots.

These tables support hardware functions such as energy management, power management, and device configuration. In macOS you often need to modify or customize them, typically for Hackintosh builds or to address specific hardware compatibility issues. DSDT and SSDT files are loaded onto the system through the EFI partition or kernel extensions in macOS. Modifying them requires ACPI-specific tools and scripts, and it must be done carefully: incorrect modifications may cause system instability or boot problems.

(The Linux Device Tree)

The Linux Device Tree is a standardized way to describe hardware to the Linux kernel. It is used especially in embedded systems, where it supports different hardware configurations and provides information about the platform. Device Tree serves a purpose similar to the existing BIOS and ACPI tables, but describes devices in a tree structure rather than a static structure.

A Device Tree is a text file that describes hardware and device information for a specific platform. These text files usually have a .dts or .dtsi extension. They are compiled with the Device Tree Compiler (dtc) into a .dtb (Device Tree Blob) file. This .dtb file is loaded when the Linux kernel boots and defines the configuration of the hardware.

The main advantages of Device Tree are:

1. Hardware abstraction: Device Tree describes the specific configuration of the hardware, allowing the same Linux kernel to be used on multiple hardware platforms.
2. Dynamic loading: Device Tree can be dynamically loaded by the kernel at runtime. This is useful when adding or removing devices from the system.
3. Separation of source code: Device Tree is provided as an independent file that describes the hardware configuration, so it is separated from the Linux kernel source code.
4. Readability: Device Tree is provided in text format, making it easy for humans to read and understand.

The structure of the Device Tree is as follows:

- Root Node: the highest node of the Device Tree, which serves as the parent of the lower nodes.
- Device Node: a node that contains information about a device; its child nodes describe the device in more detail.
- Property: a key-value pair belonging to a node that represents a characteristic of the device.

Device Tree is primarily used on embedded Linux systems, but can also be used on some desktop systems. It is especially widely used in systems based on the ARM architecture.

As far as I know, these two concepts are unrelated. Don't you agree?
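To make the root node / device node / property structure above more concrete, here is a minimal sketch. The node names and `compatible` strings are made up for illustration and are not taken from any Synology model. It only writes a tiny .dts file and compiles it with dtc if dtc happens to be installed.

```shell
#!/bin/sh
# Write a tiny, hypothetical device tree source file.
# "example,board" and "example,disk" are invented names, not real bindings.
write_example_dts() {
    out="$1"
    cat > "$out" <<'EOF'
/dts-v1/;

/ {                                   /* root node */
    compatible = "example,board";     /* property: key = value */
    #address-cells = <1>;
    #size-cells = <0>;

    disk@0 {                          /* device node, child of the root */
        compatible = "example,disk";
        reg = <0>;
    };
};
EOF
}

# Compile .dts to .dtb with the Device Tree Compiler, if it is available.
compile_dts() {
    src="$1"
    dtb="$2"
    if command -v dtc >/dev/null 2>&1; then
        dtc -I dts -O dtb -o "$dtb" "$src"
    else
        echo "dtc not installed; skipping compile" >&2
    fi
}
```

Usage would be something like `write_example_dts /tmp/example.dts` followed by `compile_dts /tmp/example.dts /tmp/example.dtb`.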

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

What is this about? Please send me the specific link so I can take a look.

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

rr seems to have been stabilized for SA3600, but mshell has not been yet. I am not sure what advantages SA3600 offers.

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

You seem to be talking about hot-plugging SATA or SAS disks. Device-Tree based models such as SA6400 do not seem to support disk mapping on hot-plug. Newly added disks only get their mappings after a reboot. On a genuine Synology, this reboot is probably unnecessary.


TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

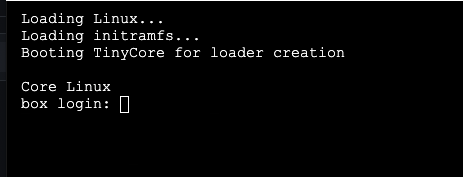

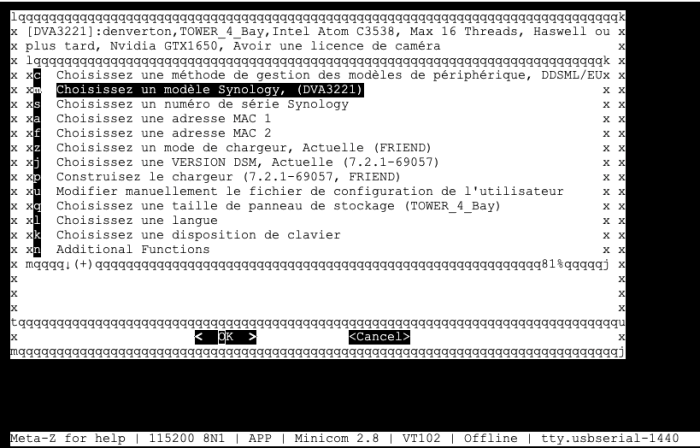

@Orphée I have adjusted TinyCore Linux so that menu.sh can be used after logging in as the tc user through the serial COM port, as you requested. Four windows appear on the monitor console as usual, and the menu can be accessed separately through the COM port. You need to log in as tc / P@ssw0rd. After the feature is released, the automatic update of corepure64.gz must run once before it starts working.

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

The VM should start automatically, in the state handled by sanrepair.sh, without any further adjustments. It would have helped to follow the contents of /var/log/synoscgi.log more closely when the errors occurred. If you have already migrated to Proxmox, it will be difficult to check any further.


TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

As you pointed out, there were still remaining issues. Among the command line options applied since Friend kernel 0.1.0d, skip_vender_mac_interfaces appears to be the direct cause of the SAN MANAGER damage. rr is prepared to use this option, but mshell does not seem to be. I updated the Friend kernel to 0.1.0j to remove this option and have now confirmed once again that SAN MANAGER is stable.

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

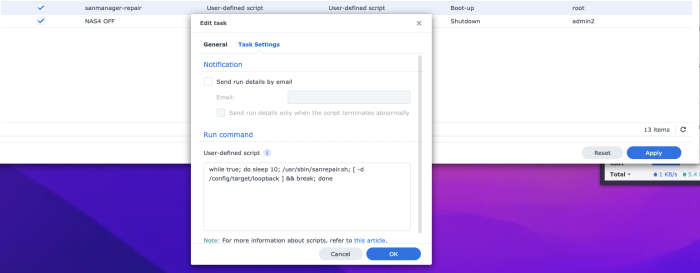

The vmm-repair addon is now deprecated. The sanmanager-repair addon alone can restore both SAN MANAGER and VMM. It now runs as a boot-up scheduled task rather than as a service, which allows more stable operation and control.

https://github.com/PeterSuh-Q3/tcrp-addons/blob/main/sanmanager-repair/src/install.sh

Automatic startup of individual VMs within VMM is now possible.

https://github.com/PeterSuh-Q3/tcrp-addons/blob/main/sanmanager-repair/src/sanrepair.sh

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

There was a little more progress today. There was no need for the complicated step of figuring out the uuid. VMM failed to create its directories simply because the iscsi and loopback folders do not exist under /config/target. The fact that iscsi and loopback are not created automatically is probably related to the SAN MANAGER damage.
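A quick way to check for the two folders mentioned above might look like the sketch below. The function name is hypothetical and this is not the code used in the addon. It only reports what is missing and changes nothing.

```shell
#!/bin/sh
# Print the expected target subdirectories that do not exist.
# The root defaults to /config/target, the path named in the post.
missing_target_dirs() {
    root="${1:-/config/target}"
    for name in iscsi loopback; do
        [ -d "$root/$name" ] || echo "$root/$name"
    done
}
```

Running `missing_target_dirs` with no argument on the DSM box prints nothing when both folders are present.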

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

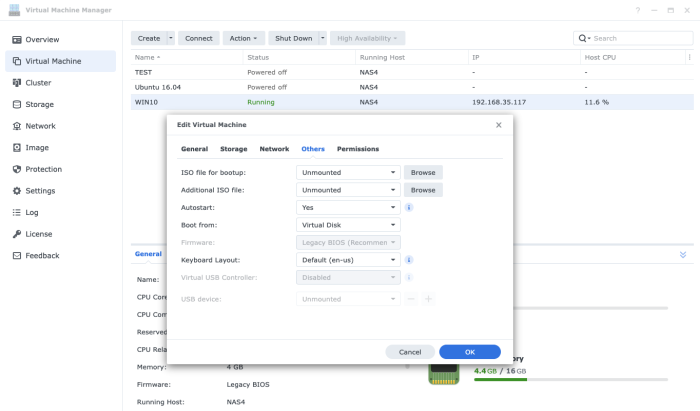

However, there is one caveat. For VMM, the real CPU platform must match the CPU platform of the DSM model. Intel and AMD cannot be mixed. VMM can run on DS920+ (Gemini Lake), which is Intel-based, but not on DS923+ (r1000), because that is an AMD-based DSM.

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

This won't help if your migration involves switching to a different model. SAN MANAGER appears to work fine on the first boot after the migration completes, but it is lost again on reboot. If you want to reinstall from DS920+ to DS923+, you can do so after rebuilding the loader. The DS923+ DSM is reinstalled only in the system partition area, so it does not touch the data partition. All settings are reset, so prepare a backup of your settings (dss files) and packages and use them to restore after the reinstallation. For detailed instructions, please refer to the Synology KB.

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

What you seem to mean is that the latest version of mshell cannot be trusted unconditionally.

I don't know if you are aware of this problem, but I changed redpill-load/bundled-exts.json to force the use of the mac-spoof addon. This was in effect from December 28, 2023 to January 2, 2024.

https://github.com/PeterSuh-Q3/redpill-load/commit/87ecb48fba5bdeb602b9e03c7dc1e7acba6da491

https://github.com/PeterSuh-Q3/redpill-load/commit/0ed22a488cc2b9d7ec198ea4e3531875056ec35c

Users who built the loader during those 5 days suffered damage to their SAN MANAGER and VMM because of the forced mac-spoof. Since then, mac-spoof has never been forced.

After performing a clean installation with the latest version of mshell, I tested VMM on DS3622xs+. It worked without problems.

Also, friend's prod and dev do not include the version switch functionality you want. Their only purpose is to switch between the development and production versions of the redpill.ko kernel module.

I'm sorry, but I have to tell you that the only fundamental solution so far is a clean installation. I apologize for causing you this pain through my mistake of applying mac-spoof without sufficient understanding. I will continue to do as much as possible to restore SAN MANAGER and VMM while avoiding a clean installation, which is the last resort. For now, there is nothing I can do for you other than "wound dressing". As you said, it seems difficult to start VMM automatically within this "wound dressing".

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

[NOTICE] The vmm-repair addon has also been released. If you rebuild the loader, it will be added and run. When you run the VM for the first time, an error may occur once because the uuid has not yet been found, but it will start normally after that.

https://github.com/PeterSuh-Q3/tcrp-addons/blob/main/vmm-repair/src/vmmrepair.sh

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

Here is a solution for recovering VMM after SAN MANAGER has been damaged and the VMM connected to it has been damaged as well. It is necessary to create the system /config directories used by VMM and adjust the permissions of the files within them. The key is to create a directory twice and grant permission twice. Ultimately, I will create a vmm-repair addon and distribute it.

The directories to be restored, and the files requiring permission, are shown in the log. Run tail with root privileges:

tail -f /var/log/synoscgi.log | grep "No such file or directory"

As the tail log shows, the following processing is required. This example uses my uuid, so it will differ in your environment.

mkdir -p /config/target/iscsi/iqn.4931fa37-41ab-44bc-b472-5c8ea14a36b2

chmod 777 /config/target/iscsi/iqn.4931fa37-41ab-44bc-b472-5c8ea14a36b2/tpgt_1/attrib/demo_mode_write_protect

mkdir -p /config/target/loopback/naa.4931fa37-41ab-44bc-b472-5c8ea14a36b2

chmod 777 /config/target/loopback/naa.4931fa37-41ab-44bc-b472-5c8ea14a36b2/tpgt_1/address

The above processing may be required for each VMM VOLUME.
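Since the same four commands may have to be repeated for each VMM volume, a small helper that only prints them for a given uuid can save typing. This is a sketch under my own assumptions (the function name and the optional root argument are hypothetical) and is not the code of the addon. It prints the commands and does not run them, so you can review the output first.

```shell
#!/bin/sh
# Print the mkdir/chmod steps from the post for one VMM volume uuid.
# Nothing is executed; pipe the output to sh as root once you have checked it.
san_repair_cmds() {
    uuid="$1"
    root="${2:-/config/target}"   # path taken from the post
    echo "mkdir -p $root/iscsi/iqn.$uuid"
    echo "chmod 777 $root/iscsi/iqn.$uuid/tpgt_1/attrib/demo_mode_write_protect"
    echo "mkdir -p $root/loopback/naa.$uuid"
    echo "chmod 777 $root/loopback/naa.$uuid/tpgt_1/address"
}
```

For example, `san_repair_cmds 4931fa37-41ab-44bc-b472-5c8ea14a36b2` prints the four commands listed above.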

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

With the release of the sanmanager-repair addon a few days ago, SAN MANAGER and VMM start successfully again.

https://github.com/PeterSuh-Q3/tcrp-addons/tree/main/sanmanager-repair

However, I am receiving reports that VMs created within VMM do not actually run. I am aware of this problem too, but I haven't found a solution yet.

Please try SA6400 with whichever loader you are comfortable with, whether it is rr or my mshell. Here is the topic by @MoetaYuko, who developed the i915 transcoding module for SA6400 last month. He was the first to test and stabilize SA6400 transcoding on the Alder Lake N100.

https://xpenology.com/forum/topic/69865-i915-driver-for-sa6400/

However, if the N100 board uses the i226-v chipset, the NIC may become an issue.

-

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

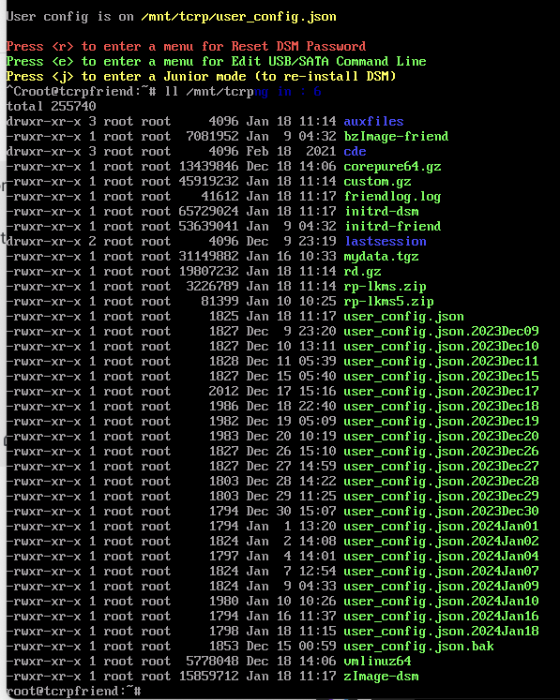

It looks like your user_config.json file has become corrupted for some reason. On this Friend boot kernel, the corrupted file is located at /mnt/tcrp/user_config.json. You will probably see that its size is close to 0. If you have built the loader several times, you may have multiple backups, as shown in the image. From these backups, you can restore the file that contains your settings using the cp command. I think the Friend kernel needs one more improvement: when user_config.json is corrupted like yours, I will change it to stop booting and show guidance.
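The check and restore described above could be sketched as follows. The function names are hypothetical, and the backup file is whichever one you choose from your own listing; I am not assuming any particular backup file name here.

```shell
#!/bin/sh
# Succeed (exit status 0) when the config file is missing or has size 0,
# which is how the corruption described above usually shows up.
config_looks_corrupt() {
    [ ! -s "$1" ]
}

# Copy the chosen backup over the config, but only if the config looks
# corrupted and the backup itself is not empty.
restore_config() {
    cfg="$1"
    backup="$2"
    if config_looks_corrupt "$cfg" && [ -s "$backup" ]; then
        cp "$backup" "$cfg"
    fi
}
```

On the Friend kernel the first argument would be /mnt/tcrp/user_config.json, and the second the backup you picked.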

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

[HBA recommended settings from flyride]

Do not use EUDEV; use only DDSML (if you use TCRP-mshell).

"SataPortMap": "12",

"DiskIdxMap": "1000",

"SasIdxMap": "0",

"MaxDisks": "24"

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

We mentioned the addition of an improved addon on the previous page. https://xpenology.com/forum/topic/61839-tinycore-redpill-loader-build-support-tool-m-shell/?do=findComment&comment=455313 To use this new addon you will need to rebuild your loader. -

This Micron 1100 model is on Synology's official compatibility list, yet it is causing problems. It should work on its own without any script manipulation. Honestly, I don't have any ideas until @007revad, who has a lot of experience in this area, gives me detailed advice. As I mentioned last time, since your Micron 1100 holds real data, I am reluctant to ask you to run any tests after I modify the script. I think it will be difficult to figure out the problem until 007revad or I purchase a Micron 1100 and test the hardware ourselves.

-

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

I have found this in two cases so far.

1. When MAC spoofing is processed after DSM has completed booting.
2. When the system partition is damaged due to the sudden loss of one of the RAID disks in SA6400 (this happens often, but was a bit unexpected).

TinyCore RedPill Loader Build Support Tool ( M-Shell )

Peter Suh replied to Peter Suh's topic in Software Modding

The delay before restarting SAN MANAGER (ScsiTarget) with the command below was reduced from 3 minutes to 1 minute.

synopkg start ScsiTarget

The error icon shows briefly during that minute, at roughly the same time as the other packages are loading.
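As a rough sketch of the delayed start described above (not the actual contents of sanrepair.sh), the logic amounts to a sleep followed by the synopkg call. The function name and the SYNOPKG override variable are my own additions for illustration, so the snippet can be tried on a machine without DSM.

```shell
#!/bin/sh
# Wait for the given number of seconds (default 60, the 1 minute from the
# post), then start the SAN MANAGER package.
# SYNOPKG is a hypothetical override used only for dry runs.
delayed_san_start() {
    delay="${1:-60}"
    sleep "$delay"
    "${SYNOPKG:-synopkg}" start ScsiTarget
}
```

Calling `SYNOPKG=echo delayed_san_start 0` just prints the arguments that would be passed to synopkg.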