snoopy78

Member · Posts: 188 · Days Won: 2

Everything posted by snoopy78

-

Did you actually read this thread beforehand? It's already supported in the HBA version...

-

I can't agree with your warning so far... I am using 4.3-3827 HBA with an LSI 9201-16i, and all my drives (1x SSD, 5x HD204UI, 3x 4 TB WD Red) are connected to this HBA. The 3 WD Reds are volume 2 (SHR-1), the 5 HD204UI are volume 3 (SHR-1), and the SSD is volume 1 (single drive). Last night, however, my 5 WD Green 1.5 TB drives, which had worked flawlessly for about 5 years in my TS-509 Pro, dropped out of my system... again... so I guess they have a problem or are faulty. BUT: no issue with the 4 TB WD Reds in any way (upgrades/downgrades, clean install). I suggest you check your hardware.

-

stanza's suggestion is for situations where you want to transfer data between two or more devices at a higher speed without spending too much money on new equipment. So stanza's setup does NOT work for normal clients, as most operating systems can't handle "packet-based round robin"... so there's no need to change your whole infrastructure/network for it.

I myself have upgraded my main client and storage to 10G Ethernet. For "long" distances I use fiber cables (OM4 MMF), for "short" distances I use DAC (direct attach cables), but such a solution requires a good amount of money (at least 500 €, unless you live in the US).

I also recommend that everyone who is currently building, buying or renovating a house/apartment think about and install some cable conduits... it will save money and trouble later.

Of course you can use wireless... take the new gigabit-capable 802.11ac standard. First you need to find some fast NICs, then the fast APs... and then... omg... wireless is a shared medium. All clients share the same bandwidth, which means the bandwidth of each single client decreases, and I haven't even started talking about the impact of walls, furniture or other wireless networks. So wireless is a nice thing, but not a network... that's why I always say: "networks use cables, air is for breathing".
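To put a number on the "shared medium" point, here is a toy calculation. It assumes ideal, equal airtime for every active client and ignores contention overhead, walls and neighbouring networks; the 600 Mbit/s effective AP throughput is a hypothetical figure, not a measurement.

```python
# Toy model of a shared wireless medium (assumption: ideal equal airtime,
# no contention overhead, walls or interference). Illustrative only.

def per_client_mbps(effective_mbps: float, active_clients: int) -> float:
    """Throughput each client gets when all active clients share one channel."""
    if active_clients < 1:
        raise ValueError("need at least one active client")
    return effective_mbps / active_clients

# Hypothetical effective AP throughput of 600 Mbit/s (not a measured value).
for clients in (1, 2, 4, 8):
    print(f"{clients} client(s): {per_client_mbps(600, clients):.0f} Mbit/s each")
```

On a switched cable network, by contrast, each client has its own full-duplex link, so adding clients does not divide a single port's bandwidth.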

-

I don't think VLANs will work... The switch stores the port & MAC association in its FDB (forwarding database); VLAN-capable switches also store the VLAN information there. From a normal networking perspective I would not recommend this setup, but if you want to interconnect several storage systems, it will help at low cost (you only need the extra NICs and a spare switch).
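The FDB behaviour described above can be sketched as a toy lookup table. This is a simplified model, not any particular switch's firmware: a VLAN-aware switch keys entries by (VLAN, MAC), while a VLAN-unaware one only sees the MAC, so learning the same MAC on a second port simply overwrites the first entry. The MAC address, VLAN IDs and port numbers are hypothetical.

```python
from typing import Dict, Optional, Tuple


class Fdb:
    """Toy forwarding database: (vlan, mac) -> port."""

    def __init__(self, vlan_aware: bool = True) -> None:
        self.vlan_aware = vlan_aware
        self.table: Dict[Tuple[int, str], int] = {}

    def _key(self, vlan: int, mac: str) -> Tuple[int, str]:
        # A VLAN-unaware switch behaves as if every frame were in one VLAN.
        return (vlan if self.vlan_aware else 1, mac.lower())

    def learn(self, vlan: int, src_mac: str, in_port: int) -> None:
        # Remember the port on which this source MAC was last seen.
        self.table[self._key(vlan, src_mac)] = in_port

    def lookup(self, vlan: int, dst_mac: str) -> Optional[int]:
        # None = unknown destination: a real switch would flood the frame.
        return self.table.get(self._key(vlan, dst_mac))


mac = "00:11:32:aa:bb:01"  # hypothetical MAC seen on two ports
aware, plain = Fdb(vlan_aware=True), Fdb(vlan_aware=False)
for fdb in (aware, plain):
    fdb.learn(10, mac, in_port=1)
    fdb.learn(20, mac, in_port=5)

print(aware.lookup(10, mac), aware.lookup(20, mac))  # 1 5: one entry per VLAN
print(plain.lookup(10, mac), plain.lookup(20, mac))  # 5 5: port 1 was overwritten
```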

-

IBM M1015 with LSI IT flash... around 80 EUR on eBay. Google will be your best friend for the flash procedure.

-

Why not just test it with a USB stick? Out of laziness, I flashed 2 USB sticks with the current image and prepared the corresponding vendor file for each system. When updating, I simply swap the sticks for a spare stick onto which I have written only the "new" vendor file on the PC (and, of course, the corresponding image beforehand).

-

Read this, then you'll know: viewtopic.php?f=2&t=17

-

Installed the boot image first?

-

Xpenology and SAS Controller - HBA

snoopy78 replied to stanza's topic in DSM 5.2 and earlier (Legacy)

A new thread would be fine, as others may want to know too...

OK, bonding: AFAIK Syno/XPEnology uses 802.3ad for bonding, so you need an 802.3ad-capable switch for that. The IEEE standard 802.3ad (LACP) is session-based. This means there is an algorithm that balances the sessions across the bond's physical NICs, so for each client the max. speed is still 1 Gbit. Bonding only provides fault tolerance, plus more capacity if several clients are accessing the "server"; for each individual connection there is no speed gain. In my network plan you can see a DS-1812+ that is connected by an 802.3ad bond... and it's still always only 1 Gbit per session.

For virtualization you need 802.3ad bonding and, on top of that, 802.1Q, but then your vSwitch must be capable of it too: LACP and VLANs together, with the correct balancing algorithm. VLANs you only need if your virtual machines must be separated from each other.
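The session-based balancing described above can be illustrated with a toy hash. This is an assumption-laden sketch: it hashes IPs and ports in the spirit of a "layer3+4" policy, but real switches and the Linux bonding driver use their own hash functions and policies. The addresses and ports are hypothetical.

```python
# Toy model of per-flow hashing in an 802.3ad/LACP bond (assumption: a
# "layer3+4"-style policy hashing IPs and ports; real implementations differ).
import zlib


def pick_link(src_ip: str, dst_ip: str, src_port: int, dst_port: int, n_links: int) -> int:
    """Return the index of the bond member that carries this flow."""
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % n_links


LINKS = 2                 # e.g. a 2 x 1 Gbit bond
NAS = "192.168.1.10"      # hypothetical addresses

# One SMB session: every packet hashes to the same member link,
# so this session can never exceed the speed of a single 1 Gbit link.
one_flow = [pick_link("192.168.1.50", NAS, 50000, 445, LINKS) for _ in range(5)]
print(one_flow)

# Several clients: their sessions are usually spread over the member links,
# which is where the extra aggregate capacity comes from.
spread = [pick_link(f"192.168.1.{i}", NAS, 50000, 445, LINKS) for i in range(50, 58)]
print(spread)
```

Because the link is chosen per flow rather than per packet, frames of one session never arrive out of order, which is also why a single client stays at 1 Gbit.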

Xpenology and SAS Controller - HBA

snoopy78 replied to stanza's topic in DSM 5.2 and earlier (Legacy)

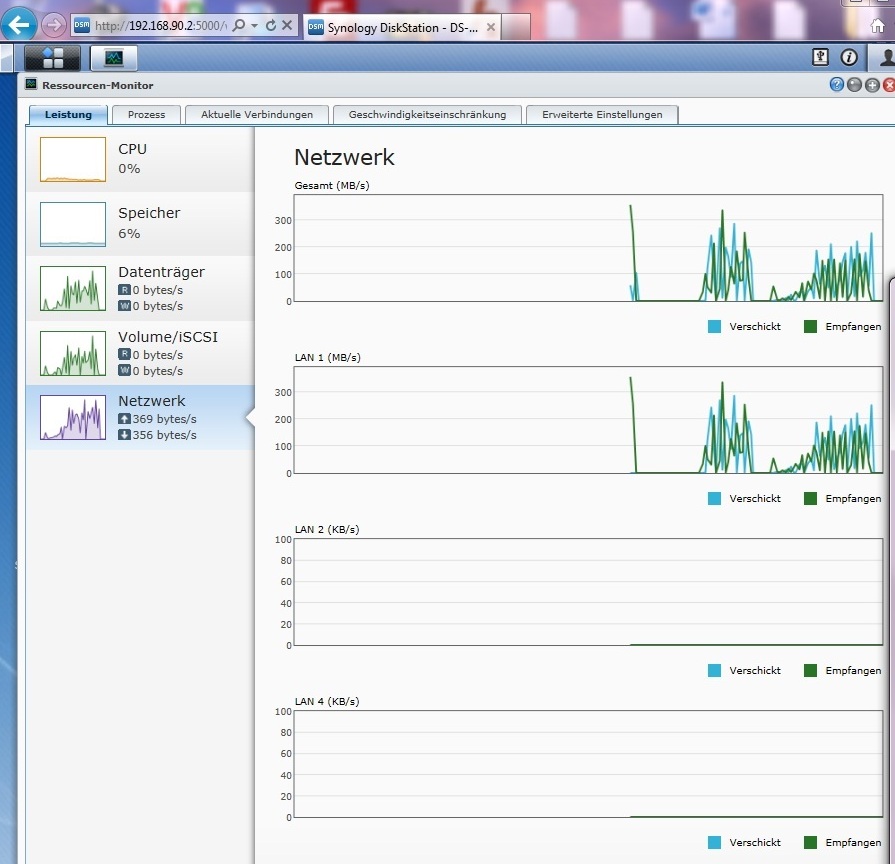

"Hi, snoopy78! Maybe you can tell the full story: what is needed to build a 10G network? I'm an absolute newbie in 10G... so everything (controllers, routers, cables) is not clear at all. I do understand that it looks like a somewhat off-topic question, but with XPEnology, and especially SAS-based configurations, we see real throughput of up to 10G... so I believe it may be interesting not only for me. Maybe there should be a special topic on 10G network hardware for XPEnology. If you can share your knowledge (or if anyone else can help), I will be very thankful."

OK, let's put it like this: I work in the enterprise IT industry as a network tech guy, so naturally I'm interested in what's possible and how to achieve it. I also try to learn things myself with such a setup and test some of our newest products this way.

So, first you need time, money, and a wife/family who will accept your job/hobby. Second, you need an idea of what you want to do, and you must be willing to spend time and money on it. 10G is not cheap; it's affordable, but still, it costs money.

There are many ways to get 10G (40G) up and running (like InfiniBand, FCoE or 10 GbE), and I ONLY focus on 10G Ethernet, as this also covers my job.

So what you need first is a client and storage that can actually handle such speeds (even my setup can't fully make use of it). This means you need:
- fast HDDs / SSDs
- a fast HBA for SATA or, BETTER, SAS drives (for me SAS drives are way too expensive, and I'm satisfied with the speed I can reach)
- a fast CPU

Then comes the networking part. Currently most 10G NICs use SFP+ or DAC (direct attach SFP+ cable); new Intel ones are now switching to Cat7 RJ45, but AFAIK the latency is higher than with SFP+/DAC. So now you have to think about which cables and distances you need. Usually MMF is enough at home; even DAC (1 m, 2 m, 5 m, 10 m) should be fine.

So let's say you buy the brand-new DGS-1510-20 (which offers two 10G SFP+ ports); then you've already spent about 200-250 € on it. Then you need 10G NICs, and here eBay is your best friend. I was lucky to get some QLogic NICs for about 100 € each (so again ~200 € in total). Then you need DACs or SFP+ modules: for a 10G MMF (850 nm) SFP+ module you should pay around 40 €, and for the fiber cable ~10-15 € (so that makes another 180-200 €). If you use DAC, you can be lucky on eBay and get some for around 30-50 € each (so about 100 € in total). So now you can roughly calculate the prices...

BUT what's most important: do you have the data for the speed? 10G usually makes sense if you need to transfer laaaarge amounts of files fast... but do you need that at home?

Hope this helps for now... I need to go catch my little girl now.
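The price estimate above can be written out as a small budget calculator. It uses only the ballpark prices quoted in the post and assumes two hosts (client and storage), each with one 10G link to a switch with two SFP+ ports: the fiber variant then needs 4 SFP+ modules and 2 patch cables, the DAC variant 2 DAC cables. The item names are illustrative labels, not exact product SKUs.

```python
# Rough 10G budget using the ballpark EUR prices quoted in the post.
# Assumption: two hosts, each with one 10G link to a 2x SFP+ switch.
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Item:
    name: str
    qty: int
    low: float   # unit price, low estimate (EUR)
    high: float  # unit price, high estimate (EUR)


def total(items: List[Item]) -> Tuple[float, float]:
    """Sum the low and high estimates over all items."""
    return (sum(i.qty * i.low for i in items), sum(i.qty * i.high for i in items))


switch = Item("DGS-1510-20 (2x SFP+)", 1, 200, 250)
nics = Item("used 10G NIC", 2, 100, 100)
fiber = [Item("10G SR SFP+ (850 nm)", 4, 40, 40), Item("MMF patch cable", 2, 10, 15)]
dac = [Item("SFP+ DAC cable", 2, 30, 50)]

fiber_total = total([switch, nics] + fiber)
dac_total = total([switch, nics] + dac)
print(f"fiber: {fiber_total[0]:.0f}-{fiber_total[1]:.0f} EUR")  # fiber: 580-640 EUR
print(f"DAC:   {dac_total[0]:.0f}-{dac_total[1]:.0f} EUR")      # DAC:   460-550 EUR
```

The split shows why DAC is the cheaper choice for short in-rack or same-room runs, while fiber only pays off once the distance exceeds what DAC cables cover.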

Xpenology and SAS Controller - HBA

snoopy78 replied to stanza's topic in DSM 5.2 and earlier (Legacy)

@stanza: thanks for your tip, next time I play around I'll test it. What you're suggesting is more like NAT/PAT... am I right? (VPNs, routing and all tunnel handling are done by my DSR.) Here is a part of my network: https://www.dropbox.com/s/tz2qnmo6a648y ... .48.25.jpg The red connections are 10G. As you can see, I do have a "real" VLAN-routing-capable router, and I can redirect packets correctly (actually, my network is a little "dirty", as the iLO also provides a LAN link in XPEnology; routing points to 10G @ SC836). Please correct me if I'm wrong. P.S. This is the ATTO bench to a single HDD in my HP system, routed via the SC836, bypassing the gateway with a direct route from the client.

Xpenology and SAS Controller - HBA

snoopy78 replied to stanza's topic in DSM 5.2 and earlier (Legacy)

Hello, I use the following settings:

1.) Improve RAID rebuild time (viewtopic.php?f=2&t=2079&p=10371&hilit=sysctl.conf#p10371), sysctl.conf:
    sysctl -w dev.raid.speed_limit_min=300000
    sysctl -w dev.raid.speed_limit_max=600000
(the second setting is the maximum, not a second minimum; inside sysctl.conf itself the same settings are written as key=value lines, e.g. dev.raid.speed_limit_min=300000)

2.) Increase the number of usable disks (synoinfo.conf):
    esataportcfg="0x000"
    usbportcfg="0x05"
    internalportcfg="0xffffff"

3.) Additionally, since last night I use my 2-port 10G NIC for routing between both ports (my 10G switch only has 2x 10G SFP+, so I route my HP XPEnology test/backup system via 10G over my SC836 chassis), rc.local:
    echo 1 > /proc/sys/net/ipv4/conf/all/forwarding

I have these files prepared on my first volume (single SSD drive), so I copy them to the right places right after install.

BR, snoopy78
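To see what the speed limits in step 1 mean in practice, here is a back-of-the-envelope rebuild time. The md driver interprets dev.raid.speed_limit_min/max in KiB/s per device, and the real rate is usually capped by the disks themselves, so the result is a best case. The 4 TB member size and the 150000 KiB/s "realistic" rate are hypothetical example values.

```python
# Best-case md rebuild time for one member disk at a given rate.
# speed_limit_* values are KiB/s per device; disks are usually the real cap.

def rebuild_hours(member_bytes: int, speed_kib_s: int) -> float:
    return member_bytes / (speed_kib_s * 1024) / 3600


four_tb = 4 * 10**12  # one 4 TB member disk (hypothetical example)
print(f"{rebuild_hours(four_tb, 600_000):.1f} h at speed_limit_max")            # 1.8 h
print(f"{rebuild_hours(four_tb, 150_000):.1f} h at an assumed 150000 KiB/s")    # 7.2 h
```

Raising the limits only helps if the disks can actually deliver more than the default maximum; otherwise the rebuild time is set by the slowest member.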

-

80-125 MB/s corresponds to a 1 GBit link and is the maximum. You can stuff as many 1 GBit NICs into the system as you like, but you won't get anything out of it except problems. If you want more throughput/bandwidth, your only option is 10 GBit, but then for client, switch and storage. With ATTO at 10 GBit I get max. ~800 MB/s read and 500 MB/s write (limited by the HDDs and systems). P.S. stanza created the following topic; that COULD! be something, but it is only suitable for very specific use cases!! viewtopic.php?f=2&t=2476
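The throughput figures above translate into transfer times like this. The 110 MB/s value for 1 GBit is an assumed point inside the quoted 80-125 MB/s range; the 10 GBit values are the ATTO results from the post. The 1 TB payload is a hypothetical example.

```python
# Transfer time for a given amount of data at a sustained rate.
# 1 GB = 1000 MB here, matching how the MB/s figures are quoted.

def transfer_minutes(size_gb: float, mb_per_s: float) -> float:
    return size_gb * 1000 / mb_per_s / 60


for label, rate in [("1 GBit (~110 MB/s, assumed)", 110),
                    ("10 GBit read (~800 MB/s)", 800),
                    ("10 GBit write (~500 MB/s)", 500)]:
    print(f"1 TB over {label}: {transfer_minutes(1000, rate):.0f} min")
```

For 1 TB that is roughly two and a half hours versus about 20-35 minutes, which is the kind of gap that justifies 10G only if you regularly move that much data.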

-

=> Received my 2 QLE3242 NICs yesterday, and both are working with the current Beta 7.

-

"As I discovered, sometimes you have to choose "create volume" several times before it actually creates a volume... then the volume SHOULD create successfully and survive a reboot."

Hi stanza, the issue occurred ONLY after upgrading/installing the DSM .pat file and after the first reboot. Yesterday I plugged these 5 HDDs back into the system (while it was running) and created a new volume (so I destroyed the old one), and now the volume works fine and survives a reboot. I'm currently restoring my data on this volume.

I understand that if the volume isn't fine, the system can't access it, but why did I get a shutdown and reboot? I also had to reinstall the .pat file afterwards, as the system crashed... So for the future, my workaround must be to remove this volume physically and manually back up/recover the data (it's only max. 6 TB).

I don't know if the issue is caused by the "old" HDDs (which are about 4.5 years old, from my old QNAP TS-509 Pro bought in mid-2009), but the last SMART check was fine. If so, I will buy new WD Red 4 TB or Seagate NAS 4 TB drives, but I would need to be sure that this won't happen again. Is there maybe a way to manually initialize the HDDs via CLI?

P.S. This is my /proc/mdstat:

    DS-SC836> cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4]
    md3 : active raid5 sdr5[0] sdq5[2] sds5[3]
          7804567296 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/3] [UUU]
    md5 : active raid5 sdh5[0] sdl5[4] sdk5[3] sdj5[2] sdi5[1]
          5841614592 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/5] [UUUUU]
    md4 : active raid5 sdn5[0] sdg5[4] sdp5[5] sdo5[2] sdm5[1]
          7795118592 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/5] [UUUUU]
    md2 : active raid1 sde5[0]
          120301632 blocks super 1.2 [1/1]
    md1 : active raid1 sde2[0] sdg2[1] sdh2[9] sdi2[12](S) sdj2[13](S) sdk2[11] sdl2[10] sdm2[2] sdn2[3] sdo2[4] sdp2[5] sdq2[6] sdr2[7] sds2[8]
          2097088 blocks [12/12] [UUUUUUUUUUUU]
    md0 : active raid1 sde1[0] sdg1[1] sdh1[9] sdi1[12](S) sdj1[13](S) sdk1[11] sdl1[10] sdm1[2] sdn1[3] sdo1[4] sdp1[5] sdq1[6] sdr1[7] sds1[8]
          2490176 blocks [12/12] [UUUUUUUUUUUU]
    unused devices: <none>
    DS-SC836>
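A quick way to check arrays like the ones above for missing members is to parse the "[n/m] [UUU]" status fields of /proc/mdstat (U = member up, _ = member missing). This is a minimal sketch, not a Synology tool: the md3 lines are copied from the output above, while md9 is a hypothetical degraded array added only to exercise the check. On a live box you would read /proc/mdstat instead of the sample string.

```python
import re
from typing import Dict, List, Tuple

ARRAY_RE = re.compile(r"^(md\d+)\s*:", re.M)
STATUS_RE = re.compile(r"\[(\d+)/(\d+)\]\s*\[([U_]+)\]")

SAMPLE = """\
md3 : active raid5 sdr5[0] sdq5[2] sds5[3]
      7804567296 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/3] [UUU]
md9 : active raid5 sda5[0] sdb5[1] sdc5[2]
      7804567296 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/2] [UU_]
"""


def array_health(mdstat_text: str) -> Dict[str, Tuple[int, int, str]]:
    """Map md device -> (expected members, active members, U/_ flags)."""
    result = {}
    # Split the text into per-array chunks, each starting at an "mdN :" header.
    starts = [m.start() for m in ARRAY_RE.finditer(mdstat_text)] + [len(mdstat_text)]
    for begin, end in zip(starts, starts[1:]):
        chunk = mdstat_text[begin:end]
        name = ARRAY_RE.match(chunk).group(1)
        status = STATUS_RE.search(chunk)
        if status:  # arrays without a [U_] field (e.g. md2 above) are skipped
            result[name] = (int(status.group(1)), int(status.group(2)), status.group(3))
    return result


def degraded(health: Dict[str, Tuple[int, int, str]]) -> List[str]:
    """Arrays with fewer active members than expected or a '_' slot."""
    return [name for name, (exp, act, flags) in health.items() if act < exp or "_" in flags]


print(degraded(array_health(SAMPLE)))  # ['md9']
```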

-

Hi trantor, is it possible to add the tweak for the max. number of disks into the .pat file? I currently use an SC836 chassis with an LSI 9201-16i and an X8SIL-F mainboard, so in total I could have 16 + 6 SATA drives. Currently I have 13 HDDs (3x 4 TB WD Red SHR-1, 5x 2 TB HD204UI SHR-1, 5x 1.5 TB WD15EADS SHR-1) + 1 SSD (Seagate 128 GB) connected in the chassis.

With the last update I experienced the following issues (I had a similar issue in 4.3 beta 5):
- After the "migrate" upgrade install, only 6 of my 14 drives are discovered.
=> After copying my "modified" synoinfo.conf (viewtopic.php?f=2&t=2028), all HDDs are discovered but won't get initialized (I also modified "sysctl.conf" to speed up the RAID rebuild).
=> After a reboot the HDDs are in initialized state, EXCEPT that the system crashes immediately after bootup.
===> See the video of bootup & crash: https://www.dropbox.com/s/j1ubzjovyokui55/3.avi

Troubleshooting was to remove my volume 4 (5x 1.5 TB RAID 5) and insert its disks one by one (rebooting after each insert) until I reached disk #4; then the issue occurred again.
=> So I removed the HDDs of that volume and set up the system again => works fine.
==> Then I inserted all 5 disks into my backup/recovery/test system (HP ML310 + IBM M1015 IT-flashed HBA) and installed your beta 7 there again.
===> After reboot, volume 4 was up with 4 of 5 disks, so I could access and back up my last unsaved data (I had done a full backup of volume 4 last weekend onto my real DS-1812+ (8x 3 TB RAID 6)).
===> Afterwards I plugged all 5 disks back into my SC836 and created a new volume, which now works fine.

So all in all it took me one night and day to update and recover the data.

Also, I just bought some additional QLogic 10G NICs (QLE3242-SR-CK) to play around with. Can I use the current driver for the QLE82xx series, which is included from beta 6 upwards? Also, I was reading about the kernel from the RS10613xs+; do you know if this is still moving forward? Thx
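For the disk-count tweak, the synoinfo.conf port masks can be derived from the number of slots. This sketch rests on the common community convention, which is an assumption here: bit i of internalportcfg stands for disk slot i, and maxdisks is usually raised to match. The helper names are hypothetical; the 24-slot value matches the internalportcfg setting posted earlier in this thread, and 22 slots corresponds to 16 HBA ports plus 6 onboard SATA ports.

```python
# Port bitmask helpers (assumption: bit i of the mask = disk slot i).

def mask(count: int, first_slot: int = 0) -> int:
    """Bitmask with `count` consecutive bits set, starting at `first_slot`."""
    return ((1 << count) - 1) << first_slot


def slots(m: int) -> int:
    """Number of slots enabled in a mask."""
    return bin(m).count("1")


print(hex(mask(24)))    # 0xffffff (24 internal slots)
print(hex(mask(22)))    # 0x3fffff (16 HBA + 6 onboard SATA)
print(slots(0xffffff))  # 24
```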

-

"Stupid question: do the HP NLxx MicroServers need the SAS or the normal version? I think they use at least a SAS cable..."

The HBA version if you use an additional host bus adapter (SAS controller); otherwise the standard version. Which cable you are using doesn't really matter.

-

Hi trantor, have you already had time to check the missing "/dev/rtc0"?

-

I'm mainly using D-Link switches: fully managed (DGS-3200-10), smart pro (can't tell the model number, but it has 2x 10G & 18x 1G ports) and smart (DGS-1210-10P) ones. Depending on how many ports you need, I'd recommend a smart switch, DGS-1210-xx (xx = port count / PoE).

-

Is your "TL-SG1005D" LACP-capable? Can you configure it? If you can NOT access the switch because it's an unmanaged one, then you cannot use LACP (802.3ad).

-

[SOLVED] Tips for installing XPENology in Hyper-V

snoopy78 replied to grayer's topic in DSM 5.2 and earlier (Legacy)

I haven't had any speed issues. The connection was a 10G NIC (HP NC523SFP), which I added to the virtual switch and then connected the VM to. But now I'm running XPEnology natively, as there were too many issues when virtualized (auto shutdown/wake-up, HDD temperature, ...) in both Hyper-V and ESXi, which I don't have now.

-

Hi trantor, this may be an unexpected approach, and I don't know if it counts as a driver request. In viewtopic.php?f=2&t=2316&p=11780#p11780 I discuss an issue I'm currently experiencing; maybe you have experienced something like that too. Do you know exactly how the wake-up scheduler works? It seems to write some values into the NVRAM... Otherwise, could you help integrate the mainboard's RTC into DSM, so that I could use a script to run the wake-up? Unfortunately there is no /dev/rtc0... BR, snoopy78
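If the mainboard RTC were exposed to DSM as rtc0 (which is exactly what is missing here), a wake-up script could use the standard Linux sysfs wake alarm: write 0 to clear any old alarm, then write the wake-up time as epoch seconds. This is a minimal sketch under that assumption; it is not how DSM's own scheduler works (the post suggests that one writes to NVRAM), and set_wake_alarm is a hypothetical helper name.

```python
# Sketch of an RTC wake-up helper, assuming /sys/class/rtc/rtc0 exists.
import time
from pathlib import Path

WAKEALARM = Path("/sys/class/rtc/rtc0/wakealarm")


def set_wake_alarm(seconds_from_now: int, path: Path = WAKEALARM) -> int:
    """Arm the RTC to wake the system `seconds_from_now` seconds from now."""
    wake_at = int(time.time()) + seconds_from_now
    path.write_text("0\n")           # clear a previously set alarm
    path.write_text(f"{wake_at}\n")  # arm the new alarm (epoch seconds)
    return wake_at
```

Called as root before shutting down, for example set_wake_alarm(8 * 3600), this would power the box back on eight hours later, provided the board honours RTC alarms from soft-off.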