Leaderboard

Popular Content

Showing content with the highest reputation since 04/19/2024 in all areas

-

Because of the many further questions and problems raised in this thread, I would like to continue elsewhere, in a new topic that covers all models of the Synology "+" series and all questions about the internal flash memory, including hardware replacement and image repair. Synology model upgrades are also possible there. As already mentioned, the thread owner also has the option of closing this topic. From my side, many thanks to @wool for the pin-out information and the ideas that came from it.

2 points

-

It is much better to set up a DS3622xs+. Yes, you have to select the new model in the loader setup, build the loader, boot into the freshly built loader, open the web interface, and migrate to the new model's DSM, preferably choosing to keep your settings.

1 point

-

https://web.archive.org/web/20210412133958/https://global.download.synology.com/download/DSM/release/6.1.7/15284/DSM_VirtualDSM_15284.pat

1 point

-

There's an Internet Archive copy for this sort of thing: https://web.archive.org/web/20210305152847/https://archive.synology.com/download/Os/DSM

1 point

-

Hi DSfuchs. I managed to download the DOM. Can you give me an email address so I can send you the link to the DOM image file? Thanks!

1 point

-

Update complete, I am now on DSM 7.2.1-69057 Update 4.

1 point

-

It depends on the ESXi version. That said, everything is available on Rutracker (via VPN); usually entering the SN is enough.

1 point

-

Unfortunately, it never occurred to me that such a mistake could be made. If I can, I'll make a backup of the DOM. Tomorrow I'll try to see if it works and what's on it. If I can get it to come back to life, I'll make an img file and send it over. Thanks a lot for your help!

1 point

-

Is there no one moderating these forums anymore? I posted a new topic for Version: 7.2.1-69057 Update 5 three days ago and it is still awaiting approval!

1 point

-

I assume the disk you replaced is fine. Are the disks that lose power always the same ones, or different ones? There are two possibilities here: either the power supply or a bad SATA connection. If the disk is fine and the same disks keep dropping out, bad SATA connections are the more likely cause. If different disks drop out, look at the PSU. It could also be the controller, but check that only after ruling out the first two causes.

1 point

-

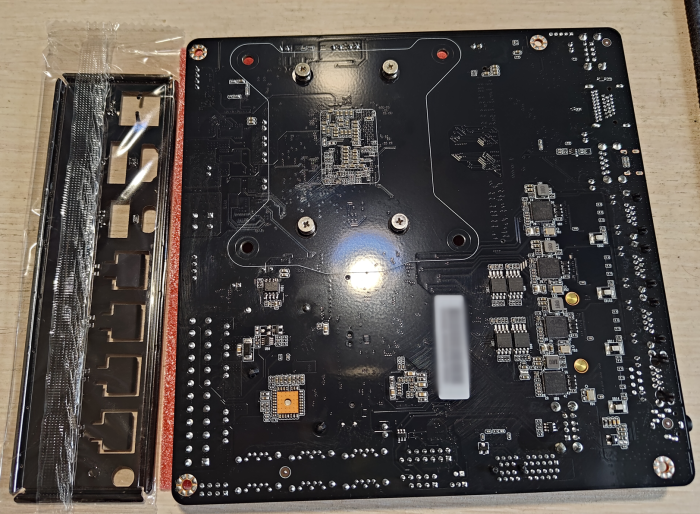

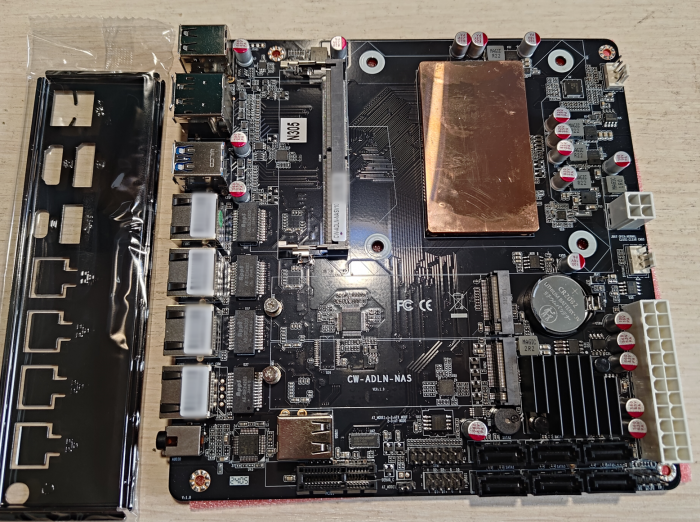

An interesting motherboard. But for an Xpenology NAS, its only real value is the 8 SATA ports. The rest of its features are redundant.

1 point

-

My Synology DS412+ is back online!!! Many thanks to DSfuchs, who is the best! 💪🍻

1 point

-

Only to some services on the NAS itself. For the rest of the local network you need a VPN, or you can install a virtual machine on DSM and reach the local network from it.

1 point

-

DS1621+: DSM 7.2.1-69057 Update 5
- Outcome of the update: SUCCESSFUL
- DSM version prior to update: DSM 7.2.1-69057 Update 4
- Loader version and model prior to update: Arc 23.11.15 (loader was not updated)
- Loader version and model after update: Arc 23.11.15
- Hardware: HP ProLiant MicroServer G7
- Comments: downloaded the update from https://archive.synology.com/download/Os/DSM/7.2.1-69057-5 and decided to update manually ))) The reboot took 8 minutes. Everything works as before.

1 point

-

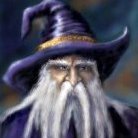

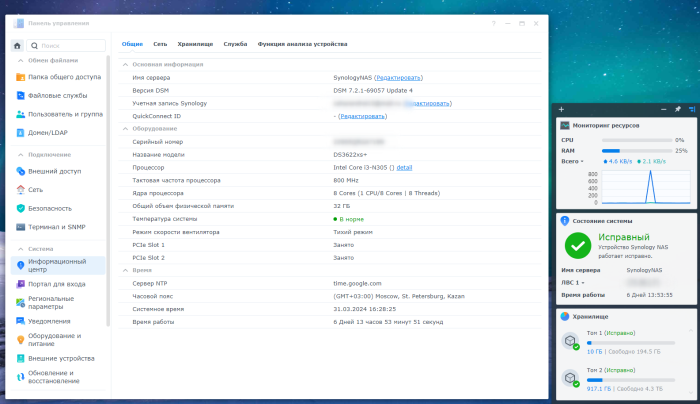

Hello everyone, my long-awaited motherboard arrived in early March. The first time, the seller canceled the shipment because they found some problems with the batch of motherboards. As a result, they sent it to me at the end of February. The first boot takes very long; once everything is initialized, booting becomes fast. HDMI only works once the OS is running, and it is better not to rely on it because there are resolution problems. Through DP everything works well. I was able to install 32GB of memory from the Chinese brand PUSKILL; it did not work at 5200MHz, but at 4800MHz everything works. https://www.aliexpress.com/item/1005005989535277.html?spm=a2g2w.orderdetail.0.0.d9704aa6ABmHvJ&sku_id=12000035200940840 I installed 2 M.2 SSDs from KingSpec, let's see how long they last. https://www.aliexpress.com/item/1005003844066987.html?spm=a2g2w.orderdetail.0.0.20f84aa6C22z9Q&sku_id=12000037919355571 Xpenology installed without any problems at all. I used the bootloader from Peter Suh: https://github.com/PeterSuh-Q3/tinycore-redpill Screenshots of the system and board are attached. I've been using it for 2 weeks now and it's great.

1 point

-

I remembered. One of the Windows updates disabled SMB 1, and that broke the network. You had to go into Windows Features and turn it back on.

1 point

-

A real "game changer", just the way I like it. Personally, I migrated from my DSM 7.1 (DS3615xs) to DSM 7.2 (DS3622xs+) with the RedPill loader virtualized on VMware Workstation Pro, and it went without a problem; it took me less than an hour. Bravo for this version of the loader, and thanks to the whole community.

1 point

-

For a quick check it is better to use Security Advisor, which is installed by default in DSM 7. It is advisable to change application ports from their defaults, especially the DSM ports, and to set up port forwarding on the router manually. In my case, the UPnP ports often do not come up automatically right after the router restarts.

1 point

-

NOTE OF CAUTION: It is strongly advised never to apply an update to a 'production' box as soon as the update is made available. ALWAYS test the update on a test machine first and make sure all features are working as expected. I also recommend waiting several days after the update becomes available before applying it to a 'production' box. The reason is that Synology sometimes makes updates available and then suddenly withdraws them for no apparent reason. This could mean that the update has some issues and had to be withdrawn from the public.

1 point