Everything posted by IG-88

-

So, to sum up: tcrp 0.9.4.0 should fix the problem https://github.com/pocopico/tinycore-redpill/releases/tag/v0.9.4.0

-

With a newer version of the loader? (Which would then also mean a newer version of the redpill driver.)

-

For that purpose I even wrote something in the tutorials once (mainly because external links disappear at some point): https://xpenology.com/forum/topic/32867-sata-and-sas-config-commands-in-grubcfg-and-what-they-do/ The collapsible Kconfig sections there also cover SYNO_SATA_REMAP.

@cxberdott if it is only about cosmetics like this, you should consider whether it is worth the time. Deviating from the usual config with such exotic things can also cause a lot of trouble later (e.g. when things change internally in the loader, or during data recovery). Otherwise, better try such things in a VM in VirtualBox: there you can start over more easily if problems occur and you are not playing around with your own data, just a few thin provisioned disks ...

-

imho the sn and mac have to match for Surveillance licenses; at the latest when you open the loader's config again and rewrite it, the sn should be regenerated. Maybe the dva1622 would also be something for you: it is also Intel QSV with the i915 driver, but with more cams (afaik eight ootb) and more features in Surveillance Station (e.g. face recognition).

-

Something like unknown symbol "crc_itu_t" is often a missing dependency when loading the module; in that case it's most likely crc_itu_t.ko. The module itself seems to be present in the loader https://github.com/AuxXxilium/arc-modules/tree/main/apollolake-4.4.180 but it might be loaded too late; it would need to be loaded before atlantic.ko. I guess you might be able to test that by loading the two modules manually with insmod (insmod [full_path_and_module_name]) and then checking the log again. If the module loads that way, you would file a bug for the loader. You could try the same with tcrp (load and check the log with dmesg).

Edit: from the GitHub it looks like the module crc_itu_t.ko was added a few hours ago, so maybe pull a new version of the loader and try again, it might have been fixed.
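
The load-order idea from this post can be sketched in a few lines of Python. This is only an illustration under assumptions: the dependency map contains just the one relation named in the post (atlantic needs crc_itu_t), and `MODULE_DIR` is a hypothetical placeholder, since the real location of the .ko files depends on the loader. The script only prints the insmod commands, it does not run them.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# module -> modules that have to be loaded first.
# Only the relation named in the post: atlantic.ko needs crc_itu_t.ko.
DEPENDS_ON = {"atlantic": {"crc_itu_t"}}

# Hypothetical placeholder, the real path depends on the loader in use.
MODULE_DIR = "/path/to/modules"


def load_order(depends_on):
    """Return module names so that each dependency comes before its user."""
    return list(TopologicalSorter(depends_on).static_order())


def insmod_commands(depends_on, module_dir=MODULE_DIR):
    """Build the insmod command lines in a safe order (nothing is executed)."""
    return [f"insmod {module_dir}/{name}.ko" for name in load_order(depends_on)]


for command in insmod_commands(DEPENDS_ON):
    print(command)
```

After running the printed commands by hand, dmesg is still the place to check whether the "unknown symbol" message is gone.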

-

You can try this loader https://github.com/AuxXxilium/arc or its even more automated version https://github.com/AuxXxilium/arc-automated (less hassle, as it is menu driven by default like arpl, and it also claims to support more drives).

Driver for the NIC: atlantic.ko. There are different versions of the driver in the loaders, and it might be that newer PHY chips are only supported by newer driver versions:

tcrp: 2.4.15
arpl: 2.5.5.0
arc: 2.5.5.0

ASUS homepage for the NIC: https://www.asus.com/supportonly/xg-c100c/helpdesk_download/?model2Name=XG-C100C

ASUS XG-C100C Driver Version 5.0.3.3
Version 5.0.3.3, 75.59 MB, 2021/12/22
1. update driver to ver. 3.0.20.0
2. update linux to ver. 2.4.14.0

So from this it looks like the driver versions used in tcrp and arpl should be good enough, as ASUS only uses 2.4.14.

Also check the log for driver messages after the DSM installation (dmesg); it might give you a hint, like NIC chip detected but unknown PHY.

I might also say that this thread is for 6.2, not for 7.x, so in the "long" term and for further problems create a thread or add to the thread about the loader you use. It might also raise the chance of getting proper help for your problem (not many people will look here anymore).
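
The version comparison made in this post can be written down as a small Python sketch. The version strings are the ones quoted above; the function names and the padding to four components are my own choices for illustration, not something the loaders or ASUS provide.

```python
def parse_version(text, width=4):
    """Turn '2.4.15' into (2, 4, 15, 0) so versions compare numerically."""
    parts = [int(part) for part in text.split(".")]
    return tuple(parts + [0] * (width - len(parts)))


# Linux driver version named in the ASUS download notes quoted above.
ASUS_LINUX_DRIVER = parse_version("2.4.14.0")

# atlantic.ko versions per loader, as listed in the post.
LOADER_DRIVERS = {"tcrp": "2.4.15", "arpl": "2.5.5.0", "arc": "2.5.5.0"}


def new_enough(version, minimum=ASUS_LINUX_DRIVER):
    """True if the loader's driver is at least the version ASUS ships."""
    return parse_version(version) >= minimum


for loader, version in LOADER_DRIVERS.items():
    status = "ok" if new_enough(version) else "older than the ASUS package"
    print(loader, version, status)
```

A version that is "new enough" by this check only rules out one cause; the dmesg output is still what shows whether the PHY was actually recognized.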

-

The slot, even if it looks like PCIe, might not be one. The Syno systems are custom builds and are not bound to conventional hardware layouts, so it might be some custom layout transporting SATA and power signals instead of PCIe. That's the reason to look for chips on the board and the backplane: if the SATA chip/controller is on the main board and there is no such thing on the backplane, it might indicate that it's not really a PCIe slot. So check at least some basic stuff like power and ground signals and compare PCIe and the slot on the board.

-

Drivers requests for DSM 6.2

IG-88 replied to Polanskiman's topic in User Reported Compatibility Thread & Drivers Requests

Use a newer loader for 7.x; 6.2.3 is the last version supported by jun's loaders, and qla2xxx is also supported in the newer drivers https://github.com/pocopico/redpill-modules/tree/master/qla2xxx

-

The 918+/920+ needs at least an Intel 4th gen CPU, and the Xeon you are using is Haswell aka 4th gen, so it should work in principle. But since the CPU has no integrated GPU, the real plus of the 918/920 is lost (Intel Quick Sync Video support for hardware transcoding).

The 3622 or 3617 with 7.x would also support more CPU cores/threads, but as your CPU only has 4 cores and no HT that would not be a problem either (the 918/920 can handle max. 8 threads).

Under 6.2 the 918+ was also sometimes interesting because, compared with the 3615/3617, it did not "only" have kernel 3.10 but kernel 4.4, and that one supports more newer hardware ootb. But since 7.x they are all on kernel 4.4, so this advantage of the 918+ no longer applies. Besides, with 6.2 the 3615/17 depended on CSM/BIOS, while the 918+ could also do UEFI.

-

No, they are correct, they just don't apply to your (rather "special") QNAP NAS hardware; for most of the NAS here that are based on more "normal PC hardware" the answers would be good.

Apart from the fact that you wrote nothing about your hardware, your description of the problem does not make it 100% clear that the system itself turns back on when it gets power. Your wording can be interpreted that way, but one could also read it as the system only switching on when a key on the keyboard is pressed (sometimes that exists as a power-on option in the BIOS so you don't have to reach under the desk to start the PC).

-

It's not clear which loader you tried, but give arpl a try https://github.com/fbelavenuto/arpl/releases/tag/v1.0-beta10 Otherwise, for testing you can try an old loader for DSM 6.2 or 6.1 (3617 with CSM on and the "non-UEFI" USB boot device) or an MBR loader (although those were more for older HP systems) https://xpenology.com/forum/forum/98-member-tweaked-loaders/

-

BP might be "BackPlane", so there is not much to get out of this; peel off the sticker and look for the chip's markings.

-

There is usually one "loss" when doing that: you can't use Synology's VMM for handling virtual machines. Also, besides using tcrp friend, it might be enough to just restart arpl and configure it to 3622 as the base for the system to install.

-

The driver source can be downloaded from NVIDIA, and there is a readme in it that lists the supported hardware.

10de:21c4 - 10de is NVIDIA and 21c4 is the device, yours: "Graphics card is MSI 1660S"

From the list below:

...
GeForce GTX 1660 SUPER 21C4
...

So in theory the card should be supported by the driver, so it might be something different. Just to see more, try "lspci -v" and look for the device; if the driver is working you should see a "Kernel driver in use:" line for it.

A1. NVIDIA GEFORCE GPUS NVIDIA GPU product Device PCI ID* VDPAU features ---------------------------------- --------------- --------------- GeForce GT 640 0FC0 C GeForce GT 640 0FC1 C GeForce GT 630 0FC2 C GeForce GTX 650 0FC6 D GeForce GT 740 0FC8 D GeForce GT 730 0FC9 C GeForce GT 640M LE 0FD2 1028 0595 C GeForce GT 640M LE 0FD2 1028 05B2 C GeForce GT 745A 0FE3 103C 2B16 D GeForce GT 745A 0FE3 17AA 3675 D GeForce GTX TITAN Z 1001 D GeForce GTX 780 1004 D GeForce GTX TITAN 1005 D GeForce GTX 780 1007 D GeForce GTX 780 Ti 1008 D GeForce GTX 780 Ti 100A D GeForce GTX TITAN Black 100C D GeForce GTX 680 1180 D GeForce GTX 660 Ti 1183 D GeForce GTX 770 1184 D GeForce GTX 660 1185 D GeForce GTX 760 1185 10DE 106F D GeForce GTX 760 1187 D GeForce GTX 690 1188 D GeForce GTX 670 1189 D GeForce GTX 760 Ti OEM 1189 10DE 1074 D GeForce GTX 760 (192-bit) 118E D GeForce GTX 760 Ti OEM 1193 D GeForce GTX 660 1195 D GeForce GTX 760 1199 1458 D001 D GeForce GTX 660 11C0 D GeForce GTX 650 Ti BOOST 11C2 D GeForce GTX 650 Ti 11C3 D GeForce GTX 645 11C4 D GeForce GT 740 11C5 D GeForce GTX 650 Ti 11C6 D GeForce GTX 650 11C8 D GeForce GT 740 11CB D GeForce GTX 760A 11E3 17AA 3683 D GeForce GT 635 1280 D GeForce GT 710 1281 D GeForce GT 640 1282 C GeForce GT 630 1284 C GeForce GT 720 1286 D GeForce GT 730 1287 C GeForce GT 720 1288 D GeForce GT 710 1289 D GeForce GT 710 128B D GeForce 730A 1290 103C 2AFA D GeForce GT 740A 1292 17AA 3675 D GeForce GT 740A 1292 17AA 367C D GeForce GT 740A 1292 17AA 3684 D GeForce 710A 1295 103C 2B0D C 
GeForce 710A 1295 103C 2B0F C GeForce 810A 1295 103C 2B20 D GeForce 810A 1295 103C 2B21 D GeForce 805A 1295 17AA 367A D GeForce 710A 1295 17AA 367C D GeForce 920A 1299 17AA 30BB D GeForce 920A 1299 17AA 30DA D GeForce 920A 1299 17AA 30DC D GeForce 920A 1299 17AA 30DD D GeForce 920A 1299 17AA 30DF D GeForce 920A 1299 17AA 3117 D GeForce 920A 1299 17AA 361B D GeForce 920A 1299 17AA 362D D GeForce 920A 1299 17AA 362E D GeForce 920A 1299 17AA 3630 D GeForce 920A 1299 17AA 3637 D GeForce 920A 1299 17AA 369B D GeForce 920A 1299 17AA 36A7 D GeForce 920A 1299 17AA 36AF D GeForce 920A 1299 17AA 36F0 D GeForce GT 730 1299 1B0A 01C6 C GeForce 830M 1340 E GeForce 830A 1340 103C 2B2B E GeForce 840M 1341 E GeForce 840A 1341 17AA 3697 E GeForce 840A 1341 17AA 3699 E GeForce 840A 1341 17AA 369C E GeForce 840A 1341 17AA 36AF E GeForce 845M 1344 E GeForce 930M 1346 E GeForce 930A 1346 17AA 30BA E GeForce 930A 1346 17AA 362C E GeForce 930A 1346 17AA 362F E GeForce 930A 1346 17AA 3636 E GeForce 940M 1347 E GeForce 940A 1347 17AA 36B9 E GeForce 940A 1347 17AA 36BA E GeForce 945M 1348 E GeForce 945A 1348 103C 2B5C E GeForce 930M 1349 E GeForce 930A 1349 17AA 3124 E GeForce 930A 1349 17AA 364B E GeForce 930A 1349 17AA 36C3 E GeForce 930A 1349 17AA 36D1 E GeForce 930A 1349 17AA 36D8 E GeForce 940MX 134B E GeForce GPU 134B 1414 0008 E GeForce 940MX 134D E GeForce 930MX 134E E GeForce 920MX 134F E GeForce 940A 137D 17AA 3699 E GeForce GTX 750 Ti 1380 E GeForce GTX 750 1381 E GeForce GTX 745 1382 E GeForce 845M 1390 E GeForce GTX 850M 1391 E GeForce GTX 850A 1391 17AA 3697 E GeForce GTX 860M 1392 D GeForce GPU 1392 1028 066A E GeForce GTX 750 Ti 1392 1043 861E E GeForce GTX 750 Ti 1392 1043 86D9 E GeForce 840M 1393 E GeForce 845M 1398 E GeForce 945M 1399 E GeForce GTX 950M 139A E GeForce GTX 950A 139A 17AA 362C E GeForce GTX 950A 139A 17AA 362F E GeForce GTX 950A 139A 17AA 363F E GeForce GTX 950A 139A 17AA 3640 E GeForce GTX 950A 139A 17AA 3647 E GeForce GTX 950A 139A 17AA 36B9 E GeForce GTX 
960M 139B E GeForce GTX 750 Ti 139B 1025 107A E GeForce GTX 860M 139B 1028 06A3 D GeForce GTX 960A 139B 103C 2B4C E GeForce GTX 750Ti 139B 17AA 3649 E GeForce GTX 960A 139B 17AA 36BF E GeForce GTX 750 Ti 139B 19DA C248 E GeForce GTX 750Ti 139B 1AFA 8A75 E GeForce 940M 139C E GeForce GTX 750 Ti 139D E GeForce GTX 980 13C0 E GeForce GTX 970 13C2 E GeForce GTX 980M 13D7 E GeForce GTX 970M 13D8 E GeForce GTX 960 13D8 1462 1198 E GeForce GTX 960 13D8 1462 1199 E GeForce GTX 960 13D8 19DA B282 E GeForce GTX 960 13D8 19DA B284 E GeForce GTX 960 13D8 19DA B286 E GeForce GTX 965M 13D9 E GeForce GTX 980 13DA E GeForce GTX 960 1401 F GeForce GTX 950 1402 F GeForce GTX 960 1406 F GeForce GTX 750 1407 E GeForce GTX 965M 1427 E GeForce GTX 950 1427 1458 D003 F GeForce GTX 980M 1617 E GeForce GTX 970M 1618 E GeForce GTX 965M 1619 E GeForce GTX 980 161A E GeForce GTX 965M 1667 E GeForce MX130 174D E GeForce MX110 174E E GeForce 940MX 179C E GeForce GTX TITAN X 17C2 E GeForce GTX 980 Ti 17C8 E TITAN X (Pascal) 1B00 H TITAN Xp 1B02 H TITAN Xp COLLECTORS EDITION 1B02 10DE 123E H TITAN Xp COLLECTORS EDITION 1B02 10DE 123F H GeForce GTX 1080 Ti 1B06 H GeForce GTX 1080 1B80 H GeForce GTX 1070 1B81 H GeForce GTX 1070 Ti 1B82 H GeForce GTX 1060 6GB 1B83 H GeForce GTX 1060 3GB 1B84 H P104-100 1B87 H GeForce GTX 1080 1BA0 H GeForce GTX 1080 with Max-Q Design 1BA0 1028 0887 H GeForce GTX 1070 1BA1 H GeForce GTX 1070 with Max-Q Design 1BA1 1028 08A1 H GeForce GTX 1070 with Max-Q Design 1BA1 1028 08A2 H GeForce GTX 1070 with Max-Q Design 1BA1 1043 1CCE H GeForce GTX 1070 with Max-Q Design 1BA1 1458 1651 H GeForce GTX 1070 with Max-Q Design 1BA1 1458 1653 H GeForce GTX 1070 with Max-Q Design 1BA1 1462 11E8 H GeForce GTX 1070 with Max-Q Design 1BA1 1462 11E9 H GeForce GTX 1070 with Max-Q Design 1BA1 1462 1225 H GeForce GTX 1070 with Max-Q Design 1BA1 1462 1226 H GeForce GTX 1070 with Max-Q Design 1BA1 1462 1227 H GeForce GTX 1070 with Max-Q Design 1BA1 1558 9501 H GeForce GTX 1070 with Max-Q 
Design 1BA1 1558 95E1 H GeForce GTX 1070 with Max-Q Design 1BA1 1A58 2000 H GeForce GTX 1070 with Max-Q Design 1BA1 1D05 1032 H GeForce GTX 1070 1BA2 H P104-101 1BC7 H GeForce GTX 1080 1BE0 H GeForce GTX 1080 with Max-Q Design 1BE0 1025 1221 H GeForce GTX 1080 with Max-Q Design 1BE0 1025 123E H GeForce GTX 1080 with Max-Q Design 1BE0 1028 07C0 H GeForce GTX 1080 with Max-Q Design 1BE0 1028 0876 H GeForce GTX 1080 with Max-Q Design 1BE0 1028 088B H GeForce GTX 1080 with Max-Q Design 1BE0 1043 1031 H GeForce GTX 1080 with Max-Q Design 1BE0 1043 1BF0 H GeForce GTX 1080 with Max-Q Design 1BE0 1458 355B H GeForce GTX 1070 1BE1 H GeForce GTX 1070 with Max-Q Design 1BE1 103C 84DB H GeForce GTX 1070 with Max-Q Design 1BE1 1043 16F0 H GeForce GTX 1070 with Max-Q Design 1BE1 3842 2009 H GeForce GTX 1060 3GB 1C02 H GeForce GTX 1060 6GB 1C03 H GeForce GTX 1060 5GB 1C04 H GeForce GTX 1060 6GB 1C06 H P106-100 1C07 H P106-090 1C09 H GeForce GTX 1060 1C20 H GeForce GTX 1060 with Max-Q Design 1C20 1028 0802 H GeForce GTX 1060 with Max-Q Design 1C20 1028 0803 H GeForce GTX 1060 with Max-Q Design 1C20 1028 0825 H GeForce GTX 1060 with Max-Q Design 1C20 1028 0827 H GeForce GTX 1060 with Max-Q Design 1C20 1028 0885 H GeForce GTX 1060 with Max-Q Design 1C20 1028 0886 H GeForce GTX 1060 with Max-Q Design 1C20 103C 8467 H GeForce GTX 1060 with Max-Q Design 1C20 103C 8478 H GeForce GTX 1060 with Max-Q Design 1C20 103C 8581 H GeForce GTX 1060 with Max-Q Design 1C20 1462 1244 H GeForce GTX 1060 with Max-Q Design 1C20 1558 95E5 H GeForce GTX 1060 with Max-Q Design 1C20 17AA 39B9 H GeForce GTX 1060 with Max-Q Design 1C20 1A58 2000 H GeForce GTX 1060 with Max-Q Design 1C20 1A58 2001 H GeForce GTX 1060 with Max-Q Design 1C20 1D05 1059 H GeForce GTX 1050 Ti 1C21 H GeForce GTX 1050 1C22 H GeForce GTX 1060 1C23 H GeForce GTX 1060 1C60 H GeForce GTX 1060 with Max-Q Design 1C60 103C 8390 H GeForce GTX 1060 with Max-Q Design 1C60 103C 8467 H GeForce GTX 1050 Ti 1C61 H GeForce GTX 1050 1C62 H GeForce 
GTX 1050 1C81 H GeForce GTX 1050 Ti 1C82 H GeForce GTX 1050 1C83 H GeForce GTX 1050 Ti 1C8C H GeForce GTX 1050 Ti with Max-Q Design 1C8C 1028 087C H GeForce GTX 1050 Ti with Max-Q Design 1C8C 103C 8519 H GeForce GTX 1050 Ti with Max-Q Design 1C8C 103C 856A H GeForce GTX 1050 Ti with Max-Q Design 1C8C 1462 123C H GeForce GTX 1050 Ti with Max-Q Design 1C8C 1462 126C H GeForce GTX 1050 Ti with Max-Q Design 1C8C 17AA 2266 H GeForce GTX 1050 Ti with Max-Q Design 1C8C 17AA 2267 H GeForce GTX 1050 Ti with Max-Q Design 1C8C 17AA 39FF H GeForce GTX 1050 1C8D H GeForce GTX 1050 with Max-Q Design 1C8D 103C 84E9 H GeForce GTX 1050 with Max-Q Design 1C8D 103C 84EB H GeForce GTX 1050 with Max-Q Design 1C8D 103C 856A H GeForce GTX 1050 with Max-Q Design 1C8D 1043 114F H GeForce GTX 1050 with Max-Q Design 1C8D 1043 1341 H GeForce GTX 1050 with Max-Q Design 1C8D 1043 1351 H GeForce GTX 1050 with Max-Q Design 1C8D 1043 1481 H GeForce GTX 1050 with Max-Q Design 1C8D 1043 14A1 H GeForce GTX 1050 with Max-Q Design 1C8D 1043 18C1 H GeForce GTX 1050 with Max-Q Design 1C8D 1043 1B5E H GeForce GTX 1050 with Max-Q Design 1C8D 1462 126C H GeForce GTX 1050 with Max-Q Design 1C8D 152D 1217 H GeForce GTX 1050 with Max-Q Design 1C8D 1D72 1707 H GeForce GTX 1050 Ti 1C8F H GeForce GTX 1050 Ti with Max-Q Design 1C8F 1462 123C H GeForce GTX 1050 Ti with Max-Q Design 1C8F 1462 126C H GeForce GTX 1050 Ti with Max-Q Design 1C8F 1462 126D H GeForce GTX 1050 Ti with Max-Q Design 1C8F 1462 1284 H GeForce GTX 1050 Ti with Max-Q Design 1C8F 1462 1297 H GeForce MX150 1C90 H GeForce GTX 1050 1C91 H GeForce GTX 1050 with Max-Q Design 1C91 103C 856A H GeForce GTX 1050 with Max-Q Design 1C91 103C 86E3 H GeForce GTX 1050 with Max-Q Design 1C91 152D 1232 H GeForce GTX 1050 1C92 H GeForce GTX 1050 with Max-Q Design 1C92 1043 149F H GeForce GTX 1050 with Max-Q Design 1C92 1043 1B31 H GeForce GTX 1050 with Max-Q Design 1C92 1462 1245 H GeForce GTX 1050 with Max-Q Design 1C92 1462 126C H GeForce GT 1030 1D01 H GeForce 
MX150 1D10 H GeForce MX230 1D11 H GeForce MX150 1D12 H GeForce MX250 1D13 H GeForce MX250 1D52 H TITAN V 1D81 I TITAN V JHH Special Edition 1DBA 10DE 12EB I TITAN RTX 1E02 J GeForce RTX 2080 Ti 1E04 J GeForce RTX 2080 Ti 1E07 J GeForce RTX 2080 SUPER 1E81 J GeForce RTX 2080 1E82 J GeForce RTX 2070 SUPER 1E84 J GeForce RTX 2080 1E87 J GeForce RTX 2060 1E89 J GeForce RTX 2080 1E90 J GeForce RTX 2080 with Max-Q Design 1E90 1025 1375 J GeForce RTX 2080 with Max-Q Design 1E90 1028 08A1 J GeForce RTX 2080 with Max-Q Design 1E90 1028 08A2 J GeForce RTX 2080 with Max-Q Design 1E90 1028 08EA J GeForce RTX 2080 with Max-Q Design 1E90 1028 08EB J GeForce RTX 2080 with Max-Q Design 1E90 1028 08EC J GeForce RTX 2080 with Max-Q Design 1E90 1028 08ED J GeForce RTX 2080 with Max-Q Design 1E90 1028 08EE J GeForce RTX 2080 with Max-Q Design 1E90 1028 08EF J GeForce RTX 2080 with Max-Q Design 1E90 1028 093B J GeForce RTX 2080 with Max-Q Design 1E90 1028 093C J GeForce RTX 2080 with Max-Q Design 1E90 103C 8572 J GeForce RTX 2080 with Max-Q Design 1E90 103C 8573 J GeForce RTX 2080 with Max-Q Design 1E90 103C 8602 J GeForce RTX 2080 with Max-Q Design 1E90 1043 131F J GeForce RTX 2080 with Max-Q Design 1E90 1043 137F J GeForce RTX 2080 with Max-Q Design 1E90 1043 141F J GeForce RTX 2080 with Max-Q Design 1E90 1043 1751 J GeForce RTX 2080 with Max-Q Design 1E90 1458 1660 J GeForce RTX 2080 with Max-Q Design 1E90 1458 1661 J GeForce RTX 2080 with Max-Q Design 1E90 1458 1662 J GeForce RTX 2080 with Max-Q Design 1E90 1458 75A6 J GeForce RTX 2080 with Max-Q Design 1E90 1458 75A7 J GeForce RTX 2080 with Max-Q Design 1E90 1458 86A6 J GeForce RTX 2080 with Max-Q Design 1E90 1458 86A7 J GeForce RTX 2080 with Max-Q Design 1E90 1462 1274 J GeForce RTX 2080 with Max-Q Design 1E90 1462 1277 J GeForce RTX 2080 with Max-Q Design 1E90 152D 1220 J GeForce RTX 2080 with Max-Q Design 1E90 1558 95E1 J GeForce RTX 2080 with Max-Q Design 1E90 1558 97E1 J GeForce RTX 2080 with Max-Q Design 1E90 1A58 2002 J 
GeForce RTX 2080 with Max-Q Design 1E90 1A58 2005 J GeForce RTX 2080 with Max-Q Design 1E90 1A58 2007 J GeForce RTX 2080 with Max-Q Design 1E90 1A58 3000 J GeForce RTX 2080 with Max-Q Design 1E90 1A58 3001 J GeForce RTX 2080 with Max-Q Design 1E90 1D05 1069 J GeForce RTX 2070 SUPER 1EC2 J GeForce RTX 2070 SUPER 1EC7 J GeForce RTX 2080 1ED0 J GeForce RTX 2080 with Max-Q Design 1ED0 1025 132D J GeForce RTX 2080 with Max-Q Design 1ED0 1028 08ED J GeForce RTX 2080 with Max-Q Design 1ED0 1028 08EE J GeForce RTX 2080 with Max-Q Design 1ED0 1028 08EF J GeForce RTX 2080 with Max-Q Design 1ED0 103C 8572 J GeForce RTX 2080 with Max-Q Design 1ED0 103C 8573 J GeForce RTX 2080 with Max-Q Design 1ED0 103C 8600 J GeForce RTX 2080 with Max-Q Design 1ED0 103C 8605 J GeForce RTX 2080 with Max-Q Design 1ED0 1043 138F J GeForce RTX 2080 with Max-Q Design 1ED0 1043 15C1 J GeForce RTX 2080 with Max-Q Design 1ED0 17AA 3FEE J GeForce RTX 2080 with Max-Q Design 1ED0 17AA 3FFE J GeForce RTX 2070 1F02 J GeForce RTX 2060 SUPER 1F06 J GeForce RTX 2070 1F07 J GeForce RTX 2060 1F08 J GeForce RTX 2070 1F10 J GeForce RTX 2070 with Max-Q Design 1F10 1025 132D J GeForce RTX 2070 with Max-Q Design 1F10 1025 1342 J GeForce RTX 2070 with Max-Q Design 1F10 1028 08A1 J GeForce RTX 2070 with Max-Q Design 1F10 1028 08A2 J GeForce RTX 2070 with Max-Q Design 1F10 1028 08EA J GeForce RTX 2070 with Max-Q Design 1F10 1028 08EB J GeForce RTX 2070 with Max-Q Design 1F10 1028 08EC J GeForce RTX 2070 with Max-Q Design 1F10 1028 08ED J GeForce RTX 2070 with Max-Q Design 1F10 1028 08EE J GeForce RTX 2070 with Max-Q Design 1F10 1028 08EF J GeForce RTX 2070 with Max-Q Design 1F10 1028 093B J GeForce RTX 2070 with Max-Q Design 1F10 1028 093C J GeForce RTX 2070 with Max-Q Design 1F10 103C 8572 J GeForce RTX 2070 with Max-Q Design 1F10 103C 8573 J GeForce RTX 2070 with Max-Q Design 1F10 103C 8602 J GeForce RTX 2070 with Max-Q Design 1F10 1043 132F J GeForce RTX 2070 with Max-Q Design 1F10 1043 1881 J GeForce RTX 2070 with 
Max-Q Design 1F10 1043 1E6E J GeForce RTX 2070 with Max-Q Design 1F10 1458 1658 J GeForce RTX 2070 with Max-Q Design 1F10 1458 1663 J GeForce RTX 2070 with Max-Q Design 1F10 1458 1664 J GeForce RTX 2070 with Max-Q Design 1F10 1458 75A4 J GeForce RTX 2070 with Max-Q Design 1F10 1458 75A5 J GeForce RTX 2070 with Max-Q Design 1F10 1458 86A4 J GeForce RTX 2070 with Max-Q Design 1F10 1458 86A5 J GeForce RTX 2070 with Max-Q Design 1F10 1462 1274 J GeForce RTX 2070 with Max-Q Design 1F10 1462 1277 J GeForce RTX 2070 with Max-Q Design 1F10 1558 95E1 J GeForce RTX 2070 with Max-Q Design 1F10 1558 97E1 J GeForce RTX 2070 with Max-Q Design 1F10 1A58 2002 J GeForce RTX 2070 with Max-Q Design 1F10 1A58 2005 J GeForce RTX 2070 with Max-Q Design 1F10 1A58 2007 J GeForce RTX 2070 with Max-Q Design 1F10 1A58 3000 J GeForce RTX 2070 with Max-Q Design 1F10 1A58 3001 J GeForce RTX 2070 with Max-Q Design 1F10 1D05 105E J GeForce RTX 2070 with Max-Q Design 1F10 1D05 1070 J GeForce RTX 2070 with Max-Q Design 1F10 1D05 2087 J GeForce RTX 2070 with Max-Q Design 1F10 8086 2087 J GeForce RTX 2060 1F11 J GeForce RTX 2060 SUPER 1F42 J GeForce RTX 2060 SUPER 1F47 J GeForce RTX 2070 1F50 J GeForce RTX 2070 with Max-Q Design 1F50 1028 08ED J GeForce RTX 2070 with Max-Q Design 1F50 1028 08EE J GeForce RTX 2070 with Max-Q Design 1F50 1028 08EF J GeForce RTX 2070 with Max-Q Design 1F50 103C 8572 J GeForce RTX 2070 with Max-Q Design 1F50 103C 8573 J GeForce RTX 2070 with Max-Q Design 1F50 103C 8574 J GeForce RTX 2070 with Max-Q Design 1F50 103C 8600 J GeForce RTX 2070 with Max-Q Design 1F50 103C 8605 J GeForce RTX 2070 with Max-Q Design 1F50 17AA 3FEE J GeForce RTX 2070 with Max-Q Design 1F50 17AA 3FFE J GeForce RTX 2060 1F51 J GeForce GTX 1650 1F82 J GeForce GTX 1650 1F91 J GeForce GTX 1650 with Max-Q Design 1F91 103C 863E J GeForce GTX 1650 with Max-Q Design 1F91 1043 12CF J GeForce GTX 1650 with Max-Q Design 1F91 1043 156F J GeForce GTX 1650 with Max-Q Design 1F91 144D C822 J GeForce GTX 1650 with 
Max-Q Design 1F91 1462 127E J GeForce GTX 1650 with Max-Q Design 1F91 1462 1281 J GeForce GTX 1650 with Max-Q Design 1F91 1462 1284 J GeForce GTX 1650 with Max-Q Design 1F91 1462 1285 J GeForce GTX 1650 with Max-Q Design 1F91 1462 129C J GeForce GTX 1650 with Max-Q Design 1F91 17AA 3806 J GeForce GTX 1650 with Max-Q Design 1F91 17AA 3F1A J GeForce GTX 1650 with Max-Q Design 1F91 1A58 1001 J GeForce GTX 1650 1F96 J GeForce GTX 1650 with Max-Q Design 1F96 1462 1297 J GeForce GTX 1660 Ti 2182 J GeForce GTX 1660 2184 J GeForce GTX 1650 SUPER 2187 J GeForce GTX 1660 Ti 2191 J GeForce GTX 1660 Ti with Max-Q Design 2191 1028 0949 J GeForce GTX 1660 Ti with Max-Q Design 2191 103C 85FB J GeForce GTX 1660 Ti with Max-Q Design 2191 103C 85FE J GeForce GTX 1660 Ti with Max-Q Design 2191 103C 86D6 J GeForce GTX 1660 Ti with Max-Q Design 2191 1043 18D1 J GeForce GTX 1660 Ti with Max-Q Design 2191 1462 128A J GeForce GTX 1660 Ti with Max-Q Design 2191 1462 128B J GeForce GTX 1660 SUPER 21C4 J GeForce GTX 1660 Ti 21D1 J A2. 
NVIDIA QUADRO GPUS NVIDIA GPU product Device PCI ID* VDPAU features ---------------------------------- --------------- --------------- Quadro K420 0FF3 D Quadro K2000D 0FF9 D Quadro K600 0FFA D Quadro K2000 0FFE D Quadro 410 0FFF D Quadro K6000 103A D Quadro K5200 103C D Quadro K4200 11B4 D Quadro K5000 11BA D Quadro K4000 11FA D Quadro K620M 137A 17AA 2225 E Quadro M500M 137A 17AA 2232 E Quadro M500M 137A 17AA 505A E Quadro M520 137B E Quadro M2000M 13B0 E Quadro M1000M 13B1 E Quadro M600M 13B2 E Quadro K2200M 13B3 E Quadro M620 13B4 E Quadro M1200 13B6 E Quadro K2200 13BA E Quadro K620 13BB E Quadro K1200 13BC E Quadro M5000 13F0 E Quadro M4000 13F1 E Quadro M5000M 13F8 E Quadro M5000 SE 13F8 10DE 11DD E Quadro M4000M 13F9 E Quadro M3000M 13FA E Quadro M3000 SE 13FA 10DE 11C9 E Quadro M5500 13FB E Quadro M2000 1430 F Quadro M2200 1436 F Quadro GP100 15F0 G Quadro M6000 17F0 E Quadro M6000 24GB 17F1 E Quadro P6000 1B30 H Quadro P5000 1BB0 H Quadro P4000 1BB1 H Quadro P5200 1BB5 H Quadro P5200 with Max-Q Design 1BB5 17AA 2268 H Quadro P5200 with Max-Q Design 1BB5 17AA 2269 H Quadro P5000 1BB6 H Quadro P4000 1BB7 H Quadro P4000 with Max-Q Design 1BB7 1462 11E9 H Quadro P4000 with Max-Q Design 1BB7 1558 9501 H Quadro P3000 1BB8 H Quadro P4200 1BB9 H Quadro P4200 with Max-Q Design 1BB9 1558 95E1 H Quadro P4200 with Max-Q Design 1BB9 17AA 2268 H Quadro P4200 with Max-Q Design 1BB9 17AA 2269 H Quadro P3200 1BBB H Quadro P3200 with Max-Q Design 1BBB 17AA 225F H Quadro P3200 with Max-Q Design 1BBB 17AA 2262 H Quadro P2000 1C30 H Quadro P2200 1C31 H Quadro P1000 1CB1 H Quadro P600 1CB2 H Quadro P400 1CB3 H Quadro P620 1CB6 H Quadro P2000 1CBA H Quadro P2000 with Max-Q Design 1CBA 17AA 2266 H Quadro P2000 with Max-Q Design 1CBA 17AA 2267 H Quadro P1000 1CBB H Quadro P600 1CBC H Quadro P620 1CBD H Quadro P500 1D33 H Quadro P520 1D34 H Quadro GV100 1DBA I Quadro RTX 6000 1E30 J Quadro RTX 8000 1E30 1028 129E J Quadro RTX 8000 1E30 103C 129E J Quadro RTX 8000 1E30 10DE 129E J 
Quadro RTX 8000 1E78 10DE 13D8 J Quadro RTX 6000 1E78 10DE 13D9 J Quadro RTX 5000 1EB0 J Quadro RTX 4000 1EB1 J Quadro RTX 5000 1EB5 J Quadro RTX 5000 with Max-Q Design 1EB5 1025 1375 J Quadro RTX 5000 with Max-Q Design 1EB5 1043 1DD1 J Quadro RTX 5000 with Max-Q Design 1EB5 1462 1274 J Quadro RTX 5000 with Max-Q Design 1EB5 1A58 2007 J Quadro RTX 5000 with Max-Q Design 1EB5 1A58 2008 J Quadro RTX 4000 1EB6 J Quadro RTX 4000 with Max-Q Design 1EB6 1462 1274 J Quadro RTX 4000 with Max-Q Design 1EB6 1462 1277 J Quadro RTX 3000 1F36 J Quadro RTX 3000 with Max-Q Design 1F36 1043 13CF J Quadro T2000 1FB8 J Quadro T2000 with Max-Q Design 1FB8 1462 1281 J Quadro T1000 1FB9 J A3. NVIDIA NVS GPUS NVIDIA GPU product Device PCI ID* VDPAU features ---------------------------------- --------------- --------------- NVS 510 0FFD D NVS 810 13B9 E A4. NVIDIA TESLA GPUS NVIDIA GPU product Device PCI ID* VDPAU features ---------------------------------- --------------- --------------- Tesla K20Xm 1021 D Tesla K20c 1022 D Tesla K40m 1023 D Tesla K40c 1024 D Tesla K20s 1026 D Tesla K40st 1027 D Tesla K20m 1028 D Tesla K40s 1029 D Tesla K40t 102A D Tesla K80 102D D Tesla K10 118F D Tesla K8 1194 D Tesla M60 13F2 E Tesla M6 13F3 E Tesla M4 1431 F Tesla P100-PCIE-12GB 15F7 G Tesla P100-PCIE-16GB 15F8 G Tesla P100-SXM2-16GB 15F9 G Tesla M40 17FD E Tesla M40 24GB 17FD 10DE 1173 E Tesla P40 1B38 H Tesla P4 1BB3 H Tesla P6 1BB4 H Tesla V100-SXM2-16GB 1DB1 I Tesla V100-SXM2-16GB-LS 1DB1 10DE 1307 I Tesla V100-FHHL-16GB 1DB3 I Tesla V100-PCIE-16GB 1DB4 I Tesla V100-PCIE-16GB-LS 1DB4 10DE 1306 I Tesla V100-SXM2-32GB 1DB5 I Tesla V100-SXM2-32GB-LS 1DB5 10DE 1308 I Tesla V100-PCIE-32GB 1DB6 I Tesla V100-DGXS-32GB 1DB7 I Tesla V100-SXM3-32GB 1DB8 I Tesla V100-SXM3-32GB-H 1DB8 10DE 131D I Tesla V100-SXM2-16GB 1DF5 I Tesla V100S-PCIE-32GB 1DF6 I Tesla T4 1EB8 J A5. 
NVIDIA GRID GPUS NVIDIA GPU product Device PCI ID* VDPAU features ---------------------------------- --------------- --------------- GRID K520 118A D Below are the legacy GPUs that are no longer supported in the unified driver. These GPUs will continue to be maintained through the special legacy NVIDIA GPU driver releases.
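
Going back to the ID check at the start of this post, here is a minimal Python sketch of it. It assumes the ID is given as "vendor:device", and the lookup table is only a four-row excerpt copied from the GeForce list above; the function name `lookup` is made up for illustration.

```python
# Excerpt of the readme list quoted above (device ID -> product name).
# Only a few rows are copied here; the real list is much longer.
SUPPORTED_GEFORCE = {
    "2182": "GeForce GTX 1660 Ti",
    "2184": "GeForce GTX 1660",
    "2187": "GeForce GTX 1650 SUPER",
    "21c4": "GeForce GTX 1660 SUPER",
}

NVIDIA_VENDOR_ID = "10de"


def lookup(pci_id):
    """Split 'vendor:device' and look the device up in the excerpt.

    Returns the product name, or None if the vendor is not NVIDIA or the
    device ID is not in the excerpt.
    """
    vendor, device = pci_id.lower().split(":")
    if vendor != NVIDIA_VENDOR_ID:
        return None
    return SUPPORTED_GEFORCE.get(device)


print(lookup("10de:21c4"))
```

A hit in the list only says the driver knows the chip; whether the driver is really bound to the card is what the "Kernel driver in use:" line of "lspci -v" shows.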

-

No, there are still other, way more likely solutions. How should the DHCP server on the router distinguish between a Dell server and a Dell server with an xpenology loader? It just gets a request from a MAC address, there is no information about the rest. And it is way too much code and too many options to do unintended things; it's way easier to secure DSM itself. I could continue on why it's not very logical and very unlikely.

imho most likely missing firmware files for the NIC.

DELL Server R610, two dual port embedded Broadcom® NetXtreme® II 5709c Gigabit Ethernet NIC -> bnx2.ko, and that driver needs firmware to work properly (can be seen when using modinfo or just by looking with a hex editor for ".fw"). It should be in /usr/lib/firmware/bnx2/:

bnx2-rv2p-06-6.0.15.fw
bnx2-mips-06-6.2.3.fw
bnx2-rv2p-09ax-6.0.17.fw
bnx2-mips-09-6.2.1b.fw
bnx2-rv2p-09-6.0.17.fw

I don't know how tcrp handles firmware-related drivers; the firmware is not in the driver *.gz that is used in the loader. The arpl loader comes with a "firmware.tgz" that has all 5 files in it, so try this loader to see if that is the problem (my guess is that it will work with arpl).

Even jun's 1.03b loader contained only 3 of the 5 firmware files (the mips files were missing; it did not work with all bnx2, I do remember that HPE's did work and some Dell did not):

bnx2-rv2p-06-6.0.15.fw
bnx2-rv2p-09ax-6.0.17.fw
bnx2-rv2p-09-6.0.17.fw

For 6.2 (jun's loader) there is an extended extra.lzma with all firmware files in it https://xpenology.com/forum/topic/28321-driver-extension-jun-103b104b-for-dsm623-for-918-3615xs-3617xs/

But as that loader ends with 6.2.3 update3, and 6.2 in general is retired 6/2023 by Synology, it is not suggested to use that for a new install, maybe for testing. Using it with 3615/17 also needs CSM mode in a UEFI BIOS, and on boot the legacy USB device has to be used (CSM is just an option; choosing the UEFI USB device overrides that option) https://xpenology.com/forum/topic/13333-tutorialreference-6x-loaders-and-platforms/
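
To check quickly which of the five firmware files from this post are present, something like the following Python sketch could be used. The file names are the ones listed above; the directory in the example call is an assumption and has to be adjusted to wherever the unpacked loader or the running system keeps its firmware.

```python
from pathlib import Path

# The five bnx2 firmware files named in the post.
BNX2_FIRMWARE = [
    "bnx2-rv2p-06-6.0.15.fw",
    "bnx2-mips-06-6.2.3.fw",
    "bnx2-rv2p-09ax-6.0.17.fw",
    "bnx2-mips-09-6.2.1b.fw",
    "bnx2-rv2p-09-6.0.17.fw",
]


def missing_firmware(firmware_dir):
    """Return the expected bnx2 firmware files that are not in firmware_dir."""
    base = Path(firmware_dir)
    return [name for name in BNX2_FIRMWARE if not (base / name).is_file()]


if __name__ == "__main__":
    # Assumed location, adjust to the unpacked loader or the running system.
    for name in missing_firmware("/usr/lib/firmware/bnx2"):
        print("missing:", name)
```

With the three files that jun's 1.03b shipped, this would report the two mips files as missing.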

-

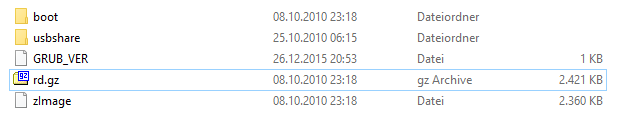

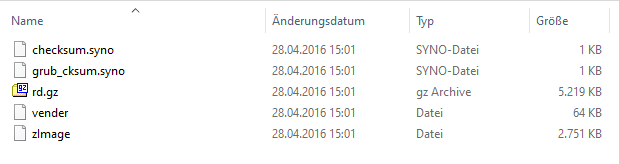

I thought you were using the original one from the USB DOM and just replaced rd.gz and zImage on the 2nd partition (that's where the kernel for a new DSM version is copied).

I've never seen an original DOM image of an ARM unit; it might be different from one for x86? An image I have from a 1511+ has a 17.48 MB ext2 partition and a 104.8 MB ext2 partition, and there is a file "vender" on the 2nd partition that seems to contain the serial of the unit (images: 1st and 2nd partition).

And just to make it clear: as yours is an ARM CPU based unit, you will not be able to boot any loader from here. Everything here is about x86 CPUs (replacing the loader with one from here is not going to work).

Jumping to 2nd bootloader...
U-Boot 2015.07-g428cfe7-dirty (May 16 2018 - 10:29:38 +0800)
CPU : Cortex-A53 Quad Core
Board: Realtek QA Board
DRAM: 2 GiB
...
Starting Kernel ...
[ 0.000000] Booting Linux on physical CPU 0x0
[ 0.000000] Initializing cgroup subsys cpuset
[ 0.000000] Initializing cgroup subsys cpu
[ 0.000000] Initializing cgroup subsys cpuacct
[ 0.000000] Linux version 4.4.180+ (root@build16) (gcc version 7.5.0 (GCC) ) #41890 SMP Thu Jul 15 03:42:46 CST 2021

This looks like the boot from the 1st partition came out ok and then it starts to load zImage from the 2nd partition (kernel 4.4.180 was introduced with DSM 6.x?). From the error it might be a problem with rd.gz? So check that one on the 2nd partition.

[ 8.437645] RAMDISK: lzma image found at block 0
[ 9.918577] List of all partitions:
[ 9.922188] 0100 655360 ram0 (driver?)
[ 9.926930] 0101 655360 ram1 (driver?)
[ 9.931674] 0102 655360 ram2 (driver?)
[ 9.936412] 0103 655360 ram3 (driver?)
[ 9.941152] 0104 655360 ram4 (driver?)
[ 9.945907] 0105 655360 ram5 (driver?)
[ 9.950646] 0106 655360 ram6 (driver?)
[ 9.955390] 0107 655360 ram7 (driver?)
[ 9.960130] 0108 655360 ram8 (driver?)
[ 9.964873] 0109 655360 ram9 (driver?)
[ 9.969611] 010a 655360 ram10 (driver?)
[ 9.974442] 010b 655360 ram11 (driver?)
[ 9.979270] 010c 655360 ram12 (driver?)
[ 9.984100] 010d 655360 ram13 (driver?)
[ 9.988928] 010e 655360 ram14 (driver?)
[ 9.993759] 010f 655360 ram15 (driver?)
[ 9.998586] 1f00 1024 mtdblock0 (driver?)
[ 10.003774] 1f01 3008 mtdblock1 (driver?)
[ 10.008958] 1f02 4092 mtdblock2 (driver?)
[ 10.014144] 1f03 64 mtdblock3 (driver?)
[ 10.019327] 1f04 4 mtdblock4 (driver?)
[ 10.024513] No filesystem could mount root, tried: ext3 ext4 ext2
[ 10.030890] Kernel panic - not syncing: VFS: Unable to mount root fs on unknown-block(9,0)

-

not better, but there is an alternative: if you set up your new system you can (as long as the old unit with its cam licenses is in the network) share/borrow them to another system with Synology's Central Management System (cms) https://www.synology.com/en-us/dsm/packages/CMS

it was suggested here (sorry, you might need to use google translate as it's in the german section) https://xpenology.com/forum/topic/34502-surveillance-station-lizenz/?do=findComment&comment=170511

the downside is that the old unit needs to be online all the time (costs for power)

so if you build a new unit anyway you can try to use the dva's image, and if that fails you would still be able to fall back to the sharing solution

-

it depends on how much data you have and how much you would need to push it around or have other people access it (workflow)

if it's just for editing solo, a 16x pcie card with 4 x nvme ssd as raid0 might be the cheapest solution. the "simple" cards like that come without any hardware of their own and need the chipset/bios to support bifurcation (https://blog.donbowman.ca/2017/10/06/pci-e-bifurcation-explained/). there are hardware based cards too, but i guess they will cost $250-450 compared to less than $100

backup or hosting can be done by copying to a 10G connected NAS. even normal disks in a raid set can give 500-700MB/s, and when using nvme/ssd cache as write cache it's even less important what the main raid for data storage is made of

if you want a cost effective solution it's usually kind of tailor cut to your situation. it gets different when money is of no importance and the only thing that counts is pushing the envelope
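to put the numbers above into perspective, here is a small back-of-the-envelope sketch. it assumes decimal units (1 GB = 1000 MB), ignores protocol overhead, and the 1000 GB project size is just an example value, not something from your setup

```python
# Back-of-the-envelope numbers for copying data to a NAS.
# Assumptions: decimal units, no protocol overhead, sustained rates.

def link_limit_mb_s(gbit_per_s: float) -> float:
    """Raw line rate of a network link in MB/s (8 bits per byte)."""
    return gbit_per_s * 1000 / 8


def copy_minutes(size_gb: float, rate_mb_s: float) -> float:
    """Minutes needed to move size_gb at a sustained rate in MB/s."""
    return size_gb * 1000 / rate_mb_s / 60


if __name__ == "__main__":
    print(f"10G link, raw limit: {link_limit_mb_s(10):.0f} MB/s")
    for rate in (500, 700):  # the raid set range mentioned above
        print(f"1000 GB at {rate} MB/s: {copy_minutes(1000, rate):.1f} min")
```

so a disk based raid set at 500-700MB/s fills roughly half of what a 10G link could carry in theory, which is why the write cache matters more than what the main raid is made of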

-

my guess here would be that you use loader 1.03b and did load the latest dsm version (automatic search) from the internet. that results in dsm 6.2.4, and jun's 1.03b loader can only handle up to 6.2.3 update3. if it's 6.2.4 then it will fail on boot and you can't access the loader or the system anymore: the kernel of the new dsm was copied to the usb while "updating", and on the next boot (when the new dsm version is supposed to be installed) the boot fails and you are stuck with a system that is not booting anymore (no network access)

no, you just lost access to your data. a DSM update only touches your system partition (of dsm, the first partition on every disk, 2.4GB as raid1 over all disks) and the usb loader (it copies the new kernel zImage and rd.gz to the 2nd partition of the loader)

but i think it's a little more complicated, you already had unexplained behavior here https://xpenology.com/forum/topic/65849-added-new-hard-drives-but-not-found-in-xpenology-dsm-62-23739/ and my guess from that is: as you use a raid controller (adaptec 5805) that most likely can't use "raw" disks as dsm would need, your disks might be configured as raid volumes in the controller and either be used as

1. raid0 for every single disk, so dsm sees every disk and can work its usual way by using single disks for building a raid volume
2. disks that were grouped as an adaptec hardware raid and were seen in dsm as one single disk

a simple try that does not have any impact on the situation might be to create an open media vault boot usb drive on another computer and swap that one with your dsm usb boot device. booting omv might detect the data raid volume of your drives by itself, and you can access the system through OMV's easy to use web gui to configure a share you can use for backup. it's also possible to get a better look at what's there with a running linux system to plan what to do next

don't change anything on the controller or swap out any drives, keep it the way it is until you know what the situation is

if you have a backup (or not), your next choices are getting back to the original loader by reverting the (presumably) started/unfinished dsm update, or trying to get to a new loader and continue updating to 6.2.4 or 7.1 (because of the unusual and not recommended raid controller you need to care about a driver in the loader for that; it's an additional driver that needs to be added, as it's most likely not supported in jun's original driver set and might not work in the new loader)

also important: save the 1.03b loader in the state it is in now. most important would be grub.cfg from the 1st partition and extra.lzma from the 2nd partition (that's where the drivers are located, and from that we can tell if it's a generic or an extended/added driver set). nice to have are zImage and rd.gz from the 2nd partition (to see what dsm version those files belong to; beside the file date and size, rd.gz can be unpacked and will tell you exactly what version it is)

you can copy the usb (bootable and everything) to a file with Win32DiskImager 1.0, that way you can reproduce it if needed and can also extract files to have a look and evaluate what's going on

it might also be possible to go into the adaptec's bios and see what its raid configuration is (disks grouped in a raid set or every disk as raid0)

collecting information and getting the picture without changing things is the thing for now (if you want your data from the disks)

depending on the situation you can also involve professional data rescue service providers like ontrack or convar (do not choose any random company, you might end up with some cheap but unreliable one that might even "take your data hostage" once you have sent in your disks)
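about "rd.gz can be unpacked and will tell you exactly what version it is": as far as i remember the unpacked ramdisk carries a small text file (etc/VERSION) with key="value" lines, but treat both the path and the key names used below as assumptions and check them against your own files. the sample text is made up for illustration, it is not taken from your loader

```python
# Sketch: read key="value" lines from a DSM style VERSION file.
# Assumed keys: majorversion, minorversion, buildnumber, smallfixnumber.

def parse_version_file(text: str) -> dict:
    """Turn key="value" lines into a dict, skipping blanks and comments."""
    info = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key.strip()] = value.strip().strip('"')
    return info


def describe(info: dict) -> str:
    """Build a short human readable version string from the parsed keys."""
    major = info.get("majorversion", "?")
    minor = info.get("minorversion", "?")
    build = info.get("buildnumber", "?")
    fix = info.get("smallfixnumber", "0")
    return f"{major}.{minor}-{build} update {fix}"


# Illustrative sample only, not real data from any system.
SAMPLE = 'majorversion="6"\nminorversion="2"\nbuildnumber="25556"\nsmallfixnumber="0"\n'

if __name__ == "__main__":
    print(describe(parse_version_file(SAMPLE)))
```

a build number above 25426 in that file would support the guess that a 6.2.4 kernel and ramdisk already landed on the loader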

-

you are too unspecific about your hardware: what board, what nic's (chip, maybe it's dual or quad port?). a PCI (not PCIe) 1G nic might be considered "exotic" by now; imho if it's a pci chip then it's an r8169 (that driver seems to be present in the tcrp extensions https://github.com/pocopico/rp-ext/tree/main/r8169/releases so maybe it's more of a detection problem?)

maybe give us a "lspci -v" so we can see what the hardware is. you can try to do that in the loader (tinycore as OS) and in DSM and compare the two (or instead of tc you can boot a live/recovery linux from usb, that one will have a lot of drivers ready on boot)

the basic thing about the ds920+ will be that by its software configuration (synoinfo.conf) it will only support up to 4 nic's (but i don't think that would be a problem with just one or two onboard nic ports and one or two added)

the normal way with tcrp and arpl might be to re-run the loader (latest version) and let it re-detect your hardware. drivers are handled as optional "extensions" and are only added when the loader is in its configuration phase (the old approach in jun's loader was to just try to load all the drivers at every boot)
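comparing the two listings by eye works, but a few lines of script make it harder to miss a nic. this is just a sketch that assumes the output of "lspci -nn" (numeric ids in square brackets) was saved to text; the sample lines are invented examples of that format, not your hardware

```python
import re

# Sketch: pull ethernet controllers out of saved "lspci -nn" output.
# Assumes the usual line layout: slot, class name [class id]: text [vendor:device]
NIC_LINE = re.compile(
    r"^(\S+) Ethernet controller \[0200\]: .+? \[([0-9a-f]{4}):([0-9a-f]{4})\]",
    re.MULTILINE,
)


def nics(lspci_text: str) -> dict:
    """Map pci slot -> 'vendor:device' for every ethernet controller found."""
    return {slot: f"{ven}:{dev}" for slot, ven, dev in NIC_LINE.findall(lspci_text)}


def missing(reference: str, other: str) -> dict:
    """Controllers present in the reference listing but absent in the other."""
    ref, oth = nics(reference), nics(other)
    return {slot: ids for slot, ids in ref.items() if slot not in oth}


# Invented sample in "lspci -nn" format, for illustration only.
SAMPLE = (
    "00:17.0 SATA controller [0106]: Intel Corporation Device [8086:a282]\n"
    "00:1f.6 Ethernet controller [0200]: Intel Corporation I219-V [8086:15b8]\n"
    "02:00.0 Ethernet controller [0200]: Realtek RTL8111/8168 [10ec:8168] (rev 15)\n"
)
```

feeding the listing from the live linux as reference and the one from DSM as the other shows which controllers the hardware has at all, and the vendor:device id is what tells you which driver extension to look for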

-

synology usually uses its own oem area in the mac (1st half), so the mechanism might be bound to that, and the tools you may find usually work the other way around (create a SN and then create a mac for it)

we are not "supporting" these things here, as dsm itself runs fine without it and there are other ways to get hardware transcoding working (patching files) https://xpenology.com/forum/announcement/2-dsm-serial-number-must-read/

you can use a search engine and start with "Synology SN Tool" (keep the " "), maybe digging a little can give you the information you are looking for

OR if it's a more business oriented use of DSM, maybe consider buying some of the products (the last one is more rhetorical, as the licensing terms seem to prevent this imho, but that depends on your country and point of view too)

-

sure, dsm 7.1 is still offered by synology for old units like the 3615, and there are a lot of "old" units with way less cpu power that get 7.1 (ram is usually not a problem, as synology themselves put only a bare minimum in most units)

you can have a look in the update reporting section here to compare https://xpenology.com/forum/forum/78-dsm-updates-reporting/

but there are even old N40L microservers with a turion cpu working fine https://xpenology.com/forum/topic/64619-dsm-711-42962/?do=findComment&comment=349782

-

if you let the system load the (latest) version of 6.2.x from the internet it's going to be 6.2.4, and that's not working with jun's loaders (1.03b/1.04b)

you need to switch to manually uploading the pat file to your system https://archive.synology.com/download/Os/DSM/6.2.3-25426 (choose the one that matches your loader, 3615/3617/918+)

the max you can go is update3 of this 6.2.3 version from here https://archive.synology.com/download/Os/DSM/6.2.3-25426-3 (also upload it manually when your dsm 6.2.3 is running, and also disable automatic updates, as 6.2.4 or 7.x will make the system unbootable)

if you want 6.2.4 or 7.x look into the redpill loaders aka rp, like arpl or tcrp. these loaders are up to the task of handling the latest versions of dsm, 6.2.4 or 7.x
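the rule above can be written down as a small check before you upload anything. this is only a sketch: it assumes version strings in the same form as in the archive urls (like 6.2.3-25426-3), the function names are made up, and the limit is simply the "6.2.3 update3" mentioned above

```python
# Sketch: decide if a DSM version string is still within what jun's loader
# handles, using the "6.2.3-25426-3" form from the archive urls.
JUN_MAX = (6, 2, 3, 25426, 3)  # 6.2.3 build 25426 update 3


def parse_dsm_version(text: str) -> tuple:
    """'6.2.3-25426-3' -> (6, 2, 3, 25426, 3); missing parts count as 0."""
    parts = text.strip().split("-")
    numbers = [int(n) for n in parts[0].split(".")]
    numbers = (numbers + [0, 0, 0])[:3]  # pad '7.1' to 7.1.0
    build = int(parts[1]) if len(parts) > 1 else 0
    update = int(parts[2]) if len(parts) > 2 else 0
    return tuple(numbers) + (build, update)


def ok_for_jun_loader(text: str) -> bool:
    """True if the version is not newer than 6.2.3 update3."""
    return parse_dsm_version(text) <= JUN_MAX


if __name__ == "__main__":
    for candidate in ("6.2.3-25426", "6.2.3-25426-3", "6.2.4-25556", "7.1.1-42962"):
        verdict = "ok" if ok_for_jun_loader(candidate) else "needs a redpill loader"
        print(candidate, "->", verdict)
```

it only looks at the upper limit, so it does not tell you which older versions a specific loader (1.03b/1.04b) can boot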