-

Posts

4,639 -

Joined

-

Last visited

-

Days Won

212

Everything posted by IG-88

-

there was a comment on jim ma's blog about disk issues: "Due to compatibility or performance issues on some machines, /dev/console was created late, causing redpill.ko to fail to load, and eventually the hard disk could not be found. The donation version has been repaired"

i did see a "no disk" message on a test notebook with an 11th gen cpu that only had nvme and was extended with a jmb585 ahci controller via an m.2 nvme to pcie adapter. my original test system with a m365 chipset and 9th gen cpu worked without problems in that regard.

-

What model to use for a i5-1240P with transcoding support

IG-88 replied to Mirano's question in General Questions

someone would need to compile a new driver from the dsm 7.0 source with the patch, and that could then be tested and installed. i did not follow this thread for a while but i thought they were on the right path; looks like they took a different turn somewhere.

anyway, the best option so far might be jim ma's i915 in sa6400. it overcomes some of the backporting issues, as the i915 in kernel 5.x can do more and a lot of that stuff was too much effort to backport into kernel 4.4. all the other synology devices are still based on kernel 4.4 and that will be the same with dsm 7.2, so for at least a year there might be no better solution than sa6400 with 10th gen and above cpu's (for now up to 11th, but he wrote that he already had code for 12th gen).

-

What model to use for a i5-1240P with transcoding support

IG-88 replied to Mirano's question in General Questions

the alternative to this would be the next level of i915 support we've seen lately: a modded arpl loader with sa6400 (the only x64 device with kernel 5.x) and an extension to use the i915 driver up to 11th gen
https://xpenology.com/forum/topic/68067-develop-and-refine-sa3600broadwellnk-and-sa6400epyc7002-thread/

the only glitch was with realtek 8168/8111, where it can't use the nic ootb (i added an intel nic, changed something to make r8168 work on boot, and then removed the intel nic). also, atm it does not come with such a rich driver set as the other loaders. it's still somewhat beta but it works, and it would be possible to use the *.ko files from other loaders like arpl or tcrp (they do have driver sets for epyc aka sa6400 on github).

-

What model to use for a i5-1240P with transcoding support

IG-88 replied to Mirano's question in General Questions

the driver here is not loaded as it's the wrong version, from an older dsm version. the kernel version of dsm 7.0 and 7.1 is 4.4.180 and the driver needs to be compiled for that kernel version.

in the output above there is nothing from the log after you manually loaded the driver. as an alternative you could also check if there is something in /dev/dri. but when just loading it manually it would be gone after a reboot.

i also checked the driver in arpl and it's "only" a patched driver, the way i did it in dsm 6.2.3. the "real deal" is the extended driver that is specifically compiled from extended source that takes 10th gen stuff into account (the old patch method just changes a device id from 9th gen into one from other/newer hardware, and that did not work with all 10th gen afair).

earlier in this thread
https://xpenology.com/forum/topic/59909-i915ko-backported-driver-for-intel-10th-gen-ds918-ver-701-up3/
there was this patch for the i915 source code that would not need any patching of device id's like seen later in the thread or in arpl. your 0x9BC8 would just be part of the "better" i915 driver (not 4 different driver files, just one for all).

-

it is odd that it shows ahci and 1.5Gbit there; with sata3 it should say 6Gbit (access would be correspondingly slow, if it did not crash).

where does the cache come from? did you have the system running baremetal like this and then simply shut it down to start it again as a vm with the same disks? if something was in the (write) cache and got lost in that process, that could cause data loss. at a minimum you would flush the cache manually beforehand, or better, detach and remove the cache and then create it again on the new system (if you want to use a cache at all; to me it is too risky for the modest benefit it usually brings).

my guess is the cache, but you would really have to study the logs (they are on the system partition and should be accessible).

ok, that confirms my suspicion from further up. i rather think the cache "changed" and was then missing for the data volume. the raid volume (mdadm) works independently of the controller and its ports, and the LVM2 volume (sitting on top of it) would find itself again from its parts by their id's. a SHR volume like this can be moved to new/different hardware without further ado (at least as long as you stay with ahci), and the order of the disks should be allowed to change too.

you should always think hard about using a cache, as it gets really problematic when it is suddenly missing. on original systems that does not really happen, but if you do this with changing hardware or vm's ... if the system has to be patched first to make it work and the cache is then missing on the restart after an update, that can cause problems too (but maybe the cache is by now flushed automatically before an update).

in general the recommendation for updates was to remove the cache completely first, at least if it depends on patches that you can only re-add after the update. jun's solutions were better suited for that: the loader checked before system start whether the modifications were still there and patched before dsm started again (that way e.g. the max disk setting on the 918+ was kept, even after updates).

original syno's are not bad as such and easy to handle, but that is not necessarily the case when you use xpenology. it gets considerably more complex and sometimes you have to look around before every update for special cases. as a reminder: the 6.2.2 update had different kernel settings for pcie power management, so all the additional drivers stopped working and new drivers had to be built for it, and then came the reversal with 6.2.3, where the "old" drivers worked again and the ones from 6.2.2 no longer did.

-

the process in the loader creates a config file (user_config.json) that you can also change with an editor (e.g. vi or nano, available in tcrp); after that, run the loader build process again.

in the end the sn lands in grub.cfg as a kernel parameter. you could change it there too, but then the change would be lost as soon as you have the loader rebuilt (and if that is half a year or so later, you will wonder why the sn is suddenly different and have to work through everything again).

it's also described in a howto here
https://xpenology.com/forum/topic/62221-tutorial-installmigrate-to-dsm-7x-with-tinycore-redpill-tcrp-loader/
under "Manual Review:", and after the change "Step 6. Build the Loader"
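a minimal sketch of editing the sn in user_config.json with a script instead of vi/nano. the key layout (`extra_cmdline` -> `sn`) is an assumption about how tcrp stores it and is not taken from the post, so check your own file first. the helper name `set_serial` and the placeholder serials are made up for illustration.

```python
import json
import tempfile
from pathlib import Path


def set_serial(config_path, new_sn):
    """Write new_sn into the config file and return the previous value.

    Assumption: the serial lives under extra_cmdline -> sn. All other keys
    in the file are left untouched.
    """
    path = Path(config_path)
    config = json.loads(path.read_text())
    cmdline = config.setdefault("extra_cmdline", {})
    old_sn = cmdline.get("sn")
    cmdline["sn"] = new_sn
    path.write_text(json.dumps(config, indent=2) + "\n")
    return old_sn


if __name__ == "__main__":
    # demo on a throwaway file with placeholder values, not a real loader config
    with tempfile.TemporaryDirectory() as tmp:
        demo = Path(tmp) / "user_config.json"
        demo.write_text(json.dumps({"extra_cmdline": {"sn": "OLD-PLACEHOLDER"}}))
        print("previous sn:", set_serial(demo, "NEW-PLACEHOLDER"))
        print(demo.read_text())
```

as with a manual edit, the loader still has to be rebuilt afterwards so the value ends up in grub.cfg.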

-

What model to use for a i5-1240P with transcoding support

IG-88 replied to Mirano's question in General Questions

did you activate the addon for 10th gen cpu in the loader?

check the log with "dmesg |grep i915" to see that the i915 driver is loaded and is loading the firmware. it might look similar to this (this is a log from 9th gen, afair 10th gen might need a cnl_dmc_*.bin as firmware):

    [ 28.854600] [drm] Finished loading DMC firmware i915/kbl_dmc_ver1_04.bin (v1.4)
    [ 29.149691] [drm] Initialized i915 1.6.0 20171222 for 0000:00:02.0 on minor 0
    [ 29.244885] i915 0000:00:02.0: fb0: inteldrmfb frame buffer device

also check if the needed firmware is present with "ls /usr/lib/firmware/i915"

-
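for the "dmesg |grep i915" check described above, a small sketch that pulls the two interesting facts out of such a log: which DMC firmware file was loaded and whether the driver initialized. the function name is made up, and the regular expressions are fitted only to the three sample lines quoted in that post, so other kernel versions may word these messages differently.

```python
import re


def summarize_i915_log(dmesg_text):
    """Return the loaded DMC firmware files and the i915 driver version, if logged."""
    firmware = re.findall(r"Finished loading DMC firmware (\S+)", dmesg_text)
    initialized = re.search(r"\[drm\] Initialized i915 (\S+)", dmesg_text)
    return {
        "firmware": firmware,
        "driver_version": initialized.group(1) if initialized else None,
    }


# the 9th gen sample lines quoted in the post
SAMPLE = """\
[ 28.854600] [drm] Finished loading DMC firmware i915/kbl_dmc_ver1_04.bin (v1.4)
[ 29.149691] [drm] Initialized i915 1.6.0 20171222 for 0000:00:02.0 on minor 0
[ 29.244885] i915 0000:00:02.0: fb0: inteldrmfb frame buffer device
"""

if __name__ == "__main__":
    print(summarize_i915_log(SAMPLE))
```

an empty firmware list together with `driver_version` being None would match the "driver not loaded" case; feed it the saved output of dmesg from the box.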

my interpretation here was that it was about the gpu not being recognized. cpu wise there is nothing to recognize, it just works. if it's about what you see in dsm's gui, then don't worry, it's just a kind of static picture they present to the user. but if it's important, there is a cpuinfo tool in the software modding section that can make your real cpu show up in the gui.

the arpl loader has its own r8152 driver (version=v2.16.3 (2022/07/06)) that should be working. boot with the usb adapter connected and check if the driver is loaded with "lsmod |grep r81". also check what's in the log about it with "dmesg |grep r81".

-

as synology does not specify anything about that it's hard to tell, but i'd expect something more like shutting down the system just after starting, or ending file services or the web gui, as seen in the former timebomb issues in dsm 6.x. but as there is no line they draw, there could be more, even malicious stuff like damaging systems or data.

it's a little concerning to see something like you describe, as it could be some kind of scan of all data on the system. with the data they collect it would be possible to target any specific system with any special task you can think of.

as this is an original system you are talking about, i'd suggest involving synology's official support and seeing what they tell you about it. maybe reference the wikipedia article about wedjat (https://en.wikipedia.org/wiki/Eye_of_Horus) and ask specifically about data collection. also check your logs in /var/log/ for things going on.

edit: before involving synology support it might be interesting to find out what payload your system received (and why, as it's an original system that should not be bothered at all)

-

off topic here, as this thread is about dsm 6.2.2, but dsm 7.1.1 still has the same i915 driver as 6.2.3. the driver should detect older gpu's as well, only newer gpu's are a problem.

this cpu has "Intel HD P4600" and that's gpu gen 7.5
https://en.wikipedia.org/wiki/Intel_Graphics_Technology
so its capabilities are limited and there is no guarantee that it will work with video station or the face recognition in photos.

if there are devices in /dev/dri then the driver is working. your main problem will be whether it works with the part of dsm you want to use, as synology did not intend or test older gpu's to work.

what a 7th gen gpu can do can be seen here (compared with newer gpu's)
https://en.wikipedia.org/wiki/Intel_Quick_Sync_Video#Hardware_decoding_and_encoding
no HEVC/h.265 for sure

-

is it possible to use your own kernel with that? like having syno's 7.0 source and being able to add some missing kernel code (like i915 support or hyper-v to ds3622)

-

You have 8 disks, Will you create 1 raid array or 2 arrays?

IG-88 replied to Marawan's question in General Questions

that's about ssd's. on a single ssd the controller levels that out by distributing write access between cells (wear leveling). something like that is not needed with conventional magnetic recording, but might become a thing with heat or microwave assisted magnetic recording in the next years.

when combining ssd's in a raid5 set you might face the effect that all disks fail at the same time, as wear out is something that will hit a ssd at some point (there is usually a tool or s.m.a.r.t. to monitor this). synology has raid f1 for this as an alternative to raid5
https://xpenology.com/forum/topic/9394-installation-faq/?tab=comments#comment-131458
https://global.download.synology.com/download/Document/Software/WhitePaper/Firmware/DSM/All/enu/Synology_RAID_F1_WP.pdf

on synology systems that is built into the kernel, and as we use syno's original kernel, some units lack that support
https://xpenology.com/forum/topic/61634-dsm-7x-loaders-and-platforms/#comment-281190

you can also see if it is supported by looking at the state of mdadm with "cat /proc/mdstat"; "Personalities" tells you which raid types are possible. in general don't expect a consumer unit to be able to use raid f1, but there is a list from synology
https://kb.synology.com/en-ro/DSM/tutorial/Which_Synology_NAS_models_support_RAID_F1

-
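for the "cat /proc/mdstat" check on raid types mentioned above, a short sketch that reads the "Personalities" line and lists the raid types the running kernel offers. the function names are made up, and the sample text is illustrative rather than copied from a real unit; in particular the exact tag printed for raid f1 (`raidF1` here) is an assumption.

```python
import re
from pathlib import Path


def md_personalities(mdstat_text):
    """Return the raid personalities listed in /proc/mdstat content, e.g. ['raid1', 'raid5']."""
    for line in mdstat_text.splitlines():
        if line.startswith("Personalities"):
            return re.findall(r"\[([^\]]+)\]", line)
    return []


def supports(mdstat_text, level):
    """Case-insensitive check whether a given personality is available."""
    return level.lower() in (p.lower() for p in md_personalities(mdstat_text))


# illustrative sample only (assumed format for the raid f1 tag)
SAMPLE = (
    "Personalities : [raid1] [raid6] [raid5] [raid4] [raidF1]\n"
    "md0 : active raid1 sda1[0] sdb1[1]\n"
)

if __name__ == "__main__":
    mdstat = Path("/proc/mdstat")
    text = mdstat.read_text() if mdstat.exists() else SAMPLE
    print(md_personalities(text))
```

only the first line is parsed on purpose; the per-array lines also contain bracketed member indexes like `sda1[0]` that are not personalities.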

i came up with this (should survive updates?)

create file: /etc/apparmor/usr.syno.bin.synowedjat-exec

    /usr/syno/bin/synowedjat-exec {
      deny network,
      deny capability net_raw,
      deny capability net_admin,
    }

create file: /usr/local/bin/apparmor_add_start.sh (needs to be executable)

    #!/bin/sh
    apparmor_parser -r /etc/apparmor/usr.syno.bin.synowedjat-exec

create file: /usr/local/bin/apparmor_add_stop.sh (needs to be executable)

    #!/bin/sh
    # apparmor_parser -R /etc/apparmor/usr.syno.bin.synowedjat-exec
    # no plan to remove that as long as the system is running

create file: /usr/local/lib/systemd/system/apparmor_add.service

    # Service file for apparmor_add
    # copy this file to /usr/local/lib/systemd/system/apparmor_add.service
    [Unit]
    Description=Add AppArmor profile on boot

    [Service]
    Type=oneshot
    ExecStart=/bin/bash /usr/local/bin/apparmor_add_start.sh
    ExecStop=/bin/bash /usr/local/bin/apparmor_add_stop.sh
    RemainAfterExit=yes
    Restart=no

    [Install]
    WantedBy=syno-low-priority-packages.target

test it:

"systemctl start apparmor_add" to start it now

check with "aa-status" that the new apparmor profile is active -> /usr/syno/bin/synowedjat-exec

"systemctl enable apparmor_add" to enable it at system start. this should result in: "Created symlink from /etc/systemd/system/syno-low-priority-packages.target.wants/apparmor_add.service to /usr/local/lib/systemd/system/apparmor_add.service."

reboot and check again with "aa-status" -> /usr/syno/bin/synowedjat-exec

-

You have 8 disks, Will you create 1 raid array or 2 arrays?

IG-88 replied to Marawan's question in General Questions

it's way easier than you think: you sum up the space of all disks in your array and subtract the largest disk in case of shr-1; for shr-2 you subtract the two largest disks. a raid5 or raid6 (same disk size) would be a sub case of this.

since dsm 7 all created volumes are shr1 or 2 if you look closely. shr was always mdadm software raid sets (same size partitions of disks put together as a raid set), "glued" together by LVM2 into a volume. in older dsm versions you could leave out LVM2 if you only had same size disks.

mainly it's the question of how many disks max you accept for 1 disk of redundancy. if you are willing to have 8 disks as raid5 then you "lose" one disk; if you create two raid5 sets it will be two disks for redundancy. if you take it as not more than 6 disks in a raid5, then with 8 disks there is the need for raid6, and in that scenario there is not much difference to two raid5 sets. raid6 has the edge, as it does not matter which disks fail if two disks fail; with two raid5 sets of 4 disks each, if two disks in the same 4 disk set fail ...

also it's more convenient to have just one volume, no juggling of space between two volumes. so that's two small arguments for putting all disks in raid6 (you can replace raid5 with shr1 and raid6 with shr2 here; with shr there can be some differences in what's "lost" for redundancy depending on the size difference of the biggest disks).

that might have been the weaker point of raid6, as it needs more writing with two redundancies, but there is also the argument that having more disks is better for splitting the needed IOPS and transfer between more disks (older phrase: more spindles, more speed; it gets clearer if you think of a raid0 made of raid1 sets aka raid10).

there is also some correlation with the number of disks in a raid5 set: sets of 3 or 5 have better performance, as the number of disks taking the data (not redundancy) is 2 and 4 in these cases. a 9 disk raid5 would be next in this line (8 disks taking the data, so it's about two to the power of n: 2, 4, 8), but that's kind of too many disks for just one redundancy, so a 10 disk raid6 would be in that place.

see my comparison above: the odds in case of a two disk failure are better for a raid6 of 8 disks than for two raid5 sets of 4 disks each. with the 8 disk raid6 any two disks can fail, that's not the case with two times raid5.

there are more things you could take into account when building a system and deciding about redundancy, and there are a lot more options to handle this with a normal linux/bsd system than dsm can offer; dsm is pretty much limited to mdadm raid. depending on how important these things are, there can be other solutions than dsm with its mdadm, like systems doing ZFS or UnRAID.

examples of other things that might be important: constant guaranteed write speed, IOPS, scaling to a larger number of disks, caching, higher level of redundancy, ...

-
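the capacity rule and the raid6 vs. two raid5 sets comparison from the post above can be put into numbers. this is only a sketch: `shr_usable` applies the "sum minus the largest one or two disks" rule of thumb as stated (real shr results can differ for very mixed disk sizes, especially with shr-2), and `fatal_pairs` simply counts which two disk failure combinations would lose a raid set. both function names are made up for this example.

```python
from itertools import combinations


def shr_usable(disk_sizes_tb, redundancy=1):
    """Rule of thumb: total space minus the largest 1 (shr-1) or 2 (shr-2) disks."""
    if redundancy not in (1, 2):
        raise ValueError("redundancy must be 1 or 2")
    if len(disk_sizes_tb) <= redundancy:
        raise ValueError("need more disks than redundancy disks")
    ordered = sorted(disk_sizes_tb)
    return sum(ordered[:-redundancy])


def fatal_pairs(set_sizes, tolerated_failures):
    """Count two disk failure combinations that destroy at least one raid set.

    set_sizes: number of disks in each raid set, e.g. [4, 4] for two raid5 sets.
    tolerated_failures: failed disks a single set survives (1 for raid5, 2 for raid6).
    Returns (fatal combinations, all combinations).
    """
    disks = [(s, i) for s, size in enumerate(set_sizes) for i in range(size)]
    pairs = list(combinations(disks, 2))
    fatal = 0
    for pair in pairs:
        failed_per_set = [sum(1 for d in pair if d[0] == s) for s in range(len(set_sizes))]
        if any(count > tolerated_failures for count in failed_per_set):
            fatal += 1
    return fatal, len(pairs)


if __name__ == "__main__":
    eight_disks = [4] * 8  # 8 x 4TB as an example
    print(shr_usable(eight_disks, 1))  # 28 (raid5 / shr-1)
    print(shr_usable(eight_disks, 2))  # 24 (raid6 / shr-2)
    print(fatal_pairs([8], 2))         # (0, 28): one 8 disk raid6
    print(fatal_pairs([4, 4], 1))      # (12, 28): two 4 disk raid5 sets
```

both layouts give up two disks of space, but with two raid5 sets 12 of the 28 possible two disk failures hit the same set, while the single raid6 survives all 28.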

https://www.synology.com/de-de/dsm/7.1/software_spec/synology_photos
"... HEIC files and Live Photos require the Advanced Media Extensions package in order to be displayed ..." aka AME
https://xpenology.com/forum/topic/30552-transcoding-and-face-recognitionpeople-and-subjects-issue-fix-in-once/?do=findComment&comment=441007
https://xpenology.com/forum/topic/65643-ame-30-patcher/

-

https://xpenology.com/forum/topic/8057-physical-drive-limits-of-an-emulated-synology-machine/?do=findComment&comment=122819

-

Fujitsu Q556/2 Mini PC - will XPEnology install work? Suitable for me needs?

IG-88 replied to ThePhantom79's question in General Questions

dva1622 is device tree. i guess that goes with the platform: dva1622 is geminilake like the 920+ and has more in common with the 920+ than with dva3221 (denverton). the denverton platform dates back to 2018, and device tree came up around 2020 and is used in newer platforms like v1000. older ones like broadwell/broadwellnk (ds3617 or ds3622) are still old style.

-

Fujitsu Q556/2 Mini PC - will XPEnology install work? Suitable for me needs?

IG-88 replied to ThePhantom79's question in General Questions

that was for "old" style disk handling (sataportmap/diskidxmap, like 918+ or 3622). it might not be tested yet with device tree based disk handling.

-

I need help installing xpenology 7.1 on hp ml110 G10

IG-88 replied to Ozadozky's topic in The Noob Lounge

besides using another usb flash drive, you could try arpl as loader
https://github.com/fbelavenuto/arpl/releases

if the hardware is new and untested, try booting a rescue/live linux to see if it runs without problems.

-

Fujitsu Q556/2 Mini PC - will XPEnology install work? Suitable for me needs?

IG-88 replied to ThePhantom79's question in General Questions

no, and lately more inclined to yes
https://xpenology.com/forum/topic/67961-use-nvmem2-hard-drives-as-storage-pools-in-synology/
it's a feature that's about to come out (not sure why synology is not making it available as a general feature for all models), but under normal conditions and dsm 7.1 it's usually a no; its only use in dsm would be as a cache drive.

that's not supported as a normal volume under normal conditions. it will appear as an external usb single disk, with no integration into a raid set in a normal volume (for good reasons).

that scenario is usually used when you want to use VM's a lot in general (kvm inside dsm, the VMM package, is not that good), or when you have way more cpu cores/threads than baremetal dsm could handle, or when you want to use m.2 nvme without the dsm typical constraints (m.2 nvme as virtual ssd in a vm, that way dsm will use the nvme as data volume without any tweaking), or when you want to use a hardware raid that is not usable in dsm (you can have a single virtual disk in dsm that sits on a raid set in the hypervisor).

easier install in a vm with dva's? not sure why, i use my dva1622 baremetal.

-

not sure if you want to use dsm baremetal or as a vm. as baremetal there might be two issues:

1. cpu number
https://ark.intel.com/content/www/de/de/ark/products/64616/intel-xeon-processor-e52430-15m-cache-2-20-ghz-7-20-gts-intel-qpi.html
6 cores / 12 threads x2 = 12/24. not all dsm platforms support these high counts. i'd suggest ds3622 or ds3617 (sa6400 might also be a thing when available; it has kernel 5 and seems to perform better on the same hardware).

2. P4xx - hardware raid is not supported in general, dsm is built around having single disks. afaik the P420 can be switched into this mode. most dsm 7.1 loaders will have a hpsa.ko driver, but be aware that it might not work; lots of trouble was seen with that in dsm 6.2 and it never really worked. so try it in single disk mode and if it does not work, replace it. i'd suggest replacing it with a lsi controller in IT mode: same mechanical connections to the backplane and <100$. lsi 9211-8i is the name of the "original", but any LSI SAS2008 or 2108 should work nicely with ds3622 or ds3617.

or switch to a hypervisor and use dsm as a vm. that removes the troubles above, as you can add cpu's to the vm at will and storage can be handed to the vm as a "single" virtual disk (raidX is implemented on the hypervisor level, so you don't need to care about raid in dsm, just use a single disk per volume).

-

What model to use for a i5-1240P with transcoding support

IG-88 replied to Mirano's question in General Questions

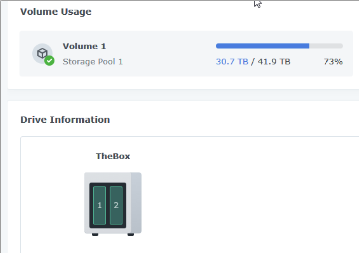

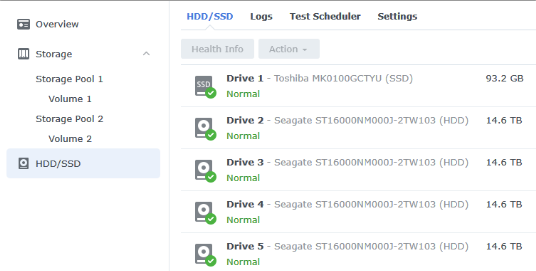

if the driver in arpl is not that one, it's still possible to replace the i915.ko file with the one from that thread.

i've seen setups like an old gaming board/cpu going into a nas, and those are often high power consumption cpu's (no one cares about that in a gaming setup, just use a potent cooler and you are done).

did you look at the list of disks? (the graphic only shows the original state of the housing with its possible disks.) if the disks are listed under hdd you should be able to add them. depending on the hardware configuration it might be necessary to renew the device tree files to get the disks; going into the loader and using build loader might be needed. there is also an option to show the disks detected in arpl (in advanced options?). if they are not detected at that point it's not going to work in dsm.

-

Fujitsu Q556/2 Mini PC - will XPEnology install work? Suitable for me needs?

IG-88 replied to ThePhantom79's question in General Questions

if the nvme is cache then it's just read cache, no use in case of backup.

afair all 2.5" hdd's of 3TB and above use shingled recording, not much use if you want to write bigger amounts of data at 1G speed (the cmr buffer area will be full at some point and the disk will fall back to native smr speed aka ~25-30MB/s).

just wanted to make you aware of that limit. if you did not read about that and switch to other hardware last minute (often the old hardware is used for backup and new hardware as main, and your old microserver can only have 2nd/3rd gen cpu's) you might run into trouble. also, the 4th gen is about bare functioning like booting into the OS; face recognition in photo station or AI stuff might need a newer gen. can't remember exactly, but i think it was 6th gen as minimum for that.

-
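to get a feeling for the smr fallback speed mentioned above (~25-30MB/s once the cmr buffer area is full), a tiny calculation of how long a large write takes at a sustained rate. the 1 TB example size and the ~110 MB/s figure used as a stand-in for what a 1G link roughly delivers are assumptions for illustration, not numbers from the post.

```python
def transfer_hours(size_tb, rate_mb_per_s):
    """Hours needed to write size_tb terabytes (decimal, 1 TB = 1,000,000 MB) at a sustained rate."""
    if rate_mb_per_s <= 0:
        raise ValueError("rate must be positive")
    return size_tb * 1_000_000 / rate_mb_per_s / 3600


if __name__ == "__main__":
    size_tb = 1.0  # example backup size, assumption
    for label, rate in (("smr low", 25), ("smr high", 30), ("1G link (assumed)", 110)):
        print(f"{label}: {transfer_hours(size_tb, rate):.1f} h")
```

at smr speed a 1 TB backup run is in the range of 9 to 11 hours, so the disk and not the 1G nic becomes the limit once the buffer area is exhausted.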

Fujitsu Q556/2 Mini PC - will XPEnology install work? Suitable for me needs?

IG-88 replied to ThePhantom79's question in General Questions

low default count on cam's, and you can't buy a license for use with xpenology
https://xpenology.com/forum/topic/13597-tutorial-4-camera-on-surveillance-station-legally/
or use a DVA1622/3221 unit; they come with an 8 cam license by default but need newer cpu's (with the MOVBE feature, like intel 4th gen cpu's).

install: yes, and as it's a 7th gen cpu the limit mentioned above about movbe should be no issue. dva1622 would be the way to go with that hardware.

in case of backup i can't answer as i'm not using syno's active backup, but as it is the same as on original units you can read about it anywhere, not just here in the forum.

in general the speed will be limited to what a 1G nic can do, and with that kind of pcie-less hardware there will be no option for a 2.5G/5G or 10G nic or more disks (more disks usually mean more speed, at least to a certain degree; i have 4 x 16TB and can use ~450MB/s, and before that 12 x 4TB only had slightly more).

afair dsm as xpenology tends to run the cpu at a higher level than the idle on other systems would imply. you might read further about that if it's important
https://xpenology.com/forum/topic/19846-cpu-frequency-scaling-for-ds918/

-

3615/17 don't come with the i915 driver needed for intel qsv, and there are parts missing in the kernel so you can't just compile additional drivers (like it's done for network and storage drivers). any platform with a built-in i915 driver needs at least a haswell (4th gen) cpu, and the microserver gen8 takes 2nd/3rd gen cpu's.

the only ray of light might be sa6400 (the only unit with kernel 5.x, epyc based), where Jim Ma added an i915 driver by also adding the things missing for i915 in the kernel config
https://xpenology.com/forum/topic/68067-develop-and-refine-sa3600broadwellnk-and-sa6400epyc7002-thread/

1st, i can't say for sure if sa6400 will need 4th gen; it's still a beta release (i did not test that and i can't remember seeing anyone writing about it).

2nd, in theory someone could apply the same technique to other, future 5.x based units. dsm 7.2 will keep all kernels as they are now, so we are talking about new units with a new platform in 2023 and dsm 7.3 in 2024. so in 2024 there might be a ds3622 based on dsm 7.3 and kernel 5.x that gets the same treatment sa6400 got now, but that's a lot of time and if's (we don't know if dsm 7.3 will bring kernel 5.x to most units, as was done with 7.0 where most units changed from 3.x to 4.x; also 7.3 is a placeholder, it might be dsm 8.0 next year instead).

besides trying jim ma's sa6400 arpl loader on "older" cpu's there is nothing that could be done atm, at least with dsm/xpenology. you could switch to open media vault and have i915 working immediately. it's just creating an (additional) boot disk (or usb) and replacing the xpenology usb loader with that; the data raid partitions will be recognized and should be usable ootb (there might be a problem with volume naming but that can be solved)
https://xpenology.com/forum/topic/42793-hp-gen8-dsm-623-25426-update-3-failed/#comment-200475

you could remove the OMV boot media at any time, re-add the xpenology usb loader and have DSM back. the dsm partitions (1st and 2nd on every disk) will not be touched, so dsm with its config will stay put until it's used again.