NeoID

Everything posted by NeoID

-

What I mean is that I don't understand how jun is able to override synoinfo.conf and make the change survive upgrades (I've never had any issues with it, at least), whereas when I change /etc.defaults/synoinfo.conf myself, the change may or may not survive an upgrade. It sounds to me like I should put "Maxdrives=24" (or whatever) somewhere else, in another file that makes sure synoinfo.conf always has the correct value. For example, I see that there's also /etc/synoinfo.conf, which contains the same values. Is that file persistent, and does it override /etc.defaults/synoinfo.conf? By the way, it seems I'm still missing something regarding the sata_args, as I now get the following message when trying to log into my test VM: "You cannot login to the system because the disk space is full currently. Please restart the system and try again." I have never seen this error message before, and the VM obviously didn't run out of disk space just sitting idle for 5 hours. It works again after a reboot, but something still isn't right. I'll update this post once I've figured out what's causing it.
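To check whether /etc/synoinfo.conf and /etc.defaults/synoinfo.conf actually agree (for example right after an upgrade), a small comparison helps. This is a minimal Python sketch, assuming both files consist of `key="value"` lines and that the drive-count key is named `maxdisks` (the post calls it "Maxdrives"). It only reports differences; it says nothing about which of the two files DSM treats as authoritative.

```python
import re
from typing import Dict, Iterable, Optional, Tuple

# Matches lines such as: maxdisks="16"
_LINE = re.compile(r'^[ \t]*([A-Za-z0-9_]+)[ \t]*=[ \t]*"(.*)"[ \t]*$')


def parse_synoinfo(text: str) -> Dict[str, str]:
    """Collect key="value" pairs; comments and any other lines are skipped."""
    values: Dict[str, str] = {}
    for line in text.splitlines():
        m = _LINE.match(line)
        if m:
            values[m.group(1)] = m.group(2)
    return values


def diff_synoinfo(defaults_text: str, live_text: str,
                  keys: Optional[Iterable[str]] = None
                  ) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return {key: (value_in_defaults, value_in_live)} for keys whose values differ.

    None means the key is missing from that file. If `keys` is given, only
    those keys are compared; otherwise every key found in either file is.
    """
    defaults = parse_synoinfo(defaults_text)
    live = parse_synoinfo(live_text)
    candidates = keys if keys is not None else sorted(defaults.keys() | live.keys())
    return {k: (defaults.get(k), live.get(k))
            for k in candidates if defaults.get(k) != live.get(k)}


def diff_synoinfo_files(defaults_path: str = "/etc.defaults/synoinfo.conf",
                        live_path: str = "/etc/synoinfo.conf",
                        keys: Optional[Iterable[str]] = None):
    """Read both files (paths as mentioned in the post) and compare them."""
    with open(defaults_path) as a, open(live_path) as b:
        return diff_synoinfo(a.read(), b.read(), keys)
```

Running `diff_synoinfo_files(keys=["maxdisks", "internalportcfg", "usbportcfg", "esataportcfg"])` before and after an upgrade would show whether either copy was reset.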

-

I see... How will this work in terms of future upgrades? I know Synology sometimes overwrites synoinfo.conf. Assuming this implementation survives upgrades, is there a way to change it or make it configurable through grub? I currently have two VMs with two 16-port LSI HBAs, but it would be much nicer to merge them into one with 24 drives. However, I'm not a fan of changing synoinfo.conf and risking a crashed volume if it's suddenly overwritten. I guess I could make a script that runs on boot and makes sure the variable is set before DSM starts, but it would be much nicer if @jun would take the time to implement this or suggest a "best practice" method of changing maxdrives and the two other related (USB/eSATA) variables.
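The boot-time script idea could look roughly like the following minimal Python sketch, which has not been tested on DSM. It assumes the keys are named `maxdisks`, `internalportcfg`, `esataportcfg` and `usbportcfg`, that each `*portcfg` value is a bitmask with one bit per slot, and that internal, eSATA and USB slots occupy consecutive bit ranges starting at bit 0. That layout is a community convention rather than anything Synology documents, so compare it against your own file first, and check that a Python interpreter is available at the point in boot where this would run.

```python
import re
from typing import Dict


def port_masks(internal: int, esata: int = 0, usb: int = 0) -> Dict[str, str]:
    """Build slot settings, ASSUMING one bit per slot and consecutive bit
    ranges: internal slots from bit 0, then eSATA, then USB."""
    internal_mask = (1 << internal) - 1
    esata_mask = ((1 << esata) - 1) << internal
    usb_mask = ((1 << usb) - 1) << (internal + esata)
    return {
        "maxdisks": str(internal),
        "internalportcfg": hex(internal_mask),
        "esataportcfg": hex(esata_mask),
        "usbportcfg": hex(usb_mask),
    }


def set_key(text: str, key: str, value: str) -> str:
    """Replace the first key=... line in `text`, or append key="value" if absent."""
    line = f'{key}="{value}"'
    pattern = re.compile(r'^[ \t]*' + re.escape(key) + r'[ \t]*=.*$', re.MULTILINE)
    if pattern.search(text):
        # A function replacement avoids backslash escapes being interpreted.
        return pattern.sub(lambda _m: line, text, count=1)
    if text and not text.endswith("\n"):
        text += "\n"
    return text + line + "\n"


def enforce(path: str, values: Dict[str, str]) -> bool:
    """Set every key in `values`; write the file back only if something changed.
    Returns True when the file was rewritten."""
    with open(path) as f:
        original = f.read()
    updated = original
    for key, value in values.items():
        updated = set_key(updated, key, value)
    if updated != original:
        with open(path, "w") as f:
            f.write(updated)
    return updated != original
```

For 24 internal slots plus, say, 4 USB slots (an arbitrary number), `port_masks(24, usb=4)` yields `internalportcfg` `0xffffff`, `esataportcfg` `0x0` and `usbportcfg` `0xf000000`. A boot script would then call `enforce()` on both /etc.defaults/synoinfo.conf and /etc/synoinfo.conf. Because it only writes when a value differs, running it on every boot is harmless.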

-

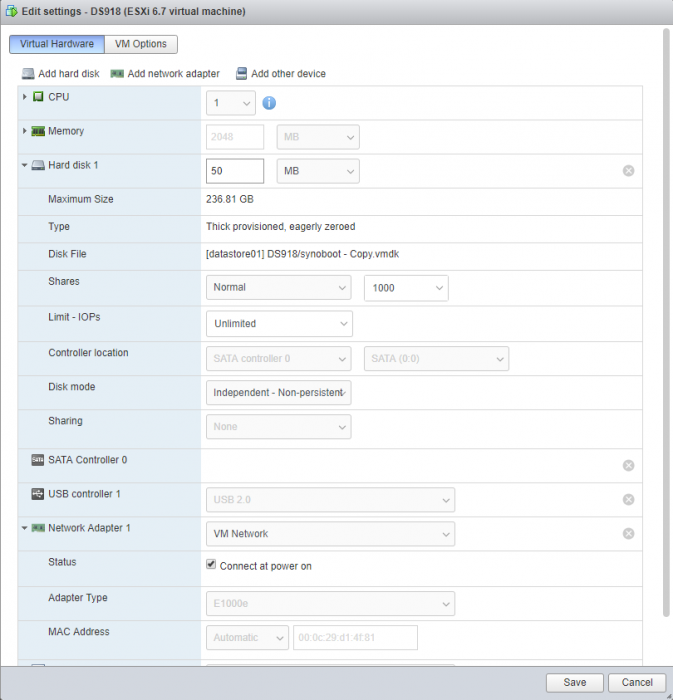

I'll answer my own post, as it might help others or at least shed some light on how to configure the sata_args parameters. As usual, I modified grub.cfg and added my serial and MAC address before commenting out all menu items except the one for ESXi. When booting the image, Storage Manager gave me the setup shown above, which is far from ideal. The first disk is the bootloader attached to virtual SATA controller 0, and the other two are my first and second drives connected to my LSI HBA in pass-through mode. To hide the bootloader, I added DiskIdxMap=1F to the sata_args. This pushed the bootloader beyond the 16-disk boundary, leaving only the two data drives. To move them to the first slots, I also added SasIdxMap=0xfffffffe. I tested multiple values, decreasing the value one step at a time until all drives were aligned correctly. The reason you see 16 drives is that maxdrives in /etc.defaults/synoinfo.conf was set to 16. I'm not exactly sure why it's set to that value and not 4 or 12, but maybe it's adjusted based on your hardware/SATA ports and the fact that my HBA has 16 ports? No idea about that last part, but it seems to work as intended:

set sata_args='DiskIdxMap=1F SasIdxMap=0xfffffffe'
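The arithmetic behind those two values can be made explicit. The following minimal Python sketch assumes an interpretation commonly described for Jun's loader, not an official specification: DiskIdxMap holds one hex byte (two digits) per SATA controller giving that controller's first slot index, and SasIdxMap is a 32-bit value read as a signed offset applied to SAS/HBA disks. Under that reading, 0x1F is slot index 31, outside slots 0-15, and 0xfffffffe is -2, which fits the observation that stepping the value down moved the HBA drives toward the first slots.

```python
from typing import Dict, List


def parse_sata_args(args: str) -> Dict[str, str]:
    """Split a sata_args string like 'DiskIdxMap=1F SasIdxMap=0xfffffffe' into a dict."""
    return dict(item.split("=", 1) for item in args.split())


def disk_idx_map(value: str) -> List[int]:
    """ASSUMED meaning: one hex byte (two digits) per SATA controller,
    giving the zero-based first slot index of each controller."""
    if len(value) % 2:
        raise ValueError("DiskIdxMap must have an even number of hex digits")
    return [int(value[i:i + 2], 16) for i in range(0, len(value), 2)]


def sas_idx_offset(value: str) -> int:
    """ASSUMED meaning: a 32-bit two's-complement slot offset for SAS disks."""
    raw = int(value, 0) & 0xFFFFFFFF  # base 0 accepts '0x...' as well as decimal
    return raw - (1 << 32) if raw & 0x80000000 else raw


args = parse_sata_args("DiskIdxMap=1F SasIdxMap=0xfffffffe")
print(disk_idx_map(args["DiskIdxMap"]))   # [31]: first SATA slot index 31, past slots 0-15
print(sas_idx_offset(args["SasIdxMap"]))  # -2: SAS disks shifted two slots back
```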

-

This is how it looks right after a new install with two WD Red drives attached to my LSI HBA in pass-through mode. I can see the bootloader (configured as SATA) taking up the first slot. I haven't added any additional SATA controller to the VM (I have removed everything I can). For some reason there are four empty slots before the two drives on my HBA show up. I guess this has something to do with the SataPortMap variable, but I can't find much info on it. Considering that it shows me 16 slots (more than the 4 or 12 of the original Synology models) without modifying any system files, I guess I can somehow support all 16 drives, or even pass through two HBAs to get 24 drives?

-

Is anyone running 1.04b on ESXi with an HBA in pass-through? I would love some input on which settings to change in the grub config so that only the drives connected to the HBA are shown and the bootloader itself is ignored. Is it now possible to increase the number of drives by changing only the settings in the grub file? I'm using an LSI 9201-16i, so 16 ports.

-

Does anyone know what syno_hdd_powerup_seq and syno_hdd_detect do? They were set to 0 in the older bootloaders, but now they're set to 1 by default. Has anyone using ESXi successfully migrated from DS3615xs to DS918+? Are there any particular VM settings that should be changed? I'm still struggling to get the latest loader to list all my disks (using an LSI HBA in pass-through mode). Can anyone explain what the sata_args are and how to figure out which values to use?

-

Pretty sure. I'm also running the 3615 image without issues, but I couldn't get the DS918 image to work until I tried it on a 6th-gen Intel CPU. I guess the boot image requires CPU instructions that aren't present on earlier processors.

-

I could be wrong, but I think you'll need a newer CPU, something from the same generation as the Intel Celeron J3455 (or newer).

-

What CPU do you have? It doesn't show up on the network on my i7-4770, but it works on a newer 6th-gen Intel CPU.

-

Has anyone got it to work on ESXi 6.7 yet? I've added the boot image as a SATA drive and tried both the e1000e and the VMX network adapters. I've tried both BIOS and EFI, but I can't find it on the network, neither with Synology Assistant nor by looking at the router. Any tips on what I might be missing? I can see the splash screen at the beginning, but then the CPU spikes to 100% and stays there. In case it matters, I'm using an i7-4770 in my ESXi server.

-

Will there also be an updated loader for the 3615xs? I assume the DS918+ only supports 4 drives without modding system files?

-

My bad, I already had it set to e1000e myself... 😛

-

How did you set the e1000e adapter? I actually wasn't aware there were other options... I'm also on ESXi, and the network speed is way worse on 6.2 than on 6.1, so it would be fun to try another adapter to see if that helps. According to VMware, it's not possible to set this from the UI (for Linux guests): https://kb.vmware.com/s/article/1001805 Did you just set the guest OS of the VM to Windows?
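Besides changing the guest OS type, the adapter type can be set by editing the VM's .vmx file directly while the VM is powered off. For the first adapter, the key is `ethernet0.virtualDev`, with values such as `e1000e` or `vmxnet3`. Below is a minimal Python sketch of that text edit. It assumes the adapter in question is `ethernet0` and that you keep a backup of the original .vmx; it only rewrites text and does nothing on the ESXi side.

```python
import re


def set_vmx_option(vmx_text: str, key: str, value: str) -> str:
    """Set `key = "value"` in .vmx text: replace the first matching line,
    or append a new line if the key is not present."""
    line = f'{key} = "{value}"'
    # [ \t]* instead of \s* so the match never spans across line breaks.
    pattern = re.compile(r'^[ \t]*' + re.escape(key) + r'[ \t]*=.*$', re.MULTILINE)
    if pattern.search(vmx_text):
        return pattern.sub(lambda _m: line, vmx_text, count=1)
    if vmx_text and not vmx_text.endswith("\n"):
        vmx_text += "\n"
    return vmx_text + line + "\n"
```

Usage would be along the lines of reading the .vmx, calling `set_vmx_option(text, "ethernet0.virtualDev", "e1000e")`, and writing the result back before powering the VM on again.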

-

It's done when it's done; don't ask for ETAs. Nice work, @jun. I would love to read more about your work (like a tech talk on how to build the bootloader).

-

- Outcome of the update: UNSUCCESSFUL
- DSM version prior update: DSM 6.1.4 15217 Update 5
- Loader version and model: Jun's Loader v1.02b - DS3615xs
- Installation type: ESXi 6.5
- Additional comments: Took a long time for the NAS to get its original IP back. Had to use Synology Assistant. DOCKER SEEMS BROKEN! JUST WSOD!

-

Would appreciate it if anyone else could try the app on Android.

-

Has anyone managed to get the Android app to work when using a Let's Encrypt certificate? All the apps work fine except Drive.

-

I've been looking at dmesg and /proc/mdstat for the last couple of days. Today the rebuild did a second pass, and now everything seems to be clean and working again! I guess my conclusion is: make sure all cables are properly seated and keep your HBA card cool.

-

I'm afraid you're right about the rebuild taking only a single pass and that something is wrong with my array... -_- Do you use a hypervisor or run bare metal? I have had these issues even on EXT4, so I doubt it's related to the file system.

-

I also use 3TB WD Red drives (10x) in RAID 6. First of all, I powered down my system and tested the drive on another machine. Since it was fine, I didn't think the crash was caused by it. To make sure, I replaced the drive with another WD Red 3TB I had lying around. This drive also "crashed" according to DSM after rebuilding for a day. I started noticing a pattern here: every time I started from scratch, DSM complained about the same disk bay. The reason I didn't notice this sooner is that DSM sometimes changes the order of the disks (I don't remember whether it happens on each boot or on each install of DSM). However, it was always the same bay causing issues. I finally removed the drive from the bay and connected it directly via SATA. For now everything seems to rebuild just fine (even though I don't see why it's currently rebuilding for the second time without any errors in between). I have also noticed that the HBA gets very hot when rebuilding (and under heavy load), so that may also have something to do with this issue... So I 3D-printed a bracket and mounted a 120mm fan on top of the card. For now everything works smoothly, so I just hope it doesn't restart the rebuild a third time; otherwise I'd suspect that DSM somehow isn't able to save the state of the volume...

-

A bad cable crashed my BTRFS volume the other day. I found the issue after a while and started the rebuild process after replacing the bad SATA cable. The rebuild took a few days to complete, but once it did, it restarted from 0% again. I have not received any other emails or notifications from DSM saying that anything is abnormal. Is it normal for the rebuild process to need multiple passes to complete? I'm on ESXi, and I mounted the boot drive as read-only once everything was up and running smoothly. At first I thought the rebuild restarted because its state couldn't be written back to the boot drive (I don't think it is), but the drive has been read-only since before the crash, so I don't think it's related to this problem. Has anyone been in this situation before and knows how many passes the rebuild process needs? I would never have believed it requires more than one, but who knows...
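One way to answer "how many passes" empirically is to sample the rebuild progress periodically and log every point where it drops back. Here is a minimal Python sketch, assuming you already collect `(timestamp, percent)` samples for a single md device, for example from /proc/mdstat, so that progress on different arrays isn't mixed up. The 5-point threshold is an arbitrary choice to ignore small fluctuations.

```python
from typing import Iterable, List, Optional, Tuple


def find_restarts(samples: Iterable[Tuple[float, float]],
                  min_drop: float = 5.0) -> List[Tuple[float, float, float]]:
    """Return (timestamp, previous_percent, new_percent) for every point where
    progress fell by at least `min_drop` points since the previous sample,
    i.e. where the operation appears to have started over."""
    restarts: List[Tuple[float, float, float]] = []
    prev: Optional[float] = None
    for ts, pct in samples:
        if prev is not None and prev - pct >= min_drop:
            restarts.append((ts, prev, pct))
        prev = pct
    return restarts
```

With samples taken every hour, the length of the returned list is the number of restarts observed for that device.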

-

I was about to make a video tutorial on how to upgrade to 6.1 with 1.02b after over a month of successful testing, when suddenly one drive crashed. The SMART values are fine and the disk is OK, so it's a false positive. Upon rebuild, another one bit the dust... also a false positive. I still suspect the 9201-16i PCI card has some issues with XPenology, but the weird thing is that this only happens every 1-2 years. It could also be stress-related, since this time I started a scrub hours before it happened. I have plenty of cooling and have replaced all wires and drives, yet the same thing happens again a few months later. Since I'm on ESXi, I don't think anything apart from the PCI controller, wires, drives and bays should matter, but I'm completely clueless on this one. Has anyone else had sudden crashes for no apparent reason?

-

Thanks, but I've figured it out. The problem was that I had to enable CPU hot plug in ESXi. Everything is working great now. I have the 9201-16i myself, and it's great once you know about that setting.

-

I know this was required before, but what's the benefit of adding the serial port? Just debugging information and being able to do low-level stuff if SSH is down/disabled?
