C-Fu

Member, 90 posts

Everything posted by C-Fu

-

I don't know for sure, but most likely the difference is mainly in the software/package versions.

-

Point 2: Where do you get the 6.2.3 PowerButton SPK?

-

Yeah, but since it's a very old email with tons of (useless) logins to old, unused sites like Dropbox and LinkedIn, for all intents and purposes it's irrelevant to xpenology.com IMO, which also uses a generated password. Anyway, after rereading, Edge told me about a leaked (generated) password, not a leaked site. So I suppose I put it wrongly: perhaps xpenology.com didn't get hacked, just my account's particular password...? Oh well. All is good 😁 Sorry for the heart attack, everybody!

-

Today Edge alerted me to this. Luckily the password is a generated, throwaway one, but it's still a bit concerning. Is this true?

-

Does anybody know if something like this exists for 6.2.3? "QEMU Guest Agent for FreeBSD 12.1 - DSM 6.x - XPEnology Community". I sorely miss this feature. Basically, I need the SPK for the XPEnology QEMU guest agent on 6.2.3.

-

https://gofile.io/d/HHbXQP - DS3615

-

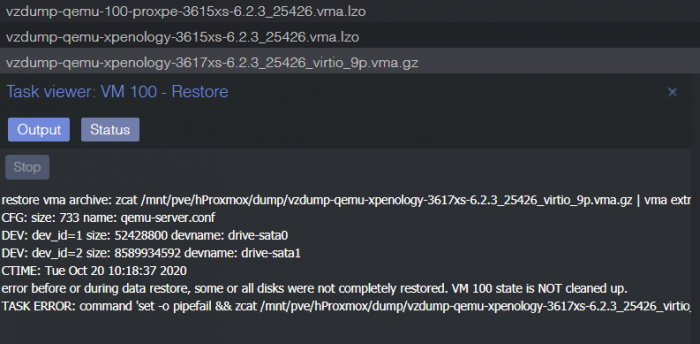

EDIT: LOL, SOLVED. I DIDN'T SELECT THE RESTORE LOCATION 🤦♂️

I tried to restore, but received this error:

    restore vma archive: zcat /mnt/pve/hProxmox/dump/vzdump-qemu-xpenology-3617xs-6.2.3_25426_virtio_9p.vma.gz | vma extract -v -r /var/tmp/vzdumptmp24721.fifo - /var/tmp/vzdumptmp24721
    CFG: size: 733 name: qemu-server.conf
    DEV: dev_id=1 size: 52428800 devname: drive-sata0
    DEV: dev_id=2 size: 8589934592 devname: drive-sata1
    CTIME: Tue Oct 20 10:18:37 2020
    error before or during data restore, some or all disks were not completely restored. VM 100 state is NOT cleaned up.
    TASK ERROR: command 'set -o pipefail && zcat /mnt/pve/hProxmox/dump/vzdump-qemu-xpenology-3617xs-6.2.3_25426_virtio_9p.vma.gz | vma extract -v -r /var/tmp/vzdumptmp24721.fifo - /var/tmp/vzdumptmp24721' failed: storage 'local2' does not exist

The 3615xs image that I got works fine.

-

I just switched from a 750 W PSU to a fairly new 1600 W PSU (a few days' use at most), so I don't believe the PSU is the problem. When I get back on Monday, I'll see if I can replace the whole system (I have a few unused motherboards), cables and whatnot, reusing the SAS card if it's not likely to be the issue, and maybe reinstall XPEnology. Would that be a good idea?

-

Damn, you're right. Usually when something like this happens... is there a way to prevent the SAS card from doing this, like a setting or a BIOS update? Or does this mean the card is dying? If I take out, say, sda (the SSD) and put it back in, will the assignments change back? Or the same with whichever drive is connected to the SAS card. Sorry, I'm just frustrated, but I still want to understand.

-

Yeah, it is. Slow, but working.

    # cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
    md4 : active raid5 sdl6[0] sdn6[2] sdm6[1]
          11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/3] [UUU__]
    md2 : active raid5 sdb5[0] sdk5[12] sdo5[11] sdq5[9] sdp5[8] sdn5[7] sdm5[6] sdl5[5] sdf5[4] sde5[3] sdd5[2] sdc5[1]
          35105225472 blocks super 1.2 level 5, 64k chunk, algorithm 2 [13/12] [UUUUUUUUUU_UU]
    md5 : active raid1 sdo7[3]
          3905898432 blocks super 1.2 [2/0] [__]
    md1 : active raid1 sdb2[0] sdc2[1] sdd2[2] sde2[3] sdf2[4] sdk2[5] sdl2[6] sdm2[7] sdn2[11] sdo2[8] sdp2[9] sdq2[10]
          2097088 blocks [24/12] [UUUUUUUUUUUU____________]
    md0 : active raid1 sdb1[1] sdc1[2] sdd1[3] sdf1[5]
          2490176 blocks [12/4] [_UUU_U______]
    unused devices: <none>

Just out of curiosity, does this mean that replacing the current SAS card with another one would fix it? An IBM SAS expander has just arrived; would that plus my current 2-port SAS card help somehow? Or is it caused by something else, like the motherboard?

I just did a Notepad++ compare against Post ID 113 for fdisk, and it seems nothing has changed.

    # fdisk -l /dev/sd?
    Disk /dev/sda: 223.6 GiB, 240057409536 bytes, 468862128 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0x696935dc
    Device Boot Start End Sectors Size Id Type
    /dev/sda1 2048 468857024 468854977 223.6G fd Linux raid autodetect

    Disk /dev/sdb: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 43C8C355-AE0A-42DC-97CC-508B0FB4EF37
    Device Start End Sectors Size Type
    /dev/sdb1 2048 4982527 4980480 2.4G Linux RAID
    /dev/sdb2 4982528 9176831 4194304 2G Linux RAID
    /dev/sdb5 9453280 5860326239 5850872960 2.7T Linux RAID

    Disk /dev/sdc: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 0600DFFC-A576-4242-976A-3ACAE5284C4C
    Device Start End Sectors Size Type
    /dev/sdc1 2048 4982527 4980480 2.4G Linux RAID
    /dev/sdc2 4982528 9176831 4194304 2G Linux RAID
    /dev/sdc5 9453280 5860326239 5850872960 2.7T Linux RAID

    Disk /dev/sdd: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 58B43CB1-1F03-41D3-A734-014F59DE34E8
    Device Start End Sectors Size Type
    /dev/sdd1 2048 4982527 4980480 2.4G Linux RAID
    /dev/sdd2 4982528 9176831 4194304 2G Linux RAID
    /dev/sdd5 9453280 5860326239 5850872960 2.7T Linux RAID

    Disk /dev/sde: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: E5FD9CDA-FE14-4F95-B776-B176E7130DEA
    Device Start End Sectors Size Type
    /dev/sde1 2048 4982527 4980480 2.4G Linux RAID
    /dev/sde2 4982528 9176831 4194304 2G Linux RAID
    /dev/sde5 9453280 5860326239 5850872960 2.7T Linux RAID

    Disk /dev/sdf: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 48A13430-10A1-4050-BA78-723DB398CE87
    Device Start End Sectors Size Type
    /dev/sdf1 2048 4982527 4980480 2.4G Linux RAID
    /dev/sdf2 4982528 9176831 4194304 2G Linux RAID
    /dev/sdf5 9453280 5860326239 5850872960 2.7T Linux RAID

    Disk /dev/sdk: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: A3E39D34-4297-4BE9-B4FD-3A21EFC38071
    Device Start End Sectors Size Type
    /dev/sdk1 2048 4982527 4980480 2.4G Linux RAID
    /dev/sdk2 4982528 9176831 4194304 2G Linux RAID
    /dev/sdk5 9453280 5860326239 5850872960 2.7T Linux RAID

    Disk /dev/sdl: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 849E02B2-2734-496B-AB52-A572DF8FE63F
    Device Start End Sectors Size Type
    /dev/sdl1 2048 4982527 4980480 2.4G Linux RAID
    /dev/sdl2 4982528 9176831 4194304 2G Linux RAID
    /dev/sdl5 9453280 5860326239 5850872960 2.7T Linux RAID
    /dev/sdl6 5860342336 11720838239 5860495904 2.7T Linux RAID

    Disk /dev/sdm: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 423D33B4-90CE-4E34-9C40-6E06D1F50C0C
    Device Start End Sectors Size Type
    /dev/sdm1 2048 4982527 4980480 2.4G Linux RAID
    /dev/sdm2 4982528 9176831 4194304 2G Linux RAID
    /dev/sdm5 9453280 5860326239 5850872960 2.7T Linux RAID
    /dev/sdm6 5860342336 11720838239 5860495904 2.7T Linux RAID

    Disk /dev/sdn: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 09CB7303-C2E7-46F8-ADA0-D4853F25CB00
    Device Start End Sectors Size Type
    /dev/sdn1 2048 4982527 4980480 2.4G Linux RAID
    /dev/sdn2 4982528 9176831 4194304 2G Linux RAID
    /dev/sdn5 9453280 5860326239 5850872960 2.7T Linux RAID
    /dev/sdn6 5860342336 11720838239 5860495904 2.7T Linux RAID

    Disk /dev/sdo: 9.1 TiB, 10000831348736 bytes, 19532873728 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 1713E819-3B9A-4CE3-94E8-5A3DBF1D5983
    Device Start End Sectors Size Type
    /dev/sdo1 2048 4982527 4980480 2.4G Linux RAID
    /dev/sdo2 4982528 9176831 4194304 2G Linux RAID
    /dev/sdo5 9453280 5860326239 5850872960 2.7T Linux RAID
    /dev/sdo6 5860342336 11720838239 5860495904 2.7T Linux RAID
    /dev/sdo7 11720854336 19532653311 7811798976 3.7T Linux RAID

    Disk /dev/sdp: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 1D5B8B09-8D4A-4729-B089-442620D3D507
    Device Start End Sectors Size Type
    /dev/sdp1 2048 4982527 4980480 2.4G Linux RAID
    /dev/sdp2 4982528 9176831 4194304 2G Linux RAID
    /dev/sdp5 9453280 5860326239 5850872960 2.7T Linux RAID

    Disk /dev/sdq: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 54D81C51-AB85-4DE2-AA16-263DF1C6BB8A
    Device Start End Sectors Size Type
    /dev/sdq1 2048 4982527 4980480 2.4G Linux RAID
    /dev/sdq2 4982528 9176831 4194304 2G Linux RAID
    /dev/sdq5 9453280 5860326239 5850872960 2.7T Linux RAID

    # dmesg | tail
    [38440.957234] --- wd:0 rd:2
    [38440.957237] RAID1 conf printout:
    [38440.957238] --- wd:0 rd:2
    [38440.957239] disk 0, wo:1, o:1, dev:sdo7
    [38440.957258] md: md5: set sdo7 to auto_remap [1]
    [38440.957260] md: recovery of RAID array md5
    [38440.957262] md: minimum _guaranteed_ speed: 600000 KB/sec/disk.
    [38440.957263] md: using maximum available idle IO bandwidth (but not more than 800000 KB/sec) for recovery.
    [38440.957264] md: using 128k window, over a total of 3905898432k.
    [38440.957535] md: md5: set sdo7 to auto_remap [0]

This looks OK, right? Oddly enough, `fgrep hotswap` doesn't return anything. But the last few hundred lines of `cat /var/log/disk.log` are:

    2020-01-24T02:23:21+08:00 homelab kernel: [38410.974657] md: md5: set sdo7 to auto_remap [1]
    2020-01-24T02:23:21+08:00 homelab kernel: [38410.974659] md: recovery of RAID array md5
    2020-01-24T02:23:21+08:00 homelab kernel: [38410.974945] md: md5: set sdo7 to auto_remap [0]
    2020-01-24T02:23:21+08:00 homelab kernel: [38411.005717] md: md5: set sdo7 to auto_remap [1]
    2020-01-24T02:23:21+08:00 homelab kernel: [38411.005718] md: recovery of RAID array md5
    2020-01-24T02:23:21+08:00 homelab kernel: [38411.005961] md: md5: set sdo7 to auto_remap [0]
    2020-01-24T02:23:21+08:00 homelab kernel: [38411.038632] md: md5: set sdo7 to auto_remap [1]
    2020-01-24T02:23:21+08:00 homelab kernel: [38411.038634] md: recovery of RAID array md5
    2020-01-24T02:23:21+08:00 homelab kernel: [38411.038873] md: md5: set sdo7 to auto_remap [0]
    2020-01-24T02:23:21+08:00 homelab kernel: [38411.074782] md: md5: set sdo7 to auto_remap [1]
    2020-01-24T02:23:21+08:00 homelab kernel: [38411.074784] md: recovery of RAID array md5
    2020-01-24T02:23:21+08:00 homelab kernel: [38411.074973] md: md5: set sdo7 to auto_remap [0]
    2020-01-24T02:23:21+08:00 homelab kernel: [38411.106766] md: md5: set sdo7 to auto_remap [1]
    2020-01-24T02:23:21+08:00 homelab kernel: [38411.106767] md: recovery of RAID array md5
    2020-01-24T02:23:21+08:00 homelab kernel: [38411.106956] md: md5: set sdo7 to auto_remap [0]

And that's the current time.
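The `/proc/mdstat` output above can be read more mechanically. The `[n/m]` field is the number of member slots the array expects versus how many are currently active. In the `[UUU__]` string, each `U` is an in-sync member and each `_` is a missing one. Below is a minimal Python sketch that assumes the standard Linux mdstat layout; the pasted output shows Synology's kernel using the same status fields. `degraded_arrays` is a hypothetical helper name. The sketch only reports which slots are missing, not why they are missing.

```python
import re

# Array header line, e.g. "md4 : active raid5 sdl6[0] sdn6[2] sdm6[1]"
HEADER = re.compile(r"^(md\d+)\s*:")
# Status field, e.g. "[5/3] [UUU__]": expected/active member counts, then one flag per slot
STATUS = re.compile(r"\[(\d+)/(\d+)\]\s*\[([U_]+)\]")


def degraded_arrays(mdstat_text):
    """Return {array: (expected, active, missing_slots)} for arrays running below full strength."""
    result = {}
    current = None
    for line in mdstat_text.splitlines():
        header = HEADER.match(line.strip())
        if header:
            current = header.group(1)
        status = STATUS.search(line)
        if current and status:
            expected, active = int(status.group(1)), int(status.group(2))
            # Slot positions flagged "_" are members the kernel currently lacks
            missing = [i for i, flag in enumerate(status.group(3)) if flag == "_"]
            if active < expected:
                result[current] = (expected, active, missing)
            current = None  # status consumed; wait for the next array header
    return result


# Excerpt of the output above, in the usual two-lines-per-array layout
SAMPLE = """\
Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
md4 : active raid5 sdl6[0] sdn6[2] sdm6[1]
      11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/3] [UUU__]
md5 : active raid1 sdo7[3]
      3905898432 blocks super 1.2 [2/0] [__]
unused devices: <none>
"""

for name, (expected, active, missing) in degraded_arrays(SAMPLE).items():
    print(f"{name}: {active}/{expected} active, missing slots {missing}")
```

On the NAS itself, the input would be the contents of `/proc/mdstat`.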

-

There was another power cut. Dammit! Now I can't even run `cat /proc/mdstat`. I'll wait a while to see whether it starts working. Sorry! So frustrating. I connected the PC to a UPS, by the way, so I'm not really sure why power cuts can still affect the whole system.

Edit: OK, `cat /proc/mdstat` works, but slowly. Should I continue with `mdadm --assemble` for md4 and md5? Or maybe take out the sda SSD and see if that fixes the slow mdstat? Please advise.

    # cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
    md4 : active raid5 sdl6[0] sdq6[2] sdm6[1]
          11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/3] [UUU__]
    md2 : active raid5 sdb5[0] sdk5[12] sdn5[11] sdp5[9] sdo5[8] sdq5[7] sdm5[6] sdl5[5] sdf5[4] sde5[3] sdd5[2] sdc5[1]
          35105225472 blocks super 1.2 level 5, 64k chunk, algorithm 2 [13/12] [UUUUUUUUUU_UU]
    md5 : active raid1 sdn7[3]
          3905898432 blocks super 1.2 [2/0] [__]
    md1 : active raid1 sdb2[0] sdc2[1] sdd2[2] sde2[3] sdf2[4] sdk2[5] sdl2[6] sdm2[7] sdn2[8] sdo2[9] sdp2[10] sdq2[11]
          2097088 blocks [24/12] [UUUUUUUUUUUU____________]
    md0 : active raid1 sdb1[1] sdc1[2] sdd1[3] sdf1[5]
          2490176 blocks [12/4] [_UUU_U______]
    unused devices: <none>
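This snapshot shows md4's slot 2 as `sdq6` and md5's member as `sdn7`. The other posts show `sdn6` and `sdo7` for the same slots. Linux assigns `sdX` names in the order disks are detected, so the names can move after a reboot or a power cut. A small sketch that lists which slots changed device name between two array header lines is below. It treats the bracketed number after each device as the member's slot, which is an assumption that holds for simple cases like these. `members_by_slot` and `changed_slots` are hypothetical helper names.

```python
import re

# Member token such as "sdl6[0]" or "sdp2[24](F)": device name, then the bracketed number
MEMBER = re.compile(r"(sd[a-z]+\d+)\[(\d+)\]")


def members_by_slot(header_line):
    """Map bracketed slot number -> device name for one mdstat array header line."""
    return {int(slot): dev for dev, slot in MEMBER.findall(header_line)}


def changed_slots(before_line, after_line):
    """Return {slot: (old_device, new_device)} for slots whose device name differs."""
    before, after = members_by_slot(before_line), members_by_slot(after_line)
    return {
        slot: (before.get(slot), after.get(slot))
        for slot in sorted(before.keys() | after.keys())
        if before.get(slot) != after.get(slot)
    }


# md4 header as it appears in the other posts vs. in this one
earlier = "md4 : active raid5 sdl6[0] sdn6[2] sdm6[1]"
after_power_cut = "md4 : active raid5 sdl6[0] sdq6[2] sdm6[1]"
print(changed_slots(earlier, after_power_cut))  # {2: ('sdn6', 'sdq6')}
```

The practical upshot is to match drives by array UUID or disk serial rather than by `sdX` letter when comparing outputs taken across reboots.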

-

Two errors:

    root@homelab:~# mdadm --stop /dev/md4
    mdadm: stopped /dev/md4
    root@homelab:~# mdadm --stop /dev/md5
    mdadm: stopped /dev/md5
    root@homelab:~# mdadm --assemble /dev/md4 -u648fc239:67ee3f00:fa9d25fe:ef2f8cb0
    mdadm: /dev/md4 assembled from 3 drives - not enough to start the array.
    root@homelab:~# mdadm --assemble /dev/md5 -uae55eeff:e6a5cc66:2609f5e0:2e2ef747
    mdadm: /dev/md5 assembled from 0 drives and 1 rebuilding - not enough to start the array.

What do you mean by "not current", by the way?
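Both `mdadm --assemble` failures follow from simple counting. A RAID5 array can run with at most one member missing. A RAID1 array needs at least one fully in-sync member, and a member that is still rebuilding does not count. A minimal sketch of that rule is below. It covers only RAID1 and RAID5 and ignores event counts and the other checks mdadm performs. `can_start` is a hypothetical helper, not part of mdadm.

```python
def can_start(level, raid_devices, in_sync):
    """Rough check of whether an md array has enough in-sync members to run.

    level:        "raid1" or "raid5" (other levels are out of scope for this sketch)
    raid_devices: number of member slots the array was created with
    in_sync:      members with current, fully synced data (rebuilding ones excluded)
    """
    if level == "raid1":
        return in_sync >= 1  # any single complete mirror is enough
    if level == "raid5":
        return in_sync >= raid_devices - 1  # single parity tolerates one missing member
    raise ValueError(f"unsupported level: {level}")


# md4: RAID5 with 5 slots, assembled from 3 drives
print(can_start("raid5", 5, 3))  # False
# md5: RAID1 with 2 slots, 0 in-sync drives and 1 rebuilding
print(can_start("raid1", 2, 0))  # False
```

So md4 needs one more of its two missing members back, and md5 needs its rebuilding member to have complete data.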

-

Cool, no worries.

    # mdadm --detail /dev/md5
    /dev/md5:
    Version : 1.2
    Creation Time : Tue Sep 24 19:36:08 2019
    Raid Level : raid1
    Array Size : 3905898432 (3724.96 GiB 3999.64 GB)
    Used Dev Size : 3905898432 (3724.96 GiB 3999.64 GB)
    Raid Devices : 2
    Total Devices : 0
    Persistence : Superblock is persistent
    Update Time : Tue Jan 21 05:58:00 2020
    State : clean, FAILED
    Active Devices : 0
    Failed Devices : 0
    Spare Devices : 0

    Number Major Minor RaidDevice State
    - 0 0 0 removed
    - 0 0 1 removed

    # mdadm --detail /dev/md4
    /dev/md4:
    Version : 1.2
    Creation Time : Sun Sep 22 21:55:04 2019
    Raid Level : raid5
    Array Size : 11720987648 (11178.00 GiB 12002.29 GB)
    Used Dev Size : 2930246912 (2794.50 GiB 3000.57 GB)
    Raid Devices : 5
    Total Devices : 3
    Persistence : Superblock is persistent
    Update Time : Tue Jan 21 05:58:00 2020
    State : clean, FAILED
    Active Devices : 3
    Working Devices : 3
    Failed Devices : 0
    Spare Devices : 0
    Layout : left-symmetric
    Chunk Size : 64K
    Name : homelab:4 (local to host homelab)
    UUID : 648fc239:67ee3f00:fa9d25fe:ef2f8cb0
    Events : 7217

    Number Major Minor RaidDevice State
    0 8 182 0 active sync /dev/sdl6
    1 8 198 1 active sync /dev/sdm6
    2 8 214 2 active sync /dev/sdn6
    - 0 0 3 removed
    - 0 0 4 removed
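The sizes in these `--detail` outputs agree with each other. For RAID5, the usable size is (raid devices - 1) × used device size. For RAID1, it is the size of one member. A quick check with the figures posted in this thread is below. All values are in KiB, as mdadm reports them. The md2 per-member size comes from the `mdadm: size set to 2925435456K` line in the re-create post. `raid_array_size_kib` is a hypothetical helper name.

```python
def raid_array_size_kib(level, raid_devices, used_dev_size_kib):
    """Usable array size in KiB from the per-member 'Used Dev Size' (RAID1/RAID5 only)."""
    if level == "raid1":
        return used_dev_size_kib  # every member holds a full copy
    if level == "raid5":
        return (raid_devices - 1) * used_dev_size_kib  # one member's worth goes to parity
    raise ValueError(f"unsupported level: {level}")


# md4: 5-member RAID5, Used Dev Size 2930246912
md4 = raid_array_size_kib("raid5", 5, 2930246912)
print(md4, md4 == 11720987648)  # 11720987648 True
# md5: 2-member RAID1, Used Dev Size 3905898432
md5 = raid_array_size_kib("raid1", 2, 3905898432)
print(md5, md5 == 3905898432)  # 3905898432 True
# md2: 13-member RAID5, per-member size from the mdadm -Cf output
md2 = raid_array_size_kib("raid5", 13, 2925435456)
print(md2, md2 == 35105225472)  # 35105225472 True
```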

-

Great!

    root@homelab:~# mdadm -Cf /dev/md2 -e1.2 -n13 -l5 --verbose --assume-clean /dev/sd[bcdefpqlmn]5 missing /dev/sdo5 /dev/sdk5 -u43699871:217306be:dc16f5e8:dcbe1b0d
    mdadm: layout defaults to left-symmetric
    mdadm: chunk size defaults to 64K
    mdadm: /dev/sdb5 appears to be part of a raid array:
        level=raid5 devices=13 ctime=Tue Jan 21 05:07:10 2020
    mdadm: /dev/sdc5 appears to be part of a raid array:
        level=raid5 devices=13 ctime=Tue Jan 21 05:07:10 2020
    mdadm: /dev/sdd5 appears to be part of a raid array:
        level=raid5 devices=13 ctime=Tue Jan 21 05:07:10 2020
    mdadm: /dev/sde5 appears to be part of a raid array:
        level=raid5 devices=13 ctime=Tue Jan 21 05:07:10 2020
    mdadm: /dev/sdf5 appears to be part of a raid array:
        level=raid5 devices=13 ctime=Tue Jan 21 05:07:10 2020
    mdadm: /dev/sdl5 appears to be part of a raid array:
        level=raid5 devices=13 ctime=Tue Jan 21 05:07:10 2020
    mdadm: /dev/sdm5 appears to be part of a raid array:
        level=raid5 devices=13 ctime=Tue Jan 21 05:07:10 2020
    mdadm: /dev/sdn5 appears to be part of a raid array:
        level=raid5 devices=13 ctime=Tue Jan 21 05:07:10 2020
    mdadm: /dev/sdp5 appears to be part of a raid array:
        level=raid5 devices=13 ctime=Tue Jan 21 05:07:10 2020
    mdadm: /dev/sdq5 appears to be part of a raid array:
        level=raid5 devices=13 ctime=Tue Jan 21 05:07:10 2020
    mdadm: /dev/sdo5 appears to be part of a raid array:
        level=raid5 devices=13 ctime=Sun Sep 22 21:55:03 2019
    mdadm: /dev/sdk5 appears to be part of a raid array:
        level=raid5 devices=13 ctime=Tue Jan 21 05:07:10 2020
    mdadm: size set to 2925435456K
    Continue creating array? yes
    mdadm: array /dev/md2 started.
    root@homelab:~# cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
    md2 : active raid5 sdk5[12] sdo5[11] sdq5[9] sdp5[8] sdn5[7] sdm5[6] sdl5[5] sdf5[4] sde5[3] sdd5[2] sdc5[1] sdb5[0]
          35105225472 blocks super 1.2 level 5, 64k chunk, algorithm 2 [13/12] [UUUUUUUUUU_UU]
    md4 : active raid5 sdl6[0] sdn6[2] sdm6[1]
          11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/3] [UUU__]
    md5 : active raid1 super 1.2 [2/0] [__]
    md1 : active raid1 sdb2[0] sdc2[1] sdd2[2] sde2[3] sdf2[4] sdk2[5] sdl2[6] sdm2[7] sdn2[8] sdp2[24](F) sdq2[11]
          2097088 blocks [24/10] [UUUUUUUUU__U____________]
    md0 : active raid1 sdb1[1] sdc1[2] sdd1[3] sdf1[5]
          2490176 blocks [12/4] [_UUU_U______]

@flyride Should I unplug the SATA and power cables for sda? I just realised this 1600 W PSU has no free SATA power plugs left, and I've run out of SATA power splitters, and we're probably going to plug in the other 10 TB drive (I think). It's 1:34 am right now, and I can only get to a PC shop for a splitter tomorrow. Please advise.
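One detail worth double-checking when re-creating an array with `--assume-clean` is device order. The order of devices on the command line decides which slot each one gets. Also, bash sorts the results of a pathname expansion, so the letters inside `/dev/sd[bcdefpqlmn]5` act as a set, not a sequence. The mdadm messages and the resulting mdstat in this post show exactly that sorted order, with `missing` landing in slot 10. A small sketch that reproduces the slot list with Python's `fnmatchcase` is below. It assumes bash-style sorting, which for plain ASCII names like these gives the same order in any locale. `expand_members` is a hypothetical helper.

```python
from fnmatch import fnmatchcase


def expand_members(args, existing):
    """Expand shell-style bracket/star patterns into sorted matches, like bash does
    for plain ASCII names; literal arguments such as "missing" are kept in place."""
    order = []
    for arg in args:
        if any(ch in arg for ch in "*?["):
            # Only names that actually exist can match, and bash sorts them
            order.extend(sorted(name for name in existing if fnmatchcase(name, arg)))
        else:
            order.append(arg)
    return order


# Partition-5 device nodes present at the time (from the fdisk output in these posts)
devices = [f"/dev/sd{letter}5" for letter in "bcdefklmnopq"]
slots = expand_members(
    ["/dev/sd[bcdefpqlmn]5", "missing", "/dev/sdo5", "/dev/sdk5"], devices
)
for slot, dev in enumerate(slots):
    print(slot, dev)
```

Running `echo /dev/sd[bcdefpqlmn]5` in the shell before the real command shows the same expansion without touching any array.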

-

I can confirm: no array drives have changed in the last 9 hours. The run I just did still has the same 4 "new" add and remove entries for the [sda] SSD compared to Tuesday's post #113.

    # fgrep "hotswap" /var/log/disk.log
    2020-01-18T10:21:23+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdk] hotswap [add] ====
    2020-01-18T10:21:23+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdl] hotswap [add] ====
    2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdm] hotswap [add] ====
    2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdn] hotswap [add] ====
    2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdo] hotswap [add] ====
    2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdp] hotswap [add] ====
    2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdq] hotswap [add] ====
    2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====
    2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdb] hotswap [add] ====
    2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdc] hotswap [add] ====
    2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdd] hotswap [add] ====
    2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sde] hotswap [add] ====
    2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdf] hotswap [add] ====
    2020-01-18T10:28:46+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdk] hotswap [add] ====
    2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdl] hotswap [add] ====
    2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdm] hotswap [add] ====
    2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdn] hotswap [add] ====
    2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdo] hotswap [add] ====
    2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdp] hotswap [add] ====
    2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdq] hotswap [add] ====
    2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====
    2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdb] hotswap [add] ====
    2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdc] hotswap [add] ====
    2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdd] hotswap [add] ====
    2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sde] hotswap [add] ====
    2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdf] hotswap [add] ====
    2020-01-20T04:16:45+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ====
    2020-01-20T04:16:48+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====
    2020-01-20T04:24:00+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ====
    2020-01-20T04:24:02+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====
    2020-01-20T14:18:35+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ====
    2020-01-20T18:31:33+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdk] hotswap [add] ====
    2020-01-20T18:31:33+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdl] hotswap [add] ====
    2020-01-20T18:31:33+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdm] hotswap [add] ====
    2020-01-20T18:31:33+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdn] hotswap [add] ====
    2020-01-20T18:31:33+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdo] hotswap [add] ====
    2020-01-20T18:31:33+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdp] hotswap [add] ====
    2020-01-20T18:31:33+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdq] hotswap [add] ====
    2020-01-20T18:31:33+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====
    2020-01-20T18:31:34+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdb] hotswap [add] ====
    2020-01-20T18:31:34+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdc] hotswap [add] ====
    2020-01-20T18:31:34+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdd] hotswap [add] ====
    2020-01-20T18:31:34+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sde] hotswap [add] ====
    2020-01-20T18:31:34+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdf] hotswap [add] ====
    2020-01-21T05:58:00+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdo] hotswap [remove] ====
    2020-01-21T05:58:20+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdo] hotswap [add] ====
    2020-01-21T18:19:12+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ====
    2020-01-21T18:19:14+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====
    2020-01-21T20:39:20+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ====
    2020-01-21T20:39:22+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====
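The `fgrep hotswap` output can be tallied per disk instead of scanned by eye. A minimal sketch is below. It assumes every relevant line has the `==== SATA disk [sdX] hotswap [add|remove] ====` shape shown above. It collects the timestamps of each disk's `remove` events, which makes the repeated `[sda]` remove/add pairs stand out. `removals_by_disk` is a hypothetical helper name.

```python
import re
from collections import defaultdict

# e.g. "2020-01-20T04:16:45+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ===="
EVENT = re.compile(r"^(\S+) .*SATA disk \[(sd[a-z]+)\] hotswap \[(add|remove)\]")


def removals_by_disk(log_text):
    """Return {disk: [timestamps of 'remove' events]} from hotplugd hotswap lines."""
    removals = defaultdict(list)
    for line in log_text.splitlines():
        match = EVENT.match(line.strip())
        if match and match.group(3) == "remove":
            removals[match.group(2)].append(match.group(1))
    return dict(removals)


# A few lines copied from the fgrep output above
SAMPLE = """\
2020-01-20T04:16:45+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ====
2020-01-20T04:16:48+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====
2020-01-20T04:24:00+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ====
2020-01-21T05:58:00+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdo] hotswap [remove] ====
2020-01-21T05:58:20+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdo] hotswap [add] ====
"""

for disk, times in removals_by_disk(SAMPLE).items():
    print(disk, len(times), times)
```

On the NAS, the input would be the contents of `/var/log/disk.log`.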

-

OK, I ran `fgrep hotswap` again and compared both captures in Notepad++, and it seems there are a few additions:

    2020-01-21T18:19:12+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ====
    2020-01-21T18:19:14+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====
    2020-01-21T20:39:20+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ====
    2020-01-21T20:39:22+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====

I thought sda was the SSD?

    # fdisk -l /dev/sda
    Disk /dev/sda: 223.6 GiB, 240057409536 bytes, 468862128 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0x696935dc
    Device Boot Start End Sectors Size Id Type
    /dev/sda1 2048 468857024 468854977 223.6G fd Linux raid autodetect

The fgrep hotplug output is in the pastebin link; pasting the whole log here seems to break the board: https://pastebin.com/8fydgapn
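The Notepad++ comparison can also be scripted. A minimal sketch using Python's standard `difflib` is below. It lists the lines that a newer `fgrep hotswap` capture adds relative to an older one. The two inline strings are excerpts from these posts and stand in for two saved captures. `added_lines` is a hypothetical helper name.

```python
import difflib


def added_lines(old_text, new_text):
    """Lines added in new_text relative to old_text (the '+' lines of a unified diff)."""
    diff = difflib.unified_diff(old_text.splitlines(), new_text.splitlines(), lineterm="", n=0)
    # Skip the "+++" file header; keep real additions without their leading "+"
    return [line[1:] for line in diff if line.startswith("+") and not line.startswith("+++")]


older = """\
2020-01-21T05:58:00+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdo] hotswap [remove] ====
2020-01-21T05:58:20+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdo] hotswap [add] ====
"""
newer = older + """\
2020-01-21T18:19:12+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ====
2020-01-21T18:19:14+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====
"""

for line in added_lines(older, newer):
    print(line)
```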

-

# fdisk -l /dev/sdb Disk /dev/sdb: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors Units: sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 4096 bytes I/O size (minimum/optimal): 4096 bytes / 4096 bytes Disklabel type: gpt Disk identifier: 43C8C355-AE0A-42DC-97CC-508B0FB4EF37 Device Start End Sectors Size Type /dev/sdb1 2048 4982527 4980480 2.4G Linux RAID /dev/sdb2 4982528 9176831 4194304 2G Linux RAID /dev/sdb5 9453280 5860326239 5850872960 2.7T Linux RAID # fdisk -l /dev/sdc Disk /dev/sdc: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors Units: sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 4096 bytes I/O size (minimum/optimal): 4096 bytes / 4096 bytes Disklabel type: gpt Disk identifier: 0600DFFC-A576-4242-976A-3ACAE5284C4C Device Start End Sectors Size Type /dev/sdc1 2048 4982527 4980480 2.4G Linux RAID /dev/sdc2 4982528 9176831 4194304 2G Linux RAID /dev/sdc5 9453280 5860326239 5850872960 2.7T Linux RAID # fdisk -l /dev/sdd Disk /dev/sdd: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors Units: sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 4096 bytes I/O size (minimum/optimal): 4096 bytes / 4096 bytes Disklabel type: gpt Disk identifier: 58B43CB1-1F03-41D3-A734-014F59DE34E8 Device Start End Sectors Size Type /dev/sdd1 2048 4982527 4980480 2.4G Linux RAID /dev/sdd2 4982528 9176831 4194304 2G Linux RAID /dev/sdd5 9453280 5860326239 5850872960 2.7T Linux RAID # fdisk -l /dev/sde Disk /dev/sde: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors Units: sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 4096 bytes I/O size (minimum/optimal): 4096 bytes / 4096 bytes Disklabel type: gpt Disk identifier: E5FD9CDA-FE14-4F95-B776-B176E7130DEA Device Start End Sectors Size Type /dev/sde1 2048 4982527 4980480 2.4G Linux RAID /dev/sde2 4982528 9176831 4194304 2G Linux RAID /dev/sde5 9453280 5860326239 5850872960 2.7T Linux RAID # fdisk -l /dev/sdf Disk /dev/sdf: 2.7 TiB, 
3000592982016 bytes, 5860533168 sectors Units: sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 4096 bytes I/O size (minimum/optimal): 4096 bytes / 4096 bytes Disklabel type: gpt Disk identifier: 48A13430-10A1-4050-BA78-723DB398CE87 Device Start End Sectors Size Type /dev/sdf1 2048 4982527 4980480 2.4G Linux RAID /dev/sdf2 4982528 9176831 4194304 2G Linux RAID /dev/sdf5 9453280 5860326239 5850872960 2.7T Linux RAID # fdisk -l /dev/sdk Disk /dev/sdk: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors Units: sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 4096 bytes I/O size (minimum/optimal): 4096 bytes / 4096 bytes Disklabel type: gpt Disk identifier: A3E39D34-4297-4BE9-B4FD-3A21EFC38071 Device Start End Sectors Size Type /dev/sdk1 2048 4982527 4980480 2.4G Linux RAID /dev/sdk2 4982528 9176831 4194304 2G Linux RAID /dev/sdk5 9453280 5860326239 5850872960 2.7T Linux RAID # fdisk -l /dev/sdl Disk /dev/sdl: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors Units: sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 4096 bytes I/O size (minimum/optimal): 4096 bytes / 4096 bytes Disklabel type: gpt Disk identifier: 849E02B2-2734-496B-AB52-A572DF8FE63F Device Start End Sectors Size Type /dev/sdl1 2048 4982527 4980480 2.4G Linux RAID /dev/sdl2 4982528 9176831 4194304 2G Linux RAID /dev/sdl5 9453280 5860326239 5850872960 2.7T Linux RAID /dev/sdl6 5860342336 11720838239 5860495904 2.7T Linux RAID # fdisk -l /dev/sdm Disk /dev/sdm: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors Units: sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 4096 bytes I/O size (minimum/optimal): 4096 bytes / 4096 bytes Disklabel type: gpt Disk identifier: 423D33B4-90CE-4E34-9C40-6E06D1F50C0C Device Start End Sectors Size Type /dev/sdm1 2048 4982527 4980480 2.4G Linux RAID /dev/sdm2 4982528 9176831 4194304 2G Linux RAID /dev/sdm5 9453280 5860326239 5850872960 2.7T Linux RAID /dev/sdm6 5860342336 
11720838239 5860495904 2.7T Linux RAID # fdisk -l /dev/sdn Disk /dev/sdn: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors Units: sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 4096 bytes I/O size (minimum/optimal): 4096 bytes / 4096 bytes Disklabel type: gpt Disk identifier: 09CB7303-C2E7-46F8-ADA0-D4853F25CB00 Device Start End Sectors Size Type /dev/sdn1 2048 4982527 4980480 2.4G Linux RAID /dev/sdn2 4982528 9176831 4194304 2G Linux RAID /dev/sdn5 9453280 5860326239 5850872960 2.7T Linux RAID /dev/sdn6 5860342336 11720838239 5860495904 2.7T Linux RAID # fdisk -l /dev/sdo Disk /dev/sdo: 9.1 TiB, 10000831348736 bytes, 19532873728 sectors Units: sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 4096 bytes I/O size (minimum/optimal): 4096 bytes / 4096 bytes Disklabel type: gpt Disk identifier: 1713E819-3B9A-4CE3-94E8-5A3DBF1D5983 Device Start End Sectors Size Type /dev/sdo1 2048 4982527 4980480 2.4G Linux RAID /dev/sdo2 4982528 9176831 4194304 2G Linux RAID /dev/sdo5 9453280 5860326239 5850872960 2.7T Linux RAID /dev/sdo6 5860342336 11720838239 5860495904 2.7T Linux RAID /dev/sdo7 11720854336 19532653311 7811798976 3.7T Linux RAID # fdisk -l /dev/sdp Disk /dev/sdp: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors Units: sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 4096 bytes I/O size (minimum/optimal): 4096 bytes / 4096 bytes Disklabel type: gpt Disk identifier: 1D5B8B09-8D4A-4729-B089-442620D3D507 Device Start End Sectors Size Type /dev/sdp1 2048 4982527 4980480 2.4G Linux RAID /dev/sdp2 4982528 9176831 4194304 2G Linux RAID /dev/sdp5 9453280 5860326239 5850872960 2.7T Linux RAID # fdisk -l /dev/sdq Disk /dev/sdq: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors Units: sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 4096 bytes I/O size (minimum/optimal): 4096 bytes / 4096 bytes Disklabel type: gpt Disk identifier: 54D81C51-AB85-4DE2-AA16-263DF1C6BB8A Device 
Start End Sectors Size Type
/dev/sdq1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdq2 4982528 9176831 4194304 2G Linux RAID
/dev/sdq5 9453280 5860326239 5850872960 2.7T Linux RAID

Uh, what's a table error?

# fgrep "hotswap" /var/log/disk.log
2020-01-18T10:21:23+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdk] hotswap [add] ====
2020-01-18T10:21:23+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdl] hotswap [add] ====
2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdm] hotswap [add] ====
2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdn] hotswap [add] ====
2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdo] hotswap [add] ====
2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdp] hotswap [add] ====
2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdq] hotswap [add] ====
2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====
2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdb] hotswap [add] ====
2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdc] hotswap [add] ====
2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdd] hotswap [add] ====
2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sde] hotswap [add] ====
2020-01-18T10:21:24+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdf] hotswap [add] ====
2020-01-18T10:28:46+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdk] hotswap [add] ====
2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdl] hotswap [add] ====
2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdm] hotswap [add] ====
2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdn] hotswap [add] ====
2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdo] hotswap [add] ====
2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdp] hotswap [add] ====
2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdq] hotswap [add] ====
2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====
2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdb] hotswap [add] ====
2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdc] hotswap [add] ====
2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdd] hotswap [add] ====
2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sde] hotswap [add] ====
2020-01-18T10:28:47+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdf] hotswap [add] ====
2020-01-20T04:16:45+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ====
2020-01-20T04:16:48+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====
2020-01-20T04:24:00+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ====
2020-01-20T04:24:02+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====
2020-01-20T14:18:35+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ====
2020-01-20T18:31:33+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdk] hotswap [add] ====
2020-01-20T18:31:33+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdl] hotswap [add] ====
2020-01-20T18:31:33+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdm] hotswap [add] ====
2020-01-20T18:31:33+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdn] hotswap [add] ====
2020-01-20T18:31:33+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdo] hotswap [add] ====
2020-01-20T18:31:33+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdp] hotswap [add] ====
2020-01-20T18:31:33+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdq] hotswap [add] ====
2020-01-20T18:31:33+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====
2020-01-20T18:31:34+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdb] hotswap [add] ====
2020-01-20T18:31:34+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdc] hotswap [add] ====
2020-01-20T18:31:34+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdd] hotswap [add] ====
2020-01-20T18:31:34+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sde] hotswap [add] ====
2020-01-20T18:31:34+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdf] hotswap [add] ====
2020-01-21T05:58:00+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdo] hotswap [remove] ====
2020-01-21T05:58:20+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdo] hotswap [add] ====
# date
Tue Jan 21 12:24:08 CST 2020
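To see at a glance which disks keep dropping out, the hotplugd lines can be tallied per disk. This is a minimal sketch that assumes every line has exactly the single-spaced format shown in the log above; `count_removals` is a hypothetical helper, not part of DSM. On this log it would point at sda (three removals on Jan 20) and sdo (one removal on Jan 21).

```python
import re
from collections import Counter

# Assumed line format, copied from the disk.log excerpt above, e.g.
# "2020-01-20T04:16:45+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ===="
HOTSWAP_RE = re.compile(
    r"^(?P<ts>\S+) \S+ hotplugd: \S+ "
    r"==== SATA disk \[(?P<disk>sd[a-z]+)\] hotswap \[(?P<action>add|remove)\] ====$"
)


def count_removals(lines):
    """Return a Counter mapping disk name -> number of [remove] events."""
    removals = Counter()
    for line in lines:
        m = HOTSWAP_RE.match(line.strip())
        # Lines that are not hotplugd hotswap events are silently skipped
        if m and m.group("action") == "remove":
            removals[m.group("disk")] += 1
    return removals


# A few lines taken from the log above
SAMPLE = [
    "2020-01-20T04:16:45+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ====",
    "2020-01-20T04:16:48+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [add] ====",
    "2020-01-20T14:18:35+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sda] hotswap [remove] ====",
    "2020-01-21T05:58:00+08:00 homelab hotplugd: hotplugd.c:1451 ==== SATA disk [sdo] hotswap [remove] ====",
]

print(count_removals(SAMPLE))  # Counter({'sda': 2, 'sdo': 1})
```

Against the live box (with Python 3 available) the same function can take an open file, e.g. `count_removals(open("/var/log/disk.log"))`, since each line is stripped before matching.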

-

# fdisk -l /dev/sd?
Disk /dev/sda: 223.6 GiB, 240057409536 bytes, 468862128 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disklabel type: dos
Disk identifier: 0x696935dc

Device Boot Start End Sectors Size Id Type
/dev/sda1 2048 468857024 468854977 223.6G fd Linux raid autodetect

Disk /dev/sdb: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: 43C8C355-AE0A-42DC-97CC-508B0FB4EF37

Device Start End Sectors Size Type
/dev/sdb1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdb2 4982528 9176831 4194304 2G Linux RAID
/dev/sdb5 9453280 5860326239 5850872960 2.7T Linux RAID

Disk /dev/sdc: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: 0600DFFC-A576-4242-976A-3ACAE5284C4C

Device Start End Sectors Size Type
/dev/sdc1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdc2 4982528 9176831 4194304 2G Linux RAID
/dev/sdc5 9453280 5860326239 5850872960 2.7T Linux RAID

Disk /dev/sdd: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: 58B43CB1-1F03-41D3-A734-014F59DE34E8

Device Start End Sectors Size Type
/dev/sdd1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdd2 4982528 9176831 4194304 2G Linux RAID
/dev/sdd5 9453280 5860326239 5850872960 2.7T Linux RAID

Disk /dev/sde: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: E5FD9CDA-FE14-4F95-B776-B176E7130DEA

Device Start End Sectors Size Type
/dev/sde1 2048 4982527 4980480 2.4G Linux RAID
/dev/sde2 4982528 9176831 4194304 2G Linux RAID
/dev/sde5 9453280 5860326239 5850872960 2.7T Linux RAID

Disk /dev/sdf: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: 48A13430-10A1-4050-BA78-723DB398CE87

Device Start End Sectors Size Type
/dev/sdf1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdf2 4982528 9176831 4194304 2G Linux RAID
/dev/sdf5 9453280 5860326239 5850872960 2.7T Linux RAID

Disk /dev/sdk: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: A3E39D34-4297-4BE9-B4FD-3A21EFC38071

Device Start End Sectors Size Type
/dev/sdk1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdk2 4982528 9176831 4194304 2G Linux RAID
/dev/sdk5 9453280 5860326239 5850872960 2.7T Linux RAID

Disk /dev/sdl: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: 849E02B2-2734-496B-AB52-A572DF8FE63F

Device Start End Sectors Size Type
/dev/sdl1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdl2 4982528 9176831 4194304 2G Linux RAID
/dev/sdl5 9453280 5860326239 5850872960 2.7T Linux RAID
/dev/sdl6 5860342336 11720838239 5860495904 2.7T Linux RAID

Disk /dev/sdm: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: 423D33B4-90CE-4E34-9C40-6E06D1F50C0C

Device Start End Sectors Size Type
/dev/sdm1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdm2 4982528 9176831 4194304 2G Linux RAID
/dev/sdm5 9453280 5860326239 5850872960 2.7T Linux RAID
/dev/sdm6 5860342336 11720838239 5860495904 2.7T Linux RAID

Disk /dev/sdn: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: 09CB7303-C2E7-46F8-ADA0-D4853F25CB00

Device Start End Sectors Size Type
/dev/sdn1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdn2 4982528 9176831 4194304 2G Linux RAID
/dev/sdn5 9453280 5860326239 5850872960 2.7T Linux RAID
/dev/sdn6 5860342336 11720838239 5860495904 2.7T Linux RAID

Disk /dev/sdo: 9.1 TiB, 10000831348736 bytes, 19532873728 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: 1713E819-3B9A-4CE3-94E8-5A3DBF1D5983

Device Start End Sectors Size Type
/dev/sdo1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdo2 4982528 9176831 4194304 2G Linux RAID
/dev/sdo5 9453280 5860326239 5850872960 2.7T Linux RAID
/dev/sdo6 5860342336 11720838239 5860495904 2.7T Linux RAID
/dev/sdo7 11720854336 19532653311 7811798976 3.7T Linux RAID

Disk /dev/sdp: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: 1D5B8B09-8D4A-4729-B089-442620D3D507

Device Start End Sectors Size Type
/dev/sdp1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdp2 4982528 9176831 4194304 2G Linux RAID
/dev/sdp5 9453280 5860326239 5850872960 2.7T Linux RAID

Disk /dev/sdq: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: 54D81C51-AB85-4DE2-AA16-263DF1C6BB8A

Device Start End Sectors Size Type
/dev/sdq1 2048 4982527 4980480 2.4G Linux RAID
/dev/sdq2 4982528 9176831 4194304 2G Linux RAID
/dev/sdq5 9453280 5860326239 5850872960 2.7T Linux RAID
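Every `sd?5` row in the listing spans sectors 9453280 to 5860326239, and the 6 TB disks and the 10 TB disk add matching `sd?6` rows (plus `sdo7` on the 10 TB disk). Rather than eyeballing that alignment, the partition rows can be grouped by partition number. This is a sketch assuming the single-space row format shown above; `group_partitions` is a hypothetical helper name.

```python
import re
from collections import defaultdict

# Assumed row format, as pasted above, e.g.
# "/dev/sdb5 9453280 5860326239 5850872960 2.7T Linux RAID"
ROW_RE = re.compile(
    r"^/dev/(?P<disk>sd[a-z]+)(?P<part>\d+)\s+(?P<start>\d+)\s+(?P<end>\d+)\s+(?P<sectors>\d+)\s"
)


def group_partitions(lines):
    """Map partition number -> {(start, end): [disks using that extent]}."""
    groups = defaultdict(lambda: defaultdict(list))
    for line in lines:
        m = ROW_RE.match(line.strip())
        if not m:
            continue  # disk headers, "Units:" lines, column headers, etc.
        start, end, sectors = int(m["start"]), int(m["end"]), int(m["sectors"])
        # fdisk's Sectors column should equal End - Start + 1
        if end - start + 1 != sectors:
            raise ValueError(f"inconsistent row: {line!r}")
        groups[int(m["part"])][(start, end)].append(m["disk"])
    return groups


# Rows copied from the listing above
SAMPLE = [
    "/dev/sdb5 9453280 5860326239 5850872960 2.7T Linux RAID",
    "/dev/sdl5 9453280 5860326239 5850872960 2.7T Linux RAID",
    "/dev/sdl6 5860342336 11720838239 5860495904 2.7T Linux RAID",
    "/dev/sdo6 5860342336 11720838239 5860495904 2.7T Linux RAID",
    "/dev/sdo7 11720854336 19532653311 7811798976 3.7T Linux RAID",
]

for part, extents in sorted(group_partitions(SAMPLE).items()):
    for (start, end), disks in extents.items():
        print(f"partition {part}: sectors {start}-{end} on {', '.join(disks)}")
```

If any partition number shows more than one extent, those members would not line up with the rest.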

-

@flyride I wanted to wait 10 minutes, but my SSH session got closed a few seconds after I connected the drive. I'm still going to wait the full 10 minutes. fdisk -l shows the drive at /dev/sdo:

Disk /dev/sdo: 9.1 TiB, 10000831348736 bytes, 19532873728 sectors
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes
Disklabel type: gpt
Disk identifier: 1713E819-3B9A-4CE3-94E8-5A3DBF1D5983

# mdadm --examine /dev/sd?5
/dev/sdb5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : 3bd62073:a14acceb:9b6772fd:a2bd2c1b
Update Time : Tue Jan 21 05:50:28 2020
Checksum : 5be7c167 - correct
Events : 2
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 0
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdc5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : c24f4ce9:f858ffe5:39e3b5f9:a2431b4f
Update Time : Tue Jan 21 05:50:28 2020
Checksum : fc784ae4 - correct
Events : 2
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 1
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdd5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : de9e7cfd:9e0c3f85:0440908f:597a81b4
Update Time : Tue Jan 21 05:50:28 2020
Checksum : ab28e129 - correct
Events : 2
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 2
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sde5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : a81570df:dd73a097:3d4f4d84:58ec9c2d
Update Time : Tue Jan 21 05:50:28 2020
Checksum : d56406b - correct
Events : 2
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 3
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdf5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : e862fe90:222e6333:f541fb52:3830097f
Update Time : Tue Jan 21 05:50:28 2020
Checksum : 7ac17e88 - correct
Events : 2
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 4
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdk5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : 065068bb:0ec18b72:af02796c:eb6cab77
Update Time : Tue Jan 21 05:50:28 2020
Checksum : f673fc07 - correct
Events : 2
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 12
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdl5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : 81ee1c1d:fe670a7d:4c291e84:281abb0f
Update Time : Tue Jan 21 05:50:28 2020
Checksum : 125c1545 - correct
Events : 2
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 5
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdm5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : 07f750e2:ebd026ac:f5c03557:7de8dd53
Update Time : Tue Jan 21 05:50:28 2020
Checksum : 1de6ecb8 - correct
Events : 2
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 6
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdn5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : b75ababb:4b982bb0:ebc1e473:a6716e38
Update Time : Tue Jan 21 05:50:28 2020
Checksum : fc94a1e7 - correct
Events : 2
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 7
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdo5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Sun Sep 22 21:55:03 2019
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : 73610f83:fb3cf895:c004147e:b4de2bfe
Update Time : Sat Jan 18 07:13:53 2020
Checksum : d1dde2c9 - correct
Events : 371014
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 11
Array State : AAAAA.AAAA.A. ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdp5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : b18d599a:f3efc211:24138276:d19eeb29
Update Time : Tue Jan 21 05:50:28 2020
Checksum : 30e5aaee - correct
Events : 2
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 8
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdq5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : 843a38a2:eabb6de6:1f5b53a1:a7d08136
Update Time : Tue Jan 21 05:50:28 2020
Checksum : 44d69d8b - correct
Events : 2
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 9
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

# cat /proc/mdstat
Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
md4 : active raid5 sdl6[0] sdn6[2] sdm6[1]
      11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/3] [UUU__]

md5 : active raid1 super 1.2 [2/0] [__]

md1 : active raid1 sdb2[0] sdc2[1] sdd2[2] sde2[3] sdf2[4] sdk2[5] sdl2[6] sdm2[7] sdn2[8] sdp2[24](F) sdq2[11]
      2097088 blocks [24/10] [UUUUUUUUU__U____________]

md0 : active raid1 sdb1[1] sdc1[2] sdd1[3] sdf1[5]
      2490176 blocks [12/4] [_UUU_U______]

unused devices: <none>
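In the `--examine` output, eleven members report Creation Time Tue Jan 21 05:07:10 2020 and Events : 2, while sdo5 still carries an older superblock (created Sun Sep 22 21:55:03 2019, Events : 371014, Array State AAAAA.AAAA.A.). The sketch below parses that output and flags members whose event count differs from the majority; it also decodes an mdstat status string such as [UUUUUUUUU__U____________] into the slot numbers marked up. It assumes the `Key : Value` layout shown above, and the helper names are hypothetical.

```python
from collections import Counter


def parse_examine(text):
    """Parse `mdadm --examine` output into {device: {field: value}}."""
    records, current = {}, None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("/dev/") and line.endswith(":"):
            # A new member superblock starts, e.g. "/dev/sdb5:"
            current = records.setdefault(line[:-1], {})
        elif current is not None and " : " in line:
            # Split on the first " : " only, so values like "homelab:2" survive
            key, value = line.split(" : ", 1)
            current[key.strip()] = value.strip()
    return records


def stale_members(records):
    """Devices whose Events count differs from the most common count."""
    events = {dev: int(rec["Events"]) for dev, rec in records.items() if "Events" in rec}
    if not events:
        return []
    majority, _ = Counter(events.values()).most_common(1)[0]
    return sorted(dev for dev, ev in events.items() if ev != majority)


def up_slots(status):
    """Slot numbers marked 'U' in an mdstat status string like "[_UUU_U______]"."""
    return [i for i, c in enumerate(status.strip("[]")) if c == "U"]


# Trimmed records from the output above
SAMPLE = """\
/dev/sdn5:
Creation Time : Tue Jan 21 05:07:10 2020
Events : 2
Device Role : Active device 7
/dev/sdo5:
Creation Time : Sun Sep 22 21:55:03 2019
Events : 371014
Device Role : Active device 11
/dev/sdp5:
Creation Time : Tue Jan 21 05:07:10 2020
Events : 2
Device Role : Active device 8
"""

records = parse_examine(SAMPLE)
for dev in stale_members(records):
    print(dev, records[dev]["Events"], records[dev]["Creation Time"])
print(up_slots("[_UUU_U______]"))  # md0 above: sdb1[1] sdc1[2] sdd1[3] sdf1[5]
```

The slot numbers returned by `up_slots` line up with the bracketed indices in the mdstat member list, e.g. md0's [_UUU_U______] gives [1, 2, 3, 5].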

-

root@homelab:~# vgchange -an
0 logical volume(s) in volume group "vg1" now active
root@homelab:~# mdadm --examine /dev/sd?5
/dev/sdb5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : 3bd62073:a14acceb:9b6772fd:a2bd2c1b
Update Time : Tue Jan 21 05:07:11 2020
Checksum : 5be7b741 - correct
Events : 1
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 0
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdc5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : c24f4ce9:f858ffe5:39e3b5f9:a2431b4f
Update Time : Tue Jan 21 05:07:11 2020
Checksum : fc7840be - correct
Events : 1
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 1
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdd5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : de9e7cfd:9e0c3f85:0440908f:597a81b4
Update Time : Tue Jan 21 05:07:11 2020
Checksum : ab28d703 - correct
Events : 1
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 2
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sde5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : a81570df:dd73a097:3d4f4d84:58ec9c2d
Update Time : Tue Jan 21 05:07:11 2020
Checksum : d563645 - correct
Events : 1
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 3
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdf5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : e862fe90:222e6333:f541fb52:3830097f
Update Time : Tue Jan 21 05:07:11 2020
Checksum : 7ac17462 - correct
Events : 1
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 4
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdk5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : 065068bb:0ec18b72:af02796c:eb6cab77
Update Time : Tue Jan 21 05:07:11 2020
Checksum : f673f1e1 - correct
Events : 1
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 12
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdl5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : 81ee1c1d:fe670a7d:4c291e84:281abb0f
Update Time : Tue Jan 21 05:07:11 2020
Checksum : 125c0b1f - correct
Events : 1
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 5
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdm5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : 07f750e2:ebd026ac:f5c03557:7de8dd53
Update Time : Tue Jan 21 05:07:11 2020
Checksum : 1de6e292 - correct
Events : 1
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 6
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdn5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : b75ababb:4b982bb0:ebc1e473:a6716e38
Update Time : Tue Jan 21 05:07:11 2020
Checksum : fc9497c1 - correct
Events : 1
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 7
Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdo5:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d
Name : homelab:2 (local to host homelab)
Creation Time : Tue Jan 21 05:07:10 2020
Raid Level : raid5
Raid Devices : 13
Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB)
Array Size : 35105225472 (33478.95 GiB 35947.75 GB)
Data Offset : 2048 sectors
Super Offset : 8 sectors
Unused Space : before=1968 sectors, after=0 sectors
State : clean
Device UUID : aefb3c70:11304796:c4b38dc3:aaaee205
Update Time : Tue Jan 21 05:07:11 2020
Checksum : b44fff5f - correct
Events : 1
Layout : left-symmetric
Chunk Size : 64K
Device Role : Active device 11
Array State : AAAAAAAAAA.AA ('A' == active, '.'
== missing, 'R' == replacing) /dev/sdp5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Tue Jan 21 05:07:10 2020 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : b18d599a:f3efc211:24138276:d19eeb29 Update Time : Tue Jan 21 05:07:11 2020 Checksum : 30e5a0c8 - correct Events : 1 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 8 Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdq5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Tue Jan 21 05:07:10 2020 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 843a38a2:eabb6de6:1f5b53a1:a7d08136 Update Time : Tue Jan 21 05:07:11 2020 Checksum : 44d69365 - correct Events : 1 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 9 Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing) root@homelab:~# mdadm --stop /dev/md2 mdadm: stopped /dev/md2 So just to be clear, you want me to take out the power and sata cable from the current 10TB, and put them into the "bad" 10TB? I don't have to power down btw.
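With thirteen members, it's easy to lose track of each partition's `Device Role` in the full `--examine` dump. Here's a minimal read-only sketch that condenses the dump to one line per member so the empty slot (slot 10 here, the '.' in `Array State`) stands out. It assumes the field labels shown above, and `summarize_examine` is a hypothetical helper name:

```shell
# Read-only sketch: condense `mdadm --examine` output to one line per member:
# device, role slot, event count, array state.
# Assumes the field labels shown above ("Events", "Device Role",
# "Array State"); summarize_examine is a hypothetical helper name.
summarize_examine() {
    awk '
        # A member header looks like "/dev/sdd5:" at the start of a line.
        /^\/dev\/[^:]+:/ { dev = $1; sub(/:$/, "", dev) }
        /Events :/       { events = $NF }
        # "Device Role : Active device 2" -> last field is the slot number.
        /Device Role :/  { role = $NF }
        # "Array State" closes each record, so print the summary here.
        /Array State :/  { print dev, role, events, $4 }
    '
}

# Usage (only reads superblocks):
#   mdadm --examine /dev/sd[bcdefklmnopq]5 | summarize_examine | sort -k2,2n
```

Sorting on the role column lists the partitions in slot order, which is the order `mdadm -C` needs them in.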

-

Would reinserting the "bad" 10TB help, either swapped in for the current 10TB or added to the array?

root@homelab:/# vgchange -an
  0 logical volume(s) in volume group "vg1" now active
root@homelab:/#
root@homelab:/# mdadm --stop /dev/md2
mdadm: stopped /dev/md2
root@homelab:/# mdadm -Cf /dev/md2 -e1.2 -n13 -l5 --verbose --assume-clean /dev/sd[bcdefpqlmn]5 missing /dev/sdo5 /dev/sdk5 -u43699871:217306be:dc16f5e8:dcbe1b0d
mdadm: layout defaults to left-symmetric
mdadm: chunk size defaults to 64K
mdadm: /dev/sdb5 appears to be part of a raid array:
       level=raid5 devices=13 ctime=Sat Jan 18 10:33:37 2020
mdadm: /dev/sdc5 appears to be part of a raid array:
       level=raid5 devices=13 ctime=Sat Jan 18 10:33:37 2020
mdadm: /dev/sdd5 appears to be part of a raid array:
       level=raid5 devices=13 ctime=Sat Jan 18 10:33:37 2020
mdadm: /dev/sde5 appears to be part of a raid array:
       level=raid5 devices=13 ctime=Sat Jan 18 10:33:37 2020
mdadm: /dev/sdf5 appears to be part of a raid array:
       level=raid5 devices=13 ctime=Sat Jan 18 10:33:37 2020
mdadm: /dev/sdl5 appears to be part of a raid array:
       level=raid5 devices=13 ctime=Sat Jan 18 10:33:37 2020
mdadm: /dev/sdm5 appears to be part of a raid array:
       level=raid5 devices=13 ctime=Sat Jan 18 10:33:37 2020
mdadm: /dev/sdn5 appears to be part of a raid array:
       level=raid5 devices=13 ctime=Sat Jan 18 10:33:37 2020
mdadm: /dev/sdp5 appears to be part of a raid array:
       level=raid5 devices=13 ctime=Sat Jan 18 10:33:37 2020
mdadm: /dev/sdq5 appears to be part of a raid array:
       level=raid5 devices=13 ctime=Sat Jan 18 10:33:37 2020
mdadm: /dev/sdo5 appears to be part of a raid array:
       level=raid5 devices=13 ctime=Sat Jan 18 10:33:37 2020
mdadm: /dev/sdk5 appears to be part of a raid array:
       level=raid5 devices=13 ctime=Sat Jan 18 10:33:37 2020
mdadm: size set to 2925435456K
Continue creating array? yes
mdadm: array /dev/md2 started.
root@homelab:/# vgchange -ay
  2 logical volume(s) in volume group "vg1" now active
root@homelab:/# mount -t btrfs -o ro,nologreplay /dev/vg1/volume_1 /volume1
mount: wrong fs type, bad option, bad superblock on /dev/vg1/volume_1,
       missing codepage or helper program, or other error
       In some cases useful info is found in syslog - try
       dmesg | tail or so.
root@homelab:/# dmesg | tail
[38215.216573] disk 5, o:1, dev:sdl5
[38215.216574] disk 6, o:1, dev:sdm5
[38215.216574] disk 7, o:1, dev:sdn5
[38215.216575] disk 8, o:1, dev:sdp5
[38215.216575] disk 9, o:1, dev:sdq5
[38215.216576] disk 11, o:1, dev:sdo5
[38215.216577] disk 12, o:1, dev:sdk5
[38215.242566] md2: detected capacity change from 0 to 35947750883328
[38215.245519] md2: unknown partition table
[38221.515480] bio: create slab <bio-2> at 2

[38215.242566] md2: detected capacity change from 0 to 35947750883328

This is good, right?
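One detail worth double-checking in the create command above is that the shell expands `/dev/sd[bcdefpqlmn]5`, not mdadm. Pathname expansion returns matches in sorted order, not in the order the letters appear inside the brackets. This small sketch shows the resulting order. It uses a hypothetical helper and empty scratch files as stand-ins for the partitions:

```shell
# Sketch: show the order in which the shell expands a bracket glob such as
# /dev/sd[bcdefpqlmn]5. Matches come back sorted, so the member order handed
# to `mdadm -C` is alphabetical, not the order written in the brackets.
# show_glob_order is a hypothetical helper; it only touches a temp directory.
show_glob_order() {
    dir=$(mktemp -d) || return 1
    # Empty files standing in for the partitions present on the box.
    for d in b c d e f k l m n o p q; do
        : > "$dir/sd${d}5"
    done
    # Same bracket expression as the mdadm command above.
    ( cd "$dir" && echo sd[bcdefpqlmn]5 )
    rm -rf "$dir"
}
```

Here the sorted order happens to match the `Device Role` values in the `--examine` output (sdd5 = 2 through sdq5 = 9). The `disk N, o:1, dev:` lines in dmesg also agree for slots 5 to 12. On the real box, running `echo /dev/sd[bcdefpqlmn]5 missing /dev/sdo5 /dev/sdk5` prints the exact argument list before anything gets written.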

-

Nope, doesn't work.

root@homelab:~# mount -t btrfs -o ro,nologreplay /dev/vg1/volume_1 /volume1
mount: wrong fs type, bad option, bad superblock on /dev/vg1/volume_1,
       missing codepage or helper program, or other error
       In some cases useful info is found in syslog - try
       dmesg | tail or so.
root@homelab:~# dmesg | tail
[16079.284996] init: dhcp-client (eth4) main process (16851) killed by TERM signal
[16079.439991] init: nmbd main process (17405) killed by TERM signal
[16083.428550] alx 0000:05:00.0 eth4: NIC Up: 100 Mbps Full
[16084.471931] iSCSI:iscsi_target.c:520:iscsit_add_np CORE[0] - Added Network Portal: 192.168.0.83:3260 on iSCSI/TCP
[16084.472048] iSCSI:iscsi_target.c:520:iscsit_add_np CORE[0] - Added Network Portal: [fe80::d250:99ff:fe26:36a8]:3260 on iSCSI/TCP
[16084.498404] init: dhcp-client (eth4) main process (17245) killed by TERM signal
[27893.370010] usb 3-13: usbfs: USBDEVFS_CONTROL failed cmd blazer_usb rqt 33 rq 9 len 8 ret -110
[33089.867131] bio: create slab <bio-2> at 2
[33485.021943] hfsplus: unable to parse mount options
[33485.026980] UDF-fs: bad mount option "norecovery" or missing value
root@homelab:~#
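`mount` only gives a generic "bad superblock" error. One read-only check is whether the logical volume at least starts with a btrfs superblock. In the btrfs on-disk format, the primary superblock begins at 64 KiB and its 8-byte magic `_BHRfS_M` sits 0x40 bytes into it (absolute offset 65600). Here's a sketch that assumes `dd` and `tr` are available on the box; `has_btrfs_magic` is a hypothetical helper name:

```shell
# Read-only sketch: check for the btrfs primary-superblock magic.
# Superblock starts at 64 KiB (65536); magic "_BHRfS_M" is at +0x40,
# i.e. absolute byte offset 65600. Only dd reads from the device.
# has_btrfs_magic is a hypothetical helper name.
has_btrfs_magic() {
    # Read 8 bytes at the magic offset; drop NULs so the shell does not warn.
    magic=$(dd if="$1" bs=1 skip=65600 count=8 2>/dev/null | tr -d '\000')
    [ "$magic" = "_BHRfS_M" ]
}

# Usage:
#   has_btrfs_magic /dev/vg1/volume_1 && echo "btrfs superblock magic found"
```

Finding the magic only means the primary superblock location looks intact. It says nothing about whether the filesystem trees behind it are readable.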

-

Uh oh.

# mount -o ro,norecovery /dev/vg1/volume_1 /volume1
mount: wrong fs type, bad option, bad superblock on /dev/vg1/volume_1,
       missing codepage or helper program, or other error
       In some cases useful info is found in syslog - try
       dmesg | tail or so.
# dmesg | tail
[16079.284996] init: dhcp-client (eth4) main process (16851) killed by TERM signal
[16079.439991] init: nmbd main process (17405) killed by TERM signal
[16083.428550] alx 0000:05:00.0 eth4: NIC Up: 100 Mbps Full
[16084.471931] iSCSI:iscsi_target.c:520:iscsit_add_np CORE[0] - Added Network Portal: 192.168.0.83:3260 on iSCSI/TCP
[16084.472048] iSCSI:iscsi_target.c:520:iscsit_add_np CORE[0] - Added Network Portal: [fe80::d250:99ff:fe26:36a8]:3260 on iSCSI/TCP
[16084.498404] init: dhcp-client (eth4) main process (17245) killed by TERM signal
[27893.370010] usb 3-13: usbfs: USBDEVFS_CONTROL failed cmd blazer_usb rqt 33 rq 9 len 8 ret -110
[33089.867131] bio: create slab <bio-2> at 2
[33485.021943] hfsplus: unable to parse mount options
[33485.026980] UDF-fs: bad mount option "norecovery" or missing value

DSM's Storage Manager shows that I can repair it, though.
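In both `dmesg | tail` outputs, the last lines come from hfsplus and UDF-fs, not btrfs. When `mount` runs without `-t`, it may probe other filesystem types in turn, and those drivers reject `norecovery`. So these lines probably say little about the volume itself. Here's a minimal filter that keeps only btrfs-related kernel messages; `btrfs_kmsgs` is a hypothetical helper name:

```shell
# Sketch: keep only btrfs-related kernel messages from a log on stdin,
# so unrelated lines (iSCSI, dhcp-client, usbfs, hfsplus, UDF) drop out.
# Optional $1 = how many trailing matches to show (default 20).
# btrfs_kmsgs is a hypothetical helper name.
btrfs_kmsgs() {
    grep -i 'btrfs' | tail -n "${1:-20}"
}

# Usage, right after a mount attempt:
#   dmesg | btrfs_kmsgs 30
```

If this prints nothing after a `mount -t btrfs` attempt, the btrfs driver probably did not log why it refused the volume.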

-

I see. As I understand it, SHR/Syno's cache implementation is basically a copy of selected HDDs' files, right?

root@homelab:/# vgchange -ay
  2 logical volume(s) in volume group "vg1" now active
root@homelab:/# cat /proc/mdstat
Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
md4 : active raid5 sdl6[0] sdo6[5] sdn6[2] sdm6[1]
      11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/4] [UUUU_]

md2 : active raid5 sdb5[0] sdk5[12] sdq5[10] sdp5[9] sdo5[8] sdn5[7] sdm5[6] sdl5[5] sdf5[4] sde5[3] sdd5[2] sdc5[1]
      35105225472 blocks super 1.2 level 5, 64k chunk, algorithm 2 [13/12] [UUUUUUUUUUU_U]

md5 : active raid1 sdo7[2]
      3905898432 blocks super 1.2 [2/1] [_U]

md1 : active raid1 sdb2[0] sdc2[1] sdd2[2] sde2[3] sdf2[4] sdk2[5] sdl2[6] sdm2[7] sdn2[8] sdo2[9] sdp2[10] sdq2[11]
      2097088 blocks [24/12] [UUUUUUUUUUUU____________]

md0 : active raid1 sdb1[1] sdc1[2] sdd1[3] sdf1[5]
      2490176 blocks [12/4] [_UUU_U______]

unused devices: <none>
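To read the `[slots/active]` counters in `/proc/mdstat` at a glance, here's a small awk sketch that lists every array with fewer active members than slots. It assumes the two-lines-per-array layout shown above, and `degraded_arrays` is a hypothetical helper name:

```shell
# Read-only sketch: list md arrays whose active-member count is below their
# slot count, using the "[slots/active]" counter in /proc/mdstat.
# Assumes the counter sits on the "blocks" line under each "mdN : ..." line.
# degraded_arrays is a hypothetical helper name; $1 overrides the input file.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }
        /blocks/ && match($0, /\[[0-9]+\/[0-9]+\]/) {
            # Strip the brackets from e.g. "[13/12]" and split on "/".
            split(substr($0, RSTART + 1, RLENGTH - 2), c, "/")
            if (c[2] + 0 < c[1] + 0)
                print name, "has", c[2], "of", c[1], "members active"
        }
    ' "${1:-/proc/mdstat}"
}

# Usage: degraded_arrays    (reads /proc/mdstat)
```

On this box it would flag md2 ([13/12]), md4 ([5/4]) and md5 ([2/1]), and also md0 and md1. md0 and md1 are typically DSM's system and swap RAID1 arrays, sized for more disks than are installed, so their short counts probably need reading with that in mind.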