C-Fu

Everything posted by C-Fu

-

# mdadm --detail /dev/md2 /dev/md2: Version : 1.2 Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Used Dev Size : 2925435456 (2789.91 GiB 2995.65 GB) Raid Devices : 13 Total Devices : 12 Persistence : Superblock is persistent Update Time : Fri Jan 17 17:14:06 2020 State : clean, degraded Active Devices : 12 Working Devices : 12 Failed Devices : 0 Spare Devices : 0 Layout : left-symmetric Chunk Size : 64K Name : homelab:2 (local to host homelab) UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Events : 370940 Number Major Minor RaidDevice State 0 8 21 0 active sync /dev/sdb5 1 8 37 1 active sync /dev/sdc5 2 8 53 2 active sync /dev/sdd5 3 8 69 3 active sync /dev/sde5 4 8 85 4 active sync /dev/sdf5 5 8 165 5 active sync /dev/sdk5 13 65 5 6 active sync /dev/sdq5 7 8 181 7 active sync /dev/sdl5 8 8 197 8 active sync /dev/sdm5 9 8 213 9 active sync /dev/sdn5 - 0 0 10 removed 11 8 229 11 active sync /dev/sdo5 10 8 245 12 active sync /dev/sdp5 # mdadm --examine /dev/sd[bcdefklmnpoqr]5 /dev/sdb5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : a8109f74:46bc8509:6fc3bca8:9fddb6a7 Update Time : Fri Jan 17 17:14:06 2020 Checksum : b3409c8 - correct Events : 370940 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 0 Array State : AAAAAAAAAA.AA ('A' == active, '.' 
== missing, 'R' == replacing) /dev/sdc5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 8dfdc601:e01f8a98:9a8e78f1:a7951260 Update Time : Fri Jan 17 17:14:06 2020 Checksum : 2877dd7b - correct Events : 370940 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 1 Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdd5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : f98bc050:a4b46deb:c3168fa0:08d90061 Update Time : Fri Jan 17 17:14:06 2020 Checksum : 7a59cc36 - correct Events : 370940 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 2 Array State : AAAAAAAAAA.AA ('A' == active, '.' 
== missing, 'R' == replacing) /dev/sde5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 1e2742b7:d1847218:816c7135:cdf30c07 Update Time : Fri Jan 17 17:14:06 2020 Checksum : 48cea80b - correct Events : 370940 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 3 Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdf5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : ce60c47e:14994160:da4d1482:fd7901f2 Update Time : Fri Jan 17 17:14:06 2020 Checksum : 8fb75d89 - correct Events : 370940 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 4 Array State : AAAAAAAAAA.AA ('A' == active, '.' 
== missing, 'R' == replacing) /dev/sdk5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 706c5124:d647d300:733fb961:e5cd8127 Update Time : Fri Jan 17 17:14:06 2020 Checksum : eafb5d6d - correct Events : 370940 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 5 Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdl5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 6993b9eb:8ad7c80f:dc17268f:a8efa73d Update Time : Fri Jan 17 17:14:06 2020 Checksum : 4ec0e4a - correct Events : 370940 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 7 Array State : AAAAAAAAAA.AA ('A' == active, '.' 
== missing, 'R' == replacing) /dev/sdm5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 2f1247d1:a536d2ad:ba2eb47f:a7eaf237 Update Time : Fri Jan 17 17:14:06 2020 Checksum : 735bfe09 - correct Events : 370940 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 8 Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdn5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 1b4ab27d:bb7488fa:a6cc1f75:d21d1a83 Update Time : Fri Jan 17 17:14:06 2020 Checksum : ad104523 - correct Events : 370940 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 9 Array State : AAAAAAAAAA.AA ('A' == active, '.' 
== missing, 'R' == replacing) /dev/sdo5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 73610f83:fb3cf895:c004147e:b4de2bfe Update Time : Fri Jan 17 17:14:06 2020 Checksum : d1e31db9 - correct Events : 370940 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 11 Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdp5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : a64f01c2:76c56102:38ad7c4e:7bce88d1 Update Time : Fri Jan 17 17:14:06 2020 Checksum : 21042cad - correct Events : 370940 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 12 Array State : AAAAAAAAAA.AA ('A' == active, '.' 
== missing, 'R' == replacing) /dev/sdq5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 5cc6456d:bfc950bf:1baf6fef:aabec947 Update Time : Fri Jan 17 17:14:06 2020 Checksum : a06b99b9 - correct Events : 370940 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 6 Array State : AAAAAAAAAA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdr5: Magic : a92b4efc Version : 1.2 Feature Map : 0x2 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Recovery Offset : 79355560 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : bfc0d160:b7147b64:ca088295:9c6ab3b2 Update Time : Fri Jan 17 07:11:38 2020 Checksum : 4ed7b628 - correct Events : 370924 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 10 Array State : AAAAAAAAAAAAA ('A' == active, '.' 
== missing, 'R' == replacing) # dmesg | fgrep "md:" [ 0.895403] md: linear personality registered for level -1 [ 0.895405] md: raid0 personality registered for level 0 [ 0.895406] md: raid1 personality registered for level 1 [ 0.895407] md: raid10 personality registered for level 10 [ 0.895776] md: raid6 personality registered for level 6 [ 0.895778] md: raid5 personality registered for level 5 [ 0.895779] md: raid4 personality registered for level 4 [ 0.895780] md: raidF1 personality registered for level 45 [ 9.002596] md: Autodetecting RAID arrays. [ 9.007616] md: invalid raid superblock magic on sda1 [ 9.012674] md: sda1 does not have a valid v0.90 superblock, not importing! [ 9.075202] md: invalid raid superblock magic on sdb5 [ 9.080258] md: sdb5 does not have a valid v0.90 superblock, not importing! [ 9.131736] md: invalid raid superblock magic on sdc5 [ 9.136787] md: sdc5 does not have a valid v0.90 superblock, not importing! [ 9.184688] md: invalid raid superblock magic on sdd5 [ 9.189741] md: sdd5 does not have a valid v0.90 superblock, not importing! [ 9.254542] md: invalid raid superblock magic on sde5 [ 9.259597] md: sde5 does not have a valid v0.90 superblock, not importing! [ 9.310317] md: invalid raid superblock magic on sdf5 [ 9.315372] md: sdf5 does not have a valid v0.90 superblock, not importing! [ 9.370415] md: invalid raid superblock magic on sdk5 [ 9.375468] md: sdk5 does not have a valid v0.90 superblock, not importing! [ 9.423869] md: invalid raid superblock magic on sdl5 [ 9.428919] md: sdl5 does not have a valid v0.90 superblock, not importing! [ 9.468250] md: invalid raid superblock magic on sdl6 [ 9.473300] md: sdl6 does not have a valid v0.90 superblock, not importing! [ 9.519960] md: invalid raid superblock magic on sdm5 [ 9.525015] md: sdm5 does not have a valid v0.90 superblock, not importing! [ 9.556049] md: invalid raid superblock magic on sdm6 [ 9.561101] md: sdm6 does not have a valid v0.90 superblock, not importing! 
[ 9.614718] md: invalid raid superblock magic on sdn5 [ 9.619773] md: sdn5 does not have a valid v0.90 superblock, not importing! [ 9.642163] md: invalid raid superblock magic on sdn6 [ 9.647220] md: sdn6 does not have a valid v0.90 superblock, not importing! [ 9.689354] md: invalid raid superblock magic on sdo5 [ 9.694404] md: sdo5 does not have a valid v0.90 superblock, not importing! [ 9.711917] md: invalid raid superblock magic on sdo6 [ 9.716972] md: sdo6 does not have a valid v0.90 superblock, not importing! [ 9.731387] md: invalid raid superblock magic on sdo7 [ 9.736444] md: sdo7 does not have a valid v0.90 superblock, not importing! [ 9.793088] md: invalid raid superblock magic on sdp5 [ 9.798143] md: sdp5 does not have a valid v0.90 superblock, not importing! [ 9.845631] md: invalid raid superblock magic on sdq5 [ 9.850684] md: sdq5 does not have a valid v0.90 superblock, not importing! [ 9.895380] md: invalid raid superblock magic on sdr5 [ 9.900435] md: sdr5 does not have a valid v0.90 superblock, not importing! [ 9.914093] md: invalid raid superblock magic on sdr6 [ 9.919143] md: sdr6 does not have a valid v0.90 superblock, not importing! [ 9.938110] md: invalid raid superblock magic on sdr7 [ 9.943161] md: sdr7 does not have a valid v0.90 superblock, not importing! [ 9.943162] md: Scanned 47 and added 26 devices. [ 9.943163] md: autorun ... [ 9.943163] md: considering sdb1 ... [ 9.943165] md: adding sdb1 ... [ 9.943166] md: sdb2 has different UUID to sdb1 [ 9.943168] md: adding sdc1 ... [ 9.943169] md: sdc2 has different UUID to sdb1 [ 9.943170] md: adding sdd1 ... [ 9.943171] md: sdd2 has different UUID to sdb1 [ 9.943172] md: adding sde1 ... [ 9.943173] md: sde2 has different UUID to sdb1 [ 9.943174] md: adding sdf1 ... [ 9.943175] md: sdf2 has different UUID to sdb1 [ 9.943176] md: adding sdk1 ... [ 9.943177] md: sdk2 has different UUID to sdb1 [ 9.943178] md: adding sdl1 ... 
[ 9.943179] md: sdl2 has different UUID to sdb1 [ 9.943181] md: adding sdm1 ... [ 9.943182] md: sdm2 has different UUID to sdb1 [ 9.943183] md: adding sdn1 ... [ 9.943184] md: sdn2 has different UUID to sdb1 [ 9.943185] md: adding sdo1 ... [ 9.943186] md: sdo2 has different UUID to sdb1 [ 9.943187] md: adding sdp1 ... [ 9.943188] md: sdp2 has different UUID to sdb1 [ 9.943189] md: adding sdq1 ... [ 9.943190] md: sdq2 has different UUID to sdb1 [ 9.943191] md: adding sdr1 ... [ 9.943192] md: sdr2 has different UUID to sdb1 [ 9.943203] md: kicking non-fresh sdr1 from candidates rdevs! [ 9.943203] md: export_rdev(sdr1) [ 9.943205] md: kicking non-fresh sdq1 from candidates rdevs! [ 9.943205] md: export_rdev(sdq1) [ 9.943207] md: kicking non-fresh sde1 from candidates rdevs! [ 9.943207] md: export_rdev(sde1) [ 9.943208] md: created md0 [ 9.943209] md: bind<sdp1> [ 9.943214] md: bind<sdo1> [ 9.943220] md: bind<sdn1> [ 9.943223] md: bind<sdm1> [ 9.943226] md: bind<sdl1> [ 9.943229] md: bind<sdk1> [ 9.943232] md: bind<sdf1> [ 9.943235] md: bind<sdd1> [ 9.943238] md: bind<sdc1> [ 9.943241] md: bind<sdb1> [ 9.943244] md: running: <sdb1><sdc1><sdd1><sdf1><sdk1><sdl1><sdm1><sdn1><sdo1><sdp1> [ 9.981355] md: considering sdb2 ... [ 9.981356] md: adding sdb2 ... [ 9.981357] md: adding sdc2 ... [ 9.981358] md: adding sdd2 ... [ 9.981360] md: adding sde2 ... [ 9.981361] md: adding sdf2 ... [ 9.981362] md: adding sdk2 ... [ 9.981363] md: adding sdl2 ... [ 9.981364] md: adding sdm2 ... [ 9.981365] md: adding sdn2 ... [ 9.981367] md: adding sdo2 ... [ 9.981368] md: adding sdp2 ... [ 9.981369] md: md0: current auto_remap = 0 [ 9.981369] md: adding sdq2 ... [ 9.981370] md: adding sdr2 ... 
[ 9.981372] md: resync of RAID array md0 [ 9.981504] md: created md1 [ 9.981505] md: bind<sdr2> [ 9.981511] md: bind<sdq2> [ 9.981515] md: bind<sdp2> [ 9.981520] md: bind<sdo2> [ 9.981525] md: bind<sdn2> [ 9.981530] md: bind<sdm2> [ 9.981535] md: bind<sdl2> [ 9.981540] md: bind<sdk2> [ 9.981544] md: bind<sdf2> [ 9.981549] md: bind<sde2> [ 9.981554] md: bind<sdd2> [ 9.981559] md: bind<sdc2> [ 9.981565] md: bind<sdb2> [ 9.981574] md: running: <sdb2><sdc2><sdd2><sde2><sdf2><sdk2><sdl2><sdm2><sdn2><sdo2><sdp2><sdq2><sdr2> [ 9.989470] md: minimum _guaranteed_ speed: 1000 KB/sec/disk. [ 9.989470] md: using maximum available idle IO bandwidth (but not more than 200000 KB/sec) for resync. [ 9.989474] md: using 128k window, over a total of 2490176k. [ 10.052110] md: ... autorun DONE. [ 10.052124] md: md1: current auto_remap = 0 [ 10.052126] md: resync of RAID array md1 [ 10.060221] md: minimum _guaranteed_ speed: 1000 KB/sec/disk. [ 10.060222] md: using maximum available idle IO bandwidth (but not more than 200000 KB/sec) for resync. [ 10.060224] md: using 128k window, over a total of 2097088k. [ 29.602277] md: bind<sdr7> [ 29.602651] md: bind<sdo7> [ 29.679014] md: md2 stopped. [ 29.803959] md: bind<sdc5> [ 29.804024] md: bind<sdd5> [ 29.804084] md: bind<sde5> [ 29.804145] md: bind<sdf5> [ 29.804218] md: bind<sdk5> [ 29.804286] md: bind<sdq5> [ 29.828033] md: bind<sdl5> [ 29.828589] md: bind<sdm5> [ 29.828942] md: bind<sdn5> [ 29.829120] md: bind<sdr5> [ 29.854008] md: bind<sdo5> [ 29.855384] md: bind<sdp5> [ 29.857913] md: bind<sdb5> [ 29.857922] md: kicking non-fresh sdr5 from array! 
[ 29.857925] md: unbind<sdr5> [ 29.865755] md: export_rdev(sdr5) [ 29.993600] md: bind<sdm6> [ 29.993748] md: bind<sdn6> [ 29.993917] md: bind<sdr6> [ 29.994084] md: bind<sdo6> [ 29.994230] md: bind<sdl6> [ 30.034680] md: md4: set sdl6 to auto_remap [1] [ 30.034681] md: md4: set sdo6 to auto_remap [1] [ 30.034681] md: md4: set sdr6 to auto_remap [1] [ 30.034682] md: md4: set sdn6 to auto_remap [1] [ 30.034682] md: md4: set sdm6 to auto_remap [1] [ 30.034684] md: delaying recovery of md4 until md1 has finished (they share one or more physical units) [ 30.222237] md: md2: set sdb5 to auto_remap [0] [ 30.222238] md: md2: set sdp5 to auto_remap [0] [ 30.222238] md: md2: set sdo5 to auto_remap [0] [ 30.222239] md: md2: set sdn5 to auto_remap [0] [ 30.222239] md: md2: set sdm5 to auto_remap [0] [ 30.222240] md: md2: set sdl5 to auto_remap [0] [ 30.222241] md: md2: set sdq5 to auto_remap [0] [ 30.222241] md: md2: set sdk5 to auto_remap [0] [ 30.222242] md: md2: set sdf5 to auto_remap [0] [ 30.222242] md: md2: set sde5 to auto_remap [0] [ 30.222243] md: md2: set sdd5 to auto_remap [0] [ 30.222244] md: md2: set sdc5 to auto_remap [0] [ 30.222244] md: md2 stopped. [ 30.222246] md: unbind<sdb5> [ 30.228152] md: export_rdev(sdb5) [ 30.228157] md: unbind<sdp5> [ 30.231173] md: export_rdev(sdp5) [ 30.231178] md: unbind<sdo5> [ 30.236190] md: export_rdev(sdo5) [ 30.236205] md: unbind<sdn5> [ 30.239180] md: export_rdev(sdn5) [ 30.239183] md: unbind<sdm5> [ 30.244169] md: export_rdev(sdm5) [ 30.244172] md: unbind<sdl5> [ 30.247189] md: export_rdev(sdl5) [ 30.247192] md: unbind<sdq5> [ 30.252207] md: export_rdev(sdq5) [ 30.252211] md: unbind<sdk5> [ 30.255196] md: export_rdev(sdk5) [ 30.255200] md: unbind<sdf5> [ 30.259408] md: export_rdev(sdf5) [ 30.259411] md: unbind<sde5> [ 30.271235] md: export_rdev(sde5) [ 30.271242] md: unbind<sdd5> [ 30.280228] md: export_rdev(sdd5) [ 30.280233] md: unbind<sdc5> [ 30.288234] md: export_rdev(sdc5) [ 30.680068] md: md2 stopped. 
[ 30.731994] md: bind<sdc5> [ 30.732110] md: bind<sdd5> [ 30.732258] md: bind<sde5> [ 30.732340] md: bind<sdf5> [ 30.732481] md: bind<sdk5> [ 30.732606] md: bind<sdq5> [ 30.737432] md: bind<sdl5> [ 30.748124] md: bind<sdm5> [ 30.748468] md: bind<sdn5> [ 30.748826] md: bind<sdr5> [ 30.749254] md: bind<sdo5> [ 30.763073] md: bind<sdp5> [ 30.776215] md: bind<sdb5> [ 30.776229] md: kicking non-fresh sdr5 from array! [ 30.776231] md: unbind<sdr5> [ 30.780828] md: export_rdev(sdr5) [ 60.552383] md: md1: resync done. [ 60.569174] md: md1: current auto_remap = 0 [ 60.569202] md: delaying recovery of md4 until md0 has finished (they share one or more physical units) [ 109.601864] md: md0: resync done. [ 109.615133] md: md0: current auto_remap = 0 [ 109.615149] md: md4: flushing inflight I/O [ 109.618280] md: recovery of RAID array md4 [ 109.618282] md: minimum _guaranteed_ speed: 600000 KB/sec/disk. [ 109.618283] md: using maximum available idle IO bandwidth (but not more than 800000 KB/sec) for recovery. [ 109.618296] md: using 128k window, over a total of 2930246912k. [ 109.618297] md: resuming recovery of md4 from checkpoint. [17557.409842] md: md4: recovery done. [17557.601751] md: md4: set sdl6 to auto_remap [0] [17557.601753] md: md4: set sdo6 to auto_remap [0] [17557.601754] md: md4: set sdr6 to auto_remap [0] [17557.601754] md: md4: set sdn6 to auto_remap [0] [17557.601755] md: md4: set sdm6 to auto_remap [0]
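A quick sanity check on the `mdadm --detail` figures above: RAID 5 gives up one member's worth of space to parity, so the usable size should be (Raid Devices - 1) × Used Dev Size. Below is a minimal Python sketch of that arithmetic. The function name is made up for illustration, and the numbers are copied from the output above, where `mdadm --detail` reports both sizes in KiB.

```python
def raid5_usable_kib(raid_devices: int, used_dev_size_kib: int) -> int:
    """Usable RAID 5 capacity in KiB: one member's worth of space holds parity."""
    return (raid_devices - 1) * used_dev_size_kib


# Values from the md2 output: Raid Devices = 13, Used Dev Size = 2925435456 KiB.
array_kib = raid5_usable_kib(13, 2925435456)
print(array_kib)         # compare with "Array Size" in mdadm --detail
print(array_kib * 1024)  # same figure in bytes
```

The result should line up with the `Array Size` field reported for md2, so the array geometry itself looks consistent even with one member removed.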

-

Well, a power trip just happened. And obviously I freaked out lol. Hopefully nothing damaging happened. But a drive in md2 doesn't show [E] anymore.

    # cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
    md2 : active raid5 sdb5[0] sdp5[10] sdo5[11] sdn5[9] sdm5[8] sdl5[7] sdq5[13] sdk5[5] sdf5[4] sde5[3] sdd5[2] sdc5[1]
          35105225472 blocks super 1.2 level 5, 64k chunk, algorithm 2 [13/12] [UUUUUUUUUU_UU]

    md4 : active raid5 sdl6[0] sdo6[3] sdr6[5] sdn6[2] sdm6[1]
          11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/5] [UUUUU]

    md5 : active raid1 sdo7[0] sdr7[2]
          3905898432 blocks super 1.2 [2/2] [UU]

    md1 : active raid1 sdb2[0] sdc2[1] sdd2[2] sde2[3] sdf2[4] sdk2[5] sdl2[6] sdm2[7] sdn2[9] sdo2[8] sdp2[10] sdq2[11] sdr2[12]
          2097088 blocks [24/13] [UUUUUUUUUUUUU___________]

    md0 : active raid1 sdb1[1] sdc1[2] sdd1[3] sdf1[5] sdk1[6] sdl1[7] sdm1[8] sdn1[10] sdo1[0] sdp1[4]
          2490176 blocks [12/10] [UUUUUUUUU_U_]

    unused devices: <none>

Yup, sdr5 is still missing.
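The `[13/12] [UUUUUUUUUU_UU]` part of the mdstat output is what shows the missing member: 13 slots, 12 active, with `_` marking the empty slot. Here is a rough Python sketch for pulling that out of `/proc/mdstat` text. The function and variable names are invented for illustration, the sample is trimmed from the output above, and the `E` flag is only included in the pattern because it shows up in DSM's mdstat output elsewhere in this thread.

```python
import re

# Matches e.g. "md2 : active raid5 ... [13/12] [UUUUUUUUUU_UU]"
STATUS_RE = re.compile(
    r"(md\d+) : active \w+ .*?\[(\d+)/(\d+)\] \[([U_E]+)\]", re.S
)


def array_health(mdstat_text):
    """Map array name -> (total slots, active slots, list of missing slot indexes)."""
    health = {}
    for name, total, active, flags in STATUS_RE.findall(mdstat_text):
        missing = [i for i, flag in enumerate(flags) if flag == "_"]
        health[name] = (int(total), int(active), missing)
    return health


SAMPLE = """\
md2 : active raid5 sdb5[0] sdp5[10] sdo5[11] sdn5[9] sdm5[8] sdl5[7] sdq5[13] sdk5[5] sdf5[4] sde5[3] sdd5[2] sdc5[1]
      35105225472 blocks super 1.2 level 5, 64k chunk, algorithm 2 [13/12] [UUUUUUUUUU_UU]
md4 : active raid5 sdl6[0] sdo6[3] sdr6[5] sdn6[2] sdm6[1]
      11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/5] [UUUUU]
"""

for name, (total, active, missing) in array_health(SAMPLE).items():
    print(name, f"{active}/{total}", "missing slots:", missing)
```

For md2 the missing slot index should match the `removed` RaidDevice row in `mdadm --detail`.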

-

I foolishly thought that if I deactivated a drive, unplugged it and replugged it (hotswap-capable), DSM would recognize it, reactivate the 10TB and rebuild the array. That's obviously not the case. Current status: md2 has finished resync.

    md2 : active raid5 sdb5[0] sdp5[10](E) sdn5[11] sdo5[9] sdm5[8] sdl5[7] sdq5[13] sdk5[5] sdf5[4] sde5[3] sdd5[2] sdc5[1]
          35105225472 blocks super 1.2 level 5, 64k chunk, algorithm 2 [13/12] [UUUUUUUUUU_UE]

This means that sdp5 has a system partition error, and one sd?5 partition is missing, right? md5 is 95% complete.

Wow, I didn't know just booting to Ubuntu had such a large effect (drives got auto-remapped). Or did it get remapped because of a command like mdadm --assemble --scan that I ran?

That's.... awesome. I never would've come across that article on my own.

-

Aaah, I see now what you mean. My mistake, sorry!

    root@homelab:~# cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
    md2 : active raid5 sdr5[14] sdb5[0] sdp5[10] sdn5[11] sdo5[9] sdm5[8] sdl5[7] sdq5[13] sdk5[5] sdf5[4] sde5[3] sdd5[2] sdc5[1]
          35105225472 blocks super 1.2 level 5, 64k chunk, algorithm 2 [13/12] [UUUUUUUUUU_UU]
          [>....................] recovery = 0.0% (2322676/2925435456) finish=739.2min speed=65905K/sec

    md4 : active raid5 sdr6[5] sdl6[0] sdn6[3] sdo6[2] sdm6[1]
          11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/4] [UUU_U]
          resync=DELAYED

    md5 : active raid1 sdr7[2] sdn7[0]
          3905898432 blocks super 1.2 [2/1] [U_]
          resync=DELAYED

    md1 : active raid1 sdr2[12] sdq2[11] sdp2[10] sdo2[9] sdn2[8] sdm2[7] sdl2[6] sdk2[5] sdf2[4] sde2[3] sdd2[2] sdc2[1] sdb2[0]
          2097088 blocks [24/13] [UUUUUUUUUUUUU___________]

    md0 : active raid1 sdb1[1] sdc1[2] sdd1[3] sdf1[5] sdk1[6] sdl1[7] sdm1[8] sdn1[0] sdo1[10] sdp1[4]
          2490176 blocks [12/10] [UUUUUUUUU_U_]

    unused devices: <none>

md2 looks... promising, am I right?
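The `finish=739.2min` estimate in the recovery line can be reproduced from the other numbers on that line: blocks left to rebuild divided by the current speed. A small Python sketch follows, assuming the block counts are in KiB and the speed in KiB per second, which is how mdstat appears to report them. The function name is hypothetical, and the kernel's own estimate moves around as the speed changes.

```python
def recovery_eta_minutes(done_kib: int, total_kib: int, speed_kib_per_s: int) -> float:
    """Estimated minutes left for an md recovery at the current speed."""
    remaining_kib = total_kib - done_kib
    return remaining_kib / speed_kib_per_s / 60


# Numbers from: recovery = 0.0% (2322676/2925435456) finish=739.2min speed=65905K/sec
eta = recovery_eta_minutes(2322676, 2925435456, 65905)
print(f"{eta:.1f} min (~{eta / 60:.1f} h)")
```

At that speed the md2 rebuild works out to a bit over 12 hours, and md4 and md5 stay at `resync=DELAYED` until it finishes because they share physical disks with md2.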

-

OK, I just remembered: I replaced an old 3TB drive because it was showing an increasing number of bad sectors. I replaced the drive, resynced the array, and everything went well for at least a few months. I believe the old data is still on it. Sorry about that, it's 6am right now and I only just remembered this, but nothing happened during the few months after the replacement. Should I plug it in and check mdstat?

-

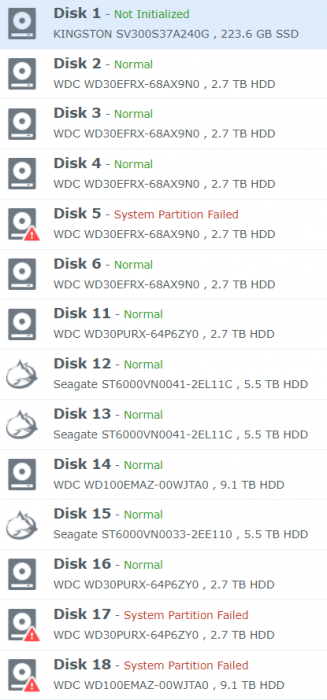

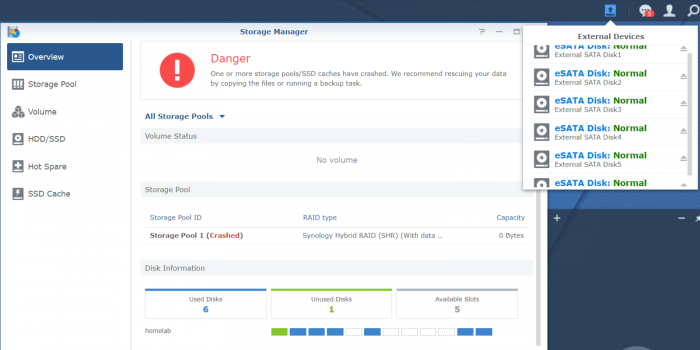

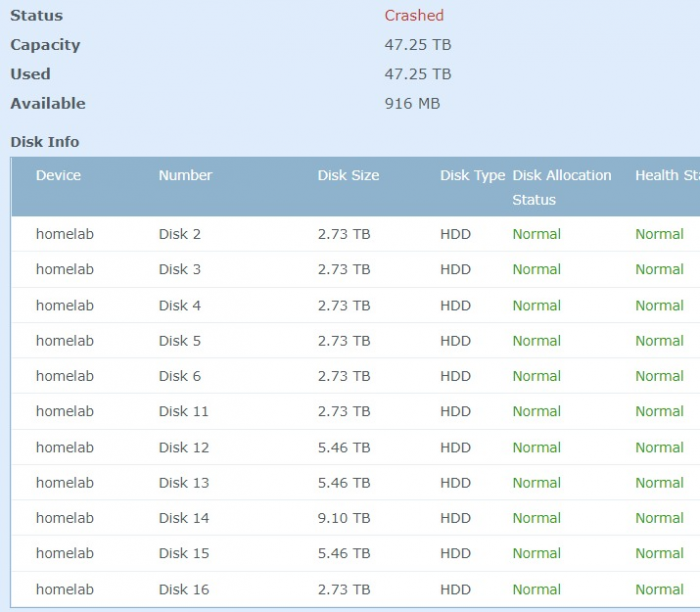

It was originally 13 drives + cache. The cache is not initialized now in DSM for some reason. And also I stupidly deactivated one 9.10TB (10TB WD) drive.

    root@homelab:~# fdisk -l
    Disk /dev/sda: 223.6 GiB, 240057409536 bytes, 468862128 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0x696935dc

    Device     Boot Start       End   Sectors   Size Id Type
    /dev/sda1        2048 468857024 468854977 223.6G fd Linux raid autodetect

    Disk /dev/sdb: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 43C8C355-AE0A-42DC-97CC-508B0FB4EF37

    Device       Start        End    Sectors  Size Type
    /dev/sdb1     2048    4982527    4980480  2.4G Linux RAID
    /dev/sdb2  4982528    9176831    4194304    2G Linux RAID
    /dev/sdb5  9453280 5860326239 5850872960  2.7T Linux RAID

    Disk /dev/sdc: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 0600DFFC-A576-4242-976A-3ACAE5284C4C

    Device       Start        End    Sectors  Size Type
    /dev/sdc1     2048    4982527    4980480  2.4G Linux RAID
    /dev/sdc2  4982528    9176831    4194304    2G Linux RAID
    /dev/sdc5  9453280 5860326239 5850872960  2.7T Linux RAID

    Disk /dev/sdd: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 58B43CB1-1F03-41D3-A734-014F59DE34E8

    Device       Start        End    Sectors  Size Type
    /dev/sdd1     2048    4982527    4980480  2.4G Linux RAID
    /dev/sdd2  4982528    9176831    4194304    2G Linux RAID
    /dev/sdd5  9453280 5860326239 5850872960  2.7T Linux RAID

    Disk /dev/sde: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: E5FD9CDA-FE14-4F95-B776-B176E7130DEA

    Device       Start        End    Sectors  Size Type
    /dev/sde1     2048    4982527    4980480  2.4G Linux RAID
    /dev/sde2  4982528    9176831    4194304    2G Linux RAID
    /dev/sde5  9453280 5860326239 5850872960  2.7T Linux RAID

    Disk /dev/sdf: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 48A13430-10A1-4050-BA78-723DB398CE87

    Device       Start        End    Sectors  Size Type
    /dev/sdf1     2048    4982527    4980480  2.4G Linux RAID
    /dev/sdf2  4982528    9176831    4194304    2G Linux RAID
    /dev/sdf5  9453280 5860326239 5850872960  2.7T Linux RAID

    GPT PMBR size mismatch (102399 != 30277631) will be corrected by w(rite).
    Disk /dev/synoboot: 14.4 GiB, 15502147584 bytes, 30277632 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: gpt
    Disk identifier: B3CAAA25-3CA1-48FA-A5B6-105ADDE4793F

    Device         Start    End Sectors Size Type
    /dev/synoboot1  2048  32767   30720  15M EFI System
    /dev/synoboot2 32768  94207   61440  30M Linux filesystem
    /dev/synoboot3 94208 102366    8159   4M BIOS boot

    Disk /dev/sdk: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 1D5B8B09-8D4A-4729-B089-442620D3D507

    Device       Start        End    Sectors  Size Type
    /dev/sdk1     2048    4982527    4980480  2.4G Linux RAID
    /dev/sdk2  4982528    9176831    4194304    2G Linux RAID
    /dev/sdk5  9453280 5860326239 5850872960  2.7T Linux RAID

    Disk /dev/sdl: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 849E02B2-2734-496B-AB52-A572DF8FE63F

    Device          Start         End    Sectors  Size Type
    /dev/sdl1        2048     4982527    4980480  2.4G Linux RAID
    /dev/sdl2     4982528     9176831    4194304    2G Linux RAID
    /dev/sdl5     9453280  5860326239 5850872960  2.7T Linux RAID
    /dev/sdl6  5860342336 11720838239 5860495904  2.7T Linux RAID

    Disk /dev/sdm: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 423D33B4-90CE-4E34-9C40-6E06D1F50C0C

    Device          Start         End    Sectors  Size Type
    /dev/sdm1        2048     4982527    4980480  2.4G Linux RAID
    /dev/sdm2     4982528     9176831    4194304    2G Linux RAID
    /dev/sdm5     9453280  5860326239 5850872960  2.7T Linux RAID
    /dev/sdm6  5860342336 11720838239 5860495904  2.7T Linux RAID

    Disk /dev/sdn: 9.1 TiB, 10000831348736 bytes, 19532873728 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 1713E819-3B9A-4CE3-94E8-5A3DBF1D5983

    Device           Start         End    Sectors  Size Type
    /dev/sdn1         2048     4982527    4980480  2.4G Linux RAID
    /dev/sdn2      4982528     9176831    4194304    2G Linux RAID
    /dev/sdn5      9453280  5860326239 5850872960  2.7T Linux RAID
    /dev/sdn6   5860342336 11720838239 5860495904  2.7T Linux RAID
    /dev/sdn7  11720854336 19532653311 7811798976  3.7T Linux RAID

    Disk /dev/sdo: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 09CB7303-C2E7-46F8-ADA0-D4853F25CB00

    Device          Start         End    Sectors  Size Type
    /dev/sdo1        2048     4982527    4980480  2.4G Linux RAID
    /dev/sdo2     4982528     9176831    4194304    2G Linux RAID
    /dev/sdo5     9453280  5860326239 5850872960  2.7T Linux RAID
    /dev/sdo6  5860342336 11720838239 5860495904  2.7T Linux RAID

    Disk /dev/sdp: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: A3E39D34-4297-4BE9-B4FD-3A21EFC38071

    Device       Start        End    Sectors  Size Type
    /dev/sdp1     2048    4982527    4980480  2.4G Linux RAID
    /dev/sdp2  4982528    9176831    4194304    2G Linux RAID
    /dev/sdp5  9453280 5860326239 5850872960  2.7T Linux RAID

    Disk /dev/sdq: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 54D81C51-AB85-4DE2-AA16-263DF1C6BB8A

    Device       Start        End    Sectors  Size Type
    /dev/sdq1     2048    4982527    4980480  2.4G Linux RAID
    /dev/sdq2  4982528    9176831    4194304    2G Linux RAID
    /dev/sdq5  9453280 5860326239 5850872960  2.7T Linux RAID

    Disk /dev/sdr: 9.1 TiB, 10000831348736 bytes, 19532873728 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: EA537505-55B5-4C27-A7CA-C7BBB7E7B56F

    Device           Start         End    Sectors  Size Type
    /dev/sdr1         2048     4982527    4980480  2.4G Linux RAID
    /dev/sdr2      4982528     9176831    4194304    2G Linux RAID
    /dev/sdr5      9453280  5860326239 5850872960  2.7T Linux RAID
    /dev/sdr6   5860342336 11720838239 5860495904  2.7T Linux RAID
    /dev/sdr7  11720854336 19532653311 7811798976  3.7T Linux RAID

    Disk /dev/md0: 2.4 GiB, 2549940224 bytes, 4980352 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes

    Disk /dev/md1: 2 GiB, 2147418112 bytes, 4194176 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes

    Disk /dev/zram0: 2.3 GiB, 2488270848 bytes, 607488 sectors
    Units: sectors of 1 * 4096 = 4096 bytes
    Sector size (logical/physical): 4096 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes

    Disk /dev/zram1: 2.3 GiB, 2488270848 bytes, 607488 sectors
    Units: sectors of 1 * 4096 = 4096 bytes
    Sector size (logical/physical): 4096 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes

    Disk /dev/zram2: 2.3 GiB, 2488270848 bytes, 607488 sectors
    Units: sectors of 1 * 4096 = 4096 bytes
    Sector size (logical/physical): 4096 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes

    Disk /dev/zram3: 2.3 GiB, 2488270848 bytes, 607488 sectors
    Units: sectors of 1 * 4096 = 4096 bytes
    Sector size (logical/physical): 4096 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes

    Disk /dev/md5: 3.7 TiB, 3999639994368 bytes, 7811796864 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes

    Disk /dev/md4: 10.9 TiB, 12002291351552 bytes, 23441975296 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 65536 bytes / 262144 bytes

    Disk /dev/md2: 32.7 TiB, 35947750883328 bytes, 70210450944 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 65536 bytes / 786432 bytes

-

root@homelab:~# mdadm --assemble --run /dev/md2 /dev/sd[bcdefklmnopq]5
mdadm: /dev/md2 has been started with 12 drives (out of 13).
root@homelab:~# cat /proc/mdstat
Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
md2 : active raid5 sdb5[0] sdp5[10] sdn5[11] sdo5[9] sdm5[8] sdl5[7] sdq5[13] sdk5[5] sdf5[4] sde5[3] sdd5[2] sdc5[1]
      35105225472 blocks super 1.2 level 5, 64k chunk, algorithm 2 [13/12] [UUUUUUUUUU_UU]
md4 : active raid5 sdl6[0] sdn6[3] sdo6[2] sdm6[1]
      11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/4] [UUU_U]
md5 : active raid1 sdn7[0]
      3905898432 blocks super 1.2 [2/1] [U_]
md1 : active raid1 sdr2[12] sdq2[11] sdp2[10] sdo2[9] sdn2[8] sdm2[7] sdl2[6] sdk2[5] sdf2[4] sde2[3] sdd2[2] sdc2[1] sdb2[0]
      2097088 blocks [24/13] [UUUUUUUUUUUUU___________]
md0 : active raid1 sdb1[1] sdc1[2] sdd1[3] sdf1[5] sdk1[6] sdl1[7] sdm1[8] sdn1[0] sdo1[10] sdp1[4]
      2490176 blocks [12/10] [UUUUUUUUU_U_]
unused devices: <none>

I think it's a (partial) success... maybe? Thanks for the lengthy layman explanation, I honestly appreciate that!!! I was wondering about the event ID thing, I thought it corresponds to some system log somewhere.
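The `[13/12] [UUUUUUUUUU_UU]` field in the mdstat output above can be read mechanically: each `U` is a member that is up and each `_` is an empty slot, counted from zero. Here is a minimal sketch of that reading. The function name `missing_slots` is made up for illustration and the sample text is the md4 entry from the output above; it is not something DSM or mdadm provides.

```shell
#!/bin/sh
# missing_slots (hypothetical helper): read /proc/mdstat-style text on stdin
# and, for the array named in $1, print the zero-based slot numbers that the
# status map shows as "_" (no member present).
missing_slots() {
    awk -v md="$1" '
        # Header line of the array we care about, e.g. "md4 : active raid5 ..."
        $1 == md && $2 == ":" { in_array = 1; next }
        # The next line carrying a [UU_U]-style map belongs to that array.
        in_array && match($0, /\[[U_]+\]/) {
            map = substr($0, RSTART + 1, RLENGTH - 2)
            out = ""
            for (i = 1; i <= length(map); i++)
                if (substr(map, i, 1) == "_")
                    out = out (out == "" ? "" : " ") (i - 1)
            print out
            exit
        }
    '
}

# Sample: the md4 entry as posted above.
sample='md4 : active raid5 sdl6[0] sdn6[3] sdo6[2] sdm6[1]
      11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/4] [UUU_U]'

printf '%s\n' "$sample" | missing_slots md4   # prints: 3
```

On a live system the same function could be fed with `missing_slots md2 < /proc/mdstat`. It only reads text, so it changes nothing on the array.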

-

Here's the output:

root@homelab:~# mdadm --assemble /dev/md2 --uuid 43699871:217306be:dc16f5e8:dcbe1b0d
mdadm: ignoring /dev/sdc5 as it reports /dev/sdq5 as failed
mdadm: ignoring /dev/sdd5 as it reports /dev/sdq5 as failed
mdadm: ignoring /dev/sde5 as it reports /dev/sdq5 as failed
mdadm: ignoring /dev/sdf5 as it reports /dev/sdq5 as failed
mdadm: ignoring /dev/sdk5 as it reports /dev/sdq5 as failed
mdadm: ignoring /dev/sdl5 as it reports /dev/sdq5 as failed
mdadm: ignoring /dev/sdm5 as it reports /dev/sdq5 as failed
mdadm: ignoring /dev/sdo5 as it reports /dev/sdq5 as failed
mdadm: ignoring /dev/sdn5 as it reports /dev/sdq5 as failed
mdadm: ignoring /dev/sdp5 as it reports /dev/sdq5 as failed
mdadm: /dev/md2 assembled from 2 drives - not enough to start the array.
root@homelab:~# cat /proc/mdstat
Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
md4 : active raid5 sdl6[0] sdn6[3] sdo6[2] sdm6[1]
      11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/4] [UUU_U]
md5 : active raid1 sdn7[0]
      3905898432 blocks super 1.2 [2/1] [U_]
md1 : active raid1 sdr2[12] sdq2[11] sdp2[10] sdo2[9] sdn2[8] sdm2[7] sdl2[6] sdk2[5] sdf2[4] sde2[3] sdd2[2] sdc2[1] sdb2[0]
      2097088 blocks [24/13] [UUUUUUUUUUUUU___________]
md0 : active raid1 sdb1[1] sdc1[2] sdd1[3] sdf1[5] sdk1[6] sdl1[7] sdm1[8] sdn1[0] sdo1[10] sdp1[4]
      2490176 blocks [12/10] [UUUUUUUUU_U_]
unused devices: <none>

🤔
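The wall of "ignoring ... as it reports ... as failed" lines above boils down to one question: which member do the others say has failed, and how many of them say so? A rough sketch for tallying that from saved mdadm output follows. `reported_failed` is a made-up name, and the message format is assumed to be exactly the one shown in this post.

```shell
#!/bin/sh
# reported_failed (hypothetical helper): read mdadm assemble messages on stdin
# and print each device that other members report as failed, followed by the
# number of members reporting it.
reported_failed() {
    awk '
        / as it reports .* as failed/ {
            # The device name is the word right after "reports".
            for (i = 1; i < NF; i++)
                if ($i == "reports") count[$(i + 1)]++
        }
        END { for (dev in count) print dev, count[dev] }
    '
}

# Three of the lines from the output above.
printf '%s\n' \
    'mdadm: ignoring /dev/sdc5 as it reports /dev/sdq5 as failed' \
    'mdadm: ignoring /dev/sdd5 as it reports /dev/sdq5 as failed' \
    'mdadm: ignoring /dev/sde5 as it reports /dev/sdq5 as failed' |
    reported_failed   # prints: /dev/sdq5 3
```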

-

mdadm --assemble --scan /dev/md2
mdadm: /dev/md2 not identified in config file.

and mdstat doesn't show md2.

# cat /proc/mdstat
Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
md4 : active raid5 sdl6[0] sdn6[3] sdo6[2] sdm6[1]
      11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/4] [UUU_U]
md5 : active raid1 sdn7[0]
      3905898432 blocks super 1.2 [2/1] [U_]
md1 : active raid1 sdr2[12] sdq2[11] sdp2[10] sdo2[9] sdn2[8] sdm2[7] sdl2[6] sdk2[5] sdf2[4] sde2[3] sdd2[2] sdc2[1] sdb2[0]
      2097088 blocks [24/13] [UUUUUUUUUUUUU___________]
md0 : active raid1 sdb1[1] sdc1[2] sdd1[3] sdf1[5] sdk1[6] sdl1[7] sdm1[8] sdn1[0] sdo1[10] sdp1[4]
      2490176 blocks [12/10] [UUUUUUUUU_U_]
unused devices: <none>

In post #15 it did say:
mdadm: /dev/md2 assembled from 12 drives - not enough to start the array.

-

It means that it's not running, right? Just trying to learn and understand at the same time 😁

root@homelab:~# mdadm --stop /dev/md2
mdadm: stopped /dev/md2
root@homelab:~# mdadm --assemble --force /dev/md2 /dev/sd[bcdefklmnopq]5
mdadm: forcing event count in /dev/sdq5(6) from 370871 upto 370918
mdadm: clearing FAULTY flag for device 11 in /dev/md2 for /dev/sdq5
mdadm: Marking array /dev/md2 as 'clean'
mdadm: /dev/md2 assembled from 12 drives - not enough to start the array.
# cat /proc/mdstat
Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]
md4 : active raid5 sdl6[0] sdn6[3] sdo6[2] sdm6[1]
      11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/4] [UUU_U]
md5 : active raid1 sdn7[0]
      3905898432 blocks super 1.2 [2/1] [U_]
md1 : active raid1 sdr2[12] sdq2[11] sdp2[10] sdo2[9] sdn2[8] sdm2[7] sdl2[6] sdk2[5] sdf2[4] sde2[3] sdd2[2] sdc2[1] sdb2[0]
      2097088 blocks [24/13] [UUUUUUUUUUUUU___________]
md0 : active raid1 sdb1[1] sdc1[2] sdd1[3] sdf1[5] sdk1[6] sdl1[7] sdm1[8] sdn1[0] sdo1[10] sdp1[4]
      2490176 blocks [12/10] [UUUUUUUUU_U_]
unused devices: <none>

If it matters, the Kingston SSD is my cache drive.

-

# cat /tmp/raid.status # cat /tmp/raid.status /dev/sdb5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : a8109f74:46bc8509:6fc3bca8:9fddb6a7 Update Time : Fri Jan 17 03:26:11 2020 Checksum : b2b47a7 - correct Events : 370917 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 0 Array State : AAAAAA.AAA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdc5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 8dfdc601:e01f8a98:9a8e78f1:a7951260 Update Time : Fri Jan 17 03:26:11 2020 Checksum : 286f1b5a - correct Events : 370917 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 1 Array State : AAAAAA.AAA.AA ('A' == active, '.' 
== missing, 'R' == replacing) /dev/sdd5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : f98bc050:a4b46deb:c3168fa0:08d90061 Update Time : Fri Jan 17 03:26:11 2020 Checksum : 7a510a15 - correct Events : 370917 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 2 Array State : AAAAAA.AAA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sde5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 1e2742b7:d1847218:816c7135:cdf30c07 Update Time : Fri Jan 17 03:26:11 2020 Checksum : 48c5e5ea - correct Events : 370917 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 3 Array State : AAAAAA.AAA.AA ('A' == active, '.' 
== missing, 'R' == replacing) /dev/sdf5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : ce60c47e:14994160:da4d1482:fd7901f2 Update Time : Fri Jan 17 03:26:11 2020 Checksum : 8fae9b68 - correct Events : 370917 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 4 Array State : AAAAAA.AAA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdk5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 706c5124:d647d300:733fb961:e5cd8127 Update Time : Fri Jan 17 03:26:11 2020 Checksum : eaf29b4c - correct Events : 370917 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 5 Array State : AAAAAA.AAA.AA ('A' == active, '.' 
== missing, 'R' == replacing) /dev/sdl5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 6993b9eb:8ad7c80f:dc17268f:a8efa73d Update Time : Fri Jan 17 03:26:11 2020 Checksum : 4e34c29 - correct Events : 370917 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 7 Array State : AAAAAA.AAA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdm5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 2f1247d1:a536d2ad:ba2eb47f:a7eaf237 Update Time : Fri Jan 17 03:26:11 2020 Checksum : 73533be8 - correct Events : 370917 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 8 Array State : AAAAAA.AAA.AA ('A' == active, '.' 
== missing, 'R' == replacing) /dev/sdn5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 73610f83:fb3cf895:c004147e:b4de2bfe Update Time : Fri Jan 17 03:26:11 2020 Checksum : d1da5b98 - correct Events : 370917 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 11 Array State : AAAAAA.AAA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdo5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 1b4ab27d:bb7488fa:a6cc1f75:d21d1a83 Update Time : Fri Jan 17 03:26:11 2020 Checksum : ad078302 - correct Events : 370917 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 9 Array State : AAAAAA.AAA.AA ('A' == active, '.' 
== missing, 'R' == replacing) /dev/sdp5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : a64f01c2:76c56102:38ad7c4e:7bce88d1 Update Time : Fri Jan 17 03:26:11 2020 Checksum : 20fb6a8c - correct Events : 370917 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 12 Array State : AAAAAA.AAA.AA ('A' == active, '.' == missing, 'R' == replacing) /dev/sdq5: Magic : a92b4efc Version : 1.2 Feature Map : 0x0 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Unused Space : before=1968 sectors, after=0 sectors State : clean Device UUID : 5cc6456d:bfc950bf:1baf6fef:aabec947 Update Time : Fri Jan 10 19:44:10 2020 Checksum : a0630221 - correct Events : 370871 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 6 Array State : AAAAAAAAAA.AA ('A' == active, '.' 
== missing, 'R' == replacing) /dev/sdr5: Magic : a92b4efc Version : 1.2 Feature Map : 0x2 Array UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Name : homelab:2 (local to host homelab) Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Raid Devices : 13 Avail Dev Size : 5850870912 (2789.91 GiB 2995.65 GB) Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Data Offset : 2048 sectors Super Offset : 8 sectors Recovery Offset : 78633768 sectors Unused Space : before=1968 sectors, after=0 sectors State : active Device UUID : d0a4607c:b970d906:02920f5c:ad5204d1 Update Time : Fri Jan 3 03:01:29 2020 Checksum : f185b28c - correct Events : 370454 Layout : left-symmetric Chunk Size : 64K Device Role : Active device 10 Array State : AAAAAAAAAAAAA ('A' == active, '.' == missing, 'R' == replacing) # mdadm --examine /dev/sd[bcdefklmnopqr]5 | egrep 'Event|/dev/sd' /dev/sdb5: Events : 370917 /dev/sdc5: Events : 370917 /dev/sdd5: Events : 370917 /dev/sde5: Events : 370917 /dev/sdf5: Events : 370917 /dev/sdk5: Events : 370917 /dev/sdl5: Events : 370917 /dev/sdm5: Events : 370917 /dev/sdn5: Events : 370917 /dev/sdo5: Events : 370917 /dev/sdp5: Events : 370917 /dev/sdq5: Events : 370871 /dev/sdr5: Events : 370454
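The event counts at the end of the output above are what mdadm compares when deciding which members are out of date: here sdq5 and sdr5 lag behind the other eleven. A small sketch that picks out the laggards and how far behind they are follows. `stale_members` is a made-up helper name, and the input is assumed to look like the `egrep 'Event|/dev/sd'` output, with one device line followed by one Events line.

```shell
#!/bin/sh
# stale_members (hypothetical helper): read filtered "mdadm --examine" output
# on stdin and print every device whose event count is below the highest one
# seen, together with the size of the gap.
stale_members() {
    awk '
        # Device header such as "/dev/sdq5:" (strip the trailing colon).
        /^\/dev\// { dev = $1; sub(/:$/, "", dev) }
        # Matching "Events : N" line for that device.
        $1 == "Events" {
            events[dev] = $3
            order[++n] = dev
            if ($3 + 0 > max) max = $3 + 0
        }
        END {
            for (i = 1; i <= n; i++) {
                d = order[i]
                if (events[d] + 0 < max) print d, max - events[d]
            }
        }
    '
}

# Sample: three of the members listed above.
printf '%s\n' \
    '/dev/sdp5:' '         Events : 370917' \
    '/dev/sdq5:' '         Events : 370871' \
    '/dev/sdr5:' '         Events : 370454' |
    stale_members
# prints:
# /dev/sdq5 46
# /dev/sdr5 463
```

A small gap like sdq5's is the kind of difference that the `--force` assemble in this thread overrode ("forcing event count in /dev/sdq5(6) from 370871 upto 370918").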

-

synoinfo.conf editing works! Not sure why it works after reboot, but meh doesn't matter 😁 3 drives have System Partition Failed now. Anyways.. md2, md4, md5, md1, md0. # ls /dev/sd* /dev/sda /dev/sdb2 /dev/sdc2 /dev/sdd2 /dev/sde2 /dev/sdf2 /dev/sdk2 /dev/sdl2 /dev/sdm1 /dev/sdn /dev/sdn6 /dev/sdo2 /dev/sdp1 /dev/sdq1 /dev/sdr1 /dev/sdr7 /dev/sda1 /dev/sdb5 /dev/sdc5 /dev/sdd5 /dev/sde5 /dev/sdf5 /dev/sdk5 /dev/sdl5 /dev/sdm2 /dev/sdn1 /dev/sdn7 /dev/sdo5 /dev/sdp2 /dev/sdq2 /dev/sdr2 /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf /dev/sdk /dev/sdl /dev/sdl6 /dev/sdm5 /dev/sdn2 /dev/sdo /dev/sdo6 /dev/sdp5 /dev/sdq5 /dev/sdr5 /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1 /dev/sdk1 /dev/sdl1 /dev/sdm /dev/sdm6 /dev/sdn5 /dev/sdo1 /dev/sdp /dev/sdq /dev/sdr /dev/sdr6 root@homelab:~# cat /proc/mdstat Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1] md2 : active raid5 sdb5[0] sdp5[10] sdn5[11] sdo5[9] sdm5[8] sdl5[7] sdk5[5] sdf5[4] sde5[3] sdd5[2] sdc5[1] 35105225472 blocks super 1.2 level 5, 64k chunk, algorithm 2 [13/11] [UUUUUU_UUU_UU] md4 : active raid5 sdl6[0] sdn6[3] sdo6[2] sdm6[1] 11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/4] [UUU_U] md5 : active raid1 sdn7[0] 3905898432 blocks super 1.2 [2/1] [U_] md1 : active raid1 sdr2[12] sdq2[11] sdp2[10] sdo2[9] sdn2[8] sdm2[7] sdl2[6] sdk2[5] sdf2[4] sde2[3] sdd2[2] sdc2[1] sdb2[0] 2097088 blocks [24/13] [UUUUUUUUUUUUU___________] md0 : active raid1 sdb1[1] sdc1[2] sdd1[3] sdf1[5] sdk1[6] sdl1[7] sdm1[8] sdn1[0] sdo1[10] sdp1[4] 2490176 blocks [12/10] [UUUUUUUUU_U_] unused devices: <none> /dev/md2: Version : 1.2 Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Used Dev Size : 2925435456 (2789.91 GiB 2995.65 GB) Raid Devices : 13 Total Devices : 11 Persistence : Superblock is persistent Update Time : Fri Jan 17 03:26:11 2020 State : clean, FAILED Active Devices : 11 Working Devices : 11 Failed Devices : 
0 Spare Devices : 0 Layout : left-symmetric Chunk Size : 64K Name : homelab:2 (local to host homelab) UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Events : 370917 Number Major Minor RaidDevice State 0 8 21 0 active sync /dev/sdb5 1 8 37 1 active sync /dev/sdc5 2 8 53 2 active sync /dev/sdd5 3 8 69 3 active sync /dev/sde5 4 8 85 4 active sync /dev/sdf5 5 8 165 5 active sync /dev/sdk5 - 0 0 6 removed 7 8 181 7 active sync /dev/sdl5 8 8 197 8 active sync /dev/sdm5 9 8 229 9 active sync /dev/sdo5 - 0 0 10 removed 11 8 213 11 active sync /dev/sdn5 10 8 245 12 active sync /dev/sdp5 /dev/md4: Version : 1.2 Creation Time : Sun Sep 22 21:55:04 2019 Raid Level : raid5 Array Size : 11720987648 (11178.00 GiB 12002.29 GB) Used Dev Size : 2930246912 (2794.50 GiB 3000.57 GB) Raid Devices : 5 Total Devices : 4 Persistence : Superblock is persistent Update Time : Fri Jan 17 03:26:11 2020 State : clean, degraded Active Devices : 4 Working Devices : 4 Failed Devices : 0 Spare Devices : 0 Layout : left-symmetric Chunk Size : 64K Name : homelab:4 (local to host homelab) UUID : 648fc239:67ee3f00:fa9d25fe:ef2f8cb0 Events : 7052 Number Major Minor RaidDevice State 0 8 182 0 active sync /dev/sdl6 1 8 198 1 active sync /dev/sdm6 2 8 230 2 active sync /dev/sdo6 - 0 0 3 removed 3 8 214 4 active sync /dev/sdn6 /dev/md5: Version : 1.2 Creation Time : Tue Sep 24 19:36:08 2019 Raid Level : raid1 Array Size : 3905898432 (3724.96 GiB 3999.64 GB) Used Dev Size : 3905898432 (3724.96 GiB 3999.64 GB) Raid Devices : 2 Total Devices : 1 Persistence : Superblock is persistent Update Time : Fri Jan 17 03:26:06 2020 State : clean, degraded Active Devices : 1 Working Devices : 1 Failed Devices : 0 Spare Devices : 0 Name : homelab:5 (local to host homelab) UUID : ae55eeff:e6a5cc66:2609f5e0:2e2ef747 Events : 223792 Number Major Minor RaidDevice State 0 8 215 0 active sync /dev/sdn7 - 0 0 1 removed /dev/md1: Version : 0.90 Creation Time : Fri Jan 17 03:25:58 2020 Raid Level : raid1 Array Size : 2097088 (2047.94 
MiB 2147.42 MB) Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB) Raid Devices : 24 Total Devices : 13 Preferred Minor : 1 Persistence : Superblock is persistent Update Time : Fri Jan 17 03:26:49 2020 State : active, degraded Active Devices : 13 Working Devices : 13 Failed Devices : 0 Spare Devices : 0 UUID : 846f27e4:bf628296:cc8c244d:4f76664d (local to host homelab) Events : 0.20 Number Major Minor RaidDevice State 0 8 18 0 active sync /dev/sdb2 1 8 34 1 active sync /dev/sdc2 2 8 50 2 active sync /dev/sdd2 3 8 66 3 active sync /dev/sde2 4 8 82 4 active sync /dev/sdf2 5 8 162 5 active sync /dev/sdk2 6 8 178 6 active sync /dev/sdl2 7 8 194 7 active sync /dev/sdm2 8 8 210 8 active sync /dev/sdn2 9 8 226 9 active sync /dev/sdo2 10 8 242 10 active sync /dev/sdp2 11 65 2 11 active sync /dev/sdq2 12 65 18 12 active sync /dev/sdr2 - 0 0 13 removed - 0 0 14 removed - 0 0 15 removed - 0 0 16 removed - 0 0 17 removed - 0 0 18 removed - 0 0 19 removed - 0 0 20 removed - 0 0 21 removed - 0 0 22 removed - 0 0 23 removed /dev/md0: Version : 0.90 Creation Time : Sun Sep 22 21:01:46 2019 Raid Level : raid1 Array Size : 2490176 (2.37 GiB 2.55 GB) Used Dev Size : 2490176 (2.37 GiB 2.55 GB) Raid Devices : 12 Total Devices : 10 Preferred Minor : 0 Persistence : Superblock is persistent Update Time : Fri Jan 17 03:42:39 2020 State : active, degraded Active Devices : 10 Working Devices : 10 Failed Devices : 0 Spare Devices : 0 UUID : f36dde6e:8c6ec8e5:3017a5a8:c86610be Events : 0.539389 Number Major Minor RaidDevice State 0 8 209 0 active sync /dev/sdn1 1 8 17 1 active sync /dev/sdb1 2 8 33 2 active sync /dev/sdc1 3 8 49 3 active sync /dev/sdd1 4 8 241 4 active sync /dev/sdp1 5 8 81 5 active sync /dev/sdf1 6 8 161 6 active sync /dev/sdk1 7 8 177 7 active sync /dev/sdl1 8 8 193 8 active sync /dev/sdm1 - 0 0 9 removed 10 8 225 10 active sync /dev/sdo1 - 0 0 11 removed
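The Array Size and Used Dev Size figures in the `mdadm --detail` output above can be cross-checked with simple arithmetic. RAID 5 spends one member's worth of space on parity, so the usable size is (members - 1) times the per-member size. A quick sketch, where `raid5_array_kib` is a name invented for this example and the shell is assumed to do 64-bit arithmetic:

```shell
#!/bin/sh
# raid5_array_kib (hypothetical helper): usable RAID 5 capacity in KiB,
# given the number of raid devices ($1) and the used size per device ($2).
raid5_array_kib() {
    echo $(( ($1 - 1) * $2 ))
}

raid5_array_kib 13 2925435456   # md2: prints 35105225472
raid5_array_kib 5 2930246912    # md4: prints 11720987648
```

Both results match the Array Size lines that mdadm reports for md2 and md4.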

-

All are true. It didn't ask to migrate again after rebooting. But this happened. Before rebooting, all disks showed Normal in Disk Allocation Status. mdstat after rebooting (md2 and md0 are different than before reboot): # cat /proc/mdstat Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1] md2 : active raid5 sdb5[0] sdl5[7] sdk5[5] sdf5[4] sde5[3] sdd5[2] sdc5[1] 35105225472 blocks super 1.2 level 5, 64k chunk, algorithm 2 [13/7] [UUUUUU_U_____] md4 : active raid5 sdl6[0] 11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/1] [U____] md1 : active raid1 sdl2[6] sdk2[5] sdf2[4] sde2[3] sdd2[2] sdc2[1] sdb2[0] 2097088 blocks [12/7] [UUUUUUU_____] md0 : active raid1 sdb1[1] sdc1[2] sdd1[3] sdf1[5] sdk1[6] sdl1[7] sdm1[8] sdn1[0] sdo1[10] sdp1[4] 2490176 blocks [12/10] [UUUUUUUUU_U_] unused devices: <none> root@homelab:~# mdadm --detail /dev/md2 /dev/md2: Version : 1.2 Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Used Dev Size : 2925435456 (2789.91 GiB 2995.65 GB) Raid Devices : 13 Total Devices : 7 Persistence : Superblock is persistent Update Time : Fri Jan 17 02:11:28 2020 State : clean, FAILED Active Devices : 7 Working Devices : 7 Failed Devices : 0 Spare Devices : 0 Layout : left-symmetric Chunk Size : 64K Name : homelab:2 (local to host homelab) UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Events : 370911 Number Major Minor RaidDevice State 0 8 21 0 active sync /dev/sdb5 1 8 37 1 active sync /dev/sdc5 2 8 53 2 active sync /dev/sdd5 3 8 69 3 active sync /dev/sde5 4 8 85 4 active sync /dev/sdf5 5 8 165 5 active sync /dev/sdk5 - 0 0 6 removed 7 8 181 7 active sync /dev/sdl5 - 0 0 8 removed - 0 0 9 removed - 0 0 10 removed - 0 0 11 removed - 0 0 12 removed root@homelab:~# mdadm --detail /dev/md0 /dev/md0: Version : 0.90 Creation Time : Sun Sep 22 21:01:46 2019 Raid Level : raid1 Array Size : 2490176 (2.37 GiB 2.55 GB) Used Dev Size : 2490176 (2.37 GiB 2.55 GB) 
Raid Devices : 12 Total Devices : 10 Preferred Minor : 0 Persistence : Superblock is persistent Update Time : Fri Jan 17 02:18:37 2020 State : clean, degraded Active Devices : 10 Working Devices : 10 Failed Devices : 0 Spare Devices : 0 UUID : f36dde6e:8c6ec8e5:3017a5a8:c86610be Events : 0.536737 Number Major Minor RaidDevice State 0 8 209 0 active sync /dev/sdn1 1 8 17 1 active sync /dev/sdb1 2 8 33 2 active sync /dev/sdc1 3 8 49 3 active sync /dev/sdd1 4 8 241 4 active sync /dev/sdp1 5 8 81 5 active sync /dev/sdf1 6 8 161 6 active sync /dev/sdk1 7 8 177 7 active sync /dev/sdl1 8 8 193 8 active sync /dev/sdm1 - 0 0 9 removed 10 8 225 10 active sync /dev/sdo1 - 0 0 11 removed

OK, let me rephrase. In post #4 I said I used the Ubuntu USB. In post #5 you asked me to use the xpe USB and run those commands. In post #6 I said that when I put the xpe USB back, it asked me to migrate. I migrated, and hence lost my edited synoinfo.conf, so it defaults to 6 used disks, etc. I can't make any changes since everything is in read-only mode. The xpe USB is the same one that I've been using since the very beginning.

-

It means that when this whole thing started (with the 2 crashed drives), everything went into read-only mode. I have no idea why. My synoinfo.conf was still as it was; I hadn't changed anything since the first installation. Now when I pop the xpe USB back in, it asks me to migrate, which wipes out my synoinfo.conf and reverts it to the default ds3617xs settings.

-

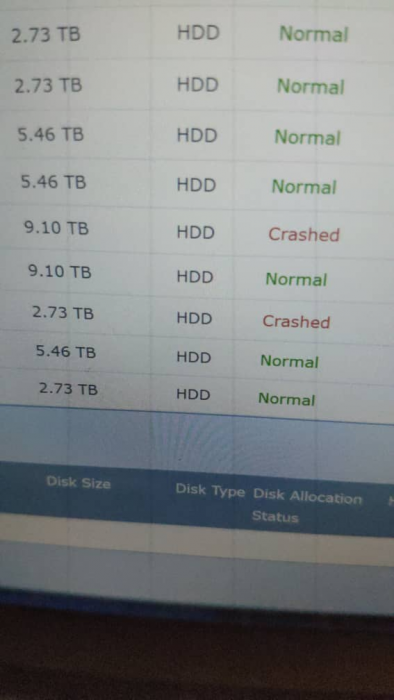

Thing is, as I said earlier: first, nothing out of the ordinary happened. Then two drives started crashing out of nowhere. (Some?) data was still accessible. My mistake was that I deactivated the 10TB. So I backed up whatever I could via rclone from a different machine, accessing it via SMB and NFS. Then I rebooted. It presented the option to repair. I clicked repair, and when everything was done, one drive was labelled clean and a different one was labelled as crashed. I freaked out and continued the backup. Soon after, all shares were gone. I did mdadm -Asf && vgchange -ay in DSM. I booted the Ubuntu live USB because I read multiple times that you can just pop your drives into Ubuntu and (easily?) mount them to access whatever data is there. That's all. So if I can mount any/all of the drives in Ubuntu and proceed to back up what's left, at this stage I'd be more than happy 😁 I didn't physically alter anything on the server other than swapping the USB for the Ubuntu one. I will post the output of whatever commands you ask for, and unkind or not, at this stage I appreciate any reply 😁

/volume1$ cat /proc/mdstat Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1] md2 : active raid5 sdb5[0] sdl5[7] sdk5[5] sdf5[4] sdd5[2] sdc5[1] 35105225472 blocks super 1.2 level 5, 64k chunk, algorithm 2 [13/6] [UUU_UU_U_____] md4 : active raid5 sdl6[0] 11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/1] [U____] md1 : active raid1 sdl2[6] sdk2[5] sdf2[4] sdd2[2] sdc2[1] sdb2[0] 2097088 blocks [12/6] [UUU_UUU_____] md0 : active raid1 sdb1[1] sdc1[2] sdd1[3] sdf1[5] sdk1[6] sdl1[7] sdm1[8] sdn1[10] sdo1[0] sdp1[4] 2490176 blocks [12/10] [UUUUUUUUU_U_] unused devices: <none> root@homelab:~# mdadm --detail /dev/md2 /dev/md2: Version : 1.2 Creation Time : Sun Sep 22 21:55:03 2019 Raid Level : raid5 Array Size : 35105225472 (33478.95 GiB 35947.75 GB) Used Dev Size : 2925435456 (2789.91 GiB 2995.65 GB) Raid Devices : 13 Total Devices : 6 Persistence :
Superblock is persistent Update Time : Thu Jan 16 15:36:17 2020 State : clean, FAILED Active Devices : 6 Working Devices : 6 Failed Devices : 0 Spare Devices : 0 Layout : left-symmetric Chunk Size : 64K Name : homelab:2 (local to host homelab) UUID : 43699871:217306be:dc16f5e8:dcbe1b0d Events : 370905 Number Major Minor RaidDevice State 0 8 21 0 active sync /dev/sdb5 1 8 37 1 active sync /dev/sdc5 2 8 53 2 active sync /dev/sdd5 - 0 0 3 removed 4 8 85 4 active sync /dev/sdf5 5 8 165 5 active sync /dev/sdk5 - 0 0 6 removed 7 8 181 7 active sync /dev/sdl5 - 0 0 8 removed - 0 0 9 removed - 0 0 10 removed - 0 0 11 removed - 0 0 12 removed root@homelab:~# mdadm --detail /dev/md4 /dev/md4: Version : 1.2 Creation Time : Sun Sep 22 21:55:04 2019 Raid Level : raid5 Array Size : 11720987648 (11178.00 GiB 12002.29 GB) Used Dev Size : 2930246912 (2794.50 GiB 3000.57 GB) Raid Devices : 5 Total Devices : 1 Persistence : Superblock is persistent Update Time : Thu Jan 16 15:36:17 2020 State : clean, FAILED Active Devices : 1 Working Devices : 1 Failed Devices : 0 Spare Devices : 0 Layout : left-symmetric Chunk Size : 64K Name : homelab:4 (local to host homelab) UUID : 648fc239:67ee3f00:fa9d25fe:ef2f8cb0 Events : 7040 Number Major Minor RaidDevice State 0 8 182 0 active sync /dev/sdl6 - 0 0 1 removed - 0 0 2 removed - 0 0 3 removed - 0 0 4 removed root@homelab:~# mdadm --detail /dev/md1 /dev/md1: Version : 0.90 Creation Time : Thu Jan 16 15:35:53 2020 Raid Level : raid1 Array Size : 2097088 (2047.94 MiB 2147.42 MB) Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB) Raid Devices : 12 Total Devices : 6 Preferred Minor : 1 Persistence : Superblock is persistent Update Time : Thu Jan 16 15:36:44 2020 State : active, degraded Active Devices : 6 Working Devices : 6 Failed Devices : 0 Spare Devices : 0 UUID : 000ab602:c505dcb2:cc8c244d:4f76664d (local to host homelab) Events : 0.25 Number Major Minor RaidDevice State 0 8 18 0 active sync /dev/sdb2 1 8 34 1 active sync /dev/sdc2 2 8 50 2 
active sync /dev/sdd2 - 0 0 3 removed 4 8 82 4 active sync /dev/sdf2 5 8 162 5 active sync /dev/sdk2 6 8 178 6 active sync /dev/sdl2 - 0 0 7 removed - 0 0 8 removed - 0 0 9 removed - 0 0 10 removed - 0 0 11 removed root@homelab:~# mdadm --detail /dev/md0 /dev/md0: Version : 0.90 Creation Time : Sun Sep 22 21:01:46 2019 Raid Level : raid1 Array Size : 2490176 (2.37 GiB 2.55 GB) Used Dev Size : 2490176 (2.37 GiB 2.55 GB) Raid Devices : 12 Total Devices : 10 Preferred Minor : 0 Persistence : Superblock is persistent Update Time : Thu Jan 16 15:48:30 2020 State : clean, degraded Active Devices : 10 Working Devices : 10 Failed Devices : 0 Spare Devices : 0 UUID : f36dde6e:8c6ec8e5:3017a5a8:c86610be Events : 0.522691 Number Major Minor RaidDevice State 0 8 225 0 active sync /dev/sdo1 1 8 17 1 active sync /dev/sdb1 2 8 33 2 active sync /dev/sdc1 3 8 49 3 active sync /dev/sdd1 4 8 241 4 active sync /dev/sdp1 5 8 81 5 active sync /dev/sdf1 6 8 161 6 active sync /dev/sdk1 7 8 177 7 active sync /dev/sdl1 8 8 193 8 active sync /dev/sdm1 - 0 0 9 removed 10 8 209 10 active sync /dev/sdn1 - 0 0 11 removed root@homelab:~# ls /dev/sd* /dev/sda /dev/sdb2 /dev/sdc2 /dev/sdd2 /dev/sdf2 /dev/sdk2 /dev/sdl2 /dev/sdm1 /dev/sdn /dev/sdn6 /dev/sdo5 /dev/sdp1 /dev/sdq1 /dev/sdr1 /dev/sdr7 /dev/sda1 /dev/sdb5 /dev/sdc5 /dev/sdd5 /dev/sdf5 /dev/sdk5 /dev/sdl5 /dev/sdm2 /dev/sdn1 /dev/sdo /dev/sdo6 /dev/sdp2 /dev/sdq2 /dev/sdr2 /dev/sdb /dev/sdc /dev/sdd /dev/sdf /dev/sdk /dev/sdl /dev/sdl6 /dev/sdm5 /dev/sdn2 /dev/sdo1 /dev/sdo7 /dev/sdp5 /dev/sdq5 /dev/sdr5 /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sdf1 /dev/sdk1 /dev/sdl1 /dev/sdm /dev/sdm6 /dev/sdn5 /dev/sdo2 /dev/sdp /dev/sdq /dev/sdr /dev/sdr6 root@homelab:~# ls /dev/md* /dev/md0 /dev/md1 /dev/md2 /dev/md4 root@homelab:~# ls /dev/vg* /dev/vga_arbiter Note that when I pop the synoboot usb back, it asks me to migrate, and since all files are read-only, I can't restore my synoinfo.conf back to the original - 20 drives, 4 usb, 2 esata. 
Again I appreciate any reply, thank you!
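The `clean, degraded` versus `clean, FAILED` states in the `mdadm --detail` output above follow from how RAID 5 works: it tolerates exactly one missing member, so an array with all members is healthy, one short is degraded, and anything less cannot serve data. A simplified sketch of that rule follows. `raid5_state` is an invented name, and the labels are shortened versions of what mdadm prints, not its exact output.

```shell
#!/bin/sh
# raid5_state (hypothetical helper): classify a RAID 5 array from its
# configured member count ($1) and the number of active members ($2).
raid5_state() {
    total=$1
    active=$2
    if [ "$active" -ge "$total" ]; then
        echo clean
    elif [ "$active" -eq $((total - 1)) ]; then
        echo degraded      # one member missing: still readable, no redundancy
    else
        echo FAILED        # two or more missing: RAID 5 cannot rebuild the data
    fi
}

raid5_state 13 12   # md2 after a successful assemble: prints degraded
raid5_state 13 6    # md2 as shown above: prints FAILED
raid5_state 5 1     # md4 as shown above: prints FAILED
```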

-

Nobody? 😪 I have no idea what this means, but I think it's important. HELP!

    sdn  WDC WD100EMAZ-00              10TB  homelab:4 10.92TB  homelab:5 3.64TB
    sdk  ST6000VN0033-2EE                    homelab:4 10.92TB
    sdj  WDC WD100EMAZ-00              10TB  homelab:4 10.92TB  homelab:5 3.64TB
    sdi  ST6000VN0041-2EL                    homelab:4 10.92TB
    sdh  ST6000VN0041-2EL                    homelab:4 10.92TB
    sda  KINGSTON SV300S3 [SSD CACHE]        homelab:3 223.57GB

    # fdisk -l
    Disk /dev/sda: 223.6 GiB, 240057409536 bytes, 468862128 sectors
    Disk model: KINGSTON SV300S3
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0x696935dc

    Device     Boot Start       End   Sectors   Size Id Type
    /dev/sda1        2048 468857024 468854977 223.6G fd Linux raid autodetect

    Disk /dev/sdb: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Disk model: WDC WD30EFRX-68A
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 43C8C355-AE0A-42DC-97CC-508B0FB4EF37

    Device       Start        End    Sectors  Size Type
    /dev/sdb1     2048    4982527    4980480  2.4G Linux RAID
    /dev/sdb2  4982528    9176831    4194304    2G Linux RAID
    /dev/sdb5  9453280 5860326239 5850872960  2.7T Linux RAID

    Disk /dev/sde: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Disk model: WDC WD30EFRX-68A
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 48A13430-10A1-4050-BA78-723DB398CE87

    Device       Start        End    Sectors  Size Type
    /dev/sde1     2048    4982527    4980480  2.4G Linux RAID
    /dev/sde2  4982528    9176831    4194304    2G Linux RAID
    /dev/sde5  9453280 5860326239 5850872960  2.7T Linux RAID

    Disk /dev/sdc: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Disk model: WDC WD30EFRX-68A
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 0600DFFC-A576-4242-976A-3ACAE5284C4C

    Device       Start        End    Sectors  Size Type
    /dev/sdc1     2048    4982527    4980480  2.4G Linux RAID
    /dev/sdc2  4982528    9176831    4194304    2G Linux RAID
    /dev/sdc5  9453280 5860326239 5850872960  2.7T Linux RAID

    Disk /dev/sdd: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Disk model: WDC WD30EFRX-68A
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 58B43CB1-1F03-41D3-A734-014F59DE34E8

    Device       Start        End    Sectors  Size Type
    /dev/sdd1     2048    4982527    4980480  2.4G Linux RAID
    /dev/sdd2  4982528    9176831    4194304    2G Linux RAID
    /dev/sdd5  9453280 5860326239 5850872960  2.7T Linux RAID

    [-----------------------THE LIVE USB DEBIAN/UBUNTU------------]
    Disk /dev/sdf: 14.9 GiB, 16008609792 bytes, 31266816 sectors
    Disk model: Cruzer Fit
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0xf85f7a50

    Device     Boot    Start      End  Sectors  Size Id Type
    /dev/sdf1  *        2048 31162367 31160320 14.9G 83 Linux
    /dev/sdf2       31162368 31262719   100352   49M ef EFI (FAT-12/16/32)

    Disk /dev/loop0: 2.2 GiB, 2326040576 bytes, 4543048 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    Disk /dev/sdj: 9.1 TiB, 10000831348736 bytes, 19532873728 sectors
    Disk model: WDC WD100EMAZ-00
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 1713E819-3B9A-4CE3-94E8-5A3DBF1D5983

    Device           Start         End    Sectors  Size Type
    /dev/sdj1         2048     4982527    4980480  2.4G Linux RAID
    /dev/sdj2      4982528     9176831    4194304    2G Linux RAID
    /dev/sdj5      9453280  5860326239 5850872960  2.7T Linux RAID
    /dev/sdj6   5860342336 11720838239 5860495904  2.7T Linux RAID
    /dev/sdj7  11720854336 19532653311 7811798976  3.7T Linux RAID

    Disk /dev/sdg: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Disk model: WDC WD30PURX-64P
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 1D5B8B09-8D4A-4729-B089-442620D3D507

    Device       Start        End    Sectors  Size Type
    /dev/sdg1     2048    4982527    4980480  2.4G Linux RAID
    /dev/sdg2  4982528    9176831    4194304    2G Linux RAID
    /dev/sdg5  9453280 5860326239 5850872960  2.7T Linux RAID

    Disk /dev/sdn: 9.1 TiB, 10000831348736 bytes, 19532873728 sectors
    Disk model: WDC WD100EMAZ-00
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: EA537505-55B5-4C27-A7CA-C7BBB7E7B56F

    Device           Start         End    Sectors  Size Type
    /dev/sdn1         2048     4982527    4980480  2.4G Linux RAID
    /dev/sdn2      4982528     9176831    4194304    2G Linux RAID
    /dev/sdn5      9453280  5860326239 5850872960  2.7T Linux RAID
    /dev/sdn6   5860342336 11720838239 5860495904  2.7T Linux RAID
    /dev/sdn7  11720854336 19532653311 7811798976  3.7T Linux RAID

    Disk /dev/sdl: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Disk model: WDC WD30PURX-64P
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: A3E39D34-4297-4BE9-B4FD-3A21EFC38071

    Device       Start        End    Sectors  Size Type
    /dev/sdl1     2048    4982527    4980480  2.4G Linux RAID
    /dev/sdl2  4982528    9176831    4194304    2G Linux RAID
    /dev/sdl5  9453280 5860326239 5850872960  2.7T Linux RAID

    Disk /dev/sdm: 2.7 TiB, 3000592982016 bytes, 5860533168 sectors
    Disk model: WDC WD30PURX-64P
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 54D81C51-AB85-4DE2-AA16-263DF1C6BB8A

    Device       Start        End    Sectors  Size Type
    /dev/sdm1     2048    4982527    4980480  2.4G Linux RAID
    /dev/sdm2  4982528    9176831    4194304    2G Linux RAID
    /dev/sdm5  9453280 5860326239 5850872960  2.7T Linux RAID

    Disk /dev/sdh: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors
    Disk model: ST6000VN0041-2EL
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 849E02B2-2734-496B-AB52-A572DF8FE63F

    Device          Start         End    Sectors  Size Type
    /dev/sdh1        2048     4982527    4980480  2.4G Linux RAID
    /dev/sdh2     4982528     9176831    4194304    2G Linux RAID
    /dev/sdh5     9453280  5860326239 5850872960  2.7T Linux RAID
    /dev/sdh6  5860342336 11720838239 5860495904  2.7T Linux RAID

    Disk /dev/sdk: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors
    Disk model: ST6000VN0033-2EE
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 09CB7303-C2E7-46F8-ADA0-D4853F25CB00

    Device          Start         End    Sectors  Size Type
    /dev/sdk1        2048     4982527    4980480  2.4G Linux RAID
    /dev/sdk2     4982528     9176831    4194304    2G Linux RAID
    /dev/sdk5     9453280  5860326239 5850872960  2.7T Linux RAID
    /dev/sdk6  5860342336 11720838239 5860495904  2.7T Linux RAID

    Disk /dev/sdi: 5.5 TiB, 6001175126016 bytes, 11721045168 sectors
    Disk model: ST6000VN0041-2EL
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: 423D33B4-90CE-4E34-9C40-6E06D1F50C0C

    Device          Start         End    Sectors  Size Type
    /dev/sdi1        2048     4982527    4980480  2.4G Linux RAID
    /dev/sdi2     4982528     9176831    4194304    2G Linux RAID
    /dev/sdi5     9453280  5860326239 5850872960  2.7T Linux RAID
    /dev/sdi6  5860342336 11720838239 5860495904  2.7T Linux RAID

    Disk /dev/md126: 3.7 TiB, 3999639994368 bytes, 7811796864 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes

    Disk /dev/md125: 10.9 TiB, 12002291351552 bytes, 23441975296 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 65536 bytes / 262144 bytes

    Disk /dev/md124: 223.6 GiB, 240052666368 bytes, 468852864 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 65536 bytes / 65536 bytes

    # cat /proc/mdstat
    Personalities : [raid1] [raid0] [raid6] [raid5] [raid4]
    md124 : active raid0 sda1[0]
          234426432 blocks super 1.2 64k chunks

    md125 : active (auto-read-only) raid5 sdk6[2] sdj6[3] sdi6[1] sdh6[0]
          11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/4] [UUU_U]

    md126 : active (auto-read-only) raid1 sdj7[0]
          3905898432 blocks super 1.2 [2/1] [U_]

    unused devices: <none>

    # mdadm --detail /dev/md125
    /dev/md125:
                   Version : 1.2
             Creation Time : Sun Sep 22 21:55:04 2019
                Raid Level : raid5
                Array Size : 11720987648 (11178.00 GiB 12002.29 GB)
             Used Dev Size : 2930246912 (2794.50 GiB 3000.57 GB)
              Raid Devices : 5
             Total Devices : 4
               Persistence : Superblock is persistent
               Update Time : Sat Jan 11 20:50:35 2020
                     State : clean, degraded
            Active Devices : 4
           Working Devices : 4
            Failed Devices : 0
             Spare Devices : 0
                    Layout : left-symmetric
                Chunk Size : 64K
        Consistency Policy : resync
                      Name : homelab:4
                      UUID : 648fc239:67ee3f00:fa9d25fe:ef2f8cb0
                    Events : 7035

        Number   Major   Minor   RaidDevice State
           0       8      118        0      active sync   /dev/sdh6
           1       8      134        1      active sync   /dev/sdi6
           2       8      166        2      active sync   /dev/sdk6
           -       0        0        3      removed
           3       8      150        4      active sync   /dev/sdj6

    # lvm vgscan
      Reading all physical volumes. This may take a while...
      Couldn't find device with uuid xreQ41-E5FU-YC9V-cTHA-QBb0-Cr3U-tcvkZf.
      Found volume group "vg1" using metadata type lvm2
    lvm> vgs vg1
      Couldn't find device with uuid xreQ41-E5FU-YC9V-cTHA-QBb0-Cr3U-tcvkZf.
      VG  #PV #LV #SN Attr   VSize   VFree
      vg1   3   2   0 wz-pn- <47.25t 916.00m
    lvm> lvmdiskscan
      /dev/loop0 [ <2.17 GiB]
      /dev/sdf1  [ <14.86 GiB]
      /dev/sdf2  [ 49.00 MiB]
      /dev/md124 [ <223.57 GiB]
      /dev/md125 [ <10.92 TiB] LVM physical volume
      /dev/md126 [ <3.64 TiB] LVM physical volume
      0 disks
      4 partitions
      0 LVM physical volume whole disks
      2 LVM physical volumes
    lvm> pvscan
      Couldn't find device with uuid xreQ41-E5FU-YC9V-cTHA-QBb0-Cr3U-tcvkZf.
      PV [unknown]   VG vg1 lvm2 [32.69 TiB / 0 free]
      PV /dev/md125  VG vg1 lvm2 [<10.92 TiB / 0 free]
      PV /dev/md126  VG vg1 lvm2 [<3.64 TiB / 916.00 MiB free]
      Total: 3 [<47.25 TiB] / in use: 3 [<47.25 TiB] / in no VG: 0 [0 ]
    lvm> lvs
      Couldn't find device with uuid xreQ41-E5FU-YC9V-cTHA-QBb0-Cr3U-tcvkZf.
      LV                    VG  Attr       LSize   Pool Origin Data% Meta% Move Log Cpy%Sync Convert
      syno_vg_reserved_area vg1 -wi-----p- 12.00m
      volume_1              vg1 -wi-----p- <47.25t
    lvm> vgdisplay
      Couldn't find device with uuid xreQ41-E5FU-YC9V-cTHA-QBb0-Cr3U-tcvkZf.
      --- Volume group ---
      VG Name               vg1
      System ID
      Format                lvm2
      Metadata Areas        2
      Metadata Sequence No  12
      VG Access             read/write
      VG Status             resizable
      MAX LV                0
      Cur LV                2
      Open LV               0
      Max PV                0
      Cur PV                3
      Act PV                2
      VG Size               <47.25 TiB
      PE Size               4.00 MiB
      Total PE              12385768
      Alloc PE / Size       12385539 / <47.25 TiB
      Free PE / Size        229 / 916.00 MiB
      VG UUID               2n0Cav-enzK-3ouC-02ve-tYKn-jsP5-PxfYQp
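A quick sanity check on the sizes above, as a rough sketch only. It assumes that the `[unknown]` PV is the big 13-disk array (md2), which does not show up in this `/proc/mdstat` at all; that is a guess, not something the output confirms. The md2 block count is taken from the `md2 : inactive ... 35105225472 blocks` line in the related post, and `/proc/mdstat` reports blocks in units of 1 KiB. The function and variable names are made up for illustration.

```python
# Convert /proc/mdstat block counts (1 KiB units) to TiB and compare them
# with the PV sizes that pvscan reports for volume group vg1.
KIB_PER_TIB = 1024 ** 3


def blocks_to_tib(blocks_1k):
    """Return the size in TiB for a count of 1 KiB blocks."""
    return blocks_1k / KIB_PER_TIB


# Block counts copied from the mdstat output; md2 as the missing PV is an assumption.
md_blocks = {
    "md2 (assumed to be the missing PV)": 35105225472,
    "md125": 11720987648,
    "md126": 3905898432,
}

sizes_tib = {name: blocks_to_tib(blocks) for name, blocks in md_blocks.items()}
total_tib = sum(sizes_tib.values())

for name, size in sizes_tib.items():
    print(f"{name}: {size:.2f} TiB")
print(f"total: {total_tib:.2f} TiB")
```

The three figures come out at roughly 32.69, 10.92 and 3.64 TiB, which add up to about 47.25 TiB, the same as the VG size. So if the assumption holds, volume_1 cannot come back until the missing array is assembled again.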

-

I'm at a loss here, dunno what to do.

tl;dr version: I need to either recreate my SHR1 pool, or at least mount the drives so I can transfer my files.

Story begins with this: 13 drives in total, including one cache SSD. Two crashes at the same time?? That's like... a very rare possibility, right? Well, whatever. Everything's read-only now. Fine, I'll just back up whatever's important via rclone + gdrive. That's gonna take me a while.

Then something weird happened... I could repair, and the 2.73/3TB drive came up normal. But a different drive has crashed now. Weird. And stupid of me, I didn't think and clicked deactivate on the 9.10/10TB drive. And now I have no idea how to reactivate the drive again.

After a few restarts, this happened. The crashed 10TB is not there anymore, which is understandable, but everything's... normal...? But all shares are gone.

I took out the USB and plugged in an Ubuntu live USB.

    # cat /proc/mdstat
    Personalities : [raid0] [raid1] [raid6] [raid5] [raid4]
    md4 : active (auto-read-only) raid5 sdj6[0] sdl6[3] sdo6[2] sdk6[1]
          11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/4] [UUU_U]

    md2 : inactive sdm5[10](S) sdj5[7](S) sdd5[0](S) sde5[1](S) sdp5[14](S) sdo5[9](S) sdl5[11](S) sdn5[13](S) sdi5[5](S) sdf5[2](S) sdh5[4](S) sdk5[8](S)
          35105225472 blocks super 1.2

    md5 : active (auto-read-only) raid1 sdl7[0]
          3905898432 blocks super 1.2 [2/1] [U_]

    md3 : active raid0 sdc1[0]
          234426432 blocks super 1.2 64k chunks

    unused devices: <none>

    # mdadm -Asf && vgchange -ay
    mdadm: Found some drive for an array that is already active: /dev/md/5
    mdadm: giving up.
    mdadm: Found some drive for an array that is already active: /dev/md/4
    mdadm: giving up.

Drive list:

    250GB SSD  sdc  /dev/sdc1 223.57
    3TB        sdd  /dev/sdd1 2.37GB  /dev/sdd2 2.00GB  /dev/sdd5 2.72TB
    3TB        sde  /dev/sde1 2.37GB  /dev/sde2 2.00GB  /dev/sde5 2.72TB
    3TB        sdf  /dev/sdf1 2.37GB  /dev/sdf2 2.00GB  /dev/sdf5 2.72TB
    3TB        sdh  /dev/sdh1 2.37GB  /dev/sdh2 2.00GB  /dev/sdh5 2.72TB
    3TB        sdi  /dev/sdi1 2.37GB  /dev/sdi2 2.00GB  /dev/sdi5 2.72TB
    5TB        sdj  /dev/sdj1 2.37GB  /dev/sdj2 2.00GB  /dev/sdj5 2.72TB  /dev/sdj6 2.73TB
    5TB        sdk  /dev/sdk1 2.37GB  /dev/sdk2 2.00GB  /dev/sdk5 2.72TB  /dev/sdk6 2.73TB
    10TB       sdl  /dev/sdl1 2.37GB  /dev/sdl2 2.00GB  /dev/sdl5 2.72TB  /dev/sdl6 2.73TB  /dev/sdl7 3.64TB
    3TB        sdm  /dev/sdm1 2.37GB  /dev/sdm2 2.00GB  /dev/sdm5 2.72TB
    3TB        sdn  /dev/sdn1 2.37GB  /dev/sdn2 2.00GB  /dev/sdn5 2.72TB
    5TB        sdo  /dev/sdo1 2.37GB  /dev/sdo2 2.00GB  /dev/sdo5 2.72TB  /dev/sdo6 2.73TB
    10TB       sdp  /dev/sdp1 2.37GB  /dev/sdp2 2.00GB  /dev/sdp5 2.72TB  /dev/sdp6 2.73TB  /dev/sdp7 3.64TB

Can anybody help me? I just want to access my data. How can I do that?

Relevant thread of mine (tl;dr version: it worked, for a few months at least)
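To make the `/proc/mdstat` output above easier to read at a glance, here is a minimal sketch that classifies each array as ok, degraded, or inactive. It only reads text and touches no disks. The function name `summarize_mdstat` and the status labels are hypothetical, and the parsing assumes the usual layout where the `[expected/present] [UU_U]` marker sits on the line after the array name.

```python
import re

# "md4 : active (auto-read-only) raid5 ..." or "md2 : inactive ..."
ARRAY_RE = re.compile(r"^(md\d+) : (active|inactive)")
# "[5/4] [UUU_U]" means 5 devices expected, 4 present
STATUS_RE = re.compile(r"\[(\d+)/(\d+)\] \[([U_]+)\]")


def summarize_mdstat(text):
    """Map each md array name to 'ok', 'inactive' or 'degraded (present/expected)'."""
    summary = {}
    current = None
    for line in text.splitlines():
        header = ARRAY_RE.match(line.strip())
        if header:
            current = header.group(1)
            summary[current] = "inactive" if header.group(2) == "inactive" else "ok"
            continue
        status = STATUS_RE.search(line)
        if status and current and summary[current] != "inactive":
            expected, present = int(status.group(1)), int(status.group(2))
            if present < expected:
                summary[current] = f"degraded ({present}/{expected})"
    return summary


# Sample copied from the output in this post (member lists shortened).
SAMPLE = """\
Personalities : [raid0] [raid1] [raid6] [raid5] [raid4]
md4 : active (auto-read-only) raid5 sdj6[0] sdl6[3] sdo6[2] sdk6[1]
      11720987648 blocks super 1.2 level 5, 64k chunk, algorithm 2 [5/4] [UUU_U]
md2 : inactive sdm5[10](S) sdj5[7](S) sdd5[0](S)
      35105225472 blocks super 1.2
md5 : active (auto-read-only) raid1 sdl7[0]
      3905898432 blocks super 1.2 [2/1] [U_]
md3 : active raid0 sdc1[0]
      234426432 blocks super 1.2 64k chunks
unused devices: <none>
"""

for name, state in summarize_mdstat(SAMPLE).items():
    print(name, state)
```

On this sample it reports md4 and md5 as degraded, md3 as ok, and md2 as inactive. md2 is the array that matters here: it lists only 12 member partitions, all marked `(S)`.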

-

Physical drive limits of an emulated Synology machine?

C-Fu replied to SteinerKD's topic in Hardware Modding

Yeah, after I posted my question, I followed up with him personally and he confirmed that he had tons of issues with his setup. About quicknick, AFAIK he pulled back his loader, so I suppose only those who got his loader earlier would know more. Oh well. One can only dream.

-

How would you go about doing this? I just want to see the maximum write speed I can achieve, so I can upgrade my network infrastructure accordingly. Example: if my 13-disk SHR can achieve 400MB/s write speed, then I'll attach a quad gigabit or a 10G Mellanox PCIe card to it, with LACP-capable hardware and all. Preferably a tool that won't destroy existing data in the SHR.
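One non-destructive way to get a rough number is to write a scratch file onto the volume, time it, and delete the file afterwards. The sketch below does that; it is a ballpark measurement under assumptions, not a proper benchmark. The function names, the default sizes, and the example path are all hypothetical, and the 125 MB/s per gigabit link is the theoretical line rate, so real throughput will be lower.

```python
import math
import os
import tempfile
import time


def measure_write_speed(directory, total_mib=1024, chunk_mib=4):
    """Write a scratch file into `directory`, fsync it, delete it, return MB/s."""
    chunk = os.urandom(chunk_mib * 1024 * 1024)  # incompressible data
    chunks = max(1, total_mib // chunk_mib)
    fd, path = tempfile.mkstemp(dir=directory, prefix="writetest-")
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as scratch:
            for _ in range(chunks):
                scratch.write(chunk)
            scratch.flush()
            os.fsync(scratch.fileno())  # include the time to reach the disks
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)  # only the scratch file is ever touched
    return chunks * len(chunk) / elapsed / 1e6


def gigabit_links_needed(speed_mb_s, per_link_mb_s=125.0):
    """Number of 1 Gbit/s links whose theoretical rate covers speed_mb_s."""
    return math.ceil(speed_mb_s / per_link_mb_s)
```

For example, `measure_write_speed("/volume1/some_share", total_mib=8192)` would write 8 GiB into that share (the path is a placeholder), and `gigabit_links_needed(400)` gives 4, which matches the quad gigabit idea. Use a test size well above the machine's RAM-backed cache, and make sure the volume has that much free space.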

-

Weird: I clicked on add disk, and I can somehow add all of the ex-volume3 disks (1x3TB + 2x10TB). I thought I couldn't add the 3TB disk since the new /volume1 already has 3x6TB disks? With the exception of Note Station contents, everything seems to be working right up to the point where I left. 😁

- 6 replies

Tagged with: shr, storage manager (and 1 more)

-