Posts: 423

Days Won: 25

Everything posted by WiteWulf


RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

deleted

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

The Gen8 iLO doesn't come with a full license; you have to buy a license code to unlock all the features. But, like I say, I can't remember whether the virtual serial port is part of that. I know remote media is.

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

No, you only get textcons on the web interface. For serial output you need to ssh into the iLO and run 'vsp'. I can't remember whether you need a full iLO license for this or not, but it's worth having anyway and you can get one for ~£10 on eBay.

Am I Restricted In How Many NICs I Can Add To A System?

WiteWulf replied to WiteWulf's question in Answered Questions

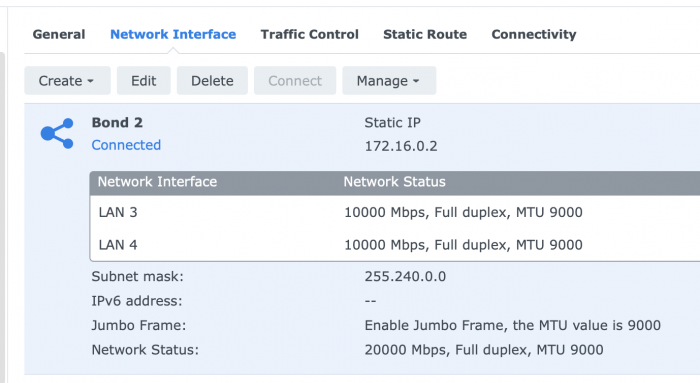

FYI, even with maxlanports=4 and all the interfaces declared in grub.cfg, NICs 3 and 4 (eth2 and eth3) didn't appear in Control Panel until I ssh'd in and ran, for example, 'ifconfig eth2 up'. Once I'd done that, creating the bond in the web UI always failed, so I used synonet instead and that worked fine. I then rebooted to check that it persisted across reboots, and it's all good.
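
For reference, the NIC declarations can be generated rather than typed by hand. This is a minimal Python sketch that assumes the Jun-style grub.cfg convention of a `netif_num` count plus `mac1`, `mac2` and so on, with each MAC written as 12 hex digits and no separators. `netif_num`, that MAC format and the `nic_cmdline` helper are my assumptions rather than anything confirmed above, so check them against your own grub.cfg. It also refuses to declare more NICs than `maxlanports` allows.

```python
import re

# ASSUMPTION: Jun-style grub.cfg writes MACs as 12 upper-case hex digits,
# no ':' or '-' separators, and counts NICs with netif_num.
MAC_RE = re.compile(r"^[0-9A-F]{12}$")


def normalize_mac(mac: str) -> str:
    """Strip ':' / '-' separators, upper-case, and validate the MAC."""
    cleaned = mac.replace(":", "").replace("-", "").upper()
    if not MAC_RE.match(cleaned):
        raise ValueError(f"not a valid MAC address: {mac!r}")
    return cleaned


def nic_cmdline(macs: list[str], maxlanports: int) -> str:
    """Hypothetical helper: build 'netif_num=N mac1=... macN=...'.

    Refuses to declare more NICs than maxlanports permits.
    """
    if len(macs) > maxlanports:
        raise ValueError(f"{len(macs)} NICs declared but maxlanports={maxlanports}")
    args = [f"netif_num={len(macs)}"]
    args += [f"mac{i}={normalize_mac(m)}" for i, m in enumerate(macs, start=1)]
    return " ".join(args)


if __name__ == "__main__":
    # Made-up MACs for a three-NIC VM like the one described in this thread
    print(nic_cmdline(["00:11:32:aa:bb:01", "00:11:32:aa:bb:02", "00:11:32:aa:bb:03"], maxlanports=4))
    # netif_num=3 mac1=001132AABB01 mac2=001132AABB02 mac3=001132AABB03
```

Paste the resulting values into the corresponding grub.cfg entries; the script only formats and sanity-checks them, it doesn't touch the loader.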

Am I Restricted In How Many NICs I Can Add To A System?

WiteWulf replied to WiteWulf's question in Answered Questions

Thanks for the helpful responses, folks; that makes sense. I moved the existing NIC to vmxnet3 and added a second one, also vmxnet3, and it's working properly now with two NICs.

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

Not helpful

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

Cool, cool, cool. I appreciate there's a language gap around these parts and everyone's trying their best (I am very happy that everyone uses English!).

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

Please don't share images. It's been covered many times in this thread why you shouldn't.

Am I Restricted In How Many NICs I Can Add To A System?

WiteWulf replied to WiteWulf's question in Answered Questions

Even after going through the steps above and making eth2 "visible" to DSM, adding it to a channel bond with eth1 fails (silently, with no error). It's the channel bond that I'm trying to do this for, fwiw. I note that the 918+ has two NICs and the 3615xs has four. I may give it another try with a 3615xs image.

More info: what I'm actually trying to do here is set up a dedicated direct connection between this VM and a physical QNAP box using two GigE ports at each end, channel bonded, with a private IP on each, to do very fast NFS transfers over a dedicated link with no other traffic. I figure I could either:

- pass through the PCI NICs on the ESXi host to the guest (alongside the existing vNIC that the system's services are bound to) and configure them as a bond
- create a vSwitch on ESXi with these two NICs configured as uplinks and attach a vmxnet3 interface to the guest on that switch

I thought the first option would be simpler, but it's not looking that way now.

Am I Restricted In How Many NICs I Can Add To A System?

WiteWulf posted a question in Answered Questions

Is there a restriction on how many NICs I can add to a system based on how many the hardware I'm emulating (i.e. DS918+ or DS3615xs) has? I've tried adding two additional NICs to a 918+ VM on ESXi, using PCI passthrough. This is in addition to an existing vNIC using the e1000e driver. The existing vNIC is still there and the first PCI NIC shows up, but not the second. If I ssh in, though, I can see all three NICs using "ip a", although eth2 is "down". Issuing "ifconfig eth2 up" then brings the NIC up *and* it's now visible in the DSM Control Panel 🤔

NB: all three NICs are declared in grub.cfg as mac1, mac2 and mac3.
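
The "eth2 is down" symptom can also be spotted programmatically. A minimal Python sketch, assuming the one-record-per-line output of `ip -o link` (the sample text below is made up for illustration): it lists interfaces whose flag list lacks `UP`, i.e. administratively down, and prints the same `ifconfig ... up` command used above. The helper name `admin_down_interfaces` is hypothetical.

```python
import re

# Matches the start of a one-line `ip -o link` record, e.g.
# "4: eth2: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN ..."
LINK_RE = re.compile(r"^\d+:\s+([^:@\s]+)[^:]*:\s+<([^>]*)>")


def admin_down_interfaces(ip_link_output: str) -> list[str]:
    """Return non-loopback interfaces whose flags lack UP."""
    down = []
    for line in ip_link_output.splitlines():
        m = LINK_RE.match(line)
        if not m:
            continue  # skip lines that aren't interface records
        name, flags = m.group(1), m.group(2).split(",")
        # Exact match on "UP", so "LOWER_UP" alone doesn't count
        if name != "lo" and "UP" not in flags:
            down.append(name)
    return down


if __name__ == "__main__":
    # Illustrative sample only, trimmed after the state field
    sample = (
        "1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN\n"
        "2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP\n"
        "3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP\n"
        "4: eth2: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN\n"
    )
    for name in admin_down_interfaces(sample):
        print(f"ifconfig {name} up")  # prints: ifconfig eth2 up
```

Checking the `UP` flag rather than the `state` field separates "administratively down" (what `ifconfig eth2 up` fixes) from "up but no carrier".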

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

Good effort, but be aware that TTG are already planning this functionality for the final release (and maybe the beta, too). If you read nothing else from earlier in this thread, at least read TTG's posts. They give a *lot* of detail about where the project is heading and should help reduce duplication of effort 👍

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

As we seem to have come around to the topic of ESXi again, does anyone know if there's an updated version of VMware Tools for DSM 7 yet?

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

Is it assuming the first disk is synoboot, and so not showing it?

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

No, not sure, but it's a common cause of the installation failing at 55% with a corrupt file error.

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

Check your USB VID/PID; you may have mixed them up, or forgotten to set them when you built a new image.
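
A quick way to catch swapped or missing values is to compare the config against what `lsusb` reports for the stick. This Python sketch assumes a redpill `user_config.json` that keeps `vid` and `pid` under `extra_cmdline` as `0x`-prefixed hex strings, and an `lsusb` line of the usual `ID vvvv:pppp` form; the function name and the example IDs are illustrative only.

```python
import json
import re

# lsusb prints e.g. "Bus 001 Device 004: ID 0781:5567 SanDisk Corp. Cruzer Blade"
LSUSB_ID_RE = re.compile(r"\bID ([0-9a-fA-F]{4}):([0-9a-fA-F]{4})\b")


def check_vid_pid(user_config_text: str, lsusb_line: str) -> list[str]:
    """Compare vid/pid in a user_config.json against one lsusb line.

    ASSUMPTION: values live under "extra_cmdline" as "0x"-prefixed hex
    strings. Returns a list of problems; an empty list means they match.
    """
    cmdline = json.loads(user_config_text).get("extra_cmdline", {})
    m = LSUSB_ID_RE.search(lsusb_line)
    if not m:
        return ["could not find 'ID vvvv:pppp' in lsusb line"]
    expected = {"vid": m.group(1).lower(), "pid": m.group(2).lower()}
    problems = []
    for key, want in expected.items():
        got = cmdline.get(key)
        if got is None:
            problems.append(f"{key} missing from extra_cmdline")
        # Normalise "0x0781" / "0X781" style values to 4 lower-case hex digits
        elif str(got).lower().removeprefix("0x").zfill(4) != want:
            problems.append(f"{key} is {got}, but the stick reports 0x{want}")
    return problems


if __name__ == "__main__":
    cfg = '{"extra_cmdline": {"vid": "0x5567", "pid": "0x0781"}}'  # swapped on purpose
    for problem in check_vid_pid(cfg, "Bus 001 Device 004: ID 0781:5567 SanDisk Corp. Cruzer Blade"):
        print(problem)
```

Run it against the exact config you built the image from, since a rebuilt image picks up whatever is in that file at build time.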

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

7.0U2. NB: that's not on the HP Gen8 hardware in my sig; it's one of these: https://www.cisco.com/c/en/us/products/servers-unified-computing/ucs-c240-m4-rack-server/index.html

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

Yeah, this was mostly an experiment, just to see if it would work. I share Plex libraries with a few work colleagues. My TVs are both 4K HDR capable, so I never do any transcoding (and I keep the 4K content in a separate library so people without capable equipment don't play it by accident), but some folk watch on laptops and 720p TVs, so transcoding is often used for 1080p content. It's currently running on a QNAP TS-853A, which does hardware transcoding with a GPU (it needs to, as its Celeron CPU is rubbish!), but I figure moving it onto this new system will be faster, and I'll just use the QNAP for storage (as it has a 12TB SATA array in it). I'm hoping to do something similar with Hyper Backup. I had a quick play last night backing up my home server to the new ESXi host at work and it was very fast and easy.

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

Ah, my mistake. I'd assumed the 2.5GHz E5-2680 v3 beasties in this box supported QuickSync, but apparently not.

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

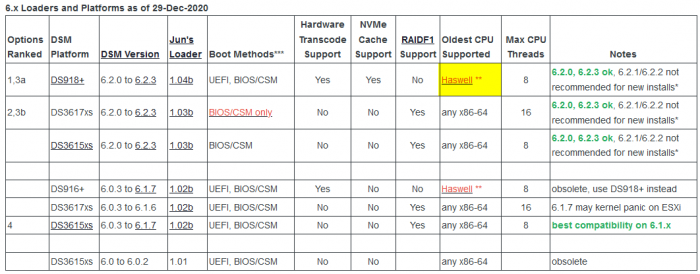

I assume they used threads rather than cores in the table to cover hyperthreading CPUs. As I'm provisioning vCPUs in ESXi, it makes no difference. I've assigned 8 cores/1 socket and it's making use of all 8 "CPUs" for Plex transcoding. Now I wonder: where are we at with hardware transcoding for Plex? I've never bothered looking into it before, as my main hardware (HP Gen8) isn't capable...

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

Am I right in thinking that the above table means both the 918+ and 3615xs images will only make use of up to 8 cores, and that only the 3617xs image (which isn't available for redpill) will support 16?

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

Yeah, ideally we need to wait for TTG to support 7.0.1 or, as a bodge, for Jumkey to update their repo to support extensions.

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

You can build it with haydibe's Docker scripts by adding the relevant details to the config JSON file. NB: TTG don't support 42218, so this pulls from jumkey's repo and you won't be able to use extensions with it. This is what I'm using on my system:

```json
{
  "id": "bromolow-7.0.1-42218",
  "platform_version": "bromolow-7.0.1-42218",
  "user_config_json": "bromolow_user_config.json",
  "docker_base_image": "debian:8-slim",
  "compile_with": "toolkit_dev",
  "redpill_lkm_make_target": "dev-v7",
  "downloads": {
    "kernel": {
      "url": "https://sourceforge.net/projects/dsgpl/files/Synology%20NAS%20GPL%20Source/25426branch/bromolow-source/linux-3.10.x.txz/download",
      "sha256": "18aecead760526d652a731121d5b8eae5d6e45087efede0da057413af0b489ed"
    },
    "toolkit_dev": {
      "url": "https://sourceforge.net/projects/dsgpl/files/toolkit/DSM7.0/ds.bromolow-7.0.dev.txz/download",
      "sha256": "a5fbc3019ae8787988c2e64191549bfc665a5a9a4cdddb5ee44c10a48ff96cdd"
    }
  },
  "redpill_lkm": {
    "source_url": "https://github.com/RedPill-TTG/redpill-lkm.git",
    "branch": "master"
  },
  "redpill_load": {
    "source_url": "https://github.com/jumkey/redpill-load.git",
    "branch": "develop"
  }
}
```

Add it alongside the existing build entries in the config file, with a comma separating it from its neighbours.
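
The `sha256` fields in that entry pin the exact kernel source and toolkit tarballs. I don't know how the build scripts perform the check internally, so this is only a standalone Python sketch of verifying a manually downloaded file against those values; the `files` mapping and both function names are hypothetical.

```python
import hashlib


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large tarballs needn't fit in RAM."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_downloads(build_config: dict, files: dict[str, str]) -> dict[str, bool]:
    """Check local files against build_config["downloads"][key]["sha256"].

    `files` maps a download key such as "kernel" or "toolkit_dev" to a
    local path (a hypothetical input, not part of the config format).
    """
    results = {}
    for key, path in files.items():
        expected = build_config["downloads"][key]["sha256"].lower()
        results[key] = sha256_of(path) == expected
    return results
```

Usage would be along the lines of loading the entry with `json.load` and calling `verify_downloads(entry, {"kernel": "linux-3.10.x.txz"})`; a `False` means the download doesn't match what the entry expects, so re-fetch before building.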

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

Thanks for helping me get up and running with ESXi, folks. I spent most of the evening upgrading from 6.5 to 7.0 (damn VIB dependencies!), but once that was out of the way the DSM installation was pretty smooth. The only problem I had was that the network it's on has no DHCP (for reasons), so I had to get the virtual terminal working so I could go in and manually give it an IP address, netmask and gateway before I could even reach the web interface. Now it's up and running! 👍 It's got a 918+ image on it at the moment. Given that I've got loads of CPUs and RAM in this host, is 918+ the best image for it? I'm hoping to eventually use this as a Plex host, so CPU, disk and network throughput will be important.
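
When there's no DHCP and the address has to be typed in by hand at the console, it's easy to fat-finger the netmask or gateway. A minimal Python sketch using the standard `ipaddress` module to sanity-check a manual address/netmask/gateway combination before entering it; the function name and the addresses in the example are made up.

```python
import ipaddress


def check_static_ip(address: str, netmask: str, gateway: str) -> ipaddress.IPv4Network:
    """Validate a manual IPv4 config; return the subnet it implies.

    Raises ValueError if the gateway is outside that subnet, or if the
    address is the subnet's network or broadcast address.
    """
    # IPv4Interface accepts dotted netmasks, e.g. "192.168.10.50/255.255.255.0"
    iface = ipaddress.IPv4Interface(f"{address}/{netmask}")
    net = iface.network
    gw = ipaddress.IPv4Address(gateway)
    if gw not in net:
        raise ValueError(f"gateway {gw} is not in {net}")
    if iface.ip in (net.network_address, net.broadcast_address):
        raise ValueError(f"{iface.ip} is the network or broadcast address of {net}")
    return net


if __name__ == "__main__":
    print(check_static_ip("192.168.10.50", "255.255.255.0", "192.168.10.1"))  # 192.168.10.0/24
```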

RedPill - the new loader for 6.2.4 - Discussion

WiteWulf replied to ThorGroup's topic in Developer Discussion Room

I have the exact same hardware configuration, and Plex would frequently kernel panic on the redpill loader. It's being worked on, but I'd stay on 6.2.3 for now unless you like living dangerously.