mervincm

Member. Posts: 193. Days won: 4. Reputation: 15.

mervincm last won the day on September 2, 2020, and had the most liked content!

Profile views: 2,196.

Achievements: Advanced Member (4/7).

-

I am trying to get my 1815+ > 1817+ going. I grabbed sae.py with `sudo wget https://github.com/K4L0dev/Synology_Archive_Extractor/blob/main/sae.py`, grabbed the 1815+ .pat with `sudo wget https://global.synologydownload.com/download/DSM/release/7.1.1/42962-1/DSM_DS1815%2B_42962.pat`, and tried to extract it with `sudo python3 sae.py -k SYSTEM -a DSM_DS1815+_42962.pat -d .`, but I just get a pile of errors, starting with:

`Traceback (most recent call last): File "sae.py", line 1, in <module> {"payload":{"allShortcutsEnabled":false,"fileTree":{"":{"items":[{"name":"images","path": ...`

and ending with:

`"}}},"title":"Synology_Archive_Extractor/sae.py at main · K4L0dev/Synology_Archive_Extractor"} NameError: name 'false' is not defined`
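The traceback shows GitHub page data (`"payload"`, `"fileTree"`, the page `"title"`) sitting at line 1 of sae.py. That suggests wget saved the GitHub web page for the `/blob/` URL rather than the script, and Python then trips over the JSON literal `false`. A minimal sketch, assuming the usual GitHub mapping from a `/blob/` page URL to its `raw.githubusercontent.com` file URL (the helper names are hypothetical), that converts the link and flags a file that is really a saved page:

```python
from urllib.parse import urlparse


def blob_to_raw(url: str) -> str:
    """Convert https://github.com/<owner>/<repo>/blob/<ref>/<path> to its raw file URL.

    Assumes the standard GitHub layout and a ref without slashes;
    any other URL is returned unchanged.
    """
    parsed = urlparse(url)
    parts = parsed.path.strip("/").split("/")
    # Expected parts: owner, repo, "blob", ref, then the file path segments.
    if parsed.netloc != "github.com" or len(parts) < 5 or parts[2] != "blob":
        return url
    owner, repo, _, ref, *path = parts
    return "https://raw.githubusercontent.com/" + "/".join([owner, repo, ref, *path])


def looks_like_github_page(first_bytes: bytes) -> bool:
    """Heuristic: a saved GitHub page starts with HTML or the page JSON, not Python source."""
    head = first_bytes.lstrip()[:20].lower()
    return head.startswith((b"<!doctype", b"<html", b'{"payload"'))


if __name__ == "__main__":
    print(blob_to_raw("https://github.com/K4L0dev/Synology_Archive_Extractor/blob/main/sae.py"))
    with open("sae.py", "rb") as f:  # the file wget saved
        if looks_like_github_page(f.read(64)):
            print("sae.py is a saved web page, not the script; re-download the raw URL")
```

Under that assumption, `sudo wget https://raw.githubusercontent.com/K4L0dev/Synology_Archive_Extractor/main/sae.py` should fetch the script itself.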

-

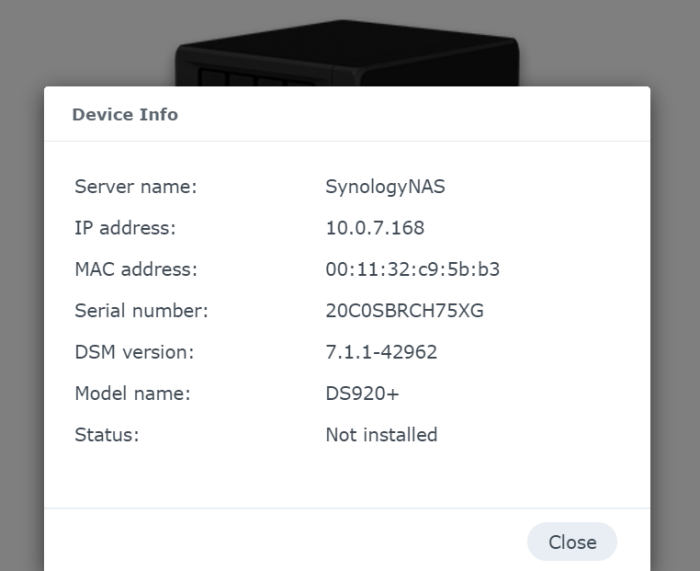

My hardware is a Lenovo D30 4229 (2x internal NIC, 2x E5-2690, 128 GB, 256 GB SSD on motherboard SATA for Proxmox boot/ISO/VM storage, JMS585 SATA add-in card, NetXtreme II 10 Gb add-in card):
https://pcsupport.lenovo.com/us/en/products/workstations/thinkstation-d-series-workstations/thinkstation-d30/4229/parts/pd023261-detailed-specifications-and-overview-thinkstation-d30
https://www.amazon.ca/JMT-Expansion-Converter-Backward-Compatible/dp/B07VPVV97Y
https://www.ebay.com/itm/IBM-BROADCOM-PCI-EX-10Gb-FIBRE-SERVER-ADAPTER-42C1792-BCM957710A1020G-/351569797201?_ul=IN
Both add-in cards will hopefully be passed through to DSM at some point, but they are unused for now.

I used the DS920+ because that is what the most recent guide I found used, but I am wondering whether my hardware is too old for the DS920+. Under Proxmox I didn't think so, but...

Lenovo firmware settings:
- Motherboard SATA ports all set to hot plug (not sure it matters under Proxmox).
- Audio and card reader disabled (not sure whether the card reader would be seen as a non-attached disk, nor whether it matters under Proxmox).
- Virtualization enabled.
- Legacy boot enabled (required for Proxmox to boot).

Proxmox is updated to v7.2.14.

DS920+ virtual hardware: 1 socket, 2 cores, 2 GB memory, SeaBIOS, default display, default machine, SCSI controller: VirtIO, SATA disk0: 1 GB TC .927 img, disk1: 100 GB, net0: VirtIO, serial0, boot order: sata0.

I followed the linked guide (Install DS920+ DSM 7.1.1 Proxmox) to install the DS920+ using .927, except for the update option, as that prevented me from proceeding:
1. `./rploader.sh update now` (didn't allow this)
2. `./rploader.sh serialgen DS920+`
3. `./rploader.sh ext geminilake-7.1.1-42962 add https://raw.githubusercontent.com/pocopico/redpill-load/master/redpill-virtio/rpext-index.json`
4. `./rploader.sh ext geminilake-7.1.1-42962 add https://raw.githubusercontent.com/pocopico/redpill-load/develop/redpill-acpid/rpext-index.json`
5. Edit `user_config.json`: `SataPortMap: 4`, `DiskIdxMap: 0`
6. `./rploader.sh backup now`
7. `./rploader.sh build geminilake-7.1.1-42962`

Serial console output:
[ 0.000000] Command line: BOOT_IMAGE=/zImage withefi earlyprintk syno_hw_version=DS920+ console=ttyS0,115200n8 netif_num=1 synoboot2 pid=0xa4a5 earlycon=uart8250,io,0x3f8,115200n8 mac1=001132C95BB3 sn=20C0SBRCH75XG HddEnableDynamicPower=1 vid=0x0525 elevator=elevator loglevel=15 intel_iommu=igfx_off DiskIdxMap=0 vender_format_version=2 log_buf_len=32M root=/dev/md0 SataPortMap=4 syno_ttyS1=serial,0x2f8 syno_ttyS0=serial,0x3f8
[ 1.788548] ahci 0000:00:07.0: AHCI 0001.0000 32 slots 6 ports 1.5 Gbps 0x3f impl SATA mode
[ 1.789899] ahci 0000:00:07.0: flags: 64bit ncq only
[ 1.790881] Cannot find slot node of this ata_port.
[ 1.791800] Cannot find slot node of this ata_port.
[ 1.792700] Cannot find slot node of this ata_port.
[ 1.793605] Cannot find slot node of this ata_port.
[ 1.794534] Cannot find slot node of this ata_port.
[ 1.795466] Cannot find slot node of this ata_port.
[ 1.868218] scsi host0: ahci
[ 1.870565] scsi host1: ahci
[ 1.873710] scsi host2: ahci
[ 1.876461] scsi host3: ahci
[ 1.878967] scsi host4: ahci
[ 1.880809] scsi host5: ahci
[ 1.881450] ata1: SATA max UDMA/133 abar m4096@0xfea52000 port 0xfea52100 irq 24
[ 1.882695] ata2: SATA max UDMA/133 abar m4096@0xfea52000 port 0xfea52180 irq 24
[ 1.883922] ata3: SATA max UDMA/133 abar m4096@0xfea52000 port 0xfea52200 irq 24
[ 1.885141] ata4: SATA max UDMA/133 abar m4096@0xfea52000 port 0xfea52280 irq 24
[ 1.886354] ata5: SATA max UDMA/133 abar m4096@0xfea52000 port 0xfea52300 irq 24
[ 1.887569] ata6: SATA max UDMA/133 abar m4096@0xfea52000 port 0xfea52380 irq 24
[ 1.888811] ahci: probe of 0001:01:00.0 failed with error -22
[ 2.193288] ata2: SATA link up 1.5 Gbps (SStatus 113 SControl 300)
[ 2.194585] ata2.00: ATA-7: QEMU HARDDISK, 2.5+, max UDMA/100
[ 2.195547] ata2.00: 209715200 sectors, multi 16: LBA48 NCQ (depth 31/32)
[ 2.196641] ata2.00: SN:QM00007
[ 2.197188] ata2.00: applying bridge limits
[ 2.198148] ata3: SATA link down (SStatus 0 SControl 300)
[ 2.199062] ata3: No present pin info for SATA link down event
[ 2.200056] Invalid parameter
[ 2.200580] ata6: SATA link down (SStatus 0 SControl 300)
[ 2.201494] ata6: No present pin info for SATA link down event
[ 2.202500] Invalid parameter
[ 2.203146] ata5: SATA link down (SStatus 0 SControl 300)
[ 2.203991] ata5: No present pin info for SATA link down event
[ 2.204981] Invalid parameter
[ 2.205512] ata1: SATA link up 1.5 Gbps (SStatus 113 SControl 300)
[ 2.206840] ata4: SATA link down (SStatus 0 SControl 300)
[ 2.207738] ata4: No present pin info for SATA link down event
[ 2.208715] Invalid parameter
[ 2.209230] ata1.00: ATA-7: QEMU HARDDISK, 2.5+, max UDMA/100
[ 2.210196] ata1.00: 2097152 sectors, multi 16: LBA48 NCQ (depth 31/32)
[ 2.211295] ata1.00: SN:QM00005
[ 2.211800] ata1.00: applying bridge limits
[ 2.212604] ata2.00: configured for UDMA/100
[ 2.213331] Invalid parameter
[ 2.213864] ata1.00: configured for UDMA/100
[ 2.214625] Invalid parameter
[ 2.215200] ata1.00: Find SSD disks. [QEMU HARDDISK]
[ 2.217159] scsi 0:0:0:0: Direct-Access QEMU HARDDISK 2.5+ PQ: 0 ANSI: 5
[ 2.219138] Cannot find slot node of this ata_port.
[ 2.219932] got SATA disk[0]
[ 2.220568] ata2.00: Find SSD disks. [QEMU HARDDISK]
[ 2.220688] sd 0:0:0:0: [sata1] 2097152 512-byte logical blocks: (1.07 GB/1.00 GiB)
[ 2.220907] sd 0:0:0:0: [sata1] Write Protect is off
[ 2.220908] sd 0:0:0:0: [sata1] Mode Sense: 00 3a 00 00
[ 2.220973] sd 0:0:0:0: [sata1] Write cache: enabled, read cache: enabled, doesn't support DPO or FUA
[ 2.226304] sata1: p1 p2 p3
[ 2.227403] sd 0:0:0:0: [sata1] Attached SCSI disk
[ 2.230750] scsi 1:0:0:0: Direct-Access QEMU HARDDISK 2.5+ PQ: 0 ANSI: 5
[ 2.232384] Cannot find slot node of this ata_port.
[ 2.233218] got SATA disk[1]
[ 2.233832] sd 1:0:0:0: [sata2] 209715200 512-byte logical blocks: (107 GB/100 GiB)
[ 2.235545] sd 1:0:0:0: [sata2] Write Protect is off
[ 2.236389] sd 1:0:0:0: [sata2] Mode Sense: 00 3a 00 00
[ 2.237486] sd 1:0:0:0: [sata2] Write cache: enabled, read cache: enabled, doesn't support DPO or FUA
[ 2.240424] sd 1:0:0:0: [sata2] Attached SCSI disk
System is booting
dtbpatch - early
/sys/block/sata1/device/syno_block_info 00:07.0 - 0
/sys/block/sata2/device/syno_block_info 00:07.0 - 1
Most likely your vid/pid configuration is not correct, or you don't have drivers needed for your USB/SATA controller
(Does this matter if I boot from SATA?)
[ 32.964417] Fail to get disk 1 led type
[ 32.965033] Fail to get disk 2 led type
[ 32.965632] Fail to get disk 3 led type
[ 32.966244] Fail to get disk 4 led type
:: Loading module syno_hddmon ... [FAILED]
Excution Error
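The `Command line:` entry at the top of the boot log is where the loader's settings actually reach the kernel, so it is a handy place to confirm that `vid`, `pid`, `SataPortMap` and `DiskIdxMap` are what `user_config.json` was meant to produce. A minimal sketch (the helper name `parse_cmdline` is hypothetical) that splits that line into key/value pairs:

```python
from typing import Dict, Union


def parse_cmdline(cmdline: str) -> Dict[str, Union[str, bool]]:
    """Split a kernel command line into {key: value}; bare flags map to True.

    Only the first '=' separates key from value, so values such as
    'uart8250,io,0x3f8,115200n8' or '/dev/md0' stay intact.
    Quoted values containing spaces are not handled in this sketch.
    """
    params: Dict[str, Union[str, bool]] = {}
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        params[key] = value if sep else True
    return params


if __name__ == "__main__":
    # Excerpt of the command line from the boot log above.
    line = ("BOOT_IMAGE=/zImage withefi syno_hw_version=DS920+ pid=0xa4a5 "
            "vid=0x0525 DiskIdxMap=0 SataPortMap=4 root=/dev/md0")
    params = parse_cmdline(line)
    for key in ("vid", "pid", "SataPortMap", "DiskIdxMap"):
        print(f"{key} = {params.get(key)}")
```

Splitting on the first `=` only is the design choice that matters here, since several values (`earlycon`, `console`) contain commas and further `=`-free punctuation that must not be broken up.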

-

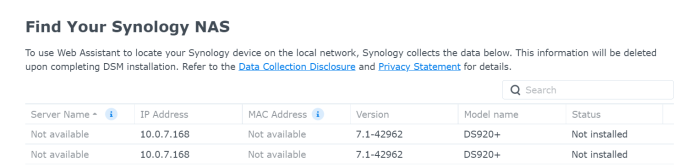

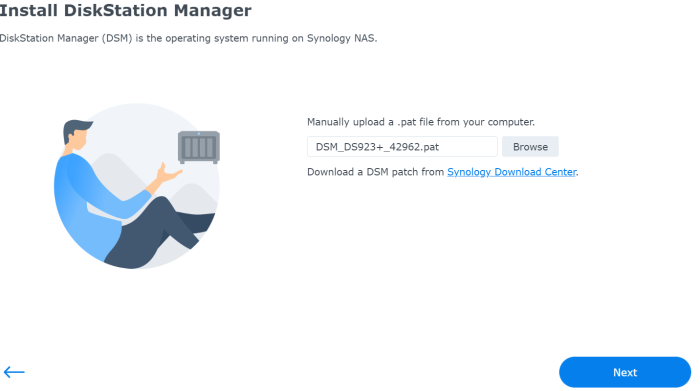

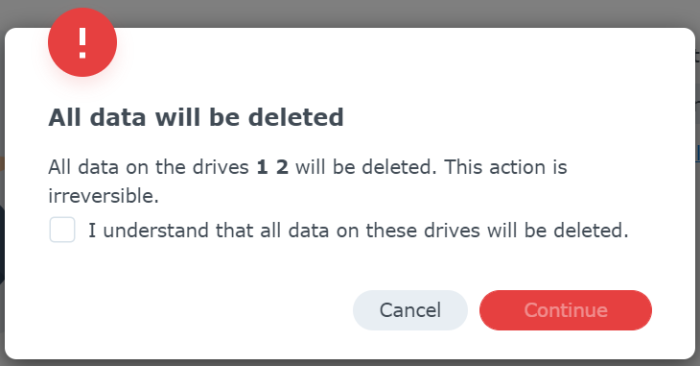

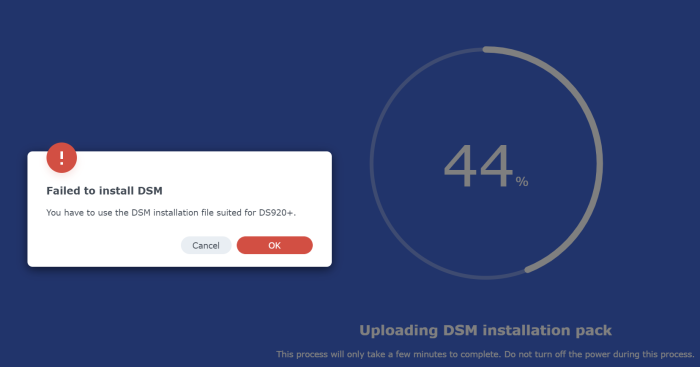

I am trying to install XPEnology virtualized via Proxmox. I have attempted to follow a few guides but have not found a solution. This one seems to be the most current: https://www.youtube.com/watch?v=td1XNHL_gAg&t=330s, but I land at the same issue. When it comes time to install DSM, it sees two disks and fails to complete. I only add one disk (other than the 1 GB boot volume), but it always sees two disks, and I assume it is trying to install DSM on my 1 GB boot volume and therefore failing. Note: I tried the current .93 version and that wouldn't proceed, so I used the .927 version used in the guide; that got me all the way to the DSM installation. This Proxmox host is (so far) dedicated to DSM, so I can change anything to accommodate it.
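One approach often described for these loaders, offered here as an assumption rather than a confirmed fix, is to keep the 1 GB loader disk out of the slots DSM installs to by tuning `SataPortMap` and `DiskIdxMap` in `user_config.json`. As commonly documented, each digit of `SataPortMap` is the number of ports given to each SATA controller in order, and each pair of hex digits in `DiskIdxMap` is the first disk index for that controller (index 0 is DSM's first slot). In the earlier boot log both QEMU disks sit on the same controller (00:07.0), so a per-controller offset would only separate them if the loader disk were moved to its own virtual controller. The hypothetical helper below just decodes the two strings so you can see where each controller lands:

```python
from typing import Dict, List


def decode_disk_maps(sata_port_map: str, disk_idx_map: str) -> Dict[int, List[int]]:
    """Map each SATA controller (by order) to the 0-based DSM disk indices it would occupy.

    Assumed semantics (as commonly documented for Jun/RedPill-style loaders):
      - SataPortMap: one decimal digit per controller = ports on that controller.
      - DiskIdxMap: two hex digits per controller = first disk index for it.
    Controllers without a DiskIdxMap entry are left out rather than guessed.
    """
    ports = [int(ch) for ch in sata_port_map]
    starts = [int(disk_idx_map[i:i + 2], 16) for i in range(0, len(disk_idx_map), 2)]
    layout: Dict[int, List[int]] = {}
    for ctrl, (count, first) in enumerate(zip(ports, starts)):
        layout[ctrl] = list(range(first, first + count))
    return layout


if __name__ == "__main__":
    # Values from the earlier post: one controller, 4 ports, starting at index 0.
    print(decode_disk_maps("4", "0"))      # {0: [0, 1, 2, 3]}
    # Hypothetical split: loader alone on controller 0 pushed to index 0x0C,
    # data disks on controller 1 starting at index 0.
    print(decode_disk_maps("14", "0C00"))  # {0: [12], 1: [0, 1, 2, 3]}
```

The idea behind the second example is that an index past the model's drive bays keeps the loader disk from being offered as an install target; whether that holds for a given loader build is something to verify, not assume.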

-

In the process of resetting my admin password, I somehow lost it. I'm not sure how, as I immediately put it into LastPass, but in any case, that's where I find myself. I tried to guess it but only managed to lock my IP out. When I built this NAS, I disabled the built-in admin account and made myself a replacement admin-level account; this replacement is the account whose password I don't know. I am using Jun's 918+ loader and have physical access (keyboard, display, etc.). With earlier bootloaders you could reset an account at boot; is that still possible? Edit: found it.

-

From what I was able to determine, it actually is boosting. You can tell by looking for it running at 3601 MHz: that extra 1 MHz indicates that turbo is active. Since that sounded like a load of BS to me, I decided to run a benchmark. The benchmark came back with a value I would only have expected with turbo active, so I am now convinced that turbo is actually kicking in.
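The 3601 MHz figure matches the usual ACPI P-state convention, in which the turbo range is advertised as one extra state at the highest non-turbo frequency plus 1 MHz; with the `acpi-cpufreq` driver, Linux lists it in `scaling_available_frequencies` (values in kHz). A minimal sketch, assuming you paste in that sysfs file and the value of `scaling_cur_freq` (helper names are hypothetical). Note the +1 MHz state only shows turbo was requested; the actual clock is set by the hardware, which is why the benchmark is the better confirmation:

```python
from typing import List, Optional


def parse_khz_list(text: str) -> List[int]:
    """Parse space-separated kHz values (e.g. scaling_available_frequencies), highest first."""
    return sorted((int(v) for v in text.split()), reverse=True)


def turbo_state_khz(available: str) -> Optional[int]:
    """Return the turbo P-state in kHz if the top entry is exactly 1 MHz above the next one."""
    freqs = parse_khz_list(available)
    if len(freqs) >= 2 and freqs[0] - freqs[1] == 1000:
        return freqs[0]
    return None


def turbo_requested(cur_khz: int, available: str) -> bool:
    """True when the current P-state is the turbo state (the real clock may be higher)."""
    turbo = turbo_state_khz(available)
    return turbo is not None and cur_khz == turbo


if __name__ == "__main__":
    # Hypothetical sysfs contents for a CPU with a 3.6 GHz base clock.
    avail = "3601000 3600000 3400000 3200000 2800000 1200000"
    print(turbo_state_khz(avail))           # 3601000
    print(turbo_requested(3601000, avail))  # True
    print(turbo_requested(3400000, avail))  # False
```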

-

I have a 9900 on a Z390 motherboard. It's been a while since I tried M.2 in XPEnology, but when I tested, both slots were working. Heck, I use an NVMe drive on a PCIe card now and it works fine.

-

xpenology supply NFS to esxi 7 datastore?

mervincm replied to mervincm's question in General Questions

This issue is bewildering to me. I built a test XPEnology system and it worked on the first try. I tried it on my main system once again, and it worked this time. I moved a couple of VMs over to NFS storage on my main system, and everything worked perfectly. A week later I went to use a VM, and it had read errors and could not be restarted. Sure enough, the NFS share was no longer available to vSphere and could not be re-established. I didn't change any network configuration or NFS share permissions, nothing at all on vSphere or Synology that I can recall. I can still connect to the same NFS share from my backup Synology, so the share is actually available; I just can't use it from vSphere...

xpenology supply NFS to esxi 7 datastore?

mervincm replied to mervincm's question in General Questions

I have not had any luck yet. I wonder if it is an issue with the 918+? I have not been able to scare up any spare hardware to build a test XPEnology system yet either.

It doesn't think the CPU is a J3455; the info screen you are looking at is not built from a query, it is just a text description of the CPU that Synology knows they installed in that model. It is cosmetic. I am surprised you have issues with your LSI SAS card; mine works without issue.
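To see what the kernel actually detected, rather than the model's fixed description, you can read `/proc/cpuinfo` over SSH. A small sketch (helper names and the fallback sample are hypothetical) that pulls the distinct `model name` values and the logical CPU count out of that text:

```python
from typing import List


def cpu_models(cpuinfo_text: str) -> List[str]:
    """Return the distinct 'model name' values from /proc/cpuinfo text, in first-seen order."""
    models: List[str] = []
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "model name":
            name = value.strip()
            if name not in models:
                models.append(name)
    return models


def count_logical_cpus(cpuinfo_text: str) -> int:
    """Count 'processor' entries, i.e. logical CPUs visible to the kernel."""
    return sum(1 for line in cpuinfo_text.splitlines()
               if line.partition(":")[0].strip() == "processor")


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            text = f.read()
    except OSError:
        # Hypothetical fallback sample for systems without /proc/cpuinfo.
        text = "processor\t: 0\nmodel name\t: Example CPU @ 3.00GHz\n"
    print(cpu_models(text), count_logical_cpus(text))
```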

-

I can confirm that on my 918+ XPEnology system with a 2-port 10 GbE Intel card, I see both NICs (my motherboard NIC is disabled).

-

new sata/ahci cards with more then 4 ports (and no sata multiplexer)

mervincm replied to IG-88's topic in Hardware Modding

The JMB585 worked without issue in ESXi 6.7u3 (?) and 7.0u1 in my limited testing.

xpenology supply NFS to esxi 7 datastore?

mervincm replied to mervincm's question in General Questions

Thank you for testing! I used a 918+ image; same for you? I will build a test NAS on the weekend.

xpenology supply NFS to esxi 7 datastore?

mervincm replied to mervincm's question in General Questions

I am trying to use remote/shared storage to facilitate vMotion between two ESXi hosts, so I am trying to mount an NFS share hosted on my XPE box as a datastore on both hosts. I believe I worded that correctly, but I admit I don't understand your comment, as I don't know how else ESXi could access an NFS share.

XPEnology side:
- Control Panel > File Services: NFS and NFSv4.1 checked to enable; NFS advanced settings at default.
- Control Panel > Shared Folder: created a share "NFS". NFS Permissions: added 2 entries, one for each host, by single IP, Read/Write, Squash: No mapping, Asynchronous: Yes, Non-privileged port: Denied, Cross-mount: Allowed.
- Control Panel > Info Center > Service: confirmed NFS is enabled and the firewall exception is in place.

VMware side:
- HTML5 client, logged in as root to the ESXi host.
- Storage > Datastores > New datastore > Mount NFS datastore.
- Name: DS2_NFS; NFS server: 10.0.0.11 (IP of my XPEnology system); NFS share: /volume2/NFS (case-sensitive path to my NFS share, as shown in DSM Control Panel > Shared Folder > NFS share, NFS Permissions at the bottom); NFS 3 or NFS 4 (tried both).
- The "create NFS datastore" task starts, runs, then fails: "An error occurred during host configuration."
- Error bar message: "failed to mount NFS datastore DS2_NFS - Operation failed, diagnostics report:Mount failed:unable to complete Sysinfo Operation. Please see the vmkernal log file for more details.:unable to connect to NFS server"

Host > Monitor > Logs:
2021-01-02T23:38:54.839Z cpu0:2100027 opID=9c4e526e)NFS: 162: Command: (mount) Server: (10.0.0.11) IP: (10.0.0.11) Path: (/volume2/NFS) Label: (DS2_NFS) Options: (None)
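Because the error is "unable to connect to NFS server" while the share still works from another client, one quick check, offered as a suggestion rather than a diagnosis, is whether the NFS ports on 10.0.0.11 accept TCP connections from the hosts' network. NFS v3 relies on the portmapper (111) plus the NFS service (2049), and also on mountd, whose port this sketch does not probe; NFS 4.1 needs only 2049. A minimal standard-library sketch (the helper name is hypothetical), run from a machine on the same network as the ESXi hosts; on the hosts themselves, `vmkping` covers basic reachability:

```python
import socket
from typing import Dict, Iterable

# Assumed ports: 111 = rpcbind/portmapper (needed by NFSv3),
# 2049 = nfsd (NFSv3 and NFSv4.1). mountd's port is not checked here.
NFS_PORTS = (111, 2049)


def check_tcp_ports(host: str, ports: Iterable[int], timeout: float = 3.0) -> Dict[int, bool]:
    """Try a TCP connect to each port; True means the connection was accepted."""
    results: Dict[int, bool] = {}
    for port in ports:
        try:
            with socket.create_connection((host, port), timeout=timeout):
                results[port] = True
        except OSError:
            # Refused, timed out, or unreachable all count as "not open" here.
            results[port] = False
    return results


if __name__ == "__main__":
    for port, ok in check_tcp_ports("10.0.0.11", NFS_PORTS).items():
        print(f"10.0.0.11:{port} {'open' if ok else 'closed/filtered'}")
```

An open result only shows the port is reachable; it says nothing about the per-host NFS permission rules, which are checked after the connection is made.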

Is anyone using XPEnology to supply NFS for ESXi 7? I was not able to make it work. It does work as expected on my real Synology 1815+ running the DSM 7.0 beta, but no matter what I tried, I could not get the ESXi host to add a datastore on the XPEnology NAS. I can use NFS v3 and v4 with my real Synology. If you are able to make it work, I would appreciate some feedback.