haydibe

-

Posts

705 -

Joined

-

Last visited

-

Days Won

35

Posts posted by haydibe

-

-

4 hours ago, snowfox said:

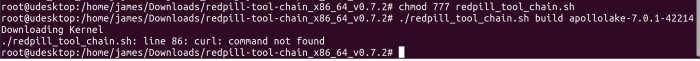

The error message says "curl: command not found". Install the curl package of your distro.

The same is true if "jq" or "realpath" are missing on your system.

I should probably add a sanity check that indicates when required commands are missing.
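A minimal sketch of what such a check could look like. The function name `check_required_commands` is made up for illustration and is not part of the toolchain builder; the command list (curl, jq, realpath) is taken from the post above.

```shell
# Hypothetical helper (an assumption, not the toolchain builder's code):
# report every command from the argument list that is not found in PATH.
# Returns 0 if all commands exist, 1 if at least one is missing.
check_required_commands() {
    local missing=0 cmd
    for cmd in "$@"; do
        if ! command -v "${cmd}" >/dev/null 2>&1; then
            echo "Required command not found: ${cmd}" >&2
            missing=1
        fi
    done
    return "${missing}"
}

# Example call near the top of a script:
# check_required_commands curl jq realpath || exit 1
```

Reporting all missing commands in one pass, instead of stopping at the first one, saves a round trip for every missing package.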

Update: I see it was answered above.

-

4 hours ago, hoangminh88 said:

Can you help me!

The real work needs to be done in redpill-load.

The toolchain builder really is just a convenient way to provide the toolchain required to build redpill-lkm and run redpill-load. It does nothing on its own.

You just created "profiles" that instruct the toolchain builder to build a specific toolchain. In order to build a bootloader for a not yet supported platform, you will still need to write a config in redpill-load. No redpill-load config == not possible to create a bootloader image.

-

2 hours ago, hoangminh88 said:

I try build img from ds.geminilake-7.0.dev.txz but error here

I assume you created the redpill-load config for geminilake yourself?

-


1 hour ago, WiteWulf said:

DS918+ isn't compatible with that CPU. I've got the same CPU in an HP Microserver and can only use the Bromolow images, such as DS3615XS.

But you did notice the v4 at the end, didn't you? Xeon E3-12xx v3 and newer should be compatible. I am running it without any problems on an E3-1275 v5. E3-12xx v2 and older are not compatible.

-


On Proxmox 7.0.1 it's quite easy to edit the grub.cfg without having to rebuild the image or upload a modified image again.

Make sure the DSM VM is shut down before executing the commands, otherwise the image might get damaged!

    # check if the loopback device is already in use
    losetup -a
    # loopback device to use; change it if losetup -a showed that it is already in use
    _LOOP_DEV=/dev/loop1
    # path to your redpill image; change this to reflect your path
    _RP_IMG=/var/lib/vz/images/XXX/redpill.img
    # attach the image to the loopback device and mount its first partition to /tmp/mount
    losetup -P "${_LOOP_DEV}" "${_RP_IMG}"
    mkdir -p /tmp/mount
    mount "${_LOOP_DEV}p1" /tmp/mount
    # edit the file
    vi /tmp/mount/boot/grub/grub.cfg
    # unmount the partition and detach the image from the loopback device again
    umount /tmp/mount
    losetup -d "${_LOOP_DEV}"

Then start the VM again.

-

You might want to search for DiskIdxMap and SataPortMap in this thread for this.

-

3 minutes ago, maxhartung said:

I tried mkdir folder called 100 (machine id), put the img there and see if it works but same error.

Mine looks like this:

    args: -device 'qemu-xhci,addr=0x18' -drive 'id=synoboot,file=/var/lib/vz/images/XXX/redpill.img,if=none,format=raw' -device 'usb-storage,id=synoboot,drive=synoboot,bootindex=5'
    boot: order=sata0
    cores: 2
    machine: q35
    memory: 4096
    name: DSM
    net0: virtio=XX:XX:XX:XX:XX:XX,bridge=vmbr0
    onboot: 1
    ostype: l26
    sata0: local-lvm:vm-XXX-disk-0,discard=on,size=100G,ssd=1
    serial0: socket
    smbios1: uuid=XXX
    usb0: spice,usb3=1
    vmgenid: XXX

I crossed out some details with X, but that's the config that works for me without any issues.

-

I am not sure if it's because of the path you chose or because of the absence of the usb0 device. Apart from having my bootloader image in /var/lib/vz/images/${MACHINEID}/${IMAGENAME}, the args line looks identical.

Works like a charm on pve 7.0.1.

-

1 hour ago, john_matrix said:

Maybe you should add a hash check before the building process?

Good idea. Next time I am in the mood, I will check how ttg did it in rp-load and take it as inspiration. It is easiest to implement directly after the download, but it would be safer directly before the image is built. I am curious how ttg solved it in rp-load.
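A rough sketch of what such a check could look like, usable either right after the download or right before the image build. This is an assumption for illustration only: `verify_sha256` is a made-up helper, SHA-256 via `sha256sum` is my choice here, and it is not how rp-load does it.

```shell
# Hypothetical helper (an assumption, not taken from rp-load or the toolchain builder):
# verify_sha256 FILE EXPECTED_HASH
# Returns 0 if the SHA-256 checksum of FILE equals EXPECTED_HASH, 1 otherwise.
verify_sha256() {
    local file="$1" expected="$2" actual
    # sha256sum prints "<hash>  <file>"; keep only the hash.
    # For a missing file the hash stays empty and the comparison below fails.
    actual="$(sha256sum "${file}" | cut -d ' ' -f 1)"
    if [ "${actual}" != "${expected}" ]; then
        echo "Checksum mismatch for ${file}: expected ${expected}, got ${actual}" >&2
        return 1
    fi
    return 0
}

# Example call before building (path and hash are placeholders):
# verify_sha256 "/path/to/download.txz" "<expected sha256>" || exit 1
```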

-

18 minutes ago, Amoureux said:

Maybe add clear cache every build default?

Thought about it, but it's a rather expensive operation because no build would be able to leverage any sort of build cache. I am not sure how I feel about that.

Update: I hear you. Now there is a setting in `global_settings.json` that enables auto clean, which does what you asked for. It is set to "false" by default and needs to be set to "true" in order to be enabled.

-


Toolchain builder updated to 0.7.1

Changes:

- `clean` now cleans the build cache as well and shows before/after statistics of the disk consumption docker has on your system.

Bear in mind that cleaning the build cache will make the next build take longer, as it needs to build up the cache again.

See README.md for instructions.

Update: removed attachment, please use download 0.7.2 instead, which cleans the build cache as well.

-


The Dockerfile didn't change in a way that would cause this behavior. I assume it was a docker "hiccup". Though, I can confirm that packages are downloading bloody slowly from the Debian apt repos this morning. I was busy in the kitchen and didn't notice it right away.

I have been building images all morning, because I want to generate images and populate the build cache to see if my latest modification works.

I forgot to cover cleaning the build cache in the clean action. I will release it as soon as I have finished testing.

-


This warning (!= error) has been there from the beginning.

-

5 hours ago, T-REX-XP said:

Is it possible add UEFI support to the tool chain ?

Actually, this is nothing the toolchain does; it is something that needs to be done in redpill-load. The DSM7.0.1 versions point to repos from jumkey and chchia that actually seem to have added support for UEFI.

-

Updated the toolchain image builder to 0.7

Changes:

- Added DSM7.0.1 support (done in 0.6.2)

- Added `clean` action that either deletes old images for a specific platform version or for all, if `all` is used instead.

- Added the label `redpill-tool-chain` to the Dockerfile, it will be embedded in new created images and allows the clean action to filter for those images.

The clean action will only work for images built with 0.7 or later. It will not clean up images created by previous versions of the script.

Use something like `docker image ls --filter dangling=true -q | xargs -r docker image rm` or `docker image prune` to get rid of previously existing images (this might delete more than just the redpill-tool-chain images).
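For images that already carry the label, filtering by it could look roughly like the sketch below. The function names are made up for illustration and this is not the code of the `clean` action; it only assumes the label key `redpill-tool-chain` mentioned above and the standard `label=<key>` filter of `docker image ls`.

```shell
# Hypothetical helpers (an assumption, not the toolchain builder's clean action).

# List the unique IDs of all local images that carry the redpill-tool-chain label.
list_toolchain_images() {
    docker image ls --filter "label=redpill-tool-chain" --quiet | sort -u
}

# Remove those images; does nothing when no labeled image exists.
remove_toolchain_images() {
    local ids
    ids="$(list_toolchain_images)"
    if [ -z "${ids}" ]; then
        return 0
    fi
    # Word splitting is intended here: one image ID per argument.
    # shellcheck disable=SC2086
    docker image rm ${ids}
}
```

The `sort -u` is there because an image with several tags shows up once per tag in the listing.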

See README.md for usage.

-


As in create a new image? Since the version information is embedded into the image during build time, I am afraid it needs to be rebuilt.

If something is wrong it becomes easier to pinpoint, thus to maintain.

Though, feel free to modify the script to support your needs. Pass TARGET_PLATFORM, TARGET_VERSION and TARGET_REVISION as --env into the container when the container is created in the runContainer function and you should be good. This is off the top of my head and might miss other required variables, but they would follow the same principle: identify the variable and just pass it in as --env.

Update: apparently it's more complicated than that. Each image is for a dedicated platform version and set of configured git repositories.

-

Thanx, will add it and repost. I should have tested it before *cough*

Also, I missed that vscode highlighted the block as incomplete...

The repost is attached.

In case I miss out on those things: feel free to post the zip here, no need to wait for me.

Update: deleted the attachment again -> look for 0.7, which has a "clean" action now to clean up old images.

-


2 minutes ago, flyride said:

UUID/superblock is how it works. Device names can (and do) change and it has no impact on normal md services.

So it is not just the drive order that is irrelevant, but also the device path (and thus the controller type).

I was aware of the first part, but not of the second.

Good to know

-

I am glad that I have been wrong about changes regarding /dev/sas vs. /dev/sd in the driver - it makes sense because there was no evidence in the change log.

There must be some sort of config/flag that is responsible for this.

Pity that @jumkey's suggestion with CONFIG_SYNO_SAS_DEVICE_PREFIX didn't play out. Thanks for testing @pigr8!

-

3 hours ago, Orphée said:

but as already mentionned here, even with mpt2sas loaded on redpill loader, disk passed through is detected as /dev/sas* and not handled by DSM gui.

Oh boy🙄 This will leave plenty of fun for LSI9211 owners who want to migrate from (jun's) 6.2.3 to (redpill) 7.0. It must be a behavior of the mpt2sas driver itself. It almost looks like they changed from /dev/sd? to /dev/sas? at some point and the new drivers happen to be built from sources after that change? Afaik Bromolow DSM6.2.3 uses 3.10.105, and the change logs of https://mirrors.edge.kernel.org/pub/linux/kernel/v3.x/ChangeLog-3.10.106, https://mirrors.edge.kernel.org/pub/linux/kernel/v3.x/ChangeLog-3.10.107 or https://mirrors.edge.kernel.org/pub/linux/kernel/v3.x/ChangeLog-3.10.108 (which is what the bromolow DSM7.0 kernel sources are based on) indicate nothing that would point to such a change (or anything helpful). But what caused the different detection then?

I expect lvm/mdadm raids to identify the drives belonging to an array by device path (/dev/sd?) and not by the physical controller they are attached to, thus a switch from /dev/sd? to /dev/sas? will result in a broken array?! I hope I really got this wrong and some sort of magic that relies on disk-uuid, serial number or whatever is used to self-heal the situation.

-

19 minutes ago, Orphée said:

with recommended values given by everyone : DiskIdxMap=1000 SataPortMap=4

I can install DSM and it boots OK.

I tried to change the values to understand why with my old values it did not work 'DiskIdxMap=0C SataPortMap=1)

Why would anyone provide an incomplete SataPortMap? It restricts the first controller to 4 ports and lets its first drive start at position 16, which is drive 17 (+1 because of the 0 offset) in the UI, and then lets the next controller start at 0, which is drive 1 (+1 because of the 0 offset) in the UI. This may or may not work, and for my taste it is too much of a gamble.

The old value assumed that the boot drive is exclusively on the first SATA port and shifted it to position 12, which is the 13th drive in the UI (+1 because of the 0 offset).

Though, as @ThorGroup pointed out at least twice, the PCI bus emulation will most likely result in a different order in which the controllers are detected than it was with jun's bootloader without the PCI bus emulation.

Overall, it is no rocket science to find the right settings for your setup. The configuration depends on the number of detected controllers in DSM and the number of drives for each controller. The only part that remains dark magic is to figure out which controller has which position in SataPortMap and DiskIdxMap.
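To make the arithmetic above easier to follow, here is a small sketch that decodes both values the way I read them in this post: one digit per controller in SataPortMap (number of ports) and two hex digits per controller in DiskIdxMap (zero-based index of its first drive). `describe_disk_map` is a made-up helper for illustration, not something DSM or redpill provides.

```shell
# Hypothetical helper (an assumption based on the reading described above):
# describe_disk_map SATA_PORT_MAP DISK_IDX_MAP
# Prints one line per controller found in DISK_IDX_MAP.
describe_disk_map() {
    local port_map="$1" idx_map="$2" i=0 hex ports start
    while [ -n "${idx_map}" ]; do
        # The first two hex digits are the zero-based start index of controller i.
        hex="$(printf '%s' "${idx_map}" | cut -c 1-2)"
        start=$(( 0x${hex} ))
        # Drop the two digits that were just consumed.
        idx_map="$(printf '%s' "${idx_map}" | cut -c 3-)"
        # The digit at position i+1 of SataPortMap is the port count of controller i.
        ports="$(printf '%s' "${port_map}" | cut -c "$(( i + 1 ))")"
        echo "controller ${i}: ports=${ports:-unset} first_index=${start} first_ui_drive=$(( start + 1 ))"
        i=$(( i + 1 ))
    done
}

# Example calls with the values from this thread:
# describe_disk_map 4 1000
# describe_disk_map 1 0C
```

With `4` and `1000` the second controller shows up with `ports=unset`, which is exactly the incomplete part of the recommended values.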

-

Somehow it rings a bell when it comes to the drive limit. Though, I don't really recall what the baseline for disaster was ^^

Of course the meta-data in the superblocks is worth nothing if you hit a hard limitation of the platform itself.

-

Since the raid/shr information is stored in the meta-data superblock it should not matter if the drives are in the same order.

Though, when it comes to having the ordering of your choice, you might want to start tinkering with SataPortMap and DiskIdxMap.

Actually, I have never seen a QEMU XML. I haven't stumbled across one on Proxmox so far; no such files are located in /etc or /lib.

I have yet to understand if Proxmox allows that level of configuration for storage.

Update: it seems Unraid uses libvirt as a wrapper around QEMU/KVM. Proxmox directly instructs QEMU/KVM (Warning: do not try to install libvirt on Proxmox, it might break your installation!).

-


docker causing kernel panics after move to 6.2.4 on redpill

in Developer Discussion Room

Posted

Last time I checked, InfluxDB 2.x used a different query language, which will require you to update all queries in your dashboards... Though, this was two or three months ago; maybe they have finally ported the old query language to InfluxDB 2.x as well.