Search the Community

Showing results for tags 'crashed volume'.


couldn't open RDWR because of unsupported option features (3).

ADMiNZ posted a question in General Questions

Hello. The read/write cache on my storage is broken. The disks are present, but the system reports that there is no cache and that the volume is not available. I tried it in Ubuntu:

root@ubuntu:/home/root# mdadm -D /dev/md2
/dev/md2:
Version : 1.2
Creation Time : Tue Dec 8 23:15:41 2020
Raid Level : raid5
Array Size : 7794770176 (7433.67 GiB 7981.84 GB)
Used Dev Size : 1948692544 (1858.42 GiB 1995.46 GB)
Raid Devices : 5
Total Devices : 5
Persistence : Superblock is persistent
Update Time : Tue Jun 22 22:16:38 2021
State : clean
Active Devices : 5
Working Devices : 5
Failed Devices : 0
Spare Devices : 0
Layout : left-symmetric
Chunk Size : 64K
Consistency Policy : resync
Name : memedia:2
UUID : ae85cc53:ecc1226b:0b6f21b5:b81b58c5
Events : 34754

root@ubuntu:/home/root# btrfs-find-root /dev/vg1/volume_1
parent transid verify failed on 20971520 wanted 48188 found 201397
parent transid verify failed on 20971520 wanted 48188 found 201397
parent transid verify failed on 20971520 wanted 48188 found 201397
parent transid verify failed on 20971520 wanted 48188 found 201397
Ignoring transid failure
parent transid verify failed on 2230861594624 wanted 54011 found 196832
parent transid verify failed on 2230861594624 wanted 54011 found 196832
parent transid verify failed on 2230861594624 wanted 54011 found 196832
parent transid verify failed on 2230861594624 wanted 54011 found 196832
Ignoring transid failure
Couldn't setup extent tree
Couldn't setup device tree
Superblock thinks the generation is 54011
Superblock thinks the level is 1
Well block 2095916859392(gen: 218669 level: 1) seems good, but generation/level doesn't match, want gen: 54011 level: 1
Well block 2095916580864(gen: 218668 level: 1) seems good, but generation/level doesn't match, want gen: 54011 level: 1
Well block 2095916056576(gen: 218667 level: 1) seems good, but generation/level doesn't match, want gen: 54011 level: 1
Well block 2095915335680(gen: 218666 level: 1) seems good, but generation/level doesn't match, want gen: 54011 level: 1

root@ubuntu:/home/root# btrfs check --repair -r 2095916859392 -s 1 /dev/vg1/volume_1
enabling repair mode
using SB copy 1, bytenr 67108864
couldn't open RDWR because of unsupported option features (3).
ERROR: cannot open file system

root@ubuntu:/home/root# btrfs check --clear-space-cache v1 /dev/mapper/vg1-volume_1
couldn't open RDWR because of unsupported option features (3).
ERROR: cannot open file system

root@ubuntu:/home/root# btrfs check --clear-space-cache v2 /dev/mapper/vg1-volume_1
couldn't open RDWR because of unsupported option features (3).
ERROR: cannot open file system

root@ubuntu:/home/root# mount -o recovery /dev/vg1/volume_1 /mnt/volume1/
mount: /mnt/volume1: wrong fs type, bad option, bad superblock on /dev/mapper/vg1-volume_1, missing codepage or helper program, or other error.

Every command I try gets the same answer: couldn't open RDWR because of unsupported option features (3). Can anything be recovered from an array in this state? Thank you very much in advance!

- 1 reply
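As a quick sanity check on the numbers pasted above, here is a minimal Python sketch. It only redoes arithmetic on values copied from the `mdadm -D` and `btrfs-find-root` output; the variable names are mine, and the sizes are taken to be in KiB, which is how `mdadm -D` prints them. If the RAID 5 capacity adds up and all five members are active, that suggests the md layer is intact and the damage sits in the btrfs metadata, but that is an inference, not a diagnosis.

```python
# Values copied from the mdadm -D output in the post (sizes are in KiB).
raid_devices = 5
used_dev_size_kib = 1948692544
reported_array_size_kib = 7794770176

# RAID 5 spends one member's worth of space on parity,
# so usable capacity is (n - 1) * per-device size.
expected_array_size_kib = (raid_devices - 1) * used_dev_size_kib
array_size_matches = expected_array_size_kib == reported_array_size_kib
print(expected_array_size_kib, array_size_matches)

# Generations copied from the btrfs-find-root output in the post.
superblock_generation = 54011        # "Superblock thinks the generation is 54011"
newest_candidate_generation = 218669  # highest "gen:" among the candidate blocks

# How far the superblock copy lags behind the newest tree root that was found.
generation_gap = newest_candidate_generation - superblock_generation
print(generation_gap)
```

The capacity check passes, and the superblock being read is more than 160,000 generations older than the newest root candidate, which fits the repeated "parent transid verify failed" messages.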

Tagged with: crashed volume, lvm (and 4 more)

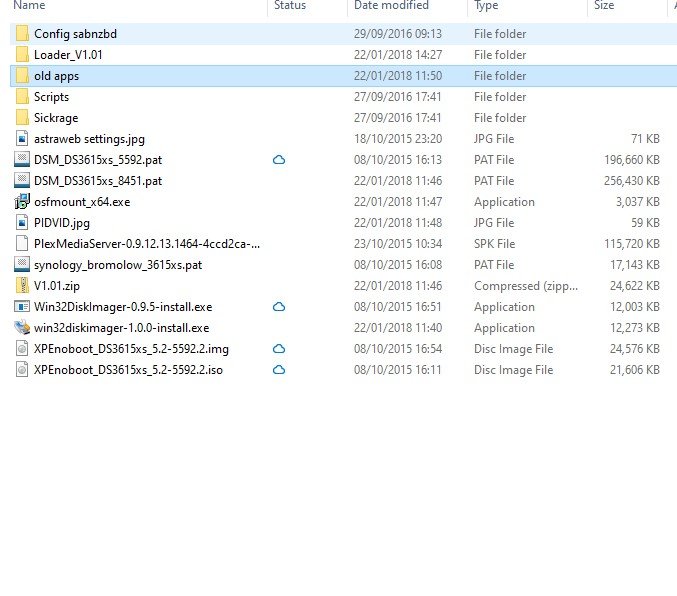

I would appreciate a little gentle guidance, please (sorry this is a little rambling, I just want to get it right).

I set up my HP Gen8 Microserver over 5 years ago and did an interim upgrade, so I am currently on DSM 6.0.2-8451 as a DS3615xs. Looking at my "server" folder, I see I have JunMod loader v1.01. I've slept a bit since then and will be relearning the process. The pic shows what I have already.

Now the issue: I had 2 x 3TB HDDs as JBOD, and Volume 1 (with all the software installs) has crashed. It is read-only. I can boot into the server as read-only, so I have saved the config file, but none of the software works (git/python/plex/videostation etc.). I have managed to back up my family media.

So I am planning to bin the crashed drive and install 2 new discs in a RAID 1 (mirror) config. I will use the working volume 2 elsewhere, unless someone advises I can use it as some form of backup as a 3rd disc in xpenology? The 2 new discs are 6TB each and the old one is 3TB.

I am assuming all this means I will basically be doing a "new" install, not an "upgrade", meaning I need a new USB loaded with 1.03b. Is that right?

Will it even be possible, or worth it, to restore any of the settings I currently have backed up from 6.0.2 if there will be new HDDs in a RAID config? I am already resigned to having to relearn all the settings for SAB etc., as nothing runs and I don't have any backup for those settings ("I will get around to it").

- 12 replies

Tagged with: noob, new install (and 3 more)

Hi, I've been running DSM 5.2 happily on my HP Gen8 for a few years. It contains 2 WD Red drives as JBOD (no RAID).

Well, yesterday Volume 1 crashed. I've managed to recover my data (pix etc.), so that disc needs to come out, but I'm wondering whether I should also swap out the other one (3TB) and replace both with 2 x 4TB drives running as RAID 1 for integrity. I figured it would be an excuse to finally upgrade to DSM 6.2 while I'm at it (pretty much start again as a new install).

BUT here is my main stress: all the multimedia apps are installed on the crashed volume and don't run, so I can't get at their settings (it's been a REALLY long time since I did this, and setting all those up again will make me cry even more than I am already 😢).

Is there any way to get a backup of these settings and configuration? What settings are included in the backup section of Control Panel anyway?

Thanks


Restore data from BTRFS SHR1 volume crashed competition

supermounter posted a question in General Questions

Hello. I'm trying to restore my btrfs volume2, but it does not seem to be so easy, especially for a newbie like me. I followed some old posts from this forum that gave a path to a solution, but after many tries, and finally a btrfs dry-run restore to check whether I am able to restore, I am still running into some errors.

Do I have to pull my 4 disks out of my NAS and connect them to a spare computer running an Ubuntu live CD to do this restore/copy? Before I purchase a new 6 TB drive to receive the 5.5 TB of data to restore, I want to be sure I can really restore my data from this crashed volume.

Any help will be much appreciated, and a big Easter chocolate egg will be sent to the guardian angel who offers me his knowledge and time and gives me a way to copy my data to another secure place.

I have been trying to troubleshoot my volume crash myself, but I am at my wits' end here. I am hoping someone can shed some light on what my issue is and how to fix it.

A couple of weeks ago I started to receive email alerts stating, "Checksum mismatch on NAS. Please check Log Center for more details." I hopped on my NAS WebUI and did not really see much in the logs. After checking that my systems were still functioning properly and that I could access my files, I figured something was wrong but that it was not a major issue... how wrong I was.

That brings us up to today, when I noticed my NAS was in read-only mode, which I thought was really odd. I tried logging into the WebUI, but after I entered my username and password I was not getting the NAS's dashboard. I figured I would reboot the NAS, thinking it would fix the issue. I had problems with the WebUI being buggy in the past, and a reboot always seemed to take care of it. But after the reboot I received the dreaded email, "Volume 1 (SHR, btrfs) on NAS has crashed". I am unable to access the WebUI. Luckily, I have SSH enabled, so I logged on to the server, and that's where we are now.

Some info about my system:

- 12 x 10TB drives
- Synology 6.1.X as a DS3617xs
- 1 SSD cache
- 24 GB of RAM
- 1 x Xeon CPU

Here is the output of some of the commands I have tried already (I had to edit some of the outputs due to SPAM detection):

Looks like the RAID comes up as md2. It seems to have the 12 drives active, but I am not 100% sure.

I received an error when running this command: GPT PMBR size mismatch (102399 != 60062499) will be corrected by w(rite). I think this might have something to do with the checksum errors I was getting before.

When I try to interact with the LV, it says it couldn't open the file system. When I try to unmount and/or remount the LV, it gives me errors saying it is not mounted, already mounted, or busy.

Can anyone comment on whether it is possible to recover the data? Am I going in the right direction?

Any help would be greatly appreciated!
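To put the two numbers in the "GPT PMBR size mismatch (102399 != 60062499)" warning into perspective, here is a minimal Python sketch that converts them to sizes. It assumes 512-byte logical sectors and treats each number as a 0-based last-sector index; both are assumptions on my part, and the helper name `lba_to_bytes` is made up for this example.

```python
SECTOR_BYTES = 512  # assumption: 512-byte logical sectors

pmbr_last_lba = 102399      # first number in the fdisk warning
device_last_lba = 60062499  # second number in the fdisk warning


def lba_to_bytes(last_lba, sector_bytes=SECTOR_BYTES):
    """Size in bytes of a region that ends at the given 0-based sector index."""
    return (last_lba + 1) * sector_bytes


pmbr_bytes = lba_to_bytes(pmbr_last_lba)
device_bytes = lba_to_bytes(device_last_lba)

print(f"Protective MBR covers {pmbr_bytes / 2**20:.1f} MiB")
print(f"Device spans          {device_bytes / 2**30:.2f} GiB")
```

Under those assumptions the protective MBR describes about 50 MiB on a device of roughly 28.6 GiB. Both figures are far smaller than a 10TB data drive, so the warning may concern a small device such as boot media rather than the crashed volume, though the post does not say which device the command was run against.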


Hi, I am using Synology Hybrid RAID (SHR) with 5 drives. 4 are working fine, but 1 is failing, and my volume has crashed. I know that SHR has a 1-disk fault tolerance, so it should recover if only 1 drive has failed, but I cannot recover it. Please help me.


So I wanted to post this here as I have spent 3 days trying to fix my volume. I am running xpenology on a JBOD NAS with 11 drives (DS3615xs, DSM 5.2-5644).

A few months ago I had a drive go bad and the volume went into degraded mode. I failed to replace the bad drive at the time because the volume still worked. A few days ago I had a power outage and the NAS came back up as crashed. I searched many Google pages on what to do to fix it and nothing worked. The bad drive was not recoverable at all.

I am no Linux guru, but I had similar issues before on this NAS with other drives, so I tried to focus on mdadm commands. The problem was that I could not copy any data over from the old drive. I found a post here https://forum.synology.com/enu/viewtopic.php?f=39&t=102148#p387357 that talked about finding the last known configs of the md RAIDs. I was able to determine that the bad drive was /dev/sdk.

I tried fdisk and gparted, and realized I could not use gdisk since it is not native in xpenology, and my drive was 4TB and GPT. So I plugged the drive into a USB hard drive bay on a separate Linux machine. I was able to use another working 4TB drive as a reference and copy the partition table almost identically using gdisk. Don't try to do it on Windows; I did not find a worthy tool to partition it correctly.

After validating my partition numbers, start and end sizes, and file system type FD00, I stuck the drive back in my NAS. I was able to do mdadm --manage /dev/md3 --add /dev/sdk6, and as soon as the partitions showed up under cat /proc/mdstat I could see the RAIDs rebuilding. I have 22TB of space, and the bad drive was lost on md2, md3 and md5, so it will take a while. I am hoping my volume comes back up after they are done.
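For anyone who would rather not keep re-reading `cat /proc/mdstat` by eye while the arrays rebuild, here is a minimal Python sketch that pulls the rebuild percentage out of mdstat-style text. The sample text below is hypothetical and only follows the usual /proc/mdstat layout; it is not this poster's output, and the function name `rebuild_progress` is made up for this example.

```python
import re

# Hypothetical sample in the usual /proc/mdstat layout; NOT the poster's actual output.
SAMPLE_MDSTAT = """\
Personalities : [raid1] [raid5]
md3 : active raid5 sdk6[4] sda6[0] sdb6[1] sdc6[2]
      2930228736 blocks super 1.2 level 5, 64k chunk, algorithm 2 [4/3] [UUU_]
      [=>...................]  recovery =  7.5% (73255718/976742912) finish=210.4min speed=71562K/sec
md2 : active raid1 sda5[0] sdb5[1]
      976742912 blocks super 1.2 [2/2] [UU]
unused devices: <none>
"""


def rebuild_progress(mdstat_text):
    """Return {array_name: percent_done} for arrays that are recovering or resyncing."""
    progress = {}
    current = None
    for line in mdstat_text.splitlines():
        # An array section starts with a line like "md3 : active raid5 ...".
        header = re.match(r"^(md\d+)\s*:", line)
        if header:
            current = header.group(1)
            continue
        # The progress line belongs to the most recently seen array header.
        status = re.search(r"(?:recovery|resync)\s*=\s*([\d.]+)%", line)
        if status and current:
            progress[current] = float(status.group(1))
    return progress


if __name__ == "__main__":
    # On a real system, read the file instead of the sample:
    #   with open("/proc/mdstat") as f:
    #       text = f.read()
    print(rebuild_progress(SAMPLE_MDSTAT))
```

Arrays that are not rebuilding (md2 in the sample) are simply left out of the result, so an empty dictionary means no recovery or resync is in progress.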

- 4 replies

Tagged with: mdadm, degraded volume (and 1 more)