flyride

Posts: 2,438

Days Won: 127

Posts posted by flyride

-

2 hours ago, Mikeyboy2007 said:

I'm unsure if it has RAID; I'm just using the SATA ports on the motherboard, and as it's a server I assumed it was RAID. It's the injecting of the drivers I'm unsure how to do, as I would need to do this for the Broadcom drivers for the Ethernet ports to work.

I don't think you can assume anything. You need to know how your server works before you do anything else.

ICH10R (Intel chipset) connected ports may have a simple BIOS-accessible RAID 0/1 option which you should turn "off."

However, some server motherboards can have an embedded third-party RAID controller such as an LSI/MegaRAID which would not work well unless flashed to "IT" mode as previously described. Embedded controllers may not be flashable so ports connected to those controllers would not be good candidates for XPEnology.

-

45 minutes ago, ruffpl said:

So if I use a host system (ESXi, Proxmox, etc.) and pass through all my needed SATA disks to the virtual machine (XPEnology), will they work like normal disks connected directly to it, independent from the host?

Yes, that's what passthrough means: the disks are independent of the virtual host. But at least with ESXi, you can't pass through an individual disk, only a controller, in which case the attached disks come along with it. Alternatively, RDM (Raw Device Mapping) on ESXi has the host provide the driver and connectivity while presenting a raw, translated interface to the VM, without managing the disk in any way. So it's technically not passthrough, but it works the same as far as DSM can see.
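For the RDM route, one common way to create the mapping from the ESXi shell is vmkfstools -z, which writes a physical-compatibility RDM pointer file that you then attach to the VM as an existing disk. The helper below is a hypothetical sketch, not something ESXi or DSM provides: the disk ID, datastore and VM folder are placeholders you must adapt, and by default it only prints the command (set DRY_RUN=0 to actually run it).

```shell
# Hypothetical helper: build (or run) the ESXi command that creates a
# physical-compatibility RDM pointer file for one local disk.
# Assumptions: run in the ESXi shell; disk_id is a name listed under
# /vmfs/devices/disks/; datastore and vm_dir are placeholders.
make_rdm() {
    disk_id=$1      # e.g. a t10.ATA_____... entry from /vmfs/devices/disks/
    datastore=$2    # e.g. datastore1
    vm_dir=$3       # folder of the XPEnology VM on that datastore
    src="/vmfs/devices/disks/$disk_id"
    dst="/vmfs/volumes/$datastore/$vm_dir/${disk_id}-rdm.vmdk"
    # -z creates a physical-compatibility (pass-through) RDM;
    # -r would create a virtual-compatibility RDM instead.
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "vmkfstools -z $src $dst"
    else
        vmkfstools -z "$src" "$dst"
    fi
}
```

Physical compatibility is the variant that passes SCSI commands through to the disk, which is closest to what DSM would see on bare metal. List the candidate disk IDs with ls /vmfs/devices/disks/ before running it for real.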

45 minutes ago, ruffpl said:

Then if I create btrfs pool from those disks by DSM I will have whole control of them by xpenology/ not by host system (so we can say that it will be entirely separated NAS machine located on my server)? So whole data on it will be stored on normal btrfs partition / not any kind of huge virtual disk or something similar?

This is the same question and answer as above, just restated.

45 minutes ago, ruffpl said:

I am asking because I am thinking about moving my whole data pool directly to Xpenology because I want to have control of snapshots, indexing etc that I can not deal with right now when the pool is connected by NFS share into DSM

If you don't use DSM to control your disk devices, then you may as well just run a generic Linux instance and NFS mount remote storage to that. DSM offers you no advantage if it cannot control the disks.

45 minutes ago, ruffpl said:

Will the system (DSM) that will be stored on virtual sata (on host ) is also going to have some copy on btrfs pool? (I had it like that on my old DSM5 that every disk was splitted on partitions and had a copy of DSM system)

The virtual disk on the host, which holds your bootloader image, does not have DSM on it. As with DSM5, DSM6 stores a copy of the OS and a swap partition on every disk allocated to it. The OS partition is ext4, not btrfs.
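If you want to see those per-disk OS and swap copies for yourself, /proc/mdstat lists every md array and its member partitions. The function below is a minimal sketch that summarizes it, assuming the usual "mdN : active raidL member[n] ..." line format. Expecting md0 to be the DSM system array and md1 to be swap is an observation about typical DSM 6 installs, not something Synology documents.

```shell
# Sketch: print "array level member member ..." for each md array.
# Reads /proc/mdstat by default, or a file given as $1 (useful for testing).
# Assumes lines like "md0 : active raid1 sda1[0] sdb1[1]"; arrays in other
# states (e.g. "inactive") would shift the fields and are not handled.
md_members() {
    awk '/^md[0-9]+ :/ {
        line = $1 " " $4
        for (i = 5; i <= NF; i++) {
            d = $i
            sub(/\[.*$/, "", d)   # strip "[0]" and any "(F)" flag after it
            line = line " " d
        }
        print line
    }' "${1:-/proc/mdstat}"
}
```

On a DSM box, running md_members with no argument should show one partition per data disk in each of the system and swap arrays.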

-

Passthrough disks can be moved to a bare-metal system or connected to another virtual system with no issue.

But you should always have a backup method. Always.

-

One tutorial; don't reinvent the wheel. The install should be the same. ICH10R is an AHCI-compliant controller, so no special treatment is required. Do you also have a RAID controller on the system? Many of those don't work or need to be flashed to "IT" mode, which basically defeats their RAID configuration. Again, pretty standard stuff.

1.02b loader and DS3615xs DSM 6.1.7 should work with no changes out of the box.

1.03b loader and DS3615xs DSM 6.2.2 will work if you add an Intel NIC or the extra.lzma to support the Broadcom NICs.

-

This is what I'm using for mine. The case fan is near-silent, and the power supply fan does not turn at the loads presented by the J4105 motherboard.

http://www.u-nas.com/xcart/product.php?productid=17636

-

I'm passing through my C236 Sunrise Point SATA controller with no issues. I ran it this way on ESXi 6.5 and now also on 6.7.

-

I don't think there is a way to consume external network storage in the UI.

You might be able to manually set up an iSCSI initiator from the command line, then format and mount the target, similar to the NVMe strategy of spoofing in a volume. You'd need a script to reinitialize it on each boot, and I think it would have a high likelihood of breaking.

But you should be able to NFS mount into an active share via command line, and that could easily be scripted to start at boot with no stability concerns.
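A boot-time NFS mount of that kind could look roughly like the sketch below. NFS_SRC and TARGET are hypothetical placeholders (a remote export and a folder inside an existing DSM share), and the script is assumed to run as root, for example from a scheduled boot-up task.

```shell
# Sketch: NFS-mount remote storage into a folder inside an existing share.
# NFS_SRC and TARGET are placeholders; override them in the environment.
NFS_SRC="${NFS_SRC:-nas.example.lan:/export/media}"
TARGET="${TARGET:-/volume1/share/remote}"

# Return 0 if $1 is currently a mount point, by matching the second field
# of /proc/mounts (or of a file given as $2, for testing).
is_mounted() {
    awk -v t="$1" '$2 == t { found = 1 } END { exit !found }' "${2:-/proc/mounts}"
}

# Mount NFS_SRC on TARGET unless it is already mounted (safe to re-run).
mount_remote() {
    if is_mounted "$TARGET"; then
        echo "already mounted: $TARGET"
        return 0
    fi
    mkdir -p "$TARGET"
    mount -t nfs "$NFS_SRC" "$TARGET"
}
```

The boot task would then just call mount_remote. Checking is_mounted first keeps the script idempotent, so running it again after a manual mount does no harm.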

-

There really isn't much CPU work for running the software RAID. These links may give you some confidence. I'm using a mix of RDM and controller passthrough.

https://xpenology.com/forum/topic/13368-benchmarking-your-synology/?do=findComment&comment=137430

-

On 11/2/2018 at 1:51 PM, flyride said:

Hint: Repeat the test a number of times and report the median value.

Loader: jun 1.04b (DS918)

DSM: 6.2.1-23824U1

Hardware/CPU: J4105-ITX

HDD: WD Red 8TB RAID 5 (4 drives)

Results:

dd if=/dev/zero bs=1M count=1024 | md5sum

CPU: 422 MBps

dd bs=1M count=256 if=/dev/zero of=testx conv=fdatasync

WD Red RAID 5-4: 157 MBps

______________________________________________________________________________

My main rig is not on 6.2.1, but I thought I'd record the results on the NVMe platform. I'll repeat the test once it is converted to 6.2.1.

Loader: jun 1.02b (DS3615xs)

DSM: 6.1.7-15284U2

Hardware: ESXi 6.5

CPU: E3-1230v6

HDD: Intel P3500 2TB NVMe RAID 1, WD Red 4TB RAID 10 (8 drives)

Results:

dd if=/dev/zero bs=1M count=1024 | md5sum

CPU: 629 MBps (I do have one other active VM, but it's pretty idle)

dd bs=1M count=256 if=/dev/zero of=testx conv=fdatasync

NVMe RAID 1: 1.1 GBps

WD Red RAID 10-8: 371 MBps

The only thing above that can be directly compared is haldi's RAID5 @ 171MBps vs. mine at 157MBps, although the drives are quite different designs.

I replaced the rust-based RAID 10 with a 5-way RAID F1 using Samsung PM963 SATA SSDs. It tests out at right around 1.0 GBps.
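To follow the "repeat the test and report the median" hint without doing it by hand, something like the sketch below could wrap the write test. It assumes GNU-style dd output, whose last stderr line ends in "<rate> <unit>/s"; BusyBox dd and non-English locales print differently. The rate is taken as printed, so if runs straddle a unit boundary (MB/s vs GB/s) the median is meaningless and you should check the raw output.

```shell
# Print the median of the numbers given as arguments.
median() {
    printf '%s\n' "$@" | sort -n | awk '
        { v[NR] = $1 }
        END {
            if (NR == 0) exit 1
            if (NR % 2) print v[(NR + 1) / 2]
            else print (v[NR / 2] + v[NR / 2 + 1]) / 2
        }'
}

# Run the dd write test N times (default 5) in the current directory and
# print the median rate, in whatever unit dd reported.
bench_write() {
    runs=${1:-5}
    rates=""
    i=0
    while [ "$i" -lt "$runs" ]; do
        # Second-to-last field of dd's summary line is the numeric rate.
        rate=$(dd bs=1M count=256 if=/dev/zero of=testx conv=fdatasync 2>&1 |
               tail -n 1 | awk '{ print $(NF - 1) }')
        rates="$rates $rate"
        i=$((i + 1))
    done
    rm -f testx
    median $rates   # unquoted on purpose: one argument per rate
}
```

Run bench_write from inside the volume you want to measure (for example cd /volume1 first), since dd writes testx to the current directory.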

-

DSM is installed on all the data drives in the system. So by definition you create volumes on the same drives as the DSM OS. Any disk that shows "Initialized" or "Normal" has the DSM OS installed to it.

You might be thinking of the bootloader device; that is not the DSM OS. If it's visible, it's because you haven't configured VID/PID and/or SATA mapping to hide it properly. Regardless, do not attempt to create a volume on it.

-

1 hour ago, Captainfingerbang said:

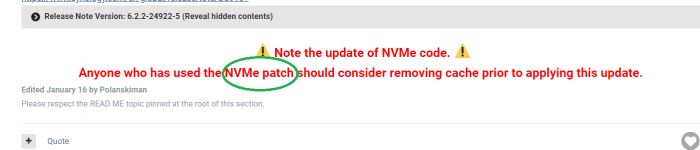

The scripts referred to in this thread implement the patch.

-

If your goal is just to build up an app server, DSM is not the best platform for this. All the "DSM compatible packages" you refer to in the original post are available via Docker.

A Linux server (Ubuntu is fine, other distros too) combined with Docker to virtualize your app suite would be very functional. Look into something like Portainer to help with UI management of the Docker environment to reduce the Linux learning curve.

Regardless of whether you run apps in DSM (via XPEnology or otherwise) or Docker on Linux, you will need to NFS mount the shares hosted on your DS1812+ to get to your data. This functionality is not platform/version specific and works with any combination of DSM version or Linux flavor.

-

I don't know anything about running the HA system, but if the units need to be matched, why not stand up another ESXi instance with a DS3615/3617 image on it instead of a Synology device?

-

Running docker in host or bridge mode won't have any impact on your stated concern.

Your firewall, inherent Docker (in)security, the apps you are using and their configuration will determine that.

-

How are you defining "safe"? qBittorrent should be secured by a VPN if you want to make it "safe." I suggest binhex/qbittorrentvpn.

Bridge mode allows you to solve TCP/IP port conflicts among applications (Docker maps the container's ports to different host ports), but some apps have difficulty running in bridge mode. If you don't have port conflicts, you can run in host mode without modifying the application configuration.

If you don't want your Docker application to know anything about your LAN (and you don't connect to it with any local devices), use bridge mode.
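In host mode a container binds its ports directly on the host, so it is worth checking whether a port is already taken before choosing it; in bridge mode Docker's -p host:container option can publish the container's port on a different host port. The function below is a small sketch of that check. It assumes listening-socket output from ss -ltn or netstat -ltn on stdin, both of which put the local address:port in column 4.

```shell
# Sketch: exit 0 if port $1 appears as a local listening port in
# "ss -ltn" / "netstat -ltn" output read from stdin, 1 otherwise.
# The port is taken as the last ":"-separated piece of column 4, which
# handles both "0.0.0.0:8080" and IPv6 forms like "[::]:8080".
port_in_use() {
    awk -v p="$1" '{ n = split($4, a, ":"); if (a[n] == p) found = 1 }
                   END { exit !found }'
}
```

Usage: ss -ltn | port_in_use 8080 && echo "8080 is taken". If it is, a bridge-mode container started with something like -p 8081:8080 would expose its port 8080 on host port 8081 instead.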

-

30 minutes ago, Zteam_steven said:

I will physically switch the multi operating system environment and co-exist with VMware virtualization. Multi hard disk is RDM mode.

My goal is that these drives can run completely independently and start on demand.

At the same time, it is also convenient for me to directly migrate the hard disk to other hardware compatible computer platforms.

However, most of the time, I don't use virtualization, I just start the synology DSM physical machine running mode.

It's not typical, but there is no reason here not to have two DSM bootloaders that access the same disks.

So use your USB DSM bootloader for bare metal and the image bootloader with Legacy boot from within ESXi, as long as your system can boot the USB key in Legacy boot mode.

When you upgrade DSM, you may want to do it on ESXi first and then write the img file back to the USB key to be conservatively safe. You'll have to update the VID/PID and the grub boot option, but the two loaders are otherwise identical. Even without that, I would guess that the second loader would detect the change and update quietly anyway.
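Updating the VID/PID after writing the img back to the USB key could be scripted along these lines. This is a sketch under an assumption: that the loader's grub.cfg holds them as lines of the form "set vid=0x...." and "set pid=0x....", as Jun's loaders do in my understanding; check your own file first. The USB key's real VID:PID can be read with lsusb on another Linux machine.

```shell
# Sketch: rewrite the VID/PID lines of a loader grub.cfg in place.
# Assumes lines like "set vid=0x058f" / "set pid=0x6387"; all other lines
# are left untouched. Writes to a temp file first, then replaces the original.
set_vid_pid() {
    cfg=$1 vid=$2 pid=$3
    tmp="$cfg.tmp"
    sed -e "s/^\([[:space:]]*set vid=\).*/\1$vid/" \
        -e "s/^\([[:space:]]*set pid=\).*/\1$pid/" "$cfg" > "$tmp" &&
        mv "$tmp" "$cfg"
}
```

For example, set_vid_pid grub.cfg 0x0781 0x5571 on the mounted boot partition of the key; the default boot entry still has to be switched separately.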

-

I think you will be waiting a long time for an EFI-compliant loader for DS3615/17xs and DSM 6.2.x.

You have a few choices:

- Stay on 6.1.7

- Upgrade your CPU to a Haswell+ chip so that you can run DS918+ (and loader 1.04b which supports EFI boot)

- Solve whatever is going on with ESXi that precludes USB passthrough when using legacy boot (I have not heard of this problem before)

No details have been provided on the ESXi problem or your "other programs boot share" requirement. If you were to explain these issues further, maybe there is a solution.

-

@IG-88's extra.lzma may make your Realtek work again.

-

So your CT card is supported by the e1000e driver and is recognized:

0000:03:00.0 Class 0200: Device 8086:10d3

Subsystem: Device 8086:a01f

Kernel driver in use: e1000e

Something else is going on. Does dmesg show anything regarding the card? Also, are you sure it's the Intel that is not being recognized and not the Realtek? What motherboard?

-

You still haven't described your system in any useful terms. How would we know what your onboard controller or NIC is if you haven't provided the brand and model of your motherboard?

Which version of Jun's loader?

Which version of DSM (you have just described the platform, not the version)?

-

The Intel CT with the e1000e driver usually works on 6.2.2. It's possible that there is now a CT silicon revision that the current driver doesn't support. OP, can you post the PCI device ID and related info for the card in question (lspci -k)?
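When reading lspci -k output like the snippet quoted earlier in this thread, the interesting part is whether each network controller (PCI class 0200) has a "Kernel driver in use" line. The function below is a sketch that condenses it to one line per NIC, assuming the output format shown in that snippet (device line, then indented Subsystem/driver lines).

```shell
# Sketch: read "lspci -k" output on stdin and print
# "slot vendor:device driver" for every class 0200 (Ethernet) device,
# with "none" when no kernel driver is bound.
nic_drivers() {
    awk '
        function flush() {
            if (slot != "") print slot, id, (drv == "" ? "none" : drv)
        }
        /^[0-9a-f]/ {                     # a new device line starts here
            flush()
            slot = ""; drv = ""
            if ($2 == "Class" && $3 == "0200:") { slot = $1; id = $NF }
            next
        }
        slot != "" && /Kernel driver in use:/ { drv = $NF }
        END { flush() }
    '
}
```

Usage: lspci -k | nic_drivers. A NIC reported as "none" is one that no loaded driver claimed, which is where dmesg | grep -i e1000e (or the relevant driver name) is worth checking next.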

-

You cannot use EFI mode with 1.03b loader. Legacy boot only. This is well documented.

Are you trying to use a USB key to start your system under ESXi? This is not required; just use the virtual HDD provided in the loader distribution. This works fine for legacy boot.

-

32 minutes ago, merve04 said:

I use ext4. Would your command be as easy as replacing “btrfs” with ext4 in place?

No, the command is different. You also have to determine the name of the host storage device in order to resize ext4. The easiest way to do that is to run "df" and look up the device associated with the volume in question. If you are using SHR, it will be something like /dev/vg1000/lv. If you are using a plain (non-SHR) RAID type, it will be /dev/mdX, where X is a specific number.

Then, resize the volume with this command:

$ sudo resize2fs -f <device>

This works online (with the volume mounted), but I strongly suggest that you restart the system immediately after successfully running an ext4 resize.
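The lookup-then-resize steps above can be combined into a small sketch. It uses df -P so each filesystem stays on one line, takes the device from the first column, and then runs the same resize2fs command as above. /volume1 is only a default placeholder; pass the volume you actually expanded.

```shell
# Print the device (first column of df -P) backing the given mount point.
volume_device() {
    df -P "$1" | awk 'NR == 2 { print $1 }'
}

# Grow the ext4 filesystem on the device behind a DSM volume.
# As noted above, restart the system after a successful resize.
resize_ext4_volume() {
    vol=${1:-/volume1}
    dev=$(volume_device "$vol") || return 1
    [ -n "$dev" ] || return 1
    echo "Resizing $dev (mounted on $vol)"
    sudo resize2fs -f "$dev"
}
```

Running volume_device /volume1 on its own first is a cheap way to confirm you are about to resize the device you expect (an md array or the SHR logical volume).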

-

You should be able to do a migration install of an existing SHR array on DS3615/17xs even though SHR management is not enabled.

If you want to be able to make new SHR volumes, just enable the UI functionality per the FAQ:

https://xpenology.com/forum/topic/9394-installation-faq/?tab=comments#comment-81094
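As I understand the FAQ, the change is an edit to /etc.defaults/synoinfo.conf: comment out supportraidgroup="yes" and add support_syno_hybrid_raid="yes". Treat that as an assumption and verify it against the linked FAQ before touching a real system. The sketch below applies that edit to whichever file it is given and keeps a .bak copy first.

```shell
# Sketch of the synoinfo.conf edit for enabling SHR creation in the UI.
# Assumption: the FAQ's change is exactly the two edits below.
enable_shr() {
    conf=${1:-/etc.defaults/synoinfo.conf}
    cp "$conf" "$conf.bak" || return 1
    # Disable the RAID-group setting that hides SHR in the UI.
    sed -i 's/^supportraidgroup=/#supportraidgroup=/' "$conf"
    # Add the SHR flag once; re-running does not duplicate it.
    grep -q '^support_syno_hybrid_raid=' "$conf" ||
        echo 'support_syno_hybrid_raid="yes"' >> "$conf"
}
```

Because the original is copied to synoinfo.conf.bak, the change can be reverted by copying the backup back.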

-

XPEnology install on Dell R210 Help (in The Noob Lounge)

That information is in the thread you quoted.

My first response explained three different options to you. If you pick the third option (1.03b loader, DS3615xs and DSM 6.2.2 without an Intel NIC), you will probably need the drivers in extra.lzma. @zzgus just posted the link; you can follow that thread.