
Posts posted by tcs


-

7 hours ago, flybird08 said:

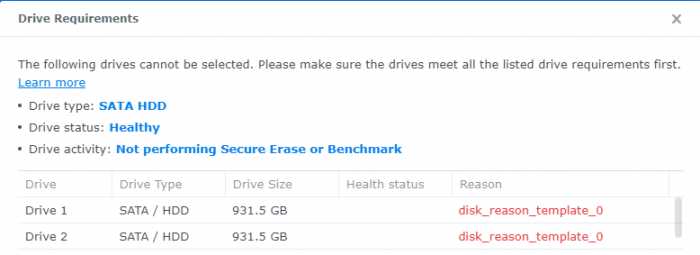

I encountered the same error on a DS918+ running DSM 7.0.1-42218. Only one hard disk on the SAS controller reports its serial number and firmware version.

The "disk_reason_template_0" message is preventing me from creating a storage pool. Do you have a better solution?

I ended up switching to the 3615 image; I could not get the 918 image to see the drives properly.

-

1 hour ago, xPalito93 said:

Can somebody explain how to insert drivers?

I only played around with the v0.8-0.11 toolchain without using the ext-manager, so I only used the build & auto commands.

I'm trying to learn as much as possible, but it's not that easy lol.

I have a Fujitsu server at work which I've been using to play around.

It has a Fujitsu D2607-A21 GS2 SAS card in it, which has an LSI SAS 2008 chip on it.

What would my steps be to create an image with those drivers in it?

Can I do it with the 0.11 toolchain?

I hope someone has time to guide me through it 🙂

I forgot to add:

I was able to create an image with 3615 on it, but it never finds any drives. That's the issue I'm facing and why I wanted to ask how to add drivers.

After you do a redpill run, go into redpill-load and edit bundled-exts.json to add whatever extensions you need. If you need more guidance than that, you should probably hold off on running this until it's in a more stable state.
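If you'd rather script that edit than do it by hand, here's a minimal Python sketch. It assumes bundled-exts.json is a flat JSON object mapping extension IDs to rpext-index.json URLs (the thethorgroup.boot-wait errors quoted further down suggest that shape). Any extension ID or URL you pass in is your own; take the real ones from the extension's repo.

```python
import json
from pathlib import Path


def add_bundled_ext(bundled_exts: Path, ext_id: str, index_url: str) -> bool:
    """Add ext_id -> index_url to bundled-exts.json.

    Returns False (and leaves the file alone) if ext_id is already listed.
    Assumes the file is a flat JSON object: {extension_id: rpext-index.json URL}.
    """
    exts = json.loads(bundled_exts.read_text())
    if ext_id in exts:
        return False
    exts[ext_id] = index_url
    # Rewrite with stable indentation so the diff against the original stays readable.
    bundled_exts.write_text(json.dumps(exts, indent=2) + "\n")
    return True
```

Inside the container you'd point it at /opt/redpill-load/bundled-exts.json (the /opt/redpill-load path appears in the error messages below). An ID like "pocopico.mptsas" would be a hypothetical example, not a confirmed one.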

-

3 minutes ago, snowfox said:

Thank you again.

I run Docker in Ubuntu 20.04 in VMware Workstation on Windows 10, not WSL2.

Can you ping GitHub from within the container? What happens when you manually curl the file from inside the container?

-

16 minutes ago, snowfox said:

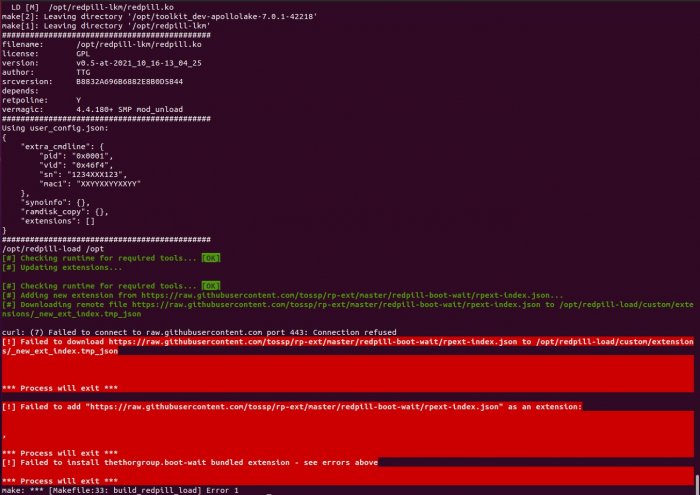

When I run ./redpill_tool_chain.sh auto apollolake-7.0.1-42218, this error occurs:

curl: (7) Failed to connect to raw.githubusercontent.com port 443: Connection refused

[!] Failed to download https://raw.githubusercontent.com/tossp/rp-ext/master/redpill-boot-wait/rpext-index.json to /opt/redpill-load/custom/extensions/_new_ext_index.tmp_json

*** Process will exit ***

[!] Failed to add "https://raw.githubusercontent.com/tossp/rp-ext/master/redpill-boot-wait/rpext-index.json" as an extension:

,*** Process will exit ***

[!] Failed to install thethorgroup.boot-wait bundled extension - see errors above

*** Process will exit ***

Do you have a firewall running on the box you have the container on? That’s telling you it can’t make an https connection to GitHub. Can you get to the address from a web browser? Either you’re in a country that is blocking GitHub entirely or something local to your system is doing it.
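One way to narrow down where the block is: try a plain TCP connection to raw.githubusercontent.com on port 443, both on the Ubuntu VM and inside the container. Curl's exit code 7 means the TCP connection itself failed, before TLS or HTTP came into play, so this check should hit the same failure. A minimal Python sketch using only the standard library:

```python
import socket


def can_connect(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    A refused connection, a timeout, or a DNS failure all raise OSError
    (or a subclass of it) and are reported as False.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Run can_connect("raw.githubusercontent.com") in both places. If it's True on the VM but False in the container, look at Docker's networking. If it's False on both, look at the VM, the Windows host firewall, or your network.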

-

1 hour ago, dateno1 said:

But not everyone can build a bootloader and edit it.

Then they shouldn't be using redpill. It's not considered stable yet, or in any way ready for general consumption. If someone isn't capable of building a bootloader they should wait until the final version is ready for public consumption.

-


On 10/12/2021 at 4:11 PM, WiteWulf said:

Okay, mixed results for me, I'm sad to say. @ThorGroup may find the results useful, however.

I built a new bromolow 7.0.1-42218 image using redpill-helper-v.0.12 and jumkey's "develop" branch of redpill-load, with pocopico's tg3 extension.

I disabled the PCIe NC360T NIC and re-enabled the internal NIC from the BIOS and booted from the new USB stick.

Initially the server looked good: all docker containers started, as did Plex Media Server, with no kernel panic.

I ran a metadata update on a large library in Plex with no crashes and updated my influxdb docker container, also without a crash.

Next I ran the test TTG had previously asked me to do over on GitHub:

docker run --name influx-test -d -p 8086:8086 -v $PWD:/var/lib/influxdb influxdb:1.8

docker exec -it influx-test sh

Then, inside the container:

wget https://golang.org/dl/go1.17.1.linux-amd64.tar.gz && tar -C /usr/local -xzf go1.17.1.linux-amd64.tar.gz && rm go1* && export PATH=$PATH:/usr/local/go/bin && go get -v 'github.com/influxdata/influx-stress/cmd/...'

/root/go/bin/influx-stress insert -f --stats

This time I got a kernel panic:

[ 518.193477] Kernel panic - not syncing: Watchdog detected hard LOCKUP on cpu 6
[ 518.228126] CPU: 6 PID: 28191 Comm: influx-stress Tainted: PF O 3.10.108 #42218
[ 518.267191] Hardware name: HP ProLiant MicroServer Gen8, BIOS J06 04/04/2019
[ 518.301214] ffffffff814a2759 ffffffff814a16b1 0000000000000010 ffff880409b88d60
[ 518.337704] ffff880409b88cf8 0000000000000000 0000000000000006 0000000000000001
[ 518.374045] 0000000000000006 ffffffff80000001 0000000000000030 ffff8803f4c4bc00
[ 518.410480] Call Trace:
[ 518.422504] <NMI> [<ffffffff814a2759>] ? dump_stack+0xc/0x15
[ 518.451063] [<ffffffff814a16b1>] ? panic+0xbb/0x1df
[ 518.475140] [<ffffffff810a9eb8>] ? watchdog_overflow_callback+0xa8/0xb0
[ 518.508166] [<ffffffff810db7d3>] ? __perf_event_overflow+0x93/0x230
[ 518.539141] [<ffffffff810da612>] ? perf_event_update_userpage+0x12/0xf0
[ 518.571601] [<ffffffff810152a4>] ? intel_pmu_handle_irq+0x1b4/0x340
[ 518.603124] [<ffffffff814a9d06>] ? perf_event_nmi_handler+0x26/0x40
[ 518.634926] [<ffffffff814a944e>] ? do_nmi+0xfe/0x440
[ 518.659908] [<ffffffff814a8a53>] ? end_repeat_nmi+0x1e/0x7e
[ 518.688056] <<EOE>>
[ 518.698239] Rebooting in 3 seconds..

So this is a *huge* improvement on where I was before, but it still crashes if I really push it, and I'm not sure why @Kouill's server *didn't* crash running the same test 🤔

One thing to note is that the NC360T card is still physically installed in my machine, but disabled in the BIOS.

Just for giggles I ran this for you, not on an HP box, but on a 3615 7.0.1-42218 image on a Supermicro X10SAE with an E3-1225 v3. It ran for an hour with no issues before I killed it, other than complaining about cache after filling up the disk, which I expect is normal.

-


1 hour ago, RedwinX said:

Hey,

I have a little problem with a bare-metal install. It boots correctly (918+ 7.0, redpill repo), status: not installed.

Install OK, reboot, and then: status not installed...

I don't have serial output. Any ideas?

What is your maxdisk count set to? How many drives are in the system? And did you double check your USB drive ID?
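To make those checks less error-prone, here's a rough Python sketch that compares a redpill user_config.json against what's actually in the box. It assumes vid/pid are hex strings under "extra_cmdline" and that maxdisks, if overridden, sits under "synoinfo". That layout is my assumption, so adjust the keys to match your config. The vid/pid to compare against can come from lsusb on a Linux machine.

```python
def check_user_config(cfg: dict, usb_vid: int, usb_pid: int, drive_count: int) -> list:
    """Return human-readable problems found in a (parsed) redpill user_config.

    Assumed layout: {"extra_cmdline": {"vid": "0x....", "pid": "0x...."},
                     "synoinfo": {"maxdisks": "N"}}
    """
    problems = []
    cmdline = cfg.get("extra_cmdline", {})
    for key, actual in (("vid", usb_vid), ("pid", usb_pid)):
        raw = cmdline.get(key)
        if raw is None:
            problems.append(f"{key} missing from extra_cmdline")
        # int(..., 16) accepts an optional "0x" prefix.
        elif int(str(raw), 16) != actual:
            problems.append(f"{key} is {raw}, but the USB stick reports {actual:#06x}")
    maxdisks = cfg.get("synoinfo", {}).get("maxdisks")
    if maxdisks is not None and int(maxdisks) < drive_count:
        problems.append(f"maxdisks={maxdisks} is lower than the {drive_count} drives present")
    return problems
```

An empty list means nothing obviously wrong was found. It doesn't prove the loader will boot.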

-

2 minutes ago, haydibe said:

I am not going to add functionality to the docker toolchain that modifies the behavior of rp-lkm and rp-load.

It is already hard enough for people to distinguish whether problems are located in the docker toolchain or in rp-load.

The only thing that might make sense from my perspective is to support hooks for custom before/after scripts.

Though even that would introduce a new vector for problems nobody wants to deal with.

On the other hand, the toolchain builder scripts/files are all in "clear text" and not really hard to understand.

Everyone is free to tailor them to their needs.

Understood, that's why I @'d you both. I foresee endless requests for help from people adding drivers if those partition sizes remain the way they are. If the end goal is to be usable by everyone, the manual steps listed above probably won't cut it. If the intended audience is the same as for the beta (people who understand enough coding and Linux to unwind problems themselves), then it's not an issue.

-

3 hours ago, RedwinX said:

Just create a new disk:

dd if=/dev/zero of=redpill-template.img bs=1024k seek=256 count=0

Partition it (e.g. with fdisk):

+100M for the 1st partition

+75M for the 2nd

all the rest for the 3rd

losetup -P /dev/loop18 redpill-template.img

mkdosfs -F32 /dev/loop18p1

mkfs.ext2 /dev/loop18p2

mv redpill-template.img boot-image-template.img

gzip boot-image-template.img

cp boot-image-template.img.gz into the ext folder (replacing the existing one)

Works fine

I think the broader point is that, with the size of the drivers currently being put out, that volume needs to be larger by default, unless there's some reason I'm unaware of that it's stuck at 40MB. It probably wouldn't be a bad idea to make it a flag or part of the config for the docker script @haydibe @ThorGroup. For instance, the mptsas drivers alone use up pretty much all of the space for drivers. If you need mptsas and mpt2sas or mpt3sas with the 42218 build, you're dead in the water without a larger partition.
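For anyone following RedwinX's recipe, here's a small Python sketch of the arithmetic. The dd command makes a 256 MiB sparse file (seek=256 blocks of 1 MiB, count=0). The layout function assumes fdisk's default of starting the first partition at sector 2048 (1 MiB) and a DOS/MBR label, so nothing is reserved at the end of the disk. Both of those assumptions are mine; a GPT label would shave a few sectors off the last partition.

```python
SECTOR = 512
MIB = 1024 * 1024


def make_sparse_image(path: str, size_mib: int) -> None:
    """Same effect as `dd if=/dev/zero of=path bs=1024k seek=size_mib count=0`."""
    with open(path, "wb") as fh:
        fh.truncate(size_mib * MIB)


def plan_partitions(total_mib: int, sizes_mib: list, first_sector: int = 2048) -> list:
    """Return (start_sector, end_sector, size_mib) for each partition.

    sizes_mib holds the fixed sizes; one extra partition takes the rest of the disk.
    Assumes an MBR label (no backup GPT at the end) and 1 MiB alignment.
    """
    total_sectors = total_mib * MIB // SECTOR
    layout = []
    start = first_sector
    for size in sizes_mib:
        end = start + size * MIB // SECTOR - 1
        layout.append((start, end, size))
        start = end + 1
    last_end = total_sectors - 1
    # Remaining space, rounded down to whole MiB.
    layout.append((start, last_end, (last_end - start + 1) * SECTOR // MIB))
    return layout
```

Under those assumptions, plan_partitions(256, [100, 75]) gives sectors 2048-206847, 206848-360447 and 360448-524287, which leaves 80 MiB for the third partition.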

-

This is definitely an issue with the 918+ image and SAS adapters on bare metal. I switched to the 3615 image and it happily reads SMART data. The only outstanding issue with 3615 and SAS on bare metal is that I see this being spammed in scemd.log:

scemd[19875]: space_internal_lib.c:1403 Get value of sys_adjust_groups failed

space_disk_sys_adjust_select_create.c:600 Failed to generate new system disk list

I haven't spent any time digging further, but it otherwise seems happy.

-

It looks like syno is trying to attach a Marvell driver to the drives despite them being on an mpt2sas controller:

cat /var/log/scemd.log

2021-10-11T16:37:24-05:00 host01 scemd[21169]: disk/disk_is_mv1475_driver.c:71 Can't get sata chip name from pattern /sys/block/sdx/device/../../scsi_host/host*/proc_name

Anyone else running bare metal or passthrough LSI with the 918+ and 42218 able to check and see if you have the same errors?
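To see which driver each disk actually hangs off, you can walk the same sysfs pattern scemd is complaining about. Here's a Python sketch. The sysfs_root parameter exists only so the function can be tested against a fake tree; on a real box you'd leave it at /sys. On an LSI card I'd expect mpt2sas (or mpt3sas) rather than anything Marvell.

```python
import glob
import os


def scsi_driver_for_disks(sysfs_root: str = "/sys") -> dict:
    """Map each sd* disk to its SCSI host driver name (the host's proc_name).

    Uses the pattern from the scemd log:
    /sys/block/sdX/device/../../scsi_host/host*/proc_name
    Disks with no matching proc_name map to None.
    """
    result = {}
    for dev_path in sorted(glob.glob(os.path.join(sysfs_root, "block", "sd*"))):
        disk = os.path.basename(dev_path)
        # os.path.join keeps "..", so the kernel resolves it after following
        # the "device" symlink, just like the shell pattern does.
        pattern = os.path.join(dev_path, "device", "..", "..", "scsi_host", "host*", "proc_name")
        matches = glob.glob(pattern)
        if not matches:
            result[disk] = None
            continue
        with open(matches[0]) as fh:
            result[disk] = fh.read().strip()
    return result
```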

-


9 minutes ago, WiteWulf said:

42218 is not a supported version at present, don't expect extension repos to have everything necessary for it to build

I second this. It’s relatively easy to get a 918 build working if you understand JSON and can read compiler errors. That being said, if you can’t, just stop trying and be patient. @Orphée - your 918 repo needed the model changed to 918p and to be pointed at the virtIO build for 4.4.180plus, if you care.

-


16 hours ago, ThorGroup said:

You probably installed too many drivers or found a bug causing a loop somewhere. You shouldn't really go above the space allocated (~50MB) as this is plenty for drivers you need on a single platform.

Also a quick note on this front: it only takes two of the SAS drivers to run out of space with the 918+ build. I'm not sure if @pocopico did something wrong building them or if they're really just that big; they're significantly larger than their 3615 counterparts. The 4.4.180plus mptsas driver alone is 6.76MB:

https://github.com/pocopico/rp-ext/blob/main/mptsas/releases/mptsas-4.4.180plus.tgz
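Here's a quick way to see how close you are before building: sum the extension archives you plan to add and compare the total against the free space on the target. This Python sketch only counts the archives as downloaded. Real usage after unpacking (and any extra copies the build makes, which I haven't verified) may be higher, which is why there's a headroom parameter.

```python
import os
import shutil


def extensions_fit(archives: list, target_dir: str, headroom_mib: float = 1.0) -> tuple:
    """Return (fits, needed_bytes, free_bytes) for copying `archives` into target_dir.

    `fits` is True when the archives plus `headroom_mib` of slack fit in the
    free space reported for the filesystem holding target_dir.
    """
    needed = sum(os.path.getsize(path) for path in archives)
    free = shutil.disk_usage(target_dir).free
    fits = needed + int(headroom_mib * 1024 * 1024) <= free
    return fits, needed, free
```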

-

1 hour ago, MaiuZ said:

hi there!

How did you update to 6.2.4 on the RNDU2000? I'm only able to install 6.2.

Thanks

What he's saying is he upgraded to 6.2.4 without reading the forums first, so I'm sure the system is currently a brick. 6.2.4 does not work with Jun's loader.

-

7 hours ago, ThorGroup said:

So a quick up-not-to-date

You guys are writing so many posts and we're committed to reading all of them and responding where needed.

We promised to write something more last time, but a few of us had some unpredictable life events, and since we usually get together physically, we ran into a small delay.

So, regarding the SAS issue: the sas-activator may be a flop. We tested it with SSDs and a few spare HDDs, which obviously weren't >2TB. It seems like the driver syno ships is ancient, and while it does work, it only runs using SAS2 (the 2TB limit is one of the symptoms). Don't we all love that ancient kernel?

Probably on systems with mpt3sas the situation isn't a problem.


As to the native SAS support and how it works: in short, it's useless. The SAS support in syno is tied to just a few hardcoded models. Most of them support only external ports (via eboxes). Some, like a few FS* products, support internal ports. Unfortunately, this is completely useless, as the OS checks for custom firmware, board names, responses, etc. Is it possible to emulate that? Yes. Does it make sense? In our opinion, not at all. With the community-provided drivers we can all be fully independent of the syno-provided .ko in the image. As the LKM supports SAS-to-SATA emulation so that syno's APIs think it's a "SATA" device, further investment into true SAS doesn't really make sense. To be clear: there's no loss of functionality or anything. Many things in the kernel actually use the "SATA" type. It seems like syno intended it to mean true SATA but down the road changed it to really mean "a normal SCSI-based disk".

Presumably there's something in the external SAS connectivity spaghetti code that allows for more than 26 drives - maybe that's a reason to emulate? In theory it'd allow people to use external SAS trays as well (although I'm unclear on whether emulation is actually needed to support that or not).

I'm curious whether anyone actually has a functioning native Synology system with more than 26 drives to help with unwinding the mess.

-

3 minutes ago, pocopico said:

I think it's there? Would someone load tg3 on a system that can run 918?

https://github.com/pocopico/rp-ext/blob/main/mptsas/rpext-index.json

What am I missing? I only see the 42218 for the 3615.

"releases": {

"ds3615xs_25556": "https://raw.githubusercontent.com/pocopico/rp-ext/master/mptsas/releases/ds3615xs_25556.json",

"ds3615xs_41222": "https://raw.githubusercontent.com/pocopico/rp-ext/master/mptsas/releases/ds3615xs_41222.json",

"ds3615xs_42218": "https://raw.githubusercontent.com/pocopico/rp-ext/master/mptsas/releases/ds3615xs_42218.json",

"ds918p_41890": "https://raw.githubusercontent.com/pocopico/rp-ext/master/mptsas/releases/ds918p_41890.json",

"ds918p_25556": "https://raw.githubusercontent.com/pocopico/rp-ext/master/mptsas/releases/ds918p_25556.json"

}

}
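Checking an extension index for the builds you care about is easy to script. This Python sketch parses the "releases" object of an rpext-index.json and lists which of the keys you want are missing. A key like ds918p_42218 is my guess based on the <model>_<build> naming in the listing above, not something the repo defines.

```python
import json


def missing_releases(index_json: str, wanted: list) -> list:
    """Return the entries of `wanted` that have no key in the index's "releases" object."""
    releases = json.loads(index_json).get("releases", {})
    return [key for key in wanted if key not in releases]
```

To check the live file, fetch it first, for example with urllib.request.urlopen(url).read().decode(), and pass the text in.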

-

10 hours ago, pocopico said:

Can you try ?

Looks like you only added it for the 3615, can you do the 918 as well?

-

38 minutes ago, pigr8 said:

Ah no, I did not add ds918+ to my fork, just the ds3615xs; it's a personal fork to handle just my hardware. You can find the proper config for that on jumkey's GitHub.

What do you mean by the proper config folder? https://github.com/jumkey/redpill-load/blob/develop/config/DS918%2B/7.0.1-42218/config.json

I was just doing a poor job of noticing the repo changes, don't mind me.

-

2 hours ago, pigr8 said:

https://github.com/r0bb10/rp-ext

There you have some extensions that work with 7.0.1-42218, sas-activator too. I badly patched the work of others, so credit to them.

Edit: if you want, I also have the redpill-load here https://github.com/r0bb10/redpill-load that has everything to build 7.0.1-42218; just add this code to @haydibe's 0.11 builder and it works.

If you build it without any extra extensions, the build proceeds with only "thethorgroup.boot-wait" in the bundled-exts.

{
  "id": "bromolow-7.0.1-42218",
  "platform_version": "bromolow-7.0.1-42218",
  "user_config_json": "bromolow_user_config.json",
  "docker_base_image": "debian:8-slim",
  "compile_with": "toolkit_dev",
  "redpill_lkm_make_target": "prod-v7",
  "downloads": {
    "kernel": {
      "url": "https://sourceforge.net/projects/dsgpl/files/Synology%20NAS%20GPL%20Source/25426branch/bromolow-source/linux-3.10.x.txz/download",
      "sha256": "18aecead760526d652a731121d5b8eae5d6e45087efede0da057413af0b489ed"
    },
    "toolkit_dev": {
      "url": "https://sourceforge.net/projects/dsgpl/files/toolkit/DSM7.0/ds.bromolow-7.0.dev.txz/download",
      "sha256": "a5fbc3019ae8787988c2e64191549bfc665a5a9a4cdddb5ee44c10a48ff96cdd"
    }
  },
  "redpill_lkm": {
    "source_url": "https://github.com/RedPill-TTG/redpill-lkm.git",
    "branch": "master"
  },
  "redpill_load": {
    "source_url": "https://github.com/r0bb10/redpill-load.git",
    "branch": "master"
  }
},

I'm still not following how this builds properly without the proper config folder. That config folder doesn't exist in any of the repos.

[!] There doesn't seem to be a config for DS918+ platform running 7.0.1-42218 (checked /opt/redpill-load/config/DS918+/7.0.1-42218/config.json)
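Before kicking off a build, you can check whether the branch you're pointing at actually has that config. This Python sketch looks for the same config/<model>/<version>/config.json path the error message checks. If the path is missing, it lists which model/version pairs the checkout does have. Run it against a local clone of the exact repo and branch your build config uses.

```python
from pathlib import Path


def redpill_load_config(repo: Path, model: str, version: str) -> Path:
    """Return config/<model>/<version>/config.json in a redpill-load checkout.

    Raises FileNotFoundError listing the model/version pairs that do exist.
    """
    cfg = repo / "config" / model / version / "config.json"
    if not cfg.is_file():
        available = sorted(
            f"{p.parent.parent.name}/{p.parent.name}"
            for p in (repo / "config").glob("*/*/config.json")
        )
        raise FileNotFoundError(f"no config at {cfg}; available: {', '.join(available) or 'none'}")
    return cfg
```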

-

6 hours ago, Orphée said:

Jumkey's develop repo is not up to date and does not handle extensions.

master branch handles extensions.

-

17 hours ago, WiteWulf said:

You can build it with haydibe's docker scripts by adding the relevant details to the config JSON file. NB: TTG don't support 42218, so this pulls from jumkey's repo and you won't be able to use extensions with it. This is what I'm using on my system.

{
  "id": "bromolow-7.0.1-42218",
  "platform_version": "bromolow-7.0.1-42218",
  "user_config_json": "bromolow_user_config.json",
  "docker_base_image": "debian:8-slim",
  "compile_with": "toolkit_dev",
  "redpill_lkm_make_target": "dev-v7",
  "downloads": {
    "kernel": {
      "url": "https://sourceforge.net/projects/dsgpl/files/Synology%20NAS%20GPL%20Source/25426branch/bromolow-source/linux-3.10.x.txz/download",
      "sha256": "18aecead760526d652a731121d5b8eae5d6e45087efede0da057413af0b489ed"
    },
    "toolkit_dev": {
      "url": "https://sourceforge.net/projects/dsgpl/files/toolkit/DSM7.0/ds.bromolow-7.0.dev.txz/download",
      "sha256": "a5fbc3019ae8787988c2e64191549bfc665a5a9a4cdddb5ee44c10a48ff96cdd"
    }
  },
  "redpill_lkm": {
    "source_url": "https://github.com/RedPill-TTG/redpill-lkm.git",
    "branch": "master"
  },
  "redpill_load": {
    "source_url": "https://github.com/jumkey/redpill-load.git",
    "branch": "develop"
  }
},

How exactly did you get that to build? Neither the redpill nor the jumkey repo contains the appropriate files for 918 or 3615:

[!] There doesn't seem to be a config for DS918+ platform running 7.0.1-42218 (checked /opt/redpill-load/config/DS918+/7.0.1-42218/config.json)

-

On 10/4/2021 at 1:28 PM, pocopico said:

tg3.ko has a dependency libphy.ko which is also included in the tg3 extension.

https://raw.githubusercontent.com/pocopico/rp-ext/main/tg3/rpext-index.json

@pocopico - any chance you can update the driver repo to support 42218? It appears to be the same kernel, so I'd imagine it's just a matter of updating the JSON files, no?
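Since tg3 needs libphy loaded first, here's a small Python sketch that works out a load order from module dependencies. The dependency strings use the comma-separated format that `modinfo -F depends <module>` prints. I haven't checked whether the extension loader does anything like this internally. graphlib needs Python 3.9+.

```python
from graphlib import TopologicalSorter


def parse_depends(modinfo_depends: str) -> set:
    """Turn the comma-separated output of `modinfo -F depends <module>` into a set."""
    return {name for name in modinfo_depends.strip().split(",") if name}


def load_order(deps: dict) -> list:
    """Order modules so each one comes after everything it depends on."""
    return list(TopologicalSorter(deps).static_order())
```

For example, load_order({"tg3": parse_depends("libphy")}) returns ["libphy", "tg3"].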

-

2 hours ago, toyanucci said:

Many others have helped to get this up and running, so we're all standing on their shoulders. The process I followed is based on the edited quoted posts above. I'm running 7.0.1-42218 bare metal on an ASRock J4105 ITX board with no modifications. I had 6.2.3 working with Jun's loader before.

I haven't seen this posted, so I hope this part helps someone: I have an M1 MacBook Air, which uses an ARM processor, and I was unable to compile an image with Docker on this machine, or with Docker in an Ubuntu virtual machine on the M1 MacBook Air, as there were always errors relating to Docker. I created an Ubuntu VM on the 6.2.3 build and used the info above to compile the image. I tested by booting from the USB with the drives unplugged and installing to a different drive to verify it was working OK. I have attached the edited global_config.json file, which has apollolake-7.0.1-42218 added, so you can use that in the commands from above instead of apollolake-7.0.1-42214. Thanks @Tango38317 for your help with that.

The machine gets an IP address about 45 seconds after boot and everything works perfectly. I have several things running in Docker, including Plex, which works without issue. However, I had to add the following as a scheduled task that runs at boot to get hardware transcoding working in Plex (if you have a Plex Pass and a real SN and MAC):

chmod 777 /dev/dri/*

Again, I hope this helps someone.
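chmod 777 /dev/dri/* makes the GPU device nodes readable and writable by every user, which presumably is what lets the Plex process open them. Because the nodes are recreated at every boot, here's a Python sketch to check whether the boot task actually ran. It only reports entries that "others" can't both read and write. The assumption that Plex needs world access (rather than group access) is mine.

```python
import stat
from pathlib import Path


def nodes_missing_world_rw(dri_dir: str = "/dev/dri") -> list:
    """List names under dri_dir that 'others' cannot both read and write."""
    missing = []
    for node in sorted(Path(dri_dir).iterdir()):
        mode = node.stat().st_mode
        if not (mode & stat.S_IROTH and mode & stat.S_IWOTH):
            missing.append(node.name)
    return missing
```

An empty list after boot suggests the scheduled chmod ran.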

Your branch should be master, not "develop" if you want to be able to add extensions.
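If you keep several entries in global_config.json, this tiny Python sketch flags build configs whose redpill_load branch isn't master. It follows the advice in this thread that jumkey's develop branch doesn't handle extensions while master does. The field names are taken from the config snippets quoted above. Treat "master means extensions work" as a rule of thumb for that repo, not a guarantee.

```python
def configs_without_extension_support(build_configs: list) -> list:
    """Return the ids of build configs whose redpill_load branch is not "master"."""
    flagged = []
    for cfg in build_configs:
        branch = cfg.get("redpill_load", {}).get("branch")
        if branch != "master":
            flagged.append(cfg.get("id", "<no id>"))
    return flagged
```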

Physical drive limits of an emulated Synology machine?

in Hardware Modding


If you want to have >26 drives, there are really two key things that need to happen. On *INITIAL INSTALL*, you need to have "maxdisks" set to less than 26; I would suggest just doing 12. Once you have gotten through the initial install and first full boot, go back, modify maxdisks to a larger number, and create your volumes/RAID arrays. This is because on first boot, DSM takes a slice off every disk to create a RAID-1 array for DSM itself to live on. Whatever binary they use to create this partition is passed the "maxdisks" variable, and if that variable is >26 the binary will crash. From what I've seen, this script/binary is never called again after a system has been installed, unless you're doing a "controller swap", i.e. if you went from a 3615 to a 3617 it would be called again.
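This Python sketch covers two things. First, it shows one possible reason 26 is the magic number: Linux names SCSI disks sda through sdz and only then moves to two letters (sdaa), so disk 27 is the first without a single-letter name. That's my guess at the cause, not something I've confirmed in the installer binary. Second, it shows the two-phase maxdisks change, applied to synoinfo.conf-style text with a maxdisks="N" line. That format is an assumption, so check your own file before editing anything.

```python
import re

MAXDISKS_LINE = re.compile(r'^maxdisks="?\d+"?[ \t]*$', re.MULTILINE)
SINGLE_LETTER_LIMIT = 26  # sda..sdz


def sd_name(index: int) -> str:
    """Linux-style SCSI disk name for a zero-based index: 0 -> sda, 26 -> sdaa."""
    letters = ""
    while True:
        letters = chr(ord("a") + index % 26) + letters
        index = index // 26 - 1
        if index < 0:
            return "sd" + letters


def set_maxdisks(conf_text: str, n: int, installing: bool) -> str:
    """Set maxdisks in synoinfo.conf-style text; refuse values >26 during initial install."""
    if installing and n > SINGLE_LETTER_LIMIT:
        raise ValueError(f"maxdisks={n} during initial install; use something like 12 first")
    new_text, count = MAXDISKS_LINE.subn(f'maxdisks="{n}"', conf_text)
    if count == 0:
        # No existing line: append one.
        new_text = conf_text.rstrip("\n") + f'\nmaxdisks="{n}"\n'
    return new_text
```

For example, you'd install with set_maxdisks(text, 12, installing=True). After the first full boot, you'd raise it with something like set_maxdisks(text, 36, installing=False).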