DerMoeJoe

Posts: 48

Posts posted by DerMoeJoe

-

- Outcome of the update: SUCCESSFUL

- DSM version prior to update: DSM 6.2.3 Update 2

- Loader version and model: Jun's v1.04b - DS918+

- Using custom extra.lzma: No

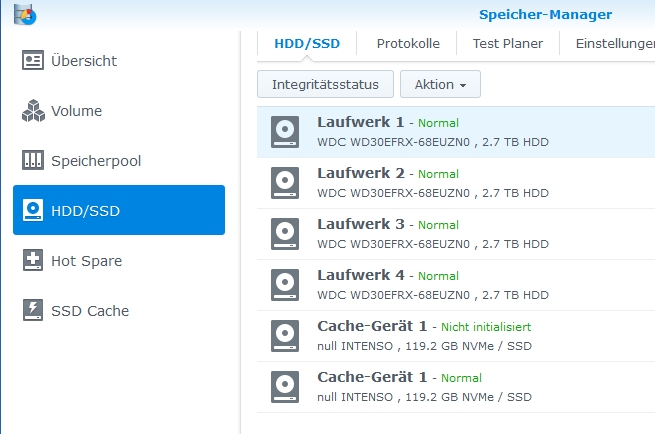

- Installation type: BareMetal | ASRock B250M Pro4 | i5-6400T | Intel X520-QDA1 | 4x WD Red 3TB & 2x 128GB Intenso NVMe

- Additional comments: Removed the NVMe cache before the upgrade and recreated it after the upgrade. All fine, including the 10G NIC.

-

The Intel X520 and its other variants work with the stock drivers.

Sent from my Mi A3 using Tapatalk


-

But the read-only cache still works with the new update.

-

- Outcome of the update: SUCCESSFUL

- DSM version prior to update: DSM 6.2.3-25426

- Loader version and model: JUN'S LOADER v1.04b - DS918+

- Using custom extra.lzma: NO

- Installation type: BareMetal | ASRock B250M Pro4 | i5-6400T | Intel X520-QDA1 | 4x WD Red 3TB & 2x 128GB Intenso NVMe

- Additional comments: All fine.

-

Yes, the stick must stay in the NAS,

so you can use any old 256 MB USB stick for the loader.

-

The boot stick must stay plugged into the board.

The boot stick is not only for installing XPEnology.

It is also the stick the system boots from every time!


-

- Outcome of the update: SUCCESSFUL

- DSM version prior to update: DSM 6.2.3-25423

- Loader version and model: Jun's v1.04 - DS918+

- Using custom extra.lzma: NO

- Installation type: BAREMETAL - ASRock B250M Pro4 | i5-6400T | Intel X520 | 2x 120GB NVMe

- Additional comments: Removed the NVMe cache before the upgrade and installed the PAT file manually. After 2 reboots and approx. 5 minutes, the system was up again. Recreated the NVMe cache afterwards. The 10G NIC works fine as always (without any additional drivers).

-

https://www.synology.com/en-global/releaseNote/DS3615xs

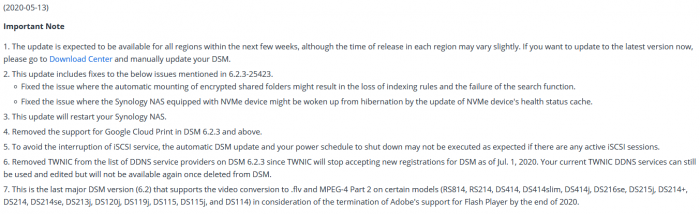

Release notes (2020-05-13)

Important Note

- The update is expected to be available for all regions within the next few weeks, although the time of release in each region may vary slightly. If you want to update to the latest version now, please go to Download Center and manually update your DSM.

- This update includes fixes to the below issues mentioned in 6.2.3-25423.

- Fixed the issue where the automatic mounting of encrypted shared folders might result in the loss of indexing rules and the failure of the search function.

- Fixed the issue where the Synology NAS equipped with NVMe device might be woken up from hibernation by the update of NVMe device's health status cache.

- This update will restart your Synology NAS.

- Removed the support for Google Cloud Print in DSM 6.2.3 and above.

- To avoid the interruption of iSCSI service, the automatic DSM update and your power schedule to shut down may not be executed as expected if there are any active iSCSI sessions.

- Removed TWNIC from the list of DDNS service providers on DSM 6.2.3 since TWNIC will stop accepting new registrations for DSM as of Jul. 1, 2020. Your current TWNIC DDNS services can still be used and edited but will not be available again once deleted from DSM.

- This is the last major DSM version (6.2) that supports the video conversion to .flv and MPEG-4 Part 2 on certain models (RS814, RS214, DS414, DS414slim, DS414j, DS216se, DS215j, DS214+, DS214, DS214se, DS213j, DS120j, DS119j, DS115, DS115j, and DS114) in consideration of the termination of Adobe's support for Flash Player by the end of 2020.

What's New in DSM 6.2.3

- Thin Provisioning LUN will become protected upon insufficient volume space, preventing clients from writing data to the LUN while allowing read-only access to the existing data.

- Added support for domain users to sign in to DSM using a UPN (user principal name) via file protocols (including SMB, AFP, FTP, and WebDAV).

- Added support for the option of forcing password changes for importing local users.

- Enhanced the compatibility of the imported user list, providing clearer error messages when the imported file contains syntax errors.

- Added support to record only the events of SMB transfer selected by the user, providing transfer logs that meet the requirements more closely.

- Added support for client users to monitor the changes of subdirectories under shared folders via SMB protocol.

- Added details of desktop notifications to facilitate users' timely responses.

- Added support for external UDF file system devices.

- Added support for the Open vSwitch option in a high-availability cluster.

- Added support for IP conflict detection, providing logs and notifications accordingly.

- Added support for Let's Encrypt wildcard certificates for Synology DDNS.

- Added support to waive the need of DSM login again through an HTTPS connection after a change in client's IP address.

- Added support for hardware-assisted locking for Thick Provisioning LUN on an ext4 volume.

- Added support for customized footer message on DSM login pages.

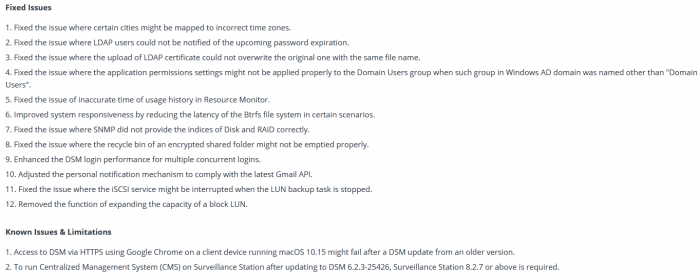

Fixed Issues

- Fixed the issue where certain cities might be mapped to incorrect time zones.

- Fixed the issue where LDAP users could not be notified of the upcoming password expiration.

- Fixed the issue where the upload of LDAP certificate could not overwrite the original one with the same file name.

- Fixed the issue where the application permissions settings might not be applied properly to the Domain Users group when such group in Windows AD domain was named other than "Domain Users".

- Fixed the issue of inaccurate time of usage history in Resource Monitor.

- Improved system responsiveness by reducing the latency of the Btrfs file system in certain scenarios.

- Fixed the issue where SNMP did not provide the indices of Disk and RAID correctly.

- Fixed the issue where the recycle bin of an encrypted shared folder might not be emptied properly.

- Enhanced the DSM login performance for multiple concurrent logins.

- Adjusted the personal notification mechanism to comply with the latest Gmail API.

- Fixed the issue where the iSCSI service might be interrupted when the LUN backup task is stopped.

- Removed the function of expanding the capacity of a block LUN.

Known Issues & Limitations

- Access to DSM via HTTPS using Google Chrome on a client device running macOS 10.15 might fail after a DSM update from an older version.

- To run Centralized Management System (CMS) on Surveillance Station after updating to DSM 6.2.3-25426, Surveillance Station 8.2.7 or above is required.

-

Thanks for your help.

With the modded library file it works again.

The important thing is that the library must be placed in /lib64/, as you said.

In my first test I searched for the original file, found it in /usr/lib/ and replaced that one, but afterwards the web GUI wasn't working.

It would probably be a good idea to mention the library path in the starter post (or to create a separate pinned topic for this).
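Since the fix depended on which copy of the library was replaced, one way to tell the copies apart is to compare their size and modification time, which is how the modded file was spotted by its date. A minimal sketch, assuming a Python 3 interpreter is available on the box (not guaranteed on DSM) and assuming the file keeps the name libsynonvme.so.1 in both directories; the helper name describe_copies is hypothetical:

```python
import time
from pathlib import Path

# Directories named in the thread: the modded library belongs in /lib64,
# while replacing the copy in /usr/lib broke the web GUI in the first test.
CANDIDATE_DIRS = ["/lib64", "/usr/lib"]
LIB_NAME = "libsynonvme.so.1"  # assumed to be the same name in both places


def describe_copies(lib_name=LIB_NAME, dirs=CANDIDATE_DIRS):
    """Return (path, size in bytes, mtime) for each existing regular file named lib_name."""
    found = []
    for directory in dirs:
        path = Path(directory) / lib_name
        if path.is_file():  # follows symlinks, skips missing entries
            st = path.stat()
            mtime = time.strftime("%Y-%m-%d %H:%M", time.localtime(st.st_mtime))
            found.append((str(path), st.st_size, mtime))
    return found


if __name__ == "__main__":
    for path, size, mtime in describe_copies():
        print(f"{path}: {size} bytes, modified {mtime}")
```

A copy with a recent date and a different size from the other is the likely modded one; this only reports metadata and does not verify the file contents.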

-

Thanks for your work, but the new NVMe patch doesn't work for me with 6.2.3.

I've replaced the old patch, set the permissions with chmod, started the script and rebooted the NAS, but I'm unable to see the NVMe drives in Storage Manager.

-

Before the upgrade to 6.2.3 I removed the cache (as always before an update).

After the update you can still see the hardware from the console.

But running the script and rebooting hasn't helped so far to get the cache up again.

-

- Outcome of the installation/update: SUCCESSFUL

- DSM version prior to update: DSM 6.2.2-24922 Update 6

- Loader version and model: Jun's Loader v1.04b - DS918+

- Using custom extra.lzma: NO

- Installation type: BAREMETAL - (ASRock B250M Pro4 | i5-6400T | Intel X520-DA1 | 4x WD Red 3TB, 2x Intenso NVMe 120GB)

- Additional comments: Reboot required by the update. But the NVMe cache doesn't work after the update!


-

Update:

After re-running the script that enables the NVMe cache and doing a full reboot, I was able to set up the NVMe cache with the 2 drives again.

-

Short update:

I've swapped the ports of the 2 NVMe SSDs, and now I get the following:

root@FSK-NAS:~# nvme list

Node SN Model Namespace Usage Format FW Rev

---------------- -------------------- ---------------------------------------- --------- -------------------------- ---------------- --------

/dev/nvme0n1 3QLUS7RSFP174FJTPDR5 INTENSO 1 128.04 GB / 128.04 GB 512 B + 0 B R1115A0

/dev/nvme1n1 OWNUZUMYDV8D9WHNPMAA INTENSO 1 128.04 GB / 128.04 GB 512 B + 0 B R1115A0

/dev/nvme1n1p1 OWNUZUMYDV8D9WHNPMAA INTENSO 1 128.04 GB / 128.04 GB 512 B + 0 B R1115A0

root@FSK-NAS:~#

Does anyone have an idea why I now see 3 NVMe disks in the shell,

while the GUI again shows only 1 as usable?
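In the listing above, /dev/nvme1n1 and /dev/nvme1n1p1 share the serial OWNUZUMYDV8D9WHNPMAA, so the third row looks like a partition on the second drive rather than a third disk. A minimal sketch for folding `nvme list` rows into physical namespaces, assuming the nvmeXnY / nvmeXnYpZ naming seen in this thread; group_nvme_rows is a hypothetical helper, and it does not explain why the GUI shows only one drive as usable:

```python
import re
from collections import defaultdict

# Assumed naming: /dev/nvmeXnY is a namespace, /dev/nvmeXnYpZ one of its partitions.
ROW_RE = re.compile(r"^(/dev/nvme\d+n\d+)(p\d+)?$")


def group_nvme_rows(listing):
    """Group `nvme list` rows by (namespace device, serial); partitions are folded in."""
    devices = defaultdict(list)
    for line in listing.splitlines():
        fields = line.split()
        if len(fields) < 2:
            continue
        match = ROW_RE.match(fields[0])
        if match is None:  # header, dashes or shell prompt
            continue
        base, partition = match.group(1), match.group(2)
        devices[(base, fields[1])].append(partition or "(whole namespace)")
    return dict(devices)


# The listing posted above.
SAMPLE = """\
Node SN Model Namespace Usage Format FW Rev
/dev/nvme0n1 3QLUS7RSFP174FJTPDR5 INTENSO 1 128.04 GB / 128.04 GB 512 B + 0 B R1115A0
/dev/nvme1n1 OWNUZUMYDV8D9WHNPMAA INTENSO 1 128.04 GB / 128.04 GB 512 B + 0 B R1115A0
/dev/nvme1n1p1 OWNUZUMYDV8D9WHNPMAA INTENSO 1 128.04 GB / 128.04 GB 512 B + 0 B R1115A0
"""

if __name__ == "__main__":
    for (base, serial), parts in group_nvme_rows(SAMPLE).items():
        print(base, serial, parts)
```

Run against this listing, it reports two devices, with p1 listed under /dev/nvme1n1.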

-

I upgraded my DSM to Update 6 three days ago, and before the update I removed the SSD cache.

After the upgrade was done, I recreated the cache.

Then last night, one of my 2 NVMe cache SSDs became corrupted (according to DSM).

On the CLI I can see both NVMe SSDs,

but inside Synology DSM I can only see 1.

root@NAS:~# nvme list

Node SN Model Namespace Usage Format FW Rev

---------------- -------------------- ---------------------------------------- --------- -------------------------- ---------------- --------

/dev/nvme0n1 3QLUS7RSFP174FJTPDR5 INTENSO 1 128.04 GB / 128.04 GB 512 B + 0 B R1115A0

/dev/nvme0n1p1 3QLUS7RSFP174FJTPDR5 INTENSO 1 128.04 GB / 128.04 GB 512 B + 0 B R1115A0

root@NAS:~#

So I removed the damaged SSD cache drive, properly rebooted DSM, re-ran the script from "The Chief" and rebooted again.

After this, I again only see 1 NVMe drive inside DSM, but 2 drives on the CLI.

Does anyone have a hint for me?

-

Take a look at the SilverStone DS380.

It holds eight 3.5" drives.

-

Hi likedoc,

are you working on an update for the new 6.2.2 Update 5 for the DS918+?

-

- Outcome of the update: SUCCESSFUL

- DSM version prior to update: DSM 6.2.2-24922 Update 4

- Loader version and model: JUN'S LOADER v1.04b - DS918+

- Using custom extra.lzma: YES (IG-88 v0.6)

- Installation type: BAREMETAL - ASRock B250M Pro4 / Intel X520-DA1 / 4x WD Red 3TB / 2x Intenso NVMe 120GB SSD

- Additional comments: REBOOT REQUIRED. Removed the SSD cache before the upgrade and recreated it after the reboot.


-

Sounds like your source (the backup HDD) is the limit, not your network.
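The reasoning is that a copy runs at the speed of its slowest stage. As a worked example, 10 Gbit/s corresponds to 1250 MB/s of raw line rate (decimal units, before protocol overhead), far above what a single spinning disk typically sustains. A small sketch of that comparison; the HDD and volume figures are placeholder assumptions, not measurements from this thread, and the helper names are hypothetical:

```python
def gbit_to_mb_per_s(gbit_per_s):
    """Convert a line rate in Gbit/s to MB/s (decimal units, no protocol overhead)."""
    return gbit_per_s * 1000 / 8


def bottleneck(stage_rates):
    """Return (name, rate) of the slowest stage; the whole transfer cannot exceed it."""
    name = min(stage_rates, key=stage_rates.get)
    return name, stage_rates[name]


# Placeholder rates in MB/s for illustration only, not measured values.
stages = {
    "10G network": gbit_to_mb_per_s(10),
    "backup HDD (assumed)": 180.0,
    "NAS volume (assumed)": 400.0,
}

if __name__ == "__main__":
    name, rate = bottleneck(stages)
    print(f"Limited by {name}: about {rate:.0f} MB/s")
```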

-

For me it works fine with 2x 120 GB Intenso NVMe SSDs in read-write cache mode.

-


Thanks for your good work.

I've tried your script to get my test cache SSD to work.

I'm running DSM 6.2.2-24922 Update 4

on bare metal (ASRock B250M)

with an Intel i5-6400T.

I copied your script to the NAS, ran the shell script as root, then copied the file to /usr/local/etc/rc.d/ and rebooted the NAS with init 6.

But after the reboot, my NVMe drive doesn't show up in the volume manager.

It's an Intenso 128GB NVMe.

root@NAS:/# nvme list

Node SN Model Namespace Usage Format FW Rev

---------------- -------------------- ---------------------------------------- --------- -------------------------- ---------------- --------

/dev/nvme0n1 3QLUS7RSFP174FJTPDR5 INTENSO 1 128.04 GB / 128.04 GB 512 B + 0 B R1115A0

/dev/nvme0n1p1 3QLUS7RSFP174FJTPDR5 INTENSO 1 128.04 GB / 128.04 GB 512 B + 0 B R1115A0

/dev/nvme0n1p2 3QLUS7RSFP174FJTPDR5 INTENSO 1 128.04 GB / 128.04 GB 512 B + 0 B R1115A0

root@NAS:/# synonvme --is-nvme-ssd /dev/nvme0n1

It is a NVMe SSD

root@NAS:/# synonvme --model-get /dev/nvme0

Model name: INTENSO

root@NAS:/# udevadm info /dev/nvme0n1

P: /devices/pci0000:00/0000:00:1d.0/0000:01:00.0/nvme/nvme0/nvme0n1

N: nvme0n1

E: DEVNAME=/dev/nvme0n1

E: DEVPATH=/devices/pci0000:00/0000:00:1d.0/0000:01:00.0/nvme/nvme0/nvme0n1

E: DEVTYPE=disk

E: ID_PART_TABLE_TYPE=gpt

E: MAJOR=259

E: MINOR=0

E: PHYSDEVBUS=pci

E: PHYSDEVDRIVER=nvme

E: PHYSDEVPATH=/devices/pci0000:00/0000:00:1d.0/0000:01:00.0

E: SUBSYSTEM=block

E: SYNO_ATTR_SERIAL=3QLUS7RSFP174FJTPDR5

E: SYNO_DEV_DISKPORTTYPE=UNKNOWN

E: SYNO_INFO_PLATFORM_NAME=apollolake

E: SYNO_KERNEL_VERSION=4.4

E: USEC_INITIALIZED=648737

root@NAS:/#

When I look at the date of the file libsynonvme.so, I can see that it was modded.

root@NAS:/usr/lib# ls -l

total 145160

lrwxrwxrwx 1 root root 16 Nov 29 17:35 libsynonvme.so -> libsynonvme.so.1

-rw-r--r-- 1 root root 33578 May 9 2019 libsynonvme.so.1

Do you have a hint for me?
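When comparing a drive that DSM shows with one it does not, it can help to pull the `E:` properties out of `udevadm info` into a dictionary. A minimal sketch; parse_udevadm_properties is a hypothetical helper and the sample is an excerpt of the output above. It only surfaces values such as SYNO_DEV_DISKPORTTYPE=UNKNOWN; whether that value is why the drive is missing from the volume manager is not established here:

```python
def parse_udevadm_properties(text):
    """Collect the 'E: KEY=VALUE' lines printed by `udevadm info` into a dict."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("E: ") and "=" in line:
            key, _, value = line[3:].partition("=")
            props[key] = value
    return props


# Excerpt of the udevadm output posted above; P: and N: lines are ignored.
SAMPLE = """\
P: /devices/pci0000:00/0000:00:1d.0/0000:01:00.0/nvme/nvme0/nvme0n1
N: nvme0n1
E: DEVNAME=/dev/nvme0n1
E: PHYSDEVPATH=/devices/pci0000:00/0000:00:1d.0/0000:01:00.0
E: SYNO_DEV_DISKPORTTYPE=UNKNOWN
E: SYNO_INFO_PLATFORM_NAME=apollolake
"""

if __name__ == "__main__":
    props = parse_udevadm_properties(SAMPLE)
    for key in ("DEVNAME", "PHYSDEVPATH", "SYNO_DEV_DISKPORTTYPE"):
        print(f"{key} = {props.get(key)}")
```

On the NAS, the text would come from running `udevadm info /dev/nvme0n1` for each drive, so the dictionaries of two drives can be compared key by key.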

-

Bare metal

Gigabyte N3150-D3V

8 GB SO-DIMM

4x 3 TB WD Red in RAID 5

Dual onboard NIC with LACP

25 W during file transfers and 39 W during video playback

Running the DS3617 image

Sent from my SM-G950F using Tapatalk

-

I use this board with the 3615xs image, and it runs fine, in case that helps.

TinyCore RedPill Loader (TCRP)

in Loaders


I've tested it.

On ESXi, it is not bootable after converting the VMDK.

Within VMware Workstation it boots.

But within TinyCore Linux I ran into a couple of problems:

SSH is not installed or enabled.

After installing OpenSSH and enabling/starting it, this works.

The next problem is that the scripts aren't executable after transferring them.

Running chmod +x rploader.sh takes care of this.

But I was still unable to start them afterwards, because the environment for running bash scripts isn't set up properly.

After fixing that, I only got a lot of warnings in a couple of lines of the rploader.sh script.

Tested with the currently newest version from the repo (v0.4.4, 9 days old).
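The two problems described, a missing execute bit and a script that still would not start, can be checked separately. One plausible reading of the second one (an assumption, not confirmed in the post) is that the interpreter named in the script's shebang, such as bash, was not available: TinyCore uses BusyBox's shell by default and bash is an optional extension. A minimal sketch of such a check; check_script is a hypothetical helper:

```python
import os
import shutil
import stat
import sys


def check_script(path):
    """Return a list of reasons why `path` might fail to run as ./script."""
    problems = []
    mode = os.stat(path).st_mode
    if not mode & (stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH):
        problems.append("not executable (chmod +x needed)")

    with open(path, "rb") as fh:
        first_line = fh.readline().decode("utf-8", "replace").strip()
    if not first_line.startswith("#!"):
        problems.append("no shebang line")
        return problems

    parts = first_line[2:].split()
    interpreter = parts[0] if parts else ""
    # '#!/usr/bin/env bash' resolves the real interpreter via PATH.
    if os.path.basename(interpreter) == "env" and len(parts) > 1:
        interpreter = parts[1]
    if os.path.isabs(interpreter):
        found = os.access(interpreter, os.X_OK)
    else:
        found = bool(interpreter) and shutil.which(interpreter) is not None
    if not found:
        problems.append(f"interpreter {interpreter!r} not found")
    return problems


if __name__ == "__main__":
    # Usage: python3 check_script.py rploader.sh
    for script in sys.argv[1:]:
        for reason in check_script(script) or ["looks runnable"]:
            print(f"{script}: {reason}")
```

It does not look at the warnings rploader.sh prints once it runs; those would need the script's own output.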