pocopico

Posts: 1,753

Days Won: 123

Posts posted by pocopico

-


Testers, PM

-

Polling for module requests.

Which modules would you like to be included?

-

I would start with the RAM upgrade. Then use a new USB stick for the new loader, reuse the last known working settings from your DS3615xs loader (SataPortMap, etc.), and build a new loader.

Also, can you PM me the output of the dmidecode command?

-

13 hours ago, XAXISNL said:

When using the TS-x72 as the model, QuTS Hero doesn't need a license.

I used to run the TS-672 with QuTS Hero virtually within Proxmox, and it ran stably. The only reason I stopped using it was that I could only get the disks passed through and recognized via the /dev/disk/by-id/ method, not by passing through the whole controller, which is what I preferred. When passing through the whole controller, on either a Q35 or an i440fx VM, the QNAP wouldn't recognize the disks.

Also, using virtio drivers for the NIC resulted in a working system, but the dashboard and resource monitor wouldn't show any stats for the NIC. No biggie, but needing to use e1000e and disk passthrough instead of more native solutions like SATA passthrough and virtio within Proxmox felt like a bit of a "half-baked solution".

But to recap a long story, using a TS-x72 model results in a working QuTS Hero (updateable) without license issues.

MODEL_TYPE="Q0121_Q0170_17_10" PATCHED_FIRMWARE="TS-X72_20230927-h5.1.2.2534" DOWNLOAD_URL="https://eu1.qnap.com/Storage/QuTShero/TS-X72/"

I will try the x72 with QuTS hero. The x88 (1288) hero build, at least, requires a license.
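The MODEL_TYPE / PATCHED_FIRMWARE / DOWNLOAD_URL line quoted above reads like shell-style KEY="value" assignments. A minimal Python sketch of pulling those fields out, assuming model.conf is a flat list of such assignments (its full layout isn't shown here, so anything more complex like variable expansion is out of scope):

```python
import shlex

def parse_model_conf(text: str) -> dict[str, str]:
    """Parse shell-style KEY="value" assignments into a dict.

    Assumption: model.conf is a flat list of KEY="value" pairs; comments are
    skipped, while variable expansion or arrays are not handled.
    """
    fields: dict[str, str] = {}
    # shlex in POSIX mode strips the quotes, so 'KEY="v"' becomes 'KEY=v'.
    for token in shlex.split(text, comments=True):
        key, sep, value = token.partition("=")
        if sep:  # ignore tokens that are not assignments
            fields[key] = value
    return fields

sample = ('MODEL_TYPE="Q0121_Q0170_17_10" '
          'PATCHED_FIRMWARE="TS-X72_20230927-h5.1.2.2534" '
          'DOWNLOAD_URL="https://eu1.qnap.com/Storage/QuTShero/TS-X72/"')
conf = parse_model_conf(sample)
print(conf["PATCHED_FIRMWARE"])  # TS-X72_20230927-h5.1.2.2534
```

Comparing two such dicts is a quick way to see which fields differ between an x72 and an x88 model.conf.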

-

7 hours ago, XAXISNL said:

When using the TS-x72 as the model, QuTS Hero doesn't need a license.

I used to run the TS-672 with QuTS Hero virtually within proxmox and it did run stable. The only reason I stopped using it was because I could only get the disks passed through and recognized through the /dev/by-id/ method and not by passing through the whole controller, which is what I preferred. When passing the whole controller, either on a Q35 or i440fx VM, the Qnap wouldn't recognize the disks.

Also, using virtio drivers for the NIC resulted in a working system, but the dashboard and resource monitor wouldn't show any stats for the NIC. No biggie, but needing to use e1000e and disk passthrough instead of more native solutions like sata passthrough and virtio within proxmox felt like a bit of a "half baked solution".

But to recap a long story, using a ts-x72 model would result in a working QuTS Hero (updateable) without license issues.

MODEL_TYPE="Q0121_Q0170_17_10" PATCHED_FIRMWARE="TS-X72_20230927-h5.1.2.2534" DOWNLOAD_URL="https://eu1.qnap.com/Storage/QuTShero/TS-X72/"

It would help if you could get the model.conf file that you used, if of course you still have it.

-


QuTS Hero is still an issue; the rest has been more or less resolved.

-

On 11/13/2023 at 8:47 PM, xenix96 said:

Will you optimize the QNAP_NEW_BOOT_ISO.img?

It has working OTA updates. The only thing you have to do manually is the right model.conf.

Is this the image I translated and for which I created the automatic model.conf generation? It still needs some work, but I’ll release an alpha soon.

AFAIK, QuTS hero requires a license. It needs a lot of reverse engineering, and I’m not that skilled.

I’ll soon be calling for a few testers with physical systems.

-


It’s about time to give some love to the QNAP community. Are there any target models you would like to focus on? E.g. TS-1655, TS-1273, etc.

-


1 hour ago, Peter Suh said:

Thank you, I will fix the problem soon.

Use the HTML builder.

-

2 hours ago, ssceddie said:

Hi guys, I tried many combinations for my motherboard, but none of them worked.

Could someone tell me what the best option for my motherboard is? Every time I try, it doesn't work. I'm not sure I used the right .pat file... there are too many to choose from ^^

I tried with loader config DS918p, but after "flashing" the .pat file from the Synology homepage to the NAS, either nothing happens, the NAS asks me to restore every time, or the installation fails.

Which loader are you using? You can try DVA1622 with TCRP.

-


1 hour ago, Peter Suh said:

@pocopico

Finally I completely removed the bsp file from TCRP today.

I followed the script from ARPL. In the redpill-load repo below:

buildroot/config/

build-loader.sh has been modified.

https://github.com/PeterSuh-Q3/redpill-load

You don't need redpill-load anymore; that is the most time-consuming part of the process.

-


4 minutes ago, titoum said:

hi pocopico,

is there any info on the wiki about the latest supported update/TCRP version?

It would be nice to know whether we can safely update.

cheers

Updates are handled by tcrpfriend, so I will try to update the info in the tcrpfriend repo.

-

7 hours ago, Captainfingerbang said:

Can someone tell me whether I can run TCRP, ARPL, or Friend with an LSI SAS-to-SATA card using DS918+ on bare metal?

Yes, you can. I have two of them.

-

44 minutes ago, envision said:

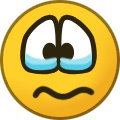

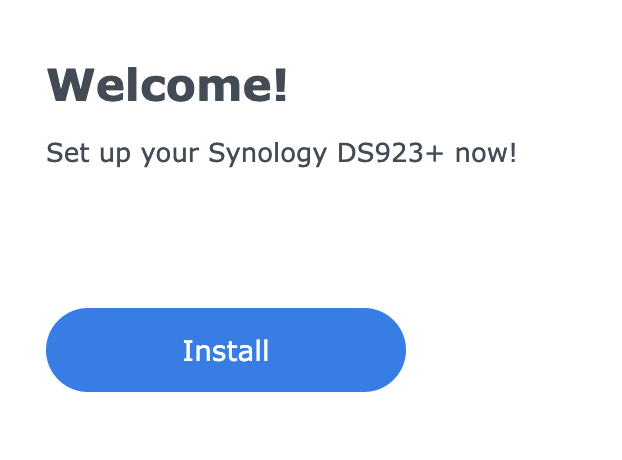

Hey Peter, pocopico, I think I just found the issue. tinycore-redpill seems to have a regression with DS3617xs in newer builds. As you can see in the screenshots, when using DS3617xs we are told that no drives are detected; I created a new ESXi VM to test this and got the same message. Once I switch to DS923+, I can proceed with installation, or with migration if I connect my DS3617xs drive to it. I am not sure if it's safe to migrate my DS3617xs to DS923+.

I have seen no issues migrating from one model to another.

-

9 hours ago, Peter Suh said:

I also received confirmation from @wjz304 that ARPL is no longer using bsp with the new patch method.

I will think about it for a few more days and plan to go back to downloading the DSM Pat file.

I will also take the time to analyze ARPL's patching method.

It seems that TCRP should also become free of copyright concerns by completely removing the bsp file.

I'm not using bsp files at all in the TCRP HTML Builder; it's only rploader that has not been adjusted to that yet, but I plan to fix that soon.

-


1 hour ago, Peter Suh said:

Thank you for your good comments.

As long as the Pat file has been downloaded once and the model and revision are not changed, a feature to back up and store the already-downloaded Pat file for rebuilding has already been prepared.

This time, the above function was blocked by using pre-extracted rd.gz and zImage files.

I would like to take a more in-depth look at pocopico and copyright-related aspects.

I left a related inquiry with him a little while ago.

If there is no good way to implement fast loader builds while protecting developers, I am currently considering returning to the original process.

thank you

Yes, you are right about the distribution of bsp files. At some point I was also thinking about removing them, as I now use Fabio's patching method in the HTML Builder. I just didn't have time to re-engineer the build process in rploader.sh.

Bearing in mind that TTG was very cautious about distributing original Syno files, I would like to keep following their rules.

Faster builds may be critical for those of us testing many models, but for the average user I think it's OK to wait 5 minutes once in a while.

Stability plays a great role, and I think we are all in a very good place on that front.
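The Pat-file reuse Peter describes (download once, keep it for rebuilds as long as model and revision stay the same) keeps copyrighted files local instead of redistributing pre-extracted ones. A minimal Python sketch of such a cache, assuming a hypothetical `cached_pat` helper, file naming, and `download` callback; none of this is TCRP's actual code, and the revision numbers are only example values:

```python
import tempfile
from pathlib import Path
from typing import Callable

def cached_pat(cache_dir: Path, model: str, revision: str,
               download: Callable[[Path], None]) -> Path:
    """Return the stored Pat for (model, revision), downloading only on a miss.

    Hypothetical helper: the naming scheme and the `download` callback are
    illustrative, not how TCRP or TCRP-mshell actually store the file.
    """
    cache_dir.mkdir(parents=True, exist_ok=True)
    target = cache_dir / f"{model}_{revision}.pat"
    if not target.exists():
        partial = target.with_suffix(".part")
        download(partial)        # fetch under a temporary name first
        partial.replace(target)  # rename only once the download completed
    return target

# Usage with a stand-in downloader that records how often it is called.
calls: list[str] = []

def fake_download(dest: Path) -> None:
    calls.append(dest.name)
    dest.write_bytes(b"placeholder, not a real .pat")

with tempfile.TemporaryDirectory() as tmp:
    cache = Path(tmp)
    first = cached_pat(cache, "DS3622xs+", "42962", fake_download)
    again = cached_pat(cache, "DS3622xs+", "42962", fake_download)  # cache hit
    other = cached_pat(cache, "DS3622xs+", "64570", fake_download)  # new revision
print(len(calls))  # 2
```

Keying on both model and revision means changing either one forces a fresh download, which matches the condition stated in the quote.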

-


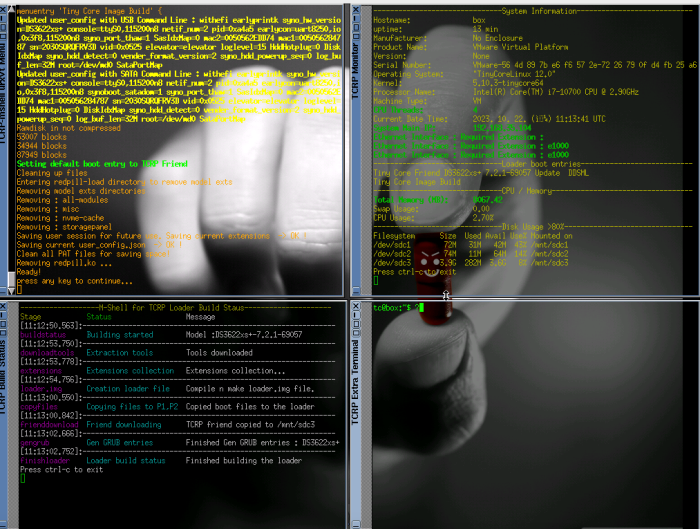

20 hours ago, Peter Suh said:

[NOTICE]

TCRP-mshell Improvements dated 2023.10.22

TCRP-mshell has had major changes in this version, so it has been upgraded from 0.9.6.0, which made the first build speed improvement, to 0.9.7.0, which makes the second improvement.

I will soon upload version 0.9.7.0 of the GitHub img file.

Starting from version 0.9.7.0, the download process of the DSM Pat file will no longer proceed and will be skipped.

As I understand it, ARPL-i18n has not applied this method and has been finalized as its last version.

The reason for downloading and using the DSM Pat file from Synology's download site is to extract the compressed rd.gz RAM disk and the zImage file inside it.

Downloading this DSM file and extracting these two files every time the loader is built is inefficient, wastes space, and adds a lot of loader build time, so I extracted these files for all four revisions of every model in advance and stored them separately in a GitHub repository.

Because the DSM download step is omitted, loader build time is significantly reduced.

The loader in VM ATA mode is built in about 12 seconds, as shown in the capture, and the loader in bare-metal USB mode takes about 20 seconds (measured on a 4-core Haswell).

The whole Tinycore filesystem lives in memory, so there is no need to load anything to a RAM disk. Requiring 2 GB of memory to build the loader is one of the constraints I decided is OK. If you have more memory available, you may speed things up by writing more files to /tmp instead of to partition 3 of the loader.
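The choice between staging build files in /tmp (RAM) or on partition 3 can be sketched as a simple check of available memory. This is a minimal Python sketch, assuming /proc/meminfo-formatted input; the `/mnt/loader3` mount point and the 2x headroom factor are hypothetical illustrations, not rploader's logic:

```python
def meminfo_kib(meminfo: str, field: str) -> int:
    """Return a field from /proc/meminfo-formatted text, in kB."""
    for line in meminfo.splitlines():
        name, _, rest = line.partition(":")
        if name == field:
            return int(rest.split()[0])
    raise KeyError(field)

def pick_work_dir(meminfo: str, needed_kib: int,
                  ram_dir: str = "/tmp", disk_dir: str = "/mnt/loader3") -> str:
    """Stage files in RAM when MemAvailable leaves headroom, else on partition 3.

    Hypothetical: `/mnt/loader3` stands in for wherever partition 3 is mounted,
    and the 2x headroom factor is an arbitrary illustration.
    """
    if meminfo_kib(meminfo, "MemAvailable") >= 2 * needed_kib:
        return ram_dir
    return disk_dir

sample = """MemTotal:        4026532 kB
MemFree:         3500000 kB
MemAvailable:    3600000 kB
"""
print(pick_work_dir(sample, needed_kib=1_000_000))  # /tmp
print(pick_work_dir(sample, needed_kib=2_000_000))  # /mnt/loader3
```

On a real box, the input would be the contents of /proc/meminfo; MemAvailable is used rather than MemTotal because it reflects what can actually be allocated.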

The whole process is built around one main constraint: not sharing anything copyrighted (kernel, ramdisk binaries, update images, etc.) in any shared location. If you decide to do so, you open yourself up to being blamed for distributing copyrighted material. It's your choice, but you may have just opened a back door to being prosecuted.

-


On 10/6/2023 at 5:15 AM, Peter Suh said:

To maintain the existing partition size, these JOT files should also be written to the third partition.

Correct, I think I got this fixed in a later version, didn't I? 🤔🤔🤔🤔🤔

-

15 hours ago, Peter Suh said:

I found the cause of unnecessary consumption of space in the 3rd partition.

Because the space occupied by these files was not calculated, an error occurred in which the available space went to 0.

There is no need to provide logs.

Rebuilding the loader will automatically resolve this issue.

https://github.com/PeterSuh-Q3/tinycore-redpill/commit/2930866140dc924eba2b57309cc53616f898cd33

I've been away for some time, so I've somewhat lost track of the loader's progress.

We have always used the same sizes for the 1st and 2nd partitions, as these are the Syno defaults, i.e. the same ones you will find on an original Syno device.

We never know whether that is checked somehow (now or in the future), so TTG's recommendation was to keep it as is.

I know we've changed a lot of things by introducing a new method for modules, etc., but we still have plenty of spare space in the third partition to store all the files the loader requires.
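The bug Peter found (the size of new files not being counted, so free space hit 0) suggests checking the total size against free space before copying anything to partition 3. A minimal Python sketch, with a hypothetical `fits_on_partition` helper and a temporary directory standing in for the partition 3 mount point:

```python
import shutil
import tempfile
from pathlib import Path

def bytes_needed(files: list[Path]) -> int:
    """Total size of the files that are about to be copied."""
    return sum(f.stat().st_size for f in files)

def fits_on_partition(files: list[Path], mount_point: Path,
                      reserve_bytes: int = 0) -> bool:
    """True if `files` plus a safety reserve fit in the free space at `mount_point`.

    Counting the new files before copying is the point: skipping that step
    is how free space can silently reach 0.
    """
    free = shutil.disk_usage(mount_point).free
    return bytes_needed(files) + reserve_bytes <= free

with tempfile.TemporaryDirectory() as tmp:
    staged = Path(tmp) / "rd.gz"  # stand-in file name only
    staged.write_bytes(b"\0" * 4096)
    ok = fits_on_partition([staged], Path(tmp))
    # A reserve as large as the whole filesystem can never fit.
    too_big = fits_on_partition([staged], Path(tmp),
                                reserve_bytes=shutil.disk_usage(tmp).total)
print(ok, too_big)
```

A real build would run this check against the actual mount point of partition 3 and abort with a clear message instead of filling the partition.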

-


Hi,

Please make sure C1E is disabled in the BIOS. The setting may have been reset for many reasons. (This is generic advice, not related to the grub rescue issue.)

An SD card will never work unless it's in a USB reader.

The USB VID:PID must be set correctly; otherwise DSM may think the boot stick is a disk and try to format it.
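To get the VID:PID right, the pair is usually read from `lsusb` output (e.g. `ID 0781:5567`) and written into the loader config as hex values. A minimal Python sketch of that conversion, assuming the loader expects lowercase, 0x-prefixed values under keys named `vid` and `pid` (as TCRP's user_config.json entries are commonly written; check your own config before relying on it). The pair used is just an example:

```python
import re

def parse_vid_pid(text: str) -> dict[str, str]:
    """Turn a 'VVVV:PPPP' pair (as printed by lsusb) into 0x-prefixed values.

    Assumption: the loader config wants lowercase hex with a 0x prefix.
    """
    m = re.fullmatch(r"\s*([0-9a-fA-F]{4}):([0-9a-fA-F]{4})\s*", text)
    if m is None:
        raise ValueError(f"expected VVVV:PPPP, got {text!r}")
    vid, pid = (g.lower() for g in m.groups())
    return {"vid": f"0x{vid}", "pid": f"0x{pid}"}

print(parse_vid_pid("0781:5567"))  # {'vid': '0x0781', 'pid': '0x5567'}
```

Rejecting anything that isn't exactly two 4-digit hex groups catches copy/paste mistakes before they end up in the boot config.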

-

Hi,

It doesn't sound so bad. Did you set the USB VID:PID, serial number, and MAC address correctly on Jun's restored loader? Can you PM me your grub.cfg?

Data are always on the third partition of each disk, and the installer usually leaves those partitions alone unless it doesn't recognise the disks as DSM disks.

-

1 hour ago, inc0gnit0 said:

Hi guys, I'm trying to install TCRP on TrueNAS Core 13.0-U5.3 (bhyve).

I'm able to get the installation through with this configuration :

TCRP Image (RAW) (sda)

Storage #1 (DISK) (sdb)

Storage #2 (DISK) (sdc)

However, this is how it's presented when trying to set up the satamap:

    tc@box:~$ ./rploader.sh satamap now
    Machine is VIRTUAL Hypervisor=
    Found "00:02.0 Intel Corporation 82801HR/HO/HH (ICH8R/DO/DH) 6 port SATA Controller [AHCI mode]"
    Detected 6 ports/0 drives. Bad ports: -5 -4 -3 -2 -1 0.
    Override # of ports or ENTER to accept <6>
    Found "00:03.0 Intel Corporation 82801HR/HO/HH (ICH8R/DO/DH) 6 port SATA Controller [AHCI mode]"
    Detected 32 ports/3 drives. Bad ports: 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32.
    Mapping SATABOOT drive after maxdisks
    WARNING: Other drives are connected that will not be accessible!
    Computed settings: SataPortMap=11 DiskIdxMap=0010
    WARNING: Bad ports are mapped. The DSM installation will fail!
    Should i update the user_config.json with these values ? [Yy/Nn]

So it appears that two SATA controllers are detected, the first holding no drives and the second holding the three drives (TCRP, Storage #1, Storage #2).

The "SATABOOT drive after maxdisks" issue seems to be the pain point, and the other drives are indeed inaccessible (the Synology loader indicates no drives are found).

What should I change with the setup or how should I configure the SataPortMap/DiskIdxMap in this configuration?

Do I need to find a way to blacklist/unload the SATA Controller at 00:02.0?

Any pointers would be much appreciated!

You do have the boot image on a different SATA controller, right?
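For context on the computed values in the output above: by the usual convention, SataPortMap has one digit per controller (how many ports it exposes), and DiskIdxMap has two hex digits per controller (the disk index its first port maps to). A minimal Python sketch that builds both strings for controllers mapped back to back, assuming that convention and single-digit port counts; it does not reproduce rploader's SATABOOT handling:

```python
def sata_maps(ports_per_controller: list[int]) -> tuple[str, str]:
    """Build SataPortMap and DiskIdxMap for controllers mapped back to back.

    Assumed convention: SataPortMap = one digit per controller (port count),
    DiskIdxMap = two hex digits per controller (index of its first disk).
    """
    port_map: list[str] = []
    idx_map: list[str] = []
    next_idx = 0
    for ports in ports_per_controller:
        if not 0 <= ports <= 9:
            raise ValueError("this sketch only handles single-digit port counts")
        port_map.append(str(ports))
        idx_map.append(f"{next_idx:02x}")  # first disk index of this controller
        next_idx += ports
    return "".join(port_map), "".join(idx_map)

print(sata_maps([1, 2]))  # ('12', '0001')
```

Read the same way, a DiskIdxMap entry of `10` places that controller's first disk at index 0x10 (16), which is how a boot drive ends up "after maxdisks".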

-

WoL is easily solved even without the original interface. Look through the available addons.

-

Hi, thanks for the message,

I'm trying to understand the purpose of something like that. Why would you want to run an XXmove command on Syno? Can you please elaborate?

-


Howdy NAS Experts! Seeking Advice: Synology, WD, or DIY? Plus, What About StoneFly?

in Hardware Modding


If I may ask, a few things come to mind:

- What are the NAS requirements in terms of availability, uptime, hardware redundancy, etc.?

- What is the budget?

- What workload would you like to host on the procured NAS? SMB/CIFS, NFS, S3, structured or unstructured data?

- What other services would you like to host on the NAS device (Docker, Office, email, backup, etc.)?

- Are the above services critical for your business needs?

Avoid DIY (that's for non-business-critical purposes). Don't buy on features alone. Stick with the vendor that provides the best support; things CAN and WILL go wrong at some point! You need a properly structured support organization to back up your business.

Neither Synology nor QNAP offers 2- or 4-hour response times, spare-part availability, etc., and I can guarantee that neither will fully engage to solve your issue. On the other hand, both are the titans of home to small/medium-business NAS devices. Syno lacks some features that QNAP has, BUT with Syno I've never lost data, and I must say it's rather difficult to do so, whereas with QNAP I've read about and had some very disappointing experiences.

So if I were to prioritize my business needs:

1st. Customer support with onsite presence and spare part availability

2nd. Design a backup solution in parallel with your NAS needs.

3rd. All other mentioned features

I hope this helps a bit.

And a small comment on "And of course, we need a solid backup system (RAID) 'cause those files are super important for our clients.":

RAID should NEVER be considered a backup. It's just a level of redundancy against hardware failure, and that's all. Plan for a proper offsite/offline backup solution as well: back up to tape or S3, to cloud or on-premises targets. Ransomware and/or human mistakes can lead to loss of the primary hosted data, and you should be prepared for that possibility.