RacerX

Posts posted by RacerX

-

-

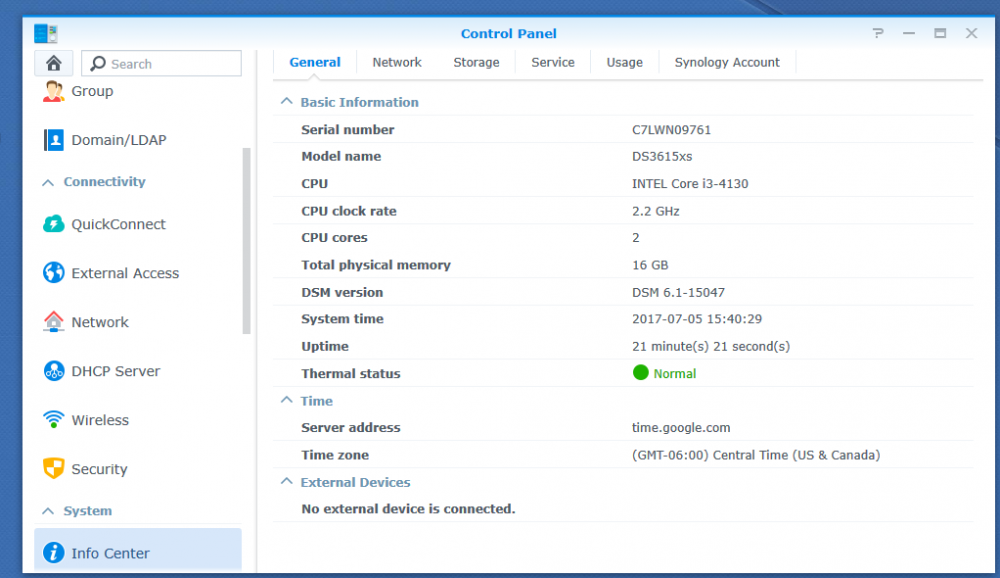

If it's working, that is news to me. My test is a stock DS3617xs 6.1 with Jun's Mod V1.02b (7/4/2017). I did not change the USB stick. Do I need to change it for the test? The Mellanox card has two ports.

cat /var/log/dmesg | grep eth1

cat /var/log/dmesg | grep eth2

Both commands return nothing.

I connected the cable from one port to the other port since I do not have a 10Gb switch.

There are no link lights and the card does not show up with the network interfaces.

-

Adjusted test: removed the LSI 9207 and tested the ConnectX-2 card in the first slot.

dmesg -wH

[ +0.019285] Compat-mlnx-ofed backport release: cd30181

[ +0.000002] Backport based on mlnx_ofed/mlnx_rdma.git cd30181

[ +0.000001] compat.git: mlnx_ofed/mlnx_rdma.git

[ +0.061974] mlx4_core: Mellanox ConnectX core driver v3.3-1.0.4 (03 Jul 2016)

[ +0.000008] mlx4_core: Initializing 0000:01:00.0

[ +0.000031] mlx4_core 0000:01:00.0: enabling device (0100 -> 0102)

[ +0.530407] systemd-udevd[5965]: starting version 204

[ +1.141257] mlx4_core 0000:01:00.0: DMFS high rate mode not supported

[ +0.006462] mlx4_core: device is working in RoCE mode: Roce V1

[ +0.000001] mlx4_core: gid_type 1 for UD QPs is not supported by the devicegid_type 0 was chosen instead

[ +0.000001] mlx4_core: UD QP Gid type is: V1

[ +0.750613] mlx4_core 0000:01:00.0: PCIe link speed is 5.0GT/s, device supports 5.0GT/s

[ +0.000002] mlx4_core 0000:01:00.0: PCIe link width is x8, device supports x8

[ +0.000080] mlx4_core 0000:01:00.0: irq 44 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:01:00.0: irq 45 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:01:00.0: irq 46 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:01:00.0: irq 47 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:01:00.0: irq 48 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:01:00.0: irq 49 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:01:00.0: irq 50 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:01:00.0: irq 51 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:01:00.0: irq 52 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:01:00.0: irq 53 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:01:00.0: irq 54 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:01:00.0: irq 55 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:01:00.0: irq 56 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:01:00.0: irq 57 for MSI/MSI-X

[ +0.000002] mlx4_core 0000:01:00.0: irq 58 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:01:00.0: irq 59 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:01:00.0: irq 60 for MSI/MSI-X

[ +0.822443] mlx4_en: Mellanox ConnectX HCA Ethernet driver v3.3-1.0.4 (03 Jul 2016)

[ +3.135645] bnx2x: QLogic 5771x/578xx 10/20-Gigabit Ethernet Driver bnx2x 1.713.00 ($DateTime: 2015/07/28 00:13:30 $)

-

It does..
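The dmesg log in this post reports the negotiated PCIe link width next to what the card supports (x4 of a supported x8 for the card at 0000:03:00.0). A minimal sketch for pulling that comparison out of a log, assuming the `mlx4_core <addr>: PCIe link width is xN, device supports xM` line format seen here; the function name `pcie_width_check` is made up for illustration:

```shell
#!/bin/sh
# Sketch: read dmesg text on stdin and report, per mlx4_core device,
# whether the negotiated PCIe width equals the width the card supports.
# Assumes the message format shown in the logs of this thread.
pcie_width_check() {
    sed -n 's/.*mlx4_core \([0-9a-f:.]*\): PCIe link width is \(x[0-9]*\), device supports \(x[0-9]*\).*/\1 \2 \3/p' |
    while read -r dev actual supported; do
        if [ "$actual" = "$supported" ]; then
            echo "$dev ok $actual"
        else
            echo "$dev reduced $actual of $supported"
        fi
    done
}
```

Typical use would be `dmesg | pcie_width_check`. A reduced width only limits bandwidth; it does not by itself explain a missing network interface.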

dmesg -wH

[ +0.000002] Backport generated by backports.git v3.18.1-1-0-g5e9ec4c

[ +0.007100] Compat-mlnx-ofed backport release: cd30181

[ +0.000002] Backport based on mlnx_ofed/mlnx_rdma.git cd30181

[ +0.000001] compat.git: mlnx_ofed/mlnx_rdma.git

[ +0.053378] mlx4_core: Mellanox ConnectX core driver v3.3-1.0.4 (03 Jul 2016)

[ +0.000008] mlx4_core: Initializing 0000:03:00.0

[ +0.000033] mlx4_core 0000:03:00.0: enabling device (0100 -> 0102)

[ +0.420818] systemd-udevd[6199]: starting version 204

[ +1.251641] mlx4_core 0000:03:00.0: DMFS high rate mode not supported

[ +0.006420] mlx4_core: device is working in RoCE mode: Roce V1

[ +0.000001] mlx4_core: gid_type 1 for UD QPs is not supported by the devicegid_type 0 was chosen instead

[ +0.000001] mlx4_core: UD QP Gid type is: V1

[ +1.253954] mlx4_core 0000:03:00.0: PCIe BW is different than device's capability

[ +0.000002] mlx4_core 0000:03:00.0: PCIe link speed is 5.0GT/s, device supports 5.0GT/s

[ +0.000001] mlx4_core 0000:03:00.0: PCIe link width is x4, device supports x8

[ +0.000087] mlx4_core 0000:03:00.0: irq 52 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:03:00.0: irq 53 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:03:00.0: irq 54 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:03:00.0: irq 55 for MSI/MSI-X

[ +0.000004] mlx4_core 0000:03:00.0: irq 56 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:03:00.0: irq 57 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:03:00.0: irq 58 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:03:00.0: irq 59 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:03:00.0: irq 60 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:03:00.0: irq 61 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:03:00.0: irq 62 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:03:00.0: irq 63 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:03:00.0: irq 64 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:03:00.0: irq 65 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:03:00.0: irq 66 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:03:00.0: irq 67 for MSI/MSI-X

[ +0.000003] mlx4_core 0000:03:00.0: irq 68 for MSI/MSI-X

[ +1.150446] mlx4_en: Mellanox ConnectX HCA Ethernet driver v3.3-1.0.4 (03 Jul 2016)

-

Small Test

HP SSF INTEL Xeon D-1527

Installed DS3617 V1.02b for DSM 6.1

Added LSI HBA 9207

Mellanox MHQH29B-XTR ConnectX 2

The system is bare metal

Results: the LSI HBA 9207 card is transparent. It works fine right out of the box.

On the other hand, the Mellanox MHQH29B-XTR ConnectX-2 does not show up under network interfaces.

Over SSH:

Me@Test:/$ lspci

0000:00:00.0 Class 0600: Device 8086:0c00 (rev 06)

0000:00:01.0 Class 0604: Device 8086:0c01 (rev 06)

0000:00:02.0 Class 0300: Device 8086:0412 (rev 06)

0000:00:03.0 Class 0403: Device 8086:0c0c (rev 06)

0000:00:14.0 Class 0c03: Device 8086:8c31 (rev 04)

0000:00:16.0 Class 0780: Device 8086:8c3a (rev 04)

0000:00:16.3 Class 0700: Device 8086:8c3d (rev 04)

0000:00:19.0 Class 0200: Device 8086:153a (rev 04)

0000:00:1a.0 Class 0c03: Device 8086:8c2d (rev 04)

0000:00:1b.0 Class 0403: Device 8086:8c20 (rev 04)

0000:00:1c.0 Class 0604: Device 8086:8c10 (rev d4)

0000:00:1c.4 Class 0604: Device 8086:8c18 (rev d4)

0000:00:1d.0 Class 0c03: Device 8086:8c26 (rev 04)

0000:00:1f.0 Class 0601: Device 8086:8c4e (rev 04)

0000:00:1f.2 Class 0106: Device 8086:8c02 (rev 04)

0000:00:1f.3 Class 0c05: Device 8086:8c22 (rev 04)

0000:01:00.0 Class 0107: Device 1000:0087 (rev 05)

0000:03:00.0 Class 0c06: Device 15b3:673c (rev b0)

0001:00:02.0 Class 0000: Device 8086:6f04 (rev ff)

0001:00:02.2 Class 0000: Device 8086:6f06 (rev ff)

0001:00:03.0 Class 0000: Device 8086:6f08 (rev ff)

0001:00:03.2 Class 0000: Device 8086:6f0a (rev ff)

0001:00:1f.0 Class 0000: Device 8086:8c54 (rev ff)

0001:00:1f.3 Class 0000: Device 8086:8c22 (rev ff)

0001:06:00.0 Class 0000: Device 1b4b:1475 (rev ff)

0001:08:00.0 Class 0000: Device 1b4b:9235 (rev ff)

0001:09:00.0 Class 0000: Device 8086:1533 (rev ff)

0001:0c:00.0 Class 0000: Device 8086:1533 (rev ff)

0001:0d:00.0 Class 0000: Device 8086:1533 (rev ff)

Me@Test:/$

I'm not sure how to test it any further. I only have this system for testing this weekend, then I have to give it back.
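In numeric `lspci` output the class code shows how a device presents itself: 0200 is an Ethernet controller, 0c06 is an InfiniBand controller, and 15b3 is the Mellanox vendor ID. The card above reports class 0c06. A small sketch, assuming the one-device-per-line format that `lspci` normally prints, that lists Mellanox devices with a class label (the function name `mlx_pci_classes` is hypothetical, and only the two class codes relevant here are labeled):

```shell
#!/bin/sh
# Sketch: read numeric lspci output on stdin and print every Mellanox
# (vendor 15b3) device with its PCI class code and a rough label.
mlx_pci_classes() {
    awk '
        # Expected shape: 0000:03:00.0 Class 0c06: Device 15b3:673c (rev b0)
        $2 == "Class" && $4 == "Device" && $5 ~ /^15b3:/ {
            class = $3
            sub(/:$/, "", class)
            if (class == "0200")      kind = "ethernet"
            else if (class == "0c06") kind = "infiniband"
            else                      kind = "other"
            print $1, $5, class, kind
        }
    '
}
```

Run as `lspci | mlx_pci_classes`. A port that shows up as InfiniBand rather than Ethernet is one plausible reason for it not being listed among the DSM network interfaces, though that is an assumption and not something confirmed in this thread.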

-

Good news

Just tested bare metal 1.02b. Works fine. Updated to DSM 6.1.5-15254, then updated to DSM 6.1.5-15254 Update 1. Restoring data (1 day over 1GB).

Yesterday I tested ESXi 6.5 on the N54L; even with 16GB it's just too slow. Today I set up a spare Sandy Bridge PC that I had, to test with. I needed to scrounge around for another stick of memory. It's a whopping 6GB, but the Core 2 E8500 runs ESXi 6.5 a lot better. I have a Supermicro X9 (Xeon) board that has poor USB boot support. I'm working on getting an HBA, then I'll give it a better test. I want to test ConnectX-3. I see it in the Device Manager of Windows 2016 Essentials, but I need another one to test with (ESXi 6.5, XPEnology 6.1). I was surprised how well Windows 2016 Essentials runs with an SSD on the N54L. I would really like to test Hyper-V, but with issues like images having to be in ISO format and Samba multichannel (experimental), it seems far off.

Thanks for the help, thoughts

-

During a test today, I set up DSM 6.1 DS3617 (2-27-2017) and it works properly. I also have an NC360T, not plugged into the switch.

Then I did the upgrade to DSM 6.1.5-15254. It went through the whole 10 minutes and I knew something was up. I shut down with the power button. When it came back up: "Diskstation Not Found". I shut down again and connected the NC360T to the switch. This time it found the NC360T's two NICs. So I logged in and saw that the shutdown was not graceful, OK. I noticed the BCM5723 is gone; it only shows the NC360T. I ran the update to DSM 6.1.5-15254 Update 1 and it is still MIA.

As for the test, I was doing a test of Windows 2016 Essentials. I pulled the plug and put my drives back in, and I received the wonderful "Diskstation not found" from Synology Assistant. I struggled to get all my new data moved to my other box. This Synology page was helpful.

So the whole week no XPEnology, until I read your post about Broadcom in German (via Google Translate) saying to make sure C1 is disabled, and that did the trick. Awesome!

I have always used DS3615 (i3). So today I tested DS3617 (Xeon) by accident.

synoboot is 2/27/2017

It was just a test and I was surprised that the onboard NIC installed, but when I did the update it disappeared. I was lucky to have the other HP NIC to work around it. I need to use the onboard NIC. I want to try the Mellanox CX324A ConnectX-3.

So if you want me to test it out, I can, because I'm in between systems at the moment. I've been thinking of changing from bare metal to ESXi so I can run both. There is a good how-to on YouTube right now.

Thanks

-

Food for Thought

I have an N54L; it has the HP NC107i (based on BCM5723).

During a test today, I set up DSM 6.1 and it works properly. I also have an NC360T, not plugged into the switch. When I did the upgrade to DSM 6.1.5-15254, it went through the whole 10 minutes and I knew something was up. I shut down with the power button. When it came back up: "Diskstation Not Found". I shut down again and connected the NC360T to the switch. This time it found the NC360T's two NICs. So I logged in and saw that the shutdown was not graceful, OK. I noticed the BCM5723 is gone; it only shows the NC360T. I ran the update to DSM 6.1.5-15254 Update 1 and it is still MIA.

-

Successfully updated bare metal HP N54L to DSM 6.1.4-15217

-

Ran a test today on the N54L with the NC360T.

Installed using Broadcom On-Board Nic

DSM 6.1-15047

Setup JBOD Btrfs

Works fine, I can see 3 Network Connections

Now the big mystery

In the Control Panel it showed DSM 6.1.3-15152 is available.

So I ran the update. It goes through the ten-minute countdown and then says to use Synology Assistant to find the box.

Now I can only see two IPs, both from the NC360T. I connect to one of those and access the N54L.

It now has DSM 6.1.3-15152 but only 2 network connections. The onboard Broadcom network connection is gone.

-

-

This helped me understand the dependencies when booting from a VM instead of a USB stick.

http://xpenology.com/forum/viewtopic.php?f=2&t=29582

When you create the VM, make sure to change the size from 8GB to 20GB or so.

-

Baremetal Test- DS3615xs 6.0.2 Jun's Mod V1.01

Removed Data Drives, used spare USB Stick and HD.

Initial Test- N54L with NC360T

Used OSFMount to change the timeout value from 1 second to 10 seconds

Selected AMD boot

Success!

Next, I reworked my USB stick and performed the full migration from 5.2. It was straightforward. Synology Assistant said it was migratable, so I upgraded the data and user accounts, reconfigured my static IP, then tested performance.

So far so good. It seems fine.

Thanks!!

-

I had a little more time to test

On my Windows 10 PC

C:\WINDOWS\system32>ibstat

CA 'ibv_device0'

CA type:

Number of ports: 2

Firmware version: 2.9.1000

Hardware version: 0xb0

Node GUID: 0x0002c903000972dc

System image GUID: 0x0002c903000972df

Port 1:

State: Initializing

Physical state: LinkUp

Rate: 40

Base lid: 0

LMC: 0

SM lid: 0

Capability mask: 0x90580000

Port GUID: 0x0002c903000972dd

Link layer: IB

Transport: IB

Port 2:

State: Down

Physical state: Polling

Rate: 70

Base lid: 0

LMC: 0

SM lid: 0

Capability mask: 0x90580000

Port GUID: 0x0002c903000972de

Link layer: IB

Transport: IB

C:\WINDOWS\system32>ibstat -p

0x0002c903000972dd

0x0002c903000972de

Launched the subnet manager

C:\WINDOWS\system32>opensm

-------------------------------------------------

OpenSM 3.3.11 UMAD

Command Line Arguments:

Log File: %windir%\temp\osm.log

-------------------------------------------------

OpenSM 3.3.11 UMAD

Entering DISCOVERING state

Using default GUID 0x2c903000972dd

Entering MASTER state

SUBNET UP

Ibstat again

C:\WINDOWS\system32>ibstat

CA 'ibv_device0'

CA type:

Number of ports: 2

Firmware version: 2.9.1000

Hardware version: 0xb0

Node GUID: 0x0002c903000972dc

System image GUID: 0x0002c903000972df

Port 1:

State: Active

Physical state: LinkUp

Rate: 40

Real rate: 32.00 (QDR)

Base lid: 1

LMC: 0

SM lid: 1

Capability mask: 0x90580000

Port GUID: 0x0002c903000972dd

Link layer: IB

Transport: IB

Port 2:

State: Down

Physical state: Polling

Rate: 70

Base lid: 0

LMC: 0

SM lid: 0

Capability mask: 0x90580000

Port GUID: 0x0002c903000972de

Link layer: IB

Transport: IB

Running "ibnetdiscover" gives you a list of all nodes connected to your IB network and their "lid" numbers.

C:\WINDOWS\system32>ibnetdiscover

#

# Topology file: generated on Sun Feb 28 13:23:12 2016

#

# Initiated from node 0002c903000972dc port 0002c903000972dd

vendid=0x2c9

devid=0x673c

sysimgguid=0x2c90300095eaf

caguid=0x2c90300095eac

Ca 2 "H-0002c90300095eac" # "MT25408 ConnectX Mellanox Technologies"

[2](2c90300095eae) "H-0002c903000972dc"[1] (2c903000972dd) # lid 2 lmc 0 "LOFT" lid 1 4xQDR

vendid=0x2c9

devid=0x673c

sysimgguid=0x2c903000972df

caguid=0x2c903000972dc

Ca 2 "H-0002c903000972dc" # "LOFT"

[1](2c903000972dd) "H-0002c90300095eac"[2] (2c90300095eae) # lid 1 lmc 0 "MT25408 ConnectX Mellanox Technologies" lid 2 4xQDR

With the extra "-d" (debug) and "-v" (verbose) flags, you should see extra info on both sides for each IB ping.

C:\WINDOWS\system32>ibping -d -v 11

ibdebug: [6052] ibping: Ping..

ibwarn: [6052] ib_vendor_call_via: route Lid 11 data 000000256ECFFC50

ibwarn: [6052] ib_vendor_call_via: class 0x132 method 0x1 attr 0x0 mod 0x0 datasz 216 off 40 res_ex 1

ibwarn: [6052] mad_rpc_rmpp: rmpp 0000000000000000 data 000000256ECFFC50

ibwarn: [6052] _do_madrpc: recv failed: m

ibwarn: [6052] mad_rpc_rmpp: _do_madrpc failed; dport (Lid 11)

ibdebug: [6052] main: ibping to Lid 11 failed

ibdebug: [6052] ibping: Ping..

ibwarn: [6052] ib_vendor_call_via: route Lid 11 data 000000256ECFFC50

ibwarn: [6052] ib_vendor_call_via: class 0x132 method 0x1 attr 0x0 mod 0x0 datasz 216 off 40 res_ex 1

ibwarn: [6052] mad_rpc_rmpp: rmpp 0000000000000000 data 000000256ECFFC50

ibwarn: [6052] _do_madrpc: recv failed: m

ibwarn: [6052] mad_rpc_rmpp: _do_madrpc failed; dport (Lid 11)

ibdebug: [6052] main: ibping to Lid 11 failed

ibdebug: [6052] ibping: Ping..

ibwarn: [6052] ib_vendor_call_via: route Lid 11 data 000000256ECFFC50

ibwarn: [6052] ib_vendor_call_via: class 0x132 method 0x1 attr 0x0 mod 0x0 datasz 216 off 40 res_ex 1

ibwarn: [6052] mad_rpc_rmpp: rmpp 0000000000000000 data 000000256ECFFC50

ibwarn: [6052] _do_madrpc: recv failed: m

ibwarn: [6052] mad_rpc_rmpp: _do_madrpc failed; dport (Lid 11)

ibdebug: [6052] main: ibping to Lid 11 failed

ibdebug: [6052] report: out due signal 2

--- (Lid 11) ibping statistics ---

3 packets transmitted, 0 received, 100% packet loss, time 5650 ms

rtt min/avg/max = 0.000/0.000/0.000 ms
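The topology printed by ibnetdiscover in this post lists only lid 1 and lid 2, while the ping was aimed at Lid 11. ibping also expects a responder started with `ibping -S` on the far side. A sketch, assuming the usual ibnetdiscover port-line layout (`[port](guid) "node"[port] (guid) # lid N ... lid M ...`), for checking whether a LID appears in the topology before pinging it; `ib_lids` and `lid_is_known` are hypothetical helper names:

```shell
#!/bin/sh
# Sketch: read ibnetdiscover output on stdin and print every LID that
# appears on a port line (lines starting with "["), one per line, sorted.
ib_lids() {
    awk '
        /^\[/ {
            for (i = 1; i < NF; i++)
                if ($i == "lid") seen[$(i + 1)] = 1
        }
        END { for (l in seen) print l }
    ' | sort -n
}

# Exit status 0 if the LID given as $1 is present in the topology on stdin.
lid_is_known() {
    ib_lids | grep -qx "$1"
}
```

For example, `ibnetdiscover | lid_is_known 11 || echo "LID 11 is not in the topology"`.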

That's it for now

-

I had some more time today to work on the Mellanox MHQH29B-XTR. I put the card into the N54L and tested some more.

lspci -v

02:00.0 Class 0c06: Device 15b3:673c (rev b0)

Subsystem: Device 15b3:0048

Flags: bus master, fast devsel, latency 0, IRQ 18

Memory at fe800000 (64-bit, non-prefetchable) [size=1M]

Memory at fd800000 (64-bit, prefetchable) [size=8M]

Capabilities: [40] Power Management version 3

Capabilities: [48] Vital Product Data

Capabilities: [9c] MSI-X: Enable+ Count=128 Masked-

Capabilities: [60] Express Endpoint, MSI 00

Capabilities: [100] Alternative Routing-ID Interpretation (ARI)

Capabilities: [148] Device Serial Number 00-02-c9-03-00-09-5e-ac

Kernel driver in use: mlx4_core

I installed the infiniband-5.2 ko files and changed /etc/rc and /etc.defaults/rc with vi.

Then I rebooted. I was hoping that this would straighten it out.

lsmod | grep mlx

mlx_compat 5376 0

mlx4_ib 104330 0

ib_sa 19338 4 mlx4_ib,rdma_cm,ib_ipoib,ib_cm

ib_mad 34788 4 mlx4_ib,ib_cm,ib_sa,ib_mthca

ib_core 47092 10 mlx4_ib,svcrdma,xprtrdma,rdma_cm,iw_cm,ib_ipoib,ib_cm,ib_sa,ib_mthca,ib_mad

mlx4_en 67584 0

mlx4_core 169852 2 mlx4_ib,mlx4_en

compat 4529 1 mlx_compat
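Rather than eyeballing the grep, the check for the expected driver modules can be scripted. A minimal sketch that reads `lsmod` output on stdin and prints any of the named modules that are not loaded; `missing_modules` is a made-up helper name, and which modules are actually required is an assumption you supply on the command line:

```shell
#!/bin/sh
# Sketch: print each module named in the arguments that does not appear
# in the first column of the lsmod output supplied on stdin.
missing_modules() {
    loaded=$(awk '{ print $1 }')
    for m in "$@"; do
        printf '%s\n' "$loaded" | grep -qx "$m" || echo "$m"
    done
}
```

For example, `lsmod | missing_modules mlx4_core mlx4_en mlx4_ib` prints nothing when all three are loaded, as in the listing above. Loaded modules alone do not guarantee that a network interface gets created.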

Library> ifconfig eth0 Link encap:Ethernet HWaddr 9C:B6:54:0B:E6:32 inet addr:192.168.1.11 Bcast:192.168.1.255 Mask:255.255.255.0 inet6 addr: fe80::9eb6:54ff:fe0b:e632/64 Scope:Link UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1 RX packets:7672 errors:0 dropped:0 overruns:0 frame:0 TX packets:8736 errors:0 dropped:0 overruns:0 carrier:0 collisions:0 txqueuelen:1000 RX bytes:2477146 (2.3 MiB) TX bytes:5347852 (5.0 MiB) Interrupt:18 lo Link encap:Local Loopback inet addr:127.0.0.1 Mask:255.0.0.0 inet6 addr: ::1/128 Scope:Host UP LOOPBACK RUNNING MTU:65536 Metric:1 RX packets:13942 errors:0 dropped:0 overruns:0 frame:0 TX packets:13942 errors:0 dropped:0 overruns:0 carrier:0 collisions:0 txqueuelen:0 RX bytes:1909832 (1.8 MiB) TX bytes:1909832 (1.8 MiB)Library> lsmod Module Size Used by Tainted: P cifs 247268 0 udf 78298 0 isofs 31935 0 loop 17688 0 nf_conntrack_ipv6 6267 0 nf_defrag_ipv6 22249 1 nf_conntrack_ipv6 ip6table_filter 1236 1 ip6_tables 16272 1 ip6table_filter xt_geoip 2934 0 xt_recent 8132 0 xt_iprange 1448 0 xt_limit 1721 0 xt_state 1135 0 xt_multiport 1630 0 xt_LOG 12099 0 nf_conntrack_ipv4 11115 0 nf_defrag_ipv4 1147 1 nf_conntrack_ipv4 iptable_filter 1296 1 ip_tables 15570 1 iptable_filter hid_generic 1057 0 usbhid 26686 0 hid 82504 2 hid_generic,usbhid usblp 10674 0 usb_storage 46383 1 oxu210hp_hcd 24469 0 xt_length 1124 4 xt_tcpudp 2311 4 nf_conntrack 52846 3 nf_conntrack_ipv6,xt_state,nf_conntrack_ipv4 x_tables 15527 13 ip6table_filter,ip6_tables,xt_geoip,xt_recent,xt_iprange,xt_limit,xt_state,xt_multiport,xt_LOG,iptable_filter,ip_tables,xt_length,xt_tcpudp bromolow_synobios 42769 0 btrfs 788682 0 synoacl_vfs 17043 1 zlib_deflate 20180 1 btrfs hfsplus 91979 0 md4 3337 0 hmac 2793 0 mlx_compat 5376 0 tn40xx 24739 0 mlx4_ib 104330 0 svcrdma 24891 0 xprtrdma 23349 0 rdma_cm 29859 2 svcrdma,xprtrdma iw_cm 6702 1 rdma_cm ib_ipoib 50417 0 ib_cm 29507 1 rdma_cm ib_sa 19338 4 mlx4_ib,rdma_cm,ib_ipoib,ib_cm ib_addr 4498 1 rdma_cm ib_mthca 116252 0 ib_mad 
34788 4 mlx4_ib,ib_cm,ib_sa,ib_mthca ib_core 47092 10 mlx4_ib,svcrdma,xprtrdma,rdma_cm,iw_cm,ib_ipoib,ib_cm,ib_sa,ib_mthca,ib_mad fuse 74004 0 vfat 10009 1 fat 50784 1 vfat glue_helper 3914 0 lrw 3309 0 gf128mul 5346 1 lrw ablk_helper 1684 0 arc4 1847 0 rng_core 3520 0 cpufreq_conservative 6240 0 cpufreq_powersave 862 0 cpufreq_performance 866 2 cpufreq_ondemand 8039 0 acpi_cpufreq 6982 0 mperf 1107 1 acpi_cpufreq processor 26471 1 acpi_cpufreq cpufreq_stats 2985 0 freq_table 2380 3 cpufreq_ondemand,acpi_cpufreq,cpufreq_stats dm_snapshot 26708 0 crc_itu_t 1235 1 udf quota_v2 3783 2 quota_tree 7970 1 quota_v2 psnap 1717 0 p8022 979 0 llc 3441 2 psnap,p8022 sit 14446 0 tunnel4 2061 1 sit ip_tunnel 11572 1 sit zram 8191 2 r8152 49404 0 asix 20370 0 ax88179_178a 16864 0 usbnet 18137 2 asix,ax88179_178a etxhci_hcd 84833 0 xhci_hcd 84493 0 ehci_pci 3504 0 ehci_hcd 39963 1 ehci_pci uhci_hcd 22668 0 ohci_hcd 21168 0 usbcore 176728 14 usbhid,usblp,usb_storage,oxu210hp_hcd,r8152,asix,ax88179_178a,usbnet,etxhci_hcd,xhci_hcd,ehci_pci,ehci_hcd,uhci_hcd,ohci_hcd usb_common 1488 1 usbcore hptiop 15828 0 3w_sas 21786 0 3w_9xxx 33873 0 mvsas 51605 0 hpsa 50480 0 arcmsr 28228 0 pm80xx 134800 0 aic94xx 72946 0 megaraid_sas 138070 0 megaraid_mbox 29835 0 megaraid_mm 7816 1 megaraid_mbox nvme 40947 0 mpt3sas 220677 0 mptsas 39249 0 mptspi 13047 0 mptscsih 18838 2 mptsas,mptspi mptbase 62504 3 mptsas,mptspi,mptscsih scsi_transport_spi 19551 1 mptspi sg 25017 0 ata_piix 24664 0 sata_uli 3004 0 sata_svw 4453 0 sata_qstor 5612 0 sata_sis 3884 0 pata_sis 10858 1 sata_sis stex 15005 0 sata_sx4 9284 0 sata_promise 10799 0 sata_nv 20623 0 sata_via 7803 0 sata_sil 7783 0 pdc_adma 5868 0 pata_via 8827 0 iscsi_tcp 8897 0 libiscsi_tcp 12850 1 iscsi_tcp libiscsi 35195 2 iscsi_tcp,libiscsi_tcp enic 54982 0 qlge 79283 0 qlcnic 214986 0 qla3xxx 36934 0 netxen_nic 98970 0 mlx4_en 67584 0 mlx4_core 169852 2 mlx4_ib,mlx4_en cxgb4 115680 0 cxgb3 134726 0 cnic 71038 0 ipv6 303225 58 
nf_conntrack_ipv6,nf_defrag_ipv6,ib_addr,sit bnx2x 1430214 0 bna 122380 0 be2net 89072 0 sis900 20811 0 sis190 17297 0 jme 34677 0 atl1e 28011 0 atl1c 33596 0 atl2 23124 0 atl1 30315 0 alx 26373 0 sky2 47242 0 skge 38482 0 via_velocity 30007 0 crc_ccitt 1235 1 via_velocity via_rhine 21014 0 r8101 122784 0 r8168 318247 0 r8169 30519 0 8139too 18685 0 8139cp 20694 0 tg3 171348 0 broadcom 7174 0 b44 26983 0 bnx2 189638 0 ssb 38587 1 b44 uio 7592 1 cnic forcedeth 55647 0 i40e 249005 0 ixgbe 246171 0 ixgb 37937 0 igb 178198 0 ioatdma 44390 0 e1000e 168235 0 e1000 100754 0 e100 29844 0 dca 4600 3 ixgbe,igb,ioatdma pcnet32 31251 0 amd8111e 16602 0 mdio 3365 3 cxgb3,bnx2x,alx mii 3803 15 r8152,asix,ax88179_178a,usbnet,sis900,sis190,jme,atl1,via_rhine,8139too,8139cp,b44,e100,pcnet32,amd8111e evdev 9156 0 button 4320 0 thermal_sys 18275 1 processor compat 4529 1 mlx_compat cryptd 7040 1 ablk_helper ecryptfs 75063 0 sha512_generic 4976 0 sha256_generic 9884 0 sha1_generic 2206 0 ecb 1849 0 aes_x86_64 7239 0 authenc 6600 0 des_generic 15915 0 libcrc32c 906 1 bnx2x ansi_cprng 3445 0 cts 3968 0 md5 2153 0 cbc 2448 0

I added a second card into my Windows 10 PC to try and make a direct connection to the N54L.

C:\Program Files\Mellanox>ibstat

CA 'ibv_device0'

CA type:

Number of ports: 2

Firmware version: 2.9.1000

Hardware version: 0xb0

Node GUID: 0x0002c903000972dc

System image GUID: 0x0002c903000972df

Port 1:

State: Initializing

Physical state: LinkUp

Rate: 40

Base lid: 0

LMC: 0

SM lid: 0

Capability mask: 0x90580000

Port GUID: 0x0002c903000972dd

Link layer: IB

Transport: IB

Port 2:

State: Down

Physical state: Polling

Rate: 70

Base lid: 0

LMC: 0

SM lid: 0

Capability mask: 0x90580000

Port GUID: 0x0002c903000972de

Link layer: IB

Transport: IB

It says I'm at 40 Gb/s over IB. I now have a green light lit on the back of each card, but Windows Networking says the ports are unplugged.
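`ibstat` reports a logical State and a Physical state for each port. Physical state LinkUp together with State Initializing usually means the cable link is up but no subnet manager has configured the port yet. A minimal sketch, assuming the field names shown in the ibstat output above, that condenses each port to one line (`ib_port_states` is a hypothetical name):

```shell
#!/bin/sh
# Sketch: read ibstat output on stdin and print one summary line per port
# with its logical state and physical state.
ib_port_states() {
    awk '
        /^[[:space:]]*Port [0-9]+:/    { port = $2; sub(/:$/, "", port) }
        /^[[:space:]]*State:/          { state = $2 }
        /^[[:space:]]*Physical state:/ { print "port " port ": state=" state " physical=" $3 }
    '
}
```

Run as `ibstat | ib_port_states`; a port showing `state=Initializing physical=LinkUp` is a hint to start a subnet manager such as opensm on one of the hosts.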

So on the N54L with DSM 5.2-5644 Update 5, under Control Panel > Network > Network Interface, the Mellanox MHQH29B-XTR ports are not available. Any thoughts?

-

Some more information. I removed the Mellanox MHQH29B-XTR from my N54L to get a little more info without messing up my existing XPEnology setup, and installed it in a Windows 10 64-bit PC. The operating system found the card right out of the box in Device Manager. There are two tools that need to be downloaded:

MLNX_VPI_WinOF-5_10_All_win2012R2_x64 and WinMFT_x64_3_8_0_56 for ConnectX-2 cards.

c:\Program Files\Mellanox\WinMFT>mst status

mt26428_pci_cr0

mt26428_pciconf0

mt26428_pci_cr0 is the name of the device; you need it to update the firmware.

C:\Program Files\Mellanox\WinMFT>vstat.exe

hca_idx=0

uplink={BUS=PCI_E Gen2, SPEED=5.0 Gbps, WIDTH=x8, CAPS=5.0*x8}

MSI-X={ENABLED=1, SUPPORTED=256, GRANTED=4, ALL_MASKED=N}

vendor_id=0x02c9

vendor_part_id=26428

hw_ver=0xb0

fw_ver=2.07.0000

PSID=HP_0180000009

node_guid=0002:c903:0009:5eac

num_phys_ports=2

port=1

port_guid=0002:c903:0009:5ead

port_state=PORT_DOWN (1)

link_speed=NA

link_width=NA

rate=NA

port_phys_state=POLLING (2)

active_speed=2.50 Gbps

sm_lid=0x0000

port_lid=0x0000

port_lmc=0x0

transport=IB

max_mtu=4096 (5)

active_mtu=4096 (5)

GID[0]=fe80:0000:0000:0000:0002:c903:0009:5ead

port=2

port_guid=0002:c903:0009:5eae

port_state=PORT_DOWN (1)

link_speed=NA

link_width=NA

rate=NA

port_phys_state=POLLING (2)

active_speed=2.50 Gbps

sm_lid=0x0000

port_lid=0x0000

port_lmc=0x0

transport=IB

max_mtu=4096 (5)

active_mtu=4096 (5)

GID[0]=fe80:0000:0000:0000:0002:c903:0009:5eae

Notice fw_ver=2.07.0000; this means the firmware is old. The current one is fw_ver=2.09.1000.

You can also see the hardware address (port_guid) for each port.

c:\Program Files\Mellanox\WinMFT>ibstat

CA 'ibv_device0'

CA type:

Number of ports: 2

Firmware version: 2.7.0

Hardware version: 0xb0

Node GUID: 0x0002c90300095eac

System image GUID: 0x0002c90300095eaf

Port 1:

State: Down

Physical state: Polling

Rate: 10

Base lid: 0

LMC: 0

SM lid: 0

Capability mask: 0x90580000

Port GUID: 0x0002c90300095ead

Link layer: IB

Transport: IB

Port 2:

State: Down

Physical state: Polling

Rate: 10

Base lid: 0

LMC: 0

SM lid: 0

Capability mask: 0x90580000

Port GUID: 0x0002c90300095eae

Link layer: IB

Transport: IB

This page helped me figure out how to flash the firmware on the card.

The firmware file for Mellanox MHQH29B-XTR is fw-ConnectX2-rel-2_9_1000-MHQH29B-XTR_A1.bin

I tested the file before I flashed the Mellanox MHQH29B-XTR.

flint -d mt26428_pci_cr0 fw-ConnectX2-rel-2_9_1000-MHQH29B-XTR_A1.bin v

Then I flashed the Mellanox MHQH29B-XTR

flint -d mt26428_pci_cr0 -i fw-ConnectX2-rel-2_9_1000-MHQH29B-XTR_A1.bin -allow_psid_change -y b

reboot

C:\Program Files\Mellanox\WinMFT>vstat.exe

hca_idx=0

uplink={BUS=PCI_E Gen2, SPEED=5.0 Gbps, WIDTH=x8, CAPS=5.0*x8}

MSI-X={ENABLED=1, SUPPORTED=128, GRANTED=4, ALL_MASKED=N}

vendor_id=0x02c9

vendor_part_id=26428

hw_ver=0xb0

fw_ver=2.09.1000

PSID=MT_0D80110009

node_guid=0002:c903:0009:5eac

num_phys_ports=2

port=1

port_guid=0002:c903:0009:5ead

port_state=PORT_DOWN (1)

link_speed=NA

link_width=NA

rate=NA

port_phys_state=POLLING (2)

active_speed=0.00 Gbps

sm_lid=0x0000

port_lid=0x0000

port_lmc=0x0

transport=IB

max_mtu=4096 (5)

active_mtu=4096 (5)

GID[0]=fe80:0000:0000:0000:0002:c903:0009:5ead

port=2

port_guid=0002:c903:0009:5eae

port_state=PORT_DOWN (1)

link_speed=NA

link_width=NA

rate=NA

port_phys_state=POLLING (2)

active_speed=0.00 Gbps

sm_lid=0x0000

port_lid=0x0000

port_lmc=0x0

transport=IB

max_mtu=4096 (5)

active_mtu=4096 (5)

GID[0]=fe80:0000:0000:0000:0002:c903:0009:5eae

The Mellanox MHQH29B-XTR now has fw_ver=2.09.1000.
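To confirm a flash took effect without reading the whole dump, the `fw_ver=` field can be compared against the version you expect. A minimal sketch that reads saved vstat output on stdin; `fw_check` is a hypothetical helper, and the comparison is a plain string match on vstat's own formatting (ibstat prints the same version differently, for example 2.9.1000):

```shell
#!/bin/sh
# Sketch: compare the first fw_ver= value found on stdin with the wanted
# version given as $1. Returns 0 on match, 1 on mismatch, 2 if not found.
fw_check() {
    want=$1
    have=$(sed -n 's/.*fw_ver=\([0-9.]*\).*/\1/p' | head -n 1)
    if [ -z "$have" ]; then
        echo "fw_ver not found"
        return 2
    fi
    if [ "$have" = "$want" ]; then
        echo "fw_ver $have matches"
    else
        echo "fw_ver $have differs from $want"
        return 1
    fi
}
```

With the output saved to a file, `fw_check 2.09.1000 < vstat.txt` would report a match for the card after the flash above (the file name is only an example).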

These tools are available for Ubuntu, SLES, RHEL/CentOS, OEL, Fedora, Debian, and Citrix XenServer Host.

-

A little more detail

Library> lspci -v

00:00.0 Class 0600: Device 1022:9601

Subsystem: Device 103c:1609

Flags: bus master, 66MHz, medium devsel, latency 0

Capabilities: [c4] HyperTransport: Slave or Primary Interface

Capabilities: [54] HyperTransport: UnitID Clumping

Capabilities: [40] HyperTransport: Retry Mode

Capabilities: [9c] HyperTransport: #1a

Capabilities: [f8] HyperTransport: #1c

00:01.0 Class 0604: Device 103c:9602

Flags: bus master, 66MHz, medium devsel, latency 64

Bus: primary=00, secondary=01, subordinate=01, sec-latency=64

I/O behind bridge: 0000e000-0000efff

Memory behind bridge: fe600000-fe7fffff

Prefetchable memory behind bridge: 00000000f0000000-00000000f7ffffff

Capabilities: [44] HyperTransport: MSI Mapping Enable+ Fixed+

Capabilities: [b0] Subsystem: Device 103c:1609

00:02.0 Class 0604: Device 1022:9603

Flags: bus master, fast devsel, latency 0

Bus: primary=00, secondary=02, subordinate=02, sec-latency=0

Memory behind bridge: fe800000-fe8fffff

Prefetchable memory behind bridge: 00000000fd800000-00000000fdffffff

Capabilities: [50] Power Management version 3

Capabilities: [58] Express Root Port (Slot+), MSI 00

Capabilities: [a0] MSI: Enable+ Count=1/1 Maskable- 64bit-

Capabilities: [b0] Subsystem: Device 103c:1609

Capabilities: [b8] HyperTransport: MSI Mapping Enable+ Fixed+

Capabilities: [100] Vendor Specific Information: ID=0001 Rev=1 Len=010 <?>

Capabilities: [110] Virtual Channel

Kernel driver in use: pcieport

00:06.0 Class 0604: Device 1022:9606

Flags: bus master, fast devsel, latency 0

Bus: primary=00, secondary=03, subordinate=03, sec-latency=0

Memory behind bridge: fe900000-fe9fffff

Capabilities: [50] Power Management version 3

Capabilities: [58] Express Root Port (Slot-), MSI 00

Capabilities: [a0] MSI: Enable+ Count=1/1 Maskable- 64bit-

Capabilities: [b0] Subsystem: Device 103c:1609

Capabilities: [b8] HyperTransport: MSI Mapping Enable+ Fixed+

Capabilities: [100] Vendor Specific Information: ID=0001 Rev=1 Len=010 <?>

Capabilities: [110] Virtual Channel

Kernel driver in use: pcieport

00:11.0 Class 0106: Device 1002:4391 (rev 40) (prog-if 01)

Subsystem: Device 103c:1609

Flags: bus master, 66MHz, medium devsel, latency 64, IRQ 42

I/O ports at d000

I/O ports at c000

I/O ports at b000

I/O ports at a000

I/O ports at 9000

Memory at fe5ffc00 (32-bit, non-prefetchable)

Capabilities: [50] MSI: Enable+ Count=1/8 Maskable- 64bit+

Capabilities: [70] SATA HBA v1.0

Capabilities: [a4] PCI Advanced Features

Kernel driver in use: ahci

00:12.0 Class 0c03: Device 1002:4397 (prog-if 10)

Subsystem: Device 103c:1609

Flags: bus master, 66MHz, medium devsel, latency 64, IRQ 18

Memory at fe5fe000 (32-bit, non-prefetchable)

Kernel driver in use: ohci_hcd

00:12.2 Class 0c03: Device 1002:4396 (prog-if 20)

Subsystem: Device 103c:1609

Flags: bus master, 66MHz, medium devsel, latency 64, IRQ 17

Memory at fe5ff800 (32-bit, non-prefetchable)

Capabilities: [c0] Power Management version 2

Capabilities: [e4] Debug port: BAR=1 offset=00e0

Kernel driver in use: ehci-pci

00:13.0 Class 0c03: Device 1002:4397 (prog-if 10)

Subsystem: Device 103c:1609

Flags: bus master, 66MHz, medium devsel, latency 64, IRQ 18

Memory at fe5fd000 (32-bit, non-prefetchable)

Kernel driver in use: ohci_hcd

00:13.2 Class 0c03: Device 1002:4396 (prog-if 20)

Subsystem: Device 103c:1609

Flags: bus master, 66MHz, medium devsel, latency 64, IRQ 17

Memory at fe5ff400 (32-bit, non-prefetchable)

Capabilities: [c0] Power Management version 2

Capabilities: [e4] Debug port: BAR=1 offset=00e0

Kernel driver in use: ehci-pci

00:14.0 Class 0c05: Device 1002:4385 (rev 42)

Flags: 66MHz, medium devsel

00:14.3 Class 0601: Device 1002:439d (rev 40)

Subsystem: Device 103c:1609

Flags: bus master, 66MHz, medium devsel, latency 0

00:14.4 Class 0604: Device 1002:4384 (rev 40) (prog-if 01)

Flags: bus master, 66MHz, medium devsel, latency 64

Bus: primary=00, secondary=04, subordinate=04, sec-latency=64

00:16.0 Class 0c03: Device 1002:4397 (prog-if 10)

Subsystem: Device 103c:1609

Flags: bus master, 66MHz, medium devsel, latency 64, IRQ 18

Memory at fe5fc000 (32-bit, non-prefetchable)

Kernel driver in use: ohci_hcd

00:16.2 Class 0c03: Device 1002:4396 (prog-if 20)

Subsystem: Device 103c:1609

Flags: bus master, 66MHz, medium devsel, latency 64, IRQ 17

Memory at fe5ff000 (32-bit, non-prefetchable)

Capabilities: [c0] Power Management version 2

Capabilities: [e4] Debug port: BAR=1 offset=00e0

Kernel driver in use: ehci-pci

00:18.0 Class 0600: Device 1022:1200

Flags: fast devsel

Capabilities: [80] HyperTransport: Host or Secondary Interface

00:18.1 Class 0600: Device 1022:1201

Flags: fast devsel

00:18.2 Class 0600: Device 1022:1202

Flags: fast devsel

00:18.3 Class 0600: Device 1022:1203

Flags: fast devsel

Capabilities: [f0] Secure device <?>

00:18.4 Class 0600: Device 1022:1204

Flags: fast devsel

01:05.0 Class 0300: Device 1002:9712

Subsystem: Device 103c:1609

Flags: bus master, fast devsel, latency 0, IRQ 10

Memory at f0000000 (32-bit, prefetchable)

I/O ports at e000

Memory at fe7f0000 (32-bit, non-prefetchable)

Memory at fe600000 (32-bit, non-prefetchable)

Expansion ROM at [disabled]

Capabilities: [50] Power Management version 3

Capabilities: [a0] MSI: Enable- Count=1/1 Maskable- 64bit+

02:00.0 Class 0c06: Device 15b3:673c (rev b0)

Subsystem: Device 15b3:0021

Flags: bus master, fast devsel, latency 0, IRQ 18

Memory at fe800000 (64-bit, non-prefetchable)

Memory at fd800000 (64-bit, prefetchable)

Capabilities: [40] Power Management version 3

Capabilities: [48] Vital Product Data

Capabilities: [9c] MSI-X: Enable+ Count=256 Masked-

Capabilities: [60] Express Endpoint, MSI 00

Capabilities: [100] Alternative Routing-ID Interpretation (ARI)

Kernel driver in use: mlx4_core

03:00.0 Class 0200: Device 14e4:165b (rev 10)

Subsystem: Device 103c:705d

Flags: bus master, fast devsel, latency 0, IRQ 53

Memory at fe9f0000 (64-bit, non-prefetchable)

Capabilities: [48] Power Management version 3

Capabilities: [40] Vital Product Data

Capabilities: [60] Vendor Specific Information: Len=6c <?>

Capabilities: [50] MSI: Enable+ Count=1/1 Maskable- 64bit+

Capabilities: [cc] Express Endpoint, MSI 00

Capabilities: [100] Advanced Error Reporting

Capabilities: [13c] Virtual Channel

Capabilities: [160] Device Serial Number 9c-b6-54-ff-fe-0b-e6-32

Capabilities: [16c] Power Budgeting <?>

Kernel driver in use: tg3

Any thoughts would be helpful

-

Yesterday I upgraded my N54L to DSM 5.2-5644 Update 5.

It works fine, thanks....

I had a little time to test out my Mellanox MHQH29B-XTR.

lspci

00:00.0 Class 0600: Device 1022:9601

00:01.0 Class 0604: Device 103c:9602

00:02.0 Class 0604: Device 1022:9603

00:06.0 Class 0604: Device 1022:9606

00:11.0 Class 0106: Device 1002:4391 (rev 40)

00:12.0 Class 0c03: Device 1002:4397

00:12.2 Class 0c03: Device 1002:4396

00:13.0 Class 0c03: Device 1002:4397

00:13.2 Class 0c03: Device 1002:4396

00:14.0 Class 0c05: Device 1002:4385 (rev 42)

00:14.3 Class 0601: Device 1002:439d (rev 40)

00:14.4 Class 0604: Device 1002:4384 (rev 40)

00:16.0 Class 0c03: Device 1002:4397

00:16.2 Class 0c03: Device 1002:4396

00:18.0 Class 0600: Device 1022:1200

00:18.1 Class 0600: Device 1022:1201

00:18.2 Class 0600: Device 1022:1202

00:18.3 Class 0600: Device 1022:1203

00:18.4 Class 0600: Device 1022:1204

01:05.0 Class 0300: Device 1002:9712

02:00.0 Class 0c06: Device 15b3:673c (rev b0)

03:00.0 Class 0200: Device 14e4:165b (rev 10)
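To pick the Mellanox card out of a listing like the one above, a small parser can filter on the vendor ID and look up the class code. This is only a sketch: the function name `find_vendor_devices` and the two-entry class table are my own, and the names for `0200` (Ethernet controller) and `0c06` (InfiniBand controller) are as I understand them from the PCI ID database. It does not by itself explain why the interface is missing.

```python
import re

# Class names as listed in the PCI ID database; only the two seen here.
PCI_CLASSES = {"0200": "Ethernet controller", "0c06": "InfiniBand controller"}

# Matches numeric lspci lines such as "02:00.0 Class 0c06: Device 15b3:673c (rev b0)".
LINE_RE = re.compile(
    r"^(?P<slot>[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]) Class (?P<cls>[0-9a-f]{4}): "
    r"Device (?P<vendor>[0-9a-f]{4}):(?P<device>[0-9a-f]{4})"
)


def find_vendor_devices(lspci_text, vendor_id):
    """Return (slot, class code, class name, device id) for one vendor ID."""
    found = []
    for line in lspci_text.splitlines():
        m = LINE_RE.match(line.strip())
        if m and m.group("vendor") == vendor_id:
            cls = m.group("cls")
            found.append(
                (m.group("slot"), cls, PCI_CLASSES.get(cls, "unknown"), m.group("device"))
            )
    return found


# Three lines copied from the listing above.
SAMPLE = """\
01:05.0 Class 0300: Device 1002:9712
02:00.0 Class 0c06: Device 15b3:673c (rev b0)
03:00.0 Class 0200: Device 14e4:165b (rev 10)
"""

if __name__ == "__main__":
    # 15b3 is the vendor ID shown for the Mellanox card in the listing.
    for slot, cls, name, dev in find_vendor_devices(SAMPLE, "15b3"):
        print(f"{slot}: class {cls} ({name}), device {dev}")
```

The class code for the card at 02:00.0 differs from the onboard NIC at 03:00.0 (`0c06` versus `0200`), which may be worth checking against the port configuration of the card.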

dmesg|grep mlx4

[ 4.576776] mlx4_core: Mellanox ConnectX core driver v1.1 (Dec, 2011)

[ 4.576781] mlx4_core: Initializing 0000:02:00.0

[ 6.849435] mlx4_core 0000:02:00.0: irq 43 for MSI/MSI-X

[ 6.849445] mlx4_core 0000:02:00.0: irq 44 for MSI/MSI-X

[ 6.849451] mlx4_core 0000:02:00.0: irq 45 for MSI/MSI-X

[ 6.849457] mlx4_core 0000:02:00.0: irq 46 for MSI/MSI-X

[ 6.849463] mlx4_core 0000:02:00.0: irq 47 for MSI/MSI-X

[ 6.849470] mlx4_core 0000:02:00.0: irq 48 for MSI/MSI-X

[ 6.849475] mlx4_core 0000:02:00.0: irq 49 for MSI/MSI-X

[ 6.849481] mlx4_core 0000:02:00.0: irq 50 for MSI/MSI-X

[ 6.849487] mlx4_core 0000:02:00.0: irq 51 for MSI/MSI-X

[ 6.849492] mlx4_core 0000:02:00.0: irq 52 for MSI/MSI-X

[ 6.872762] mlx4_core 0000:02:00.0: command 0xc failed: fw status = 0x40

[ 6.872931] mlx4_core 0000:02:00.0: command 0xc failed: fw status = 0x40

[ 6.882538] mlx4_en: Mellanox ConnectX HCA Ethernet driver v2.0 (Dec 2011)

[ 6.882679] mlx4_en 0000:02:00.0: UDP RSS is not supported on this device.
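The two `command 0xc failed` lines are easy to miss among the IRQ messages. Below is a rough sketch that pulls only the failed firmware commands out of saved dmesg text. The function name `failed_commands` is hypothetical, and the regular expression only covers the message format shown above. I have not tried to interpret the command or status codes.

```python
import re

# Matches lines such as:
# "[ 6.872762] mlx4_core 0000:02:00.0: command 0xc failed: fw status = 0x40"
FAIL_RE = re.compile(
    r"\[\s*(?P<ts>\d+\.\d+)\]\s+mlx4_core\s+(?P<dev>[0-9a-f:.]+):\s+"
    r"command (?P<cmd>0x[0-9a-f]+) failed: fw status = (?P<status>0x[0-9a-f]+)"
)


def failed_commands(dmesg_text):
    """Return (timestamp, PCI address, command, fw status) for each failure line."""
    failures = []
    for line in dmesg_text.splitlines():
        m = FAIL_RE.search(line)
        if m:
            failures.append(
                (float(m.group("ts")), m.group("dev"), m.group("cmd"), m.group("status"))
            )
    return failures


# Lines copied from the output above.
SAMPLE = """\
[ 6.849492] mlx4_core 0000:02:00.0: irq 52 for MSI/MSI-X
[ 6.872762] mlx4_core 0000:02:00.0: command 0xc failed: fw status = 0x40
[ 6.872931] mlx4_core 0000:02:00.0: command 0xc failed: fw status = 0x40
[ 6.882538] mlx4_en: Mellanox ConnectX HCA Ethernet driver v2.0 (Dec 2011)
"""

if __name__ == "__main__":
    for ts, dev, cmd, status in failed_commands(SAMPLE):
        print(f"{ts:.6f} {dev} command {cmd} failed with fw status {status}")
```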

lsmod

Module Size Used by Tainted: P

cifs 247268 0

udf 78298 0

isofs 31935 0

loop 17688 0

nf_conntrack_ipv6 6267 0

nf_defrag_ipv6 22249 1 nf_conntrack_ipv6

ip6table_filter 1236 1

ip6_tables 16272 1 ip6table_filter

xt_geoip 2934 0

xt_recent 8132 0

xt_iprange 1448 0

xt_limit 1721 0

xt_state 1135 0

xt_multiport 1630 0

xt_LOG 12099 0

nf_conntrack_ipv4 11115 0

nf_defrag_ipv4 1147 1 nf_conntrack_ipv4

iptable_filter 1296 1

ip_tables 15570 1 iptable_filter

xt_length 1124 4

xt_tcpudp 2311 4

nf_conntrack 52846 3 nf_conntrack_ipv6,xt_state,nf_conntrack_ipv4

x_tables 15527 13 ip6table_filter,ip6_tables,xt_geoip,xt_recent,xt_iprange,xt_limit,xt_state,xt_multiport,xt_LOG,iptable_filter,ip_tables,xt_length,xt_tcpudp

hid_generic 1057 0

usbhid 26686 0

hid 82504 2 hid_generic,usbhid

usblp 10674 0

usb_storage 46383 1

oxu210hp_hcd 24469 0

bromolow_synobios 42769 0

btrfs 788682 0

synoacl_vfs 17043 1

zlib_deflate 20180 1 btrfs

hfsplus 91979 0

md4 3337 0

hmac 2793 0

mlx_compat 5376 0

tn40xx 24739 0

fuse 74004 0

vfat 10009 1

fat 50784 1 vfat

glue_helper 3914 0

lrw 3309 0

gf128mul 5346 1 lrw

ablk_helper 1684 0

arc4 1847 0

rng_core 3520 0

cpufreq_conservative 6240 0

cpufreq_powersave 862 0

cpufreq_performance 866 2

cpufreq_ondemand 8039 0

acpi_cpufreq 6982 0

mperf 1107 1 acpi_cpufreq

processor 26471 1 acpi_cpufreq

cpufreq_stats 2985 0

freq_table 2380 3 cpufreq_ondemand,acpi_cpufreq,cpufreq_stats

dm_snapshot 26708 0

crc_itu_t 1235 1 udf

quota_v2 3783 2

quota_tree 7970 1 quota_v2

psnap 1717 0

p8022 979 0

llc 3441 2 psnap,p8022

sit 14446 0

tunnel4 2061 1 sit

ip_tunnel 11572 1 sit

zram 8191 2

r8152 49404 0

asix 20370 0

ax88179_178a 16864 0

usbnet 18137 2 asix,ax88179_178a

etxhci_hcd 84833 0

xhci_hcd 84493 0

ehci_pci 3504 0

ehci_hcd 39963 1 ehci_pci

uhci_hcd 22668 0

ohci_hcd 21168 0

usbcore 176728 14 usbhid,usblp,usb_storage,oxu210hp_hcd,r8152,asix,ax88179_178a,usbnet,etxhci_hcd,xhci_hcd,ehci_pci,ehci_hcd,uhci_hcd,ohci_hcd

usb_common 1488 1 usbcore

hptiop 15828 0

3w_sas 21786 0

3w_9xxx 33873 0

mvsas 51605 0

hpsa 50480 0

arcmsr 28228 0

pm80xx 134800 0

aic94xx 72946 0

megaraid_sas 138070 0

megaraid_mbox 29835 0

megaraid_mm 7816 1 megaraid_mbox

nvme 40947 0

mpt3sas 220677 0

mptsas 39249 0

mptspi 13047 0

mptscsih 18838 2 mptsas,mptspi

mptbase 62504 3 mptsas,mptspi,mptscsih

scsi_transport_spi 19551 1 mptspi

sg 25017 0

ata_piix 24664 0

sata_uli 3004 0

sata_svw 4453 0

sata_qstor 5612 0

sata_sis 3884 0

pata_sis 10858 1 sata_sis

stex 15005 0

sata_sx4 9284 0

sata_promise 10799 0

sata_nv 20623 0

sata_via 7803 0

sata_sil 7783 0

pdc_adma 5868 0

pata_via 8827 0

iscsi_tcp 8897 0

libiscsi_tcp 12850 1 iscsi_tcp

libiscsi 35195 2 iscsi_tcp,libiscsi_tcp

enic 54982 0

qlge 79283 0

qlcnic 214986 0

qla3xxx 36934 0

netxen_nic 98970 0

mlx4_en 67584 0

mlx4_core 169852 1 mlx4_en

cxgb4 115680 0

cxgb3 134726 0

cnic 71038 0

ipv6 303225 49 nf_conntrack_ipv6,nf_defrag_ipv6,sit

bnx2x 1430214 0

bna 122380 0

be2net 89072 0

sis900 20811 0

sis190 17297 0

jme 34677 0

atl1e 28011 0

atl1c 33596 0

atl2 23124 0

atl1 30315 0

alx 26373 0

sky2 47242 0

skge 38482 0

via_velocity 30007 0

crc_ccitt 1235 1 via_velocity

via_rhine 21014 0

r8101 122784 0

r8168 318247 0

r8169 30519 0

8139too 18685 0

8139cp 20694 0

tg3 171348 0

broadcom 7174 0

b44 26983 0

bnx2 189638 0

ssb 38587 1 b44

uio 7592 1 cnic

forcedeth 55647 0

i40e 249005 0

ixgbe 246171 0

ixgb 37937 0

igb 178198 0

ioatdma 44390 0

e1000e 168235 0

e1000 100754 0

e100 29844 0

dca 4600 3 ixgbe,igb,ioatdma

pcnet32 31251 0

amd8111e 16602 0

mdio 3365 3 cxgb3,bnx2x,alx

mii 3803 15 r8152,asix,ax88179_178a,usbnet,sis900,sis190,jme,atl1,via_rhine,8139too,8139cp,b44,e100,pcnet32,amd8111e

evdev 9156 0

button 4320 0

thermal_sys 18275 1 processor

compat 4529 1 mlx_compat

cryptd 7040 1 ablk_helper

ecryptfs 75063 0

sha512_generic 4976 0

sha256_generic 9884 0

sha1_generic 2206 0

ecb 1849 0

aes_x86_64 7239 0

authenc 6600 0

des_generic 15915 0

libcrc32c 906 1 bnx2x

ansi_cprng 3445 0

cts 3968 0

md5 2153 0

cbc 2448 0
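With a list this long it is quicker to check the Mellanox modules programmatically. The sketch below assumes the three-or-four column layout shown above (name, size, use count, optional comma-separated users). The name `parse_lsmod` is my own, and stray spaces inside the user list are joined back together because pasted forum output tends to pick them up.

```python
def parse_lsmod(text):
    """Parse lsmod output into {module: {"size", "refcount", "used_by"}}."""
    modules = {}
    for line in text.splitlines():
        parts = line.split()
        # Skip the header and anything that is not "name size count [users]".
        if len(parts) < 3 or not parts[1].isdigit() or not parts[2].isdigit():
            continue
        users = "".join(parts[3:])  # rejoin user lists broken by stray spaces
        modules[parts[0]] = {
            "size": int(parts[1]),
            "refcount": int(parts[2]),
            "used_by": [u for u in users.split(",") if u],
        }
    return modules


# Lines copied from the output above.
SAMPLE = """\
Module Size Used by Tainted: P
mlx_compat 5376 0
mlx4_en 67584 0
mlx4_core 169852 1 mlx4_en
tg3 171348 0
compat 4529 1 mlx_compat
"""

if __name__ == "__main__":
    mods = parse_lsmod(SAMPLE)
    for name in ("mlx4_core", "mlx4_en", "mlx_compat"):
        info = mods.get(name)
        if info is None:
            print(f"{name}: not loaded")
        else:
            print(f"{name}: loaded, use count {info['refcount']}, used by {info['used_by']}")
```

In the listing above both `mlx4_core` and `mlx4_en` are loaded, so the driver being absent does not look like the cause.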

At XPEnoboot boot

Loading module Megaraid_SAS fails (it is shown in red).

All other modules load correctly (shown in green).

The Mellanox MHQH29B-XTR is the only card in the N54L.

I'm starting to think that the MAC address for the Mellanox MHQH29B-XTR needs to be written into the firmware.

-

Update

I noticed that XPEnoboot_DS3615xs_5.2-5644.4 img is now available at

http://

I downloaded the img file, took the USB drive out of my N54L, and reimaged it with XPEnoboot_DS3615xs_5.2-5644.4 on a different machine using Win32DiskImager. When I put the USB drive back in my N54L, I selected the first option from the GRUB boot menu and it showed all the packages getting updated. When I logged in, everything seemed normal.

I'm now on DSM 5.2-5644.4.

-

Update

I noticed that XPEnoboot_DS3615xs_5.2-5644.1 is now available at

http://

I downloaded the img file, took the USB drive out of my N54L, and reimaged it with XPEnoboot_DS3615xs_5.2-5644.1 on a different machine using Win32DiskImager. When I put the USB drive back in my N54L, I selected the first option from the GRUB boot menu and it showed all the packages getting updated. When I logged in and checked the updates it still showed DSM 5592, so I downloaded the update and installed it through the update screen, and it worked.

I'm now on DSM 5.2-5644.1.

-

Hello

During the summer I tested my HP N54L with DSM_DS3615xs_5565 and it was fine, so I purchased a second HP N54L to do some more testing and see if it would work for me. I started reading this forum and found a lot of good info. The first time I installed it, I remember it recommended not running the update, so I left it on stock DSM 5565. Lately I have noticed that the control panel is prompting for updates. The first time I tried to upgrade to DSM 5592 through the menu, it started fine, stopped at 65% with Error 21, and then rolled back to the main screen. Most of the material on the web about this error is old and does not really apply.

Next, go here for the updates:

http://xpenology.me/downloads/

The best way I found to manage the updates is to download each one in a different folder.

for example

DSM 5565

Update 1

Update 2

DSM 5592

Update 1

Update 2

Update 3

Then upload each update one at a time. It takes a while to do each one, but it was straightforward to do DSM 5565 Update 1 and Update 2. However, when I tried DSM 5592 I received Error 21. The way I now understand it, each DSM revision requires a custom bootstrap on the USB drive. So I took the USB drive out of my N54L and reimaged it with XPEnoboot 5.2-5592.1 DS3615xs on a different machine using Win32DiskImager.
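The one-folder-per-update layout amounts to a fixed upload order. As a rough sketch, the helper below builds that order from a map of DSM build number to the number of numbered updates for it. The name `upload_order` and the map format are my own invention, and the sketch does not cover the step of reimaging the USB drive between DSM builds.

```python
def upload_order(updates_per_build):
    """Return update names in the order they would be uploaded.

    updates_per_build maps a DSM build number to how many numbered
    updates exist for that build, for example {5565: 2, 5592: 3}.
    """
    order = []
    for build in sorted(updates_per_build):
        order.append(f"DSM {build}")
        for n in range(1, updates_per_build[build] + 1):
            order.append(f"DSM {build} Update {n}")
    return order


if __name__ == "__main__":
    # Counts taken from the folder example in this post.
    for step, name in enumerate(upload_order({5565: 2, 5592: 3}), start=1):
        print(f"{step}. {name}")
```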

When I put the USB drive back in my N54L, I selected upgrade from the GRUB boot menu and it showed all the packages getting updated. When I logged in and checked the updates it still showed DSM 5565, so I uploaded DSM 5592 and this time it worked.

When I ran the updates for DSM 5592 I noticed that they did not require a reboot. That was fine, but after I did Update 3 I checked Storage Manager and the volume information was missing. So I shut down the N54L and started it back up, and this time the volume information was present.

So now there is a new version, 5.2-5644, released. If you're not running a VM, I would recommend waiting for the developers' release of the custom XPEnoboot for 5.2-5644...

10Gbe setup - will this work with 6.1?

in Hardware Modding

Posted · Edited by RacerX

Hi

I have another single-port card:

02:00.0 Network controller: Mellanox Technologies MT27500 Family [ConnectX-3]

It's in another computer. I could test it tomorrow if that helps.