eptesicus

Posts: 8


Posts posted by eptesicus

-

40 minutes ago, Kanedo said:

No problem. Glad it worked. You'll have to run this after every reboot, though, or you can put it in a startup script.

Will creating a task for the script to run at startup not work for this? I'll have to test it out.
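
Kanedo's one-liner can be wrapped in a small script for this. A minimal sketch, assuming it is pasted into a user-defined script on a Boot-up triggered task in DSM's Task Scheduler and run as root. The function name `clear_locate_leds` and its optional root-directory argument are hypothetical, added so the script can be tried against a scratch directory first.

```shell
#!/bin/sh
# Hypothetical helper: turn off every drive-bay "locate" LED exposed in sysfs,
# using the same find/sh approach as Kanedo's one-liner.
# Assumption: runs as root (e.g. from a Boot-up task), since writing to
# sysfs attributes normally requires it.
clear_locate_leds() {
    # Optional first argument overrides the search root (useful for a dry run
    # against a scratch directory); defaults to the path used in the thread.
    root="${1:-/sys/devices}"
    # For each regular file named "locate", write 0 to it. "sh" becomes $0 of
    # the inner shell and the found path becomes $1.
    find "$root" -type f -name locate -exec sh -c 'echo 0 > "$1"' sh {} \;
}
```

In the task's script box, the function definition followed by a plain `clear_locate_leds` line should be all that is needed.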

-

1 hour ago, Kanedo said:

Try this and see if it works.

```shell
sudo find /sys/devices/ -type f -name locate -exec sh -c 'echo 0 > "$1"' -- {} \;
```

I think I love you. That worked! Thank you so much! It was driving me nuts!

-

I have an SC846 chassis with the SAS2 backplane that's connected to the motherboard via a Dell H310 card (flashed to IT mode).

Do any of you have a similar setup that somehow makes the locate LEDs on each of your drive bays flash? I noticed after flashing the card and getting everything set up again that the red drive LEDs were flashing, and they won't turn off. Now that I'm no longer running my M5015 cards, where I could use the MegaRAID software, I can't go in and stop the LEDs.

Any insight here?
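
Before clearing anything, it can help to see which bays actually have their locate flag set. A minimal sketch, assuming the SAS2 backplane is exposed through the Linux SES enclosure driver with attributes laid out as `/sys/class/enclosure/<enclosure>/<slot>/locate`; that layout and the function name `list_lit_locate_leds` are assumptions, not something confirmed in this thread.

```shell
#!/bin/sh
# Hypothetical diagnostic: print every enclosure "locate" attribute that is not 0.
# Assumption: layout is <root>/<enclosure>/<slot>/locate (Linux SES enclosure class).
list_lit_locate_leds() {
    root="${1:-/sys/class/enclosure}"
    for f in "$root"/*/*/locate; do
        [ -f "$f" ] || continue               # glob matched nothing
        v=$(cat "$f" 2>/dev/null) || continue # unreadable attribute: skip it
        if [ "$v" != "0" ]; then
            printf '%s %s\n' "$f" "$v"        # path and current value
        fi
    done
    return 0
}
```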

-

1 hour ago, bearcat said:

1 - According to the FAQ: "There is a physical limit of 26 drives due to limitations in drive addressing in Linux"

2 - Forget all about Quicknick, as his work has been put on hold and might never be released or updated.

Unfortunate about Quicknick. I imagine a lot of people would have loved to use his more-than-26-drives bootloader/DSM package.

-

Thanks, all. So with Jun's loader, I can get a maximum of 26 drives... I've seen instructions from Quicknick for getting up to 64 drives with his loader, and found what I thought were the files here: https://xpenology.club/downloads/, but I can't find anything else about his loader. Did he make it and provide instructions, but never actually release it?

-

25 minutes ago, sbv3000 said:

If you check the forum, you will see various posts about the maximum number of drives, and I think there is a limit of 24. Some people have reported exceeding this, but the config is lost on reboot.

That's great to know, thanks. I've searched for "xpenology more than 12 drives" and other terms on Google and found nothing about a 24 drive limit.

How would I then make sure DSM only sees my 24 hot-swap bays and not the internal SATA connections on my motherboard? In Storage Manager, I believe the disk numbering starts at an odd number, and the hot-swap bays were disks 11-34. How do I get DSM to see the hot-swap bays as disks 1-24?
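
XPEnology guides usually handle this in synoinfo.conf, where keys such as `internalportcfg` hold a hex bitmask with one bit per disk slot. A minimal sketch for working out such a mask, assuming bit (n-1) stands for disk slot n; the function name `port_mask` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: build a synoinfo.conf-style port bitmask in which
# bit (n-1) set means "disk slot n belongs to this group".
# Assumption: keys such as internalportcfg use this bit-per-slot layout.
port_mask() {
    first=$1   # first slot number, 1-based
    count=$2   # number of consecutive slots
    # (2^count - 1) yields `count` one-bits; shift them up to start at slot `first`.
    printf '0x%x\n' $(( ((1 << count) - 1) << (first - 1) ))
}
```

For the layout described above, `port_mask 11 24` gives `0x3fffffc00` and `port_mask 1 24` gives `0xffffff`. A mask like this only decides which slots DSM counts as internal. Whether the hot-swap bays can actually be renumbered to 1-24 most likely depends on how the loader maps controller ports, which this sketch does not touch.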

-

Preface: I'm new to Xpenology and DSM...

Long story short, I'm building a new (really just reallocated hardware) 24-drive SAS SAN and decided to run Xpenology on it, following THIS to do the install and THIS to increase the disk capacity beyond 12 drives. Everything went great. I had a couple of 3TB drives that I copied my vCenter VMs to, took all of the SAS drives from my hosts, put them in the 24 bays of my SAN, and started migrating the VMs over iSCSI to the two RAID 10 arrays I created. Migration was going great... I migrated everything but the vCenter appliance... well, I was in the middle of migrating the vCenter appliance...

Then my UPS faulted, and my SAN shut down. See... I have two UPSes, and each server and my SAN has two PSUs. One PSU goes to one UPS, and the other PSU goes to the other UPS. It's a good, redundant system... However... my SAN only had one PSU connected at the time, and it was connected to the UPS that faulted...

Ok, so hopefully I can boot up the SAN, and it's no big deal. I could probably recover VCSA...

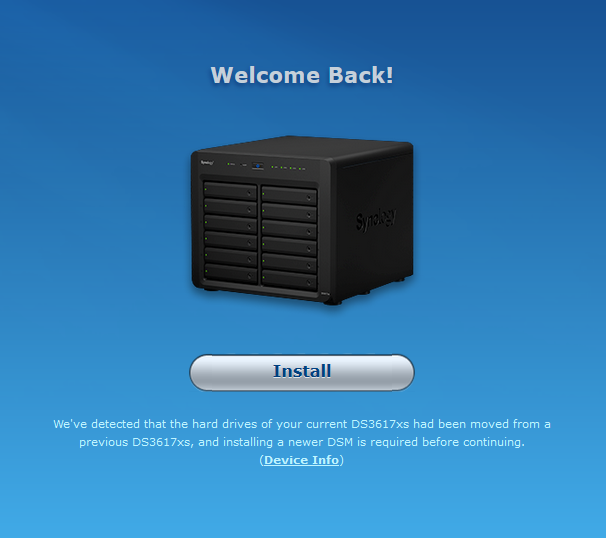

Nope. I boot up the SAN, go to the GUI, and am prompted with this message:

Geez... OK... I select "Migration: Keep my data and most of the settings" as the installation type, select the DSM file I downloaded according to the first video I referenced, and let it install. Of course, when it came back up and I went to the GUI, only 12 disks were visible, not 45, so DSM thinks the array crashed. OK... FINE... I edit synoinfo.conf to allow 45 drives again. But that requires a reboot. So I reboot the SAN, and I'm greeted with the same message prompting me to reinstall DSM...

WHAT DO I DO!? How can I recover DSM and my arrays?
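
The 45-drive edit described above amounts to changing a couple of keys in synoinfo.conf. A minimal sketch, assuming the file holds one `key="value"` pair per line and that the keys involved are the ones XPEnology guides usually mention (`maxdisks` and `internalportcfg`). The function name `set_synoinfo` is hypothetical, and it keeps a one-time `.bak` copy because mistakes in this file are easy to make.

```shell
#!/bin/sh
# Hypothetical helper: set key="value" in a synoinfo.conf-style file.
# Assumption: the file holds one key="value" pair per line, e.g. maxdisks="12".
set_synoinfo() {
    file=$1
    key=$2
    value=$3
    # Keep a one-time backup of the untouched file before the first edit.
    [ -e "$file.bak" ] || cp "$file" "$file.bak" || return 1
    if grep -q "^$key=" "$file"; then
        # Replace the existing line for this key.
        sed -i "s|^$key=.*|$key=\"$value\"|" "$file"
    else
        # Key not present yet: append it.
        printf '%s="%s"\n' "$key" "$value" >> "$file"
    fi
}
```

Guides commonly apply the same change to both /etc.defaults/synoinfo.conf and /etc/synoinfo.conf, and other port masks such as `esataportcfg` and `usbportcfg` may need adjusting so they don't overlap the internal one. This does not address the reinstall prompt itself; the drop back to 12 disks after the migration install is consistent with the reinstall having overwritten the edited file.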

-

For those of you with a Supermicro chassis... (in Hardware Modding)

I actually only had to run it once, and I never had to run it again... YMMV.