C-Fu

Posts posted by C-Fu


So I have a bunch of drives in /volume1:

5x3TB

3x6TB (added later)

Now I have a bunch more drives that are currently in /volume3 (/volume2 was deleted, just 1 SSD for VM storage):

1x3TB

2x10TB

And a bunch of unused drives:

3x3TB

So I want to combine all of the drives into one big /volume1. My plan:

1. I backed up /volume1 to /volume2 using HyperBackup.

2. Delete/destroy /volume1.

3. Create a new /volume1 in SHR from all the 3TB drives (minus the one 3TB drive in /volume2) plus the 3x6TB drives.

4. Restore the HyperBackup from /volume2, then destroy /volume2.

5. Expand /volume1 with the 2x10TB drives from /volume2, leaving 1x3TB unused.
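As a rough sanity check on steps 3 and 5, here is a minimal Python sketch that estimates SHR-1 usable capacity with a simplified layer model. This model is an assumption, not Synology's exact allocator: drives are sliced at each distinct size, every slice spanning two or more drives gives up one drive's worth to parity, and a slice sitting on a single drive is unusable. It ignores filesystem overhead and the TB vs TiB difference, and the drive counts are my reading of the plan (8x3TB and 3x6TB in step 3, plus 2x10TB in step 5).

```python
def shr1_usable_tb(drives_tb: list[int]) -> int:
    """Estimate SHR-1 usable capacity in TB (simplified layer model, assumed)."""
    sizes = sorted(drives_tb)
    usable = 0
    prev = 0
    for i, size in enumerate(sizes):
        layer = size - prev          # height of the slice between this size and the previous one
        members = len(sizes) - i     # drives at least this large share the slice
        if layer > 0 and members >= 2:
            usable += (members - 1) * layer  # one member's worth goes to parity
        prev = size
    return usable


step3 = [3] * 8 + [6] * 3        # assumed: 5 original + 3 unused 3TB drives, plus 3x6TB
step5 = step3 + [10, 10]         # after adding the 2x10TB drives

print(shr1_usable_tb(step3))     # 36
print(shr1_usable_tb(step5))     # 52
```

Under this model the result always works out to the sum of all drives minus the largest one, so the step 3 estimate is well above the 20TB currently used, before overhead.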

Questions:

1. Will this work?

2. What will happen to my DSM and apps during deletion, creation of SHR, and during restoration from HyperBackup?

3. Will the newly created volume/storage pool be /volume1 or /volume4 after I remove /volume1?

4. Anything else I need to worry about? /volume1's used size is 20TB, while the HyperBackup in /volume2 is 18.4TB.

Cheers and thanks!

-

Because of the 24/26-disk hard limit of Synology/XPEnology, I was trying to figure out whether there is a way to combine multiple XPEnology rigs into one combined volume.

Example:

DS3617

1TB /volume1/FolderA/SubFolderA,B,C

DS3615

2TB /volume1/FolderA/SubFolderC,D,E

3TB /volume1/FolderB/SubFolderX,Y,Z

Result:

DS36157

3TB /volume1/FolderA/SubFolderA,B,C,D,E

3TB /volume1/FolderB/SubFolderX,Y,Z
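To make the requested end result concrete, here is a minimal Python sketch of the merge rule the example implies: folders with the same name on different boxes are combined, their sizes add up, and their subfolders are unioned. The `Folder` type and `merge_namespaces` function are hypothetical illustrations of the desired view, not how GlusterFS or LizardFS actually place files. A real distributed filesystem also has to decide what happens when the same path (here SubFolderC) exists on both boxes.

```python
from dataclasses import dataclass, field


@dataclass
class Folder:
    """One top-level shared folder as seen on a single box (hypothetical model)."""
    size_tb: int
    subfolders: set[str] = field(default_factory=set)


def merge_namespaces(*boxes: dict[str, Folder]) -> dict[str, Folder]:
    """Union the folder trees of several boxes into one combined view."""
    merged: dict[str, Folder] = {}
    for box in boxes:
        for name, folder in box.items():
            target = merged.setdefault(name, Folder(size_tb=0))
            target.size_tb += folder.size_tb          # capacities add up
            target.subfolders |= folder.subfolders    # same-named subfolders collapse into one
    return merged


ds3617 = {"FolderA": Folder(1, {"SubFolderA", "SubFolderB", "SubFolderC"})}
ds3615 = {
    "FolderA": Folder(2, {"SubFolderC", "SubFolderD", "SubFolderE"}),
    "FolderB": Folder(3, {"SubFolderX", "SubFolderY", "SubFolderZ"}),
}

ds36157 = merge_namespaces(ds3617, ds3615)
for name, folder in sorted(ds36157.items()):
    print(name, folder.size_tb, sorted(folder.subfolders))
```

The input dictionaries are left untouched; the merged view gets fresh `Folder` objects.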

Is there a way to do this? I'm currently reading up on GlusterFS and LizardFS, which seem like the right idea (and the future). Clients would only see shares from DS36157. I also read up on High Availability, but that seems to be more about having a redundant/failover XPEnology system. Also, how would I design the whole system? Two XPEnology boxes and one XPEnology "master", or something like that?

Cheers and thanks!

-

Any updates on the current status of DSM 6.2 with regard to more than 24 drives?

I saw a video where someone successfully built an SHR array of more than 48 drives, or something like that.

-

This would be awesome for mine, which is just sitting there collecting dust.

Even the forum was taken down by Seagate.

But I don't think it would work, since it's not x86. The last thing I read on the BlackArmor forums was someone removing the BlackArmor web UI and running everything from the command line, with RAID provided by native Linux mdadm or something similar, which increased the speed quite drastically.

-

On 5/31/2019 at 5:59 AM, mervincm said:

Port multipliers will allow you to connect multiple HDDs to each SATA port. That's not what you will be doing here. You are natively hooking a single HDD to each SATA port on these cards. As long as the chipset itself is supported, you will be fine.

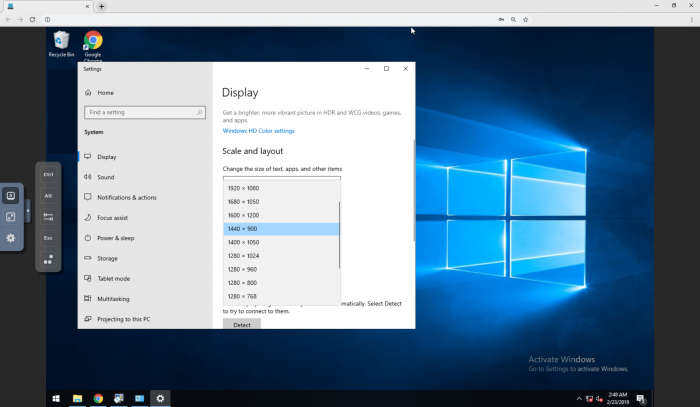

So is this chipset supported, then? I don't mind hooking up just one SATA drive to each available PCIe x1 port. That's 18 additional SATA ports.

-

On 4/6/2019 at 3:29 AM, bearcat said:

It's a fun experiment, but how would you physically fit SATA controllers into all the slots? It looks like they will "collide" lengthwise.

You may consider using some 10-port cards if they are supported by DSM.

Physically it might be difficult, but even if it only works with 1/3 of the PCIe ports, I think it'll still be awesome.

-

17 hours ago, jensmander said:

Usually you can find this information on the vendor's website. HBAs/SATA cards with port multipliers are not supported by XPEnology, so you'll not be able to access all HDDs.

Well, that's strange; it works with my current setup, a Z97 box.

Anyway, this is a random no-name Chinese card with no documentation and no "brand", so I don't know where the proper site would be.

Although I remember seeing a video on YouTube of someone using a bunch of these for FreeNAS, and it worked fine. If you search for SU-SA3014, there are countless vendors selling this model.

-

On 3/13/2019 at 2:52 AM, usus said:

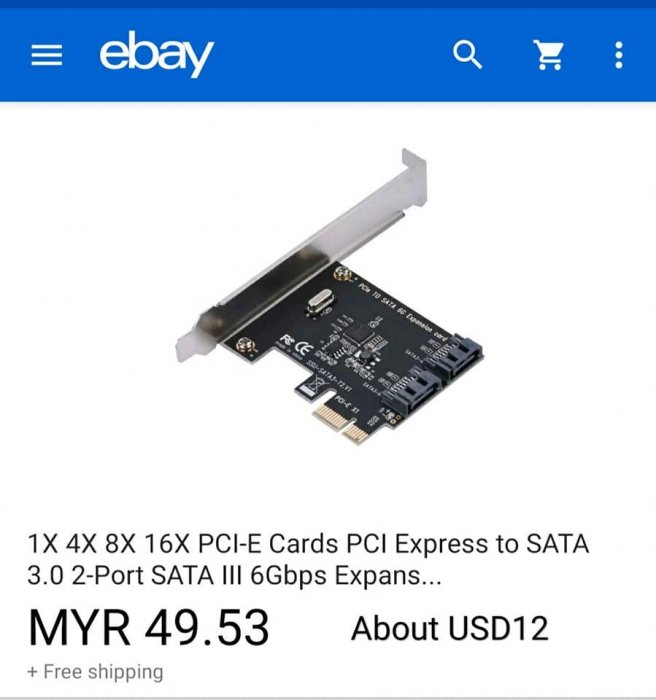

Or you can try something like this.

I suppose my problem is a bit unique: there are no servers larger than 2U where I live. I've been searching for years for 4U servers, let alone a 4U server full of HDD trays at the front.

On 3/13/2019 at 12:57 AM, endormmc said:

Well, why not? Applying the right config changes...

But you can buy an HBA like the Dell H200 and plug in 8 drives. I'm no expert, but there are plenty of HBAs, even for more drives, and with port multipliers the number of hard drives you can connect grows.

For example, in "Petabytes on a Budget: How to Build Cheap Cloud Storage", they use only 4 SATA cards and 9 port multipliers to connect 45 drives.

You can. However, this is a spare, unused motherboard and CPU that I have lying around. I reckon the board might be cheap now that GPU mining isn't what it was in 2017. Mind you, *IF* this is possible, you're looking at a cheap board plus a bunch of 4-port SATA cards that could theoretically hold AT LEAST 80 drives. I think that's bigger (and cheaper) than any other solution.

I just want to see whether anybody has any experience with this board before I start. I have no DDR4 RAM right now, so for the moment I'm just collecting info and ideas.

But thanks for replying!

-

Hmmm... Would this even work, with 18 4-port SATA cards? Why? Well... why not! 😂

I don't have any spare DDR4 RAM to test it out for the time being, and I just found this board in my storage cabinet: a B250 Mining Expert (a board from the mining glory days 🤣).

Imagine a consumer board that can take at least 18x4=72 HDDs...

The motherboard in question: https://www.asus.com/my/Motherboards/B250-MINING-EXPERT/specifications/
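The port arithmetic above as a small Python sketch. The onboard SATA count is left as a parameter because it isn't stated here. The second function assumes the commonly discussed XPEnology approach of editing `maxdisks` and `internalportcfg` in `/etc.defaults/synoinfo.conf`, where `internalportcfg` is a hex bitmask with one bit per internal disk slot. Whether DSM 6.2 actually behaves beyond 24/26 disks is exactly the open question in these posts, so treat the mask as arithmetic only.

```python
def total_sata_ports(cards: int, ports_per_card: int, onboard: int = 0) -> int:
    """Drives you could attach with one drive per port (no port multipliers)."""
    return cards * ports_per_card + onboard


def internal_port_mask(disks: int) -> str:
    """Hex bitmask with the lowest `disks` bits set.

    Assumption: this mirrors the internalportcfg convention in synoinfo.conf
    (one bit per internal slot); it is not a guarantee that DSM accepts it.
    """
    return hex((1 << disks) - 1)


ports = total_sata_ports(cards=18, ports_per_card=4)
print(ports)                      # 72
print(internal_port_mask(24))     # 0xffffff
print(internal_port_mask(ports))  # 0x followed by 18 "f" digits
```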

-

On 10/25/2017 at 10:31 PM, cableguy said:

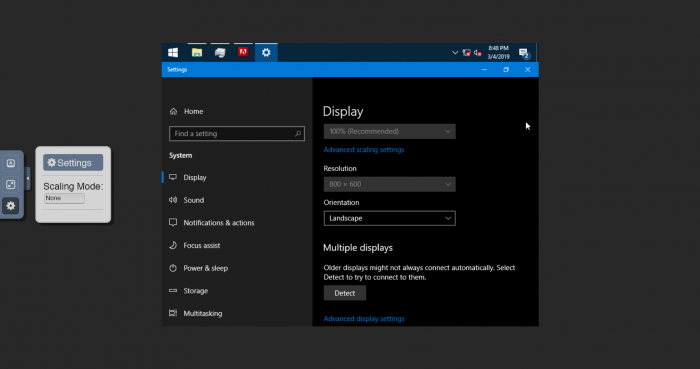

As you can see in the image, it shows up as an i3 and only 2 cores are shown as working, but when I SSH into DSM, 8 of the 12 cores are working.

That's awesome! Planning to get a Ryzen myself, so this is pretty comforting.

But can you fully utilize all the cores in VMM, since DSM sees the machine as having only 2 cores?
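One way to check how many logical CPUs the kernel actually exposes, independent of the model name the DSM info page shows, is to count the `processor` entries in `/proc/cpuinfo` over SSH (`grep -c ^processor /proc/cpuinfo` gives the same count). A minimal Python sketch, assuming a Python 3 interpreter is available on the box, which is not a given on DSM. It only reports what the kernel sees and says nothing about how VMM schedules guests.

```python
from pathlib import Path


def count_logical_cpus(cpuinfo_text: str) -> int:
    """Count 'processor : N' entries, one per logical CPU the kernel exposes."""
    return sum(
        1
        for line in cpuinfo_text.splitlines()
        if line.split(":", 1)[0].strip() == "processor"
    )


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():  # only present on Linux
        print(count_logical_cpus(cpuinfo.read_text()))
```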

Adding smaller disks to SHR, will this work?


I know that. That's why I said I want to remove the SHR, then first add all the smaller drives, including the new ones, while keeping a backup copy on the biggest drives. Please reread my post and tell me what you think. Thanks!