Tibag

Posts: 67

Days Won: 1

Posts posted by Tibag

-

So I tried to rebuild the loader with only my working disks... When it came back, DSM only offered a reinstall. So I let it go ahead, hoping it would fix my Storage Manager. It came back, I restored my settings, and when opening the Storage Manager... it's still hanging! Sounds like I will never see my Storage Manager again.

EDIT: in the end I gave up on it and upgraded to the latest version and... tada! Storage Manager is now fixed.

-

So I actually tried to boot with the latest loader, keeping only my disk 0 (SATA 1:0, meant to be volume 1) and no other disk. When I try to recover it I get "No drives detected in DS3622xs+". I feel like this is the source of all my issues. Does anyone know how to solve this?

In Junior UI I can see from dmesg:

Quote

[ 6.124089] ata32: SATA link up 6.0 Gbps (SStatus 133 SControl 300)

[ 6.124148] ata32.00: ATA-6: VMware Virtual SATA Hard Drive, 00000001, max UDMA/100

[ 6.124168] ata32.00: 5860533168 sectors, multi 0: LBA48 NCQ (depth 31/32)

[ 6.124188] ata32.00: SN:11000000000000000001

[ 6.124268] ata32.00: configured for UDMA/100

[ 6.124296] ata32.00: Find SSD disks. [VMware Virtual SATA Hard Drive]

[ 6.124372] I/O scheduler elevator not found

[ 6.124531] scsi 31:0:0:0: Direct-Access VMware Virtual SATA Hard Drive 0001 PQ: 0 ANSI: 5

[ 6.124878] sd 31:0:0:0: [sdaf] 5860533168 512-byte logical blocks: (3.00 TB/2.73 TiB)

[ 6.125011] sd 31:0:0:0: [sdaf] Write Protect is off

[ 6.125071] sd 31:0:0:0: [sdaf] Mode Sense: 00 3a 00 00

[ 6.125187] sd 31:0:0:0: [sdaf] Write cache: disabled, read cache: enabled, doesn't support DPO or FUA

[ 6.126275] sdaf: sdaf1 sdaf2 sdaf5

[ 6.126830] sd 31:0:0:0: [sdaf] Attached SCSI disk

and

Quote

SynologyNAS> fdisk -l

Disk /dev/synoboot: 4096 MB, 4294967296 bytes, 8388608 sectors

522 cylinders, 255 heads, 63 sectors/track

Units: sectors of 1 * 512 = 512 bytes

Device Boot StartCHS EndCHS StartLBA EndLBA Sectors Size Id Type

/dev/synoboot1 * 0,32,33 9,78,5 2048 149503 147456 72.0M 83 Linux

/dev/synoboot2 9,78,6 18,188,42 149504 301055 151552 74.0M 83 Linux

/dev/synoboot3 18,188,43 522,42,32 301056 8388607 8087552 3949M 83 Linux

fdisk: device has more than 2^32 sectors, can't use all of them

Disk /dev/sdaf: 2048 GB, 2199023255040 bytes, 4294967295 sectors

267349 cylinders, 255 heads, 63 sectors/track

Units: sectors of 1 * 512 = 512 bytes

Device Boot StartCHS EndCHS StartLBA EndLBA Sectors Size Id Type

/dev/sdaf1 0,0,1 1023,254,63 1 4294967295 4294967295 2047G ee EFI GPT

I am just not sure a re-install would work.
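For what it's worth, the sizes above look consistent with a display limit in this fdisk rather than a shrunken disk: dmesg reports 5860533168 512-byte sectors (3.00 TB), while fdisk warns it cannot use more than 2^32 sectors and only lists the protective GPT entry. A quick sketch of that arithmetic in the shell (the function name is purely illustrative, not a DSM tool):

```shell
# Convert a count of 512-byte sectors to bytes (illustrative helper).
sectors_to_bytes() {
    echo $(( $1 * 512 ))
}

# Sector count reported by dmesg for sdaf
sectors_to_bytes 5860533168   # 3000592982016 bytes, i.e. 3.00 TB

# Largest sector count this fdisk reports (2^32 - 1)
sectors_to_bytes 4294967295   # 2199023255040 bytes, the "2048 GB" shown above
```

So the "2048 GB" figure matches the 2^32 - 1 sector cap exactly and does not by itself say anything about the health of the disk.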

-

44 minutes ago, Orphée said:

Did you try to force a DSM re-install ? (junior mode if I'm not wrong ?)

I suppose I could boot with only the "broken" disk (volume1 not mounting), reinstall and add back volume2?

-

On 1/20/2024 at 8:50 PM, Tibag said:

I tried it and it definitely fixed the SAN Manager package. Thanks!

Nevertheless, my Storage Manager still doesn't start.

I am still unable to find how to fix my Storage Manager. I can't seem to find anyone in the same situation as me. I strongly suspect it's because my volume1 doesn't mount anymore. This may not be the right forum, but I know we have experienced people here: do you know how to either force a remount of volume1 or even destroy it completely? At this stage I don't really care about the data I had on it.

-

4 hours ago, Peter Suh said:

We mentioned the addition of an improved addon on the previous page.

To use this new addon you will need to rebuild your loader.

I tried it and it definitely fixed the SAN Manager package. Thanks!

Nevertheless, my Storage Manager still doesn't start.

-

On 1/15/2024 at 4:49 PM, Tibag said:

Looks like the above is a red herring - I fixed it anyway.

I am trying to understand why storage manager doesn't look happy:

Does that "Invalid serial number" ring a bell?

Hi all,

I haven't managed to progress much with the above. My Storage Manager package still refuses to load. Any suggestions on how to fix it?

-

Hi all,

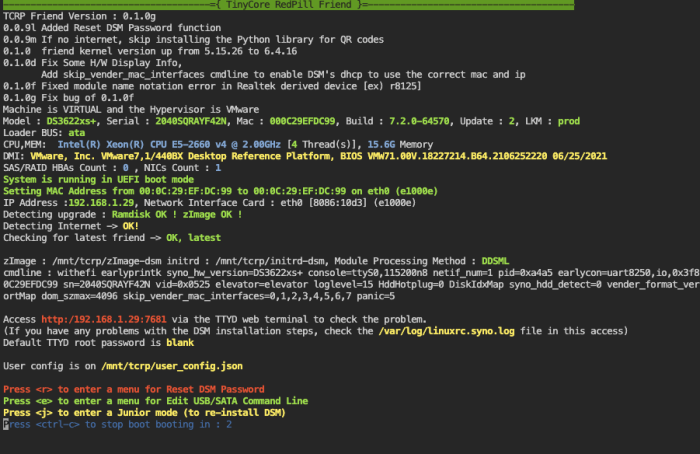

I upgraded to 7.2-64570 Update 3 (hosted on ESXi as a DS3622xs) and after the recovery my Storage Manager doesn't start. I am using TCRP (M-Shell). I suspect it's because my volume1 doesn't load, as the associated disk isn't working (I can't tell why, since I have no Storage Manager).

Quote

root@Diskstation:/var/log# systemctl status synostoraged.service

● synostoraged.service - Synology daemon for monitoring space/disk/cache status

Loaded: loaded (/usr/lib/systemd/system/synostoraged.service; static; vendor preset: disabled)

Active: active (running) since Mon 2024-01-15 16:32:54 GMT; 12min ago

Process: 26275 ExecStart=/usr/syno/sbin/synostoraged (code=exited, status=0/SUCCESS)

Main PID: 26282 (synostoraged)

CGroup: /syno_dsm_storage_manager.slice/synostoraged.service

├─26282 synostoraged

├─26283 synostgd-disk

├─26284 synostgd-space

├─26285 synostgd-cache

├─26287 synostgd-volume

└─26289 synostgd-external-volume

Jan 15 16:32:54 Diskstation [26291]: disk/disk_sql_cmd_valid_check.c:24 Invalid string ../../../33:0:0:0

Jan 15 16:32:54 Diskstation [26291]: disk/disk_error_status_get.c:50 Invalid serial number

Jan 15 16:32:54 Diskstation [26296]: disk/disk_sql_cmd_valid_check.c:24 Invalid string ../../../34:0:0:0

Jan 15 16:32:54 Diskstation [26296]: disk/disk_error_status_get.c:50 Invalid serial number

Jan 15 16:32:54 Diskstation [26291]: disk/disk_sql_cmd_valid_check.c:24 Invalid string ../../../33:0:0:0

Jan 15 16:32:54 Diskstation [26291]: disk/disk_error_status_get.c:50 Invalid serial number

Jan 15 16:32:54 Diskstation [26296]: disk/disk_sql_cmd_valid_check.c:24 Invalid string ../../../34:0:0:0

Jan 15 16:32:54 Diskstation [26296]: disk/disk_error_status_get.c:50 Invalid serial number

Jan 15 16:32:54 Diskstation [26296]: disk/disk_sql_cmd_valid_check.c:24 Invalid string ../../../34:0:0:0

Jan 15 16:32:54 Diskstation [26296]: disk/disk_error_status_get.c:50 Invalid serial number

Obviously restarting doesn't help.

Any ideas, all?

-

Looks like the above is a red herring - I fixed it anyway.

I am trying to understand why storage manager doesn't look happy:

Quote

root@Diskstation:/var/log# systemctl status synostoraged.service

● synostoraged.service - Synology daemon for monitoring space/disk/cache status

Loaded: loaded (/usr/lib/systemd/system/synostoraged.service; static; vendor preset: disabled)

Active: active (running) since Mon 2024-01-15 16:32:54 GMT; 12min ago

Process: 26275 ExecStart=/usr/syno/sbin/synostoraged (code=exited, status=0/SUCCESS)

Main PID: 26282 (synostoraged)

CGroup: /syno_dsm_storage_manager.slice/synostoraged.service

├─26282 synostoraged

├─26283 synostgd-disk

├─26284 synostgd-space

├─26285 synostgd-cache

├─26287 synostgd-volume

└─26289 synostgd-external-volume

Jan 15 16:32:54 Diskstation [26291]: disk/disk_sql_cmd_valid_check.c:24 Invalid string ../../../33:0:0:0

Jan 15 16:32:54 Diskstation [26291]: disk/disk_error_status_get.c:50 Invalid serial number

Jan 15 16:32:54 Diskstation [26296]: disk/disk_sql_cmd_valid_check.c:24 Invalid string ../../../34:0:0:0

Jan 15 16:32:54 Diskstation [26296]: disk/disk_error_status_get.c:50 Invalid serial number

Jan 15 16:32:54 Diskstation [26291]: disk/disk_sql_cmd_valid_check.c:24 Invalid string ../../../33:0:0:0

Jan 15 16:32:54 Diskstation [26291]: disk/disk_error_status_get.c:50 Invalid serial number

Jan 15 16:32:54 Diskstation [26296]: disk/disk_sql_cmd_valid_check.c:24 Invalid string ../../../34:0:0:0

Jan 15 16:32:54 Diskstation [26296]: disk/disk_error_status_get.c:50 Invalid serial number

Jan 15 16:32:54 Diskstation [26296]: disk/disk_sql_cmd_valid_check.c:24 Invalid string ../../../34:0:0:0

Jan 15 16:32:54 Diskstation [26296]: disk/disk_error_status_get.c:50 Invalid serial number

Does that "Invalid serial number" ring a bell?

-

I actually noticed a lot of errors in my syslog like

Quote

[2024-01-15T10:37:44.178538] Error opening file for writing; filename='/var/packages/SynoFinder/var/log/fileindexd.log', error='File exists (17)'

Then I checked that folder:

Quote

root@Diskstation:~# ll /var/packages/SynoFinder/var/log

lrwxrwxrwx 1 root root 24 Mar 9 2022 /var/packages/SynoFinder/var/log -> /volume1/@SynoFinder-log

So that's pointing to a dead path, because my volume1 is not loading (for whatever reason). I wonder if it's preventing the Storage Manager from starting.
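In case other packages have the same problem, here is a minimal sketch for spotting symlinks whose target no longer exists. The function names are made up for illustration, and the `/var/packages/*/var/*` layout is only assumed from the single SynoFinder path shown above:

```shell
# Return 0 if the path is a symlink whose target does not exist.
is_dangling_link() {
    [ -L "$1" ] && [ ! -e "$1" ]
}

# Print every dangling symlink found under <root>/*/var/*.
# The root defaults to /var/packages (assumed layout, see above).
list_dangling_package_links() {
    for link in "${1:-/var/packages}"/*/var/*; do
        if is_dangling_link "$link"; then
            printf '%s -> %s\n' "$link" "$(readlink "$link")"
        fi
    done
}
```

Running `list_dangling_package_links` as root would then list each broken link with its target, which should show quickly whether anything else still points at the unmounted volume1.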

-

36 minutes ago, Orphée said:

You may want to try :

cd /

du -ax | sort -rn | more

To show which folders/files consume the most space, and to check if they are all needed.

Yeah that's a nice way of doing it - I did have a go when I wanted to free my / mount. I can't see a lot jumping out of the ordinary. See attached in case anything catches your eye.

-

7 minutes ago, Peter Suh said:

[ 0.538330] pci 0000:00:0f.0: can't claim BAR 6 [mem 0xffff8000-0xffffffff pref]: no compatible bridge window [ 0.538534] pci 0000:02:02.0: can't claim BAR 6 [mem 0xffff0000-0xffffffff pref]: no compatible bridge window [ 0.538749] pci 0000:02:03.0: can't claim BAR 6 [mem 0xffff0000-0xffffffff pref]: no compatible bridge window [ 0.538991] pci 0000:0b:00.0: can't claim BAR 6 [mem 0xffff0000-0xffffffff pref]: no compatible bridge window [ 0.539323] pci 0000:00:15.0: bridge window [io 0x1000-0x0fff] to [bus 03] add_size 1000 [ 0.539499] pci 0000:00:15.1: bridge window [io 0x1000-0x0fff] to [bus 04] add_size 1000 [ 0.539745] pci 0000:00:15.2: bridge window [io 0x1000-0x0fff] to [bus 05] add_size 1000 [ 0.539963] pci 0000:00:15.3: bridge window [io 0x1000-0x0fff] to [bus 06] add_size 1000 [ 0.540193] pci 0000:00:15.4: bridge window [io 0x1000-0x0fff] to [bus 07] add_size 1000 [ 0.540404] pci 0000:00:15.5: bridge window [io 0x1000-0x0fff] to [bus 08] add_size 1000 [ 0.540617] pci 0000:00:15.6: bridge window [io 0x1000-0x0fff] to [bus 09] add_size 1000 [ 0.540849] pci 0000:00:15.7: bridge window [io 0x1000-0x0fff] to [bus 0a] add_size 1000 [ 0.541110] pci 0000:00:16.1: bridge window [io 0x1000-0x0fff] to [bus 0c] add_size 1000 [ 0.541321] pci 0000:00:16.2: bridge window [io 0x1000-0x0fff] to [bus 0d] add_size 1000 [ 0.541529] pci 0000:00:16.3: bridge window [io 0x1000-0x0fff] to [bus 0e] add_size 1000 [ 0.541740] pci 0000:00:16.4: bridge window [io 0x1000-0x0fff] to [bus 0f] add_size 1000 [ 0.541975] pci 0000:00:16.5: bridge window [io 0x1000-0x0fff] to [bus 10] add_size 1000 [ 0.542203] pci 0000:00:16.6: bridge window [io 0x1000-0x0fff] to [bus 11] add_size 1000 [ 0.542414] pci 0000:00:16.7: bridge window [io 0x1000-0x0fff] to [bus 12] add_size 1000 [ 0.542639] pci 0000:00:17.0: bridge window [io 0x1000-0x0fff] to [bus 13] add_size 1000 [ 0.542869] pci 0000:00:17.1: bridge window [io 0x1000-0x0fff] to [bus 14] add_size 1000 [ 0.543080] pci 0000:00:17.2: bridge window 
[io 0x1000-0x0fff] to [bus 15] add_size 1000 [ 0.543307] pci 0000:00:17.3: bridge window [io 0x1000-0x0fff] to [bus 16] add_size 1000 [ 0.543541] pci 0000:00:17.4: bridge window [io 0x1000-0x0fff] to [bus 17] add_size 1000 [ 0.544037] pci 0000:00:15.0: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.544225] pci 0000:00:15.0: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.544422] pci 0000:00:15.1: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.544643] pci 0000:00:15.1: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.544873] pci 0000:00:15.2: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.545067] pci 0000:00:15.2: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.545247] pci 0000:00:15.3: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.545439] pci 0000:00:15.3: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.545608] pci 0000:00:15.4: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.545811] pci 0000:00:15.4: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.546005] pci 0000:00:15.5: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.546233] pci 0000:00:15.5: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.546488] pci 0000:00:15.6: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.546711] pci 0000:00:15.6: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.546923] pci 0000:00:15.7: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.547103] pci 0000:00:15.7: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.547300] pci 0000:00:16.1: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.547513] pci 0000:00:16.1: res[13]=[io 0x1000-0x1fff] 
res_to_dev_res add_size 1000 min_align 1000 [ 0.547769] pci 0000:00:16.2: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.547980] pci 0000:00:16.2: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.548199] pci 0000:00:16.3: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.548379] pci 0000:00:16.3: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.548545] pci 0000:00:16.4: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.548725] pci 0000:00:16.4: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.548929] pci 0000:00:16.5: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.549122] pci 0000:00:16.5: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.549336] pci 0000:00:16.6: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.549525] pci 0000:00:16.6: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.549711] pci 0000:00:16.7: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.549928] pci 0000:00:16.7: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.550145] pci 0000:00:17.0: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.550379] pci 0000:00:17.0: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.550561] pci 0000:00:17.1: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.550747] pci 0000:00:17.1: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.550944] pci 0000:00:17.2: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.551123] pci 0000:00:17.2: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.551303] pci 0000:00:17.3: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.551482] pci 0000:00:17.3: 
res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.551682] pci 0000:00:17.4: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.551889] pci 0000:00:17.4: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000 [ 0.552078] pci 0000:00:0f.0: BAR 6: assigned [mem 0xff100000-0xff107fff pref] [ 0.552229] pci 0000:00:15.0: BAR 13: no space for [io size 0x1000] [ 0.552360] pci 0000:00:15.0: BAR 13: failed to assign [io size 0x1000] [ 0.552501] pci 0000:00:15.1: BAR 13: no space for [io size 0x1000] [ 0.552624] pci 0000:00:15.1: BAR 13: failed to assign [io size 0x1000] [ 0.552765] pci 0000:00:15.2: BAR 13: no space for [io size 0x1000] [ 0.552913] pci 0000:00:15.2: BAR 13: failed to assign [io size 0x1000] [ 0.553059] pci 0000:00:15.3: BAR 13: no space for [io size 0x1000] [ 0.553209] pci 0000:00:15.3: BAR 13: failed to assign [io size 0x1000] [ 0.553349] pci 0000:00:15.4: BAR 13: no space for [io size 0x1000] [ 0.553480] pci 0000:00:15.4: BAR 13: failed to assign [io size 0x1000] [ 0.553613] pci 0000:00:15.5: BAR 13: no space for [io size 0x1000] [ 0.553743] pci 0000:00:15.5: BAR 13: failed to assign [io size 0x1000] [ 0.553905] pci 0000:00:15.6: BAR 13: no space for [io size 0x1000] [ 0.554041] pci 0000:00:15.6: BAR 13: failed to assign [io size 0x1000] [ 0.554210] pci 0000:00:15.7: BAR 13: no space for [io size 0x1000] [ 0.554336] pci 0000:00:15.7: BAR 13: failed to assign [io size 0x1000] [ 0.554489] pci 0000:00:16.1: BAR 13: no space for [io size 0x1000] [ 0.554621] pci 0000:00:16.1: BAR 13: failed to assign [io size 0x1000] [ 0.554767] pci 0000:00:16.2: BAR 13: no space for [io size 0x1000] [ 0.554959] pci 0000:00:16.2: BAR 13: failed to assign [io size 0x1000] [ 0.555105] pci 0000:00:16.3: BAR 13: no space for [io size 0x1000] [ 0.555253] pci 0000:00:16.3: BAR 13: failed to assign [io size 0x1000] [ 0.555393] pci 0000:00:16.4: BAR 13: no space for [io size 0x1000] [ 0.555521] pci 0000:00:16.4: BAR 13: 
failed to assign [io size 0x1000] [ 0.555675] pci 0000:00:16.5: BAR 13: no space for [io size 0x1000] [ 0.555801] pci 0000:00:16.5: BAR 13: failed to assign [io size 0x1000] [ 0.555947] pci 0000:00:16.6: BAR 13: no space for [io size 0x1000] [ 0.556068] pci 0000:00:16.6: BAR 13: failed to assign [io size 0x1000] [ 0.556199] pci 0000:00:16.7: BAR 13: no space for [io size 0x1000] [ 0.556320] pci 0000:00:16.7: BAR 13: failed to assign [io size 0x1000] [ 0.556451] pci 0000:00:17.0: BAR 13: no space for [io size 0x1000] [ 0.556537] pci 0000:00:17.0: BAR 13: failed to assign [io size 0x1000] [ 0.556673] pci 0000:00:17.1: BAR 13: no space for [io size 0x1000] [ 0.556816] pci 0000:00:17.1: BAR 13: failed to assign [io size 0x1000] [ 0.556956] pci 0000:00:17.2: BAR 13: no space for [io size 0x1000] [ 0.557121] pci 0000:00:17.2: BAR 13: failed to assign [io size 0x1000] [ 0.557280] pci 0000:00:17.3: BAR 13: no space for [io size 0x1000] [ 0.557482] pci 0000:00:17.3: BAR 13: failed to assign [io size 0x1000] [ 0.557611] pci 0000:00:17.4: BAR 13: no space for [io size 0x1000] [ 0.557756] pci 0000:00:17.4: BAR 13: failed to assign [io size 0x1000] [ 0.557920] pci 0000:00:17.4: BAR 13: no space for [io size 0x1000] [ 0.558067] pci 0000:00:17.4: BAR 13: failed to assign [io size 0x1000] [ 0.558219] pci 0000:00:17.3: BAR 13: no space for [io size 0x1000] [ 0.558345] pci 0000:00:17.3: BAR 13: failed to assign [io size 0x1000] [ 0.558480] pci 0000:00:17.2: BAR 13: no space for [io size 0x1000] [ 0.558616] pci 0000:00:17.2: BAR 13: failed to assign [io size 0x1000] [ 0.558756] pci 0000:00:17.1: BAR 13: no space for [io size 0x1000] [ 0.558898] pci 0000:00:17.1: BAR 13: failed to assign [io size 0x1000] [ 0.559034] pci 0000:00:17.0: BAR 13: no space for [io size 0x1000] [ 0.559160] pci 0000:00:17.0: BAR 13: failed to assign [io size 0x1000] [ 0.559295] pci 0000:00:16.7: BAR 13: no space for [io size 0x1000] [ 0.559455] pci 0000:00:16.7: BAR 13: failed to assign [io size 0x1000] [ 
0.559592] pci 0000:00:16.6: BAR 13: no space for [io size 0x1000] [ 0.559749] pci 0000:00:16.6: BAR 13: failed to assign [io size 0x1000] [ 0.559931] pci 0000:00:16.5: BAR 13: no space for [io size 0x1000] [ 0.560053] pci 0000:00:16.5: BAR 13: failed to assign [io size 0x1000] [ 0.560184] pci 0000:00:16.4: BAR 13: no space for [io size 0x1000] [ 0.560305] pci 0000:00:16.4: BAR 13: failed to assign [io size 0x1000] [ 0.560436] pci 0000:00:16.3: BAR 13: no space for [io size 0x1000] [ 0.560528] pci 0000:00:16.3: BAR 13: failed to assign [io size 0x1000] [ 0.560665] pci 0000:00:16.2: BAR 13: no space for [io size 0x1000] [ 0.560809] pci 0000:00:16.2: BAR 13: failed to assign [io size 0x1000] [ 0.560960] pci 0000:00:16.1: BAR 13: no space for [io size 0x1000] [ 0.561099] pci 0000:00:16.1: BAR 13: failed to assign [io size 0x1000] [ 0.561230] pci 0000:00:15.7: BAR 13: no space for [io size 0x1000] [ 0.561351] pci 0000:00:15.7: BAR 13: failed to assign [io size 0x1000] [ 0.561482] pci 0000:00:15.6: BAR 13: no space for [io size 0x1000] [ 0.561592] pci 0000:00:15.6: BAR 13: failed to assign [io size 0x1000] [ 0.561733] pci 0000:00:15.5: BAR 13: no space for [io size 0x1000] [ 0.561887] pci 0000:00:15.5: BAR 13: failed to assign [io size 0x1000] [ 0.562073] pci 0000:00:15.4: BAR 13: no space for [io size 0x1000] [ 0.562208] pci 0000:00:15.4: BAR 13: failed to assign [io size 0x1000] [ 0.562378] pci 0000:00:15.3: BAR 13: no space for [io size 0x1000] [ 0.562504] pci 0000:00:15.3: BAR 13: failed to assign [io size 0x1000] [ 0.562644] pci 0000:00:15.2: BAR 13: no space for [io size 0x1000] [ 0.562774] pci 0000:00:15.2: BAR 13: failed to assign [io size 0x1000] [ 0.562915] pci 0000:00:15.1: BAR 13: no space for [io size 0x1000] [ 0.563058] pci 0000:00:15.1: BAR 13: failed to assign [io size 0x1000] [ 0.563209] pci 0000:00:15.0: BAR 13: no space for [io size 0x1000] [ 0.563340] pci 0000:00:15.0: BAR 13: failed to assign [io size 0x1000]

I suspect this part in dmesg.

I think the lack of IO space is related to not being able to open Disk Manager.

https://bugzilla.redhat.com/show_bug.cgi?id=1334867

Isn't there more room somewhere to cause a space shortage?

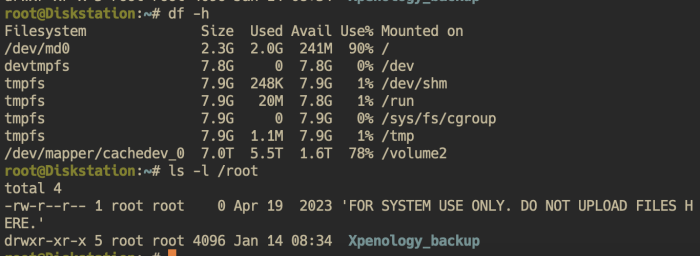

Hm good catch. I will try googling around that. Regarding the storage here is my df:

Quote

root@Diskstation:~# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/md0 2.3G 1.6G 591M 74% /

devtmpfs 7.8G 0 7.8G 0% /dev

tmpfs 7.9G 248K 7.9G 1% /dev/shm

tmpfs 7.9G 19M 7.8G 1% /run

tmpfs 7.9G 0 7.9G 0% /sys/fs/cgroup

tmpfs 7.9G 1.1M 7.9G 1% /tmp

/dev/mapper/cachedev_0 7.0T 5.5T 1.6T 78% /volume2

So nothing obvious here.

-

I'm adding a log from dmesg; there is a lot of SATA-related output in it. Can you see anything you are familiar with?

-

Right, so initially I had removed the disk that wasn't showing up anymore, and I have now put it back in ESXi. That allowed me to see which one it is in /dev:

Quote

root@Diskstation:~# ll /dev/sd*

brw------- 1 root root 65, 224 Jan 15 13:19 /dev/sdae

brw------- 1 root root 65, 225 Jan 15 13:19 /dev/sdae1

brw------- 1 root root 65, 226 Jan 15 13:19 /dev/sdae2

brw------- 1 root root 65, 229 Jan 15 13:19 /dev/sdae5

brw------- 1 root root 66, 0 Jan 15 13:19 /dev/sdag

brw------- 1 root root 66, 1 Jan 15 13:19 /dev/sdag1

brw------- 1 root root 66, 2 Jan 15 13:19 /dev/sdag2

brw------- 1 root root 66, 5 Jan 15 13:19 /dev/sdag5

brw------- 1 root root 66, 16 Jan 15 13:19 /dev/sdah

brw------- 1 root root 66, 17 Jan 15 13:19 /dev/sdah1

brw------- 1 root root 66, 18 Jan 15 13:19 /dev/sdah2

brw------- 1 root root 66, 21 Jan 15 13:19 /dev/sdah5

brw------- 1 root root 66, 32 Jan 15 13:19 /dev/sdai

brw------- 1 root root 66, 33 Jan 15 13:19 /dev/sdai1

brw------- 1 root root 66, 34 Jan 15 13:19 /dev/sdai2

brw------- 1 root root 66, 37 Jan 15 13:19 /dev/sdai5

So it's sdae. I scanned them all through smartctl and they all return

Quote

=== START OF READ SMART DATA SECTION ===

SMART Health Status: OK

So somehow it's not bothered here.
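For reference, the scan can be scripted so partitions such as sdae1 are skipped and only whole disks are checked. This is just a sketch: the function names are illustrative, and it assumes the disks show up as /dev/sd* as in the listing above (not /dev/sata*):

```shell
# Return 0 for whole-disk names like /dev/sdae, 1 for partitions like /dev/sdae1.
is_whole_disk() {
    case "$1" in
        /dev/sd*[0-9]) return 1 ;;   # ends in a digit: a partition
        /dev/sd[a-z]*) return 0 ;;   # letters only: a whole disk
        *) return 1 ;;               # anything else is ignored
    esac
}

# Run the SMART health check on every whole disk (needs root and smartctl).
check_all_disks() {
    for dev in /dev/sd*; do
        if is_whole_disk "$dev"; then
            echo "== $dev"
            smartctl -H "$dev"
        fi
    done
}
```

Calling `check_all_disks` from a root shell prints one health section per disk, which makes it easier to compare sdae against the others.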

-

5 minutes ago, Peter Suh said:

Connect via SSH and check the health of the disks with the command below.

sudo -i

ll /dev/sata*

smartctl -H /dev/sata1

smartctl -H /dev/sata2

smartctl -H /dev/sata3

...

There is nothing under "sata":

Quote

root@Diskstation:~# ll /dev/sata*

ls: cannot access '/dev/sata*': No such file or directory

I suspect it's all under sda, no?

Quote

root@Diskstation:~# ll /dev/sda*

brw------- 1 root root 66, 0 Jan 15 10:54 /dev/sdag

brw------- 1 root root 66, 1 Jan 15 10:54 /dev/sdag1

brw------- 1 root root 66, 2 Jan 15 10:54 /dev/sdag2

brw------- 1 root root 66, 5 Jan 15 10:54 /dev/sdag5

brw------- 1 root root 66, 16 Jan 15 10:54 /dev/sdah

brw------- 1 root root 66, 17 Jan 15 10:54 /dev/sdah1

brw------- 1 root root 66, 18 Jan 15 10:54 /dev/sdah2

brw------- 1 root root 66, 21 Jan 15 10:54 /dev/sdah5

brw------- 1 root root 66, 32 Jan 15 10:54 /dev/sdai

brw------- 1 root root 66, 33 Jan 15 10:54 /dev/sdai1

brw------- 1 root root 66, 34 Jan 15 10:54 /dev/sdai2

brw------- 1 root root 66, 37 Jan 15 10:54 /dev/sdai5

-

Thanks all - so I cleared out the directory and gave DSM a restart. No issues / errors during the boot, all good. Now the UI doesn't complain about the lack of storage on the update panel.

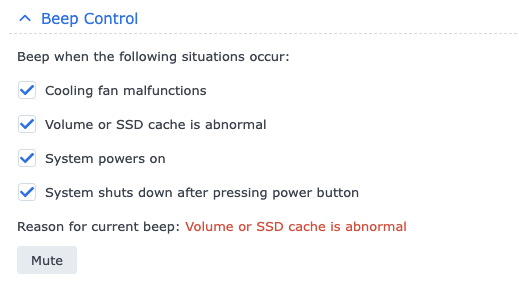

But Storage Manager still doesn't load. I suspect it's related to the missing disk from my volume 1:

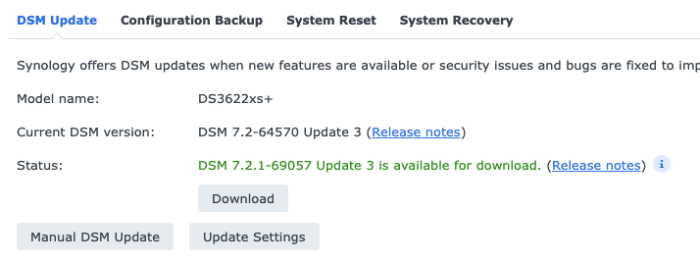

Any suggested fix? Would you recommend upgrading to 7.2.1?

-

4 hours ago, Peter Suh said:

There is too little space.

First, to update DSM, a Pat file larger than 300Mbytes is written to /root, which may cause a file corruption error.

The file download stops in the middle.

Search for ways to secure space in Synology /dev/md0 on the Internet and apply them.

Yep, I started to clear stuff out. In your experience, can `/upd@te/` be removed? It looks like an old update folder.

-

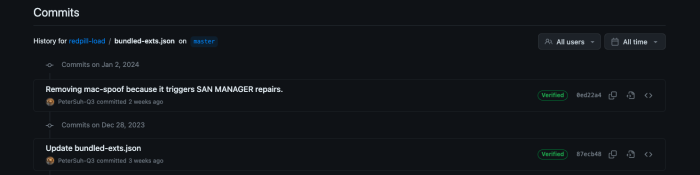

1 hour ago, Peter Suh said:

Have you rebuilt and used the TCRP-mshell loader between December 28 and January 2 ?

There was an issue with SAN MANAGER being corrupted only if the loader was built during these 5 days.

However, my Mac-Spoof addon may not necessarily be the cause.

Recently, I experienced SAN Manager being damaged for several reasons.

For example, if the SHR raid is compromised, the SAN MANAGER is also compromised. I think Synology should take some action.

The SAN MANAGER seems to be damaged too easily.

Well, mine was installed this week, so well after that window, and SAN Manager is un-repairable.

Does it make Storage Manager unable to start too?

-

1 hour ago, Peter Suh said:

If so, proceed as you see fit. All you need to do is run the ramdisk patch (postupdate) no matter what loader you use.

Right, I rebuilt a new image with yours and it recovered successfully!

Well, not fully. It says one of my disks didn't load on my first volume. I suspect it's the one I tried to mount manually... Anyhow, before I can drill into it, Storage Manager doesn't load. It shows in the top bar as "loading". In my package manager I can see "SAN Manager" needing a repair, but it loops whenever I hit repair. Is this a known issue?

-

1 hour ago, Peter Suh said:

pocopico's friend is an old version. The ramdisk patch, which means automatic postupdate, may not be desired. Try changing to mshell.

To switch to mshell you mean switching back to your fork? Build a new loader? I don't think it allows me to pick 64570.

-

5 minutes ago, Peter Suh said:

At the very end of this log, you can see that a smallfixnumber mismatch has been detected. This is a completely normal detection. Now, normal ramdisk patching should proceed on the Friend kernel. It appears in yellow letters. Can you take a screenshot of this screen and show me?

Well, because I am back to my previous loader, it's the old one (Version : 0.10.0.0), not your own build. So Friend doesn't load automatically, I think?

Do I need to do a postupdate maybe? Or use the Friend entry from my Grub?

Thanks for keeping up the help!

-

2 hours ago, Peter Suh said:

In a recovery action, essentially nothing happens.

Does the recovery happen over and over again? If so, please upload the junior log like you did yesterday.

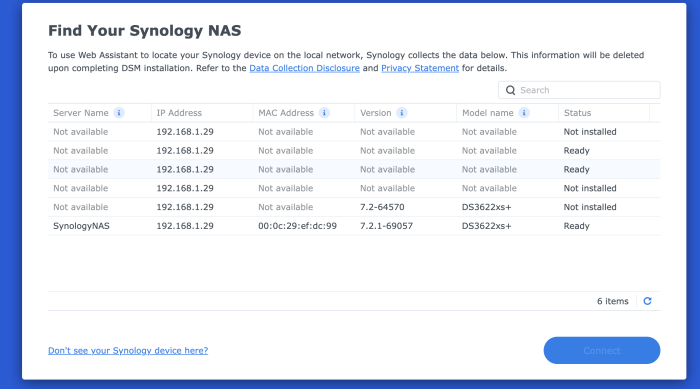

Yes, it always brings up the recovery screen when it comes back.

Find attached the logs, hopefully it shows something useful!

Oddly, on the find synology I get:

-

1 minute ago, Peter Suh said:

Please check the parts that were problematic yesterday.

Is the /root directory really full?

And above all, back up your DSM settings separately.

If full backup is not possible, at least perform a selective backup of only packages using hyperbackup.

I make these backups every day on Google Drive.

It can be used for recovery if the DSM of the system partition is initialized due to an unexpected accident.

I think you should focus more on preparing in advance rather than upgrading.

Thanks, will do. Regarding backups I have cloud backup of the data, settings too, so no risk here (apart from more time wasted).

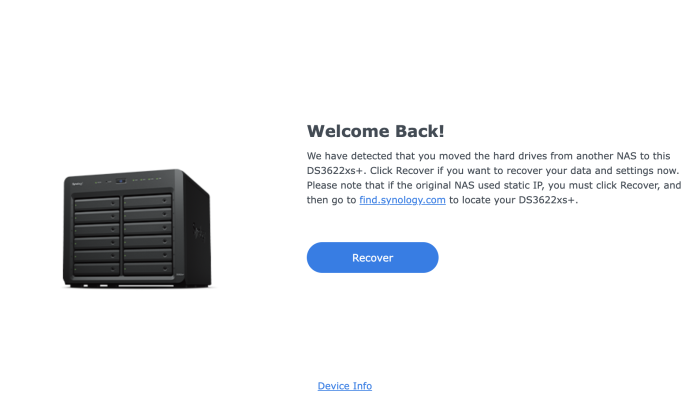

Actually the recovery doesn't work, oddly. I get that screen:

Then, after the restart (I can see it reboot), nothing happens.

Any idea why?

Storage manager failing

in General Post-Installation Questions/Discussions (non-hardware specific)

I eventually fixed my issue by upgrading to 7.2.1. When the system came back, Storage Manager crashed one more time, then a restart fixed it. Storage Manager was complaining that my volume was missing data scrubbing, which may have been why it was "unhealthy". Also, when it booted it complained that the disk DB couldn't identify the disks, so that could have been another clue!