sarieri

-

Posts

32 -

Posts posted by sarieri

-

2 hours ago, IG-88 said:

yes, but you can also append it manually to the arguments in "set common_args_918"

Now this is interesting. I tried 3617 with jun's loader 1.03 and it works. I have no idea why the 918+ works differently.

-

6 minutes ago, IG-88 said:

yes, but you can also append it manually to the arguments in "set common_args_918"

Not working... Well, I did some research on Google and maybe I will give it a try later. For now, KVM and Unraid pass the AHCI controller through fine, but ESXi does not. It might be something wrong with the reset mode (d3d0) of the controller.

-

15 minutes ago, IG-88 said:

maybe try adding the following to the grub.cfg as additional kernel parameter

intel_iommu=on iommu=soft

Should that be added here? set extra_args_918='intel_iommu=on iommu=soft'
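For reference, the edit being asked about can be sketched in Python. This is only an illustration: the helper name `append_kernel_args` is made up, and it assumes the loader's grub.cfg holds a single-quoted `set extra_args_918='...'` line like the one quoted above.

```python
import re


def append_kernel_args(grub_cfg: str, variable: str, new_args: list[str]) -> str:
    """Append kernel arguments to a single-quoted `set <variable>='...'` line.

    Hypothetical helper for illustration; arguments already present are skipped.
    """
    pattern = re.compile(rf"^(\s*set {re.escape(variable)}=')([^']*)(')", re.MULTILINE)

    def repl(match: re.Match) -> str:
        current = match.group(2).split()
        merged = current + [arg for arg in new_args if arg not in current]
        return match.group(1) + " ".join(merged) + match.group(3)

    updated, count = pattern.subn(repl, grub_cfg)
    if count == 0:
        raise ValueError(f"no `set {variable}='...'` line found")
    return updated


sample_cfg = "set extra_args_918=''\n"
print(append_kernel_args(sample_cfg, "extra_args_918", ["intel_iommu=on", "iommu=soft"]))
```

The same helper could be pointed at `common_args_918` instead, which is the variable IG-88 mentions elsewhere in the thread.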

-

2 minutes ago, IG-88 said:

i'd say no, the ahci driver can't establish a connection to the device

This is weird, the controller itself must be okay since I passed through them to DSM in UNRAID before. So this might be something related to ESXI.

-

2 minutes ago, IG-88 said:

1st controller is vmwares own sata

one boot disk, two other

    [Sun Jun 28 01:24:51 2020] sd 0:0:0:0: [sda] 102400 512-byte logical blocks: (52.4 MB/50.0 MiB)
    ...
    [Sun Jun 28 01:24:52 2020] sd 1:0:0:0: [sdb] 4000797360 512-byte logical blocks: (2.05 TB/1.86 TiB)
    ...
    [Sun Jun 28 01:24:52 2020] sd 2:0:0:0: [sdc] 12502446768 512-byte logical blocks: (6.40 TB/5.82 TiB)

that's the 6 port sata pass through

    [Sun Jun 28 01:24:52 2020] ata5: SATA max UDMA/133 abar m2048@0xfd2ff000 port 0xfd2ff100 irq 57
    [Sun Jun 28 01:24:52 2020] ata6: SATA max UDMA/133 abar m2048@0xfd2ff000 port 0xfd2ff180 irq 57
    [Sun Jun 28 01:24:52 2020] ata7: SATA max UDMA/133 abar m2048@0xfd2ff000 port 0xfd2ff200 irq 57
    [Sun Jun 28 01:24:52 2020] ata8: SATA max UDMA/133 abar m2048@0xfd2ff000 port 0xfd2ff280 irq 57
    [Sun Jun 28 01:24:52 2020] ata9: SATA max UDMA/133 abar m2048@0xfd2ff000 port 0xfd2ff300 irq 57
    [Sun Jun 28 01:24:52 2020] ata10: SATA max UDMA/133 abar m2048@0xfd2ff000 port 0xfd2ff380 irq 57

and these are the errors to look for and resolve

    [Sun Jun 28 01:24:52 2020] ata5: SATA link up 3.0 Gbps (SStatus 123 SControl 300)
    [Sun Jun 28 01:24:53 2020] clocksource: Switched to clocksource tsc
    [Sun Jun 28 01:24:57 2020] ata5.00: qc timeout (cmd 0x27)
    [Sun Jun 28 01:24:57 2020] ata5.00: failed to read native max address (err_mask=0x4)
    [Sun Jun 28 01:24:57 2020] ata5.00: HPA support seems broken, skipping HPA handling
    [Sun Jun 28 01:24:58 2020] ata5: SATA link up 3.0 Gbps (SStatus 123 SControl 300)
    [Sun Jun 28 01:25:03 2020] ata5.00: qc timeout (cmd 0xec)
    [Sun Jun 28 01:25:03 2020] ata5.00: failed to IDENTIFY (I/O error, err_mask=0x4)
    [Sun Jun 28 01:25:03 2020] ata5: limiting SATA link speed to 1.5 Gbps
    [Sun Jun 28 01:25:04 2020] ata5: SATA link up 1.5 Gbps (SStatus 113 SControl 310)
    [Sun Jun 28 01:25:14 2020] ata5.00: qc timeout (cmd 0xec)
    [Sun Jun 28 01:25:14 2020] ata5.00: failed to IDENTIFY (I/O error, err_mask=0x4)
    [Sun Jun 28 01:25:14 2020] ata5: SATA link up 1.5 Gbps (SStatus 113 SControl 310)
    ...
    [Sun Jun 28 01:28:20 2020] ata10: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
    [Sun Jun 28 01:28:25 2020] ata10.00: qc timeout (cmd 0xec)
    [Sun Jun 28 01:28:25 2020] ata10.00: failed to IDENTIFY (I/O error, err_mask=0x4)
    [Sun Jun 28 01:28:25 2020] ata10: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
    [Sun Jun 28 01:28:35 2020] ata10.00: qc timeout (cmd 0xec)
    [Sun Jun 28 01:28:35 2020] ata10.00: failed to IDENTIFY (I/O error, err_mask=0x4)
    [Sun Jun 28 01:28:35 2020] ata10: limiting SATA link speed to 3.0 Gbps
    [Sun Jun 28 01:28:35 2020] ata10: SATA link up 3.0 Gbps (SStatus 123 SControl 320)
    [Sun Jun 28 01:29:05 2020] ata10.00: qc timeout (cmd 0xec)
    [Sun Jun 28 01:29:05 2020] ata10.00: failed to IDENTIFY (I/O error, err_mask=0x4)
    [Sun Jun 28 01:29:05 2020] ata10: SATA link up 3.0 Gbps (SStatus 123 SControl 320)

So this has nothing to do with the configuration of set extra_args_918 in grub.cfg, right?
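To make the pattern in these errors easier to see, here is a minimal Python sketch that counts the IDENTIFY failures per port and decodes the SStatus values. It assumes the usual SATA SStatus layout (DET in bits 3:0, SPD in bits 7:4, IPM in bits 11:8) and that libata prints the register in hex; the function names are made up for illustration.

```python
import re
from collections import Counter

# SPD field of the SATA SStatus register (bits 7:4) -> negotiated link speed.
SPEEDS = {1: "1.5 Gbps", 2: "3.0 Gbps", 3: "6.0 Gbps"}


def decode_sstatus(hex_text: str) -> dict:
    """Split an SStatus value (as printed in dmesg) into its three fields."""
    value = int(hex_text, 16)
    return {
        "det": value & 0xF,         # 3 = device detected and PHY link established
        "speed": SPEEDS.get((value >> 4) & 0xF, "unknown"),
        "ipm": (value >> 8) & 0xF,  # 1 = interface in active state
    }


def failed_identify_counts(dmesg_text: str) -> Counter:
    """Count 'failed to IDENTIFY' messages per ATA device (e.g. 'ata5.00')."""
    return Counter(re.findall(r"(ata\d+\.\d+): failed to IDENTIFY", dmesg_text))


sample = """\
[Sun Jun 28 01:25:03 2020] ata5.00: failed to IDENTIFY (I/O error, err_mask=0x4)
[Sun Jun 28 01:25:04 2020] ata5: SATA link up 1.5 Gbps (SStatus 113 SControl 310)
[Sun Jun 28 01:25:14 2020] ata5.00: failed to IDENTIFY (I/O error, err_mask=0x4)
[Sun Jun 28 01:28:25 2020] ata10.00: failed to IDENTIFY (I/O error, err_mask=0x4)
"""
print(failed_identify_counts(sample))
print(decode_sstatus("113"))
```

Read this way, the log shows the link itself coming up (DET = 3) while the commands sent over it time out, and the kernel stepping the link speed down in response.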

-

14 minutes ago, IG-88 said:

i would google those error messages, might not be synology related

also i never had a case where disks were not visible in dmesg and anything in grub.cfg made them available, i only know that as a way to rearrange already visible drives (like synology does to give them proper order to match hardware slots and software slots in dsm)

can't say much as you have not posted your dmesg, but when nvme is done by pcie passthrough then it would need a patch in dsm to make non-synology nvme hardware work

https://xpenology.com/forum/topic/13342-nvme-cache-support/page/3/?tab=comments#comment-126755

if its mapped as rdm then it should be visible and usable (check the forum search, that was the old way to use nvme disks before the native way worked)

Hi,

Here is the full dmesg... I thought that vim would show the full version... my bad.

    [Sun Jun 28 01:24:51 2020] pci 0000:13:00.0: Signaling PME through PCIe PME interrupt
    .....
    [Sun Jun 28 01:24:52 2020] ahci 0000:13:00.0: AHCI 0001.0300 32 slots 6 ports 6 Gbps 0x3f impl SATA mode
    [Sun Jun 28 01:24:52 2020] ahci 0000:13:00.0: flags: 64bit ncq led clo pio slum part ems apst
    [Sun Jun 28 01:24:52 2020] scsi host4: ahci
    [Sun Jun 28 01:24:52 2020] scsi host5: ahci
    [Sun Jun 28 01:24:52 2020] scsi host6: ahci
    [Sun Jun 28 01:24:52 2020] scsi host7: ahci
    [Sun Jun 28 01:24:52 2020] scsi host8: ahci
    [Sun Jun 28 01:24:52 2020] scsi host9: ahci

The only part that mentions "0000:13:00.0" was this.
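As a side note on the `0x3f impl` field in the ahci line: it is a bitmask of implemented ports, one bit per port. A tiny Python sketch (the function name is made up) shows that it matches the "6 ports" and the six scsi hosts listed:

```python
def implemented_ports(mask: int) -> list[int]:
    """Return the indices of the bits set in an AHCI 'ports implemented' mask."""
    return [bit for bit in range(32) if mask & (1 << bit)]


print(implemented_ports(0x3F))  # [0, 1, 2, 3, 4, 5]
```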

-

2 minutes ago, IG-88 said:

and the proper driver is loaded/used (ahci)

next stop dmesg and compare this with the dmesg results later when tuning switches in grub.cfg

Does "failed to assign" mean that there's no space left for the AHCI controller, so that I need to edit the "set extra_args_918" line in grub.cfg? I looked into disk mapping issues a while ago but never really understood how this actually works...

It seems to me that there are only two controllers for DSM in my case. One is the virtual controller connected to the loader disk and the two RDM passthrough NVMe drives; the other is the passed-through AHCI controller.

-

19 minutes ago, IG-88 said:

the step with lspci was about to check that the driver is used for the device and next would be to check /var/log/dmesg about the disk(s) connected to this controller

    Sarieri@Sarieri:/$ lspci -k
    0000:00:00.0 Class 0600: Device 8086:7190 (rev 01)
        Subsystem: Device 15ad:1976
        Kernel driver in use: agpgart-intel
    0000:00:01.0 Class 0604: Device 8086:7191 (rev 01)
    0000:00:07.0 Class 0601: Device 8086:7110 (rev 08)
        Subsystem: Device 15ad:1976
    0000:00:07.1 Class 0101: Device 8086:7111 (rev 01)
        Subsystem: Device 15ad:1976
    0000:00:07.3 Class 0680: Device 8086:7113 (rev 08)
        Subsystem: Device 15ad:1976
    0000:00:07.7 Class 0880: Device 15ad:0740 (rev 10)
        Subsystem: Device 15ad:0740
    0000:00:0f.0 Class 0300: Device 15ad:0405
        Subsystem: Device 15ad:0405
    0000:00:11.0 Class 0604: Device 15ad:0790 (rev 02)
    0000:00:15.0 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:15.1 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:15.2 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:15.3 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:15.4 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:15.5 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:15.6 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:15.7 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:16.0 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:16.1 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:16.2 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:16.3 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:16.4 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:16.5 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:16.6 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:16.7 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:17.0 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:17.1 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:17.2 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:17.3 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:17.4 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:17.5 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:17.6 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:17.7 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:18.0 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:18.1 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:18.2 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:18.3 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:18.4 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:18.5 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:18.6 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:00:18.7 Class 0604: Device 15ad:07a0 (rev 01)
        Kernel driver in use: pcieport
    0000:02:00.0 Class 0c03: Device 15ad:0774
        Subsystem: Device 15ad:1976
        Kernel driver in use: uhci_hcd
    0000:02:01.0 Class 0c03: Device 15ad:0770
        Subsystem: Device 15ad:0770
        Kernel driver in use: ehci-pci
    0000:02:03.0 Class 0106: Device 15ad:07e0
        Subsystem: Device 15ad:07e0
        Kernel driver in use: ahci
    0000:03:00.0 Class 0200: Device 8086:10fb (rev 01)
        Subsystem: Device 8086:000c
        Kernel driver in use: ixgbe
    0000:0b:00.0 Class 0200: Device 8086:10fb (rev 01)
        Subsystem: Device 8086:000c
        Kernel driver in use: ixgbe
    0000:13:00.0 Class 0106: Device 8086:8d02 (rev 05)
        Subsystem: Device 15d9:0832
        Kernel driver in use: ahci
    0001:00:12.0 Class 0000: Device 8086:5ae3 (rev ff)
    0001:00:13.0 Class 0000: Device 8086:5ad8 (rev ff)
    0001:00:14.0 Class 0000: Device 8086:5ad6 (rev ff)
    0001:00:15.0 Class 0000: Device 8086:5aa8 (rev ff)
    0001:00:16.0 Class 0000: Device 8086:5aac (rev ff)
    0001:00:18.0 Class 0000: Device 8086:5abc (rev ff)
    0001:00:19.2 Class 0000: Device 8086:5ac6 (rev ff)
    0001:00:1f.1 Class 0000: Device 8086:5ad4 (rev ff)
    0001:01:00.0 Class 0000: Device 1b4b:9215 (rev ff)
    0001:02:00.0 Class 0000: Device 8086:1539 (rev ff)
    0001:03:00.0 Class 0000: Device 8086:1539 (rev ff)

So, the AHCI controller is found by DSM (0000:13:00.0, Device 8086:8d02). And here is the output of /var/log/dmesg:

    Sarieri@Sarieri:/$ vi /var/log/dmesg
    [Sun Jun 28 01:23:04 2020] pci 0000:00:16.5: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:16.5: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:16.6: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:16.6: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:16.7: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:16.7: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.3: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.3: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.4: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.4: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.5: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.5: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.6: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.6: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.7: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.7: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.2: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.2: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.3: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.3: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.4: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.4: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.5: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.5: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.6: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.6: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.7: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.7: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
    [Sun Jun 28 01:23:04 2020] pci 0000:00:0f.0: BAR 6: assigned [mem 0xc0400000-0xc0407fff pref]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:15.3: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:15.3: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:15.4: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:15.4: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:15.5: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:15.5: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:15.6: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:15.6: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:15.7: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:15.7: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:16.3: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:16.3: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:16.4: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:16.4: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:16.5: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:16.5: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:16.6: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:16.6: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:16.7: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:16.7: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.3: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.3: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.4: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.4: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.5: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.5: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.6: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.6: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.7: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:17.7: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.2: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.2: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.3: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.3: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.4: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.4: BAR 13: failed to assign [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.5: BAR 13: no space for [io size 0x1000]
    [Sun Jun 28 01:23:04 2020] pci 0000:00:18.5: BAR 13: failed to assign [io size 0x1000]

It seems the disks connected to the controller failed to be assigned (I don't really understand what that means, but it seems to be the problem here).

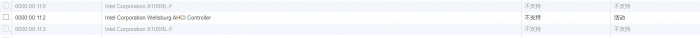

My motherboard is the X10SRL-F with two Wellsburg AHCI controllers. I passed through one of them, which is connected to 6 disks, and I also have two PCIe NVMe drives passed through to DSM (RDM passthrough, so DSM won't see them as actual NVMe disks).
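One way to check what the "failed to assign" lines actually refer to is to look the reported PCI addresses up in the `lspci -k` listing. The Python sketch below does that on a two-device excerpt of the output posted above; the function names are made up for illustration, and the parsing assumes the layout shown in this thread.

```python
import re


def parse_lspci_k(text: str) -> dict:
    """Map PCI address -> {'class', 'device', 'driver'} from `lspci -k` style lines."""
    devices, current = {}, None
    for line in text.splitlines():
        head = re.match(r"\s*(\S+) Class (\w{4}): Device (\w{4}:\w{4})", line)
        if head:
            current = {"class": head.group(2), "device": head.group(3), "driver": None}
            devices[head.group(1)] = current
        elif current is not None and "Kernel driver in use:" in line:
            current["driver"] = line.split(":", 1)[1].strip()
    return devices


def bar_failures(dmesg_text: str) -> set:
    """Collect the PCI addresses that logged 'BAR n: failed to assign'."""
    return set(re.findall(r"pci (\S+): BAR \d+: failed to assign", dmesg_text))


lspci_sample = """\
0000:00:15.3 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:13:00.0 Class 0106: Device 8086:8d02 (rev 05)
    Subsystem: Device 15d9:0832
    Kernel driver in use: ahci
"""
dmesg_sample = """\
[Sun Jun 28 01:23:04 2020] pci 0000:00:15.3: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:15.3: BAR 13: failed to assign [io size 0x1000]
"""
devices = parse_lspci_k(lspci_sample)
for addr in sorted(bar_failures(dmesg_sample)):
    print(addr, devices.get(addr))
print("AHCI controller affected:", "0000:13:00.0" in bar_failures(dmesg_sample))
```

In the posted excerpt, every address with a BAR 13 failure (0000:00:15.x to 0000:00:18.x) is a class 0604 bridge with vendor ID 15ad bound to pcieport, and 0000:13:00.0 is not among them, so this part of the log by itself does not show an allocation failure for the passed-through controller.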

-

7 hours ago, IG-88 said:

if its in ahci mode (bios setting?) it should work, ahci (driver) is part of the synology kernel

you can check with lspci -k in the vm if the device is present and what driver is used

maybe this helps?

Is it possible that Xpenology has the driver for the controller, but I need to edit grub.cfg and change SataPortMap and DiskIdxMap so that it is recognized properly?
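For readers unfamiliar with the two parameters, here is a rough Python sketch of how they are commonly described on the forum. This interpretation is an assumption, not something confirmed in this thread: each digit of SataPortMap is taken as the number of ports on one controller, and each pair of hex digits in DiskIdxMap as the first disk slot for that controller. The values used are hypothetical.

```python
def disk_slots(sata_port_map: str, disk_idx_map: str) -> list[range]:
    """Slot range per controller, under the interpretation described above."""
    starts = [int(disk_idx_map[i:i + 2], 16) for i in range(0, len(disk_idx_map), 2)]
    counts = [int(digit) for digit in sata_port_map]
    if len(starts) != len(counts):
        raise ValueError("need one DiskIdxMap pair per SataPortMap digit")
    return [range(start, start + count) for start, count in zip(starts, counts)]


# Hypothetical values: 1 port on the virtual controller, 6 on the passed-through one.
for index, slots in enumerate(disk_slots("16", "0C00")):
    print(f"controller {index}: slots {list(slots)}")
```

With these example values the single port of the first controller would land on slot 12 and the six ports of the second controller on slots 0 to 5.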

-

4 hours ago, IG-88 said:

if its in ahci mode (bios setting?) it should work, ahci (driver) is part of the synology kernel

you can check with lspci -k in the vm if the device is present and what driver is used

maybe this helps?

It’s in AHCI mode. 100% sure

-

On 10/4/2019 at 1:18 AM, IG-88 said:

i did some search about alternatives to old lsi 8 port sas controllers and 4 port ahci controllers - namely "cheap" 8 port ahci controllers without multiplexers, i found 2 candidates

(using AHCI makes independent from external/additional drivers, if you fall back to just using the drivers synology provides, the ahci will still work in the 918+ image)

IO Crest SI-PEX40137 (https://www.sybausa.com/index.php?route=product/product&product_id=1006&search=8+Port+SATA)

1 x ASM 1806 (pcie bridge) + 2 x Marvell 9215 (4port sata)

~$100

QNINE 8 Port SATA Card (https://www.amazon.com/dp/B07KW38G9N?tag=altasra-20)

1 x ASM 1806 (pcie bridge) + 4 x ASM1061 (2port sata)

~$50

but both "only" use max 4x pcie 2.0 lanes on the asm1806 and 2x pcie lane for 4 sata ports (Marvell 9225) or 1 lane for 2 sata ports (asm1061), so they might be ok for hdd's but maybe not deliver enough throughput for ssd's on all 8 ports

the good thing is as ahci controllers they will work with the drivers synology has in its dsm, so no dependencies of additional drivers

ASM1806, https://www.asmedia.com.tw/eng/e_show_products.php?item=199&cate_index=168

Marvell 9215, https://www.marvell.com/storage/system-solutions/assets/Marvell-88SE92xx-002-product-brief.pdf

ASM1061, https://www.asmedia.com.tw/eng/e_show_products.php?cate_index=166&item=118

if they turn out to be bough enough then there might be newer/better ones, there are better/newer pcie bridge chips and 2/4 port sata controller being able to use more pcie lanes (making it 1 lane per sata port)

i bought the IO Crest SI-PEX40137 as it has the better known/tested marvell 9215 (lots of 4port sata cards with it)

but i only tested it shortly to see if its working as ahci controller as intended - it does and with lspci it looks like having 2 x marvell 9215 in the system - all good

the SF8087 connector and the delivered cables do work as intended, the same cable did work on my "old" lsi sas controller so nothing special about the connector or the cables, they are ~1m long

there are LED's for every sata port on the controller (as we are used to from the lsi controllers)

i haven't tested the performance yet, i have 3 older 50GB ssd so even with these as raid0 i will not be able to max it out but at least i will see if there is any unexpected bottleneck - as i'm planing to use it for old fashioned hdd's i guess that one will be ok (and if not it will switch places with the good old 8 port sas from the system doing backup)

EDIT:

after reviewing the data of the cards again my liking for the 9215 based card has vanished, its kind of a fake with its pcie 4x interface as the marvell 9215 is a pcie 1x chip and as there are two of them the card can only use two pcie lanes, so when it comes to performance the asm1061 based card should be the winner as it uses four 2port controller and every controller uses one pcie lane, making full use of the pcie 4x interface of the card

so its 500 MByte/s for four sata ports (max 6G !!! per port) on the 9215 based card and 500 MByte/s for two sata ports on the asm1061 based card

the asm1061 based card can be found as sa3008 under a lot of different labels - if the quality and reliability can hold up against the old trusty lsi cards is another question

better comparison on marvell chips:

(conclusion 9230 and 9235 should be the choice for a 4port controller instead of a 9215)

edit2: the ASM1806/ASM1061 card is different but also has a design flaw, the ASM1806 pci express bridge only has 2 lanes as "upstream" port (to the pcie root aka computer chipset) so in the end it will be capped to ~1000 MB/s for all 8 drives and both cards will end in a measly performance and are unable to handle ssd's in a meaningful way

looks like two marvell 9230/9235 cards would perform better than one of these two 8port cards
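The arithmetic behind that conclusion can be written out as a short Python sketch. It uses the figures from the post (roughly 500 MB/s per PCIe 2.0 lane, one lane per SATA chip, two upstream lanes on the ASM1806); the function name and the "all ports busy at once" simplification are assumptions for illustration.

```python
PCIE2_LANE_MB_S = 500  # approximate usable PCIe 2.0 bandwidth per lane, as used in the post


def per_port_throughput(chips: int, lanes_per_chip: int, upstream_lanes: int, ports: int) -> float:
    """MB/s available per SATA port when all ports are busy at once."""
    downstream = chips * lanes_per_chip * PCIE2_LANE_MB_S
    upstream = upstream_lanes * PCIE2_LANE_MB_S
    return min(downstream, upstream) / ports


# 2 x Marvell 9215 (one lane each) behind the ASM1806
print(per_port_throughput(chips=2, lanes_per_chip=1, upstream_lanes=2, ports=8))
# 4 x ASM1061 (one lane each) behind the ASM1806
print(per_port_throughput(chips=4, lanes_per_chip=1, upstream_lanes=2, ports=8))
```

Under these assumptions both cards come out at about 125 MB/s per port with all eight ports active, which is in line with "ok for hdd's" but not for SSDs.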

Hi,

Do you know if DSM for the DS918+ has a driver for the onboard SATA controller? I have a Supermicro X10SRL-F with two onboard Wellsburg AHCI controllers. They were fine when passed through to Xpenology in KVM, but not in ESXi. I'm not sure whether it's a driver issue in Xpenology or something wrong with ESXi. I tried passing the Wellsburg AHCI controller through to Windows in ESXi and the disks were recognized without problem, which makes the situation even weirder.

I guess it might be something wrong with the reset method I set in passthru.map in ESXi. The Wellsburg AHCI controller was originally grayed out in ESXi, so I had to edit the passthru.map file to make it possible to toggle the controller for passthrough. I have tried default, link, d3d0, and bridge, but it seems that only the d3d0 reset method prevents ESXi from graying out the controller.
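For context, a passthru.map entry is a single line of whitespace-separated fields. The Python sketch below builds one, assuming the field order vendor ID, device ID, reset method, fptShareable; that layout and the list of reset methods reflect how the file is usually described and should be checked against the comments in the file itself. The helper name is made up, and 8086:8d02 is the ID that `lspci -k` reported for the controller at 0000:13:00.0 in this thread.

```python
RESET_METHODS = {"flr", "d3d0", "link", "bridge", "default"}
SHAREABLE_VALUES = {"true", "false", "default"}


def passthru_map_entry(vendor_id: str, device_id: str, reset_method: str,
                       fpt_shareable: str = "default") -> str:
    """Build one passthru.map style line (assumed layout, see the note above)."""
    vendor_id, device_id = vendor_id.lower(), device_id.lower()
    for value in (vendor_id, device_id):
        if len(value) != 4 or any(ch not in "0123456789abcdef" for ch in value):
            raise ValueError(f"expected a 4-digit hex ID, got {value!r}")
    if reset_method not in RESET_METHODS:
        raise ValueError(f"unknown reset method {reset_method!r}")
    if fpt_shareable not in SHAREABLE_VALUES:
        raise ValueError(f"unknown fptShareable value {fpt_shareable!r}")
    return f"{vendor_id} {device_id} {reset_method} {fpt_shareable}"


print(passthru_map_entry("8086", "8d02", "d3d0"))
```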

-

7 hours ago, flyride said:

That is incorrect. Without the patch they are not recognized at all by Synology's utilities (they are accessible by Linux). You can hack them in as storage but it is not supported or safe, and it does not matter whether the patch is installed or not. If you want to remove the patch, put the original file back.

Thank you so much for the clarification.

-

22 minutes ago, mervincm said:

Synology does not permit the use of NVME as storage, only cache, at least in the 918+

Yes, I understand that, but before applying the NVMe patch, Synology recognized NVMe drives as normal drives that were only available as storage drives. So I'm just wondering if there's a way to remove the patch.

-

Does anyone know how to remove this nvme patch so that one can use the nvme drive as storage again?

-

On 5/2/2020 at 9:00 PM, MarcoH said:

Wow, thanks

Dmesg is in your PM

I heard someone say that the motherboard of the Gen10+ blocks the CPU's integrated graphics. I don't know if that's true, but if not, it would really be the best compact Xpenology box ever.

-

7 hours ago, IG-88 said:

it DOES, if someone is impatient he can create his own extra/extra2 until i upload 0.11 (later this evening)

you will get the new mpt3sas.ko with 0.11

Tried v0.11. SMART info is now correct. Thank You!

-

3 hours ago, IG-88 said:

as a newer mpt3sas.ko made no difference and jun's mpt3sas did not work i assumed the difference might be one level lower so i kept the new mpt3sas v27.0.0.1 and replaced my own newly compiled versions (synology kernel source 24992) of scsi_transport_spi.ko, scsi_transport_sas.ko, raid_class.ko with the files from jun's original extra/extra2

test looks good, no problems loading mpt3sas and there are smart infos now

Hi,

Would this method help resolve the missing SMART info issue with mpt3sas in extra.lzma v0.1 for DSM 6.2.3?

-

22 hours ago, Balrog said:

Yes. The Dell H200 HBA will be driven by the mpt2sas-driver.

I checked around and to be honest I still have no idea whether this is a driver issue or something I did wrong.

Here's the output of lspci -v and lsmod:

    lspci -v
    [....]
    0000:00:05.0 Class 0107: Device 1000:0086 (rev 05)
        Subsystem: Device 15d9:0691
        Flags: bus master, fast devsel, latency 0, IRQ 11
        I/O ports at c000 [size=256]
        Memory at 8000c0000 (64-bit, non-prefetchable) [size=64K]
        Memory at 800080000 (64-bit, non-prefetchable) [size=256K]
        Expansion ROM at c8100000 [disabled] [size=1M]
        Capabilities: [50] Power Management version 3
        Capabilities: [68] Express Endpoint, MSI 00
        Capabilities: [d0] Vital Product Data
        Capabilities: [a8] MSI: Enable- Count=1/1 Maskable- 64bit+
        Capabilities: [c0] MSI-X: Enable+ Count=16 Masked-
        Kernel driver in use: mpt3sas
    [....]

    lsmod
    [....]
    mdio 3127 5 alx,sfc,cxgb,bnx2x,cxgb3
    mpt3sas 197815 16
    mptsas 38563 0
    megaraid_sas 111224 0
    megaraid 43521 0
    mptctl 26181 0
    mptspi 13060 0
    mptscsih 18913 2 mptsas,mptspi
    mptbase 63170 4 mptctl,mptsas,mptspi,mptscsih
    megaraid_mbox 28864 0
    megaraid_mm 7952 1 megaraid_mbox
    vmw_pvscsi 15406 0
    [....]

There's no mpt2sas.ko or mpt3sas.ko file under /lib/modules/, but there is an mpt3sas.ko file under /lib/modules/update:

    root@Sarieri_NAS:~# ls -lah /lib/modules/update/mpt3sas.ko
    -rw-r--r-- 1 root root 335K Apr 19 20:01 /lib/modules/update/mpt3sas.ko
    root@Sarieri_NAS:~# cat /sys/class/scsi_host/host0/board_name
    LSI2308-IT
    root@Sarieri_NAS:~# cat /sys/class/scsi_host/host0/version_fw
    20.00.07.00
    root@Sarieri_NAS:~# cat /sys/module/mpt3sas/version
    09.102.00.00

I have reinstalled DSM 6.2.3 on bare metal and as a VM quite a few times, and I always swap in the extra.lzma/extra2.lzma files and the zImage/rd.gz files from the .pat file. SMART info was fine in 6.2.2.
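To read the lsmod excerpt more easily, here is a small Python sketch that turns lsmod-style lines into a dictionary and lists the use count of each mpt* module. The function name is made up, and the sample is a shortened copy of the output posted above.

```python
def parse_lsmod(text: str) -> dict:
    """Map module name -> (size, use_count, [users]) from lsmod-style lines."""
    modules = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) < 3 or not parts[1].isdigit():
            continue  # skips the header line and elision markers such as "[....]"
        users = parts[3].split(",") if len(parts) > 3 else []
        modules[parts[0]] = (int(parts[1]), int(parts[2]), users)
    return modules


sample = """\
mpt3sas 197815 16
mptsas 38563 0
mptscsih 18913 2 mptsas,mptspi
"""
mods = parse_lsmod(sample)
print({name: use for name, (_, use, _) in mods.items() if name.startswith("mpt")})
```

In the posted output mpt3sas has a use count of 16 while mptsas sits at 0, which is consistent with `lspci -v` reporting mpt3sas as the driver in use for the LSI2308.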

-

2 hours ago, Balrog said:

I just have a look on my 2 VMs:

- the first VM has a Dell H200 HBA flashed to IT-Mode and is configured as passthrough (under ESXi 6.7 and also 7.0 on a HPE Microserver Gen 8):

SMART works very well! All values are shown in DSM and I run successfully a short SMART-Test on the disks.

- on the second VM I have passthroughed the original "Cougar Point 6 port SATA AHCI Controller" of the HPE Microserver Gen8:

SMART is working also very well without any issues.

Both VMs are running as "DS3615xs".

I hope this helps a little bit to localize a little bit where to look for the root cause for your SMART issues.

Does your HBA card use the mpt2sas/mpt3sas driver? The SMART issue seems to be tied to those drivers.

-

3 hours ago, IG-88 said:

yes its on my list what to check and to do next

Thank you so much.

-

6 hours ago, IG-88 said:

no, not related and your xeon does not have a gpu like a desktop cpu, quick sync video is a gpu feature

I saw another person who passes through an LSI card and is missing SMART info as well. Is this a driver issue?

-

Can someone check if the SMART info is showing correctly? I passed through the onboard LSI2308 and the SMART info is missing (it was there before the update).

-

13 hours ago, IG-88 said:

the new package is not well tested i just did some tests with hardware i have at hand (ahci, e1000e, r8168, igb, bnx2x, mpt2sas/mpt3sas) and tested update from 6.2.2 to 6.2.3

Hi, could you check if the SMART info is showing correctly? I updated from 6.2.2-24922 (DS918+) and swapped in the new extra and extra2 files after installation. The mpt2sas driver seems to be working, but there is no SMART info (it was there before the update).

Installation type: VM(unraid) - e3 1280 v3, on board lsi2308 passthrough, on board Intel NIC

I didn't know if I should swap the rd.gz and zImage files in the loader with those from the .pat file, but I did it anyway. I also noticed that the /dev/dri folder is missing (I don't use hardware transcoding at all, but maybe that is related to the missing SMART info?).

-

Outcome of the installation/update: SUCCESSFUL

- DSM version prior update: DSM 6.2.2-24922 Update 5

- Loader version and model: Jun's Loader v1.04b - DS918+

- Using custom extra.lzma: YES (v0.1 for 6.2.3)

- Installation type: VM(unraid) - e3 1280 v3, on board lsi2308 passthrough, on board Intel NIC

- Additional comments: SMART info is not showing, might be a bug in extra.lzma

Sata Controllers not recognized

in Hardware Modding

Posted · Edited by sarieri

Hi,

Sorry to bother you again, but it looks like there is still a problem. I played with SataPortMap in grub.cfg in order to have all six drives connected to the AHCI controller recognized by DSM. DSM did recognize all 6 drives, but it then performed a repair on the storage pool (SHR). The storage pool is repaired now and shows as healthy, but the volume has crashed and the data is read-only. Do you know if there's anything I can do now, or do I just have to back things up and rebuild everything?