sarieri

Member, 32 posts

Everything posted by sarieri

-

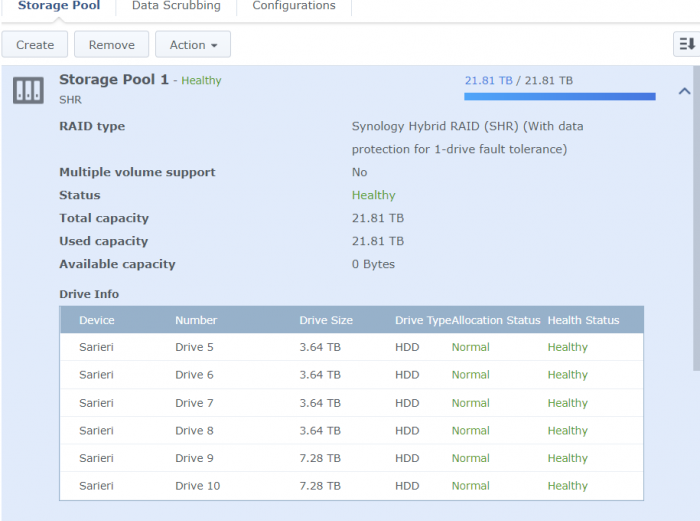

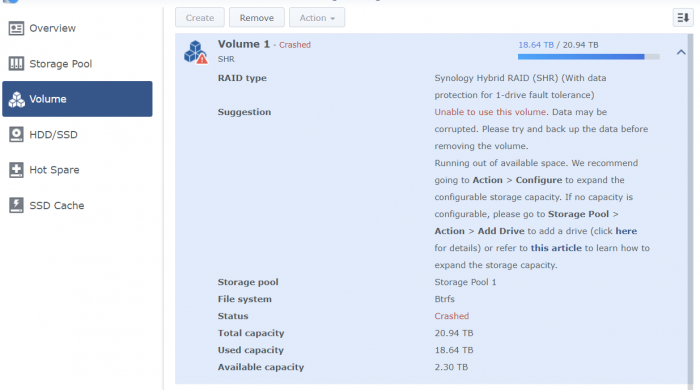

Hi, sorry to bother you again, but it looks like there is still a problem. I played with SataPortMap in grub.cfg so that DSM would recognize all six drives connected to the AHCI controller. DSM did recognize all six drives, but it then ran a repair on the storage pool (SHR). The storage pool is now repaired and shows as healthy, but the volume has crashed and the data is read-only. Is there anything I can do now, or do I just have to back everything up and rebuild?
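For reference, here is a minimal sketch of how the two grub.cfg values are usually composed for Jun's loader. It assumes the common convention: SataPortMap has one digit per SATA controller giving its port count, and DiskIdxMap has two hex digits per controller giving the first disk slot that controller uses. `build_sata_args` is a hypothetical helper. The sketch assumes the controller order matches DSM's enumeration order and assigns slots back to back. The `[3, 6]` example is illustrative only: a virtual controller holding the loader disk plus two RDM drives, followed by the 6-port AHCI controller.

```python
# Hypothetical helper: composes Jun's-loader-style SataPortMap / DiskIdxMap
# values. Assumes controllers are listed in DSM's enumeration order and that
# disk slots are assigned back to back, starting at slot 0.


def build_sata_args(ports_per_controller):
    """Return (SataPortMap, DiskIdxMap) strings for a list of port counts."""
    port_map = ""
    idx_map = ""
    next_slot = 0
    for ports in ports_per_controller:
        if not 1 <= ports <= 9:
            raise ValueError("this sketch only handles 1-9 ports per controller")
        port_map += str(ports)          # one decimal digit per controller
        idx_map += f"{next_slot:02X}"   # two hex digits: first slot it uses
        next_slot += ports
    return f"SataPortMap={port_map}", f"DiskIdxMap={idx_map}"


if __name__ == "__main__":
    # Illustrative only: a 3-port virtual controller, then the 6-port AHCI one.
    print(*build_sata_args([3, 6]))  # prints: SataPortMap=36 DiskIdxMap=0003
```

The sketch only computes the strings. It does not reflect how DSM treats an existing array when the resulting slot order changes.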

-

Now this is interesting. I tried 3617 (DS3617xs) with Jun's loader 1.03 and it works. I have no idea why the 918+ behaves differently.

-

Not working... Well, I did some research on Google and may give it a try later. For now, KVM and unRAID pass the AHCI controller through fine, but ESXi does not. Something might be wrong with the controller's reset method (d3d0).

-

Should this be added here? `set extra_args_918='intel_iommu=on iommu=soft'`
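If the arguments do belong on that line, this small sketch adds them to the `set extra_args_918='...'` line without duplicating existing entries. It assumes the value is a single-quoted, space-separated list on one line. `add_extra_args` is a hypothetical helper, and this sketch does not establish whether `intel_iommu=on iommu=soft` helps with ESXi passthrough at all.

```python
import re

# Hypothetical helper: edits the `set extra_args_918='...'` line of a
# Jun's-loader grub.cfg (passed in as text) and appends missing kernel args.
EXTRA_ARGS_RE = re.compile(r"^(\s*set extra_args_918=')([^']*)(')", re.MULTILINE)


def add_extra_args(grub_cfg: str, new_args: list) -> str:
    """Return grub_cfg with new_args appended to extra_args_918, skipping duplicates."""

    def repl(match):
        current = match.group(2).split()
        for arg in new_args:
            if arg not in current:
                current.append(arg)
        return match.group(1) + " ".join(current) + match.group(3)

    updated, count = EXTRA_ARGS_RE.subn(repl, grub_cfg)
    if count == 0:
        raise ValueError("no set extra_args_918='...' line found")
    return updated


if __name__ == "__main__":
    cfg = "set extra_args_918=''\nset common_args_918='x'\n"
    print(add_extra_args(cfg, ["intel_iommu=on", "iommu=soft"]), end="")
```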

-

This is weird. The controller itself must be okay, since I passed it through to DSM in unRAID before. So this might be something related to ESXi.

-

So this has nothing to do with the `set extra_args_918` configuration in grub.cfg, right?

-

Hi, here is the full dmesg output. I thought vim could pull out the full version... my bad.

```
[Sun Jun 28 01:24:51 2020] pci 0000:13:00.0: Signaling PME through PCIe PME interrupt
.....
[Sun Jun 28 01:24:52 2020] ahci 0000:13:00.0: AHCI 0001.0300 32 slots 6 ports 6 Gbps 0x3f impl SATA mode
[Sun Jun 28 01:24:52 2020] ahci 0000:13:00.0: flags: 64bit ncq led clo pio slum part ems apst
[Sun Jun 28 01:24:52 2020] scsi host4: ahci
[Sun Jun 28 01:24:52 2020] scsi host5: ahci
[Sun Jun 28 01:24:52 2020] scsi host6: ahci
[Sun Jun 28 01:24:52 2020] scsi host7: ahci
[Sun Jun 28 01:24:52 2020] scsi host8: ahci
[Sun Jun 28 01:24:52 2020] scsi host9: ahci
```

This was the only part that mentions "0000:13:00.0".
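The `0x3f impl` field in the ahci probe line above is the controller's ports-implemented bitmap, where bit N set means port N is implemented. This short sketch decodes it, assuming the line format quoted here. For 0x3f it gives ports 0 to 5, which is consistent with the six `scsi hostN: ahci` lines (host4 to host9) that follow. `implemented_ports` is a hypothetical name.

```python
import re

# The ahci driver prints the ports-implemented bitmap as "0x.. impl".
IMPL_RE = re.compile(r"\b(0x[0-9a-fA-F]+) impl\b")


def implemented_ports(dmesg_line: str) -> list:
    """Return the port numbers whose bit is set in the 'impl' bitmap."""
    match = IMPL_RE.search(dmesg_line)
    if match is None:
        raise ValueError("no '0x.. impl' field in this line")
    port_map = int(match.group(1), 16)
    # The bitmap is a 32-bit register, one bit per possible port.
    return [port for port in range(32) if port_map & (1 << port)]


line = ("ahci 0000:13:00.0: AHCI 0001.0300 32 slots 6 ports 6 Gbps "
        "0x3f impl SATA mode")
print(implemented_ports(line))  # prints [0, 1, 2, 3, 4, 5]
```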

-

Does "failed to assign" mean that there's no space left for the AHCI controller, so I need to edit the `set extra_args_918` line in grub.cfg? I looked into disk mapping issues a while ago but never really understood how it actually works... It seems to me that DSM only has two controllers in my case. One is the virtual controller connected to the loader disk and the two RDM-passed-through NVMe drives; the other is the passed-through AHCI controller.
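One way to see exactly which devices those messages refer to is to pull the addresses out of the log, as in the rough sketch below. The regular expression assumes the message format quoted in this thread, and `failed_assignments` is a hypothetical name. In the /var/log/dmesg excerpt posted in this thread, every failing address (0000:00:15.3 through 0000:00:18.5) belongs to one of the `15ad:07a0` bridge ports in the `lspci -k` listing, and every failure is for an `io` window; 0000:13:00.0 does not appear. The sketch only surfaces that information. It does not show by itself whether the messages matter.

```python
import re
from collections import Counter

# Matches lines like:
# "pci 0000:00:15.3: BAR 13: failed to assign [io size 0x1000]"
FAILED_RE = re.compile(
    r"pci ([0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]): "
    r"BAR (\d+): failed to assign \[(\w+) size (0x[0-9a-f]+)\]"
)


def failed_assignments(dmesg_text: str) -> Counter:
    """Count (address, BAR, resource type) triples the kernel failed to assign."""
    return Counter(
        (addr, int(bar), kind)
        for addr, bar, kind, _size in FAILED_RE.findall(dmesg_text)
    )


sample = """\
[Sun Jun 28 01:23:04 2020] pci 0000:00:15.3: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:15.3: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:15.4: BAR 13: failed to assign [io size 0x1000]
"""

for (addr, bar, kind), n in sorted(failed_assignments(sample).items()):
    print(addr, bar, kind, n)
```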

-

```
Sarieri@Sarieri:/$ lspci -k
0000:00:00.0 Class 0600: Device 8086:7190 (rev 01)
    Subsystem: Device 15ad:1976
    Kernel driver in use: agpgart-intel
0000:00:01.0 Class 0604: Device 8086:7191 (rev 01)
0000:00:07.0 Class 0601: Device 8086:7110 (rev 08)
    Subsystem: Device 15ad:1976
0000:00:07.1 Class 0101: Device 8086:7111 (rev 01)
    Subsystem: Device 15ad:1976
0000:00:07.3 Class 0680: Device 8086:7113 (rev 08)
    Subsystem: Device 15ad:1976
0000:00:07.7 Class 0880: Device 15ad:0740 (rev 10)
    Subsystem: Device 15ad:0740
0000:00:0f.0 Class 0300: Device 15ad:0405
    Subsystem: Device 15ad:0405
0000:00:11.0 Class 0604: Device 15ad:0790 (rev 02)
0000:00:15.0 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:15.1 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:15.2 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:15.3 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:15.4 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:15.5 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:15.6 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:15.7 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:16.0 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:16.1 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:16.2 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:16.3 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:16.4 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:16.5 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:16.6 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:16.7 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:17.0 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:17.1 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:17.2 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:17.3 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:17.4 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:17.5 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:17.6 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:17.7 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:18.0 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:18.1 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:18.2 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:18.3 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:18.4 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:18.5 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:18.6 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:00:18.7 Class 0604: Device 15ad:07a0 (rev 01)
    Kernel driver in use: pcieport
0000:02:00.0 Class 0c03: Device 15ad:0774
    Subsystem: Device 15ad:1976
    Kernel driver in use: uhci_hcd
0000:02:01.0 Class 0c03: Device 15ad:0770
    Subsystem: Device 15ad:0770
    Kernel driver in use: ehci-pci
0000:02:03.0 Class 0106: Device 15ad:07e0
    Subsystem: Device 15ad:07e0
    Kernel driver in use: ahci
0000:03:00.0 Class 0200: Device 8086:10fb (rev 01)
    Subsystem: Device 8086:000c
    Kernel driver in use: ixgbe
0000:0b:00.0 Class 0200: Device 8086:10fb (rev 01)
    Subsystem: Device 8086:000c
    Kernel driver in use: ixgbe
0000:13:00.0 Class 0106: Device 8086:8d02 (rev 05)
    Subsystem: Device 15d9:0832
    Kernel driver in use: ahci
0001:00:12.0 Class 0000: Device 8086:5ae3 (rev ff)
0001:00:13.0 Class 0000: Device 8086:5ad8 (rev ff)
0001:00:14.0 Class 0000: Device 8086:5ad6 (rev ff)
0001:00:15.0 Class 0000: Device 8086:5aa8 (rev ff)
0001:00:16.0 Class 0000: Device 8086:5aac (rev ff)
0001:00:18.0 Class 0000: Device 8086:5abc (rev ff)
0001:00:19.2 Class 0000: Device 8086:5ac6 (rev ff)
0001:00:1f.1 Class 0000: Device 8086:5ad4 (rev ff)
0001:01:00.0 Class 0000: Device 1b4b:9215 (rev ff)
0001:02:00.0 Class 0000: Device 8086:1539 (rev ff)
0001:03:00.0 Class 0000: Device 8086:1539 (rev ff)
```

So the AHCI controller (0000:13:00.0, Device 8086:8d02) is found by DSM. And here is the output of /var/log/dmesg:

```
Sarieri@Sarieri:/$ vi /var/log/dmesg
[Sun Jun 28 01:23:04 2020] pci 0000:00:16.5: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:16.5: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:16.6: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:16.6: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:16.7: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:16.7: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.3: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.3: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.4: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.4: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.5: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.5: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.6: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.6: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.7: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.7: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.2: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.2: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.3: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.3: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.4: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.4: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.5: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.5: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.6: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.6: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.7: res[13]=[io 0x1000-0x0fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.7: res[13]=[io 0x1000-0x1fff] res_to_dev_res add_size 1000 min_align 1000
[Sun Jun 28 01:23:04 2020] pci 0000:00:0f.0: BAR 6: assigned [mem 0xc0400000-0xc0407fff pref]
[Sun Jun 28 01:23:04 2020] pci 0000:00:15.3: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:15.3: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:15.4: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:15.4: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:15.5: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:15.5: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:15.6: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:15.6: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:15.7: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:15.7: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:16.3: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:16.3: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:16.4: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:16.4: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:16.5: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:16.5: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:16.6: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:16.6: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:16.7: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:16.7: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.3: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.3: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.4: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.4: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.5: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.5: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.6: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.6: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.7: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:17.7: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.2: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.2: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.3: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.3: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.4: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.4: BAR 13: failed to assign [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.5: BAR 13: no space for [io size 0x1000]
[Sun Jun 28 01:23:04 2020] pci 0000:00:18.5: BAR 13: failed to assign [io size 0x1000]
```

It seems the disks connected to the controller failed to be assigned (I don't really understand what that means, but it seems to be the problem here). My motherboard is the X10SRL-F with two Wellsburg AHCI controllers. I passed through one of them, which is connected to 6 disks. I also passed two PCIe NVMe drives through to DSM (via RDM, so DSM doesn't see them as actual NVMe disks).
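To double-check how many SATA controllers DSM sees, the `lspci -k` output above can be filtered by PCI class code 0106 (SATA controller). The rough sketch below assumes the entry format shown here and works on both the one-entry-per-line form and the flattened single-line form. `sata_controllers` is a hypothetical helper. On the listing above it should yield two entries, both bound to `ahci`: the VMware virtual controller at 0000:02:03.0 (15ad:07e0) and the passed-through Wellsburg controller at 0000:13:00.0 (8086:8d02).

```python
import re
from typing import List, Optional, Tuple

# One lspci -k entry starts with "<domain:bus:dev.fn> Class <code>: Device <vendor:device>".
ENTRY_RE = re.compile(
    r"([0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]) Class ([0-9a-f]{4}): "
    r"Device ([0-9a-f]{4}:[0-9a-f]{4})"
)
DRIVER_RE = re.compile(r"Kernel driver in use: (\S+)")
SATA_CLASS = "0106"  # PCI class 01 (mass storage), subclass 06 (SATA)


def sata_controllers(lspci_text: str) -> List[Tuple[str, str, Optional[str]]]:
    """Return (address, vendor:device, driver) for every class-0106 entry."""
    entries = list(ENTRY_RE.finditer(lspci_text))
    found = []
    for i, entry in enumerate(entries):
        if entry.group(2) != SATA_CLASS:
            continue
        # The driver line, if any, lies between this entry and the next one.
        end = entries[i + 1].start() if i + 1 < len(entries) else len(lspci_text)
        driver = DRIVER_RE.search(lspci_text, entry.end(), end)
        found.append((entry.group(1), entry.group(3), driver.group(1) if driver else None))
    return found


sample = (
    "0000:02:03.0 Class 0106: Device 15ad:07e0 Subsystem: Device 15ad:07e0 "
    "Kernel driver in use: ahci 0000:03:00.0 Class 0200: Device 8086:10fb (rev 01) "
    "Subsystem: Device 8086:000c Kernel driver in use: ixgbe "
    "0000:13:00.0 Class 0106: Device 8086:8d02 (rev 05) Subsystem: Device 15d9:0832 "
    "Kernel driver in use: ahci"
)
print(sata_controllers(sample))
```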

-

Is it possible that XPEnology has the driver for the controller, but I need to edit grub.cfg and change SataPortMap and DiskIdxMap so that it is recognized properly?

-

It’s in AHCI mode. 100% sure

-

Hi, do you know if DS918+ has a driver for the onboard SATA controller? I have a Supermicro X10SRL-F with two onboard Wellsburg AHCI controllers. They passed through fine to XPEnology in KVM, but not in ESXi. I'm a bit lost as to whether it's an XPEnology driver issue or something wrong with ESXi. I tried passing the Wellsburg AHCI controller through to Windows in ESXi and the disks were recognized without problems, which makes the situation even weirder. I guess something might be wrong with the reset method I set in passthru.map in ESXi. The Wellsburg AHCI controller was originally grayed out in ESXi, so I had to edit the passthru.map file to be able to toggle it for passthrough. I tried default, link, d3d0, and bridge, but only the d3d0 reset method seems to keep ESXi from graying out the controller.
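For anyone trying the same thing, this small sketch checks which reset method a passthru.map file assigns to a device. It assumes the whitespace-separated `vendor-id device-id resetMethod fptShareable` layout described in the header comment of ESXi's /etc/vmware/passthru.map, with `#` starting a comment. `render_entry` and `reset_methods_for` are hypothetical helpers. The IDs used for the Wellsburg controller (8086:8d02) come from the `lspci -k` output in this thread. The `fptShareable` value is only a placeholder.

```python
# Hypothetical helpers for ESXi's passthru.map, assuming lines of the form
#   <vendor-id> <device-id> <resetMethod> <fptShareable>
# with '#' starting a comment.


def render_entry(vendor: str, device: str, reset_method: str,
                 shareable: str = "default") -> str:
    """Format one passthru.map line (IDs written in lowercase hex)."""
    return f"{vendor.lower()}  {device.lower()}  {reset_method}  {shareable}"


def reset_methods_for(passthru_map: str, vendor: str, device: str) -> list:
    """Return every reset method listed for vendor:device, in file order.

    All matches are returned because this sketch does not assume which
    duplicate entry ESXi would honour.
    """
    methods = []
    for raw_line in passthru_map.splitlines():
        fields = raw_line.split("#", 1)[0].split()
        if (len(fields) >= 3
                and fields[0].lower() == vendor.lower()
                and fields[1].lower() == device.lower()):
            methods.append(fields[2])
    return methods


example = "\n".join([
    "# vendor-id device-id resetMethod fptShareable",
    render_entry("8086", "8D02", "d3d0"),
])
print(reset_methods_for(example, "8086", "8d02"))  # prints ['d3d0']
```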

-

Thank you so much for the clarification.

-

Yes, I understand that, but before applying the NVMe patch, Synology recognized NVMe drives as normal drives that could only be used as storage drives. So I'm just wondering if there's a way to remove the patch.

-

Does anyone know how to remove this NVMe patch so that the NVMe drive can be used as storage again?

-

I heard someone say that the Gen10+ motherboard blocks the CPU's integrated graphics. I don't know if that's true, but if not, it would really be the best compact XPEnology box ever.

-

I checked around and, to be honest, I still have no idea whether this is a driver issue or something I did wrong. Here's the output of `lspci -v` and `lsmod`:

```
lspci -v
[....]
0000:00:05.0 Class 0107: Device 1000:0086 (rev 05)
    Subsystem: Device 15d9:0691
    Flags: bus master, fast devsel, latency 0, IRQ 11
    I/O ports at c000 [size=256]
    Memory at 8000c0000 (64-bit, non-prefetchable) [size=64K]
    Memory at 800080000 (64-bit, non-prefetchable) [size=256K]
    Expansion ROM at c8100000 [disabled] [size=1M]
    Capabilities: [50] Power Management version 3
    Capabilities: [68] Express Endpoint, MSI 00
    Capabilities: [d0] Vital Product Data
    Capabilities: [a8] MSI: Enable- Count=1/1 Maskable- 64bit+
    Capabilities: [c0] MSI-X: Enable+ Count=16 Masked-
    Kernel driver in use: mpt3sas
[....]
```

```
lsmod
[....]
mdio 3127 5 alx,sfc,cxgb,bnx2x,cxgb3
mpt3sas 197815 16
mptsas 38563 0
megaraid_sas 111224 0
megaraid 43521 0
mptctl 26181 0
mptspi 13060 0
mptscsih 18913 2 mptsas,mptspi
mptbase 63170 4 mptctl,mptsas,mptspi,mptscsih
megaraid_mbox 28864 0
megaraid_mm 7952 1 megaraid_mbox
vmw_pvscsi 15406 0
[....]
```

There's no mpt2sas.ko or mpt3sas.ko file under /lib/modules/, but there is an mpt3sas.ko file under /lib/modules/update:

```
root@Sarieri_NAS:~# ls -lah /lib/modules/update/mpt3sas.ko
-rw-r--r-- 1 root root 335K Apr 19 20:01 /lib/modules/update/mpt3sas.ko
root@Sarieri_NAS:~# cat /sys/class/scsi_host/host0/board_name
LSI2308-IT
root@Sarieri_NAS:~# cat /sys/class/scsi_host/host0/version_fw
20.00.07.00
root@Sarieri_NAS:~# cat /sys/module/mpt3sas/version
09.102.00.00
```

I have reinstalled DSM 6.2.3 on bare metal and as a VM quite a few times, and I always swap the extra.lzma/extra2.lzma and zImage/rd.gz files from the .pat file. SMART info was fine in 6.2.2.
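To collect the same details in one go (for example, to compare them before and after an update), this minimal sketch reads the sysfs files used above. It only assumes those paths exist as shown. `scsi_host_info`, `module_version` and `read_value` are hypothetical names, and a missing or unreadable file is reported as `None` rather than raising. It does not query SMART itself.

```python
from pathlib import Path
from typing import Dict, Optional


def read_value(path: Path) -> Optional[str]:
    """Return the stripped contents of a sysfs attribute, or None if unreadable."""
    try:
        return path.read_text().strip()
    except (OSError, UnicodeDecodeError):
        return None


def scsi_host_info(root: str = "/") -> Dict[str, Dict[str, Optional[str]]]:
    """Map each scsi_host (host0, host1, ...) to its board_name and version_fw."""
    base = Path(root) / "sys" / "class" / "scsi_host"
    info = {}
    for host in sorted(base.glob("host*")):
        info[host.name] = {
            "board_name": read_value(host / "board_name"),
            "version_fw": read_value(host / "version_fw"),
        }
    return info


def module_version(name: str, root: str = "/") -> Optional[str]:
    """Return the contents of /sys/module/<name>/version, e.g. for mpt3sas."""
    return read_value(Path(root) / "sys" / "module" / name / "version")


if __name__ == "__main__":
    for host, values in scsi_host_info().items():
        print(host, values)
    print("mpt3sas", module_version("mpt3sas"))
```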

-

Can someone check if SMART info is showing correctly? I passed through the onboard LSI2308 and SMART info is missing (it was there before the update).

-

Hi, could you check whether SMART info is showing correctly? I updated from 6.2.2-24922 (DS918+) and swapped in the new extra and extra2 files after installation. The mpt2sas driver seems to be working, but there's no SMART info (it was there before the update).

Installation type: VM (unRAID), E3-1280 v3, onboard LSI2308 passthrough, onboard Intel NIC.

I didn't know whether I should replace the rd.gz and zImage files in the loader with those from the .pat file, but I did it anyway. I also noticed that the /dev/dri folder is missing (I don't use hardware transcoding at all, but maybe it's related to the missing SMART info?)

-

- Outcome of the installation/update: SUCCESSFUL
- DSM version prior to update: DSM 6.2.2-24922 Update 5
- Loader version and model: Jun's Loader v1.04b - DS918+
- Using custom extra.lzma: YES (v0.1 for 6.2.3)
- Installation type: VM (unRAID) - E3-1280 v3, onboard LSI2308 passthrough, onboard Intel NIC
- Additional comments: SMART info is not showing; might be a bug in extra.lzma