Chuck12

-

Posts

27 -

Days Won

2

Posts posted by Chuck12

-

-

4 hours ago, IG-88 said:

thats a behavior of dsm itself not the loader, if you block internet access for your dsm system (router/firewall) and upload 7.0.1 42218-0 as file to your system then it can't automatically update and you should end with 7.0.1 42218-0

I'm not sure if you used tinycore. My router and firewall are not blocking anything related to DSM. I went through the process of creating a loader for 7.0.1-42218-0 because tinycore only shows 7.0.1-42218-0 as the latest, downloaded the 7.0.1-42218-0 .pat file from Synology, and uploaded it to DSM during the initial setup. After the reboot, DSM shows I have 7.0.1-42218-2.

-

I ran the patch as per the instructions and I get this:

Detected DSM version: 7.0.1 42218-0

Patch for DSM Version (7.0.1 42218-0) not found.

Patch is available for versions:

ends at 6.2.3 25426-3

-

How do you apply the 7.0.1 patch?

I downloaded the synocodectool.7.0.1-42216-0_7.0.1-42218-0.patch file.

Now what?

-

Many thanks for all great work!

I have 7.0.1-42218 working in VMWare workstation.

I'm curious if I can change the SN and MAC when it's already set up in a VM or do I need to create a new compiled image?

-

I have disabled it since the host redo.

There were a few things that caused the problem. One of the drives in the pool was bad, which caused VMware to halt during a scrub. Another was a kernel power failure from a bad Windows update. A clean install of Windows has been stable so far.

I have the VHDs set aside but still have not recovered them yet. I need to read up on Linux some more. I thought I did everything the same as in my first attempt, but it's not working the second time.

-

it's definitely off

-

Well, that didn't last long. A Windows update forced a reboot while the NAS was doing a consistency check on a separate volume. Unfortunately, this time I can't mount the array in Ubuntu to access the data. Below is the diagnostic data for the drives in the NAS. Note that two of the missing drives are shown as Initialized and healthy in the HDD/SSD group, but they are not assigned to the specific pool. HELP!

root@NAS:~# cat /proc/mdstat

Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]

md4 : active raid5 sdi5[0] sdo5[3]

7749802112 blocks super 1.2 level 5, 64k chunk, algorithm 2 [4/2] [U__U]

md3 : active raid1 sdp5[0]

1882600064 blocks super 1.2 [1/1] [U]

md5 : active raid5 sde5[0] sdd5[3] sdf5[1]

9637230720 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/3] [UUU]

md6 : active raid5 sdj6[0] sdl6[2] sdk6[1]

13631455616 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/3] [UUU]

md2 : active raid5 sdj5[0] sdl5[3] sdk5[1]

1877771392 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/3] [UUU]

md7 : active raid1 sdi6[0] sdo6[1]

838848960 blocks super 1.2 [2/2] [UU]

md1 : active raid1 sdp2[11] sdo2[10] sdn2[9] sdl2[8] sdk2[7] sdj2[6] sdi2[5] sdh2[4] sdg2[3] sdf2[2] sde2[1] sdd2[0]

2097088 blocks [24/12] [UUUUUUUUUUUU____________]

md0 : active raid1 sdd1[0] sde1[1] sdf1[9] sdg1[8] sdh1[3] sdi1[11] sdj1[10] sdk1[5] sdl1[4] sdn1[7] sdo1[6] sdp1[2]

2490176 blocks [12/12] [UUUUUUUUUUUU]

unused devices: <none>

Odd I only have a total of 4 Volumes?
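For anyone else trying to read a /proc/mdstat dump like the one above, here is a rough Python sketch that pulls out each array's member counts and flags the ones with missing members. The names `ArrayStatus` and `parse_mdstat` are made up for illustration, and the sample text is just two arrays copied from this post. It only compares the `[slots/active]` counts, so it cannot tell whether an array such as md1 is meant to have empty slots.

```python
import re
from typing import List, NamedTuple


class ArrayStatus(NamedTuple):
    name: str      # e.g. "md4"
    level: str     # e.g. "raid5"
    expected: int  # member slots the array is configured for
    active: int    # members currently in sync
    flags: str     # per-slot map: "U" = up, "_" = missing

    @property
    def missing(self) -> int:
        return self.expected - self.active


# Name and level come from the "mdN : active <level> ..." line; the counts come
# from the first "[n/m] [U_...]" pair after it. The lookahead stops the match
# from running into the next array's header if an array has no such pair.
_ARRAY = re.compile(
    r"(md\d+) : active (\S+) (?:(?!md\d+ : ).)*?\[(\d+)/(\d+)\] \[([U_]+)\]",
    re.S,
)


def parse_mdstat(text: str) -> List[ArrayStatus]:
    """Return one ArrayStatus per active array found in the mdstat text."""
    return [
        ArrayStatus(m[1], m[2], int(m[3]), int(m[4]), m[5])
        for m in _ARRAY.finditer(text)
    ]


SAMPLE = """\
md4 : active raid5 sdi5[0] sdo5[3]
      7749802112 blocks super 1.2 level 5, 64k chunk, algorithm 2 [4/2] [U__U]
md3 : active raid1 sdp5[0]
      1882600064 blocks super 1.2 [1/1] [U]
"""

for array in parse_mdstat(SAMPLE):
    state = "ok" if array.missing == 0 else f"{array.missing} member(s) missing"
    print(f"{array.name} ({array.level}): {state}")
```

With the sample above, md4 is reported with two members missing, which matches the two "removed" slots in the mdadm --detail output for /dev/md4.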

root@NAS:~# mdadm --detail /dev/md4

/dev/md4:

Version : 1.2

Creation Time : Sat Feb 1 18:20:02 2020

Raid Level : raid5

Array Size : 7749802112 (7390.79 GiB 7935.80 GB)

Used Dev Size : 3874901056 (3695.39 GiB 3967.90 GB)

Raid Devices : 4

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Tue Mar 9 08:12:16 2021

State : clean, FAILED

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 64K

Delta Devices : 1, (3->4)

Name : NAS:4 (local to host NAS)

UUID : f95f4a28:1cd4a412:0df847fe:4645d62b

Events : 8456

Number Major Minor RaidDevice State

0 8 133 0 active sync /dev/sdi5

- 0 0 1 removed

- 0 0 2 removed

3 8 229 3 active sync /dev/sdo5

root@NAS:~# fdisk -l /dev/sdi5

Disk /dev/sdi5: 3.6 TiB, 3967899729920 bytes, 7749804160 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

root@NAS:~# fdisk -l /dev/sdo5

Disk /dev/sdo5: 3.6 TiB, 3967899729920 bytes, 7749804160 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

root@NAS:~# pvs

/dev/md4: read failed after 0 of 4096 at 0: Input/output error

/dev/md4: read failed after 0 of 4096 at 7935797297152: Input/output error

/dev/md4: read failed after 0 of 4096 at 7935797354496: Input/output error

/dev/md4: read failed after 0 of 4096 at 4096: Input/output error

Couldn't find device with uuid eJGcET-fSVZ-JCMi-kaHL-dipI-G4s6-r1R32F.

PV VG Fmt Attr PSize PFree

/dev/md2 vg1003 lvm2 a-- 1.75t 0

/dev/md3 vg1002 lvm2 a-- 1.75t 0

/dev/md5 vg1000 lvm2 a-- 8.98t 0

/dev/md6 vg1003 lvm2 a-- 12.70t 0

/dev/md7 vg1001 lvm2 a-- 799.98g 0

unknown device vg1001 lvm2 a-m 7.22t 0

root@NAS:~# vgs

/dev/md4: read failed after 0 of 4096 at 0: Input/output error

/dev/md4: read failed after 0 of 4096 at 7935797297152: Input/output error

/dev/md4: read failed after 0 of 4096 at 7935797354496: Input/output error

/dev/md4: read failed after 0 of 4096 at 4096: Input/output error

Couldn't find device with uuid eJGcET-fSVZ-JCMi-kaHL-dipI-G4s6-r1R32F.

VG #PV #LV #SN Attr VSize VFree

vg1000 1 1 0 wz--n- 8.98t 0

vg1001 2 1 0 wz-pn- 8.00t 0

vg1002 1 1 0 wz--n- 1.75t 0

vg1003 2 1 0 wz--n- 14.44t 0

root@NAS:~# lvs

/dev/md4: read failed after 0 of 4096 at 0: Input/output error

/dev/md4: read failed after 0 of 4096 at 7935797297152: Input/output error

/dev/md4: read failed after 0 of 4096 at 7935797354496: Input/output error

/dev/md4: read failed after 0 of 4096 at 4096: Input/output error

Couldn't find device with uuid eJGcET-fSVZ-JCMi-kaHL-dipI-G4s6-r1R32F.

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

lv vg1000 -wi-ao---- 8.98t

lv vg1001 -wi-----p- 8.00t

lv vg1002 -wi-ao---- 1.75t

lv vg1003 -wi-ao---- 14.44t

root@NAS:~# pvdisplay

/dev/md4: read failed after 0 of 4096 at 0: Input/output error

/dev/md4: read failed after 0 of 4096 at 7935797297152: Input/output error

/dev/md4: read failed after 0 of 4096 at 7935797354496: Input/output error

/dev/md4: read failed after 0 of 4096 at 4096: Input/output error

Couldn't find device with uuid eJGcET-fSVZ-JCMi-kaHL-dipI-G4s6-r1R32F.

--- Physical volume ---

PV Name unknown device

VG Name vg1001

PV Size 7.22 TiB / not usable 2.12 MiB

Allocatable yes (but full)

PE Size 4.00 MiB

Total PE 1892041

Free PE 0

Allocated PE 1892041

PV UUID eJGcET-fSVZ-JCMi-kaHL-dipI-G4s6-r1R32F

--- Physical volume ---

PV Name /dev/md7

VG Name vg1001

PV Size 799.99 GiB / not usable 4.44 MiB

Allocatable yes (but full)

PE Size 4.00 MiB

Total PE 204796

Free PE 0

Allocated PE 204796

PV UUID E9ibKv-ZC3q-yWDp-l0C6-bxlf-5Kvq-SFNh7g

--- Physical volume ---

PV Name /dev/md5

VG Name vg1000

PV Size 8.98 TiB / not usable 1.56 MiB

Allocatable yes (but full)

PE Size 4.00 MiB

Total PE 2352839

Free PE 0

Allocated PE 2352839

PV UUID PfIiwF-d5d5-t46T-7a0W-TMjL-4nnh-SvRJQF

--- Physical volume ---

PV Name /dev/md3

VG Name vg1002

PV Size 1.75 TiB / not usable 640.00 KiB

Allocatable yes (but full)

PE Size 4.00 MiB

Total PE 459619

Free PE 0

Allocated PE 459619

PV UUID JLchK0-rzk8-lYZd-s2dR-tiAE-xFhq-10cE8q

--- Physical volume ---

PV Name /dev/md2

VG Name vg1003

PV Size 1.75 TiB / not usable 0

Allocatable yes (but full)

PE Size 4.00 MiB

Total PE 458440

Free PE 0

Allocated PE 458440

PV UUID U44r7Z-5MC3-nHMi-dwFA-gYtD-V0mq-tve2RK

--- Physical volume ---

PV Name /dev/md6

VG Name vg1003

PV Size 12.70 TiB / not usable 3.81 MiB

Allocatable yes (but full)

PE Size 4.00 MiB

Total PE 3327991

Free PE 0

Allocated PE 3327991

PV UUID uxtEKL-6X6d-1QYL-2mny-kvSe-X3fV-qPpesk

root@NAS:~# vgdisplay

/dev/md4: read failed after 0 of 4096 at 0: Input/output error

/dev/md4: read failed after 0 of 4096 at 7935797297152: Input/output error

/dev/md4: read failed after 0 of 4096 at 7935797354496: Input/output error

/dev/md4: read failed after 0 of 4096 at 4096: Input/output error

Couldn't find device with uuid eJGcET-fSVZ-JCMi-kaHL-dipI-G4s6-r1R32F.

--- Volume group ---

VG Name vg1001

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 4

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 1

Open LV 0

Max PV 0

Cur PV 2

Act PV 1

VG Size 8.00 TiB

PE Size 4.00 MiB

Total PE 2096837

Alloc PE / Size 2096837 / 8.00 TiB

Free PE / Size 0 / 0

VG UUID 3ZKEpX-f4o2-8Zc8-AXax-NAC2-xEGs-BgQRzp

--- Volume group ---

VG Name vg1000

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 4

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 1

Open LV 1

Max PV 0

Cur PV 1

Act PV 1

VG Size 8.98 TiB

PE Size 4.00 MiB

Total PE 2352839

Alloc PE / Size 2352839 / 8.98 TiB

Free PE / Size 0 / 0

VG UUID aHQHQk-zx96-OWTT-tjK0-hIy4-up1V-4DrBq3

--- Volume group ---

VG Name vg1002

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 2

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 1

Open LV 1

Max PV 0

Cur PV 1

Act PV 1

VG Size 1.75 TiB

PE Size 4.00 MiB

Total PE 459619

Alloc PE / Size 459619 / 1.75 TiB

Free PE / Size 0 / 0

VG UUID 7GhuzZ-Ba7k-juDK-X1Ez-C6Xk-Dceo-K0SiEa

--- Volume group ---

VG Name vg1003

System ID

Format lvm2

Metadata Areas 2

Metadata Sequence No 5

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 1

Open LV 1

Max PV 0

Cur PV 2

Act PV 2

VG Size 14.44 TiB

PE Size 4.00 MiB

Total PE 3786431

Alloc PE / Size 3786431 / 14.44 TiB

Free PE / Size 0 / 0

VG UUID ga4uN9-NL9S-o9Cr-uK1L-nENj-9Mcw-71toaL

root@NAS:~# lvdisplay

/dev/md4: read failed after 0 of 4096 at 0: Input/output error

/dev/md4: read failed after 0 of 4096 at 7935797297152: Input/output error

/dev/md4: read failed after 0 of 4096 at 7935797354496: Input/output error

/dev/md4: read failed after 0 of 4096 at 4096: Input/output error

Couldn't find device with uuid eJGcET-fSVZ-JCMi-kaHL-dipI-G4s6-r1R32F.

--- Logical volume ---

LV Path /dev/vg1001/lv

LV Name lv

VG Name vg1001

LV UUID 3ZaAe2-Bs3y-0G1k-hr9O-OSIj-F0XN-12COkF

LV Write Access read/write

LV Creation host, time ,

LV Status NOT available

LV Size 8.00 TiB

Current LE 2096837

Segments 2

Allocation inherit

Read ahead sectors auto

--- Logical volume ---

LV Path /dev/vg1000/lv

LV Name lv

VG Name vg1000

LV UUID R5pH8E-X9AL-2Afw-OPsq-nOpy-ffHO-CCvWfp

LV Write Access read/write

LV Creation host, time ,

LV Status available

# open 1

LV Size 8.98 TiB

Current LE 2352839

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 4096

Block device 253:0

--- Logical volume ---

LV Path /dev/vg1002/lv

LV Name lv

VG Name vg1002

LV UUID KnvT1S-9R4B-wFui-kYnQ-i9ns-BGQP-yotSts

LV Write Access read/write

LV Creation host, time ,

LV Status available

# open 1

LV Size 1.75 TiB

Current LE 459619

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 4096

Block device 253:2

--- Logical volume ---

LV Path /dev/vg1003/lv

LV Name lv

VG Name vg1003

LV UUID c4intm-bTUc-rC4n-jI2q-f4nv-st63-Uz5ze3

LV Write Access read/write

LV Creation host, time ,

LV Status available

# open 1

LV Size 14.44 TiB

Current LE 3786431

Segments 3

Allocation inherit

Read ahead sectors auto

- currently set to 4096

Block device 253:1

root@NAS:~# cat /etc/fstab

none /proc proc defaults 0 0

/dev/root / ext4 defaults 1 1

/dev/vg1001/lv /volume2 btrfs 0 0

/dev/vg1002/lv /volume1 btrfs auto_reclaim_space,synoacl,ssd,relatime 0 0

/dev/vg1003/lv /volume4 btrfs auto_reclaim_space,synoacl,ssd,relatime 0 0

/dev/vg1000/lv /volume3 btrfs auto_reclaim_space,synoacl,relatime 0 0

-

After lots of googling, I was able to get access to my data from that crashed volume. I removed the VHDs from the VM, added them to an Ubuntu VM and followed the Synology link to recover the data. The volume only became usable in the Ubuntu Files app after the RAID had been repaired. Before the failed drive was repaired, the volume was listed as an option but an error appeared when I tried to access it.

I ran lvscan to see what to mount, and mine showed /dev/vg1003/lv. Since it was a btrfs file system, I installed the btrfs-progs package and ran the following mount command: mount -t btrfs -o ro,recovery /dev/vg1003/lv /mnt/disk. For other Linux noobs like myself: I had already created /mnt/disk earlier, so remember to do that if you have not.

The mount command completed after about 10 seconds. I went back to the Files app and the volume had disappeared. I thought CRAP! but then remembered that I had mounted it at /mnt/disk. Sure enough, I opened /mnt/disk and the folders were there!
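In case it helps someone script the same steps, below is a small Python sketch that picks the logical volume paths out of lvscan output and prints the read-only mount command used above. It only prints the commands and does not run them. The function names and the sample lvscan line are my own illustration (the line is typed from memory of the usual lvscan layout, not copied from my system), and I believe newer kernels have superseded the `recovery` option with `usebackuproot`, so check the btrfs documentation for your kernel.

```python
import re
import shlex
from typing import List


def lv_paths(lvscan_output: str) -> List[str]:
    """Return the quoted /dev/... paths that lvscan prints, in order."""
    return re.findall(r"'(/dev/[^']+)'", lvscan_output)


def readonly_mount_command(lv_path: str, mount_point: str) -> List[str]:
    """Build the read-only btrfs mount command from the post as an argv list."""
    return ["mount", "-t", "btrfs", "-o", "ro,recovery", lv_path, mount_point]


# Illustrative sample only: one line in the usual lvscan layout.
SAMPLE_LVSCAN = "  ACTIVE            '/dev/vg1003/lv' [14.44 TiB] inherit\n"

MOUNT_POINT = "/mnt/disk"  # must exist before mounting

for path in lv_paths(SAMPLE_LVSCAN):
    print(shlex.join(["mkdir", "-p", MOUNT_POINT]))
    print(shlex.join(readonly_mount_command(path, MOUNT_POINT)))
```

Printing the commands first makes it easy to double-check the device path before running anything as root against a damaged volume.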

-


-

-

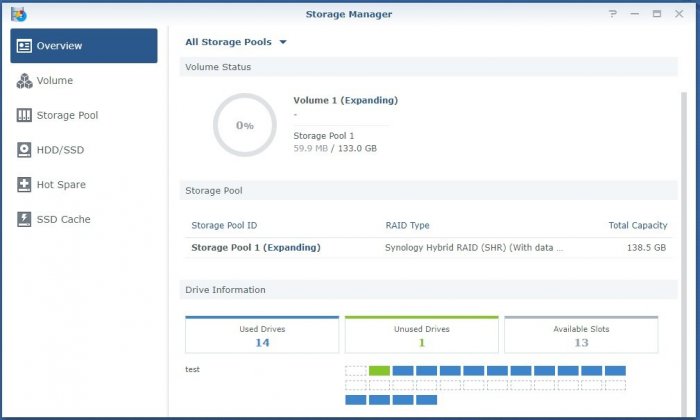

The repair process completed, drives are shown as healthy, storage pool shows healthy but volume shows crashed. This is for Storage Pool 4 / Volume 4. I'm running xpenology in VMWare Workstation 16.

root@NAS:~# cat /proc/mdstat

Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]

md5 : active raid5 sde5[0] sdd5[3] sdf5[1]

9637230720 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/3] [UUU]

md3 : active raid1 sdp5[0]

1882600064 blocks super 1.2 [1/1] [U]

md2 : active raid5 sdj5[3] sdl5[5] sdk5[4]

15509261312 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/3] [UUU]

md4 : active raid5 sdi5[0] sdh5[2] sdg5[1]

7749802112 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/3] [UUU]

md1 : active raid1 sdp2[9] sdl2[8] sdk2[7] sdj2[6] sdi2[5] sdh2[4] sdg2[3] sdf2[2] sde2[1] sdd2[0]

2097088 blocks [24/10] [UUUUUUUUUU______________]

md0 : active raid1 sdd1[0] sde1[1] sdf1[2] sdg1[4] sdh1[6] sdi1[5] sdj1[3] sdk1[8] sdl1[7] sdp1[9]

2490176 blocks [12/10] [UUUUUUUUUU__]

unused devices: <none>

mdadm --detail /dev/md2

/dev/md2:

Version : 1.2

Creation Time : Wed Feb 5 00:22:58 2020

Raid Level : raid5

Array Size : 15509261312 (14790.78 GiB 15881.48 GB)

Used Dev Size : 7754630656 (7395.39 GiB 7940.74 GB)

Raid Devices : 3

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sat Feb 27 16:43:49 2021

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 64K

Name : Plex:2

UUID : f9ac4c36:6d621467:46a38f36:e75e077a

Events : 52639

Number Major Minor RaidDevice State

3 8 149 0 active sync /dev/sdj5

4 8 165 1 active sync /dev/sdk5

5 8 181 2 active sync /dev/sdl5

root@NAS:~# fdisk -l /dev/sdj5

Disk /dev/sdj5: 7.2 TiB, 7940742873088 bytes, 15509263424 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

root@NAS:~# fdisk -l /dev/sdk5

Disk /dev/sdk5: 7.2 TiB, 7940742873088 bytes, 15509263424 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

root@NAS:~# fdisk -l /dev/sdl5

Disk /dev/sdl5: 7.2 TiB, 7940742873088 bytes, 15509263424 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

root@NAS:~# vgchange -ay

1 logical volume(s) in volume group "vg1000" now active

1 logical volume(s) in volume group "vg1001" now active

1 logical volume(s) in volume group "vg1002" now active

1 logical volume(s) in volume group "vg1003" now active

root@NAS:~# cat /etc/fstab

none /proc proc defaults 0 0

/dev/root / ext4 defaults 1 1

/dev/vg1003/lv /volume4 btrfs auto_reclaim_space,synoacl,relatime 0 0

/dev/vg1002/lv /volume1 btrfs auto_reclaim_space,synoacl,ssd,relatime 0 0

/dev/vg1000/lv /volume3 btrfs auto_reclaim_space,synoacl,relatime 0 0

/dev/vg1001/lv /volume2 btrfs auto_reclaim_space,synoacl,relatime 0 0

root@NAS:~# lvm pvscan

PV /dev/md5 VG vg1000 lvm2 [8.98 TiB / 0 free]

PV /dev/md4 VG vg1001 lvm2 [7.22 TiB / 0 free]

PV /dev/md3 VG vg1002 lvm2 [1.75 TiB / 0 free]

PV /dev/md2 VG vg1003 lvm2 [14.44 TiB / 0 free]

Total: 4 [32.39 TiB] / in use: 4 [32.39 TiB] / in no VG: 0 [0 ]

Please let me know what other details you need.

Thanks in advance!

-

I put the drives back and the repair option reappeared. One of the drives was bad, so I'll need to put a replacement drive in its place to do the repair. Thanks

-

I read the other post by pavey but my situation is different. One of my pools crashed and the Actions button is grayed out. The volume is SHR-2. I followed Synology's suggestion to recover the data from https://www.synology.com/en-us/knowledgebase/DSM/tutorial/Storage/How_can_I_recover_data_from_my_DiskStation_using_a_PC but got stuck at step 9. I get the following when I run mdadm -Asf && vgchange -ay:

mdadm: No arrays found in config file or automatically

I launched gparted and can see the volume info

/dev/md127 14.44TiB

Partition: /dev/md127

FileSystem: lvm2 pv

MountPoint: vg1003

Size: 14.44TiB

/dev/sda5/7.22TiB

/dev/sdb5/7.22TiB

/dev/sdc5/7.22TiB

Help! Any suggestions? Thank you!

-

Just wanted to let those interested know that v6.2.3-25426-Update 3 works fine on this VM. The update was applied to the original upload (synobootfix applied) and not the updated appliance.

-


-

-

Hi,

I have a remote NAS DSM 6.2.3-Update2 that I don't have physical access to and I want to encrypt the shared folders. When I initialize the Key Manager, it recommends the keys to be stored on a usb drive. In a VM setup, how do I add a thin disk virtual usb drive to xpenology and just move the virtual usb drive to the cloud for safe storage?

For VM setups, what is your process to encrypt shared folders and safeguard the keys outside of DSM for safe keeping?

Thanks!

-

I was able to update mine after following flyride's post. I'm a linux newb and followed his 2nd post exactly. Update-2 worked perfectly on an existing VM.

Many many thanks to armazohairge, flyride and everyone else's help!

-


-

-

It's working great now. Thank you! 24 drives is more than enough for my needs. I have 13 drives and running two separate vms was cumbersome.

I was pleasantly surprised that I was able to combine the existing SHR drives from a second VM without any trouble. All of the folder shares and permissions were intact. I just had to do a quick system partition repair and it was all good.

To simplify setup for a friend who has more than 12 drives like I do, would setting the USB and eSATA ports to 0x0 be OK?

-

I'm curious since I don't use any virtual usb connected drives, does it make sense to zero the usb configuration out and just have the internalportcfg value only?

esataportcfg="0x0"

internalportcfg="0xffffff"

usbportcfg="0x0"

What would be the purpose of an attached virtual USB drive?

-

THANK YOU!

-

After posting the above, I tried moving drives > 13 to SATA2:x in the VM settings.

Sure enough, the drives are now shown in Storage Manager and I am able to add them to a volume just like any other drive. Also, the graphic display of available drives is not like the other screens I've seen. As long as the drives are seen and can be used, I think I'm good for now.

I'll continue to play around with this some more.

-

I have the DSM VM set up (DS3615xs DSM v6.2.3-25426-Release-final) in VMware Workstation 15 with mods to the synoinfo.conf file as follows:

maxdisks="24"

esataportcfg="0xff000"

internalportcfg="0xFFFFFF"

usbportcfg="0x300000"

24 disk slots are displayed within the Storage Manager overview. I added about 18 VHDs (20GB each) to my VM. The first 12 are shown in Storage Manager and can be included in a volume. The remaining VHDs are listed as ESATA Disks. I'm able to format these designated ESATA drives in File Station but what I'm looking to do is to have them populate in the Storage Manager to be used in volumes. Not sure what I'm missing to get them all populated within Storage Manager as usable drives.
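One thing worth checking with values like these is whether the three port masks claim the same slots. The Python sketch below decodes each mask into slot numbers and lists any overlap. It assumes the commonly described reading of these settings, where bit 0 is slot 1 and each slot should belong to only one of the three masks; that reading is an assumption on my part, and the helper names `slots` and `find_overlaps` are made up for illustration.

```python
from itertools import combinations
from typing import Dict, List, Tuple


def slots(mask: int) -> List[int]:
    """Return the 1-based slot numbers whose bits are set in the mask."""
    return [bit + 1 for bit in range(mask.bit_length()) if (mask >> bit) & 1]


def find_overlaps(cfg: Dict[str, int]) -> Dict[Tuple[str, str], List[int]]:
    """Map each pair of settings to the slots that both of them claim."""
    clashes = {}
    for (name_a, mask_a), (name_b, mask_b) in combinations(cfg.items(), 2):
        shared = mask_a & mask_b
        if shared:
            clashes[(name_a, name_b)] = slots(shared)
    return clashes


# The values from the synoinfo.conf edit described in this post.
POSTED = {
    "internalportcfg": 0xFFFFFF,
    "esataportcfg": 0xFF000,
    "usbportcfg": 0x300000,
}

for (first, second), shared in find_overlaps(POSTED).items():
    print(f"{first} and {second} both claim slots {shared[0]}-{shared[-1]}")
```

With the posted values, slots 13 to 20 are claimed by both the internal and eSATA masks, and slots 21 and 22 by both the internal and USB masks. That may be why the drives past the first 12 were listed as ESATA Disks, although I have not confirmed that this is how DSM resolves the conflict.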

Thanks!

-

For me, I used SHR instead of the typical RAIDx configurations. Anytime I need to increase the storage pool, I just add another drive to the pool. SHR is no longer a choice in the defaults. You'll need to SSH and modify the /etc.defaults/synoinfo.conf file.

-

Sorry if this is hijacking the thread but anyone that wants to move their existing VM setup to this updated setup, here's how I did mine on Workstation 15.

Open and import Armazohairge's appliance.

- you might want to change the processor and memory allocations of this imported VM

Power it up, find it using Synology Assistant and configure just the initial setup.

Shut down the VM, through the Synology shutdown process.

Add your old Virtual drives to this new VM BUT make sure all of those drives are on SATA1 and not SATA0. Jun's bootloader is on SATA0.

Launch the VM

Go into Storage Manager and repair the System Partition that is prompted.

Update the basic packages that are prompted.

That's it.

-


-

-

Great work armazohairge!! It appears there's an Update 2 to the current version.

Not sure if anyone else noticed, but I didn't at first: the 10GB disk resides on SATA1:0 while the boot disk is on SATA0:0. By default, in Workstation 15, newly added virtual drives are automatically assigned to SATA0. Make sure you reconfigure any additional drives to SATA1 in the ADVANCED settings of that drive. My added drives did not show up in Storage Manager until I changed them to SATA1.

Again, thank you!

Chuck

-


-

-

Switched to VMware Workstation Pro 15.5 and it's been rock solid.

-

Hi All,

Host - i9-9900K w/64GB RAM, Win10-1803 ENT, Build 17134.1184

Virtualbox 6.1.0 r135406

Guest - DS3615XS running DSM6.2-23739

OS specified Linux 2.6/3.x/4.x (64-bit)

Base mem 4096

Processor 2

Video mem 128MB

Graphics controller VMSVGA

All VDI are specified as SATA

Bridged Network adapter is set to Intel PRO/1000MT Desktop (8254OEM)

Audio disabled

USB 2 controller

The VM freezes every 4-5 days and requires a reboot to get it going again. I'm wondering what are the optimal VB options that you all are using for your VB environment? In the meantime, I'll check the VB logs for any anomalies.

Thanks in advance!

Chuck

synocodectool-patch for DSM 7.0+

in Software Modding

Posted

I hadn't realized that. Thank you. If I'm doing it via a VM, would blocking synology.com be sufficient?