martine


Posts posted by martine

-

-

@flyride Great, now it makes more sense in my head. Thanks for "spelling it out" 😀 Then it is a success.

18 hours ago, flyride said:

What we have done is changed two of your four drives to hotspares in /dev/md0, the RAID array for the DSM partition. The hotspare drives must have the partition configured (or DSM will call the disk "Not Initialized"), and must be in the array (or DSM will call the system partition "Failed") but are idle unless one of the remaining members fails.

Unfortunately DSM does not tolerate hotspares on the swap partition (/dev/md1) so that RAID 1 will always span all the drives actively.

I had the idea that the "system partition" would not show up in DSM Storage Manager, but now I get it.

Thanks a lot for helping out a newbie with very limited Linux know-how 👍🥇

/Martine

-

-

After trying what I think are the right commands:

    admin@BALOO:/$ sudo mdadm /dev/md0 -f /dev/sdc1 /sdd1
    Password:
    mdadm: set /dev/sdc1 faulty in /dev/md0
    mdadm: Cannot find /sdd1: No such file or directory
    admin@BALOO:/$ sudo mdadm /dev/md0 -f /dev/sdd1
    mdadm: set /dev/sdd1 faulty in /dev/md0
    admin@BALOO:/$ sudo mdadm --manage /dev/md0 --remove faulty
    mdadm: hot removed 8:49 from /dev/md0
    mdadm: hot removed 8:33 from /dev/md0
    admin@BALOO:/$ cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4]
    md3 : active raid1 sdc3[0] sdd3[1]
          13667560448 blocks super 1.2 [2/2] [UU]
    md2 : active raid1 sda3[0] sdb3[1]
          245237056 blocks super 1.2 [2/2] [UU]
    md1 : active raid1 sda2[0] sdb2[1] sdc2[2] sdd2[3]
          2097088 blocks [16/4] [UUUU____________]
    md0 : active raid1 sda1[0] sdb1[1]
          2490176 blocks [2/2] [UU]
    unused devices: <none>
    admin@BALOO:/$ sudo mdadm --add /dev/md0 /dev/sdc1
    mdadm: added /dev/sdc1
    admin@BALOO:/$ sudo mdadm --add /dev/md0 /dev/sdd1
    mdadm: added /dev/sdd1
    admin@BALOO:/$ cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4]
    md3 : active raid1 sdc3[0] sdd3[1]
          13667560448 blocks super 1.2 [2/2] [UU]
    md2 : active raid1 sda3[0] sdb3[1]
          245237056 blocks super 1.2 [2/2] [UU]
    md1 : active raid1 sda2[0] sdb2[1] sdc2[2] sdd2[3]
          2097088 blocks [16/4] [UUUU____________]
    md0 : active raid1 sdd1[2](S) sdc1[3](S) sda1[0] sdb1[1]
          2490176 blocks [2/2] [UU]
    unused devices: <none>
    admin@BALOO:/$

This is what I get from my system.

When I run the first set of commands, DSM Storage Manager reports a faulty system partition as before. After the second set of commands it is back to "Normal", apparently with the DSM system partition on all disks once more, even though I never used DSM Storage Manager to repair the system partition.
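The (F) and (S) flags and the [n/m] counts in the mdstat output above are what show whether sdc1 and sdd1 are failed members or idle hot spares. As a hedged illustration, a small shell sketch for reading /proc/mdstat-style text might look like this. The function names md_members and md_counts are hypothetical (not part of DSM or mdadm), and the sketch assumes the usual layout where the member list sits on the "mdX : active raidN ..." line and the [n/m] field is on the line after it.

```bash
# Hypothetical helpers (not part of DSM or mdadm) for reading /proc/mdstat.
# Assumed layout, as in the output above:
#   md0 : active raid1 sdd1[2](S) sdc1[3](S) sda1[0] sdb1[1]
#         2490176 blocks [2/2] [UU]

# Print "device state" per member: failed for (F), spare for (S),
# active otherwise.
md_members() {
  local array="$1" file="${2:-/proc/mdstat}"
  awk -v md="$array" '
    $1 == md && $2 == ":" {
      # Fields 1-4 are "mdX", ":", "active", "raidN"; members start at 5.
      for (i = 5; i <= NF; i++) {
        dev = $i
        state = "active"
        if (index(dev, "(F)")) state = "failed"
        else if (index(dev, "(S)")) state = "spare"
        sub(/\[.*$/, "", dev)  # strip "[slot]" and any flag
        print dev, state
      }
    }' "$file"
}

# Print the two numbers from the [n/m] field on the line after the array
# header: the array's device count and the number of working members,
# e.g. "16 4" for [16/4].
md_counts() {
  local array="$1" file="${2:-/proc/mdstat}"
  awk -v md="$array" '
    $1 == md && $2 == ":" { want = 1; next }
    want {
      for (i = 1; i <= NF; i++)
        if ($i ~ /^\[[0-9]+\/[0-9]+\]$/) {
          split(substr($i, 2, length($i) - 2), n, "/")
          print n[1], n[2]
        }
      want = 0
    }' "$file"
}
```

On the system above, after the --add step, md_members md0 would be expected to list sdd1 and sdc1 as spare and sda1 and sdb1 as active, while md_counts md0 reports the [2/2] field as "2 2".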

-

-

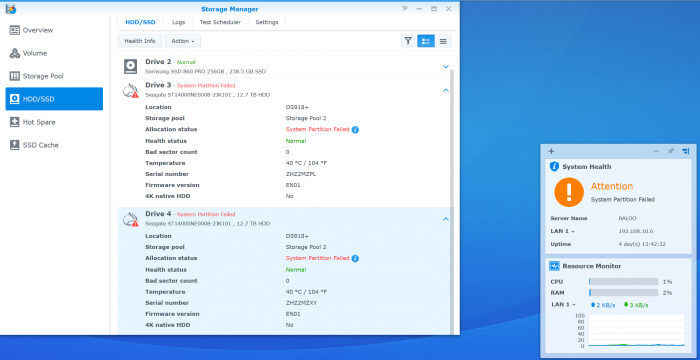

After the "grow" command, DSM just reports that it has fixed the partitions, and the DSM system partition is back on all drives with no hot spare. Please see the attached screenshots.

And yes, correct: I have tried the command multiple times.

-

This is a new system that will eventually take over from my trusty DS214play, which is getting too slow. At the moment there is NO important data on it, so if it fails I will just start over; I am also in this for the learning. This is what cat /proc/mdstat displays when I have failed the drives sdc1 and sdd1:

    admin@BALOO:/$ cat /proc/mdstat
    Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4]
    md3 : active raid1 sdc3[0] sdd3[1]
          13667560448 blocks super 1.2 [2/2] [UU]
    md2 : active raid1 sda3[0] sdb3[1]
          245237056 blocks super 1.2 [2/2] [UU]
    md1 : active raid1 sda2[0] sdb2[1] sdc2[2] sdd2[3]
          2097088 blocks [16/4] [UUUU____________]
    md0 : active raid1 sdd1[2](F) sdc1[3](F) sda1[0] sdb1[1]
          2490176 blocks [2/2] [UU]
    unused devices: <none>

And the df command:

    admin@BALOO:/$ df
    Filesystem      1K-blocks   Used   Available Use% Mounted on
    /dev/md0          2385528 939504     1327240  42% /
    none              8028388      0     8028388   0% /dev
    /tmp              8074584    816     8073768   1% /tmp
    /run              8074584   4252     8070332   1% /run
    /dev/shm          8074584      4     8074580   1% /dev/shm
    none                    4      0           4   0% /sys/fs/cgroup
    cgmfs                 100      0         100   0% /run/cgmanager/fs
    /dev/md2        235427576 978700   234448876   1% /volume1
    /dev/md3      13120858032  17844 13120840188   1% /volume2

Hope this is the info you need, @flyride.

/Martine

-

On 3/21/2018 at 5:59 AM, flyride said:

Just to clarify the layout:

/dev/md0 is the system partition, /dev/sda.../dev/sdn. This is an n-disk RAID1.

/dev/md1 is the swap partition, /dev/sda.../dev/sdn. This is an n-disk RAID1.

/dev/md2 is /volume1 (on my system /dev/sda and /dev/sdb RAID1)

/dev/md3 is /volume2 (on my system /dev/sdc.../dev/sdh RAID10)

and so forth

Failing RAID members manually in /dev/md0 will cause DSM to initially report that the system partition is crashed as long as the drives are present and hotplugged. But it is still functional and there is no risk to the system, unless you fail all the drives of course.

At that point cat /proc/mdstat will show failed drives with (F) flag but the number of members of the array will still be n.

mdadm --grow -n 2 /dev/md0 forces the system RAID to the first two available devices (in my case, /dev/sda and /dev/sdb). Failed drives will continue to be flagged as (F) but the member count will be two.

After this, in DSM it will still show a crashed system partition, but if you click on Repair, the failed drives will change to hotspares and the system partition state will be Normal. If one of the /dev/md0 RAID members fails, DSM will instantly activate one of the other drives as the hotspare, so you always have two copies of DSM.

FYI, this does NOT work with the swap partition. No harm in trying, but DSM will always report it crashed and fixing it will restore swap across all available devices.

It's probably worth mentioning that I do not use LVM (no SHR) but I think this works fine in systems with SHR arrays.
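Putting the steps from this thread together (fail the extra members, shrink /dev/md0 to two raid devices, then add the extra partitions back so they sit idle as hot spares), a dry-run sketch might look like the following. It is an illustration under assumptions, not a tested procedure: the helper names run and limit_md0_to_two are hypothetical, the device names come from this thread, and by default it only prints the mdadm commands. Re-adding the partitions with mdadm mirrors the martine session shown above; flyride instead lets DSM's Repair button turn the failed drives into hot spares.

```bash
#!/usr/bin/env bash
# Sketch only: keep /dev/md0 (the DSM system partition) active on two
# members and leave the other partitions as hot spares.
# Assumption: sda1/sdb1 stay active and the partitions passed in
# (sdc1/sdd1 in this thread) become spares. Per flyride, the same trick
# does not stick for the swap array /dev/md1.
set -eu

# Hypothetical helper: print the command when DRY_RUN=1 (the default),
# otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper name; not part of DSM or mdadm.
limit_md0_to_two() {
  local array=/dev/md0 part
  [ "$#" -gt 0 ] || { echo "usage: limit_md0_to_two PARTITION..." >&2; return 1; }
  for part in "$@"; do
    run mdadm "$array" --fail "$part"         # mark as faulty
  done
  run mdadm --manage "$array" --remove failed # drop faulty members
  run mdadm --grow "$array" --raid-devices=2  # shrink to two active slots
  for part in "$@"; do
    run mdadm --manage "$array" --add "$part" # no free slot, so it joins as a spare
  done
}
```

For example, DRY_RUN=1 limit_md0_to_two /dev/sdc1 /dev/sdd1 only prints the six mdadm commands; running it for real with DRY_RUN=0 would need root on the NAS.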

Hello All!

I know it has been some time since this thread was active. I have been reading the forums for a while but have never posted anything, so I hope someone will be able to point me in the right direction.

I have XPEnology running DSM 6.2.2-24922 on loader 1.04 with DS918+, and I am trying to accomplish what @flyride describes: limiting the DSM system partition to specific drives. However, I am unsuccessful with the following command:

    mdadm --grow -n 2 /dev/md0

I am able to fail the drives just fine, and DSM Storage Manager reports loss of the system partition on the drives I want to be excluded.

I have 2 Samsung 860 PRO SSDs as "sda" and "sdb" and 2 Seagate IronWolf drives as "sdc" and "sdd", and I would like to keep DSM on the SSDs only.

But every time I try, I get:

    admin@BALOO:/$ sudo mdadm --grow -n 2 /dev/md0
    mdadm: /dev/md0: no change requested

I hope someone can point me to the next steps, as I am no Linux guy.

Thanks from a newbie
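The md0 line in the /proc/mdstat output shown above already reads [2/2], which suggests /dev/md0 had only two raid devices at that point; if so, asking mdadm to grow it to 2 leaves nothing to change, which would be consistent with "no change requested". A hedged way to check before growing is to read the Raid Devices field that mdadm --detail prints. The helper raid_devices below is hypothetical and only parses that text.

```bash
# Hypothetical helper: read `mdadm --detail` output on stdin and print
# the value of its "Raid Devices" field.
raid_devices() {
  awk -F: '/Raid Devices/ { gsub(/[ \t]/, "", $2); print $2 }'
}

# Usage sketch (needs root on the NAS; device name from this thread):
#   current="$(sudo mdadm --detail /dev/md0 | raid_devices)"
#   if [ "$current" = "2" ]; then
#     echo "/dev/md0 already has 2 raid devices; --grow -n 2 has nothing to do"
#   else
#     sudo mdadm --grow /dev/md0 --raid-devices=2
#   fi
```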

/martine

Automated RedPill Loader (ARPL)

in Loaders


@fbelavenuto

What great work 👍

Thanks so much for all your hard work on modernising the loader and its user-friendliness.

I am trying it out on my hypervisor of choice, XCP-NG/xen-orchestra. I can make it boot just fine and configure the loader, but it sees no disks at all.

My hypervisor is not very flexible about "emulating" hardware, so I don't really have any way to choose the "disks" other than the virtual disk option and "Add new disk".

Other operating systems, both Linux and Windows, see these disks just fine.

I have tried DS920 and 3622+, but the loader config shows no disks in the "green" options.

I hope for support in the future, as XCP-NG seems to be growing in popularity, and it is based on the open-source Xen Project.

Thanks