conhulio2000

Posts posted by conhulio2000

-

To be honest, from my understanding the B120i internal controller on the Gen8 is not that good. I did a lot of research on this 6 years ago, and many blogs said it's not that great.

I bought the LSI IBM M1015. It is a very good controller that fits nicely into the PCIe slot on the Gen8 and lets you connect up to 8 drives.

I don't use classic RAID on Synology. I got Synology Hybrid RAID (SHR) back by hacking the install file on the boot image. I prefer SHR because it creates volumes as standard ext4, which I can read independently in Windows with "Linux Reader" if a drive fails and I manage to recover it. With RAID I don't think that's as easy.

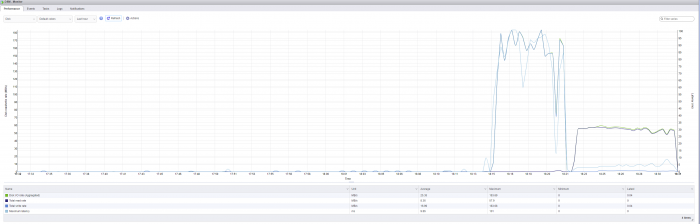

I've uploaded my speeds. First I copied a 29 GB folder with lots of video files, then I moved them back. I have a 1 Gbit network with a switch.

Hope this helps you see the difference. Your speeds definitely seem particularly slow, but I'm not sure why that is.
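For a rough sanity check of numbers like these, here is a minimal Python sketch of the theoretical ceiling of a 1 Gbit link and the shortest possible time for a 29 GB copy. It assumes decimal units (1 GB = 10^9 bytes) and ignores protocol overhead and disk limits, so real transfers will be slower. The function names are made up for illustration.

```python
def link_mb_per_s(link_gbit_per_s: float = 1.0) -> float:
    """Theoretical ceiling of the link in megabytes per second (decimal units)."""
    return link_gbit_per_s * 1e9 / 8 / 1e6


def min_transfer_seconds(size_gb: float, link_gbit_per_s: float = 1.0) -> float:
    """Lower bound on transfer time, ignoring protocol overhead and disk limits."""
    size_bits = size_gb * 1e9 * 8            # decimal gigabytes to bits
    link_bits_per_s = link_gbit_per_s * 1e9  # gigabits per second to bits per second
    return size_bits / link_bits_per_s


if __name__ == "__main__":
    print(f"Link ceiling: {link_mb_per_s():.0f} MB/s")
    print(f"29 GB needs at least {min_transfer_seconds(29):.0f} s")
```

If a copy runs far below the link ceiling, the bottleneck is probably somewhere other than the network itself.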

Regards

R.

-


On 11/13/2020 at 7:36 AM, haydibe said:

Not sure about the performance statement. We are speaking about the controller being managed from ESXi vs. directly managed in DSM. When I move files from one volume to another (each volume on a different set of WD Red drives), I get around 400-500mb/s with direct-io. The flexibility aspect is true if you partitioned your RAID and assigned RAID volumes as RDM partitions to the DSM VM. Though, if you had used the drives in IT mode and added each drive as an RDM individually, you would have been able to switch the VM to direct-io and DSM would not even notice the difference. With a direct-io setup, ESXi 7 wouldn't be an issue either.

I had two of these beauties. Though, because of the outdated CPU architecture and the RAM limit of 16GB, I ended up replacing them with two Dell T30s, where I threw in E3-1275v5 CPUs and 32GB of ECC UDIMMs.

Glad you found a solution!

Thanks bud,

I'll keep that in mind about the SAS and IT mode for next time. Usually, I don't break something if it works consistently. But DSM 5.2 was getting old, and I realised I could upgrade vSphere too, so I took a leap of faith and made the jump. I'm still rebuilding it now. I originally bought some very large Toshiba N300 drives, but man, they are flippin' noisy! So I replaced them with HGST Ultrastar He12 drives, and they are much quieter (not NAS specific, but I don't really care about that; if a drive fails, it fails).

So, much obliged for your help, and see you in the next 5 years!

-

4 hours ago, haydibe said:

I am afraid I won't be able to help. I use an unbranded LSI9211-8i (IT mode) in passthrough mode. The built-in mpt2sas driver in DSM 6.x recognizes the controller and makes all the attached drives available to DSM. I have no idea if branded LSI9211-8i controllers, or even controllers in IR mode, would be supported by the mpt2sas driver if passed into the VM in passthrough mode.

I have zero experience with RDM drives.

There is no need to attach the synoboot.img at all. From what I remember, the "vmdk" is just a declaration that points to synoboot.img. Though what I don't understand is why you set it to SCSI 0:0. Mine is on SATA 0:0. Also, you need to use the E1000e network interface (as written on the first page of this thread). My LSI9211-8i is flashed to be a SATA HBA. Why would it need to be configured as a SCSI controller when used with RDM disks?

[Update] The second part of your sentence does not match the first part. None of the settings you made can be found in the tutorial. [/update]

The 1.04b bootloader only works for DS918+, and only if your CPU is of the Haswell architecture or newer.

The Gen8 uses an older CPU architecture, thus it is not possible to use the 1.04b bootloader.

You are right, you need to stick to 1.03b.

I actually got around the problem. I finally tried vSphere 6.7 U3, followed the guide properly, and it worked.

The issue was that I was trying to use my existing vSphere 5.5, which normally brings up drives in SCSI interface mode, probably because of my particular IBM M1015 card.

You're right: in 6.7 I didn't need to add the CD drive and point it to synoboot.img, I just attached the vmdk as SATA 0:0.

I prefer to use RDM, as I read somewhere that XPEnology with RDM gives better performance, and if I need to use part of that drive I will just mount an NFS share on DSM and share that with whatever needs it. I also had to tweak the DSM conf file to re-enable SHR for DS3615xs, as it is removed by default. RAID meant I had to attach exactly the right number of drives, all of exactly the same size (I didn't want that restriction), hence I re-enabled SHR.
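The post doesn't say which lines of the conf file were changed. The tweak usually described in the community is to comment out `supportraidgroup="yes"` and set `support_syno_hybrid_raid="yes"` in synoinfo.conf, so treat both as an assumption here. A minimal Python sketch of that edit, working on the file's text only (the function name and the sample content are made up for illustration):

```python
def enable_shr(conf_text: str) -> str:
    """Return synoinfo.conf text with the commonly described SHR tweak applied.

    Assumption: SHR is controlled by the keys 'supportraidgroup' and
    'support_syno_hybrid_raid'; the original post does not name them.
    """
    out = []
    has_shr = False
    for line in conf_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("supportraidgroup="):
            out.append("#" + line)                      # comment out RAID Group support
        elif stripped.startswith("support_syno_hybrid_raid"):
            out.append('support_syno_hybrid_raid="yes"')
            has_shr = True
        else:
            out.append(line)                            # leave everything else untouched
    if not has_shr:
        out.append('support_syno_hybrid_raid="yes"')    # add the key if it was missing
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    sample = 'maxdisks="12"\nsupportraidgroup="yes"\n'  # made-up sample content
    print(enable_shr(sample))
```

Running it twice gives the same result as running it once. Back up the file before changing anything on a real box.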

So now I have it all working with the LSI SAS card (M1015 in IR mode) and the disks passed into the VM as RDMs.

Thanks for this thread everyone, thanks Haydibe for replying, and I hope I don't get any issues with this vSphere 6.7 setup. Unfortunately, vSphere 7.0 doesn't support the old IBM M1015 RAID cards anymore, which is why I had to go to 6.7 U3.

The Gen8 server rocks! It has never failed me in the 8 years I've had it. I also don't have the crappy stock CPU; I replaced it with the "4 CPUs x Intel(R) Xeon(R) CPU E31230 @ 3.20GHz". Works like a dream on the Gen8.

Thanks everyone.

-

On 11/18/2018 at 10:32 PM, haydibe said:

Depending on whether you have added a SATA1 controller, the problems and the settings to prevent them will be different.

In my setup, the SATA1 controller is recognized first, which is why DiskIdxMap=0C mapped my SATA1:0 disk to the first eSATA slot, which made the volume unusable for me. Setting DiskIdxMap=09 moved my SATA1:0 disk to the 10th slot, which made it usable again.

Since I have SATA0, SATA1 and the passthrough controller, I used SataPortMap to tell DSM that each of the controllers has 4 hard disks, regardless of the real number of drives.

I had 114 before, though the result was that slots 1, 3, 4 and 5 were the LSI hard disks, 2 was SATA0:0 and whatever DiskIdxMap indicated was SATA1:1.

With my setup, I use DiskIdxMap=09 and SataPortMap=444. These settings work without modifying either of the two synoinfo.conf files.

Those are not the best feasible settings! DiskIdxMap determines the first disk on a controller and is a two-digit hex value, while SataPortMap is a single-digit value from 1-9 per controller.

I am pretty sure that DiskIdxMap=000C01 SataPortMap=114 would result in SATA1:0 = Slot1, SATA0:0 = Slot13, LSI Slot2-5.
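To make the mapping described in the quote easier to follow, here is a small Python sketch of it. It assumes the interpretation given there: DiskIdxMap holds one two-digit hex start index per controller (zero-based, so 00 is slot 1), and SataPortMap holds one digit per controller with its port count. `map_slots` is a made-up helper, not anything DSM ships, and what DSM does for a controller without a DiskIdxMap entry is not covered, so those are returned as unknown.

```python
from typing import List, Optional, Tuple


def map_slots(disk_idx_map: str, sata_port_map: str) -> List[Tuple[int, Optional[int], Optional[int]]]:
    """Return (controller_index, first_slot, last_slot) for each controller.

    Slots are 1-based, as shown in DSM. first_slot and last_slot are None
    when DiskIdxMap has no entry for that controller.
    """
    # Split DiskIdxMap into two-digit hex values, one per controller.
    starts = [int(disk_idx_map[i:i + 2], 16) for i in range(0, len(disk_idx_map), 2)]
    result = []
    for ctrl, digit in enumerate(sata_port_map):
        ports = int(digit)                  # number of ports on this controller
        if ctrl < len(starts):
            first = starts[ctrl] + 1        # zero-based index to 1-based slot
            result.append((ctrl, first, first + ports - 1))
        else:
            result.append((ctrl, None, None))
    return result


if __name__ == "__main__":
    for ctrl, first, last in map_slots("000C01", "114"):
        print(f"controller {ctrl}: slots {first}-{last}")
```

With DiskIdxMap=000C01 and SataPortMap=114 this gives slot 1 for the first detected controller, slot 13 for the second and slots 2-5 for the third, which lines up with the quoted guess. Controllers are numbered in detection order, which in the quoted setup means SATA1 comes first.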

Hello Haydibe,

I have a bit of a problem that I'm hoping you'll be able to help me with.

My kit: HP Gen8, LSI IBM M1015 in IR mode, ESXi 5.5, which was working fine with RDM and DSM 5.2.

I have now blatted the server (after taking the necessary backups) and want to install DSM 6.2. I have followed this guide: https://xpenology.com/forum/topic/13061-tutorial-install-dsm-62-on-esxi-67/

However, I just can't get it to install. After manually installing the PAT file, it reboots, but then says "my disk has moved ..... blah blah", and all that shows up now is "RECOVER".

I've done everything in the guide correctly (amended SN, amended MAC), set synoboot.img as an IDE 0:0 CD drive, set SCSI 0:0 for synoboot.vmdk, added my 12TB RDM as SCSI 1:0, and set the NIC to E1000. No matter how many times I try, it just keeps booting to the "recover" screen.

Do you possibly have any ideas? The only thing that's different is that the guide talks about SATA 0:0 and SATA 1:0. Unfortunately I don't see SATA, as I have an IBM M1015 RAID card for my drives, so I only see SCSI drives as selectable.

Any help would be much appreciated.

Note: I've heard that it's best to install 1.03b with DS3615xs. I don't really want to go down the route of the VID/PID USB workaround.

thanks guys

Richie

-

Hi,

OK, I found a way to get around this (DOWNGRADE FROM official DSM 6.0).

1. I created a brand new VM with the 5664 DSM ISO, with the same setup as my previous VM.

2. Attached the first RDM disk.

3. Booted up. It should boot directly to the install page; if it doesn't, use Synology Assistant to do "INSTALL".

4. Install, choosing the DSM 5664 PAT file from XPEnology (200 MB file).

5. On completion, you should have a working DSM with your existing drive and all data still there.

6. I then restored my old config settings from 5592.

7. However, I lost all my installed packages, and more importantly my Sickbeard and SABnzbd installs. Luckily, I took a copy of those apps/settings before it all went pear-shaped.

HOWEVER, now on 5664, I do not see Sickbeard available for install in Packages from the community source. Aarrrggggg.

So now I will be doing the same process to get back to 5592, which I had BEFORE all this mess.

-

Hi,

Thanks for that, but it still didn't fix it.

Before it broke, my NAS had 5592.4 and was using XPEnology's 5592.2 ISO (boot).

I corrected the VERSION file in "etc.default" to exactly match the details in the link you sent. However, after rebooting and trying the INSTALL function in Synology Assistant, it stopped at 9% and said something like "install failed, your version is from a previous version". Synology Assistant shows the 6.6707 version, or whatever the latest DSM RC version is.

I'm feeling a bit at the end of my tether here now.

Isn't there a way I could simply create a new VM of 5592 with the 5592 ISO, and then re-attach the 4x4TB RDM drives to the NAS VM once DSM is installed? What happens to my existing settings, and my settings from Sickbeard and SABnzbd?

Thanks for the help!

-

Hi,

Thanks, but after the install of DSM 6, it wouldn't start. Looking at the console in ESXi, some things fail and there is a login prompt, but the admin password I used doesn't work now.

Connecting via PuTTY doesn't work either: connection refused.

So it seems as though it's blatted my admin passwords?

Any way around this?

-

Hi everyone!

Yes, I'm sure you're all laughing your faces off!

I stupidly downloaded the DSM 6.0 RC for DS1612xs and installed it via Manual Upgrade on DSM 5.2.

Mine was 5592 update 4.

I have ESXi, and I had the XPEnology 5592 ISO and the XPEnology install for 5592, which updated fine to U4.

I have 4 RDM-mapped 4TB drives.

After the install it wouldn't start, at which point I realised, oh "shhhh....", I had forgotten that I needed the XPEnology ISO and PAT files, and that DSM 6 has not been patched yet.

So now I can't seem to downgrade back to 5592, and I can't seem to SSH in with PuTTY either; the root or admin password seems to have been changed?

Does anyone know how I get back to where I was?

Could I simply create a new VM with the old 5592 install, and then simply re-attach the drives? Would that do it? I don't know.

PLEASE, ANY HELP would be greatly appreciated.

Tutorial: Install/DSM 6.2 on ESXi [HP Microserver Gen8] with RDM

in Tutorials and Guides

Posted · Edited by conhulio2000

To be honest, I have the same issue, and so I just don't bother with the SMART details showing up in XPEnology. If I want to know the SMART values, I just run a script on the ESXi host to read them. At least you can do that. If a drive is going to fail, it will fail, I don't care. It's very unlikely that 2 drives will fail at the same time, so I'm not that bothered (which is why I have 2 drives mirrored in a volume).

Regards