4li3n

Posts posted by 4li3n

Hello,

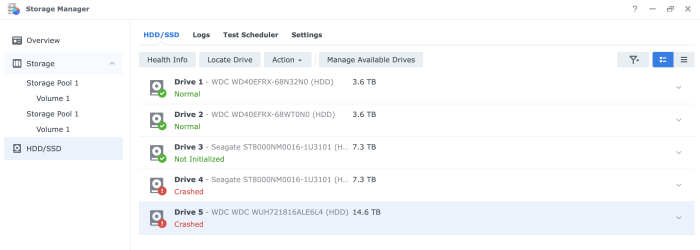

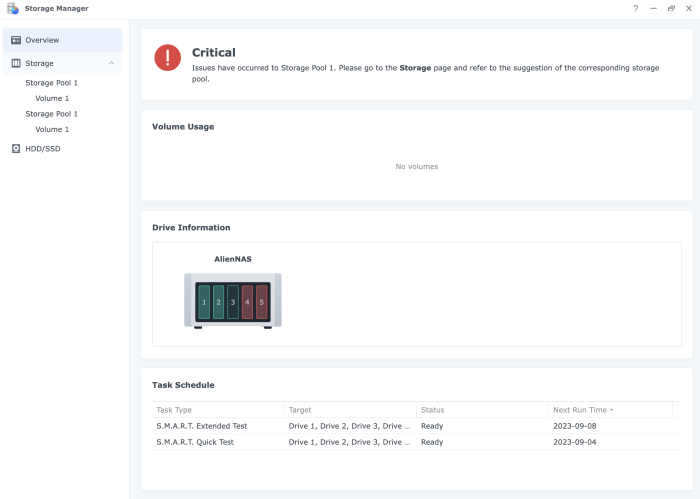

I have a Synology DS1515+ (5 drive slots) that used to have a single Storage Pool consisting of four drives (two 4TB drives and two 8TB drives, in an SHR configuration); the fifth slot was not in use. One day Drive #4 crashed and the Storage Pool went into a Degraded state. I bought a new 16TB disk, hoping to replace the crashed drive and repair the Storage Pool.

But, stupid me, I mistakenly pulled out the healthy Drive #3 instead, replaced it with the new disk, and booted up the machine. I then clicked the Online Assemble option on the overview page of the Storage Manager app, but it failed every time. After a few attempts and reboots, a Repair option somehow appeared. I clicked it and it started repairing the Storage Pool with the two originally healthy Drives #1 and #2, the 16TB disk mistakenly placed in Drive #3, and the originally crashed Drive #4. Up to this point I still had access to the data via the File Manager app, although it responded very slowly.

After that, I moved the 16TB disk from Drive #3 to Drive #5 and re-inserted the original healthy 4TB disk into Drive #3. Now Drive #3 shows as Not Initialized (Healthy) and Drive #5 shows as Crashed (Healthy). I no longer have access to the storage via File Manager, but fortunately the files seem to be accessible from the SSH terminal.

Any advice or guidance on my journey to recover the Storage Pool and the data would be gratefully received.

$ cat /proc/mdstat

```
Personalities : [raid1] [raid6] [raid5] [raid4] [raidF1]
md3 : active raid1 sdd6[0]
      3906998912 blocks super 1.2 [2/1] [U_]

md2 : active raid5 sda5[4] sde5[5] sdb5[7]
      11706562368 blocks super 1.2 level 5, 64k chunk, algorithm 2 [4/3] [UU_U]

md1 : active raid1 sda2[0] sdd2[2] sdb2[1]
      2097088 blocks [5/3] [UUU__]

md0 : active raid1 sdb1[0] sda1[3] sdd1[1]
      2490176 blocks [5/3] [UU_U_]

unused devices: <none>
```

$ vgdisplay --verbose

```
    Using volume group(s) on command line.
  --- Volume group ---
  VG Name               vg1000
  System ID
  Format                lvm2
  Metadata Areas        2
  Metadata Sequence No  4
  VG Access             read/write
  VG Status             resizable
  MAX LV                0
  Cur LV                1
  Open LV               1
  Max PV                0
  Cur PV                2
  Act PV                2
  VG Size               14.54 TiB
  PE Size               4.00 MiB
  Total PE              3811904
  Alloc PE / Size       3811904 / 14.54 TiB
  Free  PE / Size       0 / 0
  VG UUID               1e1yFU-tU4H-iDgZ-biKn-nD1S-nK4V-P2lohD

  --- Logical volume ---
  LV Path                /dev/vg1000/lv
  LV Name                lv
  VG Name                vg1000
  LV UUID                AXdWh7-vAGX-2d7U-wXtb-quk3-LsHy-kPmdBx
  LV Write Access        read/write
  LV Creation host, time ,
  LV Status              available
  # open                 1
  LV Size                14.54 TiB
  Current LE             3811904
  Segments               2
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     768
  Block device           253:0

  --- Physical volumes ---
  PV Name               /dev/md2
  PV UUID               hK1a7S-pdX1-5hjp-m53A-Tlpy-Jk9L-McEdHH
  PV Status             allocatable
  Total PE / Free PE    2858047 / 0

  PV Name               /dev/md3
  PV UUID               cJCeIW-KpCY-ez6a-JFk1-iRmT-djVM-Trec1B
  PV Status             allocatable
  Total PE / Free PE    953857 / 0
```
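One thing the `vgdisplay` output makes clear: the single logical volume `vg1000/lv` has 2 segments and uses every extent of both physical volumes, `/dev/md2` (the raid5 array) and `/dev/md3` (the raid1 array). That is consistent with how SHR is generally described (md arrays built from drive partitions, joined into one volume with LVM), and it means both arrays must be assembled for the volume to be complete. The minimal sketch below just redoes the extent arithmetic from the numbers shown (PE size 4 MiB); the variable and function names are illustrative only.

```python
# Extent counts copied from the vgdisplay output above (PE Size = 4.00 MiB).
PE_SIZE_MIB = 4
pv_extents = {
    "/dev/md2": 2858047,  # raid5 array per /proc/mdstat
    "/dev/md3": 953857,   # raid1 array per /proc/mdstat
}
total_pe = 3811904  # "Total PE" of vg1000 and "Current LE" of vg1000/lv


def extents_to_tib(extents: int, pe_size_mib: int = PE_SIZE_MIB) -> float:
    """Convert a number of LVM physical extents to TiB (1 TiB = 1024 * 1024 MiB)."""
    return extents * pe_size_mib / (1024 * 1024)


# The LV consumes all extents of both PVs, so neither md array can be left out.
assert sum(pv_extents.values()) == total_pe

for pv, pe in pv_extents.items():
    print(f"{pv}: {pe} PE = {extents_to_tib(pe):.2f} TiB ({pe / total_pe:.1%} of the LV)")
print(f"vg1000/lv: {total_pe} PE = {extents_to_tib(total_pe):.2f} TiB")
```

Running it prints 10.90 TiB for `/dev/md2`, 3.64 TiB for `/dev/md3` and 14.54 TiB for the LV, matching the `PV Size` and `VG Size` lines reported by LVM.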

$ lvdisplay --verbose

```
    Using logical volume(s) on command line.
  --- Logical volume ---
  LV Path                /dev/vg1000/lv
  LV Name                lv
  VG Name                vg1000
  LV UUID                AXdWh7-vAGX-2d7U-wXtb-quk3-LsHy-kPmdBx
  LV Write Access        read/write
  LV Creation host, time ,
  LV Status              available
  # open                 1
  LV Size                14.54 TiB
  Current LE             3811904
  Segments               2
  Allocation             inherit
  Read ahead sectors     auto
  - currently set to     768
  Block device           253:0
```

$ pvdisplay --verbose

```
    Using physical volume(s) on command line.
    Wiping cache of LVM-capable devices
  --- Physical volume ---
  PV Name               /dev/md2
  VG Name               vg1000
  PV Size               10.90 TiB / not usable 1.81 MiB
  Allocatable           yes (but full)
  PE Size               4.00 MiB
  Total PE              2858047
  Free PE               0
  Allocated PE          2858047
  PV UUID               hK1a7S-pdX1-5hjp-m53A-Tlpy-Jk9L-McEdHH

  --- Physical volume ---
  PV Name               /dev/md3
  VG Name               vg1000
  PV Size               3.64 TiB / not usable 640.00 KiB
  Allocatable           yes (but full)
  PE Size               4.00 MiB
  Total PE              953857
  Free PE               0
  Allocated PE          953857
  PV UUID               cJCeIW-KpCY-ez6a-JFk1-iRmT-djVM-Trec1B
```

$ df

```
Filesystem               1K-blocks        Used Available Use% Mounted on
/dev/md0                   2385528     1920488    346256  85% /
devtmpfs                   8208924           0   8208924   0% /dev
tmpfs                      8212972           4   8212968   1% /dev/shm
tmpfs                      8212972       15568   8197404   1% /run
tmpfs                      8212972           0   8212972   0% /sys/fs/cgroup
tmpfs                      8212972        1092   8211880   1% /tmp
/dev/mapper/cachedev_0 14989016436 14486635592 502380844  97% /volume1
```

$ mdadm --detail /dev/md0

```
/dev/md0:
        Version : 0.90
  Creation Time : Mon Nov 30 17:33:21 2015
     Raid Level : raid1
     Array Size : 2490176 (2.37 GiB 2.55 GB)
  Used Dev Size : 2490176 (2.37 GiB 2.55 GB)
   Raid Devices : 5
  Total Devices : 3
Preferred Minor : 0
    Persistence : Superblock is persistent

    Update Time : Sat Sep 2 14:02:50 2023
          State : active, degraded
 Active Devices : 3
Working Devices : 3
 Failed Devices : 0
  Spare Devices : 0

           UUID : 7622664e:1657328c:3017a5a8:c86610be
         Events : 0.37057847

    Number   Major   Minor   RaidDevice State
       0       8       17        0      active sync   /dev/sdb1
       1       8       49        1      active sync   /dev/sdd1
       -       0        0        2      removed
       3       8        1        3      active sync   /dev/sda1
       -       0        0        4      removed
```

$ mdadm --detail /dev/md1

```
/dev/md1:
        Version : 0.90
  Creation Time : Sun Apr 28 18:35:40 2019
     Raid Level : raid1
     Array Size : 2097088 (2047.94 MiB 2147.42 MB)
  Used Dev Size : 2097088 (2047.94 MiB 2147.42 MB)
   Raid Devices : 5
  Total Devices : 3
Preferred Minor : 1
    Persistence : Superblock is persistent

    Update Time : Wed Jan 1 08:12:37 2014
          State : clean, degraded
 Active Devices : 3
Working Devices : 3
 Failed Devices : 0
  Spare Devices : 0

           UUID : abcf619a:3398cc73:e7b2e964:f815f961 (local to host NAS)
         Events : 0.45611

    Number   Major   Minor   RaidDevice State
       0       8        2        0      active sync   /dev/sda2
       1       8       18        1      active sync   /dev/sdb2
       2       8       50        2      active sync   /dev/sdd2
       -       0        0        3      removed
       -       0        0        4      removed
```

$ mdadm --detail /dev/md2

```
/dev/md2:
        Version : 1.2
  Creation Time : Mon Nov 30 18:19:21 2015
     Raid Level : raid5
     Array Size : 11706562368 (11164.25 GiB 11987.52 GB)
  Used Dev Size : 3902187456 (3721.42 GiB 3995.84 GB)
   Raid Devices : 4
  Total Devices : 3
    Persistence : Superblock is persistent

    Update Time : Sat Sep 2 13:32:07 2023
          State : clean, degraded
 Active Devices : 3
Working Devices : 3
 Failed Devices : 0
  Spare Devices : 0

         Layout : left-symmetric
     Chunk Size : 64K

           Name : NAS:2  (local to host NAS)
           UUID : 5ffcb2a1:80bc4d39:7f19eb5f:2d922605
         Events : 753119

    Number   Major   Minor   RaidDevice State
       4       8        5        0      active sync   /dev/sda5
       7       8       21        1      active sync   /dev/sdb5
       -       0        0        2      removed
       5       8       69        3      active sync   /dev/sde5
```

$ mdadm --detail /dev/md3

```
/dev/md3:
        Version : 1.2
  Creation Time : Mon Dec 3 12:19:09 2018
     Raid Level : raid1
     Array Size : 3906998912 (3726.00 GiB 4000.77 GB)
  Used Dev Size : 3906998912 (3726.00 GiB 4000.77 GB)
   Raid Devices : 2
  Total Devices : 1
    Persistence : Superblock is persistent

    Update Time : Sat Sep 2 14:03:35 2023
          State : clean, degraded
 Active Devices : 1
Working Devices : 1
 Failed Devices : 0
  Spare Devices : 0

           Name : NAS:3  (local to host NAS)
           UUID : adf75971:3ab713a6:ae03a0ce:ac3dc03d
         Events : 52290

    Number   Major   Minor   RaidDevice State
       0       8       54        0      active sync   /dev/sdd6
       -       0        0        1      removed
```
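The `[UU_U]`-style strings in `/proc/mdstat` are the quickest summary of which member slots are missing: `[n/m]` gives the number of RAID devices and how many are active, and each character in the following brackets is one slot (`U` = up, `_` = missing). The minimal sketch below parses the status lines from the `cat /proc/mdstat` output above and lists the missing slot numbers so they can be cross-checked against the `removed` rows of `mdadm --detail`. It assumes the two-lines-per-array layout shown in this post, and the function name `missing_slots` is hypothetical.

```python
import re

# Text copied from the `cat /proc/mdstat` output above.
MDSTAT = """\
Personalities : [raid1] [raid6] [raid5] [raid4] [raidF1]
md3 : active raid1 sdd6[0]
      3906998912 blocks super 1.2 [2/1] [U_]

md2 : active raid5 sda5[4] sde5[5] sdb5[7]
      11706562368 blocks super 1.2 level 5, 64k chunk, algorithm 2 [4/3] [UU_U]

md1 : active raid1 sda2[0] sdd2[2] sdb2[1]
      2097088 blocks [5/3] [UUU__]

md0 : active raid1 sdb1[0] sda1[3] sdd1[1]
      2490176 blocks [5/3] [UU_U_]

unused devices: <none>
"""

HEADER = re.compile(r"^(md\d+) : ")                  # e.g. "md2 : active raid5 ..."
STATUS = re.compile(r"\[(\d+)/(\d+)\] \[([U_]+)\]")  # e.g. "[4/3] [UU_U]"


def missing_slots(mdstat: str) -> dict[str, list[int]]:
    """Map each md array to the RaidDevice slots marked '_' (missing)."""
    result: dict[str, list[int]] = {}
    current = None
    for line in mdstat.splitlines():
        header = HEADER.match(line)
        if header:
            current = header.group(1)  # remember which array the next status line belongs to
            continue
        status = STATUS.search(line)
        if current and status:
            total, active, flags = int(status.group(1)), int(status.group(2)), status.group(3)
            # Sanity check: one flag per slot, and one 'U' per active member.
            assert len(flags) == total and flags.count("U") == active
            result[current] = [i for i, flag in enumerate(flags) if flag == "_"]
            current = None
    return result


for name, slots in sorted(missing_slots(MDSTAT).items()):
    print(f"{name}: missing slot(s) {slots}")
```

For the arrays in this post it reports slots 2 and 4 for md0, 3 and 4 for md1, 2 for md2 and 1 for md3, which are exactly the `removed` rows in the `mdadm --detail` output.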

Storage Pool crashed (replacement disk inserted into the wrong slot)

in General Post-Installation Questions/Discussions (non-hardware specific)


I've been struggling to restore the data for the past month. Here's the progress I've made so far.

I rebooted the NAS. When it restarted, the Storage Manager UI showed an "Assemble Online" option. I clicked it and waited, but after about 20 minutes it failed with the message "Cannot be assembled online". Thinking it was a network problem, I rebooted a few dozen times and made sure my Internet connection was OK. But Storage Manager always showed "Assemble Online", and it always failed after I clicked it.

I SSHed into the NAS and examined the folder structure. I found something interesting that might help restore the array and recover the data.

If you have experience fixing MD (Linux software RAID) devices, would you please share it with me? Any advice and suggestions are appreciated. Thanks!