PeteyNice

-

Posts

7 -

Posts posted by PeteyNice

-

-

It had some impact, because when I rebooted it came up as "Not Installed". Anyway, I abandoned DS920/geminilake and went back to the beginning with DS918/apollolake. That worked perfectly. Thank you for the wonderful guide and for reviewing my issue.

-

Actually, I found a file that looks like that at /home/tc/ds920.dts. Editing it now.

Required a re-install. Will pick it up tomorrow. Thanks!

-

$ cat /proc/cmdline

BOOT_IMAGE=/zImage syno_hw_version=DS920+ console=ttyS0,115200n8 netif_num=1 synoboot2 earlycon=uart8250,io,0x3f8,115200n8 mac1=00113241BBBA sn=2040SBRQ3A5FS HddEnableDynamicPower=1 intel_iommu=igfx_off DiskIdxMap=0002 vender_format_version=2 root=/dev/md0 SataPortMap=22 syno_ttyS1=serial,0x2f8 syno_ttyS0=serial,0x3f8

sh-4.4# dtc -I dtb -O dts /var/run/model.dtb

<stdout>: Warning (unit_address_vs_reg): /DX517/pmp_slot@1: node has a unit name, but no reg or ranges property

<stdout>: Warning (unit_address_vs_reg): /DX517/pmp_slot@2: node has a unit name, but no reg or ranges property

<stdout>: Warning (unit_address_vs_reg): /DX517/pmp_slot@3: node has a unit name, but no reg or ranges property

<stdout>: Warning (unit_address_vs_reg): /DX517/pmp_slot@4: node has a unit name, but no reg or ranges property

<stdout>: Warning (unit_address_vs_reg): /DX517/pmp_slot@5: node has a unit name, but no reg or ranges property

<stdout>: Warning (unit_address_vs_reg): /internal_slot@1: node has a unit name, but no reg or ranges property

<stdout>: Warning (unit_address_vs_reg): /internal_slot@2: node has a unit name, but no reg or ranges property

<stdout>: Warning (unit_address_vs_reg): /internal_slot@3: node has a unit name, but no reg or ranges property

<stdout>: Warning (unit_address_vs_reg): /internal_slot@4: node has a unit name, but no reg or ranges property

<stdout>: Warning (unit_address_vs_reg): /esata_port@1: node has a unit name, but no reg or ranges property

<stdout>: Warning (unit_address_vs_reg): /usb_slot@1: node has a unit name, but no reg or ranges property

<stdout>: Warning (unit_address_vs_reg): /usb_slot@2: node has a unit name, but no reg or ranges property

<stdout>: Warning (unit_address_vs_reg): /nvme_slot@1: node has a unit name, but no reg or ranges property

<stdout>: Warning (unit_address_vs_reg): /nvme_slot@2: node has a unit name, but no reg or ranges property

/dts-v1/;

/ {

compatible = "Synology";

model = "synology_geminilake_920+";

version = <0x01>;

syno_spinup_group = <0x02 0x01 0x01>;

syno_spinup_group_delay = <0x0b>;

syno_hdd_powerup_seq = "true";

syno_cmos_reg_secure_flash = <0x66>;

syno_cmos_reg_secure_boot = <0x68>;

DX517 {

compatible = "Synology";

model = "synology_dx517";

pmp_slot@1 {

libata {

EMID = <0x00>;

pmp_link = <0x00>;

};

};

pmp_slot@2 {

libata {

EMID = <0x00>;

pmp_link = <0x01>;

};

};

pmp_slot@3 {

libata {

EMID = <0x00>;

pmp_link = <0x02>;

};

};

pmp_slot@4 {

libata {

EMID = <0x00>;

pmp_link = <0x03>;

};

};

pmp_slot@5 {

libata {

EMID = <0x00>;

pmp_link = <0x04>;

};

};

};

internal_slot@1 {

protocol_type = "sata";

power_pin_gpio = <0x14 0x00>;

detect_pin_gpio = <0x23 0x01>;

led_type = "lp3943";

ahci {

pcie_root = "00:12.0";

ata_port = <0x00>;

};

led_green {

led_name = "syno_led0";

};

led_orange {

led_name = "syno_led1";

};

};

internal_slot@2 {

protocol_type = "sata";

power_pin_gpio = <0x15 0x00>;

detect_pin_gpio = <0x24 0x01>;

led_type = "lp3943";

ahci {

pcie_root = "00:13.3,00.0";

ata_port = <0x02>;

};

led_green {

led_name = "syno_led2";

};

led_orange {

led_name = "syno_led3";

};

};

internal_slot@3 {

protocol_type = "sata";

power_pin_gpio = <0x16 0x00>;

detect_pin_gpio = <0x25 0x01>;

led_type = "lp3943";

ahci {

pcie_root = "00:12.0";

ata_port = <0x02>;

};

led_green {

led_name = "syno_led4";

};

led_orange {

led_name = "syno_led5";

};

};

internal_slot@4 {

protocol_type = "sata";

power_pin_gpio = <0x17 0x00>;

detect_pin_gpio = <0x26 0x01>;

led_type = "lp3943";

ahci {

pcie_root = "00:12.0";

ata_port = <0x03>;

};

led_green {

led_name = "syno_led6";

};

led_orange {

led_name = "syno_led7";

};

};

esata_port@1 {

ahci {

pcie_root = "00:13.0,00.0";

ata_port = <0x03>;

};

};

usb_slot@1 {

vbus {

syno_gpio = <0x1d 0x01>;

};

usb2 {

usb_port = "1-3";

};

usb3 {

usb_port = "2-1";

};

};

usb_slot@2 {

vbus {

syno_gpio = <0x1e 0x01>;

};

usb2 {

usb_port = "1-2";

};

usb3 {

usb_port = "2-2";

};

};

nvme_slot@1 {

pcie_root = "00:14.1";

port_type = "ssdcache";

};

nvme_slot@2 {

pcie_root = "00:14.0";

port_type = "ssdcache";

};

};

sh-4.4#

I also tried other random HDDs I have lying around and saw the same thing. Any disk not in Bay 1 is missing from Storage Manager but is available in the shell.
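For anyone comparing their own dump against this one, here is a rough Python sketch that pulls the `pcie_root` and `ata_port` of each `internal_slot` node out of the `dtc` text. The excerpt string, the `internal_slots` helper and the regex are illustrative assumptions, not part of RedPill or DSM, and treating `internal_slot@N` as "Bay N" is also an assumption. The regex expects every slot to carry both properties in that order, so treat it as a quick check rather than a real device tree parser.

```python
import re

# Excerpt of the dtc output above, trimmed to the two fields of interest.
DTS_EXCERPT = """
internal_slot@1 { ahci { pcie_root = "00:12.0"; ata_port = <0x00>; }; };
internal_slot@2 { ahci { pcie_root = "00:13.3,00.0"; ata_port = <0x02>; }; };
internal_slot@3 { ahci { pcie_root = "00:12.0"; ata_port = <0x02>; }; };
internal_slot@4 { ahci { pcie_root = "00:12.0"; ata_port = <0x03>; }; };
"""

# Hypothetical pattern: slot number, then the first pcie_root/ata_port pair after it.
SLOT_RE = re.compile(
    r'internal_slot@(\d+)\s*\{.*?'
    r'pcie_root\s*=\s*"([^"]+)";\s*'
    r'ata_port\s*=\s*<(0x[0-9a-fA-F]+)>',
    re.DOTALL,
)


def internal_slots(dts_text):
    """Return {slot_number: (pcie_root, ata_port)} for each internal_slot node."""
    return {
        int(slot): (root, int(port, 16))
        for slot, root, port in SLOT_RE.findall(dts_text)
    }


for slot, (root, port) in sorted(internal_slots(DTS_EXCERPT).items()):
    print(f"slot {slot}: pcie_root {root}, ata_port {port}")
```

In this dump, slots 1, 3 and 4 point at `00:12.0` while slot 2 points at `00:13.3,00.0`, and slots 2 and 3 both use `ata_port` 2. Whether that matches the actual controllers on the board still has to be checked against the hardware.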

-

-

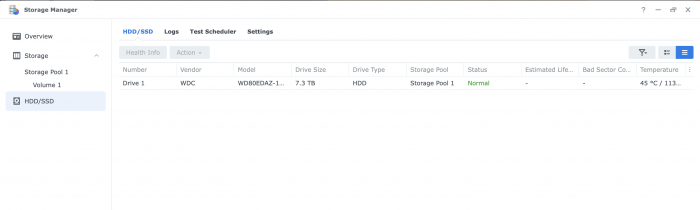

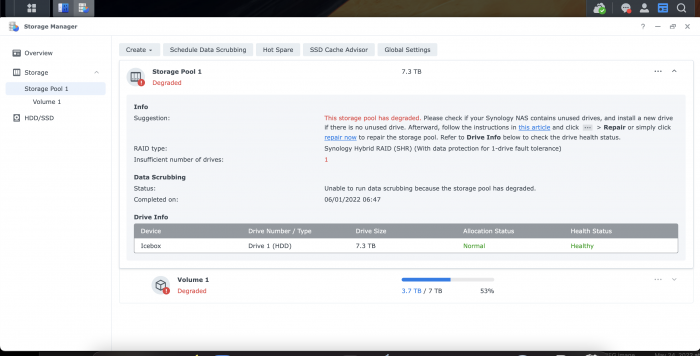

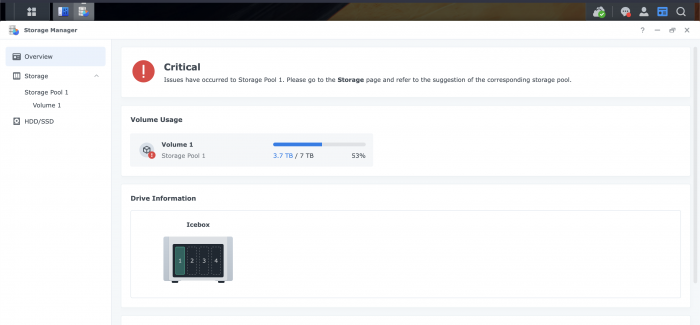

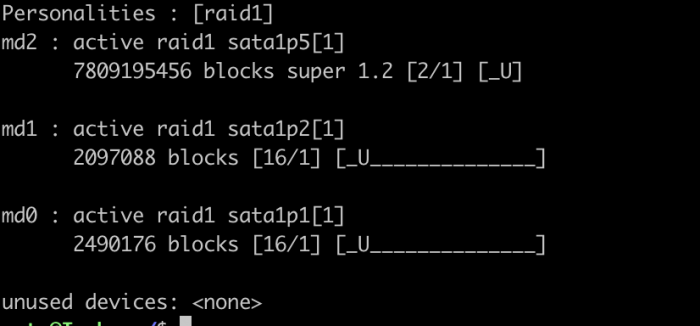

I recently used RedPill to migrate from 6.2.3. Now Storage Manager will only recognize the drive in Bay 1 (whichever drive I put in there). The other drives show up if I ssh in and check fdisk, they pass smartctl etc. So they exist.

When in Bay 1 Storage Manager reports each drive as healthy.

I am ready to format one of the drives in the hopes that that will cause Syno to accept it and rebuild the array but I wanted to see if there were less drastic things I should try first.

Hardware: ASRock J5040-ITX

Drives: 2x 8 TB WD

Loader: RedPill geminilake-7.1-42661 update 2

fdisk (identifiers changed):

Disk /dev/sata2: 7.3 TiB, 8001563222016 bytes, 15628053168 sectors

Disk model: WD80EDAZ-11TA3A0

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disklabel type: gpt

Disk identifier: DDDDDDD-RRRR-SSSS-YYYY-XXXXXXXXX

Device Start End Sectors Size Type

/dev/sata2p1 2048 4982527 4980480 2.4G Linux RAID

/dev/sata2p2 4982528 9176831 4194304 2G Linux RAID

/dev/sata2p5 9453280 15627846239 15618392960 7.3T Linux RAID

Disk /dev/sata1: 7.3 TiB, 8001563222016 bytes, 15628053168 sectors

Disk model: WD80EDAZ-11TA3A0

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disklabel type: gpt

Disk identifier: OOOOOOO-PPPP-7777-8888-AAAAAAAAAAAA

Device Start End Sectors Size Type

/dev/sata1p1 2048 4982527 4980480 2.4G Linux RAID

/dev/sata1p2 4982528 9176831 4194304 2G Linux RAID

/dev/sata1p5 9453280 15627846239 15618392960 7.3T Linux RAID

Thanks!

-

I have been using Jun's Loader/6.2.3 successfully for over two years. I had avoided RedPill because I assumed that I would need to buy a new drive, pull all the drives, install RedPill, reconfigure Syno from scratch, add back the old drives and depend on Syno to migrate everything.

Then I started seeing people posting in the Updates forum that they went from 6.2.3 to RedPill. They provide no comments about how this was done. Is there another method? One that does not require reconfiguring everything?

DSM 7.2.1 69057-Update 3

in DSM Updates Reporting

- Outcome of the update: SUCCESSFUL (eventually)

- DSM version prior update: TCRP DS918+ DSM 7.1.1-42962 update 1

- Loader version and model: arc-24.1.27-next, DS923+

- Using custom extra.lzma: NO

- Installation type: BAREMETAL ASRock J5040-ITX

- Additional comments:

This was an adventure. I initially tried to upgrade with TCRP and got stuck in a Recovery loop. I grabbed a different USB stick and tried Arc. That was worse, as it would try to boot DSM and then shut down after five minutes. In another thread someone mentioned using a DS923+ instead of a DS918+. That worked like a charm.