Posts posted by bucu

-

8 hours ago, r27 said:

Can someone share the steps to pass an LSI controller to xpenology running on Proxmox? I did everything possible and impossible, using multiple different loaders etc. I can pass the controller to an Ubuntu VM and see the drives, but the xpenology VM doesn't see them.

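Sure.

"lspci" and "lspci -k" are two commands you will need to check your LSI card. The first lists the PCI devices and their addresses; the second also shows which kernel driver has claimed each one. You want to see if the card is in a separate IOMMU group from other devices and your chipset (the groups themselves are listed under /sys/kernel/iommu_groups on the Proxmox host). For me it was already in a separate group. There is a guide on what to do if it isn't in a separate group somewhere on the forums here. You can search for that.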

You need to make sure your hardware is virtualization compatible as well.
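For the IOMMU group check mentioned above, here is a minimal sketch of how the groups could be listed on the Proxmox host. The function name and its optional sysfs-root argument are my own (the argument only exists so it can be tried against a fake directory tree); it assumes IOMMU is already enabled, otherwise the directory is empty and nothing is printed.

```shell
#!/bin/sh
# List every PCI device together with its IOMMU group number.
# $1 (optional) is a hypothetical override for the sysfs root; default is /sys.
list_iommu_groups() {
    root="${1:-/sys}"
    for dev in "$root"/kernel/iommu_groups/*/devices/*; do
        [ -e "$dev" ] || continue      # no groups found: IOMMU is probably off
        group="${dev%/devices/*}"      # .../iommu_groups/N
        group="${group##*/}"           # N
        printf 'group %s: %s\n' "$group" "${dev##*/}"
    done
}

# Usage on the host:
#   list_iommu_groups
#   lspci -nns 01:00.0    # name the device at an address (01:00.0 is only an example)
```

If the LSI card shares its group with nothing but itself, it should be a clean candidate for passthrough.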

You want to make sure you have IOMMU enabled in your grub file on Proxmox. You can refer to the guide here: Xpenology on Proxmox Install Guide

Another post that might be illuminating: PCI Passthrough IOMMU (proxmox forum)

After those steps are taken, you need to update the system files with the changes made to the grub file. Those steps are also listed in the guide.
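As a rough sketch of the grub change (not taken from the guide itself): the helper below adds intel_iommu=on to the GRUB_CMDLINE_LINUX_DEFAULT line and leaves everything else alone. The function name is made up, and it assumes an Intel CPU and a host that actually boots through GRUB; a host that boots through systemd-boot keeps its kernel command line elsewhere, so check the Proxmox docs for your setup. Note that the whole option string stays on one line inside the quotes.

```shell
#!/bin/sh
# Read the contents of /etc/default/grub on stdin and write them to stdout with
# intel_iommu=on appended to GRUB_CMDLINE_LINUX_DEFAULT (only if not already there).
add_intel_iommu() {
    sed -e '/^GRUB_CMDLINE_LINUX_DEFAULT=/{' \
        -e '/intel_iommu=on/!s/"$/ intel_iommu=on"/' \
        -e '}'
}

# Usage on the Proxmox host (keep a backup first):
#   cp /etc/default/grub /etc/default/grub.bak
#   cat /etc/default/grub.bak | add_intel_iommu > /etc/default/grub
#   update-grub
```

Running it twice is harmless, since the flag is only added when it is missing.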

Then modify your VM to pass through the PCI device, using the PCI address of the card that you saw in the "lspci" output.
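Something like the following could pull the card's address out of the lspci output and hand it to the VM. This is only a sketch: pci_addr_of is a made-up helper, VM ID 100 is a placeholder, and the search string depends on how your card identifies itself in lspci.

```shell
#!/bin/sh
# Print the PCI address (first field) of every lspci line on stdin
# that contains the given text, ignoring case.
pci_addr_of() {
    grep -i -F -- "$1" | awk '{ print $1 }'
}

# Usage on the Proxmox host (VM ID 100 and the search text are assumptions):
#   addr=$(lspci | pci_addr_of 'SAS2008')
#   qm set 100 -hostpci0 "$addr"
```

If more than one line matches, the helper prints several addresses, so it is worth eyeballing the result before passing it to qm.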

I personally restarted the Proxmox server before starting the VM. I am not sure if that step is necessary, but it was what I did to make sure the changes took effect.

I'm a beginner at Proxmox, so if someone more knowledgeable than me can correct or add to this, feel free!

You can also check dmesg on the Synology VM to see if there are any errors after completing the guide's steps. Possible errors include a driver conflict, a driver not loading, or the card not being in IT (HBA) mode.
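A small filter makes that dmesg check less painful. This is a sketch with a made-up function name; the default pattern assumes the card is driven by mpt2sas or mpt3sas, so adjust it if your driver has a different name.

```shell
#!/bin/sh
# Keep only the log lines on stdin that mention the HBA driver.
# $1 (optional) overrides the default extended-regex pattern.
hba_messages() {
    grep -i -E "${1:-mpt2sas|mpt3sas}"
}

# Usage inside the DSM VM:
#   dmesg | hba_messages
# Usage on the Proxmox host, to look at the IOMMU side instead:
#   dmesg | hba_messages 'DMAR|IOMMU'
```

No output at all inside the VM would suggest the driver never loaded or the card never showed up.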

Hope that helps, R27.

-

Hey Speedyrazor, any update? I'm working on a similar problem. I can get a connection on my 10gb Linux bridge through Proxmox to the xpe VM, but only at 1gb. I am using the ds918 while the examples above reference the ds3615. If you have learned anything further, I'd love to know. If not, well, cheers as we commiserate.

-

Did anyone get the i40e drivers working for Proxmox to enable 10gb? I have the Intel X710 and I want to use one of the 10gb ports for the VM. The only NIC options in the hypervisor are e1000, virtio, realtek, and vmxnet3. I find that only the e1000 and vmxnet3 profiles work, and they only provide a 1gb virtual NIC to the VM.

Spoiler:

Subsystem: Intel Corporation Ethernet Converged Network Adapter X710-T

Kernel driver in use: i40e

Kernel modules: i40e

-

I think I found the error looking through the dmesg log. These appear to be the default arguments, probably loaded from the grub file embedded in the synoboot.img loader.

Quote:

[ 0.000000] Command line: syno_hdd_powerup_seq=1 SataPortMap=333 HddHotplug=0

It seems to make sense that it is reserving slots for all these SATA ports I'm not using, because all my drives are on the LSI controller. How do I modify that startup config to run SataPortMap=111 or SataPortMap=222, so that I can free up space for that one remaining drive? I would rather not modify the same config to increase the max drives as well, as that is unnecessary IMO.
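For the text edit itself, a sketch like this would swap the SataPortMap value in whatever line carries it. The function name is made up, and where exactly that line lives (presumably the grub.cfg inside synoboot.img) is an assumption on my part, so check your own loader before writing anything back.

```shell
#!/bin/sh
# Replace the SataPortMap value in the text on stdin with $1.
# $1 must be digits only, e.g. 111.
set_sata_port_map() {
    case "$1" in
        ''|*[!0-9]*) echo "SataPortMap value must be digits only" >&2; return 1 ;;
    esac
    sed -E "s/SataPortMap=[0-9]+/SataPortMap=$1/"
}

# Usage (the grub.cfg path is hypothetical, adjust to wherever the loader is mounted):
#   cat /mnt/synoboot/grub/grub.cfg | set_sata_port_map 111 > /tmp/grub.cfg.new
```

Writing to a separate file first makes it easy to diff the result against the original before replacing it.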

I have only done baremetal xpenology stuff before and that was about 6 years ago back on dsm5. My real syno box has served me just fine until now. This time I want to use it as a VM to learn more virtualization stuff but man being a newb at something feels like being blind and fumbling around in the dark.

I can be happy with 6 drives if that is where I am. However, if I can get the 7th that'd be swell, because then I can set up the hot spare to kick in if a drive failure occurs.

-

Thanks for the reply IG88. I will re-read in depth later today if I have time. I have some work related projects I need to finish that take priority first.

Quote:

if the drive that had sdh goes missing, on next boot another drive will get sdh and dsm will have that in its gui

when using disk positions in a shelf it's not "stable" (at least when rebooting), you need to keep track by serial number of disks and check that before pulling a drive

That's good to know. I assumed it would orientate them in an ordered way.

Quote:

so you don't use an extended extra.lzma

a way to handle the boot image (*.img) is described in the "normal" tutorial (Win32DiskImager 1.0 for windows)

https://xpenology.com/forum/topic/7973-tutorial-installmigrate-dsm-52-to-61x-juns-loader/

I figured there must be some parallels, but I missed the section about loading that to a virtual partition without the normal operation of OSFmount that you would use on the USB. I'll read it again later.

Quote:

it's about your vm configuration, there should only be things you define for your vm

you can check /var/log/dmesg about controllers and their number of ports and try to map that back to your vm configuration to find out what's wrong

if you define a 6 port controller in the vm then dsm will block 6 slots aka numbers of possible drives

in esxi the boot drive gets its own controller so it does get separated, leaving the 2nd controller for system/data disks

so check your definition file for the vm and compare that with the tutorial section

i never used proxmox but your problem is kind of generic and based on how dsm works and counts drives

Yeah, that makes sense. I just don't remember specifying those in the configuration. I will check again. Then I will check it a second time. HAHA
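To put a number on the slot counting, here is a sketch that adds up the digits of a SataPortMap value. It assumes the common reading that each digit is the port count of one SATA controller, which I have not verified against the loader itself; the function name is made up.

```shell
#!/bin/sh
# Sum the digits of a SataPortMap value, i.e. the number of drive slots
# the SATA controllers would reserve under the one-digit-per-controller reading.
sata_slots_reserved() {
    map="$1"
    case "$map" in
        ''|*[!0-9]*) echo "expected digits only" >&2; return 1 ;;
    esac
    total=0
    while [ -n "$map" ]; do
        rest="${map#?}"            # everything after the first character
        digit="${map%"$rest"}"     # the first character
        total=$((total + digit))
        map="$rest"
    done
    echo "$total"
}

# Usage:
#   sata_slots_reserved 333    # three controllers with three ports each
#   sata_slots_reserved 111
```

Under that reading, SataPortMap=333 reserves 9 slots and SataPortMap=111 reserves 3.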

-

After wracking my brain for the New Years weekend and scouring both Xpe and Proxmox forums, I finally got IOMMU pass-through working for my LSI card. (Pay attention to the little details guys!!! The code goes on one line, one line!!!! It isn't delineated line by line. 😩 /rant)

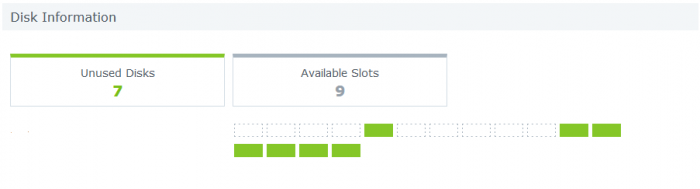

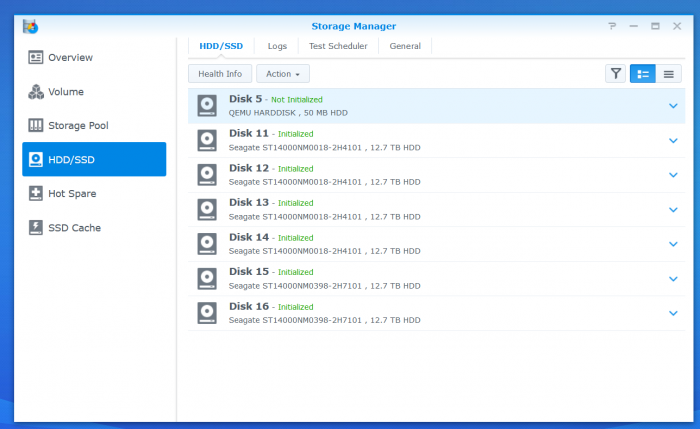

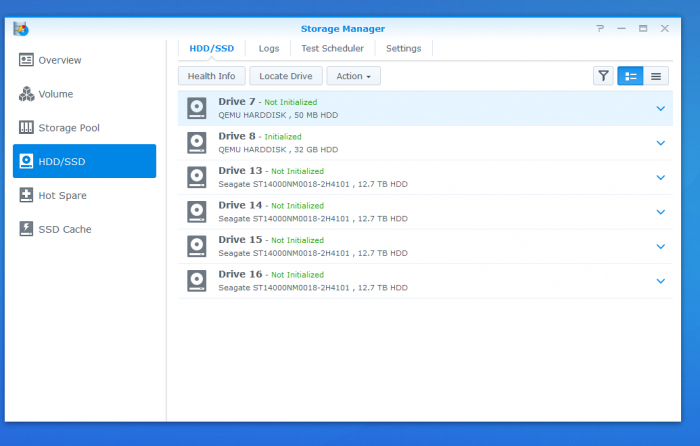

Prior to the PT fix, Proxmox was showing all 7 of the drives installed on the LSI PCI card. After pass-through it obviously no longer does, as the Xpenology VM now has the card. However, upon logging into DSM I'm seeing some weird behavior and I don't even know where to begin, so maybe someone has seen this before and can point me in the right direction. [Just as a side note, yes, only 7 drives even though the hard drive caddy and PCI card can support 8.]

As you can see in the picture, drives are listed from 7-16.

I am running two SSDs in a ZFS mirror as the boot for Proxmox and image loader for VMs. I have 7 drives of 8 installed on the LSI 9211-8i PCI card. I see 4 of those drives as Drive 13-16. Drive 7 and 8 are the VM SATA drives for the boot and loader information. Three drives are missing [I assume all three are on the second SAS/SATA plug, port #2 on the card, as that would make sense].

My guess is there is a max capacity of 16 drives in the DSM software. The mobo has a 6-port SATA chipset (+2 NVMe PCI/SATA unused), and the two boot SATA devices [drive 7 and 8] are technically virtual.

6 from the physical SATA ports on the chipset, +2 virtual SATA for boot, +4 [of 8] from the LSI. That only adds up to 12, so something else must be holding slots 9-12 before the list tops out at the 16 spots shown.

Is my train of thought on the right track? If so, my next thought is: how do we block the empty [unused] SATA ports on the chipset from taking up wasted space on the Xpe-VM?

Like I said, I'm stuck. I need a helpful push in the right direction.

Spoiler: Basic specs for info:

Proxmox VM running: 1.04 loader with no extra.lzma (I haven't figured out how to configure the lzma using Proxmox without using a USB yet)

Boot from virtual SATA drive [qemu drive] partitioned on the Proxmox SSD drive as part of the VM

DSM 6.2.3-25426

Hardware: i7-9700, Asrock b365 Pro4, 32gb ram, Intel x710 PCI NIC, LSI 9211-8i PCI HBA, 2x SATA SSD ZFS-Mirror

Drives on LSI Card: 7x 14TB Seagate EXOS

Space below left for future editing of OP for any requested information.

-

- Outcome of the update: SUCCESSFUL

- DSM Version installed: DSM 6.2.3-25426 Update 2 (New Install)

- Loader version and model: JUN'S LOADER v1.04 - DS918+

- Using custom extra.lzma: NO

- Installation type: Proxmox - i7-9700 on Asrock b365m mobo, 32gb ram, only 20 allocated to xpenology vm

- Additional comments: LSI mpt2sas iommu errors for pass-through and 10gb only showing as 1gbe. Still researching and troubleshooting these two errors for best solution method.

-

On 12/21/2020 at 3:35 AM, Skyinfire said:

Great.

As I understand it, IR-mode is RAID mode, and IT-mode is HBA (host bus adapter) mode.

And all adapters for xpenology should be in IT-mode. All adapters in IR-mode are not compatible with xpenology, right?

Why is this super-important information not listed in the compatibility list in a huge red font???

I selected the controller, relying entirely on the compatibility list. Picked it up. I bought it. And it doesn't work. Because there is no warning anywhere that all the controllers listed in the so-called "compatibility sheet" are actually useless garbage if they are in IR mode.

It may not be useless garbage. See IG-88's previous post. There are some guides about flashing LSI adapters into IT mode (i.e. turning them into basic HBAs). Give it a try first. If it still doesn't work, then yeah, maybe it is useless garbage to you. Good luck.

On 12/21/2020 at 2:21 AM, IG-88 said:

your controller, the LSI 9285CV-8e, seems to be in raid mode as it refers to virtual drives in the screenshot you provided

if you go into the controller bios you should see an "IR" somewhere in the upper area as a reference to raid mode, and on the other controller there should be "IT" in the same place

so look for an IT firmware, and if that does not exist there might be a way to switch the controller to presenting disks as simple devices

https://geeksunlimitedinc.wordpress.com/2017/02/16/flashing-lsi-hba-to-it-mode-or-ir-mode/

LSI HBA Passthrough error - Drives not showing in DSM (posted in DSM 6.x)

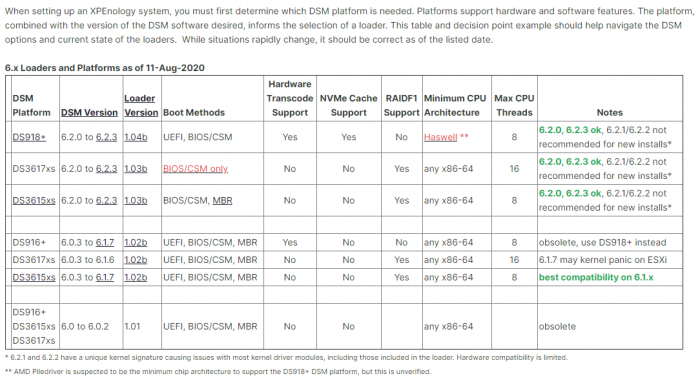

I used 1.04 with the DS918 image and the relevant PAT file. I want hardware transcoding for the future, and currently the ds3617/15 don't support that; otherwise I would use one of those builds.

I have heard that the ds3615/ds3617 images have fewer issues with running large arrays and 10gb, though, so it depends on your needs. There is a wonderful chart on the forums that shows some basic information about the different loaders/images.