dr_drache

Posts: 13

Posts posted by dr_drache

-


Just FYI: this guide, specifically the Chinese portion (mostly the .vmdk file), allowed a perfect migration from 6.1 (1.02b2) to 6.2 with an LSI passthrough:

1. Created the .vmdk for the new synoboot.img (renamed to synoboot62.img and synoboot62.vmdk, respectively).
2. Uploaded them to the datastore.
3. Removed the old synoboot HDD from the VM config.
4. Switched to BIOS booting.
5. Added the new synoboot disk, making sure to use SATA/SATA.
6. Used the remote console (VMRC) to boot, so I could force it into VMware/ESXi mode.

The wizard migrated perfectly.
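For anyone recreating the .vmdk step: on ESXi, a .vmdk for a raw image such as synoboot62.img is just a small text descriptor that points at the image. Below is a minimal Python sketch that writes one, assuming the image is a raw disk image whose size is a multiple of 512 bytes and that it sits in the same datastore folder. The function names, the placeholder CID and the `ddb.*` values (adapter type, 16-head/63-sector geometry) are my assumptions, not taken from the guide, so compare the result with the .vmdk that ships with the loader before trusting it.

```python
import os


def make_vmdk_descriptor(image_name: str, image_size_bytes: int) -> str:
    """Build a minimal VMDK text descriptor pointing at a raw (flat) image.

    Assumption: image_name is a raw disk image in the same folder as the descriptor.
    """
    sector = 512
    if image_size_bytes <= 0 or image_size_bytes % sector:
        raise ValueError("image size must be a positive multiple of 512 bytes")
    sectors = image_size_bytes // sector
    # Legacy CHS geometry with 16 heads and 63 sectors per track (assumed values).
    cylinders = max(1, sectors // (16 * 63))
    lines = [
        "# Disk DescriptorFile",
        "version=1",
        "CID=fffffffe",  # placeholder content ID
        "parentCID=ffffffff",  # ffffffff = no parent disk
        'createType="vmfs"',
        "",
        "# Extent description",
        # The extent size is counted in 512-byte sectors, not bytes.
        f'RW {sectors} VMFS "{image_name}"',
        "",
        "# The Disk Data Base",
        "#DDB",
        "",
        'ddb.adapterType = "ide"',  # assumption; adjust for your controller
        f'ddb.geometry.cylinders = "{cylinders}"',
        'ddb.geometry.heads = "16"',
        'ddb.geometry.sectors = "63"',
    ]
    return "\n".join(lines) + "\n"


def write_descriptor_for(image_path: str) -> str:
    """Write <name>.vmdk next to <name>.img and return the descriptor's path."""
    base, _ = os.path.splitext(image_path)
    vmdk_path = base + ".vmdk"
    text = make_vmdk_descriptor(os.path.basename(image_path), os.path.getsize(image_path))
    # Descriptors are plain text; ASCII assumes the image file name is ASCII too.
    with open(vmdk_path, "w", encoding="ascii") as fh:
        fh.write(text)
    return vmdk_path
```

For example, `write_descriptor_for("synoboot62.img")` would write synoboot62.vmdk next to the image. A 50 MiB (52,428,800-byte) image gives the extent line `RW 102400 VMFS "synoboot62.img"`, because the size is counted in 512-byte sectors.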

This sounds like a great product, much more useful than JUST DSM.

-

My experience is this:

I was not able to deploy using the HTML5 "web UI"; I had to dig out my old 6.0 vSphere client to deploy.

-

> Quick update...
>
> 1.02 Alpha doesn't support LSI 2xxx cards: it never boots after the initial installation; remove the card and you get the "please install harddrives" message.

I was wrong: after using the OVF download from another thread, everything is perfect.

This was a user error (mine).

-

Quick update...

1.02 Alpha doesn't support LSI 2xxx cards: it never boots after the initial installation; remove the card and you get the "please install harddrives" message.

-

> As of yet, I have been UNABLE to get any of these suggested DSM 6 solutions working in any way on ESXi 5.5, 6, or 6.5. I have no issues with the 5.x versions, though.
>
> I always end up with some variation of "Failed to power on virtual machine DSM 6. Unsupported or invalid disk type 2 for 'scsi0:0'. Ensure that the disk has been imported. Click here for more details."
>
> Has anyone developed a bootable ISO for install yet?

I can provide a working 6.0.x OVA that I have remotely deployed to ESXi 6/6.5; PM me for info.

-

> No worries, thank you for the lecture.
>
> I know I'm not an expert in this subject; it's just the general perception of what it feels like to work with iSCSI on the NAS devices that have been available on the market, which, as you can tell, has not been pretty due to the poor speed compared to the regular NFS/CIFS layer.
>
> I've seen complaints about iSCSI as well, even from people using 10 Gbps networks, not just in this forum but elsewhere, for other NAS devices too.
>
> It appears, as you said, that only authentic devices specifically created for iSCSI can take advantage of iSCSI and provide the actual performance that it was supposed to offer; everything else feels like a poor-man's version of iSCSI.

I apologize again if I went too far; I looked back, and a few things could have been said better.

I have not, but I can look at what iSCSI is doing on my ESXi DSM (5.2) and see what is required for good block access.

My setup will NOT be a good test case: I am using SHR, which for all practical purposes is file level (or, more correctly, has quite a few layers to go through to reach the block level), so iSCSI will be hampered by that.

If DSM is using decent RAID (RAID 5, write hole and all), then you should be able to get pretty decent speed.

Looking at DSM's site:

https://www.synology.com/en-us/knowledgebase/DSM/help/DSM/StorageManager/iscsilun

It explains it better than I did.

But then, as you said, "devices specifically created for iSCSI can take advantage of iSCSI and provide the actual performance that it was supposed to offer; everything else feels like a poor-man's version of iSCSI."

We can do some tweaking and some MPIO (multipath I/O) work: LUN packet sizes, proper RAID, and LUN allocation unit size (it should match your workload type). But if you really want/need iSCSI, DSM may not be for you.

For 99.999% of home labs, mounting NFS early via fstab (or another way your node supports) is going to get you what you need.
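For reference, a minimal Python sketch of what such an fstab entry looks like. The server name, export path and mount point are hypothetical; `_netdev` is the standard mount option that marks a file system as needing the network, so it is mounted only once networking is up. Other NFS options (version, `hard`/`soft`, timeouts) depend on your setup and are deliberately left out.

```python
from typing import Sequence


def _fstab_escape(field: str) -> str:
    # fstab separates fields with whitespace, so spaces inside a path are written as \040.
    return field.replace(" ", "\\040")


def nfs_fstab_entry(server: str, export: str, mountpoint: str,
                    options: Sequence[str] = ("defaults", "_netdev")) -> str:
    """Return one /etc/fstab line that mounts server:export at mountpoint over NFS."""
    if not export.startswith("/") or not mountpoint.startswith("/"):
        raise ValueError("export and mountpoint must be absolute paths")
    device = f"{server}:{_fstab_escape(export)}"
    # Fields: device, mount point, fs type, options, dump (0 = off), fsck pass (0 = skip).
    return f"{device} {_fstab_escape(mountpoint)} nfs {','.join(options)} 0 0"
```

For example, `nfs_fstab_entry("nas.local", "/volume1/vmstore", "/mnt/vmstore")` returns `nas.local:/volume1/vmstore /mnt/vmstore nfs defaults,_netdev 0 0`. The two trailing zeros turn off dump and boot-time fsck, neither of which applies to an NFS mount.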

I do not mean to lecture; there are things I learn every day myself.

-

> Patching the DSM OS is not such a simple thing.
>
> While it's a Linux distribution, it's not your typical Linux distribution:
>
> everything needs to be compiled from the ground up to make it work with DSM.
>
> And even if you manage to patch NFS 4 to 4.1, the new features you are looking for might not be usable from the user interface; you could always do it from the command line, I guess.
>
> That being said, if you want iSCSI to be fast, you'll need a faster network, something along the lines of a 10 Gbps network card and fiber cables on both ends.
>
> But if you are going to spend that kind of money on a 10 Gbps network, you might as well use the same money to put a proper RAID card + HDDs into the machine you want to use iSCSI on, mainly because when iSCSI is enabled, it locks out a big chunk of the storage space just for the iSCSI file (which is the size you chose for the drive on the OS side).
>
> And depending on the iSCSI implementation, it might not be shareable concurrently with other machines.
>
> It's not a true SAN, it's a fake iSCSI: all it does is create a virtual drive inside a huge file, 500 GB or whatever size you choose for your iSCSI device when entering the size in the Control Panel.
>
> I know WD, QNAP, Synology and most other NAS storage do it the same way; only true SAN servers that cost upwards of 10K let you have true iSCSI that can be shared among multiple machines.
>
> For the same reason, as you mentioned, I just stick with NFS and Samba shares; they work much better at any network speed.

You are not quite 100% right in your terms, sir.

The iSCSI presented by WD/QNAP/Synology/Linux is 100% TRUE iSCSI.

You are mixing up iSCSI (a transport layer, read: networking protocol, that allows SCSI commands to be sent end to end over local-area networks (LANs), wide-area networks (WANs) or the Internet) with a SAN (Storage Area Network).
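To make "SCSI commands sent end to end" concrete: what iSCSI carries are ordinary SCSI command descriptor blocks (CDBs). Below is a minimal Python sketch that builds a READ(10) CDB (opcode 0x28, 32-bit big-endian LBA, 16-bit transfer length). It only builds the 10-byte command; wrapping it in an iSCSI SCSI Command PDU and sending it to the target over TCP (port 3260 by default) is not shown, and the function name is my own.

```python
import struct


def read10_cdb(lba: int, blocks: int) -> bytes:
    """Build a SCSI READ(10) command descriptor block (CDB)."""
    if not 0 <= lba <= 0xFFFFFFFF:
        raise ValueError("READ(10) can only address 32-bit LBAs")
    if not 0 <= blocks <= 0xFFFF:
        raise ValueError("READ(10) transfers at most 65535 blocks")
    # Big-endian, no padding: opcode, flags, LBA (4 bytes), group number,
    # transfer length (2 bytes), control byte = 10 bytes in total.
    return struct.pack(">BBIBHB", 0x28, 0, lba, 0, blocks, 0)
```

`read10_cdb(0x12345678, 8)` gives the bytes `28 00 12 34 56 78 00 00 08 00`: "read 8 blocks starting at LBA 0x12345678". Nothing in the command refers to files, only to block addresses on the LUN.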

The virtual drive you are referring to is a LUN, which is how iSCSI and Fibre Channel work.

LUN: A logical unit number (LUN) is a unique identifier to designate an individual or collection of physical or virtual storage devices that execute input/output (I/O) commands with a host computer, as defined by the Small Computer System Interface (SCSI) standard.

In simpler terms: iSCSI is used for LUN access, and in this case we can say a LUN is a block-accessible device (you are serving a full virtual drive, for example).
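A toy Python model of what "a LUN is a block-accessible device" means, and of how a NAS can serve one out of a single large file: the initiator only ever asks for "block N", and the target maps that to an offset inside its backing file. The class name and the 512-byte default block size are illustrative assumptions; a real iSCSI target adds caching, thin/thick provisioning, SCSI reservations and much more.

```python
import io
from typing import BinaryIO


class FileBackedLun:
    """Toy LUN: a fixed number of equal-sized blocks stored inside one seekable file."""

    def __init__(self, backing: BinaryIO, block_size: int = 512, block_count: int = 2048) -> None:
        self.backing = backing  # e.g. open("lun.img", "r+b") or io.BytesIO()
        self.block_size = block_size
        self.block_count = block_count

    @property
    def capacity_bytes(self) -> int:
        return self.block_size * self.block_count

    def _seek(self, lba: int) -> None:
        # Block N lives at byte offset N * block_size inside the backing file.
        if not 0 <= lba < self.block_count:
            raise IndexError(f"LBA {lba} is outside 0..{self.block_count - 1}")
        self.backing.seek(lba * self.block_size)

    def write_block(self, lba: int, data: bytes) -> None:
        if len(data) != self.block_size:
            raise ValueError("a write must cover exactly one block")
        self._seek(lba)
        self.backing.write(data)

    def read_block(self, lba: int) -> bytes:
        self._seek(lba)
        data = self.backing.read(self.block_size)
        # Blocks that were never written read back as zeros, like a sparse file.
        return data.ljust(self.block_size, b"\0")


if __name__ == "__main__":
    lun = FileBackedLun(io.BytesIO(), block_size=512, block_count=8)
    lun.write_block(3, b"\xab" * 512)
    print(lun.read_block(3)[:4], lun.read_block(0)[:4], lun.capacity_bytes)
```

Running it prints the first bytes of block 3 (written), of block 0 (never written, so zeros) and the 4096-byte capacity. Nothing here knows about the files or folders stored on the LUN; that is the client's file system's job, which is exactly the block-versus-file split between iSCSI and NFS/CIFS.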

NFS and Samba (CIFS) serve FILES (they ride on the server's operating system and file system, at layer 7 of the network stack; iSCSI also runs over TCP/IP, but what it carries is raw SCSI block commands rather than file operations).

iSCSI is inherently faster for systems designed to use it, because you are working DIRECTLY at the block level, with no file-sharing abstraction layer in between. Where these lower-end devices "FAIL" is speed.

If you want faster iSCSI, you need faster drives with faster I/O (RAID 10, SSDs, etc.), and you need to use it for the right reason; it's slow for file sharing because it's not "really" designed for that. You can 100% saturate a gigabit network with a "whitebox" SAN/NAS; remember, gigabit is slower than most SATA 3 spinners.
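The gigabit arithmetic as a quick Python sketch: 1 Gbit/s is 1000 / 8 = 125 MB/s before any protocol overhead. The 94% efficiency factor (roughly Ethernet + TCP/IP framing at a 1500-byte MTU) and the 180 MB/s sequential figure for a hard drive in the usage example are assumptions for illustration, not measurements.

```python
from typing import Tuple


def link_mb_per_s(gbit_per_s: float, efficiency: float = 1.0) -> float:
    """Convert a link rate in Gbit/s to payload MB/s (1 MB = 10**6 bytes)."""
    # 1 Gbit/s = 1000 Mbit/s; 8 bits per byte; efficiency discounts protocol overhead.
    return gbit_per_s * 1000 / 8 * efficiency


def slower_side(link_gbit_per_s: float, disk_mb_per_s: float,
                efficiency: float = 0.94) -> Tuple[str, float]:
    """Report which side limits a sequential transfer: the network or the disks."""
    network = link_mb_per_s(link_gbit_per_s, efficiency)
    if network < disk_mb_per_s:
        return ("network", network)
    return ("disk", disk_mb_per_s)
```

With those assumed numbers, `slower_side(1, 180)` reports the network (about 117.5 MB/s) as the bottleneck, while `slower_side(10, 180)` returns `("disk", 180)`: on gigabit the wire limits you, on 10 Gbps the drives do.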

There is more complete information I can provide if this is questioned.

TL;DR: it's real iSCSI. iSCSI is for full-drive sharing (block access, say VM images); CIFS/NFS is for files; iSCSI (used for the right reason) will always be faster.

(Yes, I am aware of file-based iSCSI; I am not sure I know anyone who actually uses it. It's inherently slow due to the required overhead.)

Source: I am a network/storage engineer.

EDIT - I have edited this a few times to clarify my hasty writing; forgive me for that.

-

> Hello
>
> I managed to create a DSM 6 VM under ESXi 6 (using the OVF), but I am unable to add a drive for the volume.
>
> I use the free ESXi hypervisor with the free client.
>
> Any tips?
>
> -Roei

Make sure you are running ESXi 6.0 U2, then use the web UI: https://ESXI_HOST/ui/

For anyone else using ESXi who wants the easy way to update:

http://www.v-front.de/2016/01/how-to-use-esxi-patch-tracker-to-update.html

-

How would I move my current XPEnology NAS to this?

-

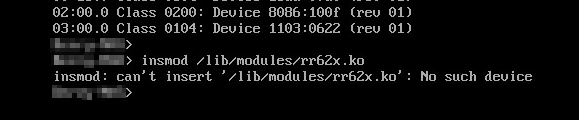

Just a piece of info: this is with ESXi passthrough.

Funny thing: it works fine in OS X and Windows. I will try some other methods.

-
